Facial expression recognition method based on an improved MobileNet model

A facial expression recognition method based on an improved MobileNet model. It addresses the problems that existing network models are complex, have a large number of parameters, and impose hardware requirements that mobile terminals and embedded devices have difficulty meeting.

Embodiment Construction

[0016] The present invention is further described below with reference to the accompanying drawings:

[0017] The facial expression recognition method of the improved MobileNet model proceeds as follows:

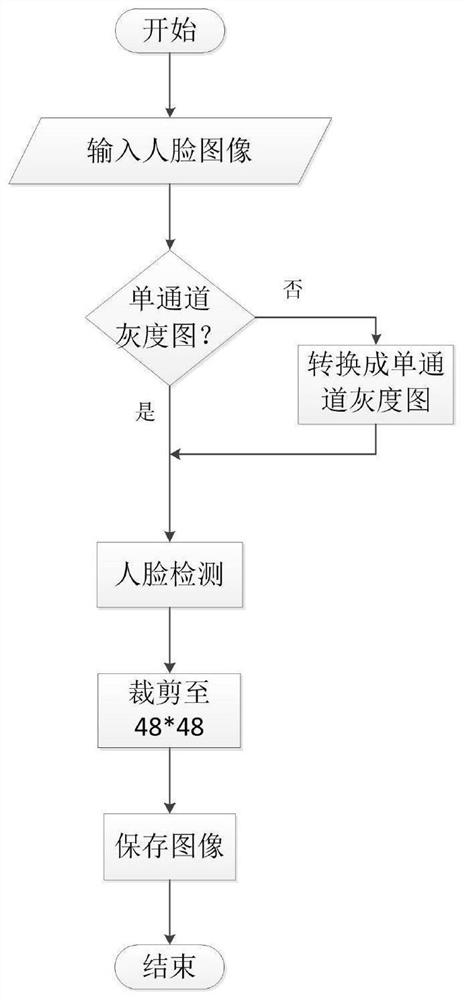

[0018] First, the input facial expression image is preprocessed according to the preprocessing flow shown in Figure 2. The type of the input image is checked to decide whether it must be converted to a single-channel grayscale image: if the image is already single-channel grayscale, it passes directly to the next step; otherwise it is converted. Face detection is then performed on the output of the previous step to determine the face region. Finally, the image is cropped according to the detected face region to produce a single-channel grayscale image of size 48×48, which completes the preprocessing.
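The preprocessing flow in [0018] can be sketched as below. This is a minimal, dependency-free illustration and not the patent's implementation. Several points are assumptions: the BT.601 luma weights used for grayscale conversion, the nearest-neighbour resampling used to reach 48×48 after cropping, and the `detect_face` callable, which is a hypothetical stand-in for whichever face detector is actually used (the text does not name one). Images are represented as nested lists purely to keep the sketch self-contained.

```python
from typing import Callable, List, Optional, Tuple

Gray = List[List[int]]            # rows of 0..255 intensities
Box = Tuple[int, int, int, int]   # (x, y, width, height) of the face region


def to_grayscale(image) -> Gray:
    """Return a single-channel image; pass through if it already is one."""
    if not isinstance(image[0][0], (list, tuple)):
        return [list(row) for row in image]  # already single-channel
    # Assumption: BT.601 luma weights; the source does not specify a formula.
    return [
        [int(round(0.299 * r + 0.587 * g + 0.114 * b)) for (r, g, b) in row]
        for row in image
    ]


def crop(gray: Gray, box: Box) -> Gray:
    """Cut the face region out of the grayscale image."""
    x, y, w, h = box
    return [row[x:x + w] for row in gray[y:y + h]]


def resize_nearest(gray: Gray, size: int = 48) -> Gray:
    """Resample to size x size with nearest-neighbour lookup (an assumption)."""
    src_h, src_w = len(gray), len(gray[0])
    return [
        [gray[(i * src_h) // size][(j * src_w) // size] for j in range(size)]
        for i in range(size)
    ]


def preprocess(
    image,
    detect_face: Callable[[Gray], Optional[Box]],  # hypothetical detector hook
    size: int = 48,
) -> Gray:
    """Grayscale check/convert -> face detection -> crop to size x size."""
    gray = to_grayscale(image)
    box = detect_face(gray)
    if box is None:
        raise ValueError("no face region detected")
    face = crop(gray, box)
    if not face or not face[0]:
        raise ValueError("face region lies outside the image")
    return resize_nearest(face, size)
```

For example, `preprocess(rgb_image, my_detector)` returns a 48×48 list of intensity rows, where `my_detector` is any function that maps a grayscale image to an `(x, y, width, height)` box or `None`. In practice an image library would replace the nested-list operations; the sketch only mirrors the order of steps described above.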

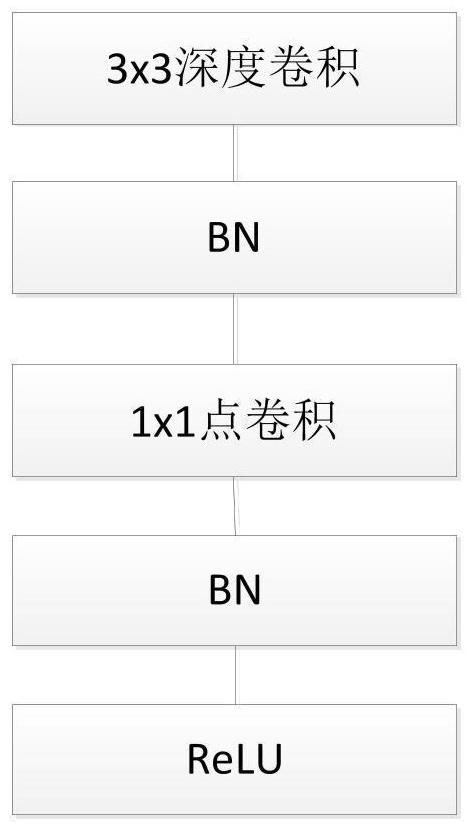

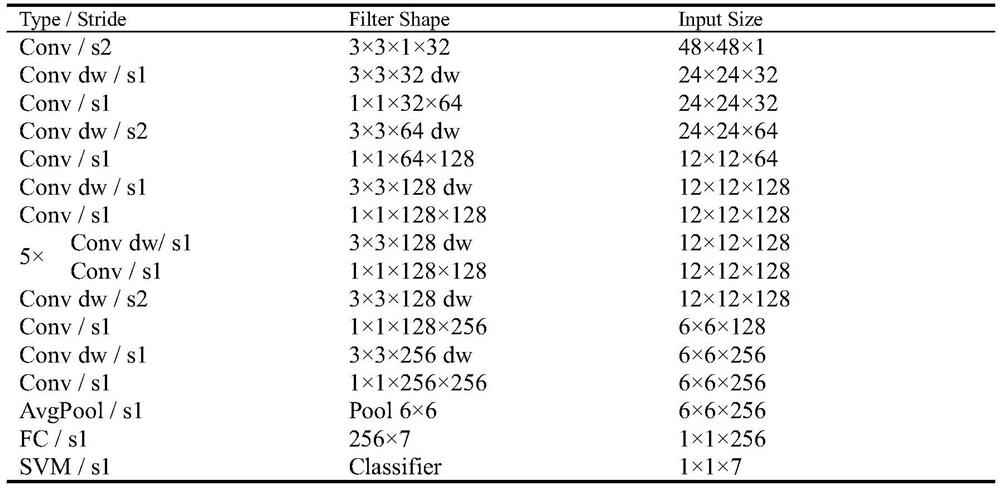

[0019] Then input the preprocessed image into the network model built as shown in Table 1 for...
