Target tracking method based on deep convolution feature adaptive integration

A target tracking technology based on deep convolutional features, applied in the fields of image processing and computer vision. It addresses inaccurate target localization, unstable tracking, and the inability to make full use of the tracker, with the effect of avoiding ambiguity and enhancing accuracy and reliability.


Embodiment Construction

[0034] The embodiments and effects of the present invention are further described below with reference to the accompanying drawings.

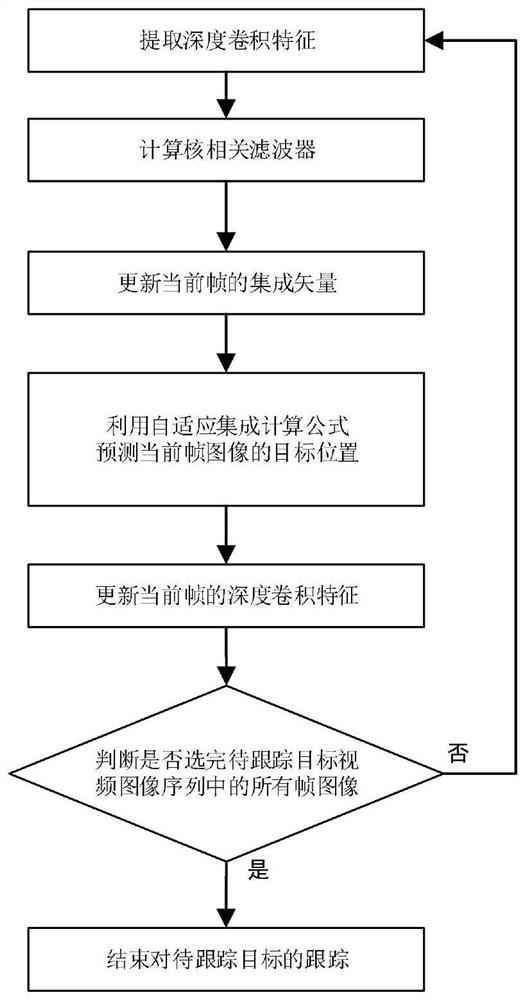

[0035] Referring to Figure 1, the steps for carrying out the invention are further described.

[0036] Step 1: extract deep convolutional features.

[0037] From the video image sequence containing the target to be tracked, select a frame that has not yet been selected and use it as the current frame.

[0038] All pixels within the target region of the current frame are input to the convolutional neural network VGG-19. The three feature outputs of the network's 10th, 28th, and 37th layers are concatenated to form the multi-channel deep convolutional feature of the target region.
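The concatenation described in [0038] can be sketched as follows. This is a minimal illustration under stated assumptions, not the patent's implementation: random arrays stand in for the three VGG-19 layer outputs, the channel counts and spatial sizes are placeholders, and the nearest-neighbour resizing to a common grid is an assumption, since the text does not say how outputs of different resolution are aligned. The names `resize_nearest` and `stack_layer_features` are hypothetical.

```python
import numpy as np

def resize_nearest(feat, out_h, out_w):
    """Nearest-neighbour resize of a (C, H, W) feature map to (C, out_h, out_w)."""
    _, h, w = feat.shape
    rows = np.arange(out_h) * h // out_h   # source row for each output row
    cols = np.arange(out_w) * w // out_w   # source column for each output column
    return feat[:, rows[:, None], cols[None, :]]

def stack_layer_features(layer_outputs, out_size):
    """Resize each layer output to out_size and concatenate along the channel axis."""
    out_h, out_w = out_size
    resized = [resize_nearest(f, out_h, out_w) for f in layer_outputs]
    return np.concatenate(resized, axis=0)

# Placeholder tensors standing in for three layer outputs (shapes are assumptions).
rng = np.random.default_rng(0)
shallow = rng.standard_normal((4, 56, 56))
middle = rng.standard_normal((8, 28, 28))
deep = rng.standard_normal((8, 14, 14))

features = stack_layer_features([shallow, middle, deep], (56, 56))
print(features.shape)
```

Concatenating along the channel axis keeps each layer's response separate, so a later stage can weight shallow and deep channels differently.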

[0039] Step 2: compute the correlation filter kernel.

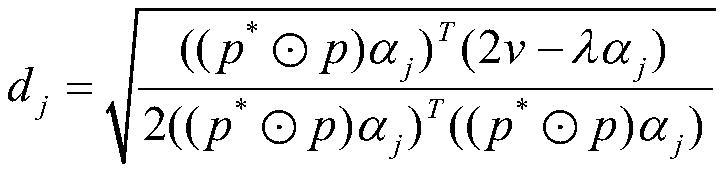

[0040] First step: compute the correlation filter kernel of the current frame for the current iteration according to the following formula:

[0041]

[0042] where α_j denotes, for the j-th iteration on the current frame, the correlation filter...
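The formula for this step is not reproduced in this excerpt, so the iterative update for α_j cannot be shown here. As background only, the sketch below uses a different, well-known technique: a single-channel, closed-form ridge-regression correlation filter solved in the Fourier domain (MOSSE-style). It illustrates what a correlation filter kernel does, namely producing a response peak that follows the target's displacement. The function names, the Gaussian label width `sigma`, and the regularizer `lam` are all assumptions.

```python
import numpy as np

def gaussian_label(h, w, sigma=2.0):
    """Desired response: a Gaussian peak at the patch centre."""
    ys, xs = np.mgrid[0:h, 0:w]
    return np.exp(-((ys - h // 2) ** 2 + (xs - w // 2) ** 2) / (2 * sigma ** 2))

def train_filter(patch, label, lam=1e-2):
    """Closed-form filter in the Fourier domain: G * conj(F) / (|F|^2 + lam)."""
    F = np.fft.fft2(patch)
    G = np.fft.fft2(label)
    return G * np.conj(F) / (F * np.conj(F) + lam)

def respond(filt, patch):
    """Correlation response of a new patch under the trained filter."""
    return np.real(np.fft.ifft2(np.fft.fft2(patch) * filt))

def locate_shift(response):
    """Displacement of the response peak relative to the patch centre."""
    h, w = response.shape
    py, px = np.unravel_index(np.argmax(response), response.shape)
    return int(py - h // 2), int(px - w // 2)

# Train on a random patch, then test on a circularly shifted copy of it.
rng = np.random.default_rng(0)
patch = rng.standard_normal((32, 32))
filt = train_filter(patch, gaussian_label(32, 32))
moved = np.roll(patch, shift=(3, -5), axis=(0, 1))
dy, dx = locate_shift(respond(filt, moved))
print(dy, dx)
```

With multi-channel features, a common extension is to sum the per-channel numerators and denominators before dividing; whether the patent does this is not stated in the excerpt.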
