Information acquisition method for English pronunciation

An information collection technique for speech, applied in speech analysis, speech recognition, instruments, and related fields. It addresses the problems of incoherent speech, inaccurate recognition, and output distortion, and produces smoother speech output.

Examples

Embodiment 1

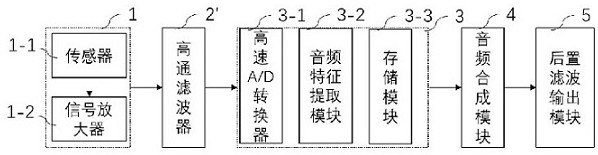

[0039] As shown in Figure 4, an information collection method for English pronunciation is also provided. The specific steps of the method are as follows:

[0040] S1. Collect audio signals and amplify them;

[0041] S2. Perform analog filtering on the amplified audio signal;

[0042] S3. Convert the analog-filtered signal into a digital signal and extract audio feature parameters from the digital audio signal: attack time, spectral centroid, spectral flux, pitch frequency, sharpness, etc.;

[0043] S4. Match the above audio feature parameters against the sound source model in the standard sound source database, then match the digital audio signal against the syllables and phonemes in the sound source model to obtain a matching degree, and perform phoneme correction according to the matching degree gap;

[0044] S5. Combine the corrected phonemes into the digital audio signal;

[0045] S6. Perform fuzzy filtering on...
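Three of the feature parameters named in step S3 (spectral centroid, spectral flux, and pitch frequency) can be sketched in a few lines. The patent text does not give formulas, so the definitions below are the common textbook ones and are an assumption, as are all function names, the naive DFT, and the autocorrelation pitch estimator. Attack time and sharpness are not covered.

```python
import cmath
import math


def magnitude_spectrum(frame):
    """Naive DFT magnitudes for bins 0..N/2 (adequate for short illustrative frames)."""
    n = len(frame)
    mags = []
    for k in range(n // 2 + 1):
        acc = sum(x * cmath.exp(-2j * math.pi * k * i / n) for i, x in enumerate(frame))
        mags.append(abs(acc))
    return mags


def spectral_centroid(mags, sample_rate, frame_len):
    """Magnitude-weighted mean frequency in Hz (assumed definition)."""
    total = sum(mags)
    if total == 0:
        return 0.0
    bin_hz = sample_rate / frame_len
    return sum(k * bin_hz * m for k, m in enumerate(mags)) / total


def spectral_flux(prev_mags, mags):
    """Euclidean distance between two consecutive magnitude spectra (assumed definition)."""
    return math.sqrt(sum((b - a) ** 2 for a, b in zip(prev_mags, mags)))


def pitch_autocorr(frame, sample_rate, fmin=50.0, fmax=500.0):
    """Pitch in Hz from the lag with the highest (unnormalized) autocorrelation."""
    lo = max(1, int(sample_rate / fmax))
    hi = min(int(sample_rate / fmin), len(frame) - 1)

    def autocorr(lag):
        return sum(frame[i] * frame[i + lag] for i in range(len(frame) - lag))

    best_lag = max(range(lo, hi + 1), key=autocorr)
    return sample_rate / best_lag


if __name__ == "__main__":
    sr, n = 8000, 256  # hypothetical sample rate and frame length
    tone = [math.sin(2 * math.pi * 250 * i / sr) for i in range(n)]
    mags = magnitude_spectrum(tone)
    print(spectral_centroid(mags, sr, n), pitch_autocorr(tone, sr))
```

A real system would likely use an FFT and windowed, overlapping frames; the naive DFT is used here only to keep the sketch dependency-free.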

Embodiment 2

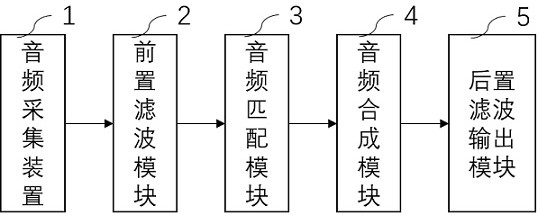

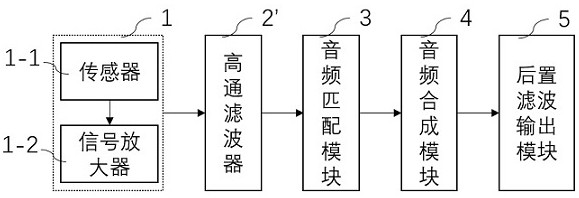

[0051] As shown in Figure 1, a specific embodiment provided by the present invention is an English pronunciation information collection system comprising an audio collection device 1, a pre-filter module 2, an audio matching module 3, an audio synthesis module 4, and a post-filter output module 5.

[0052] The audio collection device 1 collects audio signals and amplifies them.

[0053] The pre-filter module 2 performs analog filtering on the amplified audio signal.

[0054] The audio matching module 3 converts the analog-filtered signal into a digital signal and extracts audio features of the digital audio signal such as attack time, spectral centroid, spectral flux, pitch frequency, and sharpness. It matches these features against the sound source model in the standard sound source database, then matches the digital audio signal against the syllables and phonemes in the sound source model to obtain the matching degree, and the phoneme i...
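The matching behavior described for the audio matching module 3 can be illustrated with a minimal sketch. The source does not define how the matching degree is computed or how the correction depends on the gap, so both choices here are assumptions: cosine similarity stands in for the matching degree, and the correction moves the observed features toward the best-matching template in proportion to the gap (1 minus the degree). The function names, phoneme labels, and two-element feature vectors are hypothetical.

```python
import math


def matching_degree(features, template):
    """Cosine similarity between two feature vectors (assumed matching degree)."""
    dot = sum(a * b for a, b in zip(features, template))
    norm = math.sqrt(sum(a * a for a in features)) * math.sqrt(sum(b * b for b in template))
    return dot / norm if norm else 0.0


def best_phoneme(features, sound_source_model):
    """Return (phoneme, degree) for the template with the highest matching degree."""
    return max(
        ((name, matching_degree(features, tpl)) for name, tpl in sound_source_model.items()),
        key=lambda pair: pair[1],
    )


def correct_phoneme(features, template, degree):
    """Move features toward the template in proportion to the gap (assumed rule)."""
    gap = min(1.0, max(0.0, 1.0 - degree))  # clamp so the result never overshoots
    return [f + gap * (t - f) for f, t in zip(features, template)]


if __name__ == "__main__":
    # Hypothetical model: phoneme label -> normalized feature template.
    model = {"iy": [1.0, 0.0], "aa": [0.0, 1.0]}
    observed = [0.6, 0.8]
    name, degree = best_phoneme(observed, model)
    print(name, degree, correct_phoneme(observed, model[name], degree))
```

Features on very different scales (for example, Hz and seconds) would need to be normalized before a similarity measure like this is meaningful.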

© 2025 PatSnap. All rights reserved.