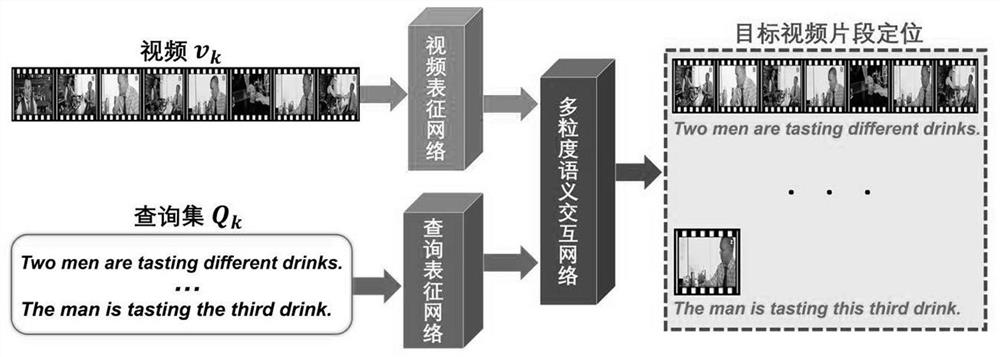

Intelligent early-warning method for surveillance video based on multimedia semantic analysis

A technology relating to surveillance video and semantic analysis, applied in neural learning methods, instruments, biological neural network models, etc., that addresses problems affecting the efficiency of video positioning.

Examples

Embodiment 1

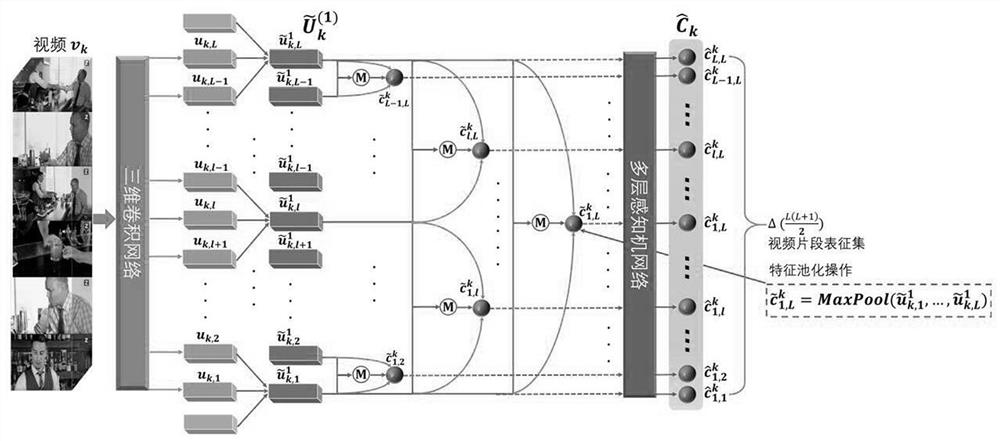

[0045] Step a) The video data $V_k$ is segmented into units, with 16 frames as the minimum unit, and the segmented video data $V_k$ is then convolved by the network using bidirectional temporal convolution.
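The unit segmentation in step a) can be sketched as follows. This is a minimal illustration, not the patent's implementation: the function name `split_into_units` is hypothetical, frames are stood in for by plain Python values, and dropping trailing frames that do not fill a complete unit is an assumption, since the text does not say how a partial unit is handled.

```python
from typing import List, Sequence, TypeVar

Frame = TypeVar("Frame")

UNIT_LEN = 16  # frames per unit, as stated in step a)


def split_into_units(frames: Sequence[Frame], unit_len: int = UNIT_LEN) -> List[List[Frame]]:
    """Split a frame sequence into consecutive units of `unit_len` frames.

    Assumption: trailing frames that do not fill a whole unit are dropped.
    """
    num_units = len(frames) // unit_len
    return [list(frames[i * unit_len:(i + 1) * unit_len]) for i in range(num_units)]


# Usage: 70 placeholder frames yield 4 full units of 16 frames each.
frames = list(range(70))
units = split_into_units(frames)
print(len(units), len(units[0]))  # 4 16
```

Each resulting unit would then be the input to the convolution stage described in the same step.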

Embodiment 2

[0047] Step d) includes the following steps:

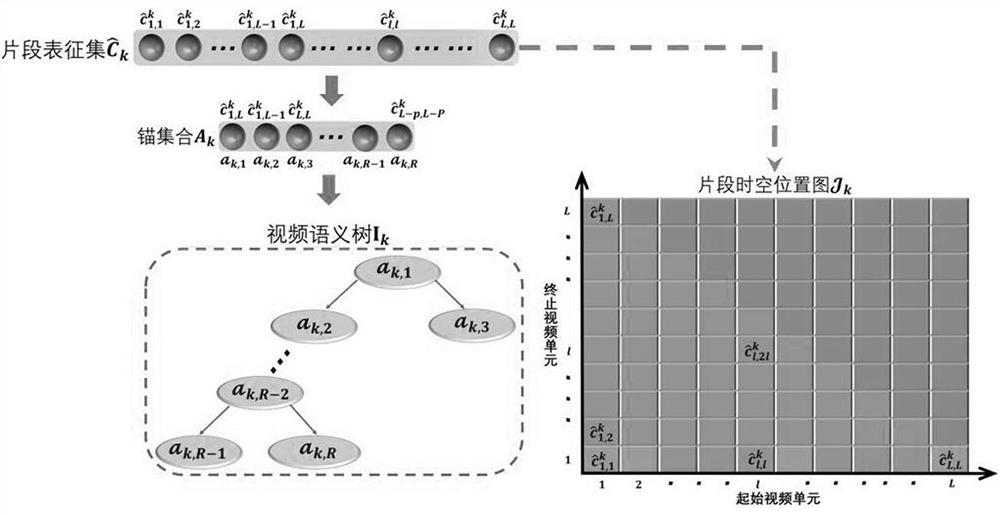

[0048] According to the formula, $r$ representative segments are selected from matrix $J_k$ to form the anchor set $A_k$.

[0049] Here, $L$ is the number of video units and $p \bmod 2$ is the remainder of dividing $p$ by 2. $a_{k,1}$ is set as the root node of the video semantic tree $I_k$, and $a_{k,2}$ and $a_{k,3}$ are set as the left and right child nodes of $a_{k,1}$, respectively. These steps are repeated, with $a_{k,p}$ and $a_{k,p+1}$ set as the left and right child nodes of $a_{k,p-2}$, $1 \le p \le r$, until all $r$ anchor nodes of the anchor set $A_k$ have been placed.
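The tree-building steps above can be sketched in code. This is a hedged illustration only: it assumes a level-order (heap-style) layout in which the anchor at 1-based position p is attached to the anchor at position p // 2, as the left child when p mod 2 is 0 and as the right child otherwise. That layout matches the first step, where the second and third anchors become the children of the first, but the general index rule in the source may differ. The names `Node` and `build_semantic_tree` are hypothetical, and anchors are represented as plain strings.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    anchor: str
    left: Optional["Node"] = None
    right: Optional["Node"] = None


def build_semantic_tree(anchors: List[str]) -> Optional[Node]:
    """Place anchors into a binary tree in level order (assumed layout)."""
    if not anchors:
        return None
    nodes = [Node(a) for a in anchors]
    # p is the 1-based position of the anchor being attached.
    for p in range(2, len(nodes) + 1):
        parent = nodes[p // 2 - 1]
        if p % 2 == 0:
            parent.left = nodes[p - 1]   # even position: left child
        else:
            parent.right = nodes[p - 1]  # odd position: right child
    return nodes[0]


# Usage: five anchors; the first becomes the root.
root = build_semantic_tree(["a1", "a2", "a3", "a4", "a5"])
print(root.left.anchor, root.right.anchor, root.left.left.anchor)  # a2 a3 a4
```

Keeping the nodes in a flat list during construction makes the parent lookup a single index operation, so the whole tree is built in one pass over the anchor set.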

Embodiment 3

[0051] Step f) The loss function $\varphi_1$ of the fully connected neural network is calculated by the formula, where $\|\cdot\|_F$ is the Frobenius norm, $T$ denotes transposition, and $L$ is the unified dimension set for the multi-modal features.
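For reference, the norm named in step f) has a standard definition that also shows where a transpose typically enters. The expression below is the general definition for a real matrix $M$ with entries $m_{ij}$ (the symbol $M$ is introduced here purely for illustration); it is not the loss formula of step f), which is not reproduced in this text.

$$
\|M\|_F = \sqrt{\sum_{i}\sum_{j} m_{ij}^{2}} = \sqrt{\operatorname{tr}\left(M^{T} M\right)}
$$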
