Video description data processing method, device and storage medium

A video description data processing technology, applied in the field of video processing, that addresses the problems of heavy model computation, complex calculation, and verbose generated sentences.

Examples

Embodiment 1

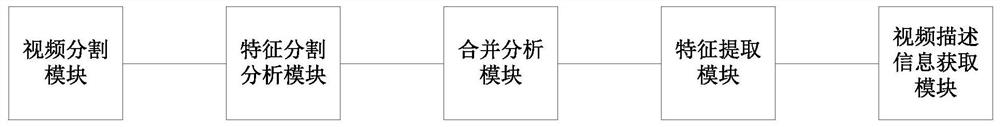

[0057] As shown in Figure 1, a video description data processing method includes the following steps:

[0058] Import a video sequence and divide it into a plurality of video pictures;

[0059] Perform feature segmentation analysis on all the video pictures through a preset convolutional neural network to obtain a plurality of shot data sets;

[0060] Perform merging analysis on all the shot data sets through the preset convolutional neural network to obtain a plurality of merged shot data sets;

[0061] Perform feature extraction on the plurality of merged shot data sets through the preset convolutional neural network to obtain a video description feature sequence;

[0062] Convert the video description feature sequence into video description information through a preset video description model.

[0063] It should be understood that the shot data sets are generated sequentially, in the order in which events occur in the input video, for example, the...
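The steps above can be illustrated with a minimal sketch. It is not the patented implementation: it assumes each video picture has already been decoded and reduced to a feature vector, and it replaces the preset convolutional neural network with simple distance thresholds. All names (`segment_into_shots`, `merge_shots`, `extract_feature_sequence`, `describe_video`) and both threshold parameters are hypothetical, and the `describe` callable stands in for the preset video description model.

```python
from typing import Callable, List, Sequence

Frame = List[float]  # hypothetical: one feature vector per video picture
Shot = List[Frame]   # a shot data set: consecutive frames in time order


def _distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two equal-length feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) ** 0.5


def _mean(frames: Sequence[Frame]) -> Frame:
    """Element-wise mean of a non-empty list of feature vectors."""
    n = len(frames)
    return [sum(column) / n for column in zip(*frames)]


def segment_into_shots(frames: Sequence[Frame], cut_threshold: float) -> List[Shot]:
    """Start a new shot when consecutive frames differ by more than the threshold.

    Assumption: this threshold test stands in for the CNN-based
    feature segmentation analysis described in the text.
    """
    shots: List[Shot] = []
    for frame in frames:
        if shots and _distance(shots[-1][-1], frame) <= cut_threshold:
            shots[-1].append(frame)
        else:
            shots.append([frame])
    return shots


def merge_shots(shots: Sequence[Shot], merge_threshold: float) -> List[Shot]:
    """Merge a shot into its predecessor when their mean features are close.

    Assumption: a stand-in for the CNN-based merging analysis.
    Time order is preserved because only adjacent shots are merged.
    """
    merged: List[Shot] = []
    for shot in shots:
        if merged and _distance(_mean(merged[-1]), _mean(shot)) <= merge_threshold:
            merged[-1] = merged[-1] + list(shot)
        else:
            merged.append(list(shot))
    return merged


def extract_feature_sequence(shots: Sequence[Shot]) -> List[Frame]:
    """Return one feature vector (the mean) per merged shot, in time order."""
    return [_mean(shot) for shot in shots]


def describe_video(
    frames: Sequence[Frame],
    describe: Callable[[List[Frame]], str],
    cut_threshold: float = 1.0,
    merge_threshold: float = 2.0,
) -> str:
    """Run segmentation, merging, feature extraction, then description."""
    shots = segment_into_shots(frames, cut_threshold)
    merged = merge_shots(shots, merge_threshold)
    features = extract_feature_sequence(merged)
    return describe(features)
```

Merging only adjacent shots keeps the merged shot data sets in the same temporal order as the input video, which is the property the preceding paragraph relies on. In a real system the two threshold tests would be replaced by the outputs of the trained network.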

Embodiment 2

[0069] As shown in Figure 1, a video description data processing method includes the following steps:

[0070] Import a video sequence and divide it into a plurality of video pictures;

[0071] Perform feature segmentation analysis on all the video pictures through a preset convolutional neural network to obtain a plurality of shot data sets;

[0072] Perform merging analysis on all the shot data sets through the preset convolutional neural network to obtain a plurality of merged shot data sets;

[0073] Perform feature extraction on the plurality of merged shot data sets through the preset convolutional neural network to obtain a video description feature sequence;

[0074] Convert the video description feature sequence into video description information through a preset video description model;

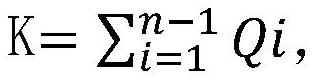

[0075] The process of performing feature segmentation analysis on all the video pictures through the preset convolutional neural network to obtain the plurality of shot data ...

Embodiment 3

[0082] As shown in Figure 1, a video description data processing method includes the following steps:

[0083] Import a video sequence and divide it into a plurality of video pictures;

[0084] Perform feature segmentation analysis on all the video pictures through a preset convolutional neural network to obtain a plurality of shot data sets;

[0085] Perform merging analysis on all the shot data sets through the preset convolutional neural network to obtain a plurality of merged shot data sets;

[0086] Perform feature extraction on the plurality of merged shot data sets through the preset convolutional neural network to obtain a video description feature sequence;

[0087] Convert the video description feature sequence into video description information through a preset video description model;

[0088] The process of performing feature segmentation analysis on all the video pictures through the preset convolutional neural network to obtain the plurality of shot data ...
