Visual feature segmentation semantic detection method and system in video description

A visual feature segmentation and video description technology, applied in the field of deep learning video understanding. It addresses the problem that local semantic information is easily lost, which degrades the video text description result and hinders applications such as security monitoring and short video content review. The stated effect is improved work efficiency and model performance.

Embodiment 1

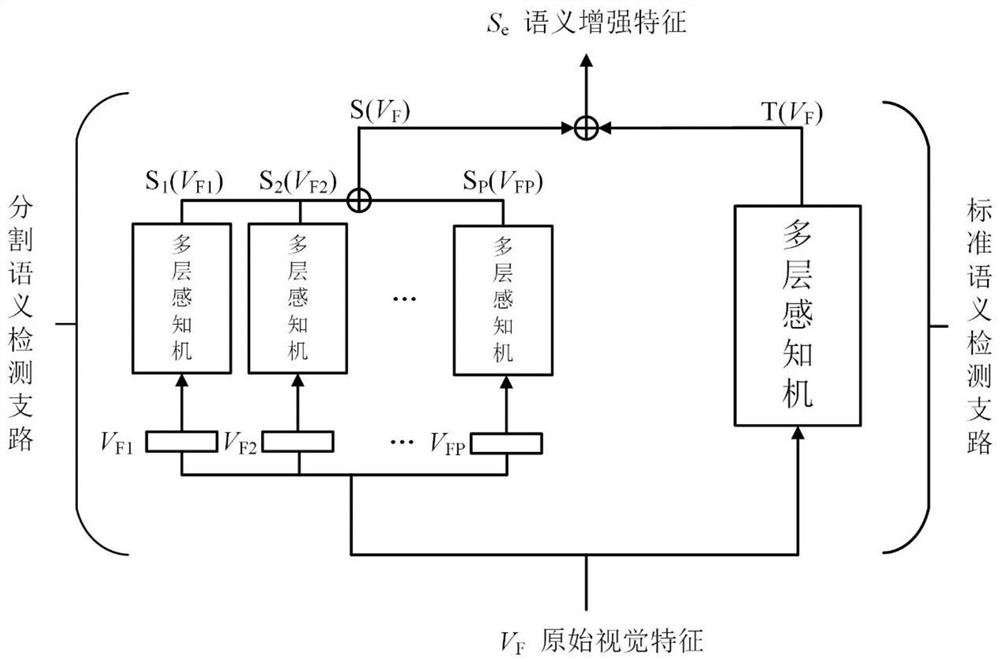

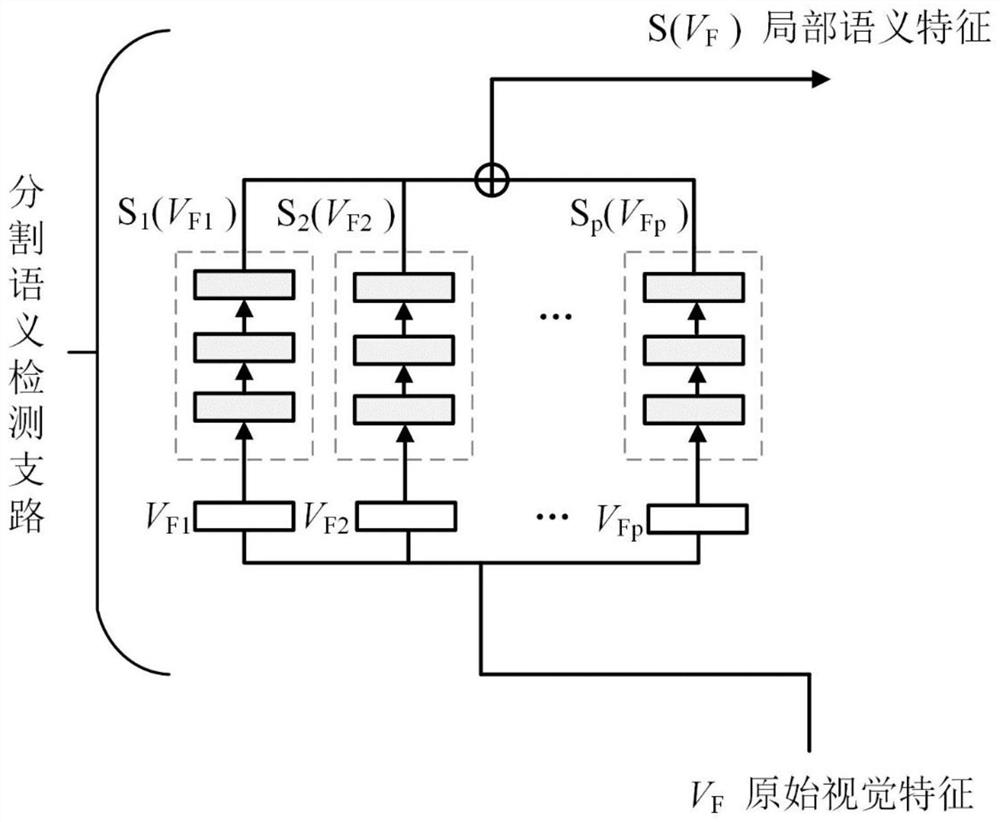

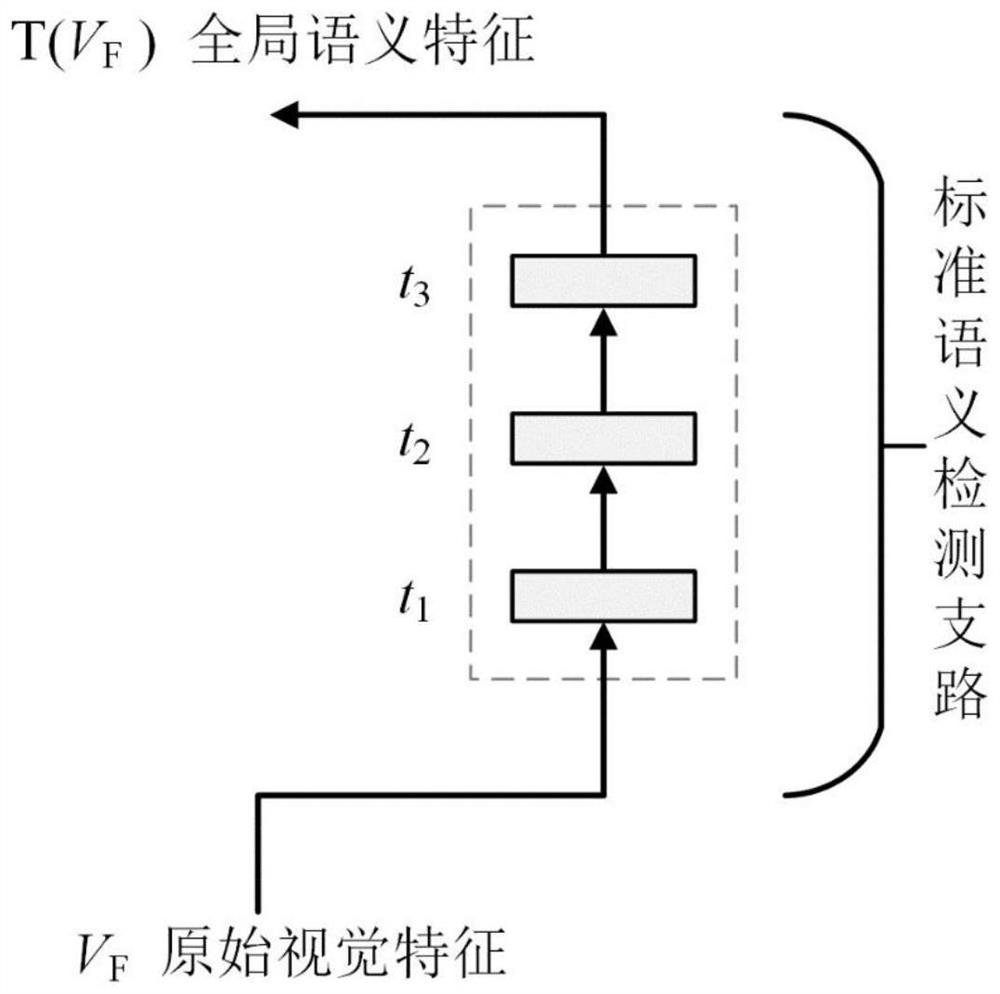

[0036] This embodiment proposes a semantic detection method for visual feature segmentation in video description. As shown in Figures 1-3, the specific implementation steps are as follows:

[0037] Step 1: Take the original visual feature vector $V_F$, obtained by applying convolution processing to the video, as input and read it. $V_F$ has the form $V_F = \{v_1, v_2, \dots, v_Q\}$, a feature vector of size 1*Q.

[0038] Step 2: In the segmentation semantic detection branch, the original visual feature $V_F$ from Step 1 is evenly divided into p parts, giving p visual segmentation features. As shown in formula (1) and formula (2), segmentation yields the visual segmentation features $V_{F1}, V_{F2}, \dots, V_{Fp}$.

[0039] $\{V_{F1}, V_{F2}, \dots, V_{Fp}\} = F_a(V_F)$ (1)

[0040] $q = Q / p$ (2)

[0041] Here, $F_a$ is the uniform partition function and $Q$ is the dimension of the visual feature $V_F$. Dividing $V_F$ evenly into p parts yields the visual segmentation features $V_{Fi}$, each of dimension q, and the specific forms of vi...
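The uniform partition in Step 2 can be illustrated with a minimal sketch. This is not the patent's implementation: the function name `split_visual_feature`, the use of NumPy, and the choice to raise an error when Q is not divisible by p are all assumptions made for illustration. The source only states that $V_F$ is divided evenly with q = Q / p.

```python
from typing import List

import numpy as np


def split_visual_feature(v_f: np.ndarray, p: int) -> List[np.ndarray]:
    """Evenly split a 1*Q feature vector into p segments of dimension q = Q / p.

    Hypothetical helper: the name and the error handling are assumptions,
    not taken from the source.
    """
    v_f = np.asarray(v_f).reshape(-1)  # flatten a 1*Q row vector to length Q
    Q = v_f.shape[0]
    if p <= 0 or Q % p != 0:
        # Assumption: the source does not say how a non-divisible Q is handled.
        raise ValueError(f"Q={Q} must be divisible by a positive p, got p={p}")
    q = Q // p  # formula (2): q = Q / p
    # Segment i (0-based) covers elements [i*q, (i+1)*q) of V_F.
    return [v_f[i * q:(i + 1) * q] for i in range(p)]


# Toy example: Q = 12 features split into p = 3 segments of q = 4 each.
V_F = np.arange(12, dtype=np.float32)
segments = split_visual_feature(V_F, p=3)
for i, seg in enumerate(segments, start=1):
    print(f"V_F{i}: shape={seg.shape}")
```

Concatenating the returned segments in order reproduces the original vector, so the split itself discards no information. Each segment can then be passed separately to whatever per-segment semantic detection the method applies.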

Embodiment 2

[0108] Outdoor security monitoring scene

[0109] Applying this embodiment to outdoor security monitoring scenes yields video semantic features with strong expressive ability, from which a text description is generated. This text information can help prevent dangerous outdoor accidents and can improve the efficiency of reviewing surveillance video, as shown in Figure 5.

Embodiment 3

[0111] Short video content review

[0112] Applying this embodiment to a short video content review system yields video semantic features with strong expressive ability, from which a text description is generated. This text information can help keep harmful material, such as illegal content, out of short videos, and supports a healthy network environment. The review of short video content is shown in Figure 6.
