Visual navigation method based on deep reinforcement learning and direction estimation

A visual navigation technique that combines deep reinforcement learning with direction estimation. It addresses problems such as abnormal navigation and the inability of intelligent robots to build maps quickly.


Embodiment Construction

[0050] The present invention will be further described in detail below in conjunction with the embodiments and the accompanying drawings, but the embodiments of the present invention are not limited thereto.

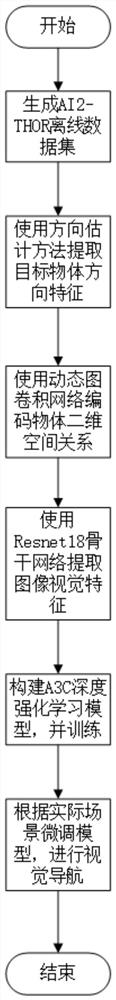

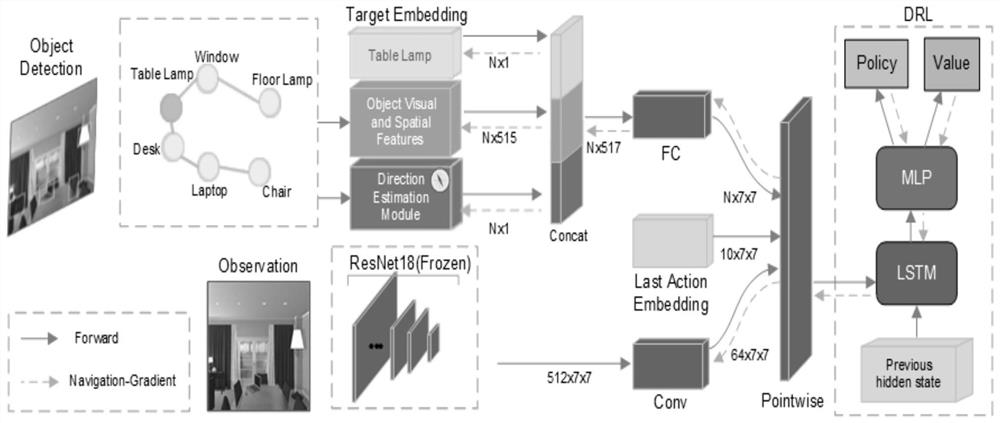

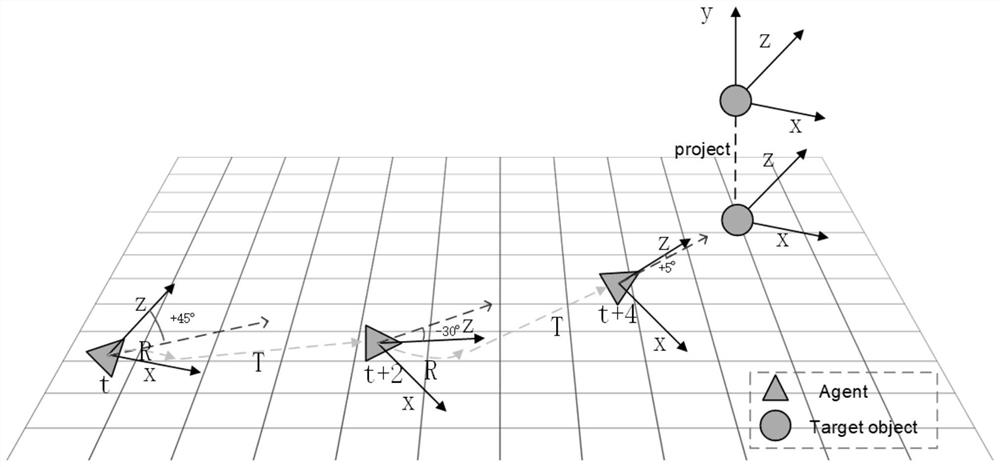

[0051] As shown in Figure 1, the visual navigation method based on deep reinforcement learning and direction estimation provided in this embodiment includes the following steps:

[0052] 1) Generate the offline data set from the AI2-THOR simulation platform: a script that simulates robot movement collects data from the AI2-THOR simulation environment and writes it to an offline data set. The offline data set contains RGB-D images and robot position information. Each RGB-D image consists of an RGB image and a depth image (the "D" stands for depth). The specific process is as follows:

[0053] 1.1) Download the AI2-THOR Python package and use the corresponding command to download 30 AI2-THOR simulation scenes. Take 25 of them as the training set and the remaining 5 as th...
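
The 25/5 scene split and the contents of one offline record described above can be sketched as follows. This is a minimal illustration, not the patent's script. The scene names (`FloorPlan1` to `FloorPlan30`), the random shuffle with a fixed seed, the `OfflineSample` field names, and the label `held_out_scenes` are all assumptions: the source does not say which scenes are used or how the 25 are chosen, and its description of the remaining 5 is cut off.

```python
import random
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class OfflineSample:
    """One record of the offline data set (field names are hypothetical)."""
    scene: str                            # scene the sample was collected in
    rgb_path: str                         # path to the saved RGB image
    depth_path: str                       # path to the saved depth image
    position: Tuple[float, float, float]  # robot position (x, y, z)


def split_scenes(
    scenes: List[str], n_train: int = 25, seed: int = 0
) -> Tuple[List[str], List[str]]:
    """Shuffle scene names reproducibly and split them into two disjoint lists.

    The random shuffle is an assumption; the source only says that 25 of the
    30 scenes form the training set.
    """
    if n_train > len(scenes):
        raise ValueError("n_train exceeds the number of scenes")
    shuffled = list(scenes)               # copy so the caller's list is untouched
    random.Random(seed).shuffle(shuffled)
    return shuffled[:n_train], shuffled[n_train:]


# Assumed scene names; replace with the 30 scenes actually downloaded.
scenes = [f"FloorPlan{i}" for i in range(1, 31)]
train_scenes, held_out_scenes = split_scenes(scenes)

# Example record with placeholder paths and a placeholder position.
sample = OfflineSample(
    scene=train_scenes[0],
    rgb_path="rgb/000000.png",
    depth_path="depth/000000.png",
    position=(0.0, 0.0, 0.0),
)
```

Fixing the seed keeps the split reproducible across runs, so training and evaluation always see the same scenes.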
