Method for self-adaptively learning implicit user trust behavior based on a deep graph convolutional network

A technology combining adaptive learning and convolutional networks, applied in the field of adaptively learning implicit user trust behavior. It addresses problems such as degraded algorithm performance and achieves the effects of improving recommendation performance and strengthening connections.

Detailed Description of the Embodiments

[0046] To better understand the objects, structure, and functions of the present invention, the method for adaptively learning implicit user trust behavior based on a deep graph convolutional network is described in further detail below with reference to the accompanying drawings.

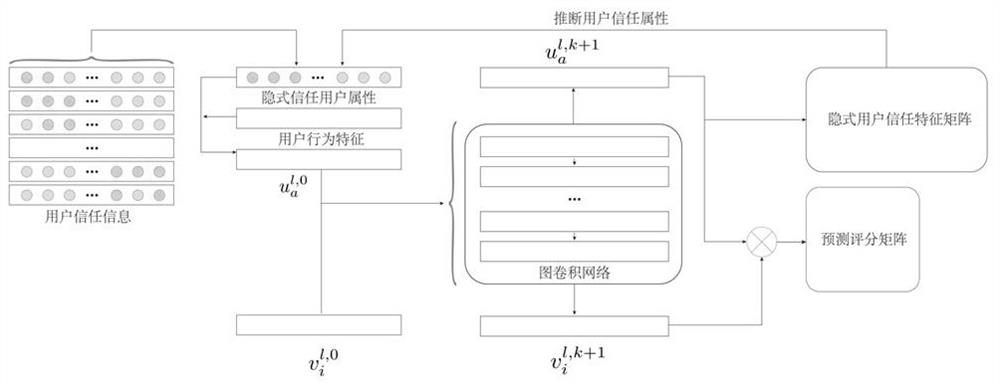

[0047] As shown in Figure 1, the method for adaptively learning implicit user trust behavior based on a deep graph convolutional network lets a deep neural network learn users' trust behavior by filtering out unreliable trust information, so that the model reflects the logic behind users' implicit behavior. The model is divided into three parts. The first part filters out unreliable trust information using each user's historical behavior, which alleviates the noise problem in trust data. The second part is the graph convolution learning part: a deep graph convolutional network learns from the users' rating data and trust features and outputs the user features. The third part learns reliable user information using an adaptive learning...
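The three-part pipeline in [0047] can be illustrated with a small pure-Python sketch. It is not the patented method: the text above does not specify the reliability rule, the exact convolution, or how the adaptive weight is learned. The rating-agreement score with a 0.5 threshold, the single mean-aggregation convolution step with a self loop, and the sigmoid gate over concatenated embeddings are all hypothetical choices, as are the names `agreement`, `filter_trust`, `propagate`, `adaptive_fuse`, and `gate_w`. In a real model the embeddings and gate weights would be trained rather than fixed by hand.

```python
import math
from typing import Dict, List, Tuple

Vec = List[float]
Ratings = Dict[int, Dict[int, float]]  # user id -> {item id: rating}


def agreement(ru: Dict[int, float], rv: Dict[int, float],
              rating_range: float = 4.0) -> float:
    """Hypothetical reliability score: 1 minus the normalized mean absolute
    rating gap on co-rated items (0.0 if the users share no items)."""
    common = ru.keys() & rv.keys()
    if not common:
        return 0.0
    gap = sum(abs(ru[i] - rv[i]) for i in common) / len(common)
    return 1.0 - gap / rating_range


def filter_trust(trust: List[Tuple[int, int]], ratings: Ratings,
                 threshold: float = 0.5) -> List[Tuple[int, int]]:
    """Part 1 (assumed rule): keep a trust edge only if the two users'
    rating histories agree at least `threshold`."""
    return [(u, v) for u, v in trust
            if agreement(ratings.get(u, {}), ratings.get(v, {})) >= threshold]


def mean(vectors: List[Vec]) -> Vec:
    """Element-wise average of equal-length vectors."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def propagate(self_emb: Dict[int, Vec], neighbors: Dict[int, List[int]],
              neighbor_emb: Dict[int, Vec]) -> Dict[int, Vec]:
    """Part 2: one mean-aggregation graph-convolution step (self loop plus
    neighbors); stacking several steps would give a deeper network."""
    return {u: mean([h] + [neighbor_emb[v] for v in neighbors.get(u, [])])
            for u, h in self_emb.items()}


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def adaptive_fuse(h_rating: Vec, h_trust: Vec,
                  gate_w: Vec) -> Tuple[float, Vec]:
    """Part 3 (hypothetical gate): alpha = sigmoid(w . [h_rating; h_trust]),
    then a convex mix of the trust-view and rating-view embeddings."""
    alpha = sigmoid(sum(w * x for w, x in zip(gate_w, h_rating + h_trust)))
    fused = [alpha * t + (1.0 - alpha) * r for r, t in zip(h_rating, h_trust)]
    return alpha, fused


# Toy data: user 3 rates opposite to user 1, so the edge (1, 3) is dropped.
ratings = {1: {10: 5, 11: 4}, 2: {10: 5, 11: 5}, 3: {10: 1, 11: 1}}
trust = [(1, 2), (1, 3)]
reliable = filter_trust(trust, ratings)
print(reliable)  # [(1, 2)]

user_emb = {1: [1.0, 0.0], 2: [0.0, 1.0], 3: [1.0, 1.0]}
item_emb = {10: [1.0, 1.0], 11: [0.0, 0.0]}
rated = {u: list(r) for u, r in ratings.items()}
trusted: Dict[int, List[int]] = {}
for u, v in reliable:
    trusted.setdefault(u, []).append(v)

h_rating = propagate(user_emb, rated, item_emb)   # rating-graph view
h_trust = propagate(user_emb, trusted, user_emb)  # filtered trust-graph view
alpha, fused = adaptive_fuse(h_rating[1], h_trust[1], gate_w=[0.0] * 4)
print(alpha, fused)
```

With an all-zero `gate_w` the gate returns 0.5, so user 1's fused vector is simply the average of its rating-view and trust-view embeddings; a trained gate would instead shift weight, per user, toward whichever view is more informative.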

© 2025 PatSnap. All rights reserved.