Video human body behavior recognition method based on CNN and accumulated hidden layer state ConvLSTM

A video human behavior recognition method based on a CNN and an accumulated hidden layer state ConvLSTM, applied in the fields of character and pattern recognition, neural learning methods, and instruments. It addresses the lack of temporal information modeling ability, reduced data utilization, and increased computing cost. Its effects are overcoming convergence difficulties, improving recognition accuracy, and saving time and cost.



Embodiment Construction

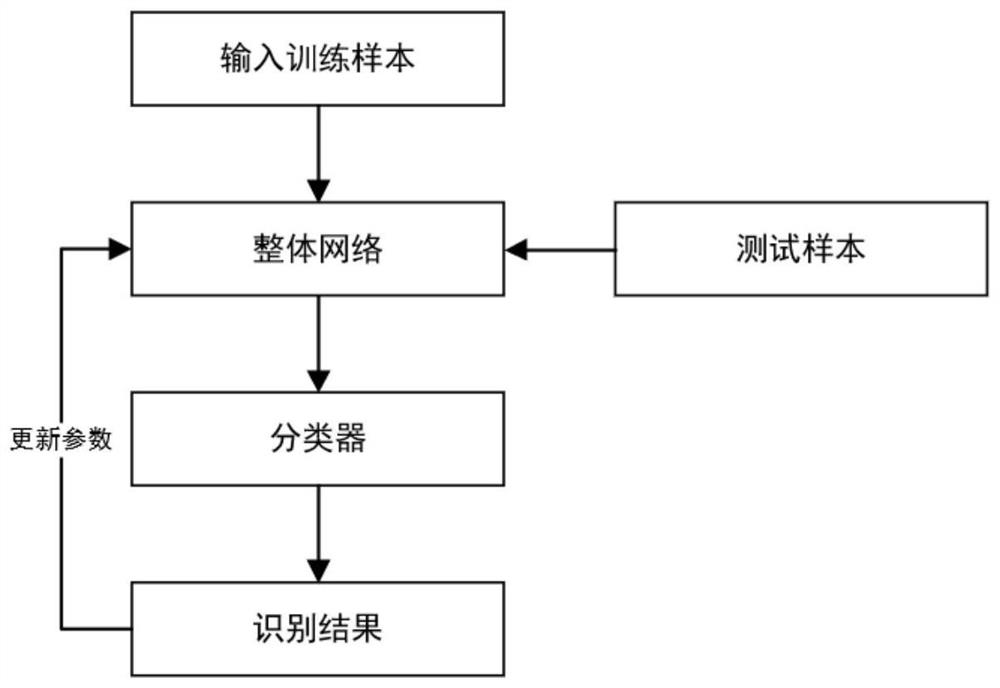

[0027] See Figure 1, which is a flowchart of the method of the present invention.

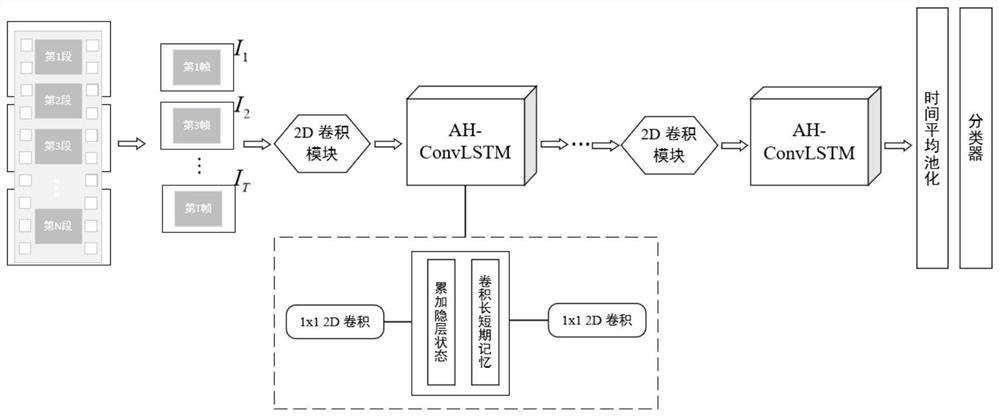

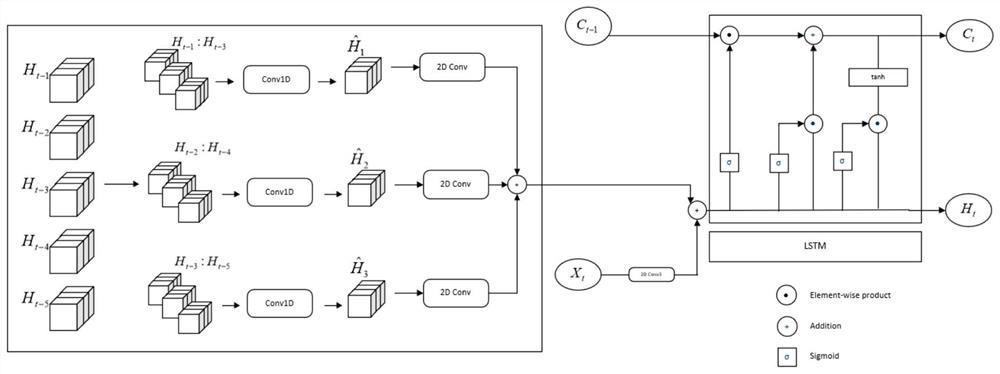

[0028] The overall network architecture of the present invention is realized as follows. First, a two-dimensional convolutional neural network (2D CNN) pre-trained on ImageNet is used as the backbone model, and the accumulated hidden layer state ConvLSTM (AH-ConvLSTM) module is inserted into it to build the overall network. Second, a data sample set containing video data and labels is obtained and divided into a training sample set and a test sample set; each video is sampled with the segment sampling method, and the sampled frames are fed to the overall network as input. A learning rate is then set and the overall network is trained on the training sample set, with the AH-ConvLSTM module trained at 5 times the set learning rate to accelerate convergence of the overall network. A classifier generates a recognition score, and the network parameters are updated through backpropaga...
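To illustrate the segment sampling step, here is a minimal sketch under an assumed TSN-style scheme. The video is split into equal-length segments and one frame is taken per segment: a random frame during training and the centre frame at test time. The function `segment_sample`, its parameters, and the segment count used in the example are hypothetical. The text above does not state how many segments are used or how the frame within each segment is chosen.

```python
import random
from typing import List, Optional


def segment_sample(num_frames: int, num_segments: int, train: bool = True,
                   rng: Optional[random.Random] = None) -> List[int]:
    """Return one frame index per segment (hypothetical TSN-style sampler).

    Assumption: the video is split into `num_segments` equal-length segments;
    training draws a random frame from each segment, testing takes the centre
    frame so that evaluation is deterministic.
    """
    if num_frames <= 0 or num_segments <= 0:
        raise ValueError("num_frames and num_segments must be positive")
    rng = rng or random.Random()
    seg_len = num_frames / num_segments  # fractional when frames don't divide evenly
    indices = []
    for k in range(num_segments):
        start = int(k * seg_len)
        # Guarantee at least one frame per segment, even for very short videos.
        end = min(max(start + 1, int((k + 1) * seg_len)), num_frames)
        if train:
            indices.append(rng.randrange(start, end))  # random frame in [start, end)
        else:
            indices.append((start + end) // 2)  # centre frame of the segment
    return indices


if __name__ == "__main__":
    # Hypothetical setting: 8 segments from a 120-frame clip, whose frames
    # would then be fed to the 2D CNN backbone.
    print(segment_sample(120, 8, train=False))
```

With `train=False` the same indices are returned on every call. For example, `segment_sample(30, 3, train=False)` gives `[5, 15, 25]`. When a video has fewer frames than segments, frames are repeated rather than raising an error, so every clip yields a fixed-length input.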
