Face image animation method and system based on action and voice features

A technology involving face images and voice features, applied in the field of face image animation methods and systems. It addresses the problems of poor image quality and insufficient output resolution, and provides multiple driving modes while avoiding heavy use of graphics card resources.

Examples

Embodiment 1

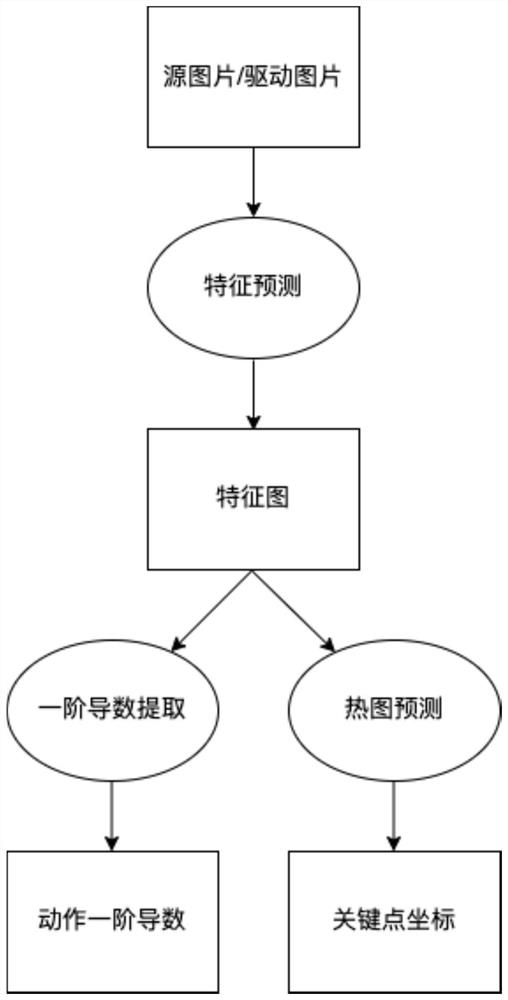

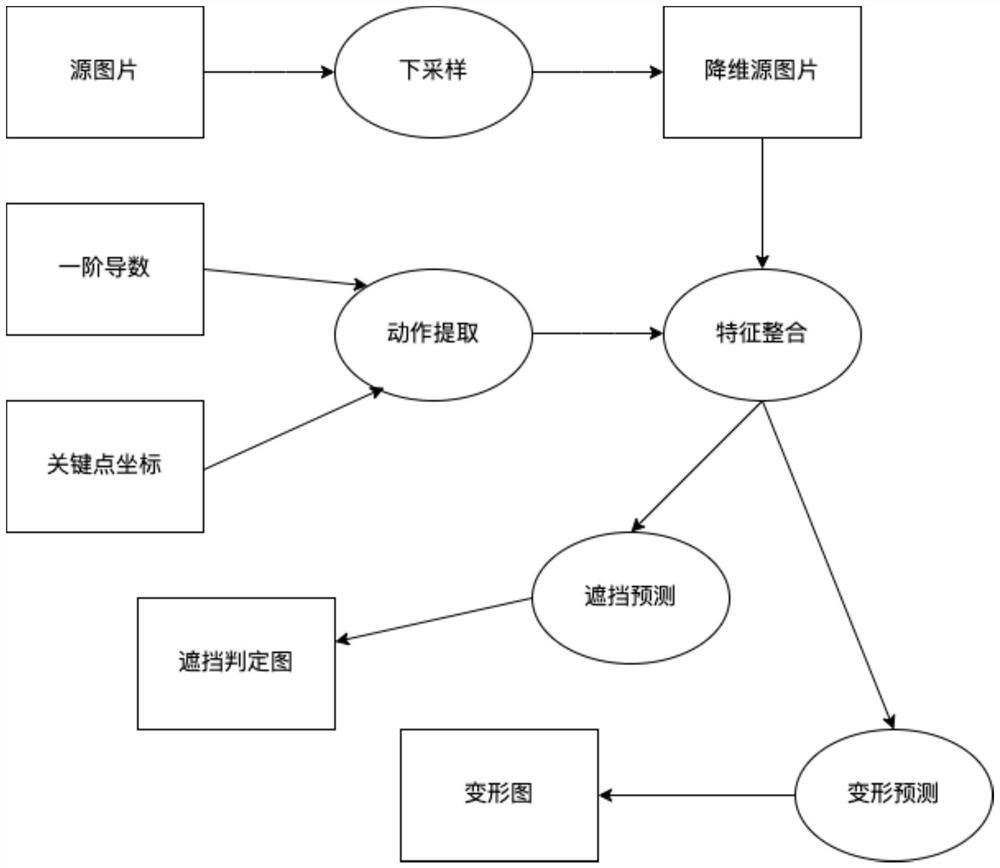

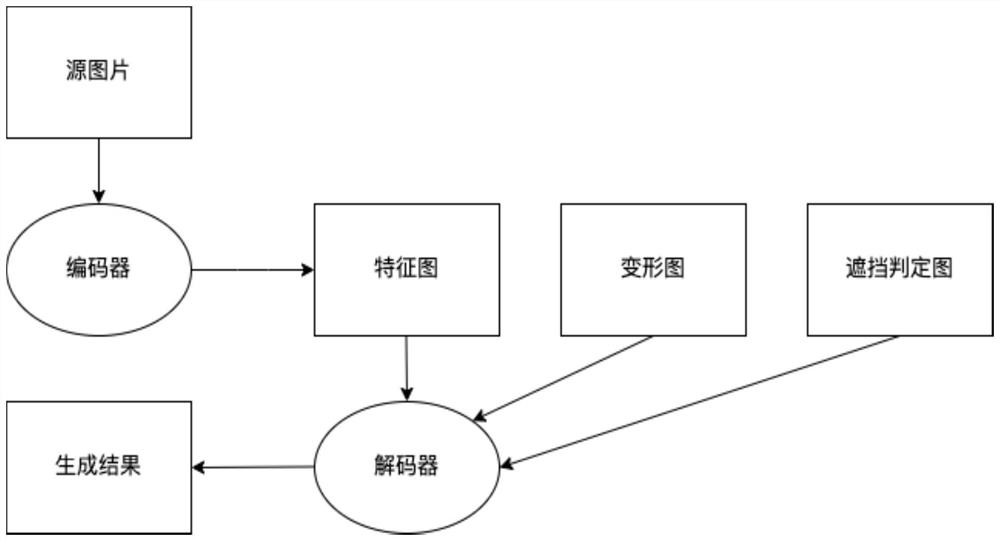

[0036] Referring to Figures 1-4, the present invention provides a facial image animation method based on action and voice features, comprising an image driving mode and a voice driving mode. In the image driving mode, given a video of one person talking, that person's motion is transferred completely to another face: the talking video of one face and a face image of another person are input, and a dynamic video is obtained of the other person, who originally appeared only in a static picture. In the voice driving mode, the model is trained for a specific person; when the features of another person are used for prediction, they are first converted in one step into the voice features of the trained person, and these voice features are then converted into face features to obtain the face image animation.
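
The image driving mode amounts to a per-frame loop over the driving video. The following is a minimal sketch of that data flow only. It assumes two hypothetical callables, `detect_keypoints` and `generate_frame`, standing in for the trained networks; the text here does not name or specify them.

```python
from typing import Any, Callable, List, Sequence, Tuple

Keypoints = List[Tuple[float, float]]


def animate_from_video(
    source_image: Any,
    driving_frames: Sequence[Any],
    detect_keypoints: Callable[[Any], Keypoints],
    generate_frame: Callable[[Any, Keypoints, Keypoints], Any],
) -> List[Any]:
    """Hypothetical image-driving loop: returns one generated frame per driving frame."""
    # The static source face is analysed once; its appearance is reused for every frame.
    source_kp = detect_keypoints(source_image)
    video = []
    for frame in driving_frames:
        # Keypoints of the talking face in this frame carry the motion to transfer.
        driving_kp = detect_keypoints(frame)
        video.append(generate_frame(source_image, source_kp, driving_kp))
    return video


if __name__ == "__main__":
    # Toy stand-ins: an "image" is just a list of (x, y) points, and the
    # "generator" simply returns the driving keypoints it was given.
    source = [(0.0, 0.0)]
    driving = [[(0.1, 0.0)], [(0.2, 0.1)]]
    out = animate_from_video(source, driving, lambda img: list(img), lambda s, skp, dkp: dkp)
    print(len(out))  # 2
```

Passing the networks in as callables keeps the loop independent of any particular model: the still source face is analysed once, and only the driving keypoints change from frame to frame.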

[0037] Note that the image driving mode of the present invention is fundamentally different from the popular DeepFake face-swapping technology. The face-swapping technology is t...

Embodiment 2

[0051] This embodiment provides a facial image animation system based on action and voice features, comprising an image driving module and a voice driving module, wherein:

[0052] The image driving module takes as input the talking video of one face and a face image of another person, and outputs a dynamic video of that other person, who originally appeared only in a static picture;

[0053] The voice driving module is trained for a specific person. When the features of another person are used for prediction, they are converted in one step into the voice features of the trained person, and these voice features are then transformed into face features to obtain the face image animation.
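
The text does not say how the one-step feature conversion is performed. One simple way to map another speaker's voice features onto the trained speaker's feature distribution is per-dimension mean and variance matching. The sketch below assumes that technique, plus a hypothetical linear head (`voice_to_face`, with made-up `weights`) standing in for the learned voice-to-face mapping. Feature frames are plain lists of floats, and all function names are illustrative.

```python
import statistics
from typing import List, Sequence, Tuple

Frame = List[float]
Stats = Tuple[List[float], List[float]]


def feature_stats(frames: Sequence[Frame]) -> Stats:
    """Per-dimension mean and population standard deviation of a speaker's frames."""
    dims = list(zip(*frames))
    means = [statistics.fmean(d) for d in dims]
    # A constant dimension has zero spread; use 1.0 to avoid dividing by zero.
    stds = [statistics.pstdev(d) or 1.0 for d in dims]
    return means, stds


def convert_to_trained_speaker(frames: Sequence[Frame], source: Stats, target: Stats) -> List[Frame]:
    """Assumed one-step conversion: standardise with the new speaker's stats,
    then rescale with the trained speaker's stats."""
    src_mu, src_sd = source
    tgt_mu, tgt_sd = target
    return [
        [(x - m) / s * ts + tm for x, m, s, tm, ts in zip(frame, src_mu, src_sd, tgt_mu, tgt_sd)]
        for frame in frames
    ]


def voice_to_face(frame: Frame, weights: List[List[float]]) -> Frame:
    """Hypothetical linear head: one weight row per face-feature dimension."""
    return [sum(w * x for w, x in zip(row, frame)) for row in weights]


if __name__ == "__main__":
    new_speaker = [[1.0], [3.0]]        # mean 2, std 1
    trained_speaker = [[10.0], [14.0]]  # mean 12, std 2
    converted = convert_to_trained_speaker(
        new_speaker, feature_stats(new_speaker), feature_stats(trained_speaker)
    )
    print(converted)  # [[10.0], [14.0]]
```

Under this assumption, the trained speaker's statistics would be computed once from the training data and the new speaker's from their own recordings, so the downstream voice-to-face model only sees features in the distribution it was trained on.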

[0054] The image driving module includes a key point detection unit, an action extraction unit and an image generation unit; the key point detection unit takes as input one frame of the target person's image and the driving video, respectively, and obtains multiple key points and their correspon...
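
The excerpt is truncated before it says how the action extraction unit turns key points into motion. A common choice in keypoint-based animation models such as the First Order Motion Model is relative motion transfer: each source key point is displaced by how far the corresponding driving key point has moved since the first driving frame. The sketch below assumes that technique, uses only 2-D key point positions (no local Jacobians or scale adaptation), and the function name is hypothetical.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def transfer_relative_motion(
    source_kp: List[Point],
    driving_kp: List[Point],
    driving_kp_initial: List[Point],
) -> List[Point]:
    """Shift each source keypoint by how far the matching driving keypoint
    has moved since the first driving frame (relative motion transfer)."""
    if not (len(source_kp) == len(driving_kp) == len(driving_kp_initial)):
        raise ValueError("all keypoint lists must have the same length")
    return [
        (sx + (dx - ix), sy + (dy - iy))
        for (sx, sy), (dx, dy), (ix, iy) in zip(source_kp, driving_kp, driving_kp_initial)
    ]


if __name__ == "__main__":
    source = [(0.5, 0.5)]        # e.g. a mouth-corner keypoint on the still face
    first_frame = [(0.0, 0.0)]   # same keypoint in the first driving frame
    current = [(0.25, -0.25)]    # same keypoint in the current driving frame
    print(transfer_relative_motion(source, current, first_frame))  # [(0.75, 0.25)]
```

Using displacements relative to the first frame, rather than copying the driving key points directly, keeps the target face's own geometry and transfers only the motion, which matters when the driving person and the target person have differently shaped faces.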
