ACT-Apriori algorithm based on self-encoding technology

A self-encoding technique for the Apriori algorithm, applicable to computing, database models, instruments, and similar fields. It addresses the growing computational burden and combinatorial explosion that arise over the global database, eases the difficulty of establishment and updating, and reduces the dimensionality of the data.


Examples

Example 1

[0065] Suppose the given transaction database is as shown in Table 1.

[0066] Table 1 The original transaction database

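[0067]

| TID | Items |
| --- | --- |
| 1 | A, C, E, F, H |
| 2 | A, B, E, F, G |
| 3 | A, B, C, D, F, G |
| 4 | A, B, C, F, G |
| 5 | A, B, C, E |
| 6 | A, B, F, G |
| 7 | A, C, E, F |
| 8 | A, B, C, F, G |
| 9 | A, C, D |
| 10 | C, B, G |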

[0068] There are 10 transactions in the transaction database. First, the support count of each item in the entire transaction database is calculated as shown in Table 2.

[0069] Table 2 Support count of each item

[0070]

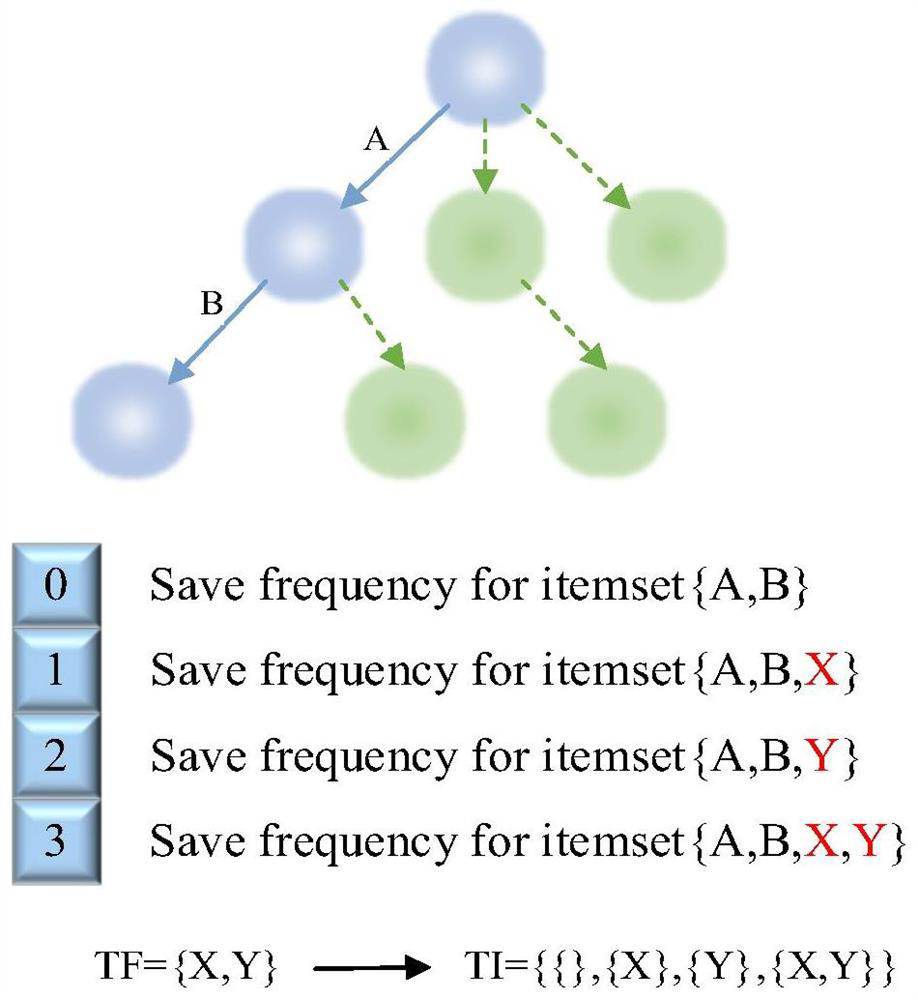
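The counting step described above can be sketched in Python as follows. The transactions are copied from Table 1; the names `TRANSACTIONS` and `item_support_counts` are illustrative and do not come from the patent text, and the counts are derived here from Table 1 because Table 2 is only available as an image.

```python
from collections import Counter

# Transactions copied from Table 1 (TID -> set of items).
TRANSACTIONS = {
    1: set("ACEFH"),
    2: set("ABEFG"),
    3: set("ABCDFG"),
    4: set("ABCFG"),
    5: set("ABCE"),
    6: set("ABFG"),
    7: set("ACEF"),
    8: set("ABCFG"),
    9: set("ACD"),
    10: set("CBG"),
}


def item_support_counts(transactions):
    """Count how many transactions contain each individual item."""
    counts = Counter()
    for items in transactions.values():
        counts.update(items)  # each item is counted once per transaction
    return counts


counts = item_support_counts(TRANSACTIONS)
# Print items from most to least frequent (ties broken alphabetically).
for item, n in sorted(counts.items(), key=lambda kv: (-kv[1], kv[0])):
    print(item, n)
# prints: A 9, C 8, B 7, F 7, G 6, E 4, D 2, H 1 (one pair per line)
```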

[0071] Taking NS=2, A and C are the items with the highest support counts (i.e., the high-frequency items), so the item list is TF={A,C}. By definition, the itemset list TI consists of all possible subsets of TF, so 2^2 = 4 itemsets can be created from TF: TI={{}, {A}, {C}, {A, C}}. Second, delete the items contained in TF from the original transaction database, and replace their positions in the original database with a self-encoding bit vector to ...
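A minimal sketch of this preprocessing step, under stated assumptions: `top_items`, `all_subsets`, and `encode` are hypothetical helper names, and the bit-vector layout (one bit per TF item, in TF order) is a guess, since the description of the self-encoding is truncated in this excerpt.

```python
from collections import Counter
from itertools import combinations

# Transactions copied from Table 1 (TID -> set of items).
TRANSACTIONS = {
    1: set("ACEFH"), 2: set("ABEFG"), 3: set("ABCDFG"), 4: set("ABCFG"),
    5: set("ABCE"), 6: set("ABFG"), 7: set("ACEF"), 8: set("ABCFG"),
    9: set("ACD"), 10: set("CBG"),
}
NS = 2  # number of high-frequency items to pull out, as in the example


def top_items(transactions, ns):
    """Return the ns items with the highest support counts."""
    counts = Counter()
    for items in transactions.values():
        counts.update(items)
    ranked = sorted(counts, key=lambda item: (-counts[item], item))
    return ranked[:ns]


def all_subsets(items):
    """Return every subset of items, including the empty set."""
    return [frozenset(c)
            for r in range(len(items) + 1)
            for c in combinations(items, r)]


def encode(transactions, tf):
    """ASSUMED encoding: remove TF items, keep one presence bit per TF item."""
    reduced = {}
    for tid, items in transactions.items():
        bits = tuple(int(item in items) for item in tf)
        reduced[tid] = (bits, items - set(tf))
    return reduced


TF = top_items(TRANSACTIONS, NS)   # ['A', 'C']
TI = all_subsets(TF)               # 2**2 = 4 itemsets
RDB = encode(TRANSACTIONS, TF)     # e.g. RDB[10] == ((0, 1), {'B', 'G'})
```

Under this assumed layout, transaction 10 (C, B, G) becomes the bit vector (0, 1) plus the remaining items {B, G}: A is absent, C is present.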

Example 2

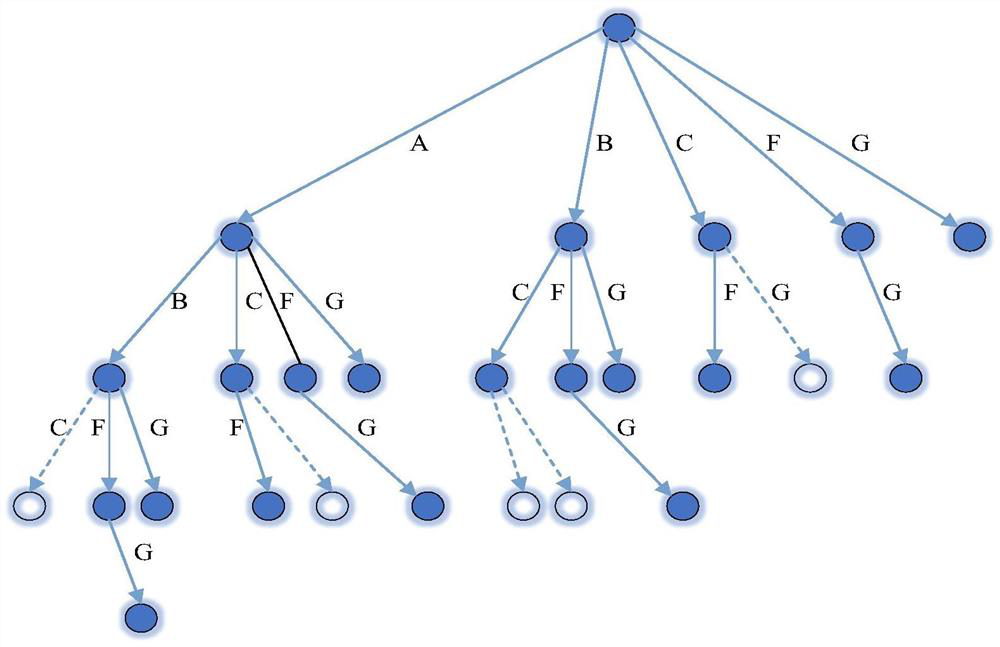

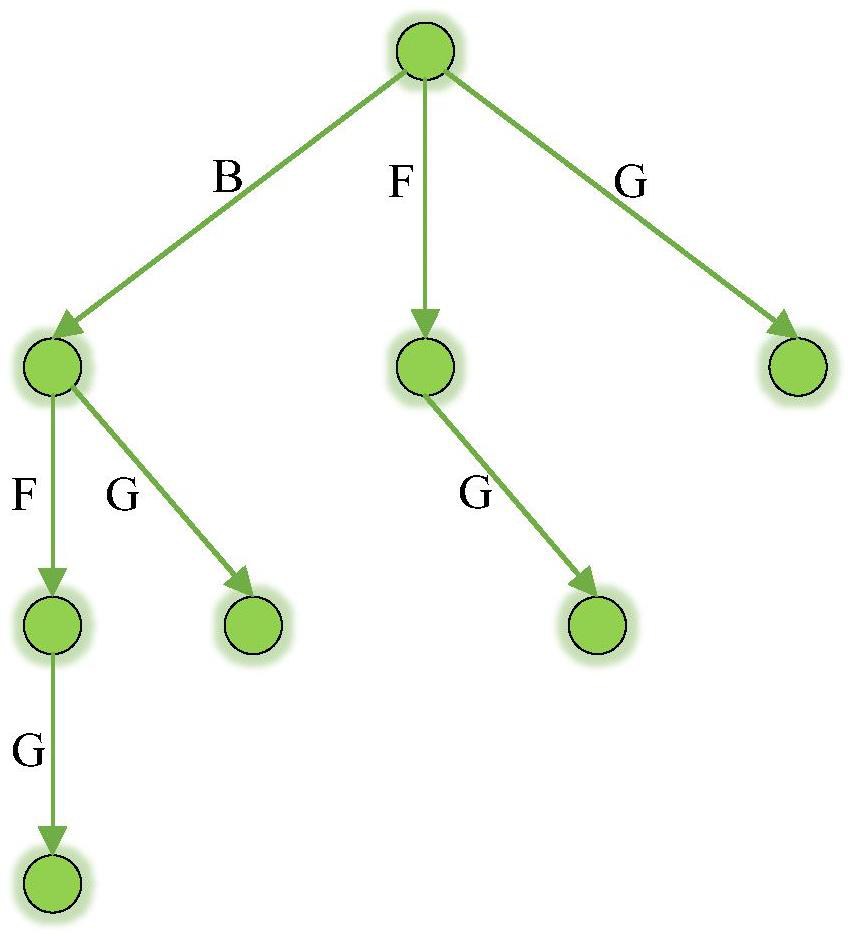

[0086] This example shows the frequent itemset generation stage, using the RDB, TF, and TI obtained in Example 1. The support threshold is set to 50%. In the first pass, the ACT-Apriori algorithm creates the candidate itemsets c[1] = {B}, {D}, {E}, {F}, {G}, {H}. Then, using the transaction bits (SNT) computed in the data preprocessing stage, the algorithm computes the support of each candidate itemset and of its combination with every entry in the TI list. For example, for the candidate itemset {B}, it computes the support counts of {B}, {B, A}, {B, C}, and {B, A, C}, where TI = {{}, {A}, {C}, {A, C}}. Tables a1 to a6 show the results for k=1. All itemsets whose support is at least the minimum support threshold are retained (for instance, {B, C} occurs in 5 of the 10 transactions in Table 1, exactly 50%, and is kept). The itemsets kept at the end of pass 1 are therefore: {B}, {B, A}, {B, C}, {F}, {F, A}, {F, C}, {F, A, C}, {G}, {G, A}.
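The first pass can be checked with a short Python sketch. This is an illustration only: it counts support directly against the original transactions of Table 1 rather than against the encoded RDB and SNT bits, whose exact layout is not given in this excerpt, and the names `subsets` and `pass_one` are hypothetical.

```python
from itertools import combinations
from math import ceil

# Transactions copied from Table 1, in TID order.
TRANSACTIONS = [
    set("ACEFH"), set("ABEFG"), set("ABCDFG"), set("ABCFG"), set("ABCE"),
    set("ABFG"), set("ACEF"), set("ABCFG"), set("ACD"), set("CBG"),
]
TF = ["A", "C"]      # high-frequency items from Example 1
MIN_SUPPORT = 0.5    # 50% support threshold


def subsets(items):
    """Yield every subset of items as a tuple, starting with the empty one."""
    for r in range(len(items) + 1):
        yield from combinations(items, r)


def pass_one(transactions, tf, min_support):
    """Combine each non-TF item with every TI entry and keep frequent ones."""
    min_count = ceil(min_support * len(transactions))
    candidates = sorted(set().union(*transactions) - set(tf))
    kept = []
    for item in candidates:
        for extra in subsets(tf):
            itemset = {item} | set(extra)
            # Support count: transactions that contain the whole itemset.
            count = sum(itemset <= t for t in transactions)
            if count >= min_count:
                kept.append((itemset, count))
    return kept


kept = pass_one(TRANSACTIONS, TF, MIN_SUPPORT)
for itemset, count in kept:
    print(sorted(itemset), count)
```

With the Table 1 data this retains nine itemsets, the same ones listed at the end of pass 1: {B}, {B, A}, {B, C}, {F}, {F, A}, {F, C}, {F, A, C}, {G}, and {G, A}.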

[0087] Table a1 Candidate frequent 1-itemset generation table (c[1]={B})

[0088]

[0089] Table a2 ca...
