Data processing apparatus

A data processing apparatus and data structure, applied in the fields of electrical digital data processing, special data processing applications, and data recording, that addresses problems such as the difficulty of summarizing a user's moving images.

Examples

Example 1

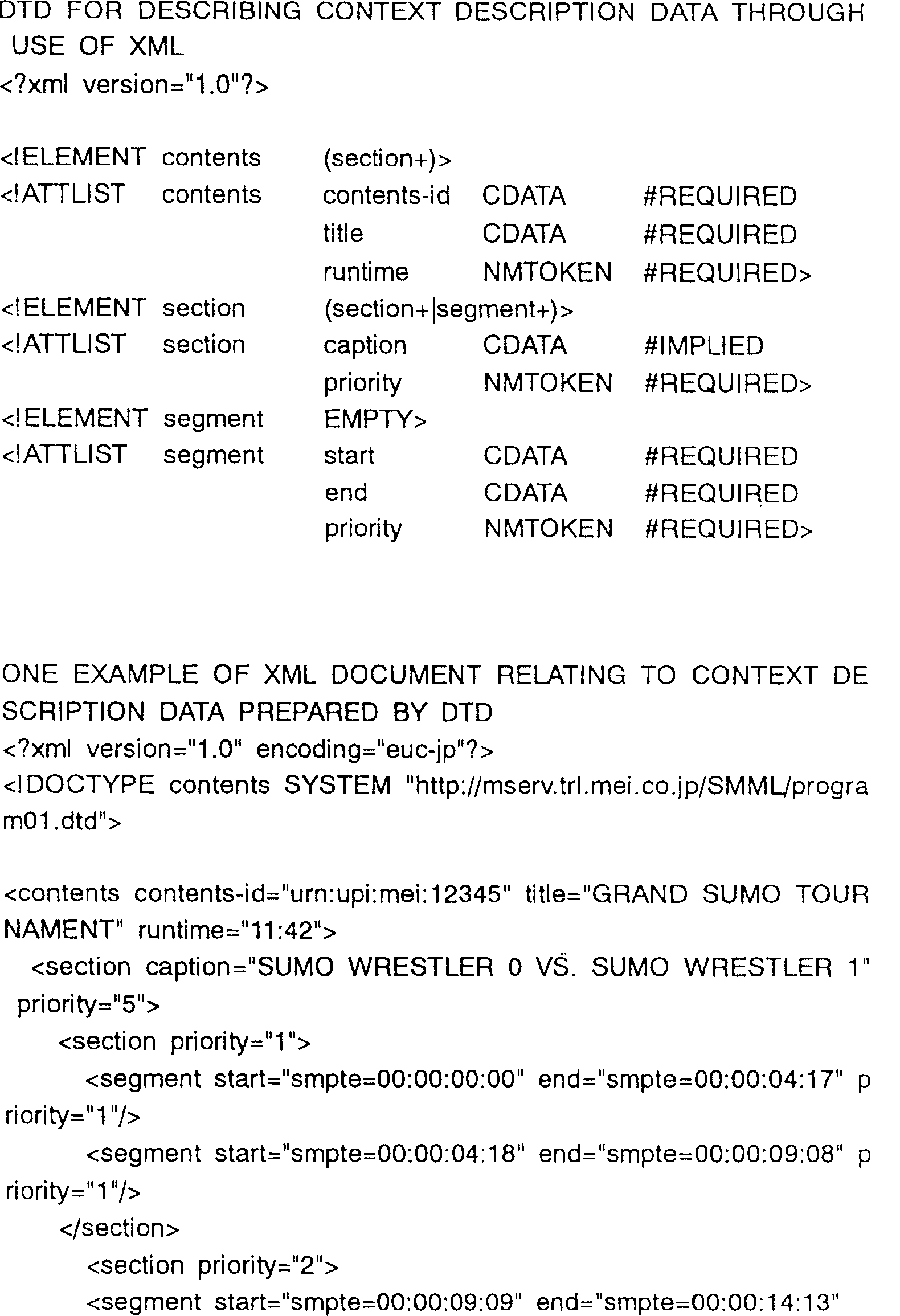

[0171] A first embodiment of the present invention will be described below. In this embodiment, moving pictures in MPEG-1 system streams are used as the media content. In this case, a media segment corresponds to a single scene cut, and a score represents the objective degree of contextual importance of the scene of interest.

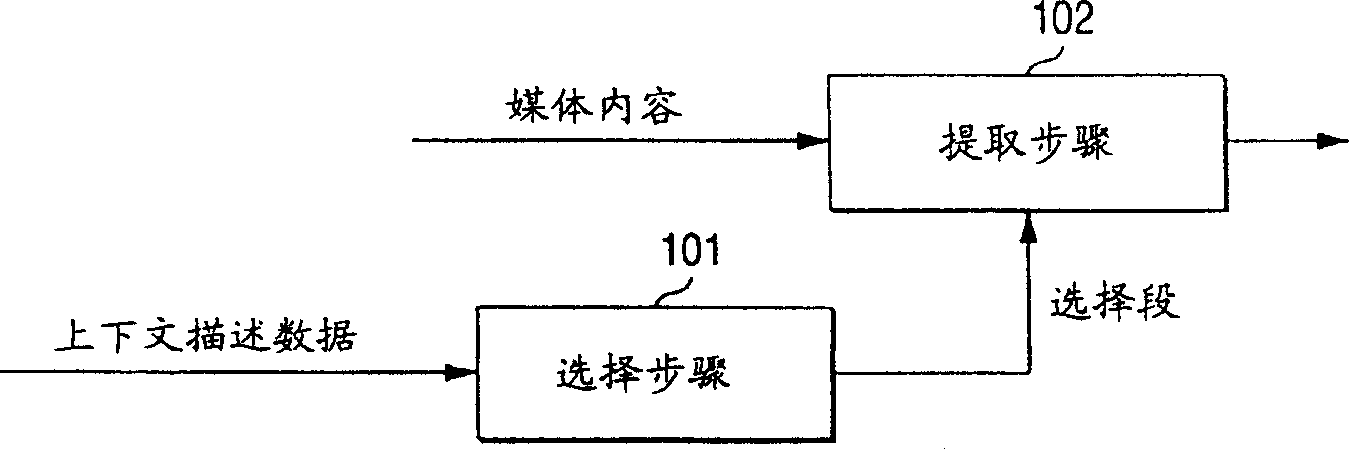

[0172] Figure 1 is a block diagram showing the data processing method according to the first embodiment of the present invention. In Figure 1, reference numeral 101 indicates the selection step and reference numeral 102 indicates the extraction step. In the selection step 101, a scene of the media content is selected from the context description data, and the start time and end time of the scene are output. In the extraction step 102, the data for the portion of media content specified by the start time and end time output in the selection step 101 is extracted.
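The two-step flow in [0172] can be illustrated with a minimal Python sketch. Everything named here is hypothetical: the `Scene` record, the `min_score` cutoff used as the selection criterion, and the list of frame numbers standing in for an MPEG-1 stream are assumptions made for illustration, not details taken from the patent text.

```python
from dataclasses import dataclass


@dataclass
class Scene:
    """Hypothetical stand-in for one scene in the context description data."""
    start: float  # start time in seconds
    end: float    # end time in seconds
    score: float  # contextual importance (assumed: higher is more important)


def select(scenes, min_score):
    """Selection step (101): output (start, end) for each chosen scene.

    Choosing scenes by a score cutoff is an assumption of this sketch.
    """
    return [(s.start, s.end) for s in scenes if s.score >= min_score]


def extract(frames, intervals, fps):
    """Extraction step (102): pull the media data inside each interval.

    `frames` is a plain list used in place of a real media stream.
    """
    out = []
    for start, end in intervals:
        out.extend(frames[int(start * fps):int(end * fps)])
    return out


scenes = [Scene(0, 2, 0.9), Scene(2, 4, 0.2), Scene(4, 5, 0.7)]
intervals = select(scenes, min_score=0.5)
summary = extract(list(range(10)), intervals, fps=2)
```

Keeping the two steps as separate functions mirrors the block diagram: the selection step only deals in times, so the extraction step can be swapped for one that reads a real stream.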

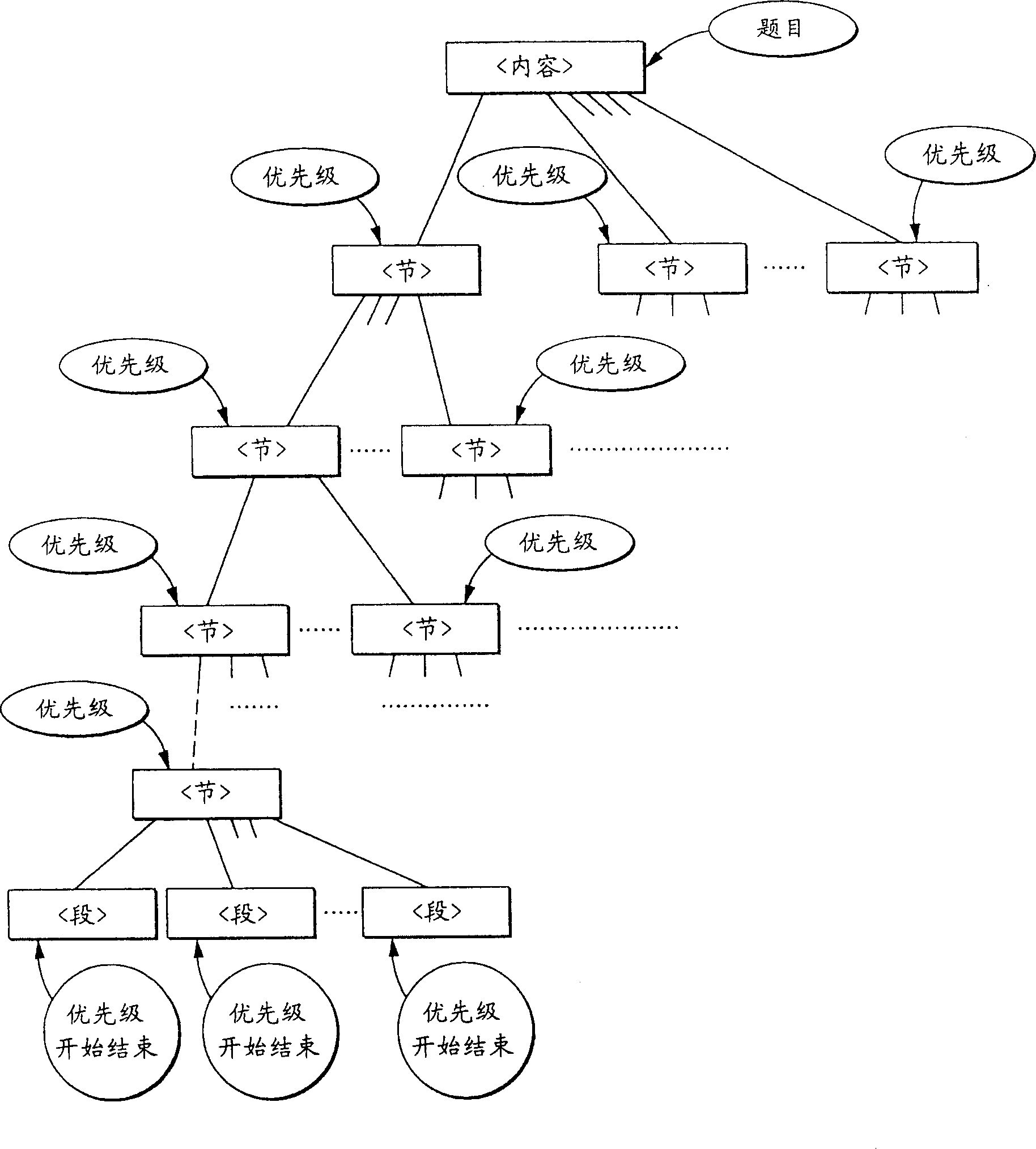

[0173] Figure 2 shows the structure of the context description data ac...

Example 2

[0193] A second embodiment of the present invention will be described below. This second embodiment differs from the first embodiment only in the processing related to the selection step.

[0194] Processing related to the selection step 101 according to the second embodiment will be described below with reference to the drawings. In the selection step 101 according to the second embodiment, the priority values assigned to all elements, from the highest level to the lowest, are utilized. The priority assigned to each element indicates the objective degree of contextual importance. The processing related to the selection step 101 is described below with reference to Figure 31. In Figure 31, reference numeral 1301 represents one of a plurality of elements included in the highest level of the context description data; 1302 represents a child element of element 1301; 1303 represents a child element of element 1302; 1304 represents a child element of the...
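The nesting described for Figure 31 can be modeled as a small tree in which every element carries its own priority. The source text is cut off before it explains how the priorities are combined, so the sketch below only builds the hierarchy and lists the priorities along each root-to-leaf path. The `Element` class, the `leaf_paths` helper, and the priority values are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Element:
    """Hypothetical element of the context description data."""
    label: str
    priority: int  # assumed numeric; the values below are made up
    children: list = field(default_factory=list)


def leaf_paths(element, prefix=()):
    """Yield the (label, priority) pairs along each root-to-leaf path."""
    path = prefix + ((element.label, element.priority),)
    if not element.children:
        yield path
    for child in element.children:
        yield from leaf_paths(child, path)


# Mirror the chain of reference numerals 1301 > 1302 > 1303 > 1304.
e1304 = Element("1304", 2)
e1303 = Element("1303", 4, [e1304])
e1302 = Element("1302", 3, [e1303])
e1301 = Element("1301", 5, [e1302])

paths = list(leaf_paths(e1301))
```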

Example 3

[0199] A third embodiment according to the present invention will be described below. The third embodiment differs from the first embodiment only in the processing related to the selection step.

[0200] Processing related to the selection step 101 according to the third embodiment will be described below with reference to the drawings. As in the processing described for the first embodiment, in the selection step 101 according to the third embodiment, selection is performed only on elements that each have a child. In the third embodiment, a threshold is set on the sum of the durations of all scenes to be selected. Specifically, elements are selected in order of decreasing priority until the sum of the durations of the elements selected so far is as large as possible while remaining smaller than the threshold. The flowchart of Figure 33 shows the processing related to the selection step 101 ...
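The duration-limited selection in [0200] can be sketched as a greedy loop. This is an illustration under stated assumptions, not the flowchart of Figure 33: the `Element` record and `select_by_duration` function are hypothetical, a larger priority number is assumed to mean greater importance, the loop is assumed to stop at the first element that would bring the total up to the threshold, and returning the result in chronological order is also an assumption.

```python
from dataclasses import dataclass


@dataclass
class Element:
    """Hypothetical candidate element, already filtered to those eligible."""
    name: str
    priority: int  # assumed: larger value means more important
    start: float   # seconds
    end: float     # seconds

    @property
    def duration(self):
        return self.end - self.start


def select_by_duration(elements, threshold):
    """Pick elements in decreasing priority while the total stays below threshold."""
    selected = []
    total = 0.0
    for el in sorted(elements, key=lambda e: e.priority, reverse=True):
        if total + el.duration >= threshold:
            break  # assumed stopping rule: halt at the first element that does not fit
        selected.append(el)
        total += el.duration
    # Assumed: the summary is played back in time order.
    return sorted(selected, key=lambda e: e.start)


candidates = [
    Element("A", 5, 0, 10),
    Element("B", 3, 10, 30),
    Element("C", 4, 30, 40),
    Element("D", 1, 40, 45),
]
chosen = [el.name for el in select_by_duration(candidates, threshold=25)]
```

With a threshold of 25 seconds, A and C (10 seconds each) are taken, and B is rejected because it would raise the total to 40 seconds. A variant that skips B and keeps looking for shorter elements would pack the budget more tightly, but the text does not say which behavior is intended.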
