Method and data processing system optimizing performance through reporting of thread-level hardware resource utilization

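A data processing system and hardware-resource technology, applied in the field of data processing, that addresses problems such as performance degradation.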


Embodiment Construction

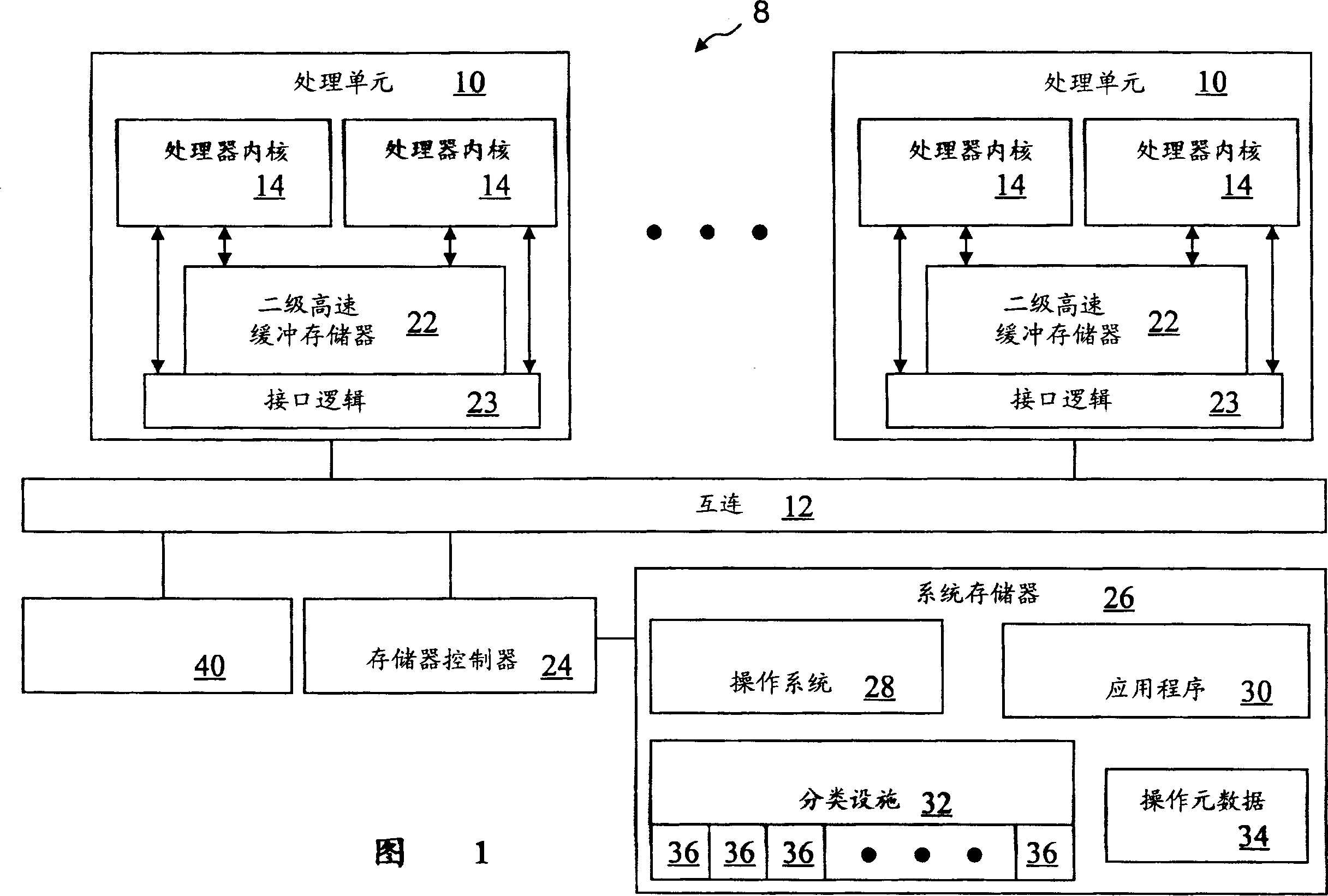

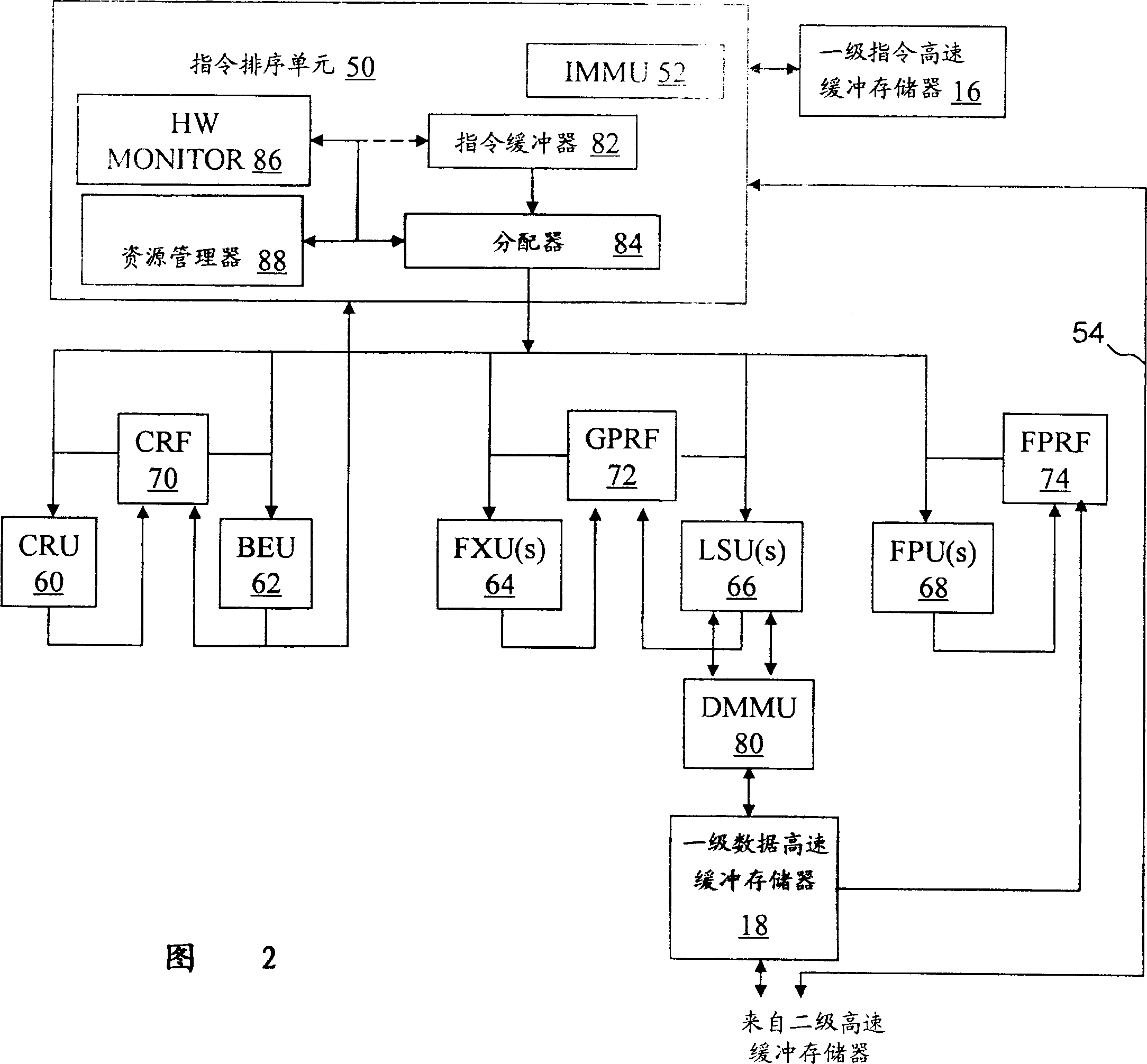

[0017] Referring now to the drawings, and in particular to FIG. 1, there is illustrated a high-level block diagram of a multiprocessor (MP) data processing system that provides improved performance optimization according to one embodiment of the present invention. As depicted, data processing system 8 includes a plurality (e.g., 8, 16, 64, or more) of processing units 10 coupled for communication by a system interconnect 12. Each processing unit 10 is a single integrated circuit including interface logic 23 and one or more processor cores 14.
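The topology in paragraph [0017] can be pictured as a simple containment hierarchy. The following is a minimal sketch, not the patent's implementation: the class names, the `build_system` helper, and the uniform cores-per-unit count are all hypothetical, and interface logic 23 and system interconnect 12 are noted only in comments rather than modeled.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ProcessorCore:
    core_id: int  # hypothetical identifier for a processor core 14


@dataclass
class ProcessingUnit:
    # One integrated circuit (processing unit 10). Its interface logic 23
    # is not modeled here; only the cores 14 it contains are tracked.
    unit_id: int
    cores: List[ProcessorCore] = field(default_factory=list)


@dataclass
class DataProcessingSystem:
    # Data processing system 8: processing units 10 that would be coupled
    # by system interconnect 12 (the interconnect itself is not modeled).
    units: List[ProcessingUnit] = field(default_factory=list)

    def total_cores(self) -> int:
        return sum(len(unit.cores) for unit in self.units)


def build_system(num_units: int, cores_per_unit: int) -> DataProcessingSystem:
    """Build a uniform topology; both counts are illustrative assumptions."""
    if num_units < 1 or cores_per_unit < 1:
        raise ValueError("need at least one unit and one core per unit")
    units = [
        ProcessingUnit(
            unit_id=u,
            cores=[ProcessorCore(core_id=u * cores_per_unit + c)
                   for c in range(cores_per_unit)],
        )
        for u in range(num_units)
    ]
    return DataProcessingSystem(units=units)


system = build_system(num_units=8, cores_per_unit=2)
print(system.total_cores())  # prints 16
```

Real systems need not be uniform; a per-unit core list is used so that units with different core counts could be represented as well.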

[0018] As also shown in FIG. 1, the memory hierarchy of data processing system 8 includes one or more system memories 26, which form the lowest level of volatile data storage in the memory hierarchy, and one or more levels of cache memory, such as on-chip Level 2 (L2) cache 22, which is used to stage instructions and operand data from system memory 26 to processor cores 14. As will be appreciated by those skilled in the art, ea...
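The staging role of L2 cache 22 described in paragraph [0018] can be illustrated with a toy model in which a cache miss fetches a line from system memory 26 and installs it in the cache. This is a hedged sketch only: the source does not specify a replacement policy, line size, or associativity, so the fully associative least-recently-used (LRU) cache, the `SystemMemory` and `L2Cache` classes, and the line-address keys below are all assumptions for illustration.

```python
from collections import OrderedDict
from typing import Dict


class SystemMemory:
    """Stand-in for system memory 26; a dict maps line addresses to values."""

    def __init__(self, contents: Dict[int, int]) -> None:
        self._contents = contents
        self.reads = 0  # number of lines fetched from memory

    def read_line(self, line_addr: int) -> int:
        self.reads += 1
        return self._contents.get(line_addr, 0)


class L2Cache:
    """Hypothetical fully associative LRU cache staging lines toward a core."""

    def __init__(self, backing: SystemMemory, capacity_lines: int) -> None:
        if capacity_lines < 1:
            raise ValueError("capacity must be at least one line")
        self._backing = backing
        self._capacity = capacity_lines
        self._lines: OrderedDict = OrderedDict()  # line_addr -> value, LRU first
        self.hits = 0
        self.misses = 0

    def load(self, line_addr: int) -> int:
        if line_addr in self._lines:
            self.hits += 1
            self._lines.move_to_end(line_addr)  # mark as most recently used
            return self._lines[line_addr]
        self.misses += 1
        value = self._backing.read_line(line_addr)  # stage from system memory
        self._lines[line_addr] = value
        if len(self._lines) > self._capacity:
            self._lines.popitem(last=False)  # evict least recently used line
        return value


memory = SystemMemory({0: 10, 1: 11, 2: 12})
l2 = L2Cache(memory, capacity_lines=2)
results = [l2.load(addr) for addr in (0, 1, 0, 2, 1)]
```

With a two-line capacity, the access sequence 0, 1, 0, 2, 1 yields one hit (the second access to line 0) and four misses, because loading line 2 evicts line 1 before it is requested again.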
