A transparent edge-of-network data cache

A cache and edge-data technology, applied in data processing applications, electrical digital data processing, special data processing applications, etc., that can address problems such as complex consistency management, complex maintenance, and invalid query responses.

Embodiment Construction

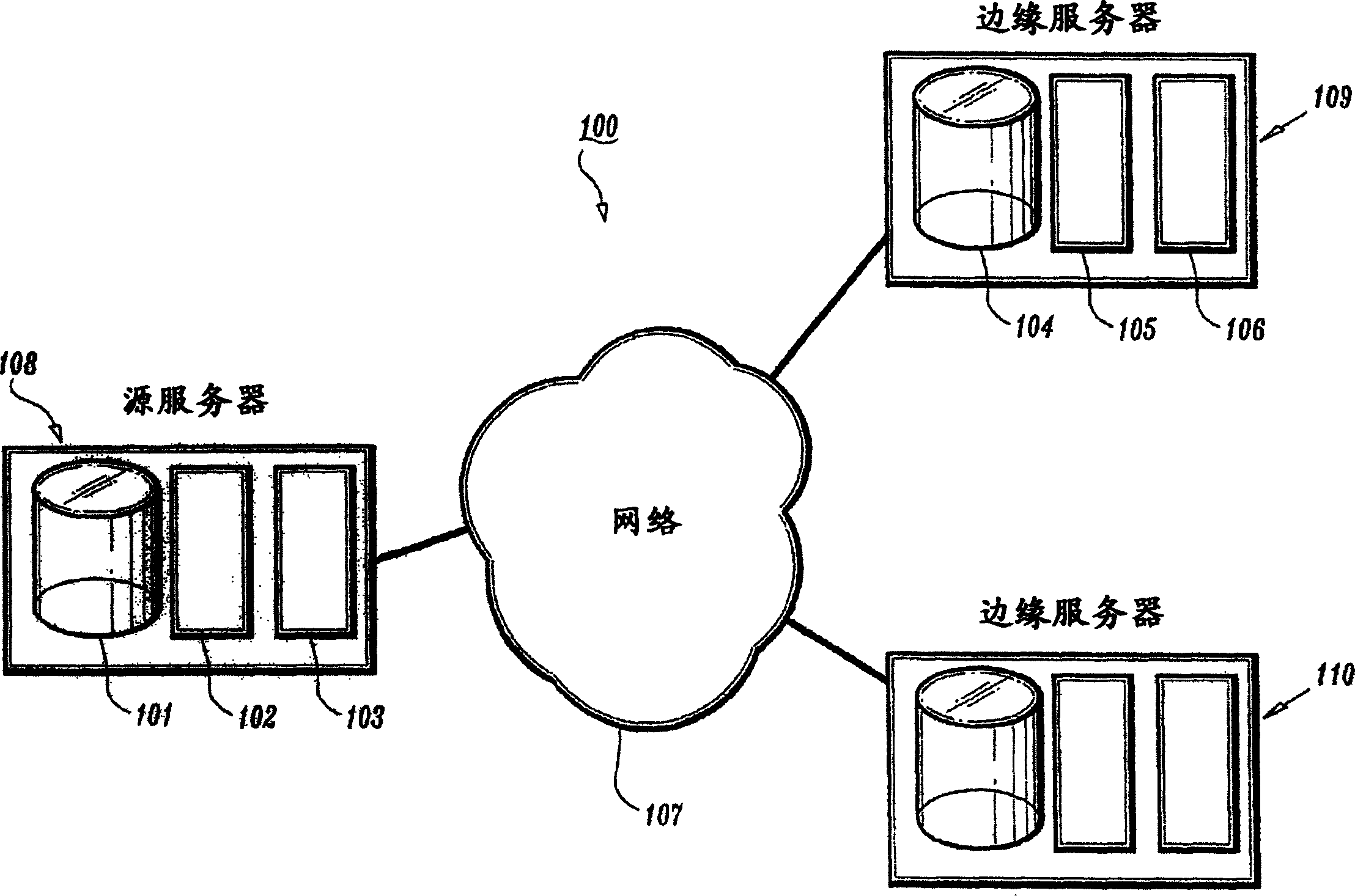

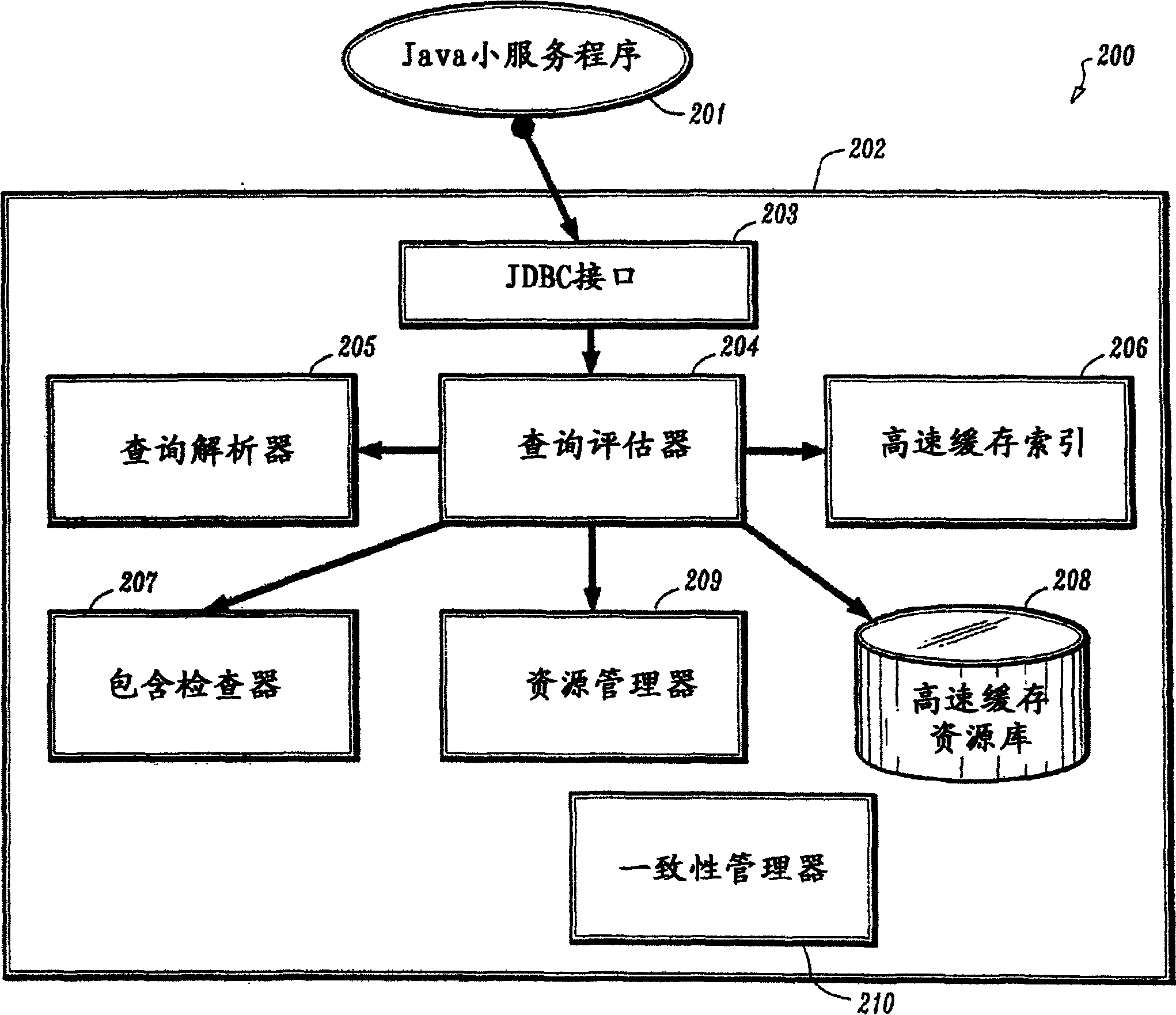

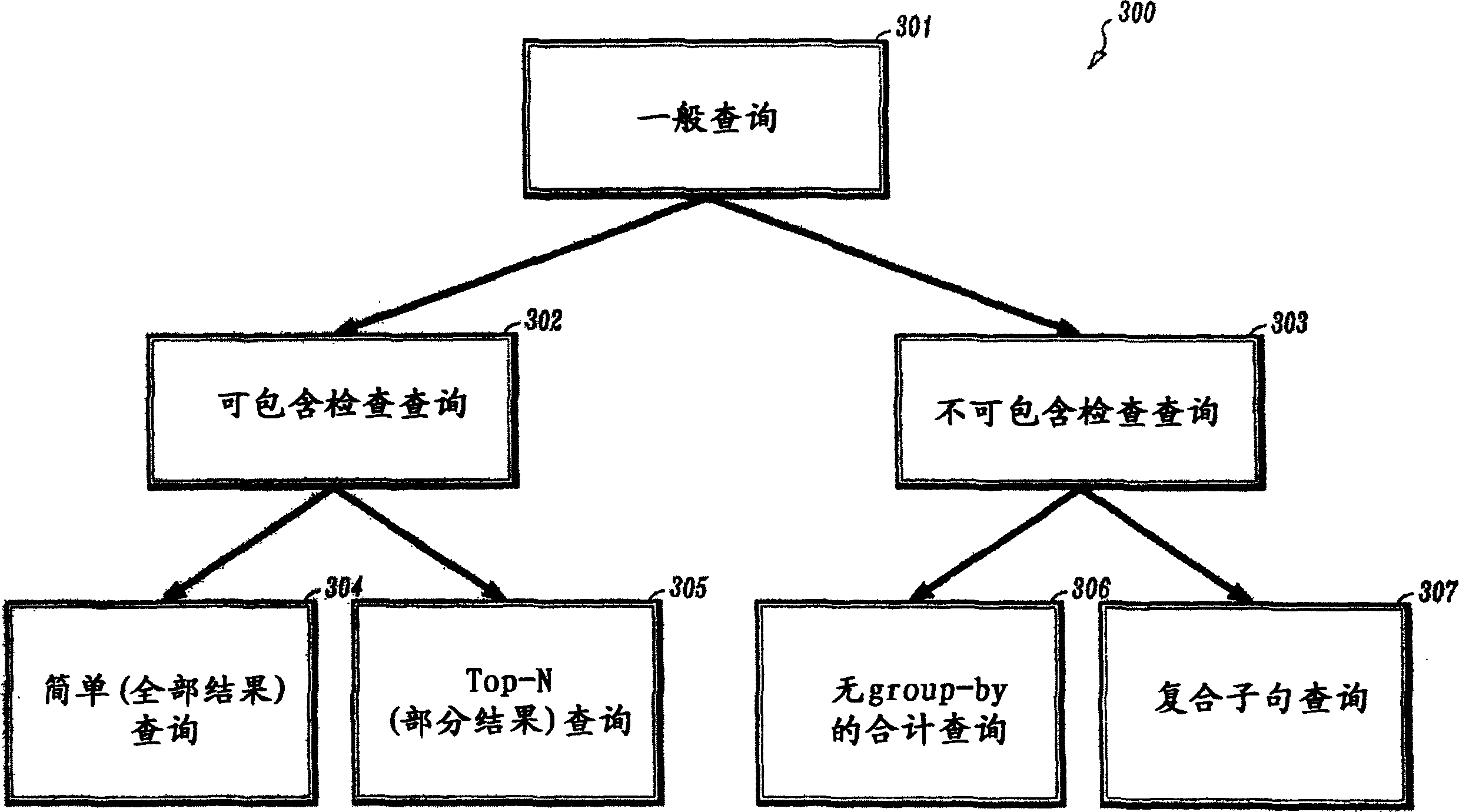

[0030] Embodiments of the present disclosure provide a dynamic database cache maintained on a local machine that issues database queries to remote servers. The cache uses the local database engine to maintain a partial but semantically consistent "materialized view" of previous query results, and it is populated dynamically from the application's query stream. Containment checkers operating on query predicates determine whether the results of a new query are contained in the union of cached results. Ordinary local tables are used so that overlapping query results can share physical storage where possible. Data consistency is maintained by propagating inserts, deletes, and updates from the source databases to their cached local copies. A background purge algorithm continuously or periodically removes rows from the ordinary local tables, evicting excess rows propagated by the coherence protocol as well as rows belonging to queries that have been marked for eviction from the c...
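The cache lifecycle described above (containment check, shared local table, change propagation, background purge) can be illustrated with a minimal sketch. It is not the disclosed implementation and rests on several stated assumptions: a single table, full-row queries, predicates that are conjunctions of closed numeric ranges, and an in-memory dictionary standing in for the local database engine. All names (`EdgeCache`, `fetch_remote`, `contained_in`, `apply_change`, `mark_for_eviction`, `purge`) are hypothetical. As a further simplification, a new query is checked against each cached query individually rather than against the union of cached results, which is a sufficient but weaker test.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Tuple

# Hypothetical model: one table, full-row SELECTs, conjunctive range predicates.
Interval = Tuple[float, float]        # closed interval [lo, hi]
Predicate = Dict[str, Interval]       # column -> allowed range (ANDed together)
Row = Dict[str, Any]


def matches(pred: Predicate, row: Row) -> bool:
    """True if the row satisfies every range in the predicate."""
    return all(lo <= row[col] <= hi for col, (lo, hi) in pred.items())


def contained_in(q: Predicate, c: Predicate) -> bool:
    """Sufficient test: True only if every row matching q also matches c.

    q is contained in c when each column constrained by c is constrained
    by q to a sub-range of c's range.
    """
    for col, (c_lo, c_hi) in c.items():
        if col not in q:
            return False
        q_lo, q_hi = q[col]
        if q_lo < c_lo or q_hi > c_hi:
            return False
    return True


@dataclass
class EdgeCache:
    fetch_remote: Callable[[Predicate], List[Row]]  # hypothetical remote hook
    key: str = "id"
    table: Dict[Any, Row] = field(default_factory=dict)    # shared local table
    cached: List[Predicate] = field(default_factory=list)  # live cached queries

    def query(self, pred: Predicate) -> List[Row]:
        # Simplification: test against each cached query on its own,
        # not against the union of all cached results.
        if any(contained_in(pred, c) for c in self.cached):
            return [r for r in self.table.values() if matches(pred, r)]
        rows = self.fetch_remote(pred)
        for r in rows:
            # Overlapping results share a single stored copy per key.
            self.table[r[self.key]] = dict(r)
        self.cached.append(pred)
        return rows

    def apply_change(self, op: str, row: Row) -> None:
        """Propagate an insert, update, or delete from the source database."""
        if op == "delete":
            self.table.pop(row[self.key], None)
        else:
            # Assumed protocol: every insert/update is shipped, even rows
            # no cached query needs; purge() trims these excess rows later.
            self.table[row[self.key]] = dict(row)

    def mark_for_eviction(self, pred: Predicate) -> None:
        # The query stops serving hits; its rows are reclaimed by purge().
        self.cached.remove(pred)

    def purge(self) -> int:
        """Drop rows that no live cached query matches; return the count."""
        stale = [k for k, r in self.table.items()
                 if not any(matches(c, r) for c in self.cached)]
        for k in stale:
            del self.table[k]
        return len(stale)
```

In this sketch, propagated changes are applied unconditionally and trimmed later by `purge()`, loosely mirroring the excess rows that the paragraph says the coherence protocol may deliver. Because a row is kept as long as any live cached predicate matches it, a query answered locally still sees every current row it needs, while rows of evicted queries are reclaimed lazily.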