Cloud storage of geotagged maps

Geotagged maps and cloud technology, applied in the field of augmented or virtual reality systems, can solve the problems of limited support and the high cost of large items.

Examples

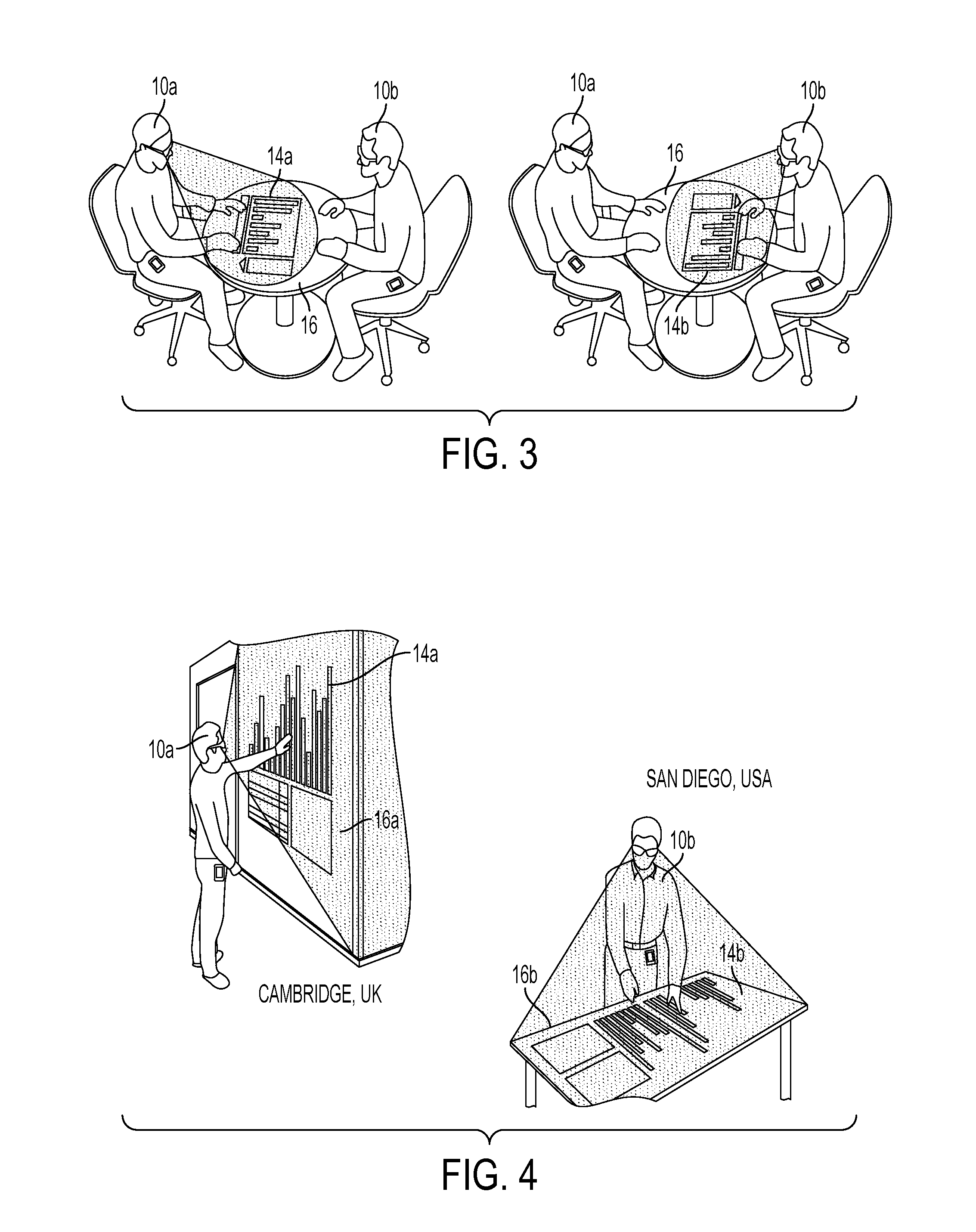

third embodiment

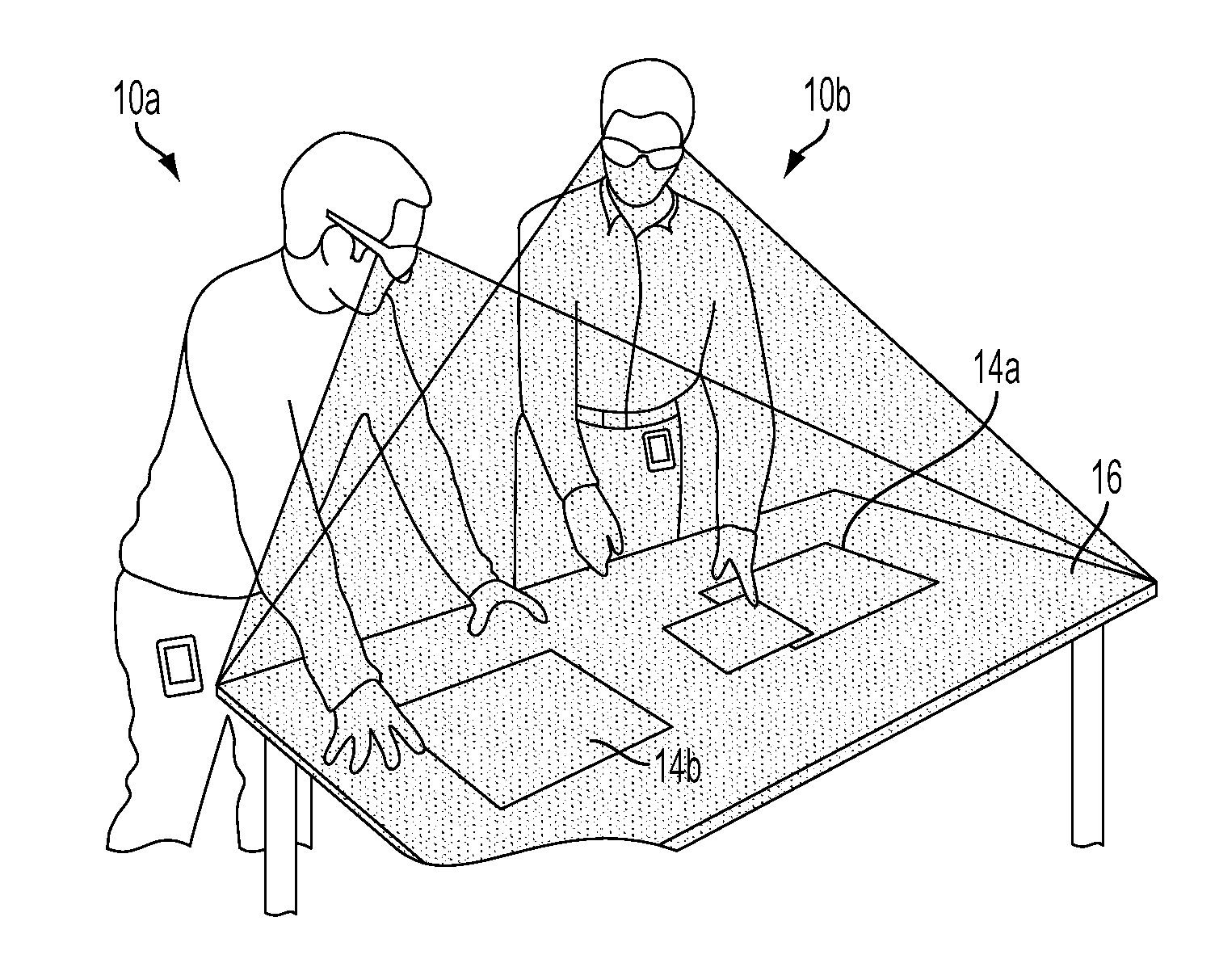

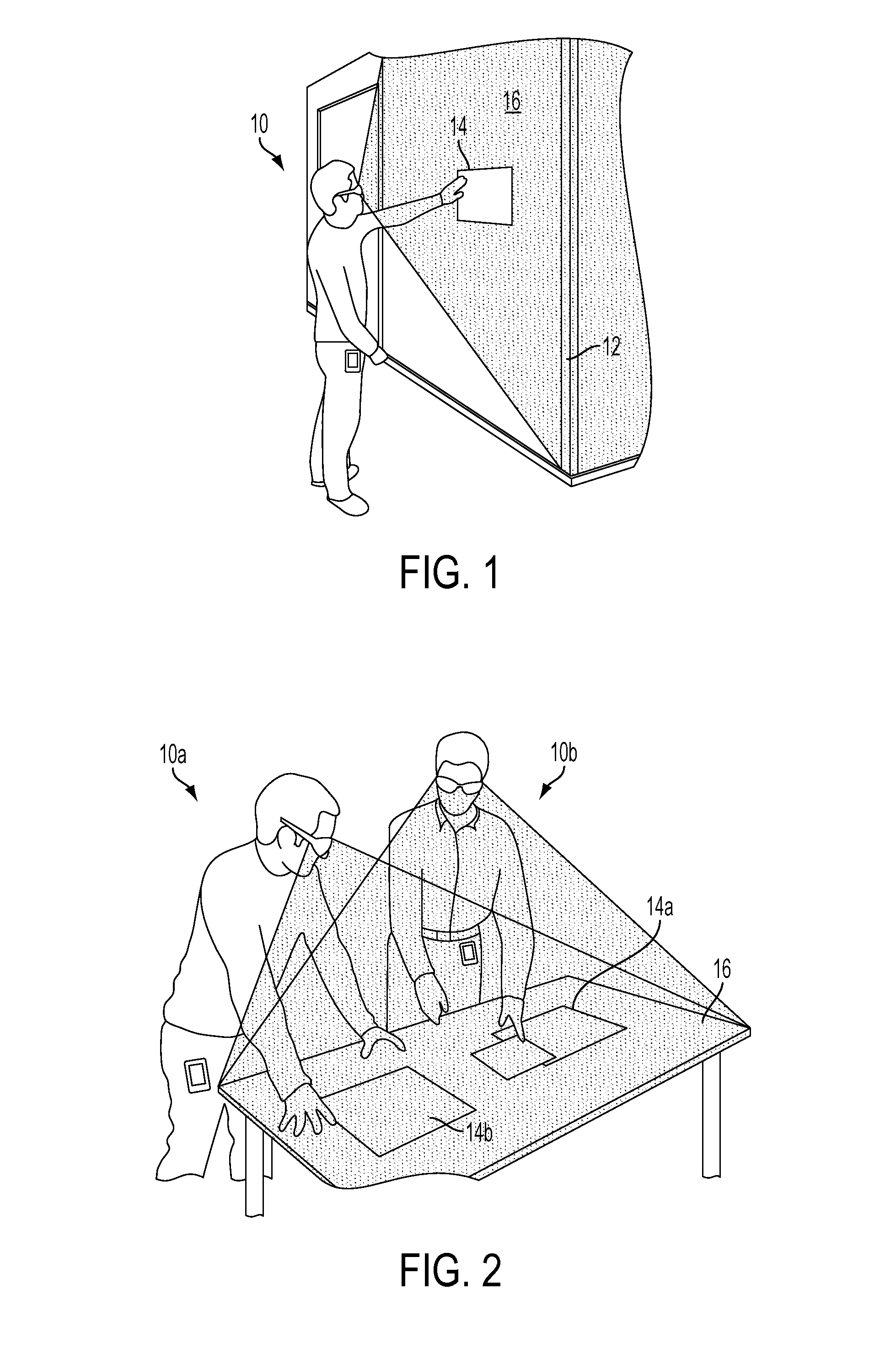

[0112] In an embodiment, the processor within or coupled to the head mounted device 10a may capture an image with a head mounted or body mounted camera or cameras which may be full-color video cameras. Distances to objects within the imaged scene may be determined via trigonometric processing of two (or more) images obtained via a stereo camera assembly. Alternatively or in addition, the head mounted device may obtain spatial data (i.e., distances to objects in the images) using a distance sensor which measures distances from the device to objects and surfaces in the image. In an embodiment, the distance sensor may be an infrared light emitter (e.g., laser or diode) and an infrared sensor. In this embodiment, the head mounted device may project infrared light as pulses or structured light patterns which reflect from objects within the field of view of the device's camera. The reflected infrared laser light may be received in a sensor, and spatial data may be calculated based on a mea...
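The "trigonometric processing" of a stereo pair mentioned in [0112] can be illustrated with the standard rectified pinhole relation, depth = focal length × baseline / disparity. The sketch below is only one possible reading: the paragraph does not specify the camera model, and the function name `stereo_depth` and all of its parameters are hypothetical.

```python
def stereo_depth(focal_px: float, baseline_m: float,
                 x_left_px: float, x_right_px: float) -> float:
    """Distance (in meters) to a point seen by both cameras of a stereo pair.

    Assumes (not stated in the source) a rectified pair of identical
    pinhole cameras separated horizontally by `baseline_m`, with the
    focal length expressed in pixels.
    """
    # Disparity: horizontal shift of the same point between the two images.
    disparity = x_left_px - x_right_px
    if disparity <= 0:
        # Zero disparity means the point is at infinity; a negative value
        # indicates a bad match between the two images.
        raise ValueError("disparity must be positive")
    # Similar triangles: depth / baseline == focal length / disparity.
    return focal_px * baseline_m / disparity


if __name__ == "__main__":
    # Illustrative numbers only: 800 px focal length, 12.5 cm baseline,
    # and a feature at x=420 px (left image) and x=380 px (right image).
    print(stereo_depth(800.0, 0.125, 420.0, 380.0))  # 2.5
```

Because depth is inversely proportional to disparity, distant objects produce small disparities and are more sensitive to pixel-level matching errors, which may be one reason the paragraph offers an infrared distance sensor as an alternative or supplement.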

embodiment

[0222] Method 2100 enables collaboration and resource sharing to minimize the amount of processing performed by the head mounted devices themselves. In method 2100, the processor may commence operation in block 2101 by receiving an input request to collaborate from a first head mounted device that may be running an application. In block 2102, the processor may initiate a peer-to-peer search for nearby devices for collaboration. In determination block 2103, the processor may determine whether to collaborate with discovered devices. If so (i.e., determination block 2103=“Yes”), the processor may create a connection between the devices. The processor may collaborate using a two-way communication link. The communication link may be formed between the first and the second head mounted devices 10 and 10b in block 2104.
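The control flow of blocks 2101 through 2104 can be sketched as follows. This is a minimal illustration, not the patented implementation: the `Device` class, the `discover` and `accept` callbacks, and the device names are all hypothetical stand-ins for the peer search and the collaboration decision, which the paragraph does not detail.

```python
from dataclasses import dataclass, field
from typing import Callable, Iterable, List, Optional


@dataclass
class Device:
    """Hypothetical stand-in for a head mounted device."""
    name: str
    links: List[str] = field(default_factory=list)  # names of linked peers


def collaborate(first: Device,
                discover: Callable[[Device], Iterable[Device]],
                accept: Callable[[Device], bool]) -> Optional[Device]:
    """Handle a collaboration request from `first` (block 2101)."""
    # Block 2102: peer-to-peer search for nearby devices.
    for peer in discover(first):
        # Determination block 2103: collaborate with this device?
        if accept(peer):
            # Block 2104: form a two-way link, recorded on both ends.
            first.links.append(peer.name)
            peer.links.append(first.name)
            return peer
    # No discovered device was accepted; no link is formed.
    return None


if __name__ == "__main__":
    hmd_a, hmd_b = Device("hmd_a"), Device("hmd_b")
    partner = collaborate(hmd_a, lambda d: [hmd_b], lambda p: True)
    print(partner.name, hmd_a.links, hmd_b.links)
```

Passing the search and the decision in as callbacks keeps the sketch independent of any particular discovery protocol or user-consent mechanism, neither of which is described in the excerpt.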

[0223] The processor may access a directory in a server. Utilizing the directory, the processor may determine whether other users are available for collaboration in block 2105 by scanning...
