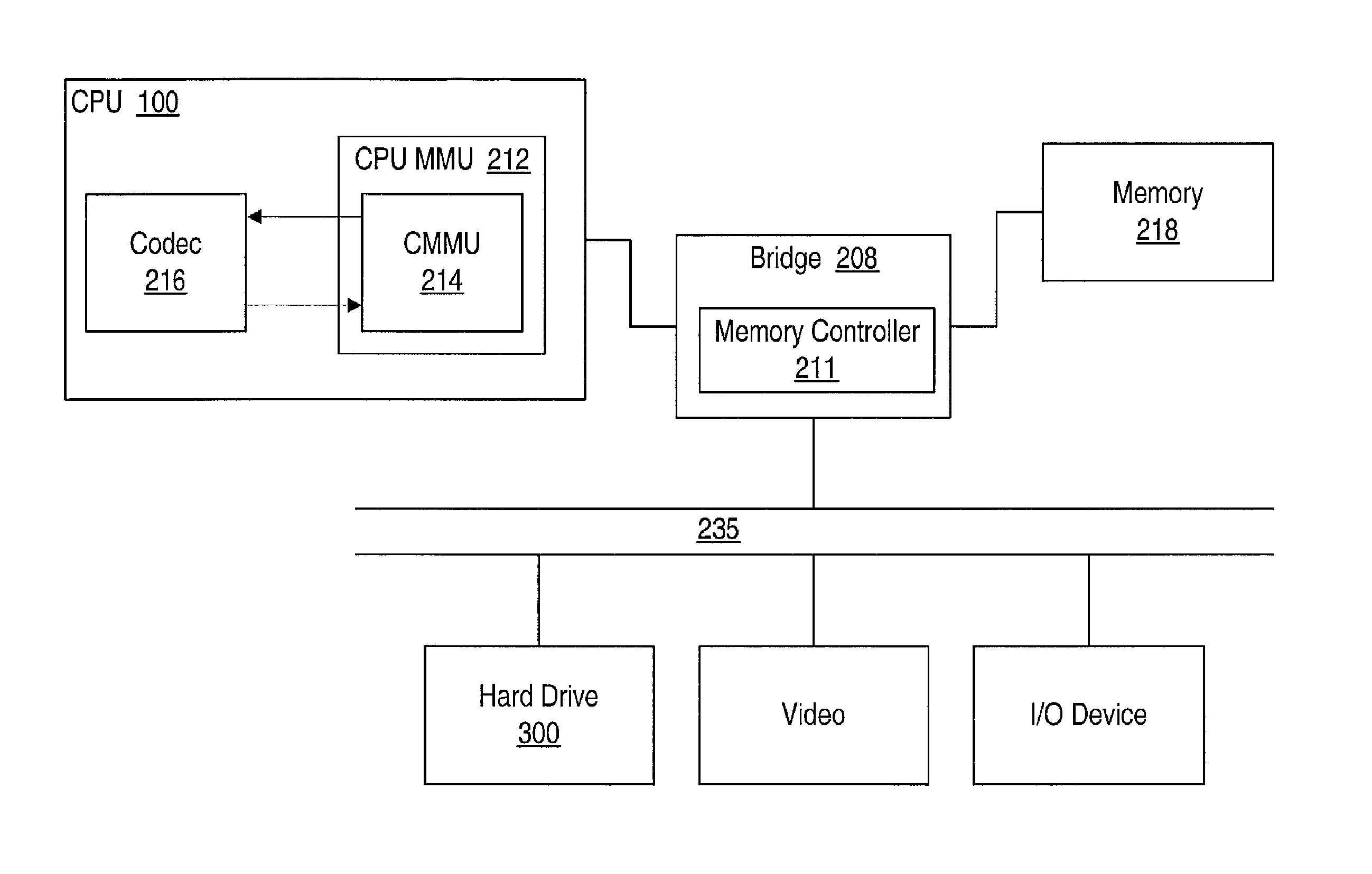

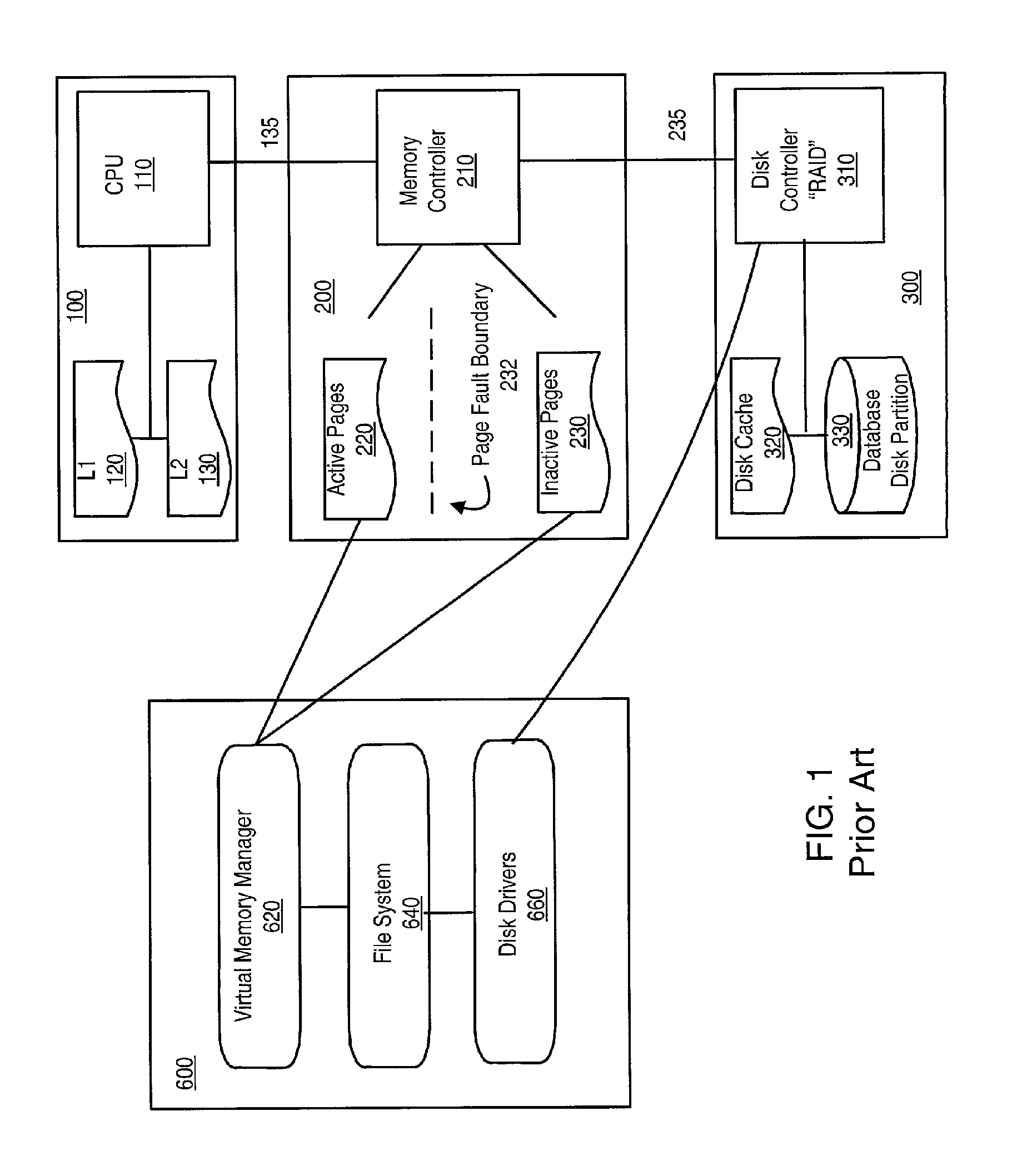

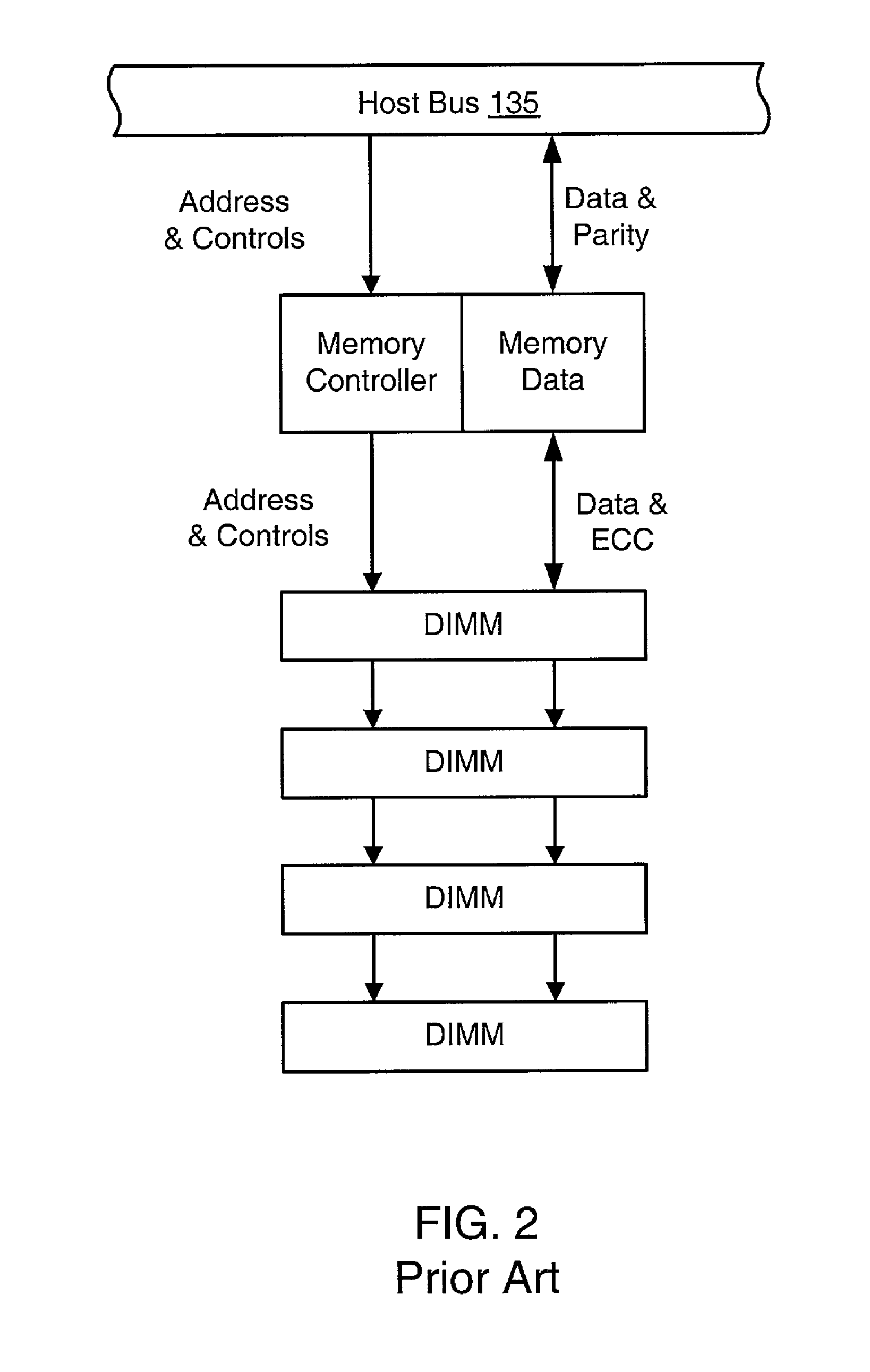

System and method for managing compression and decompression of system memory in a computer system

This computer system and system memory technology, applied in the field of memory systems, addresses several problems: the need to reduce the cost per storage bit; the inability to achieve significant improvement in the effective operation of the memory subsystem or of the software that manages it; and software solutions that typically use too many CPU compute cycles and/or add too much bus traffic. Its stated effects are reduced data bandwidth and storage requirements, safe use of the entire system memory space, and a reduced average compression ratio.



Embodiment Construction

Incorporation by Reference

[0056] The following patents and patent applications are hereby incorporated by reference in their entirety as though fully and completely set forth herein.

[0057] U.S. Pat. No. 6,173,381, titled “Memory Controller Including Embedded Data Compression and Decompression Engines,” issued on Jan. 9, 2001, whose inventor is Thomas A. Dye.

[0058] U.S. Pat. No. 6,170,047, titled “System and Method for Managing System Memory and/or Non-volatile Memory Using a Memory Controller with Integrated Compression and Decompression Capabilities,” issued on Jan. 2, 2001, whose inventor is Thomas A. Dye.

[0059] U.S. patent application Ser. No. 09/239,659, titled “Bandwidth Reducing Memory Controller Including Scalable Embedded Parallel Data Compression and Decompression Engines,” whose inventors are Thomas A. Dye, Manuel J. Alvarez II and Peter Geiger, and which was filed on Jan. 29, 1999. Pursuant to a Response to Office Action of Aug. 5, 2002, this application is currently pending a title change fr...
