32 results about How to "Reduce access overhead" patented technology

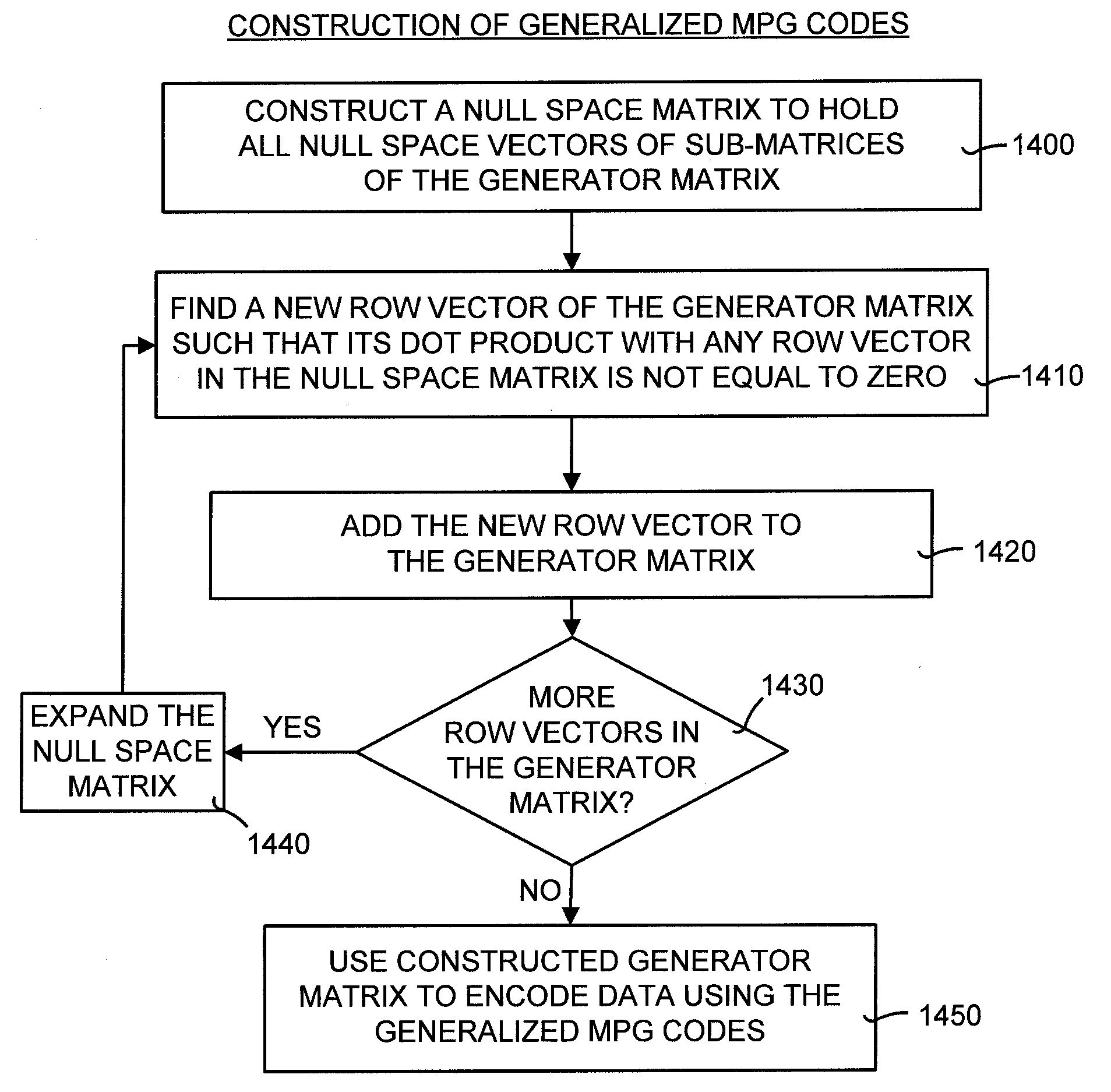

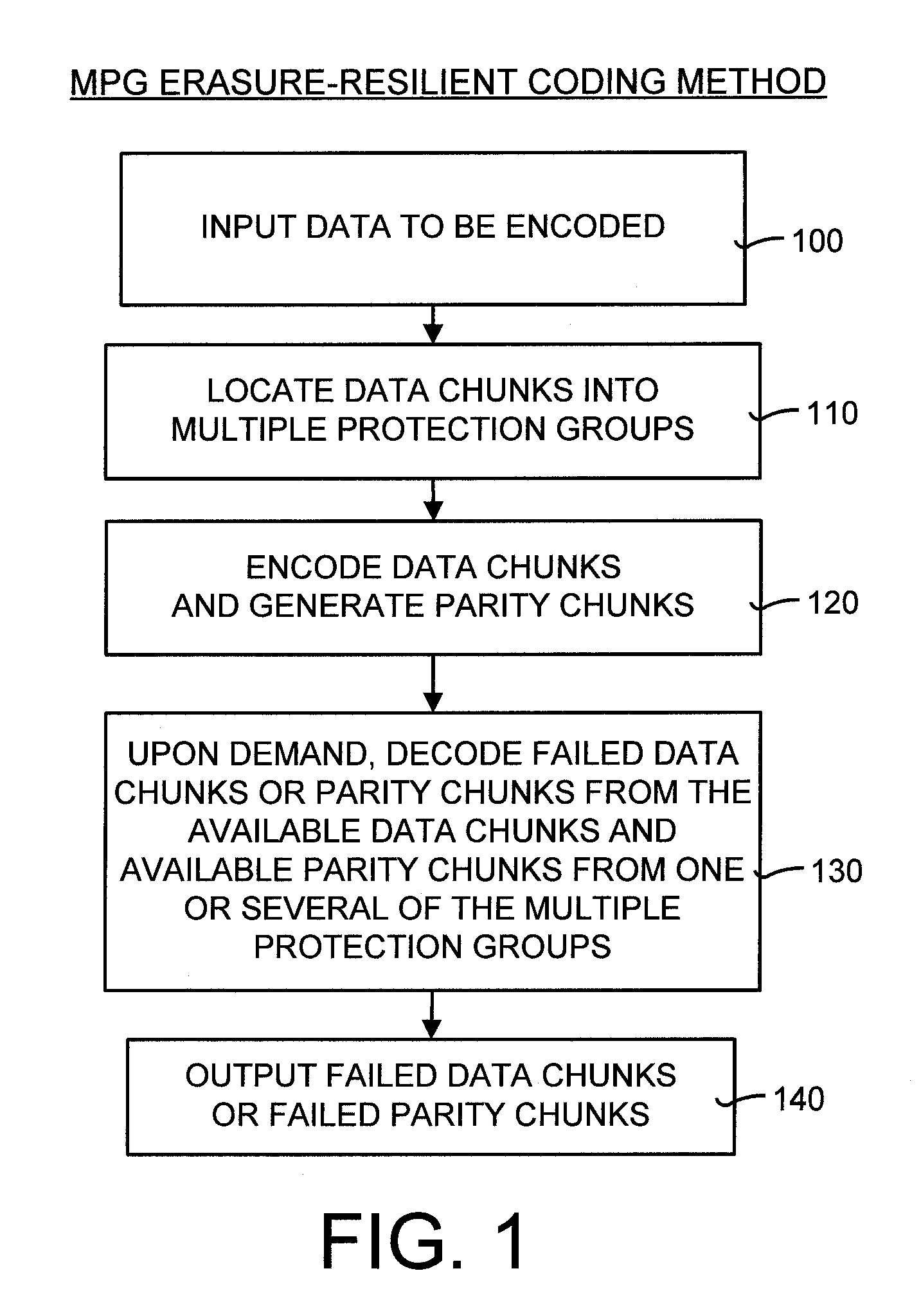

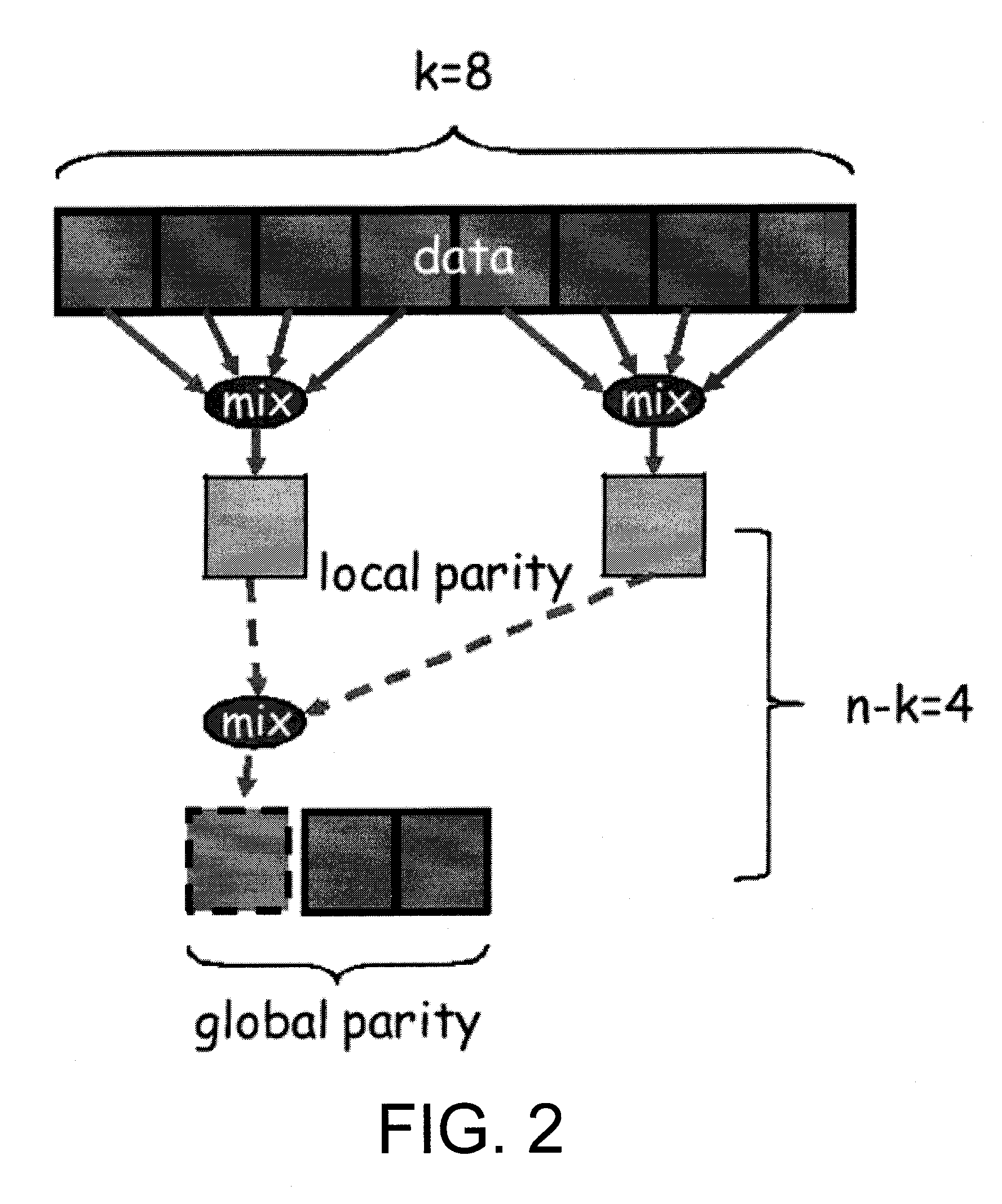

Multiple protection group codes having maximally recoverable property

Inactive | US20080222481A1 | Reduce access overhead | Code conversion | Cyclic codes | Theoretical computer science | Group code

Owner: MICROSOFT TECH LICENSING LLC

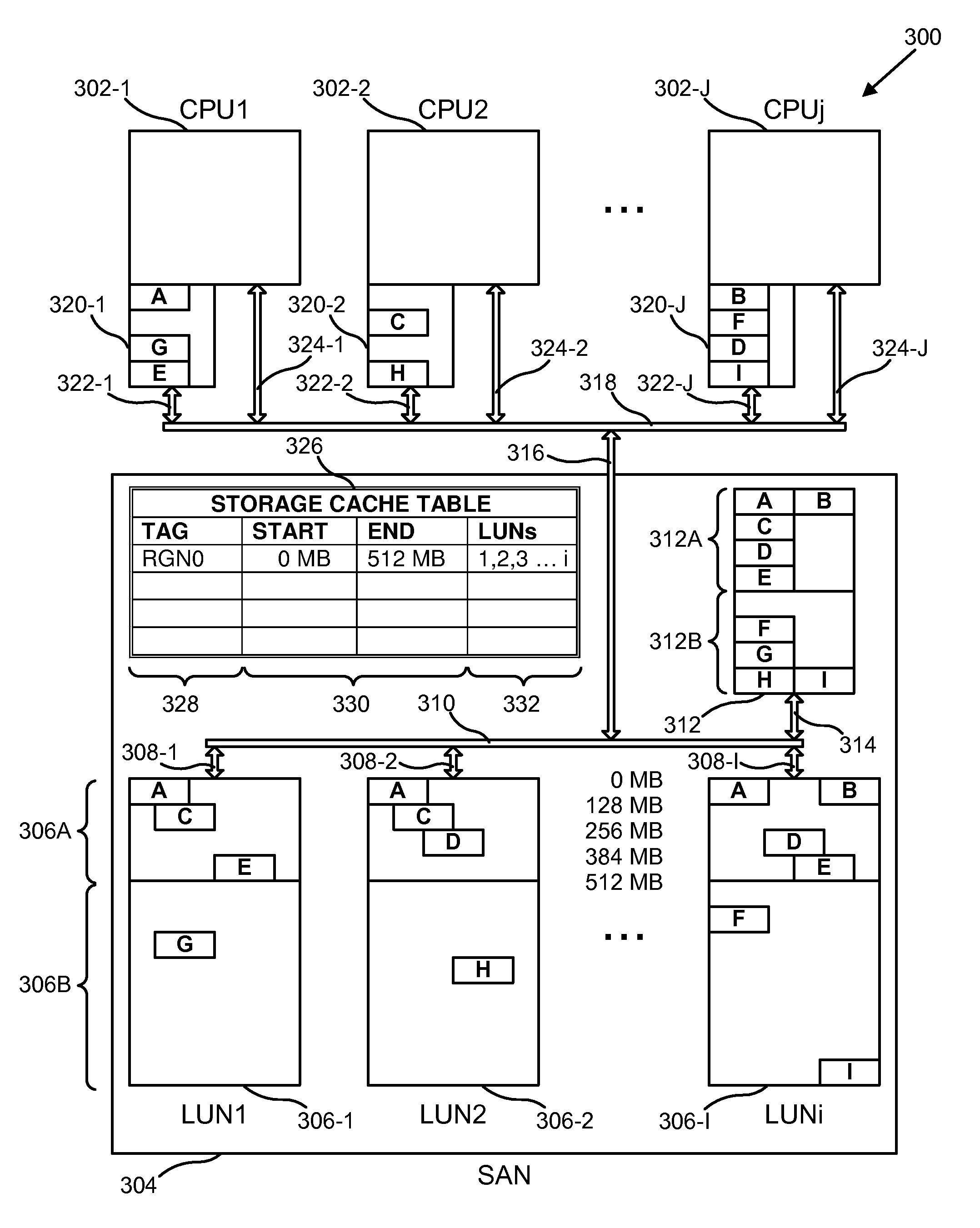

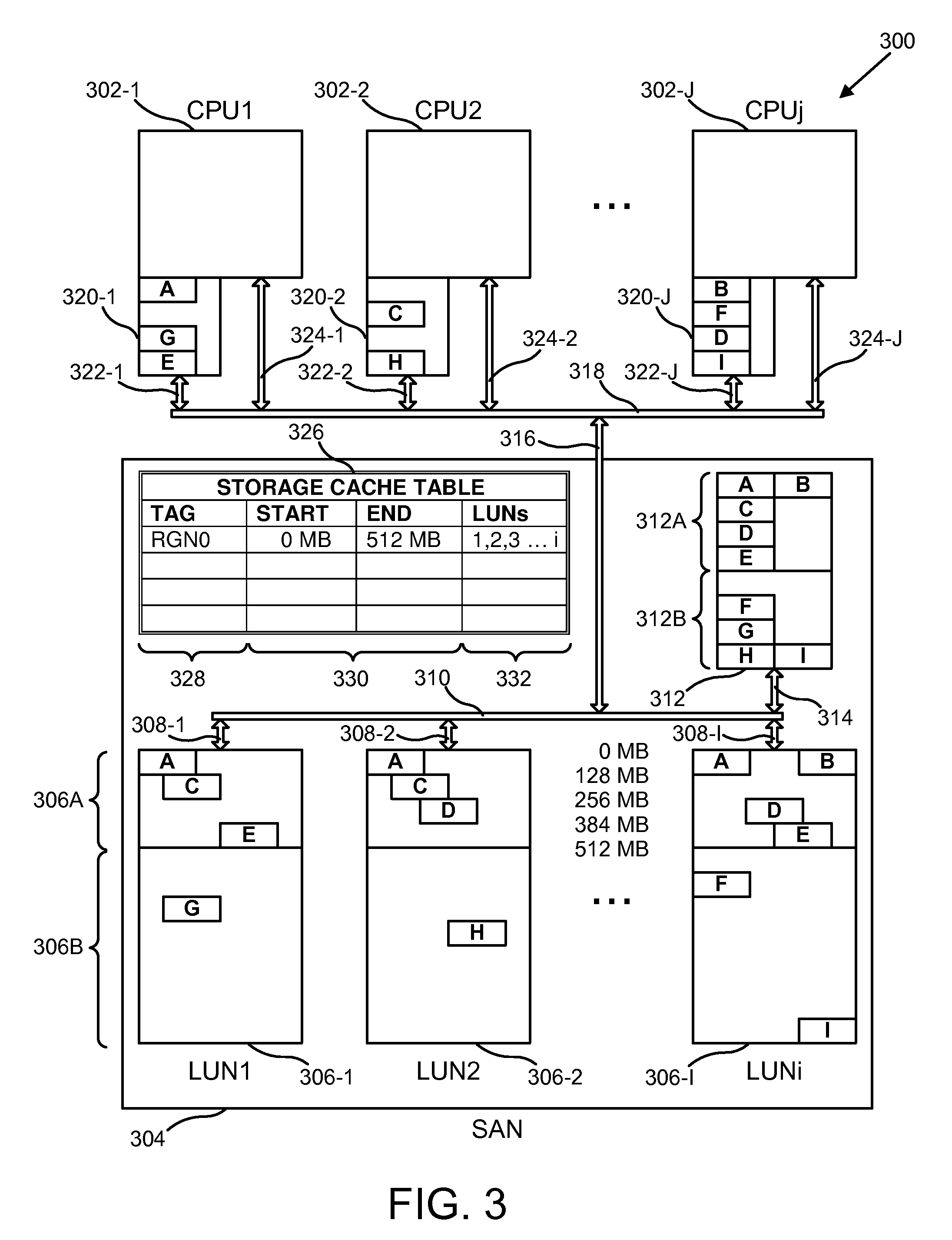

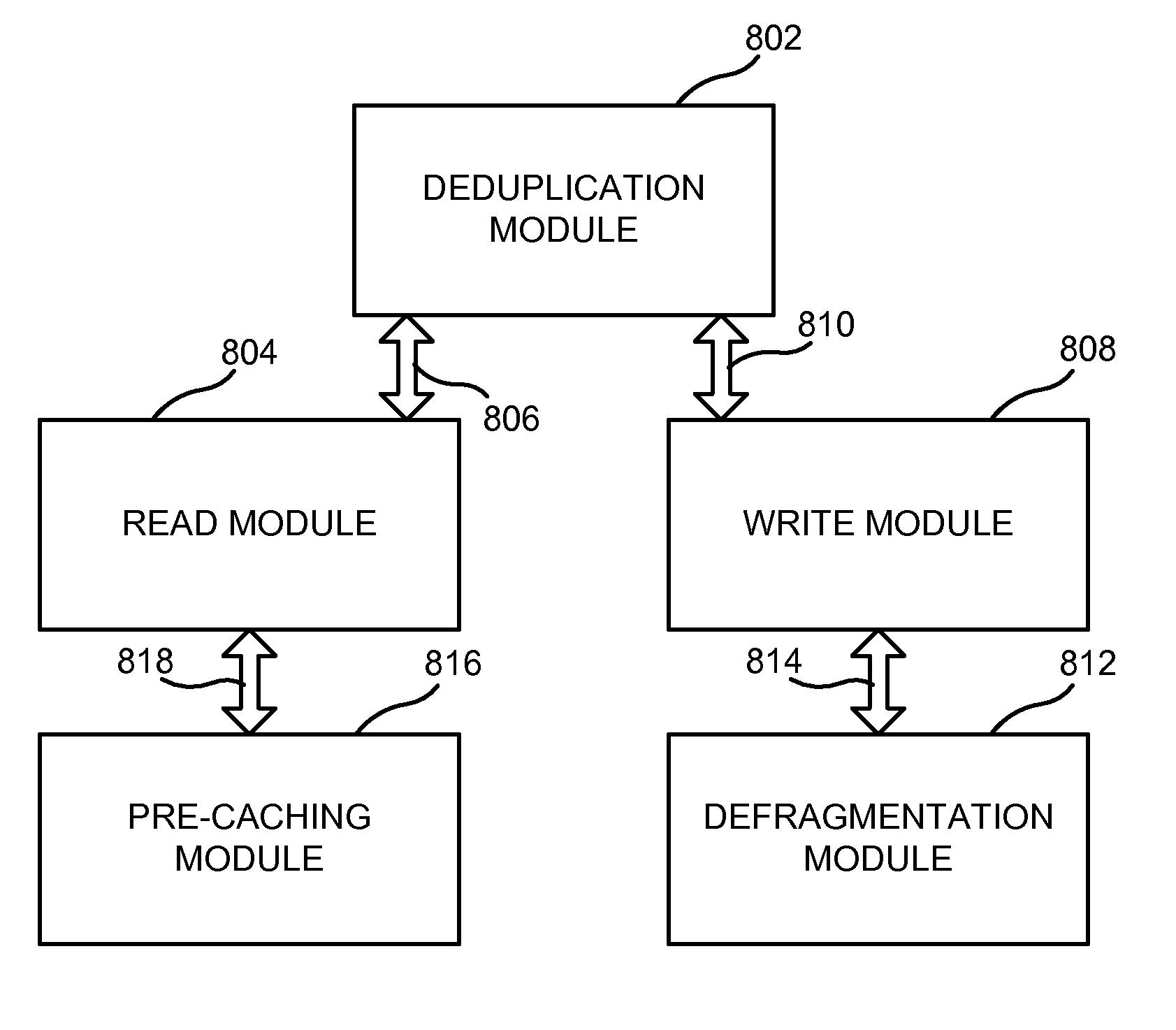

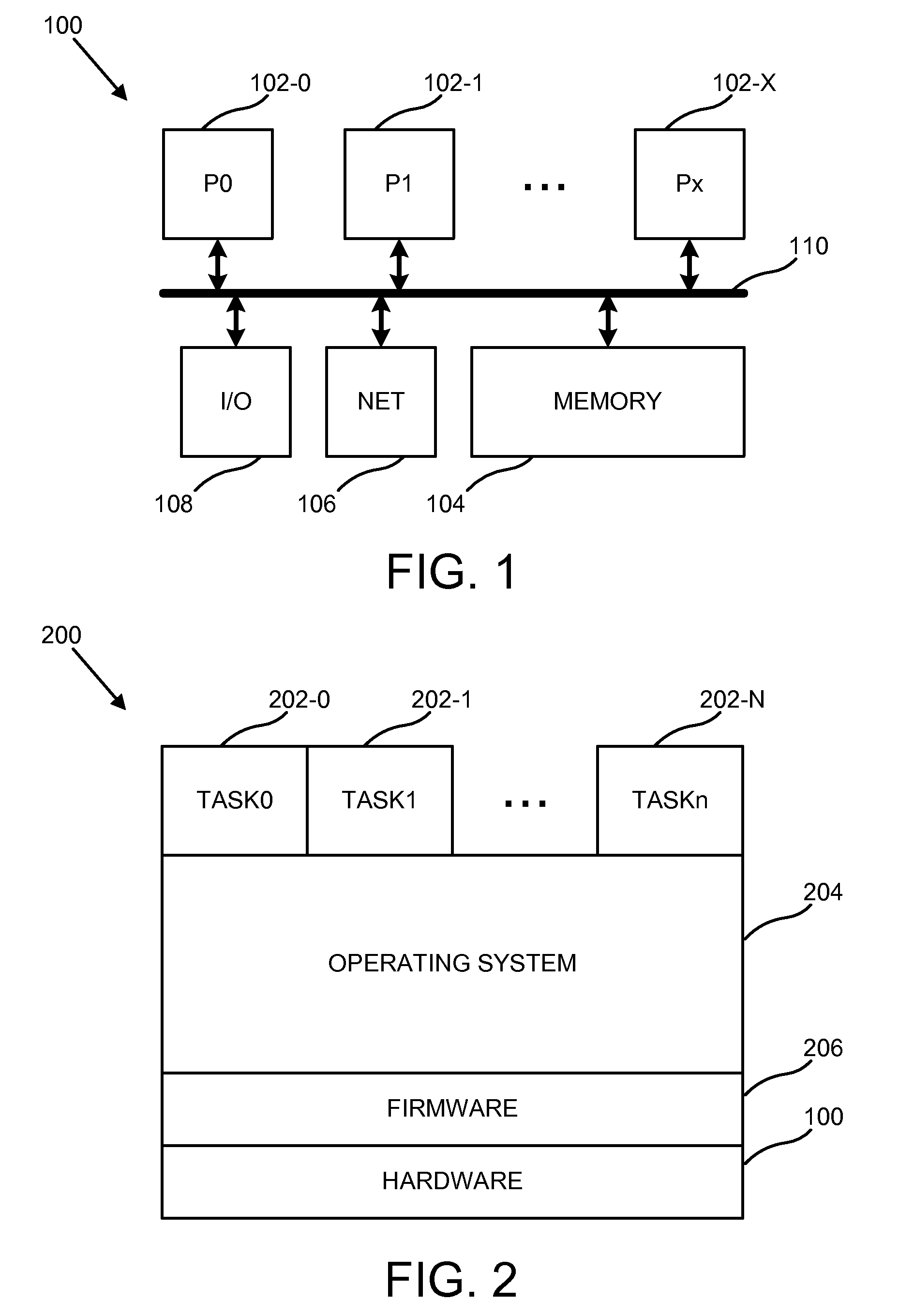

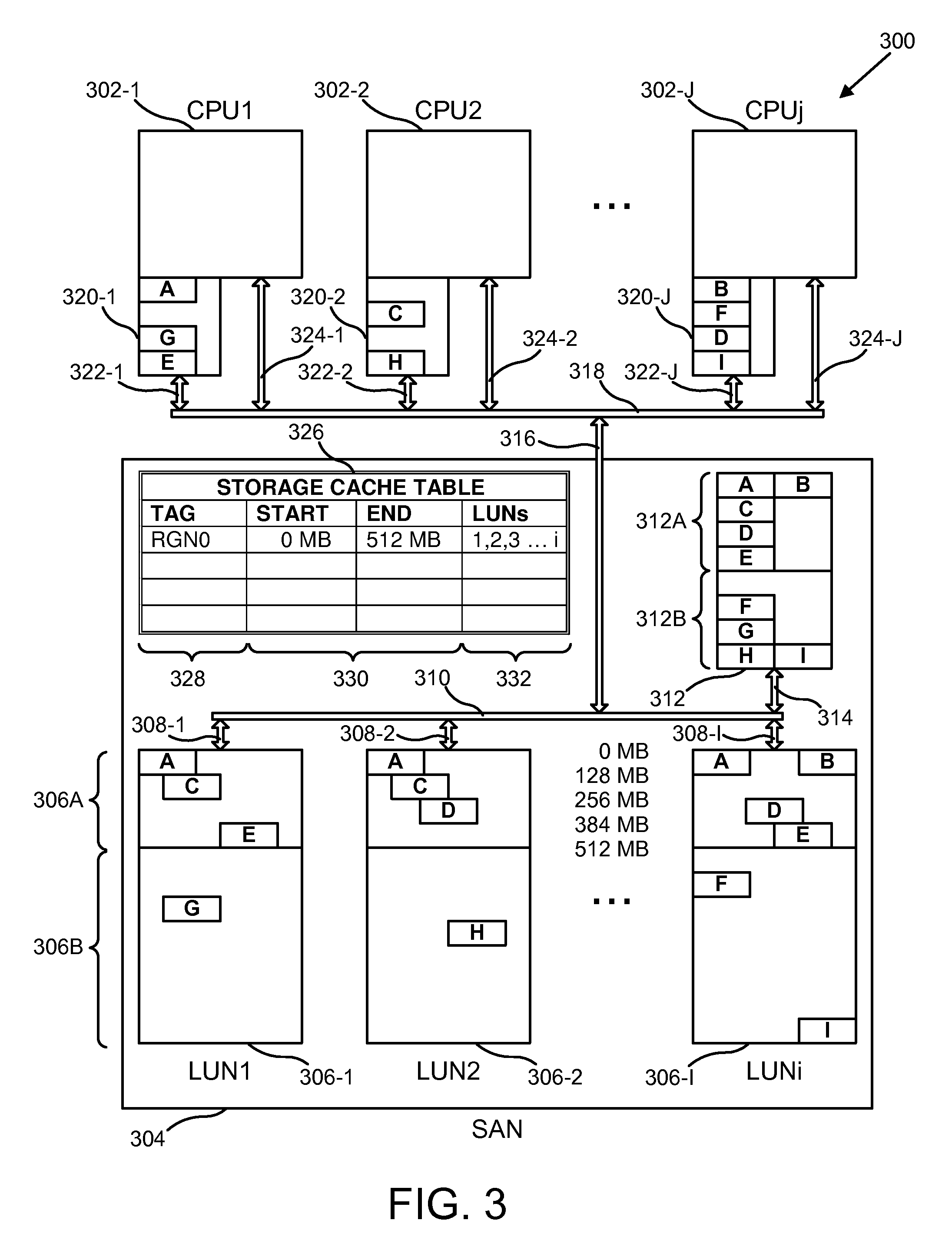

Apparatus, system and method for storage cache deduplication

Active | US20100070715A1 | Reduces initial storage access overhead | Effective miss rate | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Data deduplication

An apparatus, system, and method are disclosed for deduplicating storage cache data. A storage cache partition table has at least one entry associating a specified storage address range with one or more specified storage partitions. A deduplication module creates an entry in the storage cache partition table in which the specified storage partitions contain identical data within the specified storage address range, so that only one copy of the identical data needs to be cached in a storage cache. A read module accepts a storage address within a storage partition of a storage subsystem, locates an entry whose specified storage address range contains the storage address, and, if such an entry is found, determines whether the storage partition is among the one or more specified storage partitions.

Owner: LENOVO PC INT
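
The read path described in the abstract above can be illustrated with a minimal Python sketch. It is not the patented implementation: the names `PartitionTableEntry` and `cache_key`, the half-open address ranges, and the convention of naming the shared copy after the smallest partition id are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Tuple


@dataclass(frozen=True)
class PartitionTableEntry:
    """Partitions that hold identical data within the range [start, end)."""
    start: int
    end: int
    partitions: FrozenSet[str]


def cache_key(table: List[PartitionTableEntry], partition: str, address: int) -> Tuple[str, int]:
    """Return the key under which the block at (partition, address) is cached.

    If an entry covers the address and lists the partition, all listed
    partitions share one key (hypothetical convention: the smallest
    partition id). Otherwise the block keeps a per-partition key.
    """
    for entry in table:
        if entry.start <= address < entry.end and partition in entry.partitions:
            return (min(entry.partitions), address)
    return (partition, address)


# Example: vm1 and vm2 hold identical data in addresses 0..1023.
table = [PartitionTableEntry(0, 1024, frozenset({"vm1", "vm2"}))]
cache = {}
cache[cache_key(table, "vm1", 100)] = b"boot block"  # cached once via vm1
hit = cache.get(cache_key(table, "vm2", 100))         # vm2 reuses the same copy
miss = cache.get(cache_key(table, "vm3", 100))        # vm3 is not in the entry
```

Because both partitions resolve to the same key, the first read by either one warms the cache for the other, which is where the reduced initial access overhead would come from.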

Apparatus, system and method for storage cache deduplication

Active | US8190823B2 | Reduce access overhead | Effective miss rate | Memory architecture accessing/allocation | Digital computer details | Data deduplication

Owner: LENOVO PC INT

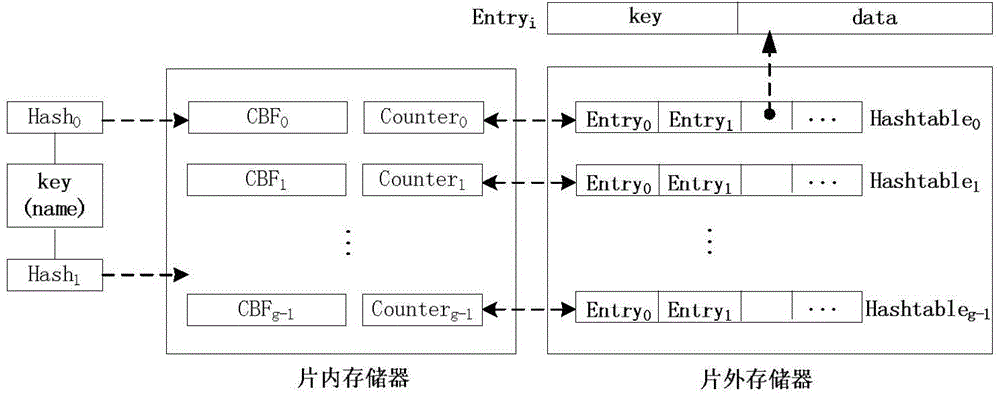

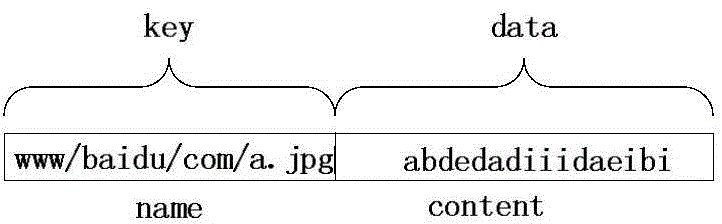

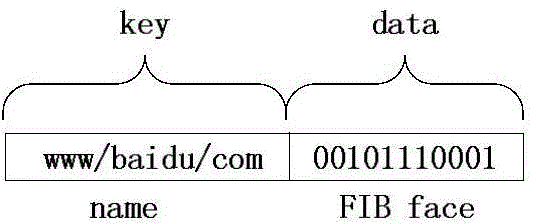

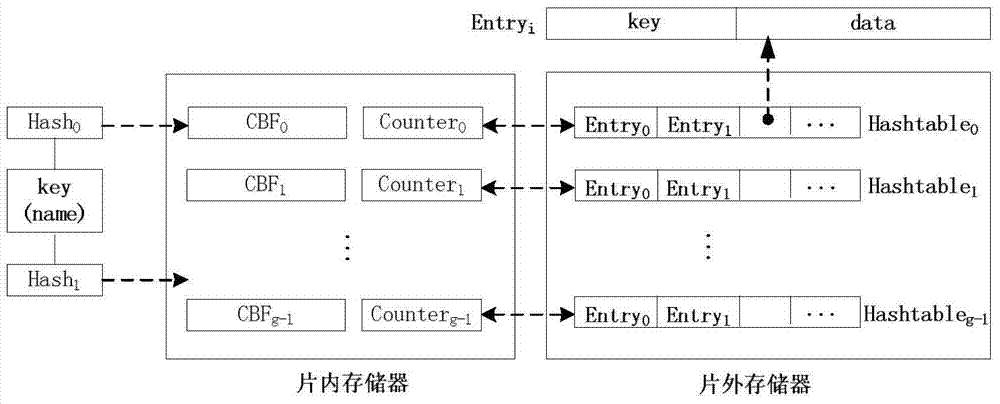

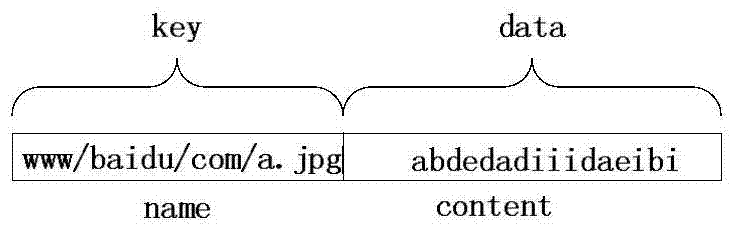

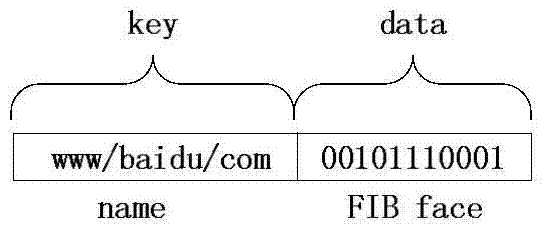

Hash Bloom filter (HBF) for name lookup in NDN and data forwarding method

Active | CN104579974A | Lower overall cost of access | Reduce access overhead | Data switching networks | Computer hardware | Bloom filter

The invention discloses a Hash Bloom filter (HBF) for name lookup in an NDN and a data forwarding method. The Hash Bloom filter comprises g counter Bloom filters (CBFs) positioned in an on-chip memory, g counters and g hash tables positioned in an off-chip memory, wherein each hash table is associated with one counter Bloom filter and one counter. The Hash Bloom filter uniformly distributes and stores the complete information of the FIB/CS/PIT table entries of an NDN router across the g counter Bloom filters and g hash tables. The HBF uses the positioning and filtering functions of the CBFs in the on-chip memory to greatly reduce the access overhead of the off-chip memory, so that the overall access cost of the HBF is reduced, the data packet forwarding rate is increased, and flooding attacks are effectively avoided.

Owner: HUNAN UNIV
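
The filtering idea in the abstract above, where a small on-chip counter Bloom filter is consulted before the off-chip hash table, can be sketched in Python as follows. This is an illustrative simplification, not the patented design: the class name `HashBloomFilter`, the parameters `g`, `m` and `k`, the SHA-256 based hashing, and the `off_chip_accesses` counter are assumptions, and the per-table counters mentioned in the abstract are omitted.

```python
import hashlib


def _hash(name: str, salt: int) -> int:
    """Deterministic hash of a name; the salt selects independent hash functions."""
    digest = hashlib.sha256(f"{salt}:{name}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


class HashBloomFilter:
    def __init__(self, g: int = 4, m: int = 64, k: int = 3):
        self.g, self.m, self.k = g, m, k
        self.cbfs = [[0] * m for _ in range(g)]  # stand-in for on-chip counter Bloom filters
        self.tables = [{} for _ in range(g)]     # stand-in for off-chip hash tables
        self.off_chip_accesses = 0               # lookups that reached a hash table

    def _group(self, name: str) -> int:
        # First-level hash: spreads names across the g filter/table pairs.
        return _hash(name, 0) % self.g

    def _slots(self, name: str) -> list:
        # k counter positions inside the selected counter Bloom filter.
        return [_hash(name, i + 1) % self.m for i in range(self.k)]

    def insert(self, name: str, entry) -> None:
        grp = self._group(name)
        if name not in self.tables[grp]:
            for slot in self._slots(name):
                self.cbfs[grp][slot] += 1
        self.tables[grp][name] = entry

    def delete(self, name: str) -> None:
        grp = self._group(name)
        if name in self.tables[grp]:
            del self.tables[grp][name]
            for slot in self._slots(name):
                self.cbfs[grp][slot] -= 1

    def lookup(self, name: str):
        grp = self._group(name)
        # A zero counter proves the name is absent, so the table is not touched.
        if any(self.cbfs[grp][slot] == 0 for slot in self._slots(name)):
            return None
        self.off_chip_accesses += 1
        return self.tables[grp].get(name)
```

Names that were never inserted are usually rejected by the filter alone; only filter hits (true entries and occasional false positives) pay for a hash table access.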

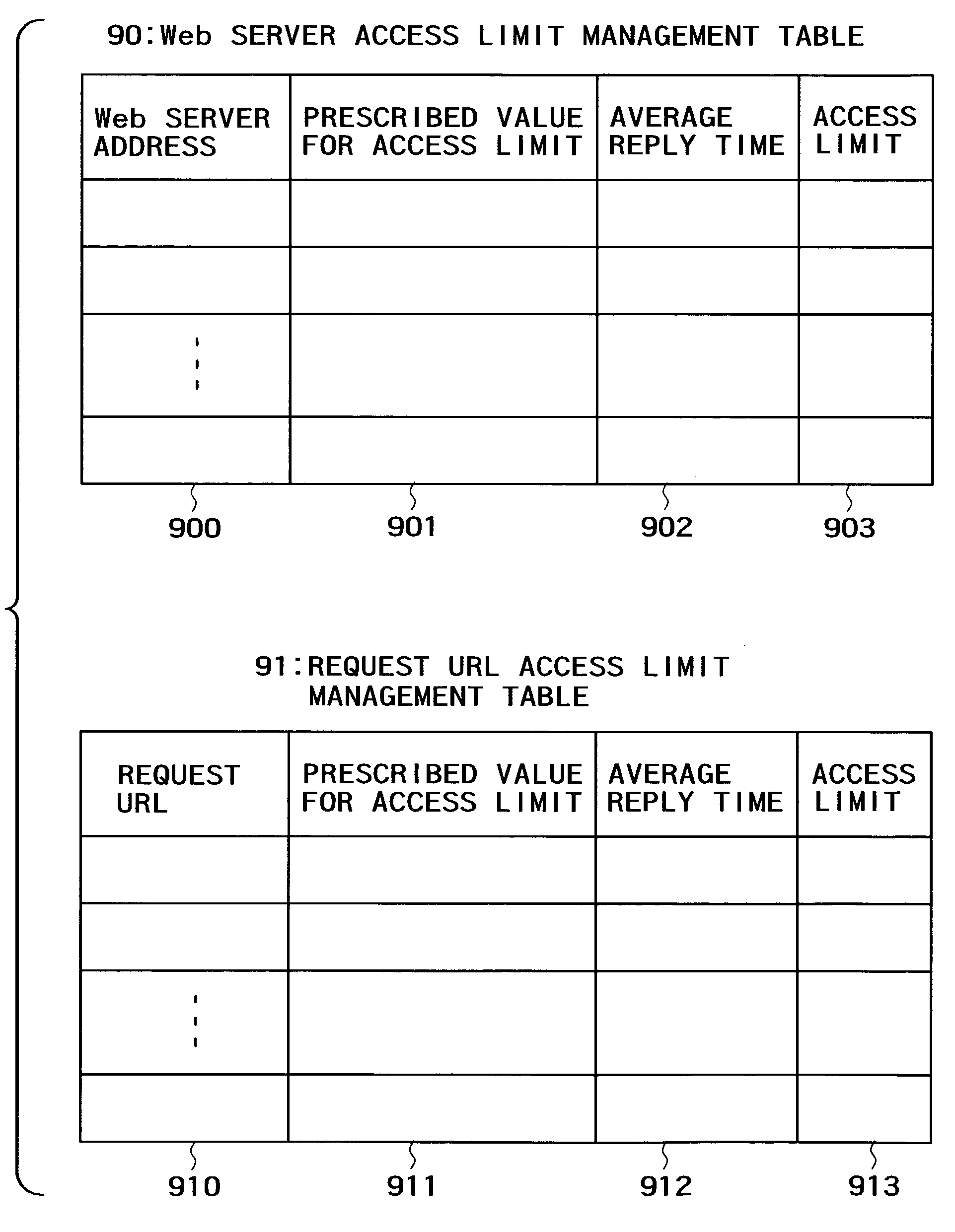

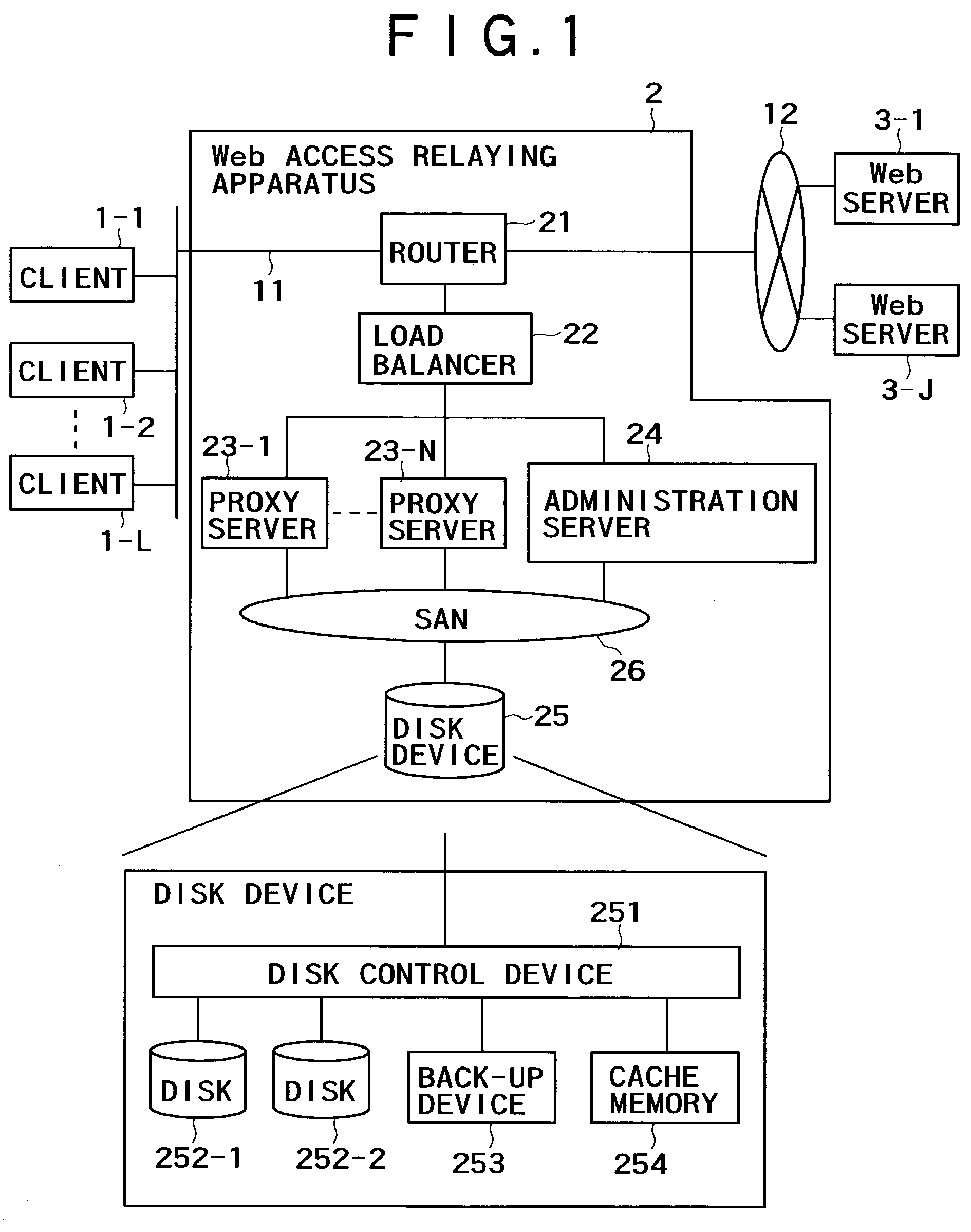

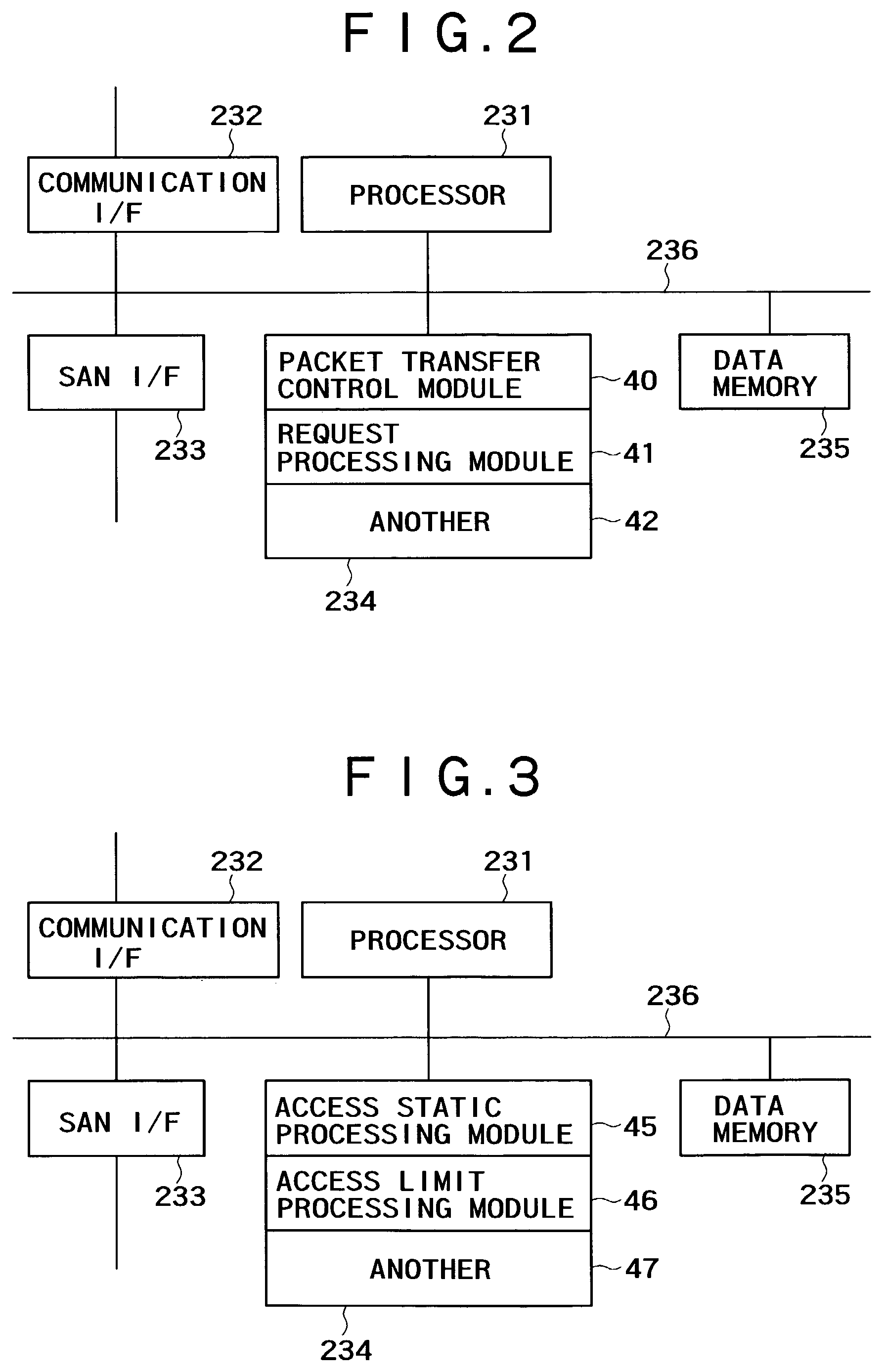

Access relaying apparatus

Inactive | US7558854B2 | Reduce access overhead | Preventing reduction in service providing | Multiple digital computer combinations | Data switching networks | Web service | Proxy server

The aim is to lower the overhead of managing an access log and the risk of losing the access log due to a failure, and to prevent deterioration in service-providing performance caused by access concentration on a specific Web server, in an access relaying apparatus that includes a plurality of proxy servers. The access relaying apparatus has a plurality of proxy servers 23, an administration server 24 for statistical processing, and a shared disk 25 accessible from them. Each of the proxy servers outputs an access log to the shared disk, and the administration server reads the access logs from the shared disk and performs statistical processing. An access limit for a specific Web server is determined based on the result of the statistical processing and notified to each of the proxy servers, and the number of accesses to the Web server is controlled.

Owner: GOOGLE LLC

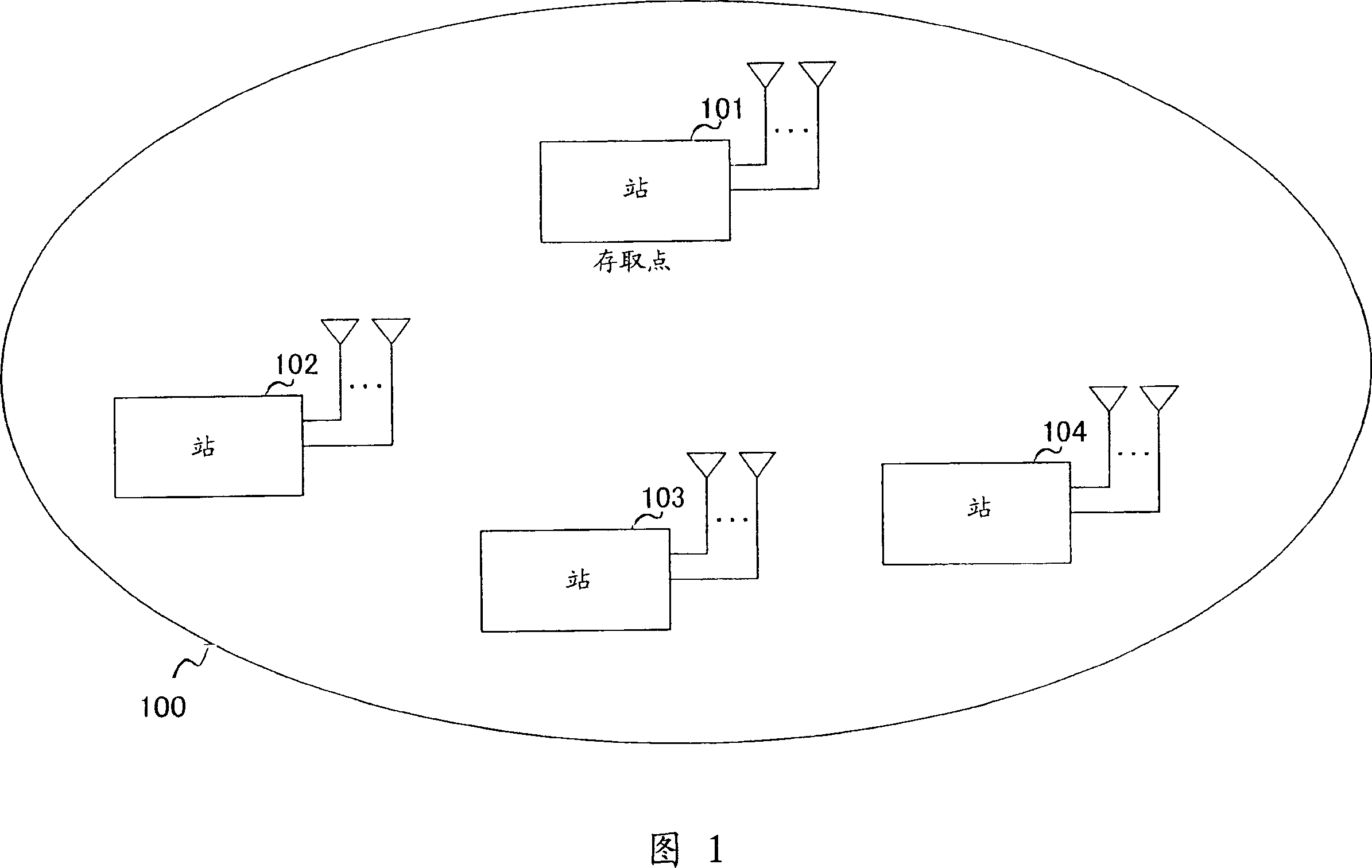

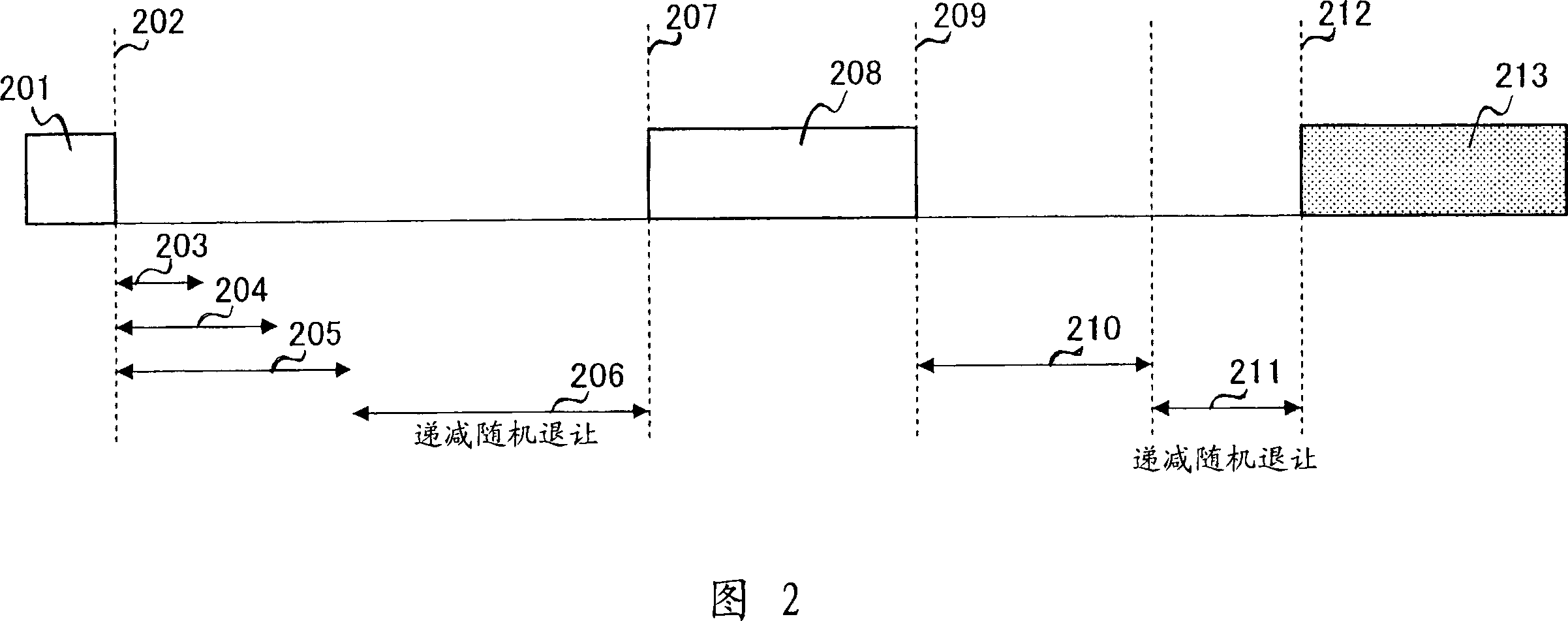

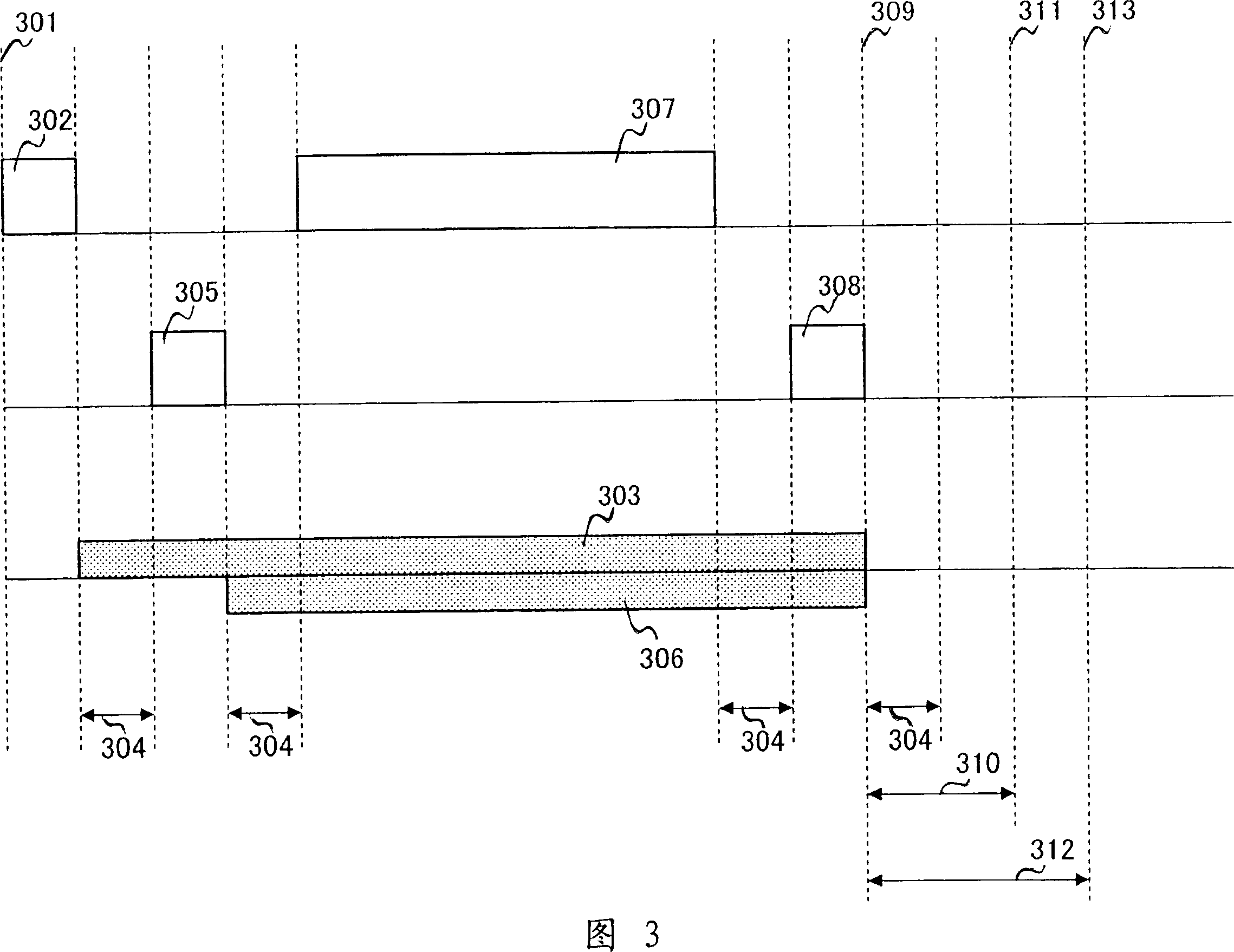

Method for reducing medium access overhead in a wireless network

Inactive | CN1926814A | Reduce access overhead | Increase profit | Data switching by path configuration | Communications system | Context sensitivity

The invention includes methods for achieving efficient channel access in a wireless communications system. The invention is embodied in a wireless network adapter that is present in all stations belonging to the network. The invention describes methods by which access overhead may be reduced by introducing the concept of context-sensitive frame timing, under which stations redefine and interpret frame timing depending on context and signaling. The result is an improvement in medium utilization efficiency and, consequently, an overall improvement in network throughput.

Owner: PANASONIC CORP

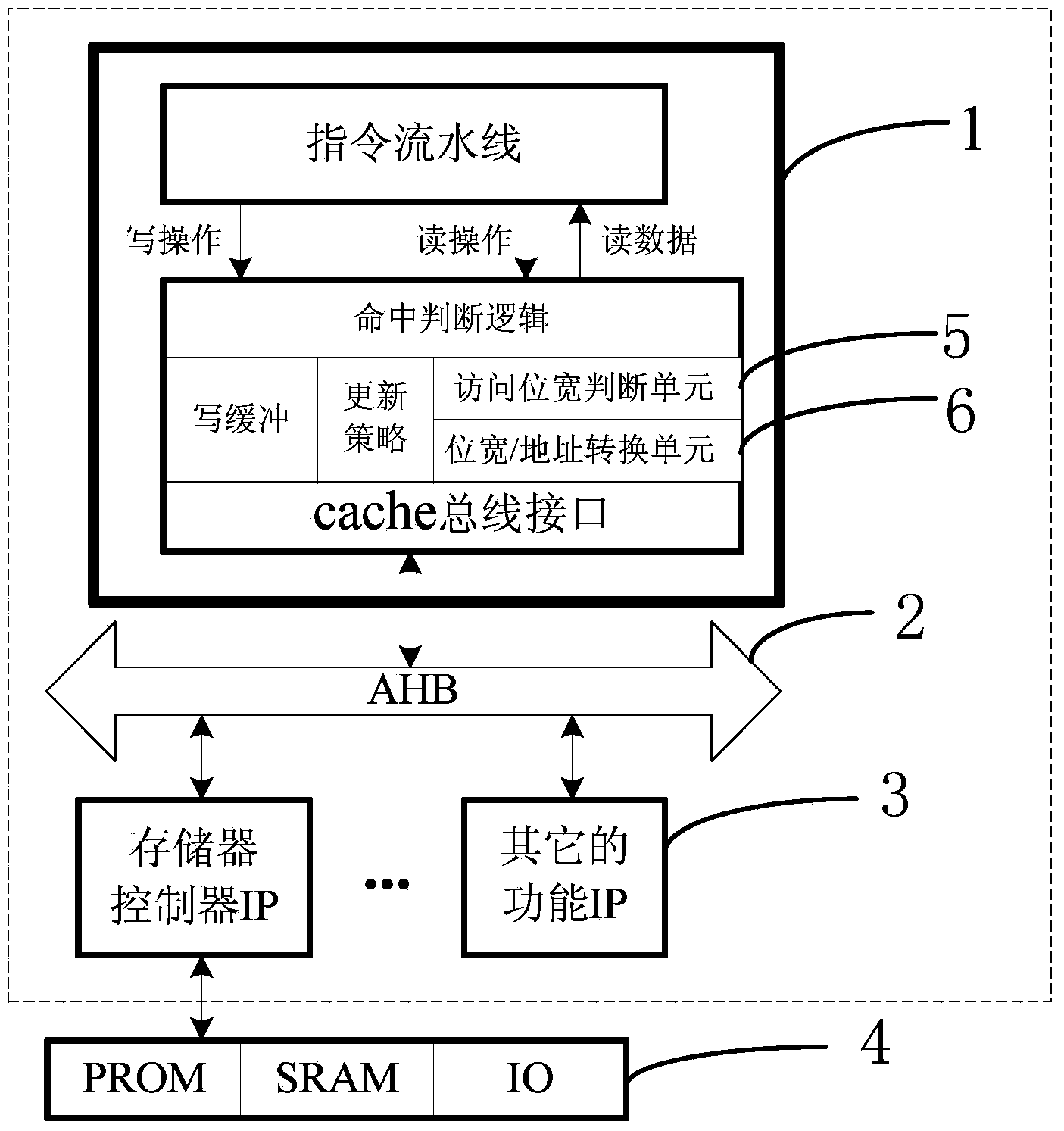

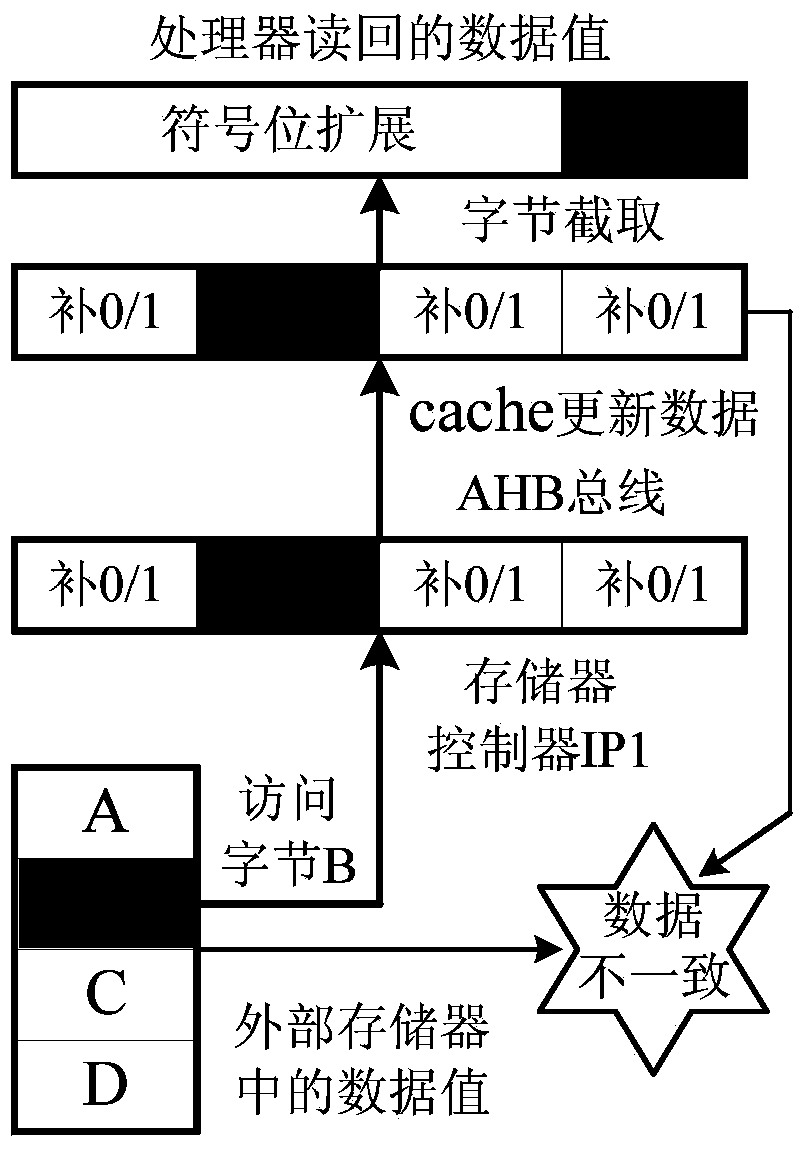

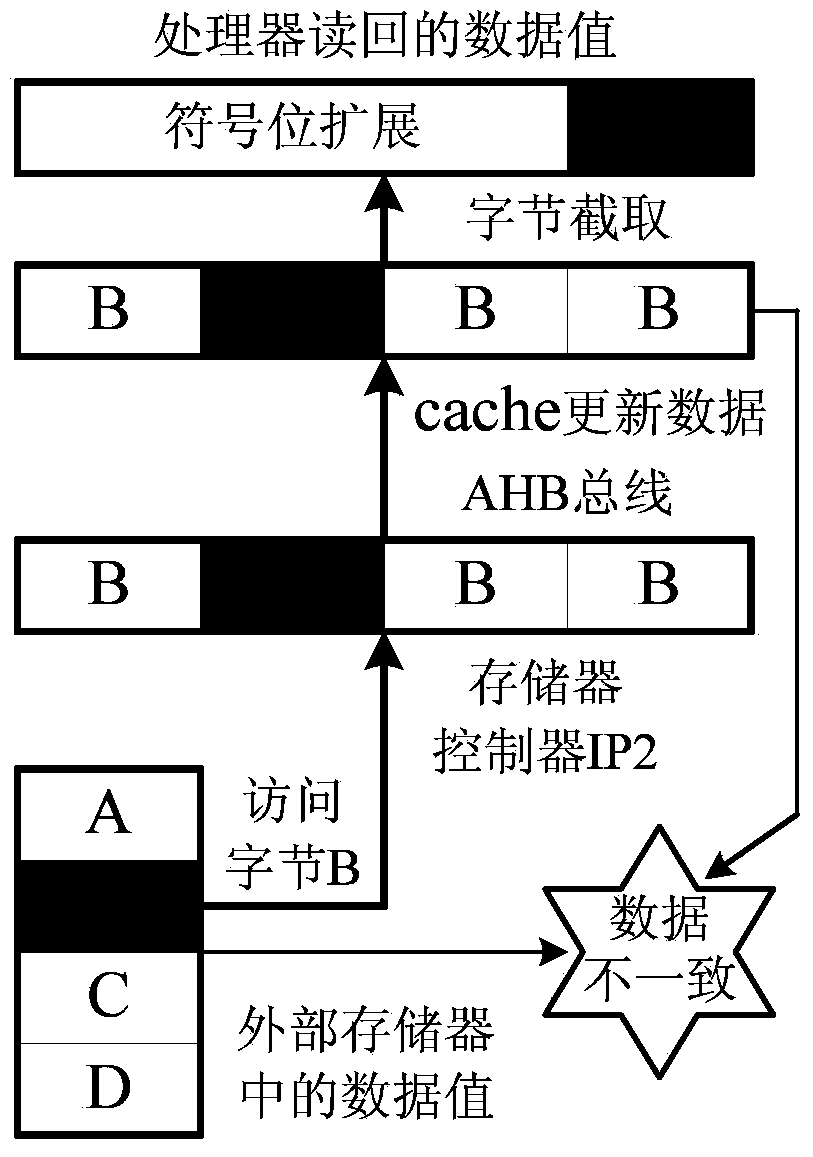

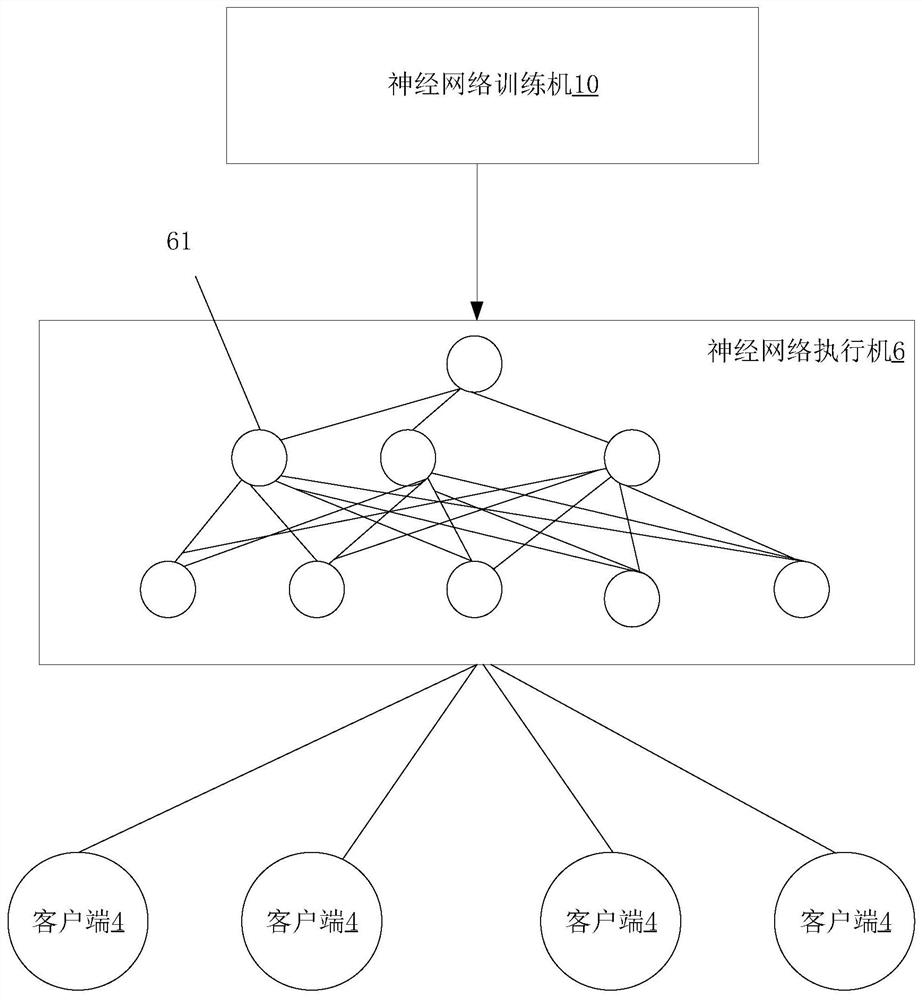

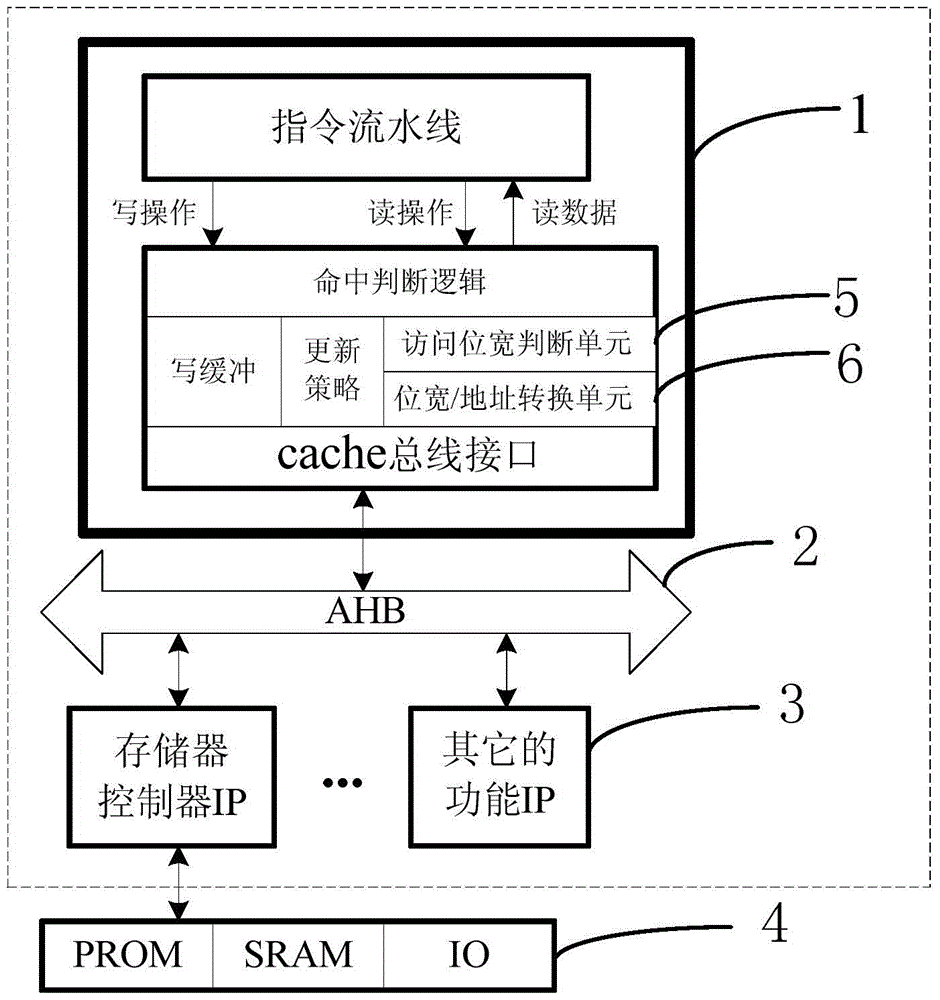

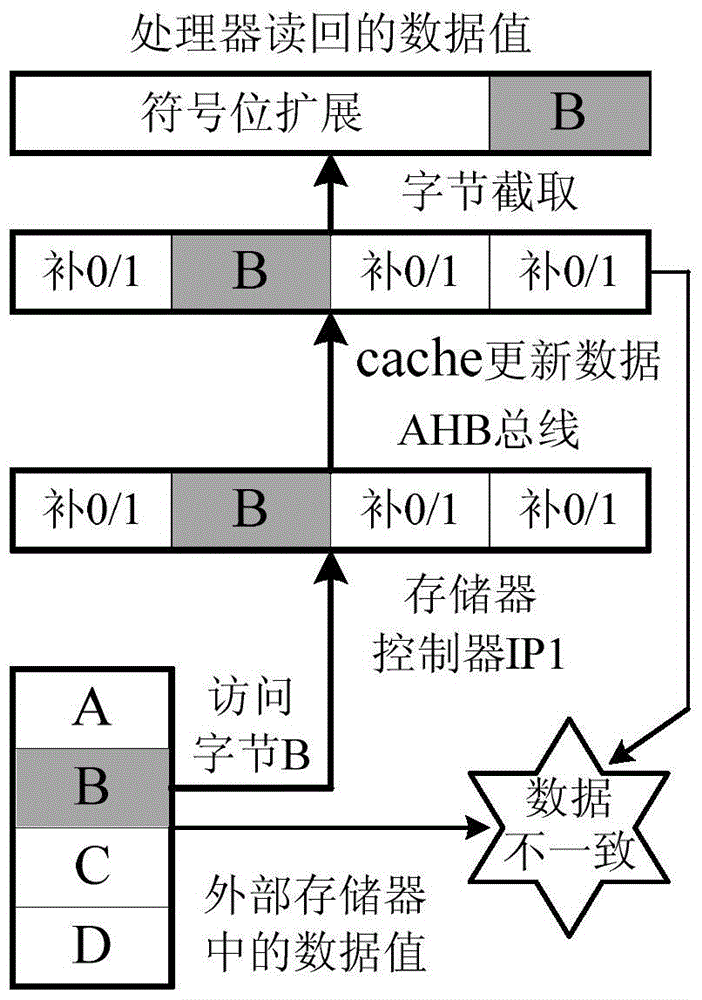

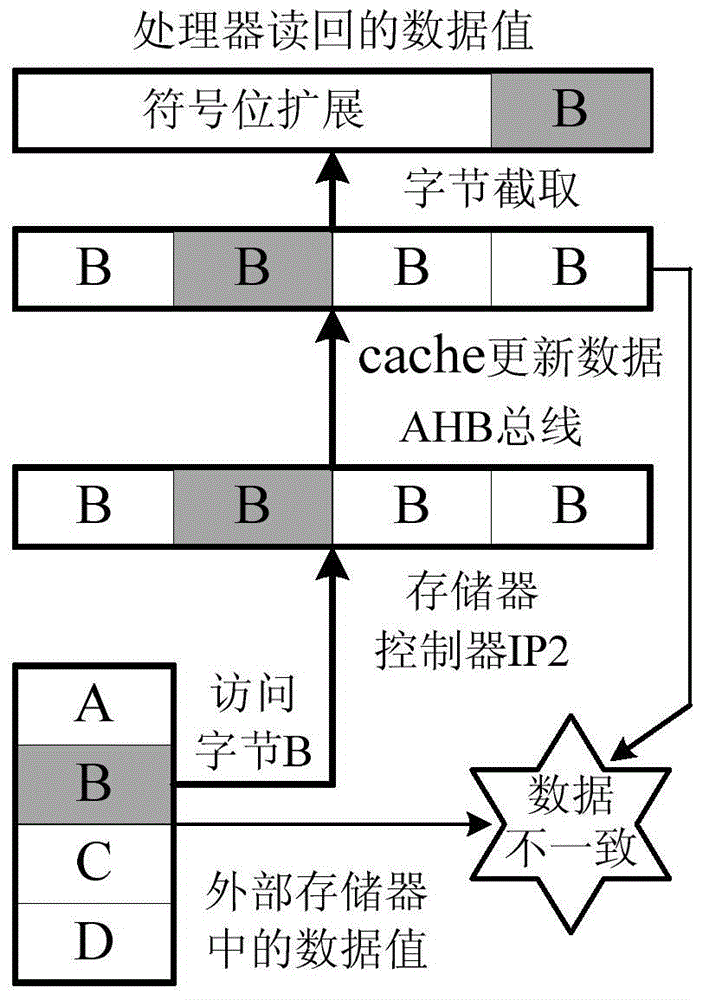

Unified bit width converting structure and method in cache and bus interface of system chip

Active | CN104375962A | Improve compatibility | Ensure consistency | Memory addressing/allocation/relocation | Logic cell | Bus interface

The invention discloses a unified bit width converting structure and method in a cache and a bus interface of a system chip. The converting structure comprises a processor core and a plurality of IP cores that exchange data with the processor core through an on-chip bus, and a memory controller IP that communicates with an off-chip main memory. The processor core comprises an instruction pipeline and a hit judgment logic unit that receives operation instructions from the instruction pipeline. An access bit width judgment unit and a bit width/address converting unit are arranged between the hit judgment logic unit and the cache bus interface, the hit judgment logic unit sends its judgment result to the instruction pipeline, and the processor core is connected to the on-chip bus through the cache bus interface. According to the converting method, for a byte or half-word read access, if a cache miss occurs and the accessed space belongs to the cacheable area, the bit width/address converting unit converts the byte or half-word read access into a one-word access, the memory access is completed over the bus, the original update strategy is not affected, and flexibility is retained.

Owner: NO 771 INST OF NO 9 RES INST CHINA AEROSPACE SCI & TECH

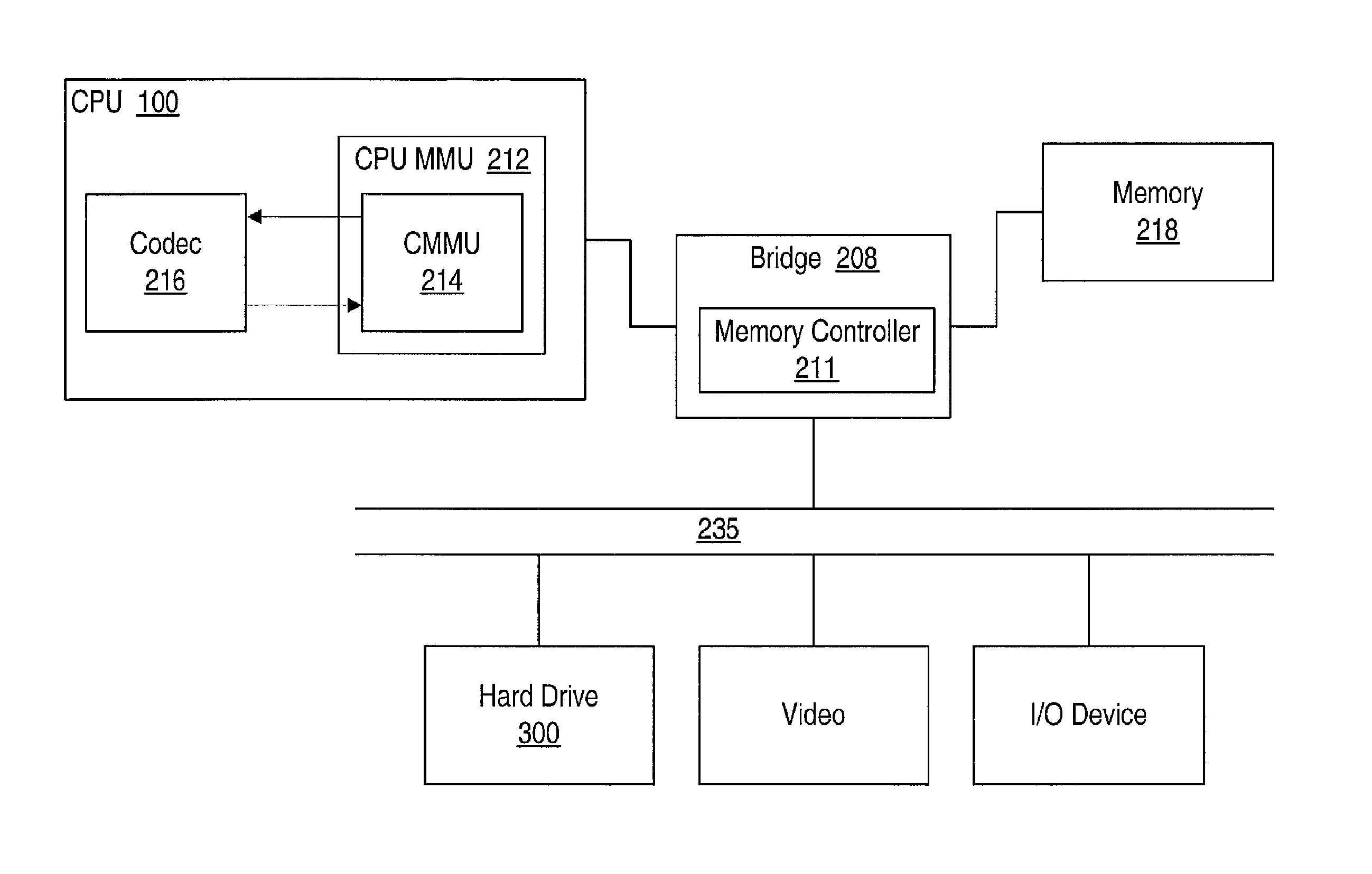

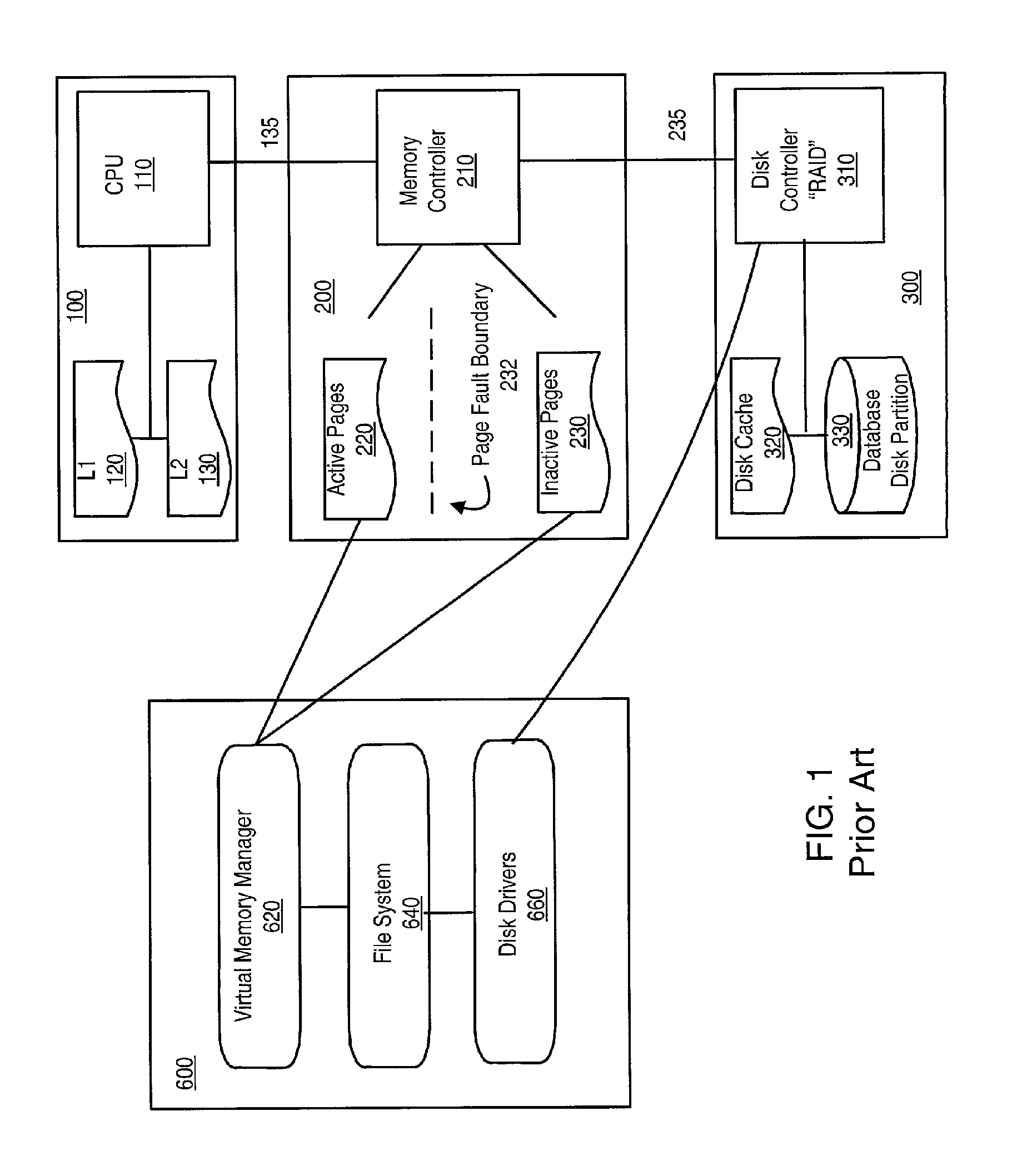

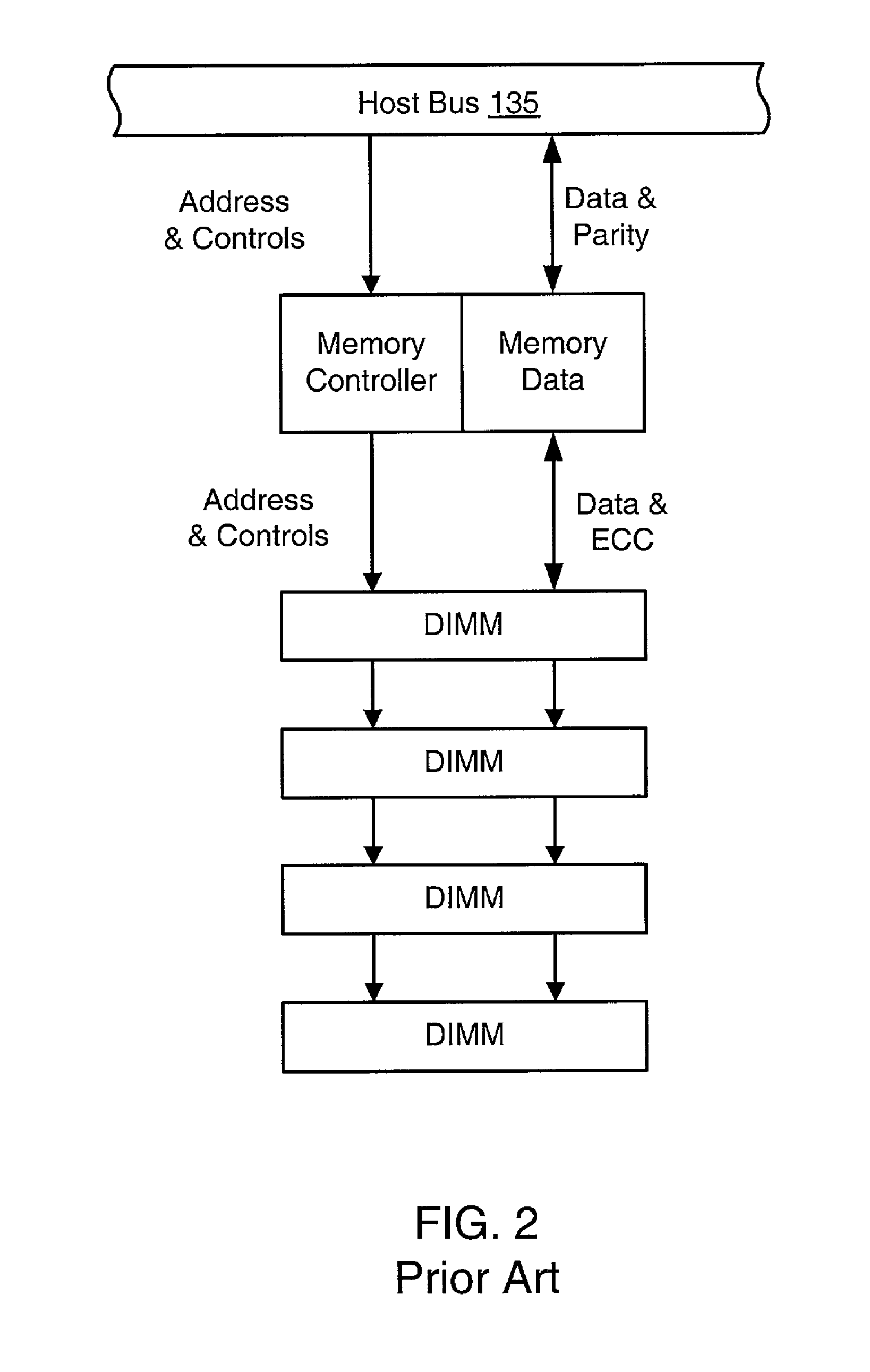

System and method for managing compression and decompression of system memory in a computer system

Inactive | USRE43483E1 | Increase effective size | Reduce access overhead | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Management unit | Parallel computing

Owner: MOSSMAN HLDG

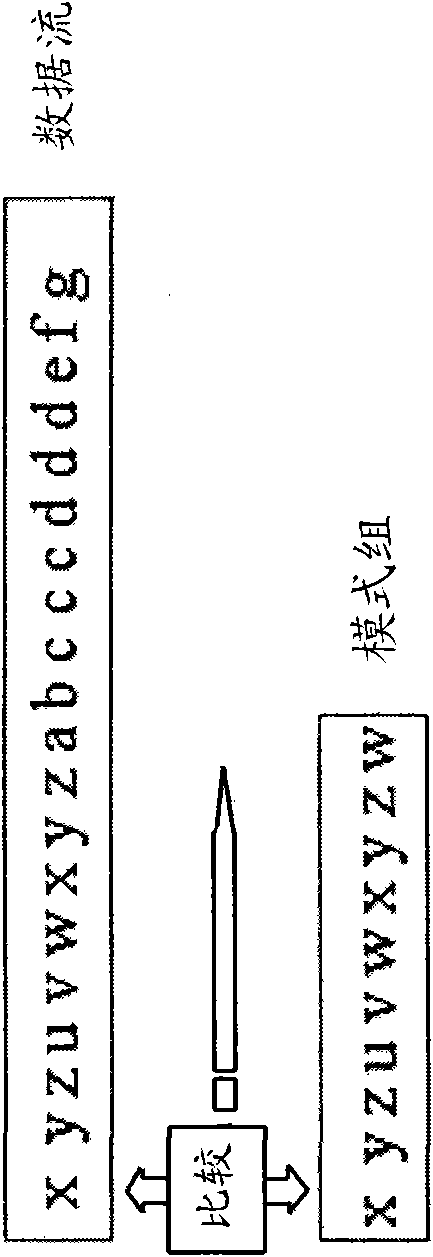

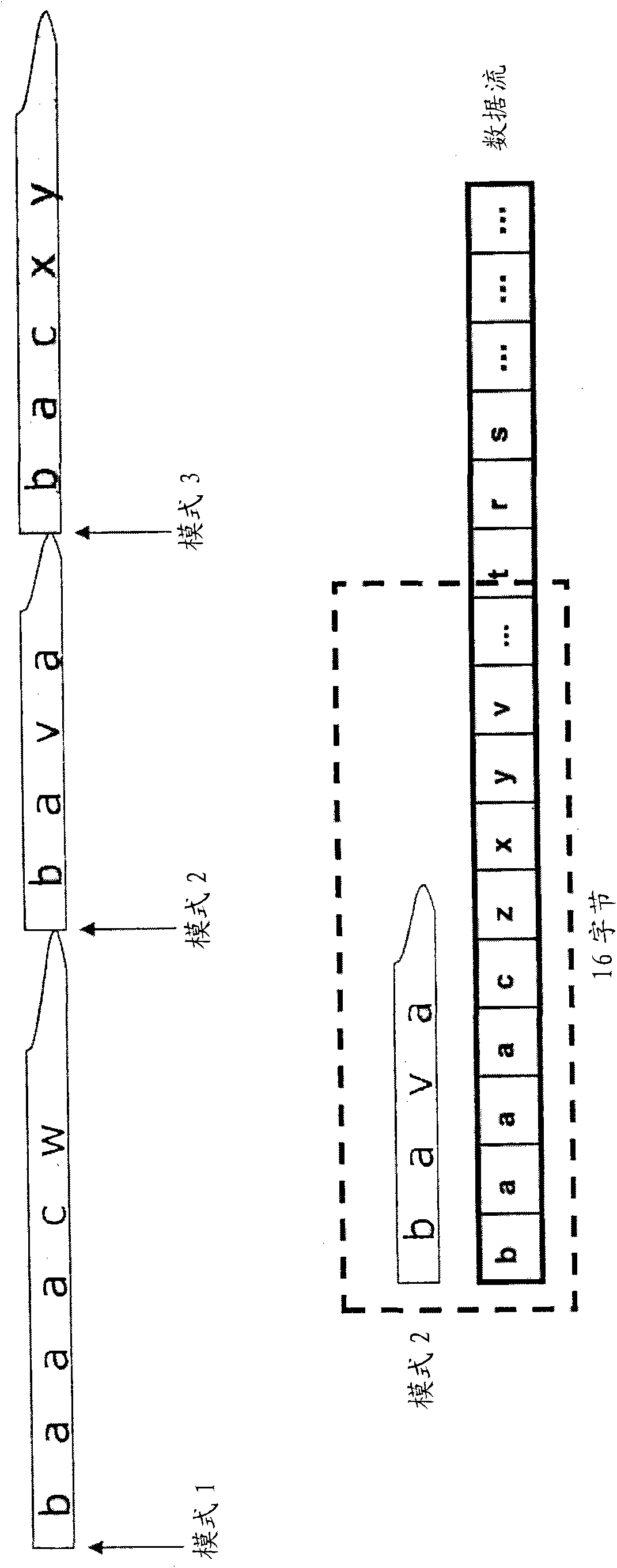

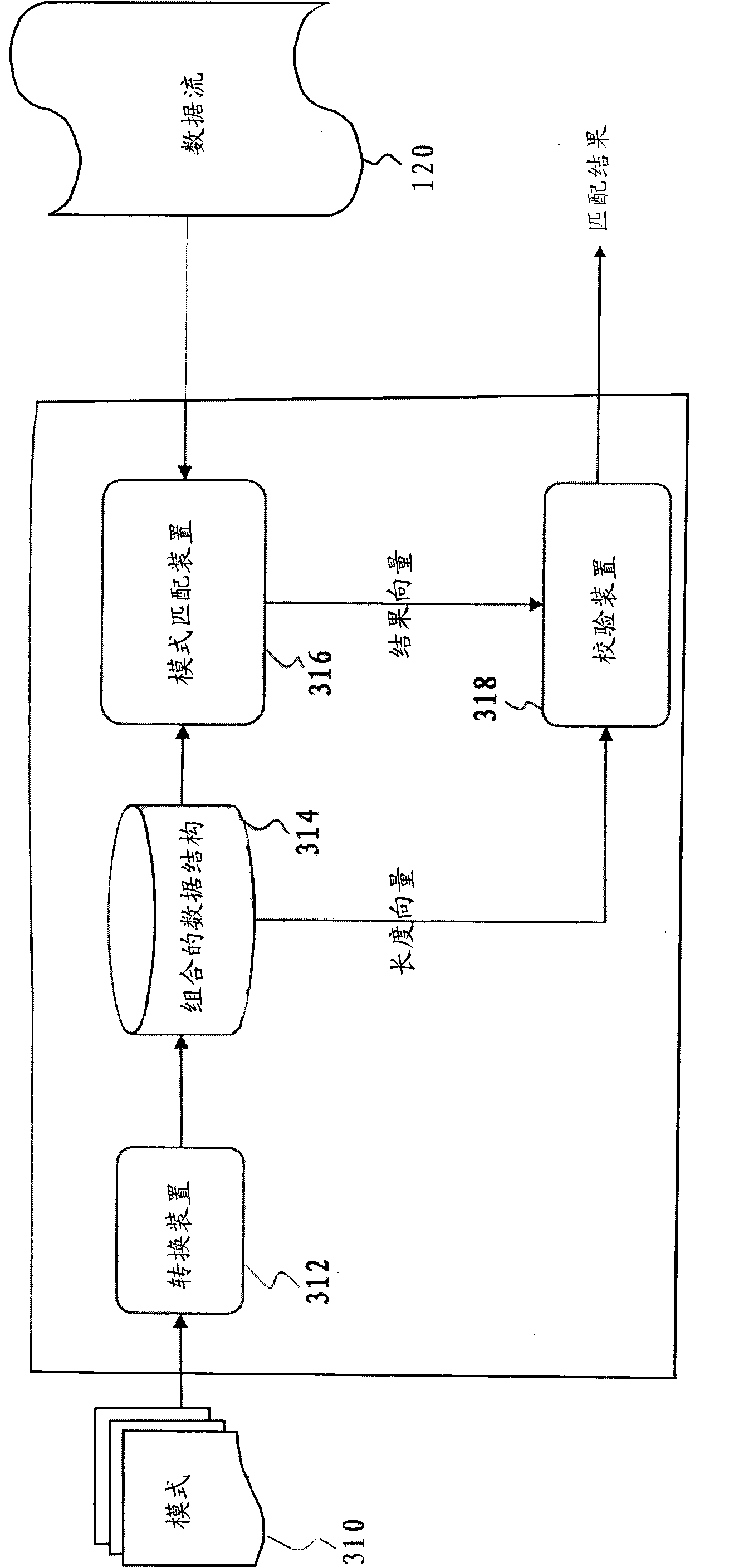

Equipment and method for parallel mode matching

Inactive | CN101572693A | Increase profit | Reduce access overhead | Error prevention | Special data processing applications | Data stream | Pattern matching

The invention provides equipment and a method for parallel pattern (mode) matching. The equipment comprises a conversion device and a matching device. The conversion device combines a plurality of patterns into a pattern group and joins the ith character of each pattern in the group to form the ith character vector, where i = 1, 2, 3, ..., N and N is the number of characters in the longest pattern of the group. The matching device, starting from the first character vector, compares each character in the ith character vector with the ith character in the data stream to carry out parallel pattern matching. The advantages are that processor utilization is greatly increased by using SIMD instructions, the memory access cost is lower, code size is reduced by using fewer instructions, and branch delay is minimized.

Owner: IBM CORP
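
The character-vector construction described in the abstract above can be sketched in plain Python, with list comprehensions standing in for SIMD lanes. This is only an illustration under stated assumptions: the function names `build_char_vectors` and `match_at`, the `PAD` filler for patterns shorter than N, and the early exit are not taken from the patent.

```python
from typing import List

PAD = None  # lane filler for patterns shorter than N (assumption of this sketch)


def build_char_vectors(patterns: List[str]) -> List[list]:
    """Vector i holds the i-th character of every pattern in the group."""
    n = max(len(p) for p in patterns)
    return [[p[i] if i < len(p) else PAD for p in patterns] for i in range(n)]


def match_at(vectors: List[list], data: str, pos: int) -> List[bool]:
    """Return, per pattern, whether it matches the data stream starting at pos."""
    alive = [True] * len(vectors[0])
    for i, vec in enumerate(vectors):
        # "" never equals a pattern character, so running off the end is a mismatch.
        ch = data[pos + i] if pos + i < len(data) else ""
        # One lane-wise comparison covers all patterns, as a SIMD compare would.
        alive = [a and (c is PAD or c == ch) for a, c in zip(alive, vec)]
        if not any(alive):
            break
    return alive


vectors = build_char_vectors(["abc", "ab", "bcd"])
result = match_at(vectors, "xabcd", 1)
```

Each stream character is loaded once and compared against a whole vector, instead of once per pattern, which is the source of the lower memory access cost the abstract claims.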

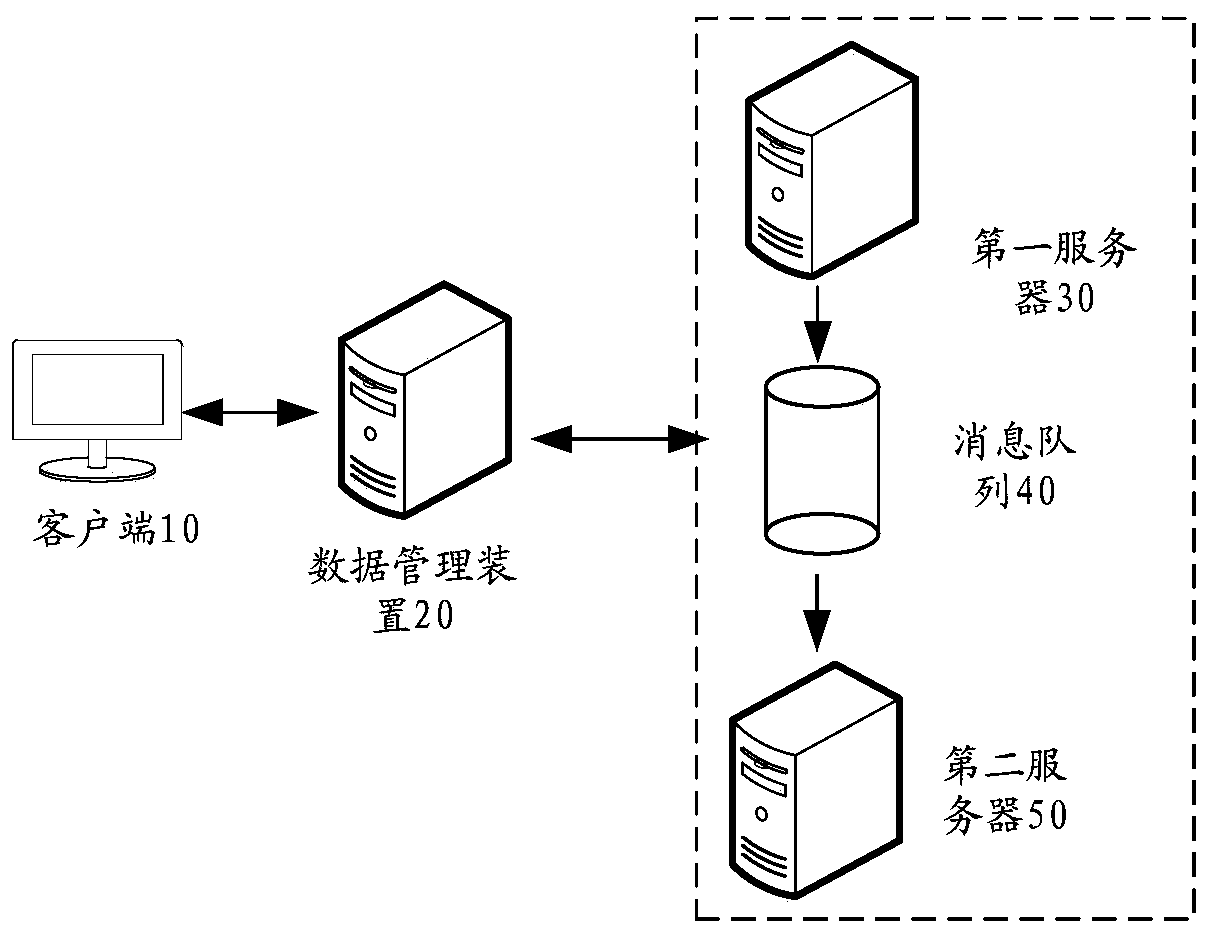

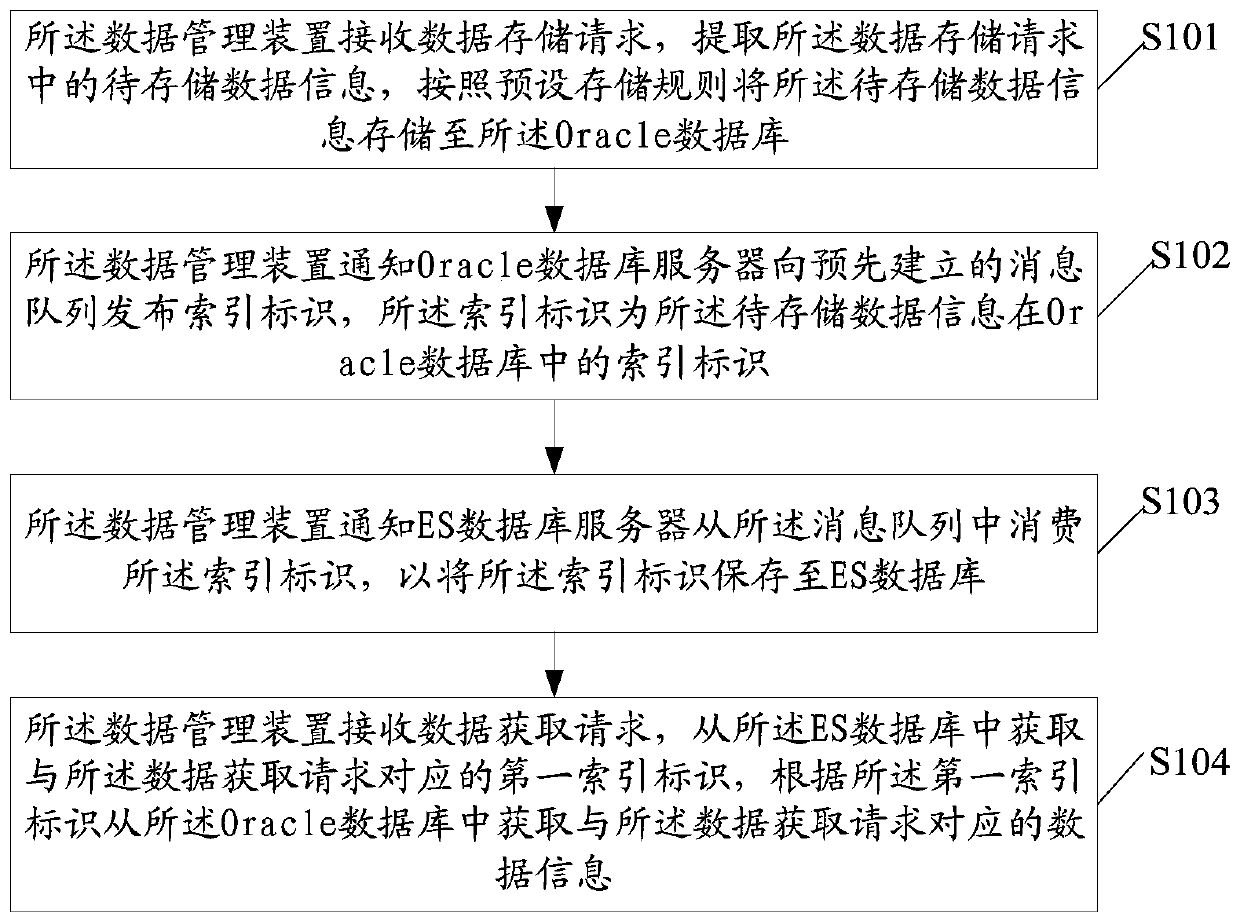

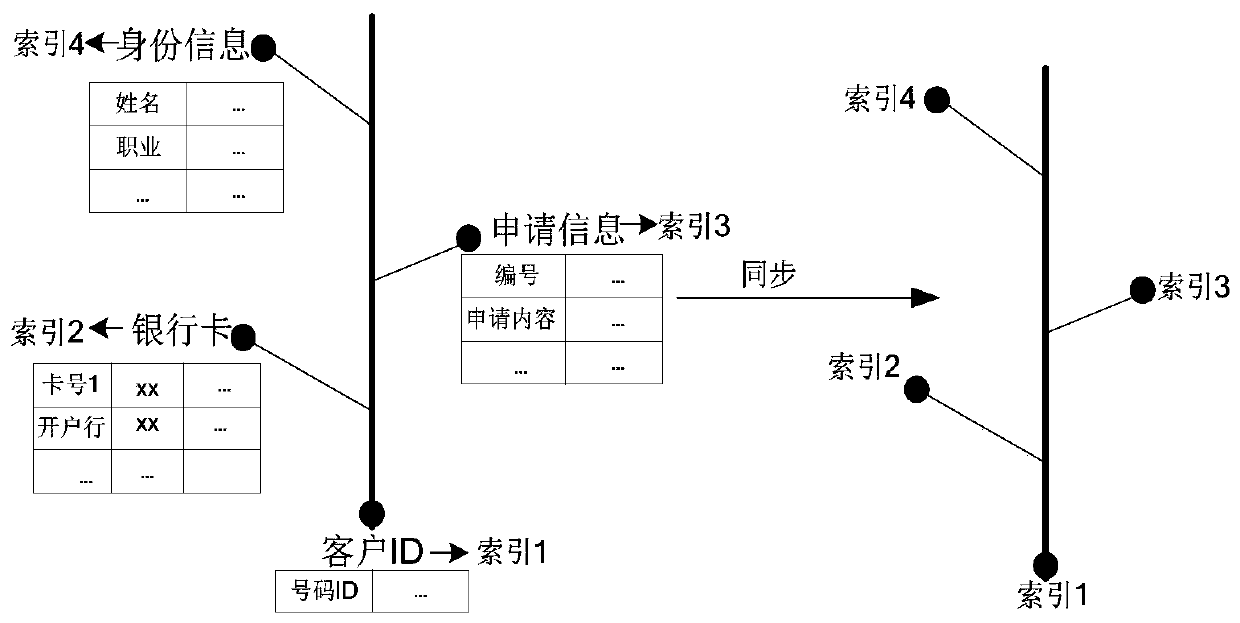

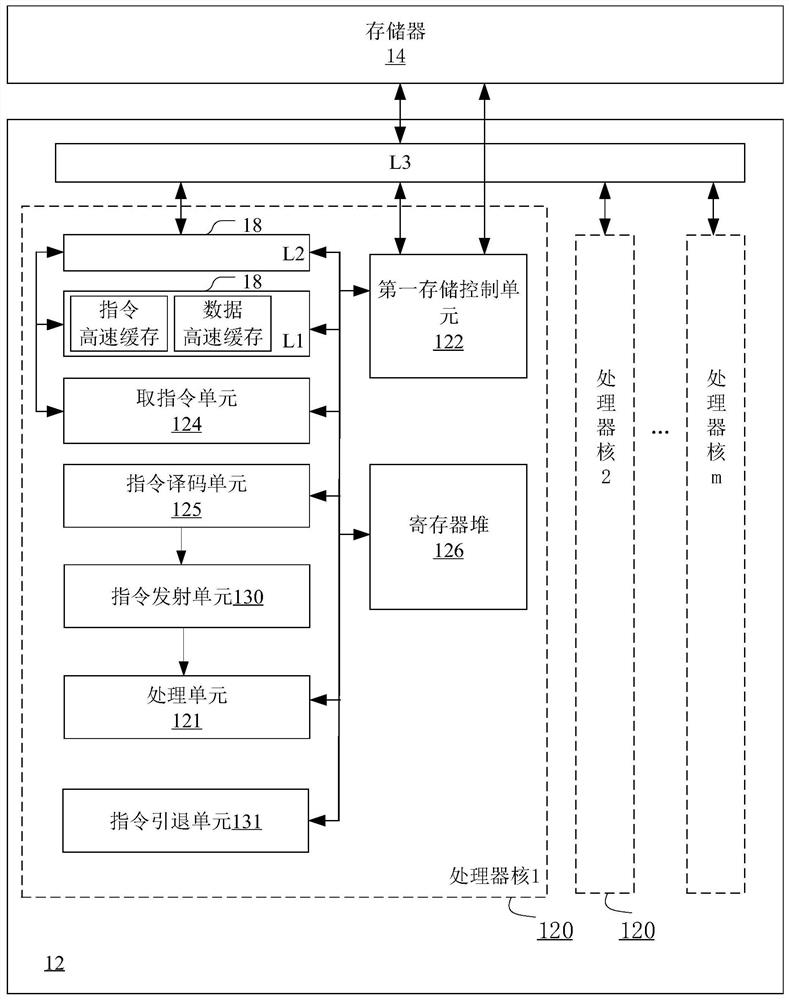

Data management method and related product

Pending | CN110110006A | Reduce access overhead | Reduce overhead | Database distribution/replication | Multi-dimensional databases | Message queue | Database server

The embodiment of the invention relates to the technical field of data processing, and particularly discloses a data management method and a related product. The method comprises the steps of: receiving a data storage request, extracting the to-be-stored data information from the data storage request, and storing the to-be-stored data information into an Oracle database according to a preset storage rule; notifying an Oracle database server to publish an index identifier to a pre-established message queue, wherein the index identifier is the index identifier of the to-be-stored data information in the Oracle database; notifying an ES database server to consume the index identifier from the message queue so as to store the index identifier into an ES database; and receiving a data acquisition request, acquiring a first index identifier corresponding to the data acquisition request from the ES database, and acquiring the data information corresponding to the data acquisition request from the Oracle database according to the first index identifier. The method helps improve data access speed.

Owner: PINGAN PUHUI ENTERPRISE MANAGEMENT CO LTD

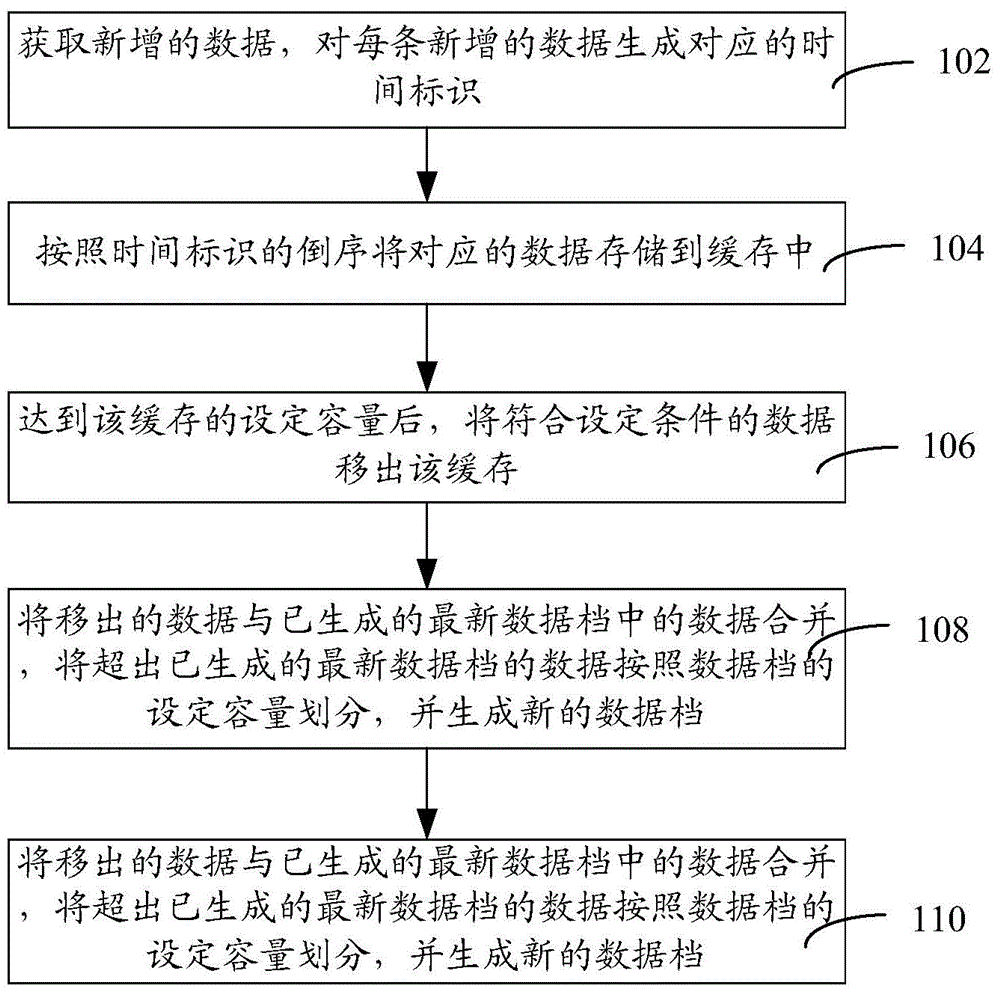

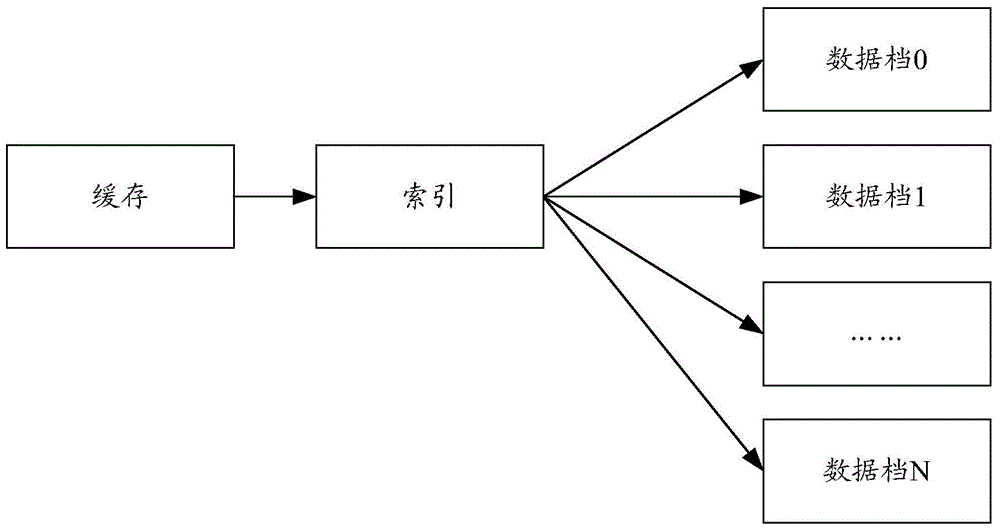

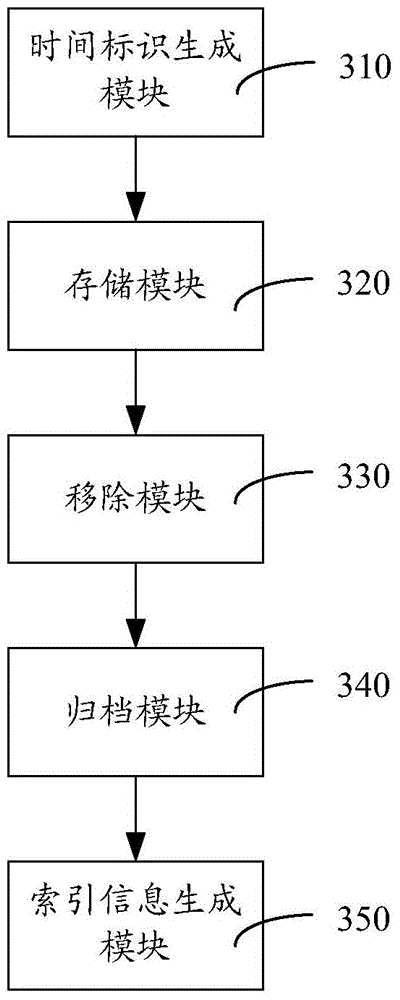

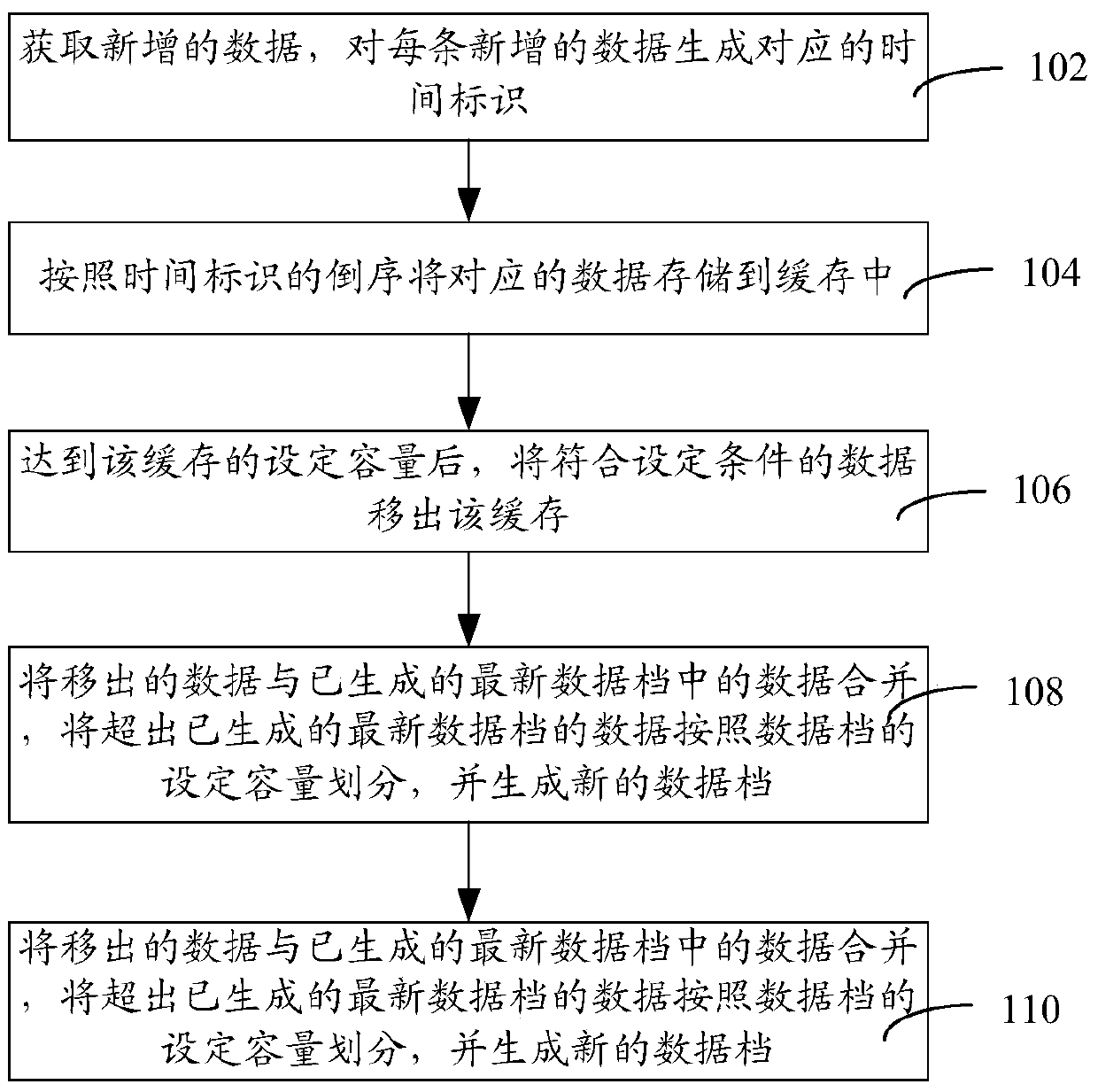

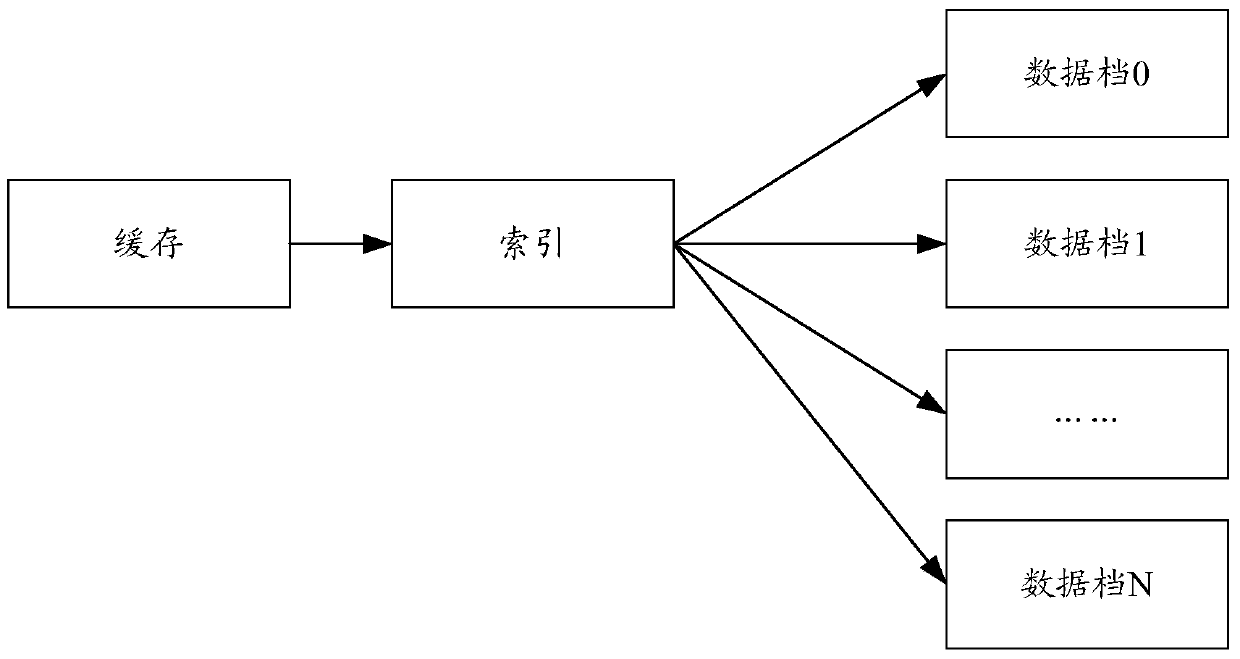

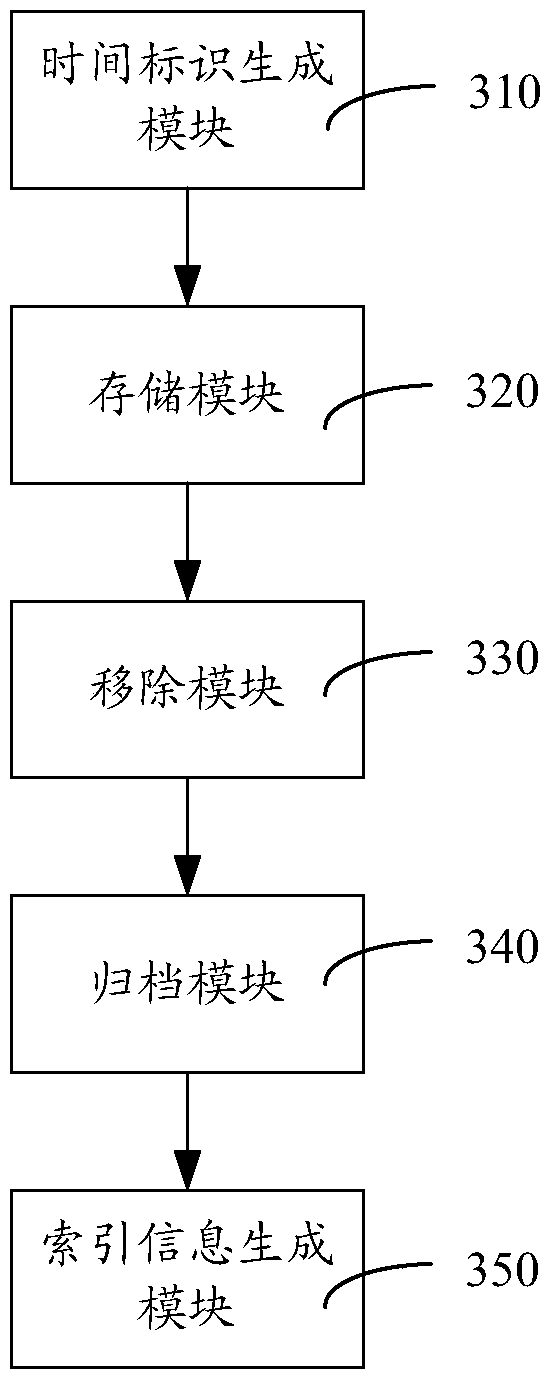

Data storage method and device

Active | CN106156038A | Reduce waste | Reduce data access overhead | Special data processing applications | Reverse order | Data access

The invention relates to a data storage method and device. The method includes the following steps: obtaining newly added data, generating a corresponding time stamp for each item of newly added data, storing the data in a cache in reverse time-stamp order, removing the data that meets set conditions from the cache after the set capacity of the cache is reached, combining the removed data with the data in the most recently generated data file, splitting off the data that exceeds the set capacity of the data file to generate a new data file, generating indexing information for the new data file, and updating the indexing information of the most recently generated data file. Because a time stamp is generated for every item and the data is stored in the cache in reverse time-stamp order, the size of the data stored in this way is not limited by the maximum key value, and subsequent reads can be served from the cache or from a data file without reading the whole list, so traffic waste and data access overhead are reduced.

Owner: TENCENT TECH (SHENZHEN) CO LTD +1

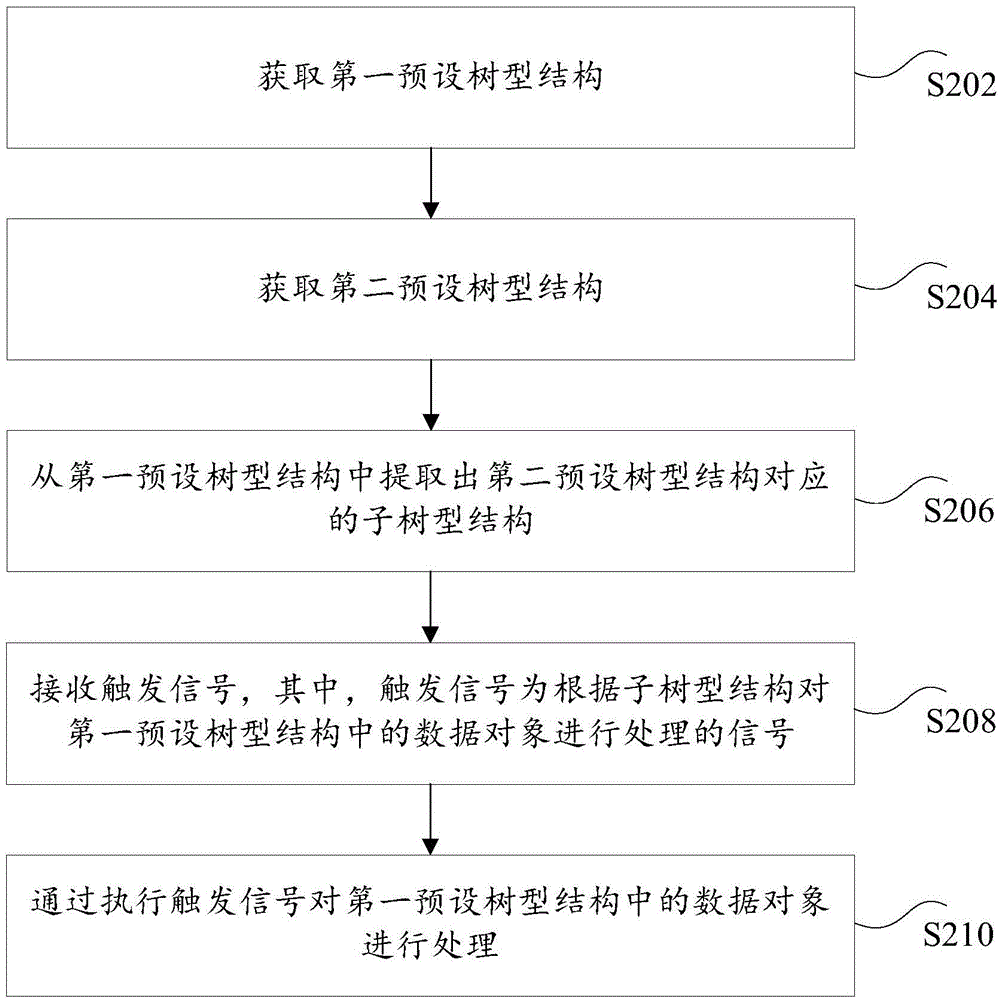

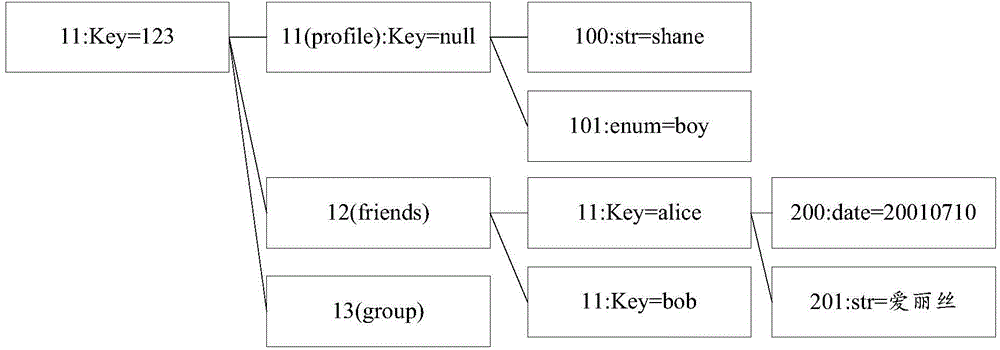

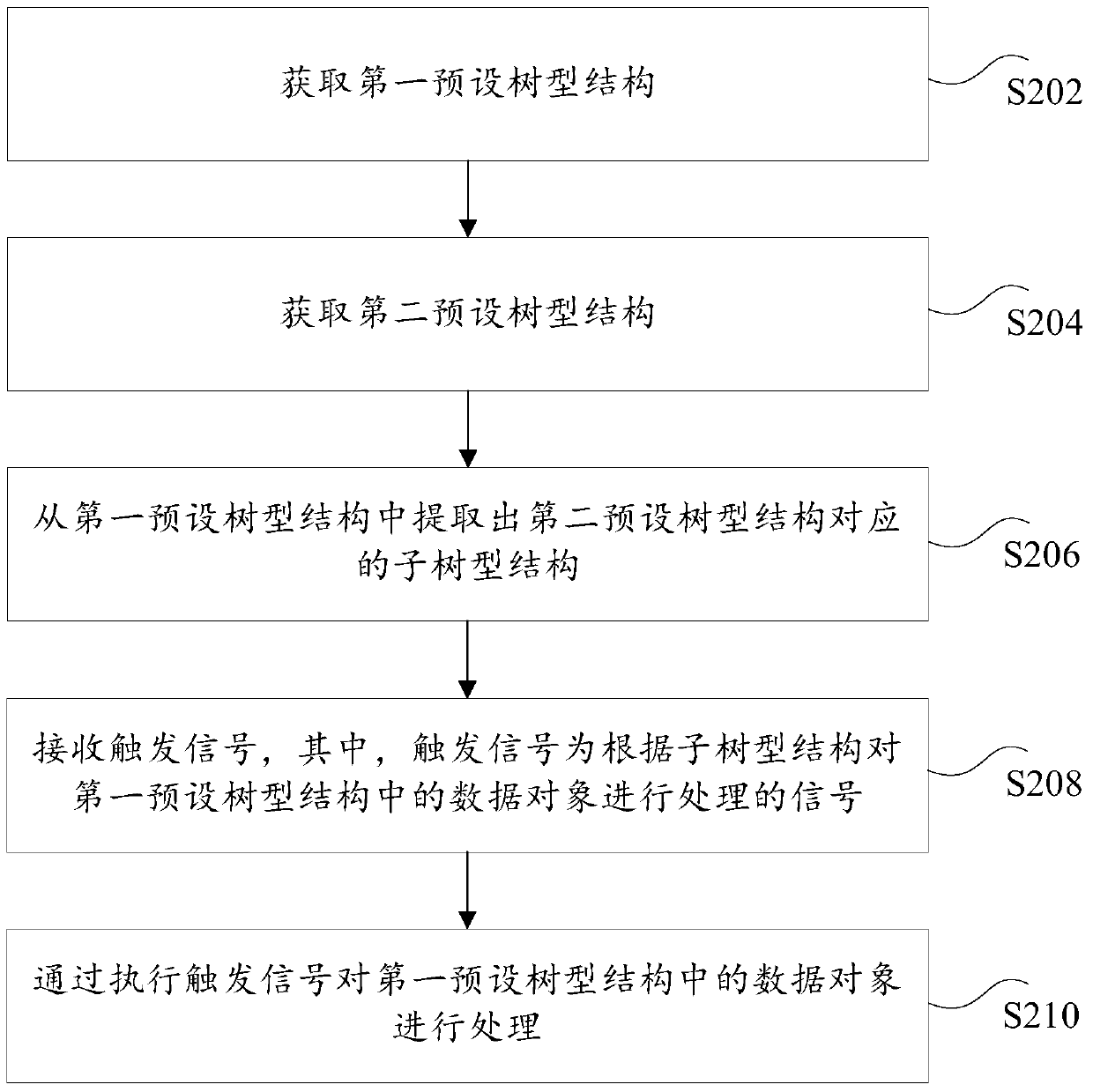

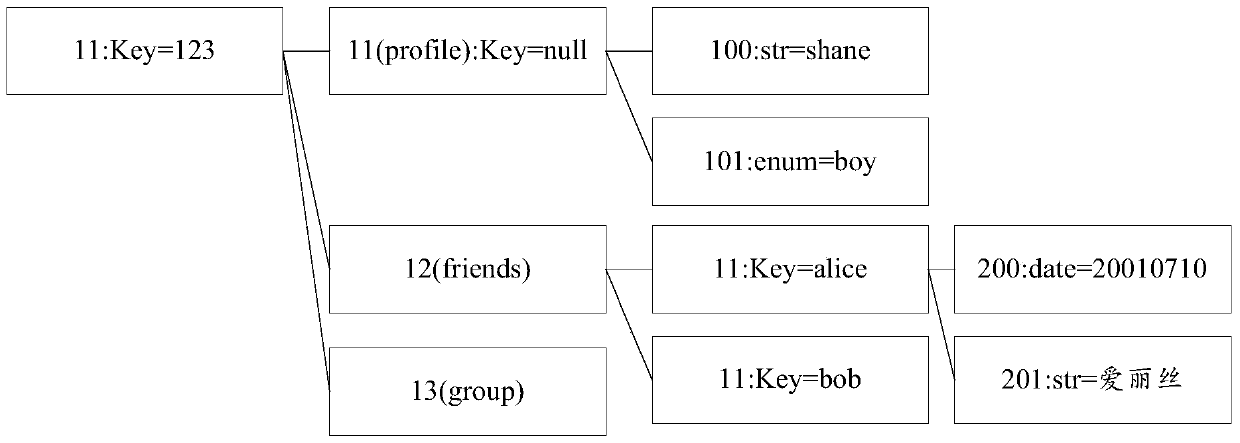

Data processing method and device

Active | CN105843809A | Improve processing efficiency | Reduce access overhead | Special data processing applications | Data mining | Data library

The invention discloses a data processing method and device. The data processing method includes the following steps: acquiring a first preset tree structure, wherein the first preset tree structure is used for storing a preset data object; acquiring a second preset tree structure, wherein the second preset tree structure is used for requesting the path of the data object at a target node in the first preset tree structure; extracting a sub-tree structure corresponding to the second preset tree structure from the first preset tree structure; receiving a trigger signal, wherein the trigger signal is used for processing the data object in the first preset tree structure according to the sub-tree structure; and processing the data object in the first preset tree structure by executing the trigger signal. The data processing method and device can solve the technical problem that, when a key-value storage database reads or writes part of the data, all the data objects need to be processed.

Owner: TENCENT TECH (SHENZHEN) CO LTD

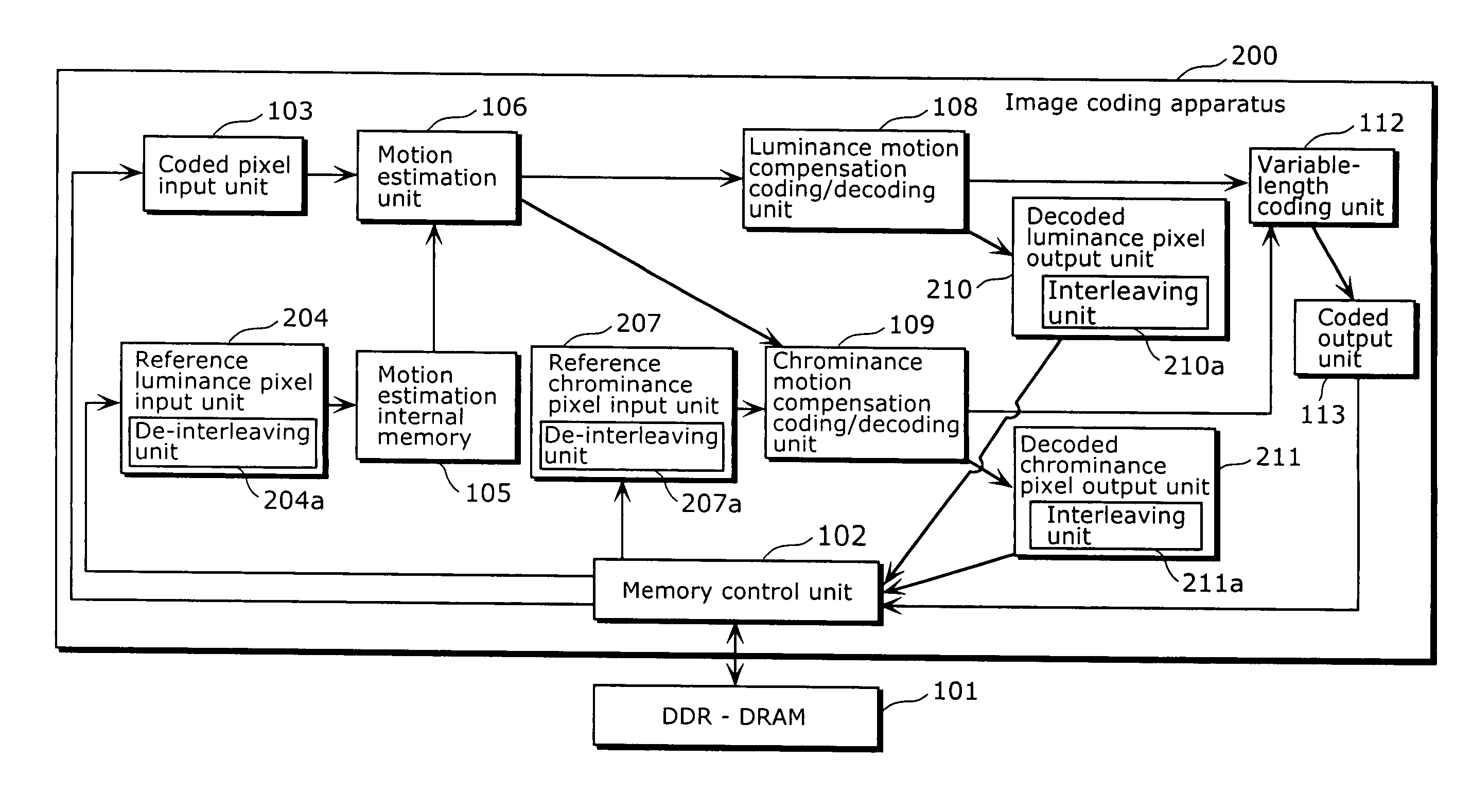

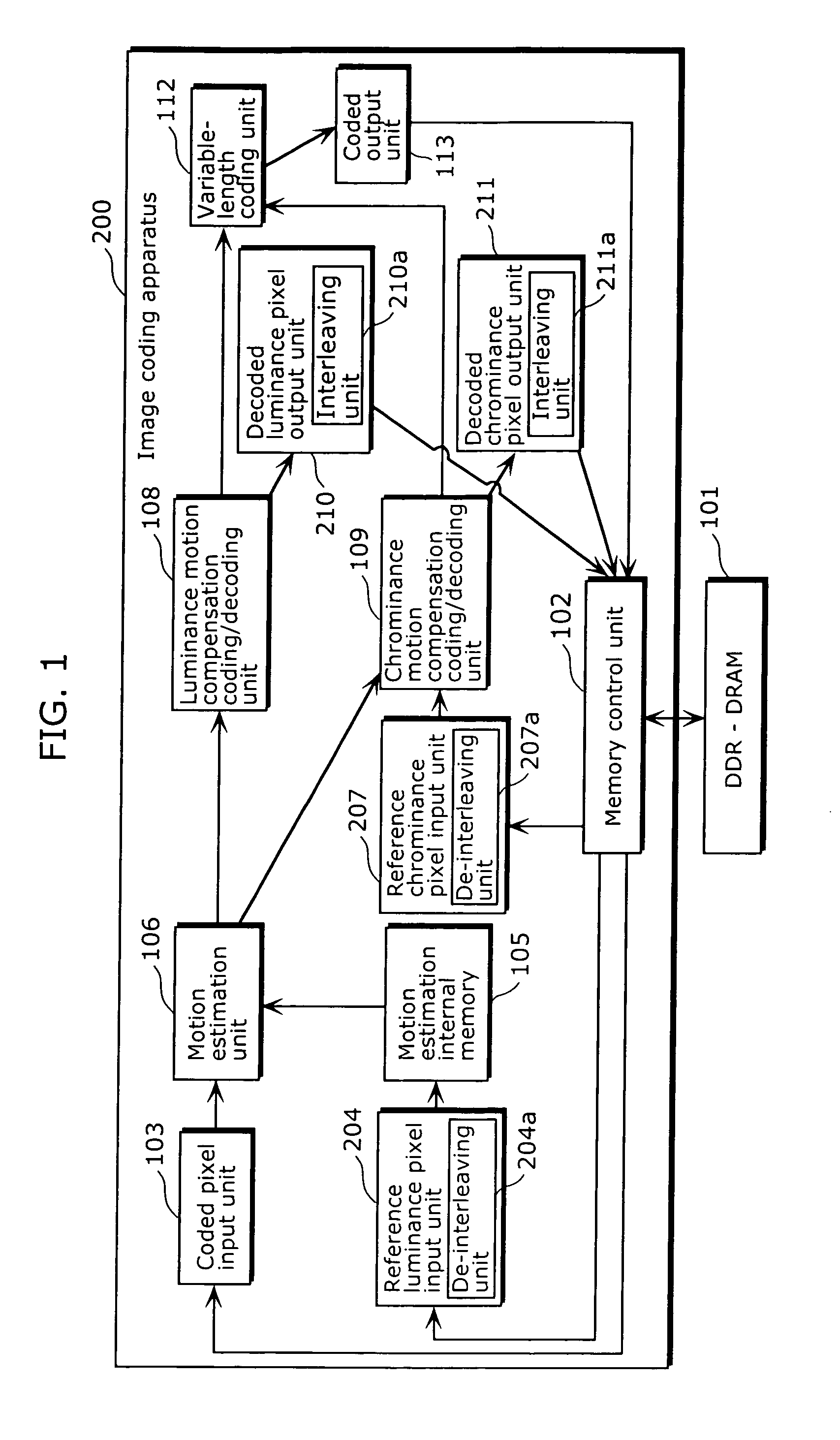

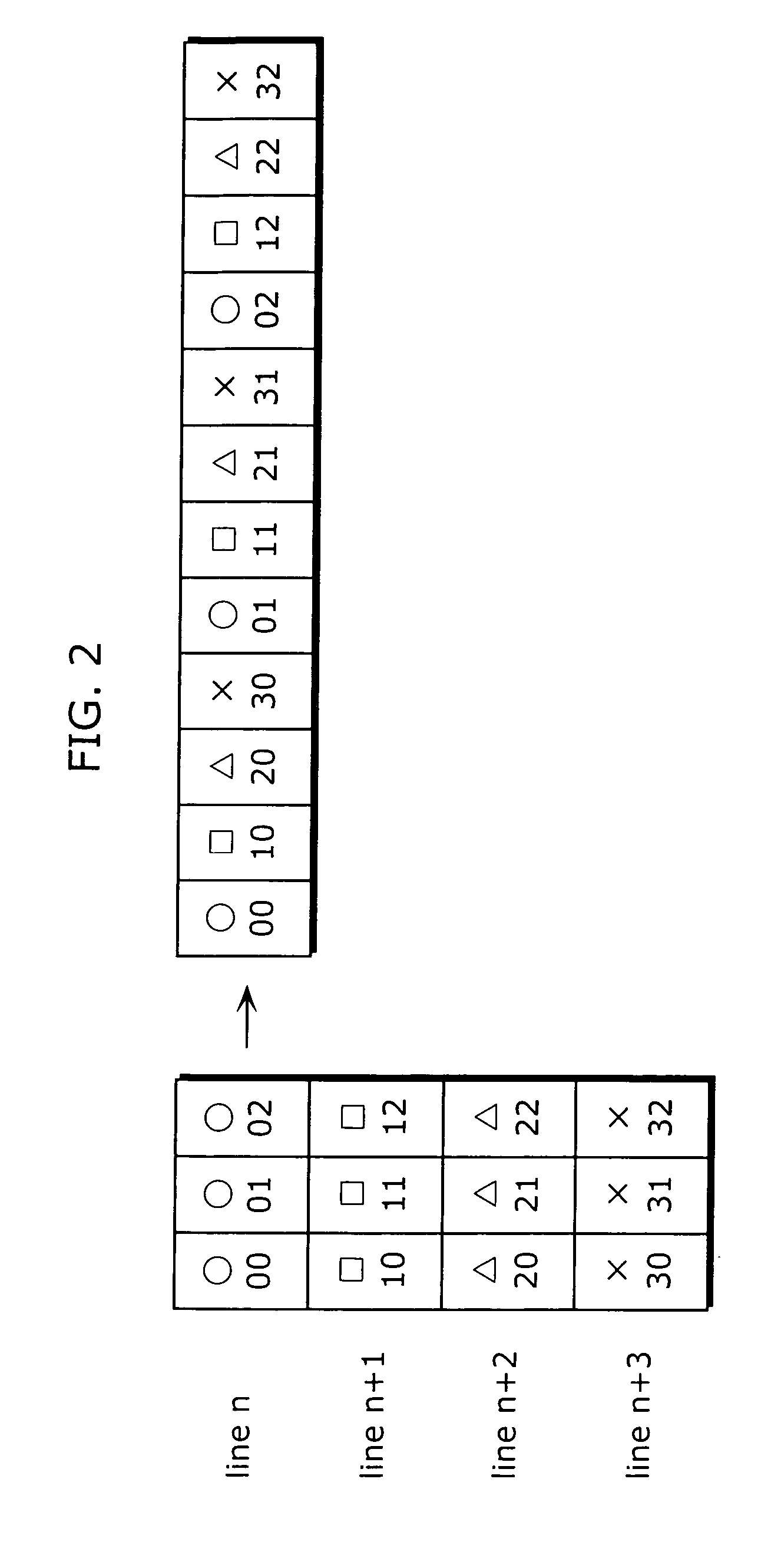

Image processor and image processing method

Inactive | US20070127570A1 | Reduce transfer rate | Low cost | Color television with pulse code modulation | Color television with bandwidth reduction | Multiplexing | Imaging processing

An image processor that requires a lower transfer rate than conventional designs for transmitting pixel data between a DDR-DRAM and a memory comprises: a decoded chrominance pixel output unit which writes pixel data into the DDR-DRAM per p×q pixel unit or per p×q pixel units, each pixel unit being made up of p lines of pixels aligned in a vertical direction and q rows of pixels aligned in a horizontal direction; and a reference chrominance pixel input unit which reads out the pixel data of the pixels from the DDR-DRAM per p×q pixel unit or p×q pixel units. The decoded chrominance pixel output unit has an interleaving unit that interleaves q rows of p×q pixels to be written into the DDR-DRAM, so as to generate a pixel data sequence in which the pixel data of the pixels located in q rows is multiplexed and placed in a line.

Owner: PANASONIC CORP

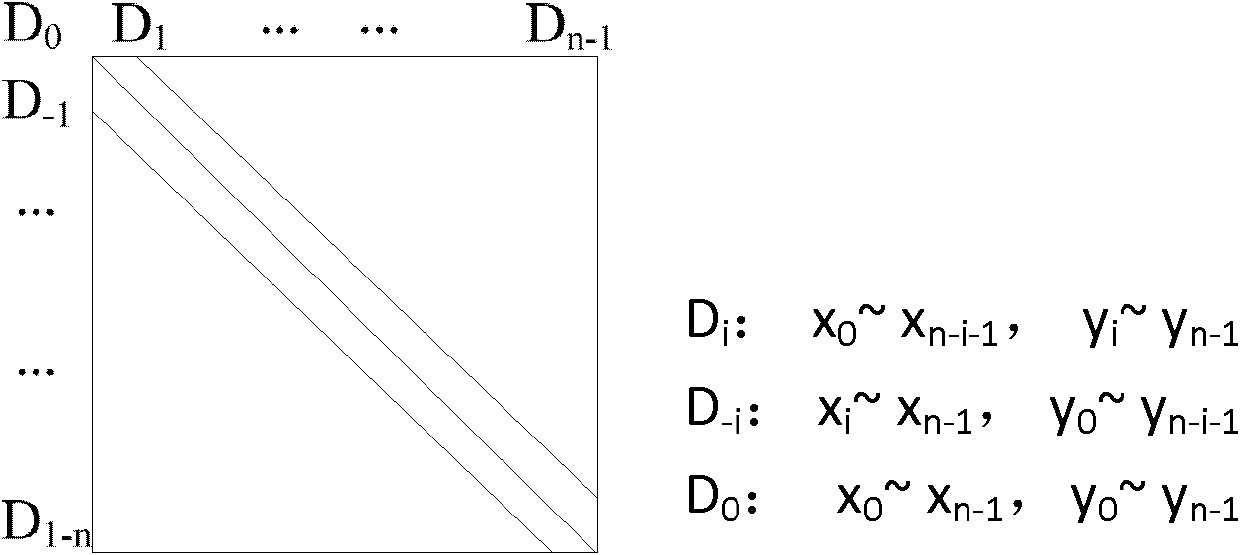

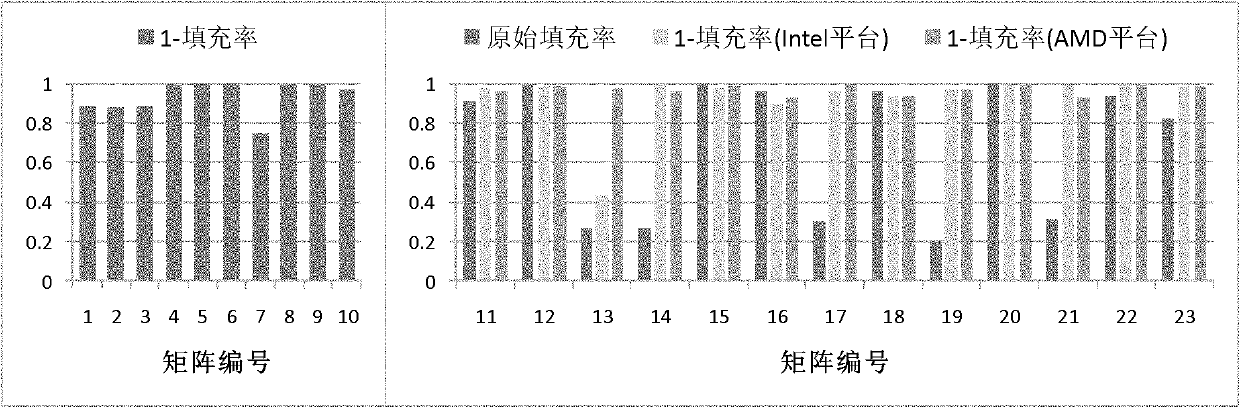

Method for storing diagonal data of sparse matrix and SpMV (Sparse Matrix Vector) realization method based on method

Inactive | CN102141976B | Reduce demand | Reduce memory access overhead | Complex mathematical operations | Sparse matrix vector | Array data structure

The invention discloses a method for storing diagonal data of a sparse matrix and an SpMV realization method based on it. The storage method comprises the following steps: (1) scanning a sparse matrix A row by row and representing the position of each non-zero-element diagonal by the number of the diagonal; (2) segmenting the matrix A into a plurality of sparse sub-matrices, using the intersections of the non-zero-element diagonals with the lateral sides of the matrix A as horizontal dividing lines; and (3) storing the elements on the non-zero-element diagonals of each sparse sub-matrix in a val array in row order. The SpMV realization method comprises the following steps: (1) traversing the sparse sub-matrices and calculating the matrix-vector product y=A1*x for each sparse sub-matrix; and (2) merging the products of all sparse sub-matrices. The storage method does not need to store the row indices of the non-zero elements, which reduces memory access overhead and storage space requirements; the diagonal indices and the index array of the x array occupy less storage space, so access complexity is reduced; and all the data required for the calculation is accessed contiguously, so the compiler and hardware can optimize it fully.

Owner: INST OF SOFTWARE - CHINESE ACAD OF SCI
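
To make the diagonal storage idea concrete, here is a minimal Python sketch of the plain diagonal (DIA) format and its SpMV loop. It deliberately leaves out the sub-matrix segmentation that the abstract describes, so it is a simplified relative of the patented method rather than an implementation of it; the function names `to_dia` and `spmv_dia` and the dictionary keyed by diagonal offset are assumptions.

```python
def to_dia(a):
    """Store each non-zero diagonal of a square matrix, keyed by offset = col - row."""
    n = len(a)
    diags = {}
    for i in range(n):
        for j in range(n):
            if a[i][j] != 0:
                # Element (i, j) lives at position i of diagonal j - i.
                diags.setdefault(j - i, [0] * n)[i] = a[i][j]
    return diags


def spmv_dia(diags, x):
    """Compute y = A * x from the diagonal storage, with no per-element indices."""
    n = len(x)
    y = [0] * n
    for offset, vals in diags.items():
        # Rows for which column i + offset stays inside the matrix.
        lo = max(0, -offset)
        hi = min(n, n - offset)
        for i in range(lo, hi):
            y[i] += vals[i] * x[i + offset]
    return y


A = [[1, 2, 0],
     [0, 3, 4],
     [5, 0, 6]]
dia = to_dia(A)
y = spmv_dia(dia, [1, 2, 3])
```

Only one offset per diagonal is stored, and both `vals` and `x` are walked contiguously in the inner loop, which matches the access pattern the abstract credits for the reduced memory access overhead.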

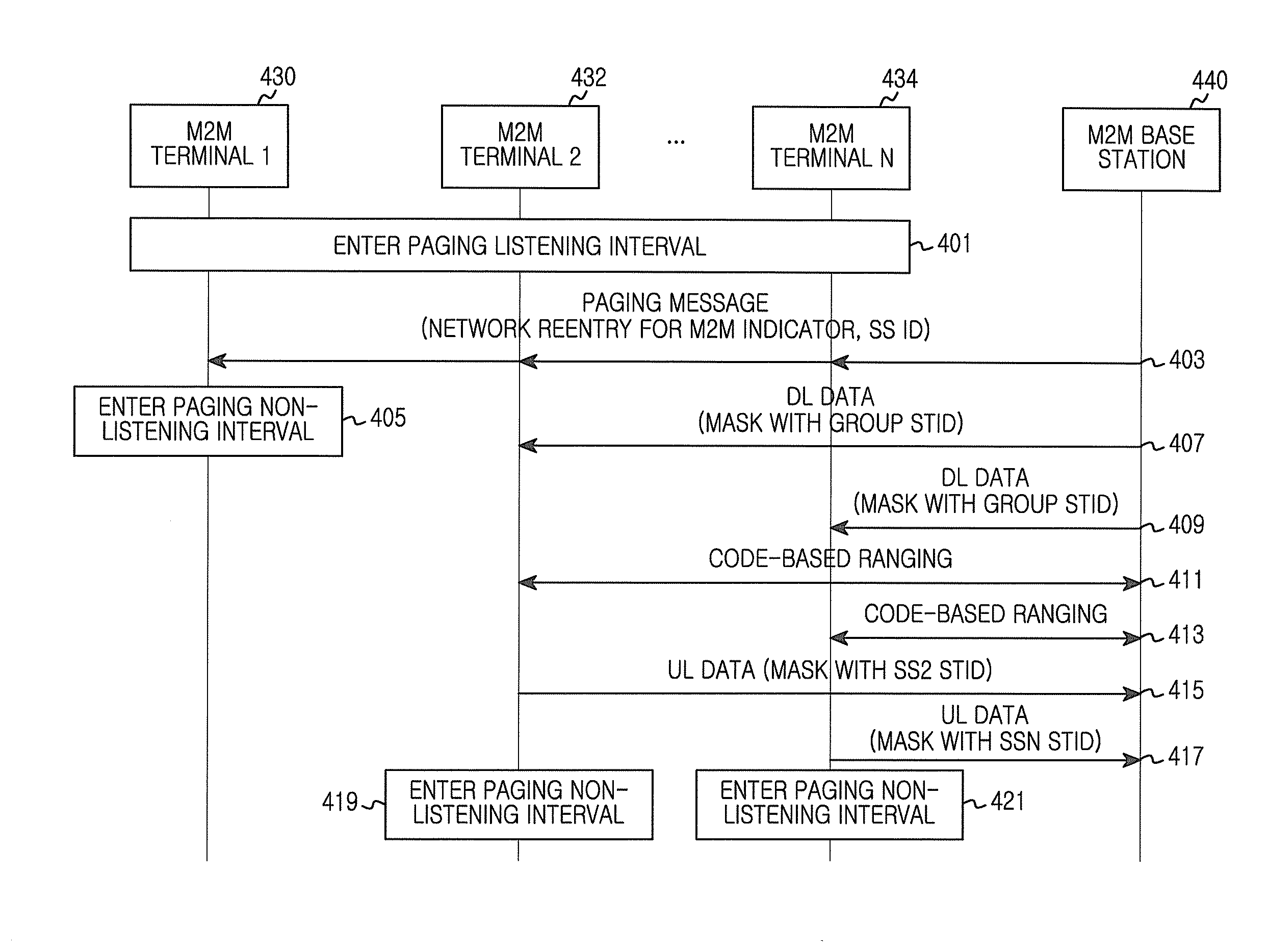

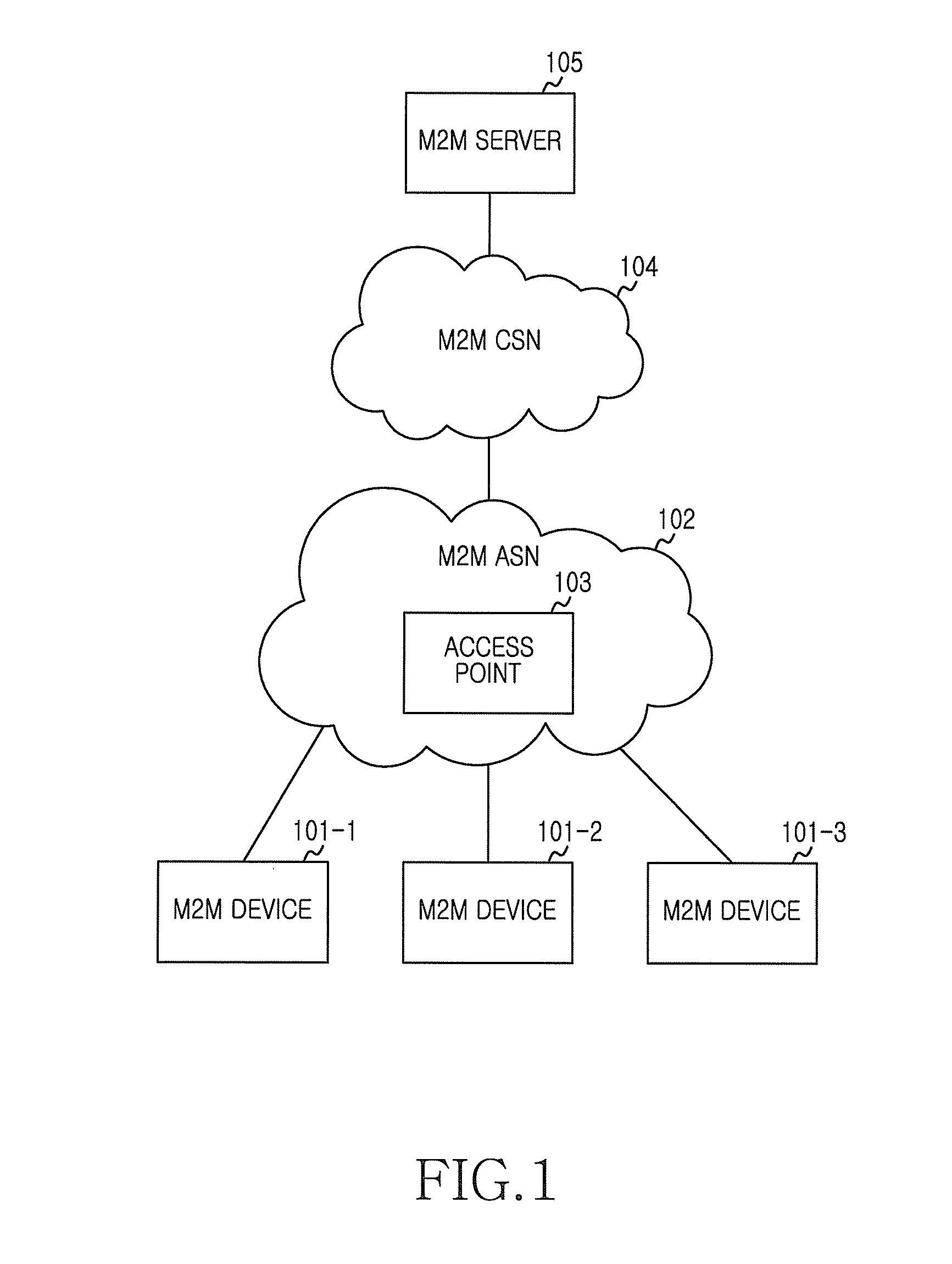

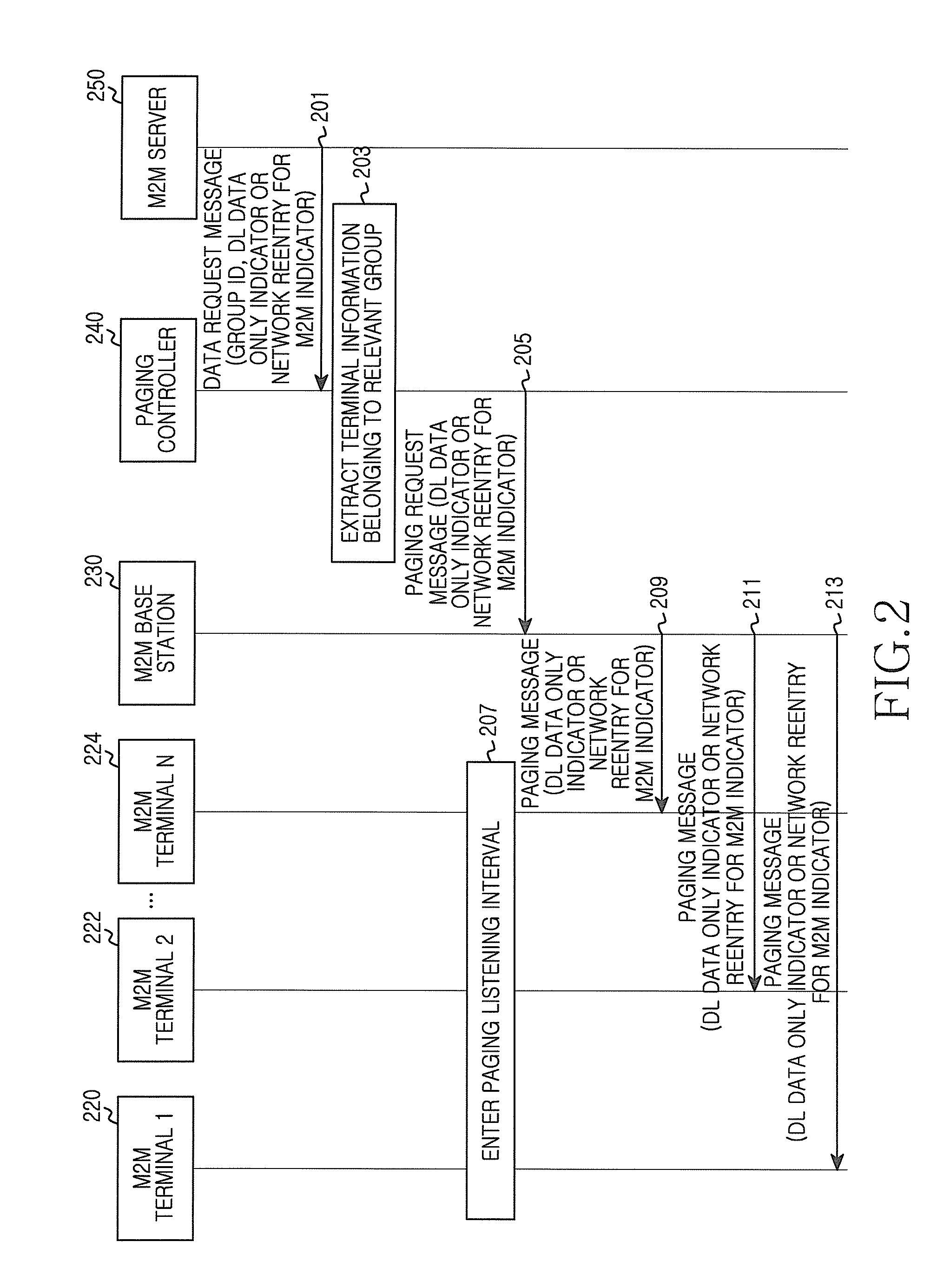

Method and apparatus for reducing access overhead from paged device in machine to machine communication system

Active | US20150208378A1 | Reduce access overhead | Avoid access violations | Special service provision for substation | Power management | Communications system | Base station

An apparatus for an idle mode terminal is configured to perform a method for operating the idle mode terminal in a Machine to Machine (M2M) communication system. A paging message is received from the base station during a paging listening interval. The apparatus determines whether an indicator indicating receipt of multicast group data is included in the paging message. When the indicator is included, the apparatus receives data transmitted via a downlink resource that uses an identifier mask of a multicast group to which the terminal belongs. Thereafter, a paging non-listening interval is entered.

Owner: SAMSUNG ELECTRONICS CO LTD

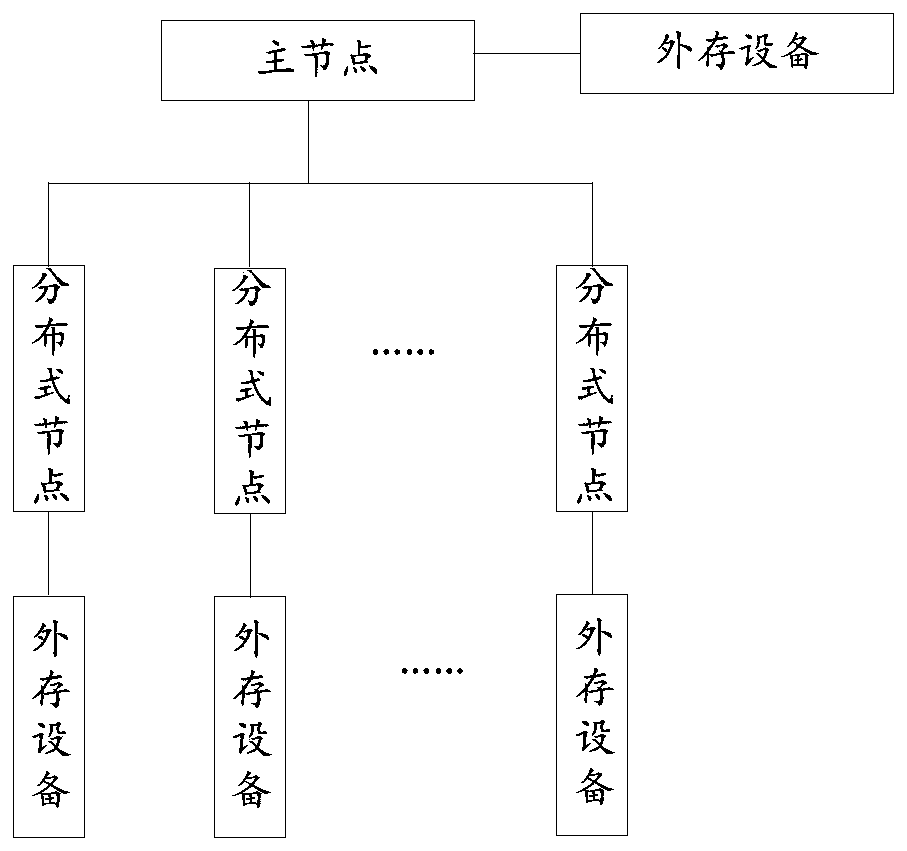

Lossless recovery distributed multilingual retrieval platform and lossless recovery distributed multilingual retrieval method

Pending | CN110659157A | Reduce consumption | High speed | Redundant operation error correction | Special data processing applications | External storage | Access frequency

The invention provides a lossless recovery distributed multilingual retrieval platform and a lossless recovery distributed multilingual retrieval method. The platform comprises a main node and distributed nodes communicating with the main node. The main node and the distributed nodes are each connected to a corresponding external storage device, and the external storage device is configured to store, at preset time intervals, the data and memory states received by the main node or distributed node connected to it. When recovering from a fault, the data in the external storage device is restored directly to local memory, the data is adjusted, and a routing algorithm is run so that it points to a new node. The main node is configured to issue multilingual data meeting the retrieval conditions to the distributed nodes. The distributed nodes are configured to query multilingual data meeting the retrieval conditions in a hotspot index table of an index memory cache layer, where the hotspot index table holds multilingual data whose access frequency is not less than a preset access frequency threshold.

Owner: 安徽芃睿科技有限公司

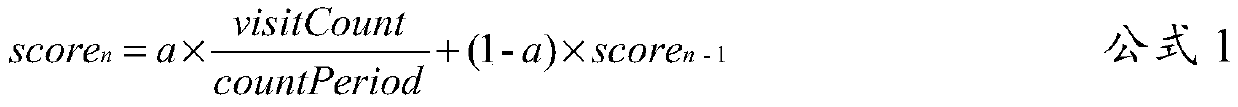

Processing unit, processor core, neural network training machine and method

Pending | CN113011577A | Reduce computational overhead | Reduce access overhead | Program control | Neural architectures | Algorithm | Engineering

The invention provides a processing unit, a processor core, a neural network training machine and a method. The processing unit comprises a calculation unit that executes the weight gradient calculation of neural network nodes, and a decompression unit that decompresses an obtained compressed weight signal into a weight signal and a pruning signal. The weight signal indicates the weight of each neural network node, and the pruning signal indicates whether the weight of each neural network node is used in the weight gradient calculation. The pruning signal is used to control whether access is allowed to an operand memory storing an operand used in the weight calculation, and also to control whether the calculation unit is allowed to perform a weight gradient calculation using the weight signal and the operand. The invention reduces the calculation overhead of the processor and the access overhead of the memory when the weight gradient of the neural network is determined.

Owner: ALIBABA GRP HLDG LTD

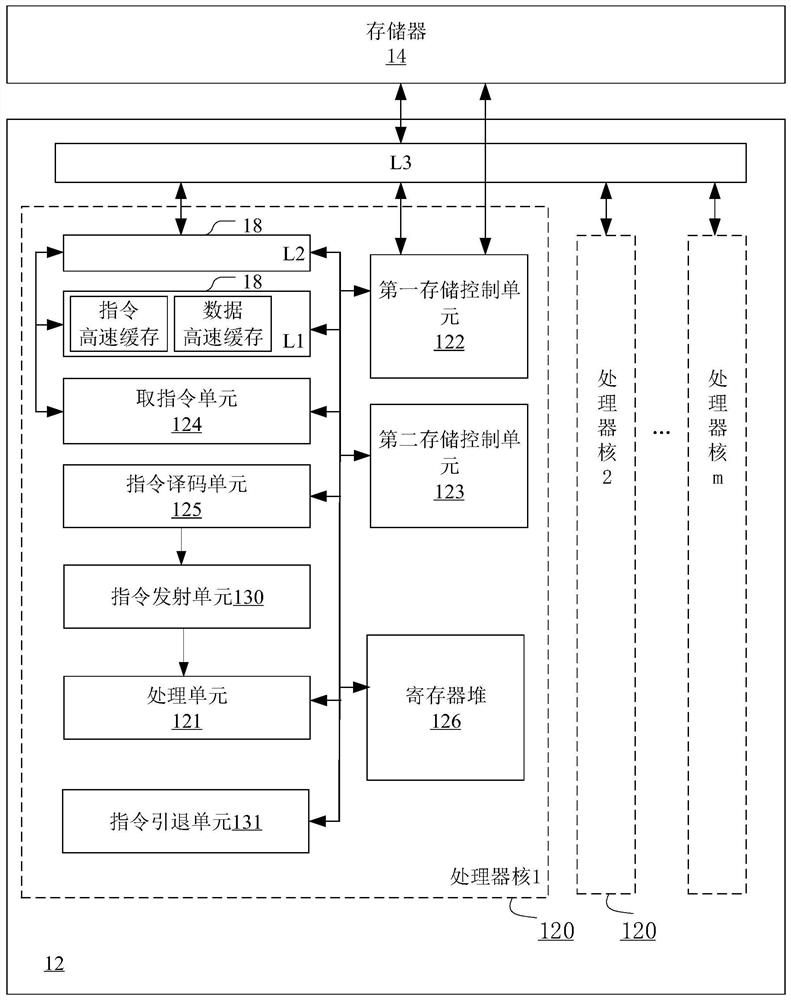

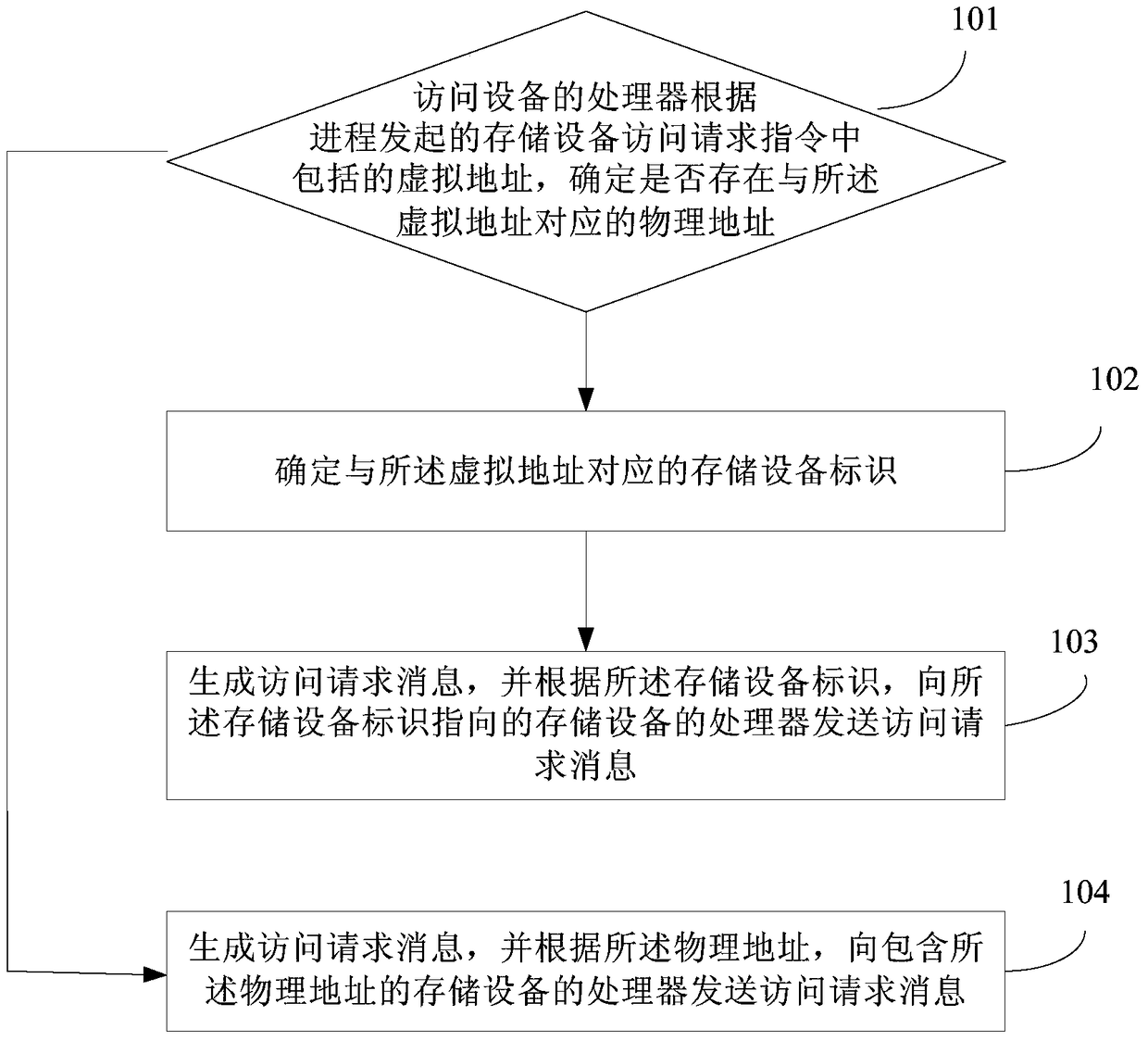

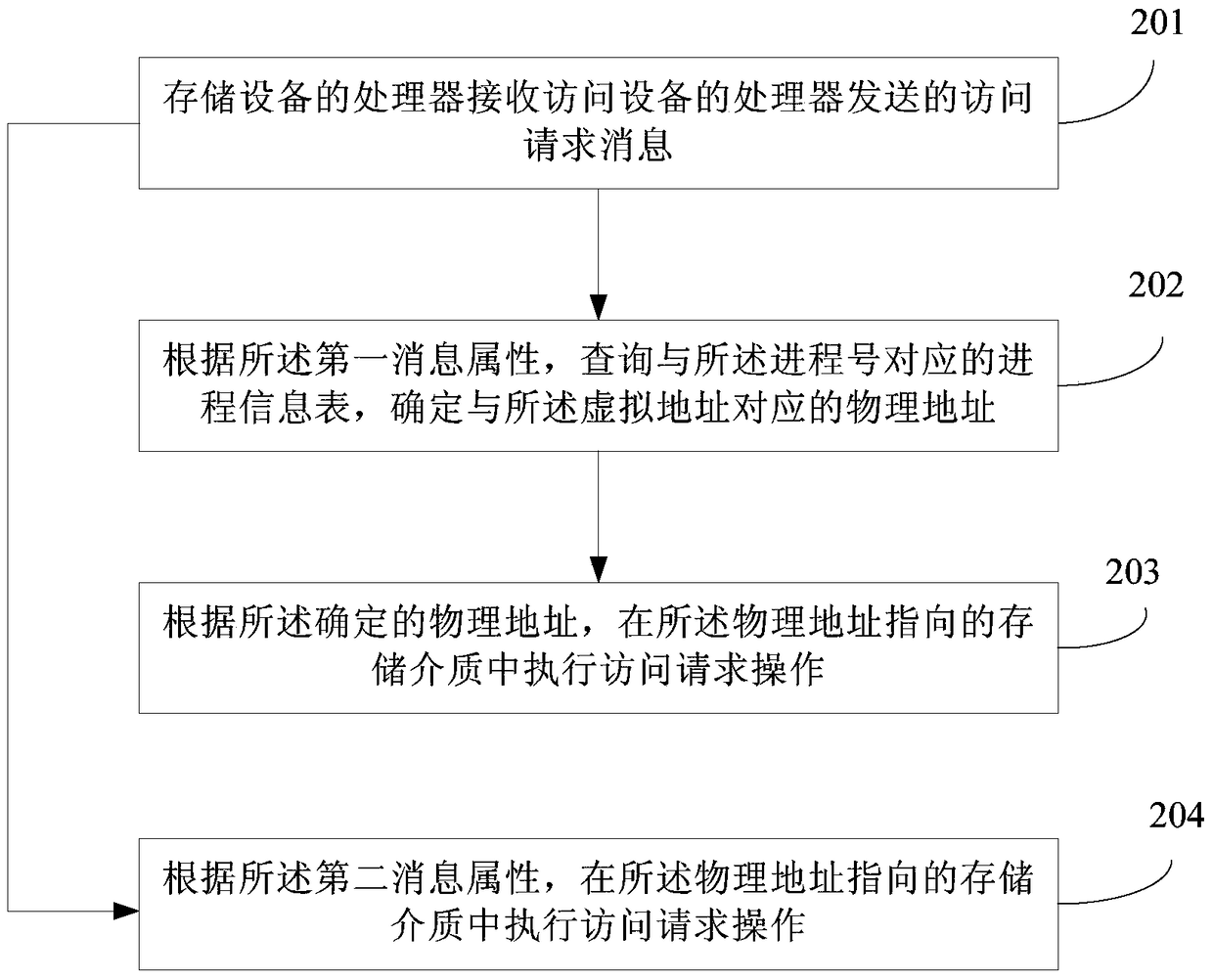

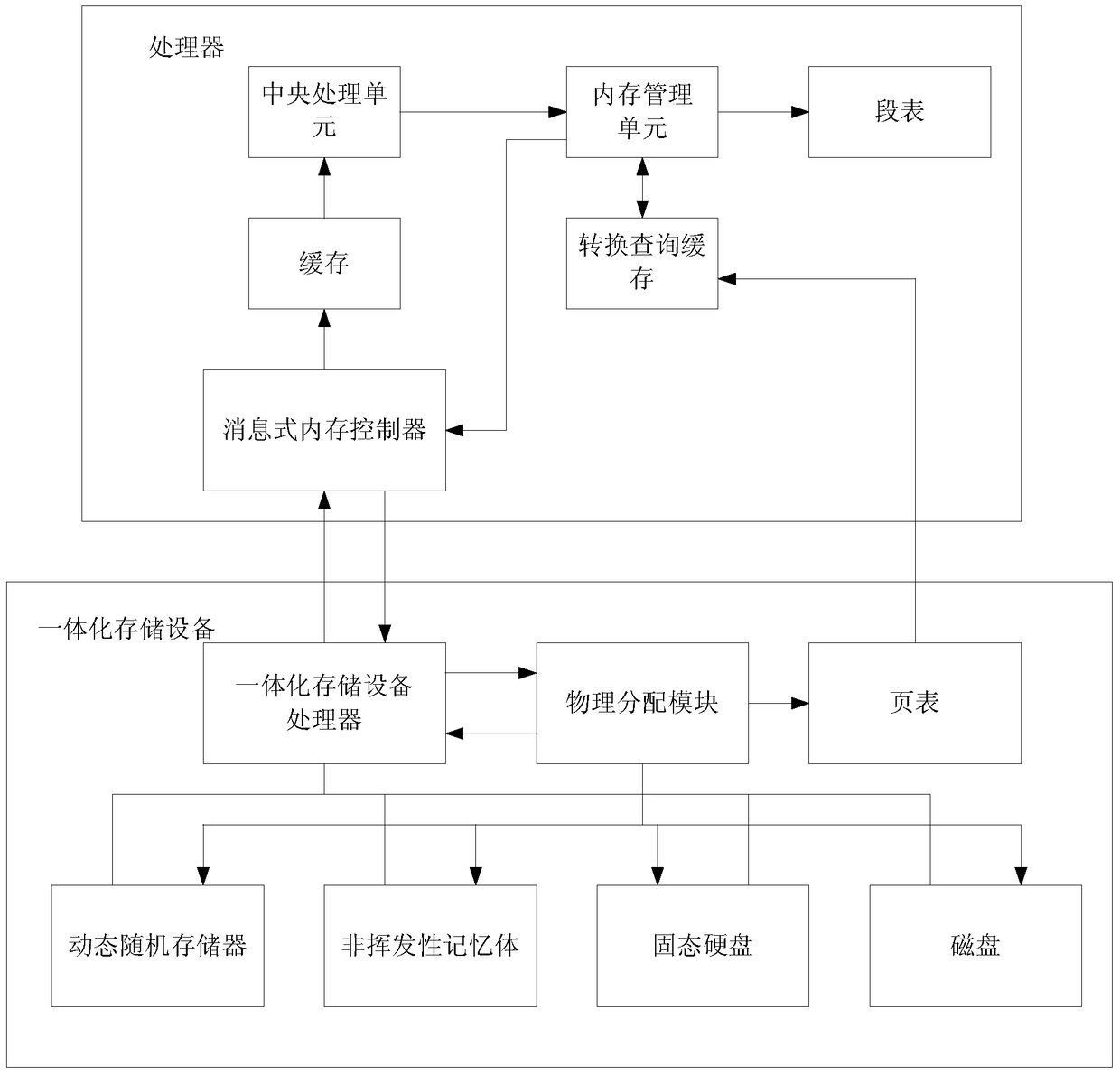

Access information processing method, device and system for storage device

Active | CN105335308B | Reduce overhead | Improve access performance | Electric digital data processing | Information processing | Operating system

Owner: HUAWEI TECH CO LTD +1

Hash Bloom Filter and Data Forwarding Method for Name Lookup in NDN

Active | CN104579974B | Lower overall cost of access | Reduce access overhead | Data switching networks | Computer hardware | Memory chip

The invention discloses a Hash Bloom filter and a data forwarding method for name lookup in NDN. The Hash Bloom filter consists of g counter Bloom filters and g counters located in the on-chip memory and g hash tables located in the off-chip memory; each hash table is associated with one counter Bloom filter and one counter. Using a secondary hash method, the Hash Bloom filter uniformly distributes and stores the complete information of the FIB/CS/PIT entries of the NDN router across the g counter Bloom filters and g hash tables. The HBF of the present invention uses the positioning and filtering functions of the CBFs in the on-chip memory to greatly reduce the access overhead of the off-chip memory, thereby reducing the overall access cost of the HBF, increasing the forwarding rate of data packets, and effectively avoiding flooding attacks.

Owner: HUNAN UNIV

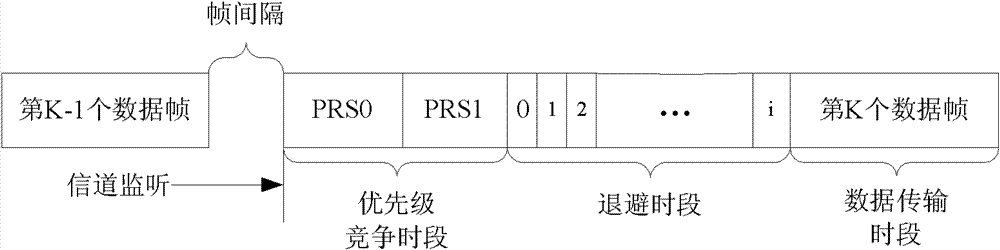

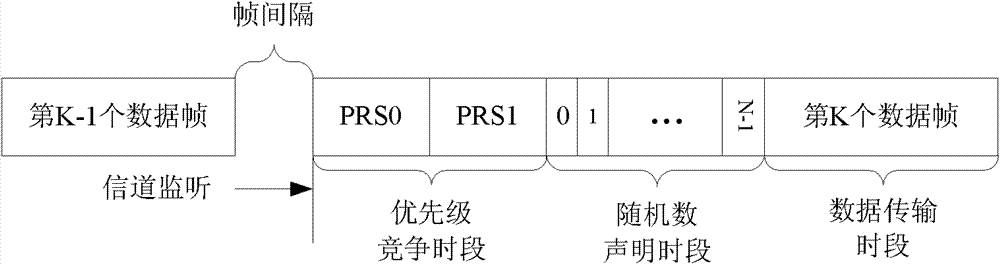

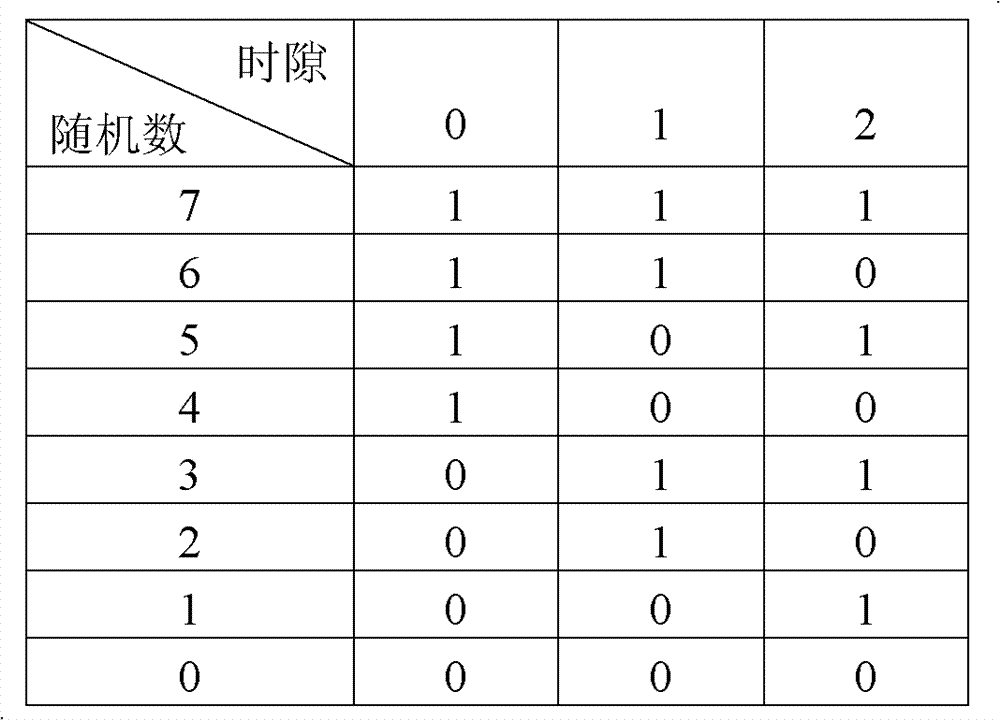

Random number statement-based multiple access method

Active | CN102104517B | Reduce access overhead | Reduce access delay | Data switching networks | Access method | Current channel

The invention discloses a multiple access method based on random number statements, which mainly solves the low channel utilization and long channel access delay that too many time slots in the backoff period cause in the conventional priority-based multiple access method. The method comprises a channel monitoring period, a priority competition period, a random number statement period and a data transmission period. In the channel monitoring period, channels are monitored and the current channel is judged to be idle or not. In the priority competition period, each station states its service priority, and the stations with the highest service priority are selected according to the statement results. In the random number statement period, the stations with the highest service priority generate and state random numbers, and the station that states the greatest random number obtains the data transmission opportunity. The method has low channel access overhead, high channel utilization and short channel access delay, and can be used for Ethernet over cable (EoC) communication.

Owner: BEIJING HANNUO SEMICON TECH CO LTD
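
The priority competition and random number statement periods from the abstract above can be simulated with a short Python sketch. The channel monitoring and data transmission periods are left out, and several details are assumptions made for illustration: the function name `contend`, larger numbers meaning higher priority, the 16-value random range, and repeating the draw among tied stations (the abstract does not say how ties are resolved).

```python
import random


def contend(stations: dict, rng: random.Random) -> str:
    """Pick the station that wins the transmission opportunity.

    stations maps a station name to its service priority
    (larger means higher priority, an assumption of this sketch).
    """
    # Priority competition period: keep only the highest-priority stations.
    top = max(stations.values())
    finalists = [name for name, prio in stations.items() if prio == top]

    # Random number statement period: the greatest stated number wins.
    # Ties are resolved by drawing again among the tied stations (assumed).
    while len(finalists) > 1:
        draws = {name: rng.randrange(16) for name in finalists}
        best = max(draws.values())
        finalists = [name for name in finalists if draws[name] == best]
    return finalists[0]


winner = contend({"A": 1, "B": 3, "C": 3}, random.Random(0))
```

A single round of stated numbers replaces a long run of backoff slots, which is where the shorter access delay described in the abstract would come from.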

Data storage method and device

Active | CN106156038B | Reduce waste | Reduce data access overhead | Special data processing applications | Database indexing | Reverse order | Data file

The invention relates to a data storage method and device. The method includes the following steps: obtaining newly added data, generating a corresponding time stamp for each item of newly added data, storing the data in a cache in reverse time-stamp order, removing the data that meets set conditions from the cache after the set capacity of the cache is reached, combining the removed data with the data in the most recently generated data file, splitting off the data that exceeds the set capacity of the data file to generate a new data file, generating indexing information for the new data file, and updating the indexing information of the most recently generated data file. Because a time stamp is generated for every item and the data is stored in the cache in reverse time-stamp order, the size of the data stored in this way is not limited by the maximum key value, and subsequent reads can be served from the cache or from a data file without reading the whole list, so traffic waste and data access overhead are reduced.

Owner: TENCENT TECH (SHENZHEN) CO LTD +1

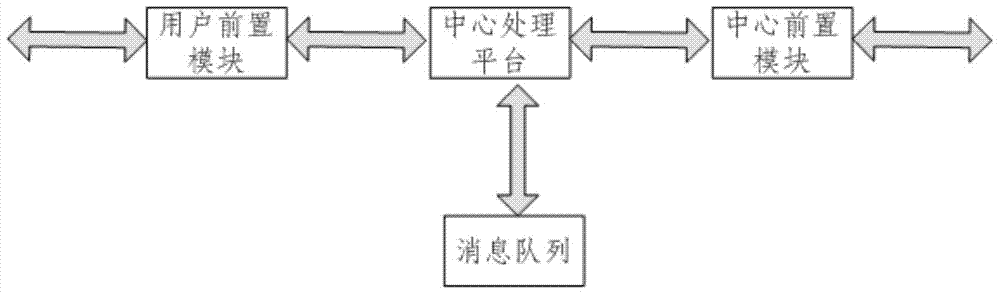

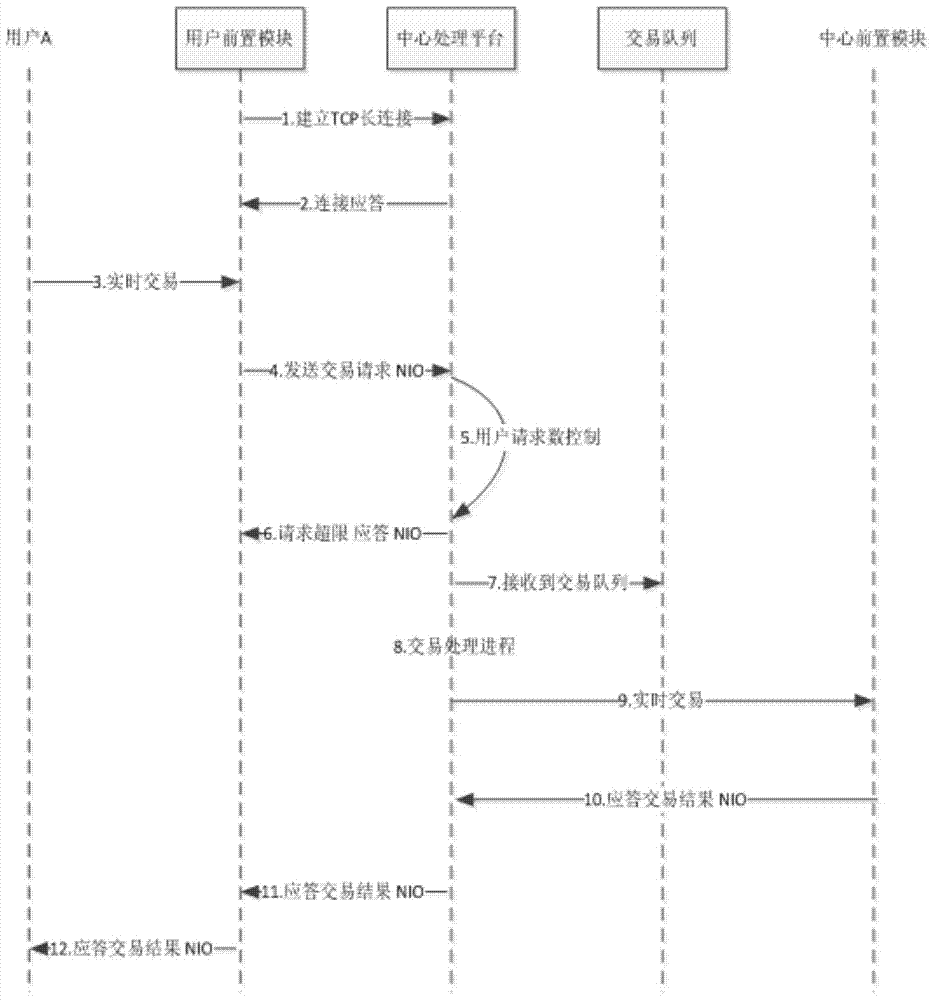

A centralized interface communication concurrency control system and control method thereof

Active | CN104363270B | Reduce access overhead | Avoid extension | Buying/selling/leasing transactions | Data switching networks | Single point | Memory module

The invention belongs to the technical field of Internet of Things data services, and aims to provide a centralized interface communication concurrency control system and a control method thereof. The invention includes a user front-end module, a central processing platform, a central front-end module, and a message queue. Interface transactions between the central processing platform and each single point use a multi-threaded synchronous transaction mode, so that a fault or performance bottleneck at one single point does not affect access to other points. The user front-end module is provided with a memory for storing user verification information; it determines by comparison whether the user who initiates a transaction has transaction authority, and performs an integrity check on the user's transaction data. The invention optimizes the existing interface communication mode and improves interface transaction performance. When the central processing platform conducts transactions with multiple single points, multi-threaded control of the interface transactions prevents single-point performance problems from spreading to the central processing platform. Dynamic allocation of single-point concurrent requests improves system performance and reduces wasted request overhead.

Owner: 咸亨国际电子商务有限公司

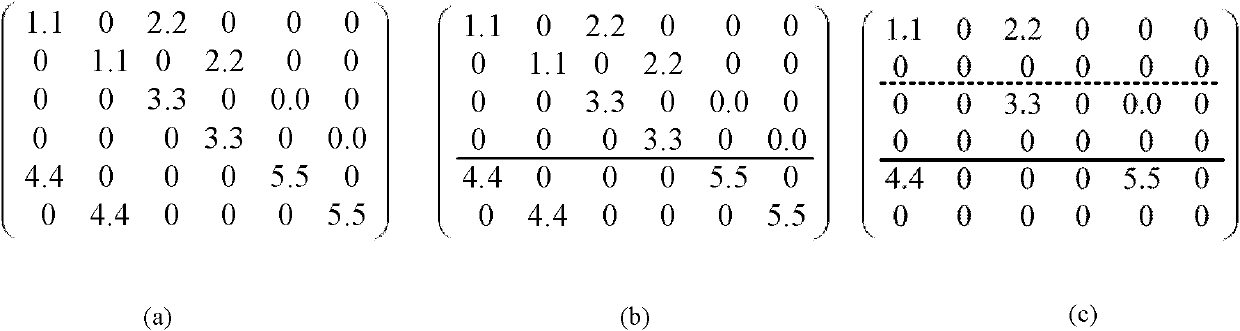

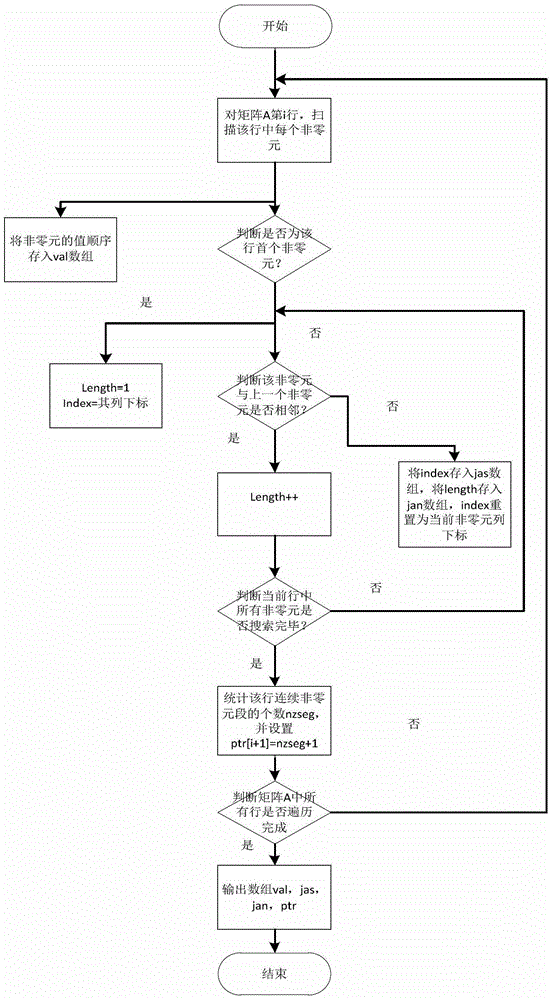

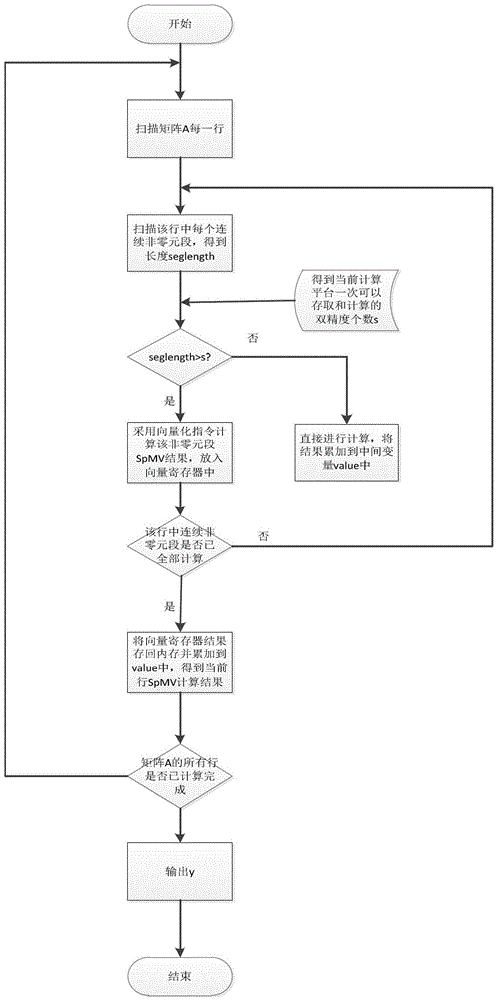

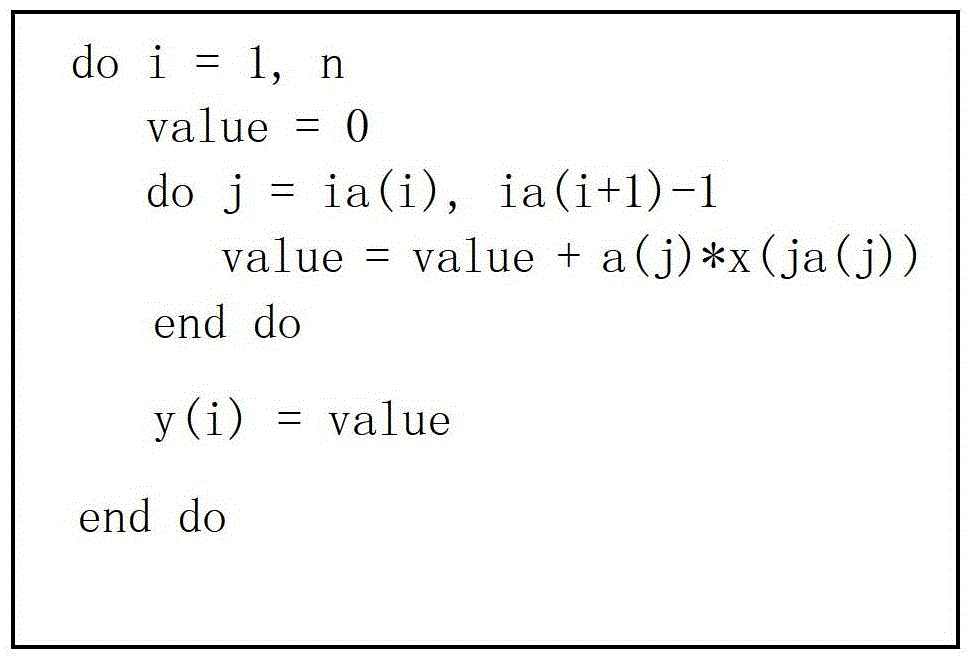

A sparse matrix storage method using compressed sparse rows with local information and an implementation method of spmv based on the method

Active | CN103336758B | Reduce access | Increase profit | Complex mathematical operations | Sparse matrix vector | Array data structure

The invention discloses a sparse matrix storage method CSRL (Compressed Sparse Row with Local Information) and an SpMV (Sparse Matrix Vector Multiplication) realization method based on it. The storage method comprises the following steps: scanning a sparse matrix A by rows and storing the value of each non-zero element sequentially in an array val; defining a run of non-zero elements with continuous row subscripts as a continuous non-zero element section, recording the row subscript of the first element of each continuous non-zero element section in an array jas, and recording the number of non-zero elements in each continuous non-zero element section in an array jan; and recording the starting index of the first continuous non-zero element section of each row of the sparse matrix A in an array ptr. Because the row indices of the non-zero elements are merged before being stored, the storage space requirement is reduced, the data locality of the sparse matrix is fully exploited, memory access and calculation can use SIMD (Single Instruction Multiple Data) instructions, the number of memory accesses is reduced, and SpMV performance is improved.

Owner: INST OF SOFTWARE - CHINESE ACAD OF SCI
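
The four arrays named in the abstract above (`val`, `jas`, `jan`, `ptr`) can be built and used as in the following Python sketch. It assumes that the "row subscripts" in the translated abstract denote the column index of an element within its row, as in standard CSR; the function names `to_csrl` and `spmv_csrl` are hypothetical, and the slice-based dot product merely stands in for a SIMD operation.

```python
def to_csrl(a):
    """Build val, jas, jan, ptr for a dense row-major matrix a."""
    val, jas, jan, ptr = [], [], [], [0]
    for row in a:
        prev_col = None
        for j, v in enumerate(row):
            if v == 0:
                continue
            if prev_col is not None and j == prev_col + 1:
                jan[-1] += 1   # extend the current continuous section
            else:
                jas.append(j)  # column of the first element of a new section
                jan.append(1)
            val.append(v)
            prev_col = j
        ptr.append(len(jas))   # sections of the next row start here
    return val, jas, jan, ptr


def spmv_csrl(val, jas, jan, ptr, x):
    """Compute y = A * x, processing one continuous section at a time."""
    y = []
    k = 0  # running position in val
    for r in range(len(ptr) - 1):
        acc = 0
        for s in range(ptr[r], ptr[r + 1]):
            start, length = jas[s], jan[s]
            # Contiguous slices of val and x: a natural fit for SIMD loads.
            acc += sum(v * xv for v, xv in zip(val[k:k + length], x[start:start + length]))
            k += length
        y.append(acc)
    return y


A = [[1, 2, 0, 3],
     [0, 0, 0, 0],
     [0, 4, 5, 6]]
val, jas, jan, ptr = to_csrl(A)
y = spmv_csrl(val, jas, jan, ptr, [1, 2, 3, 4])
```

One index per section replaces one index per non-zero element, so matrices with long runs of adjacent non-zeros need fewer index loads.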

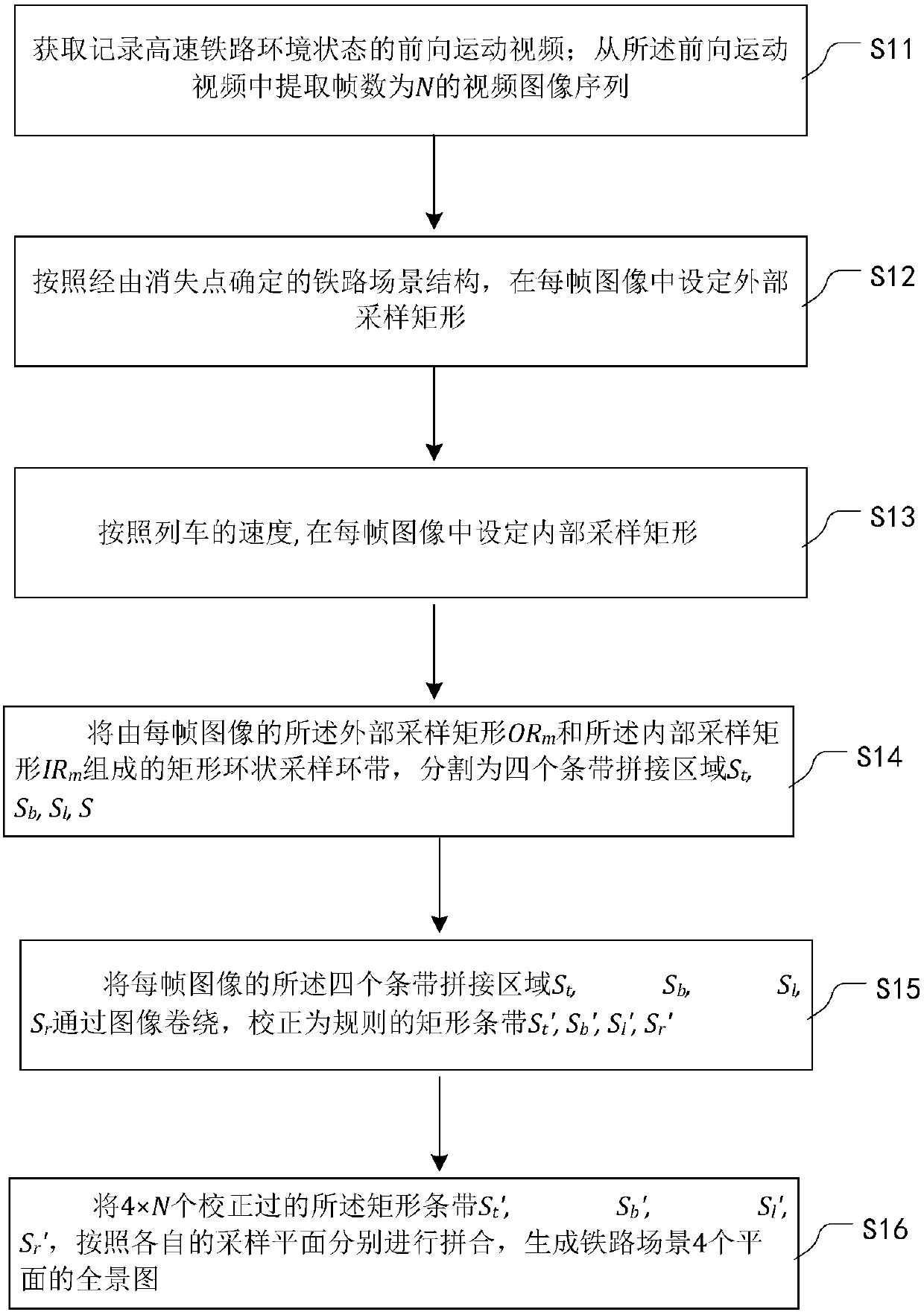

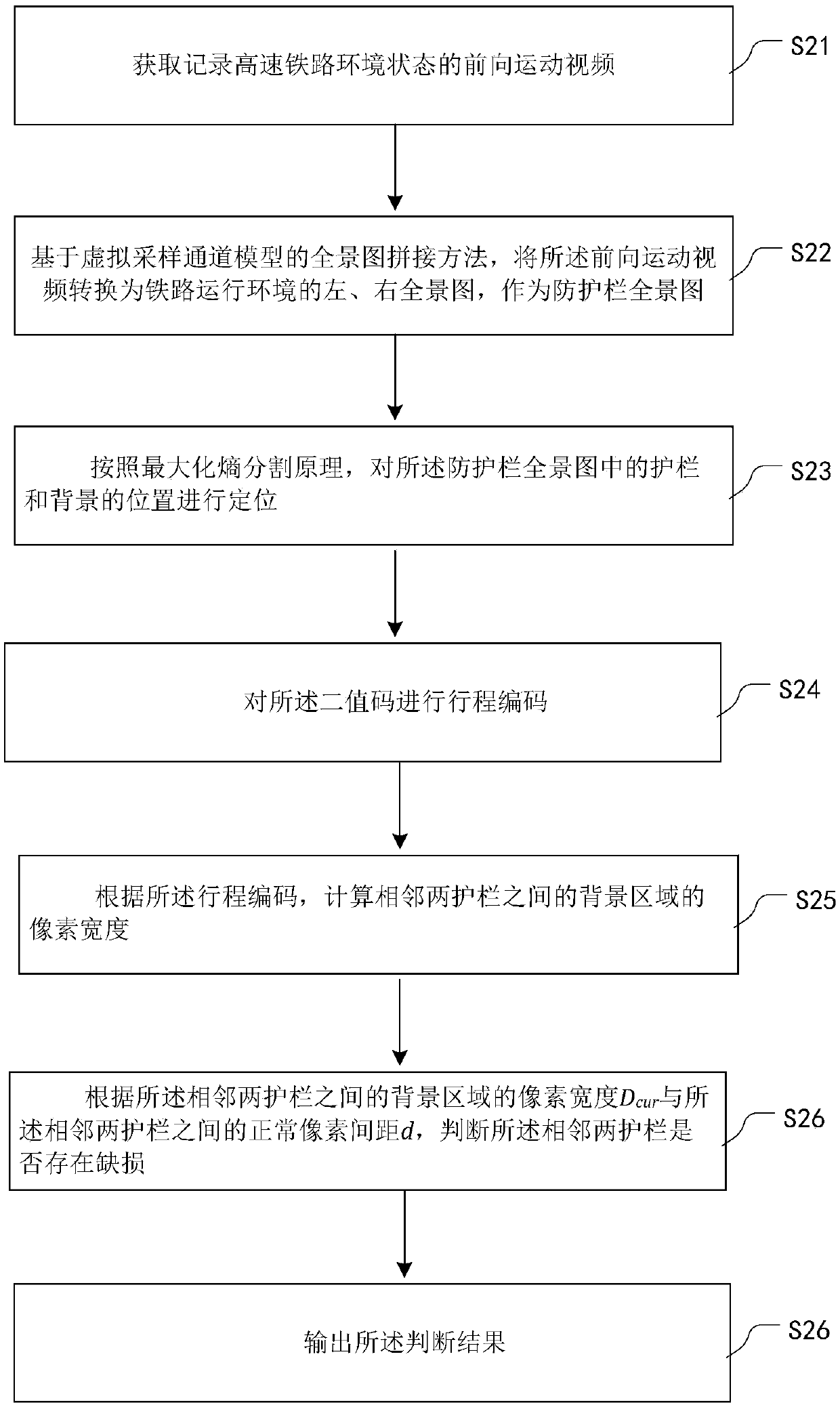

Method for splicing panoramas and method for detecting defect status of guardrails of high-speed railways

Inactive | CN106023076B | Reduce storage | Reduce access overhead | Image enhancement | Image analysis | Algorithm | Engineering

The embodiment of the invention provides a panoramic graph splicing method based on a virtual sampling channel model and a method for detecting the defect state of a guard railing of a high-speed railway. The detection method comprises: obtaining a forward motion video recording the state of the high-speed railway environment; converting the forward motion video, with the panoramic graph splicing method based on a virtual sampling channel model, into a left panoramic graph and a right panoramic graph of the railway operating environment, which are used as the guard railing panoramic graph; locating the positions of the guard railing and the background in the guard railing panoramic graph on the basis of a maximum entropy segmentation principle to obtain a binary code formed of 0s and 1s; carrying out run-length coding on the binary code; calculating, from the run-length coding, the pixel width Dcur of the background area between two adjacent guard railings; and determining, from the pixel width Dcur and the normal pixel distance d between two adjacent guard railings, whether a defect exists at the two adjacent guard railings.

Owner: BEIJING JIAOTONG UNIV
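
The last steps of the detection method above (run-length coding of the binary code, then comparing each background width Dcur with the normal distance d) can be sketched in Python. The encoding of 1 as guard railing and 0 as background, the `tolerance` parameter, and the function names `run_length_encode` and `find_defects` are assumptions made for illustration; the abstract does not state the comparison rule.

```python
from itertools import groupby


def run_length_encode(bits):
    """Collapse a 0/1 sequence into (bit, run length) pairs."""
    return [(bit, len(list(group))) for bit, group in groupby(bits)]


def find_defects(bits, d, tolerance=1):
    """Return (start position, width) of background gaps that deviate from d.

    Assumes 1 marks guard railing pixels and 0 marks background pixels.
    Only gaps lying between two railing runs are checked.
    """
    runs = run_length_encode(bits)
    defects = []
    pos = 0
    for idx, (bit, length) in enumerate(runs):
        between_railings = 0 < idx < len(runs) - 1
        if bit == 0 and between_railings and abs(length - d) > tolerance:
            defects.append((pos, length))  # length plays the role of Dcur
        pos += length
    return defects


bits = [1, 1, 0, 0, 1, 1, 0, 0, 0, 0, 0, 0, 1, 1, 0, 0, 1]
defects = find_defects(bits, d=2)
```

Working on run lengths rather than raw pixels means each gap is examined once, regardless of how many pixels it spans.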

Data processing method and device

Active | CN105843809B | Improve processing efficiency | Reduce access overhead | Database indexing | Computer hardware | Engineering

Owner: TENCENT TECH (SHENZHEN) CO LTD

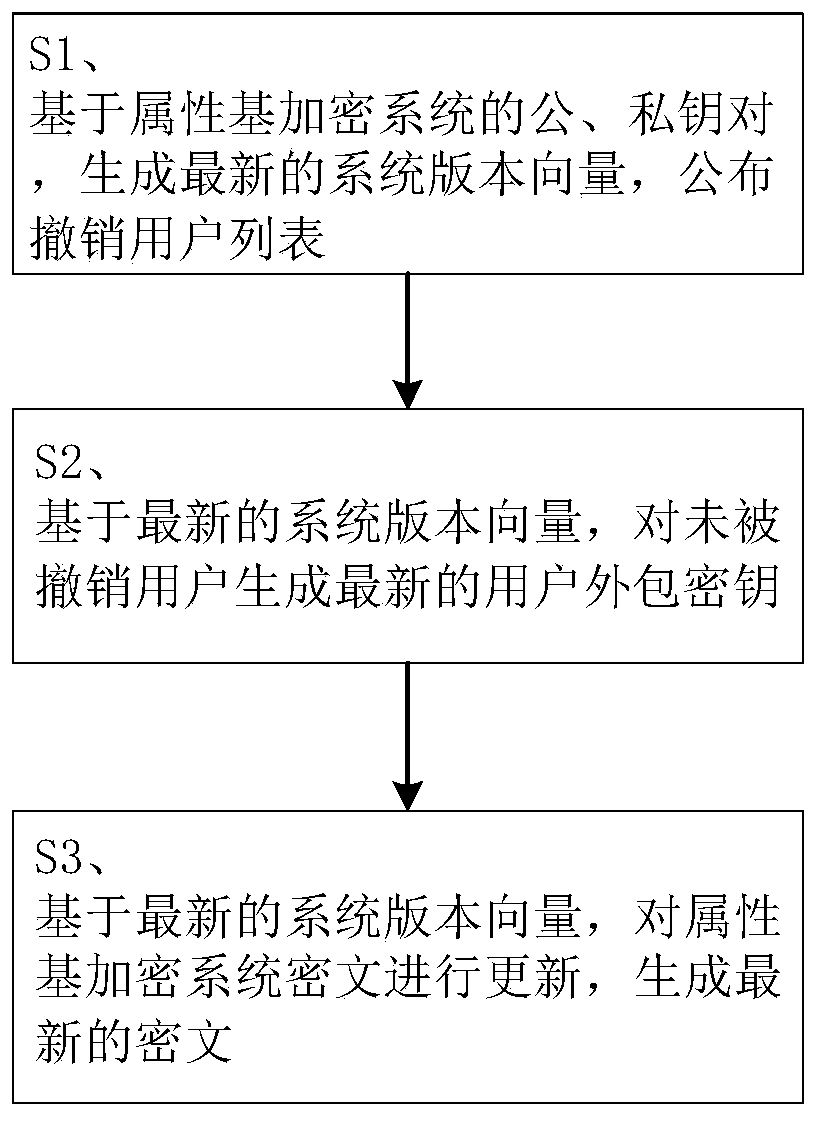

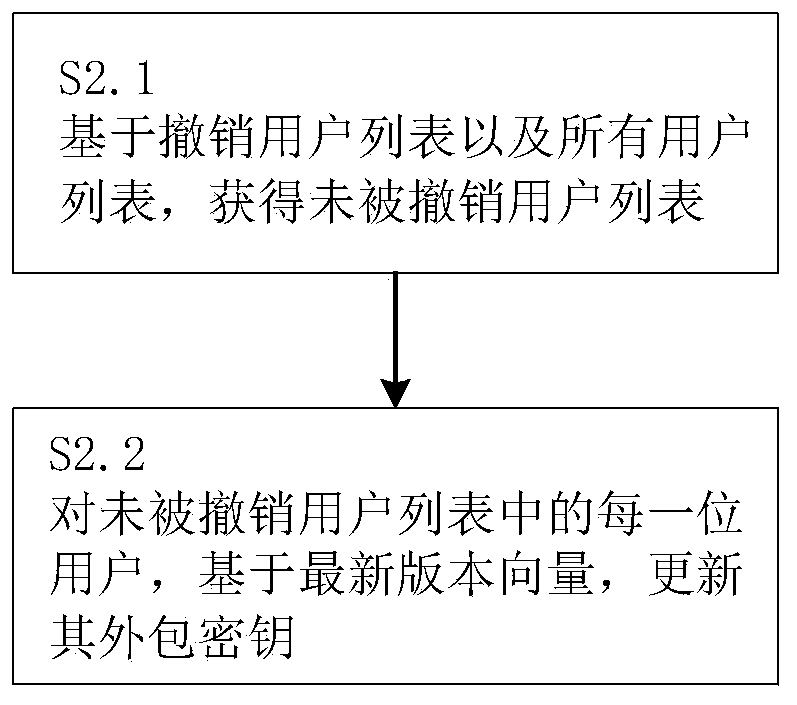

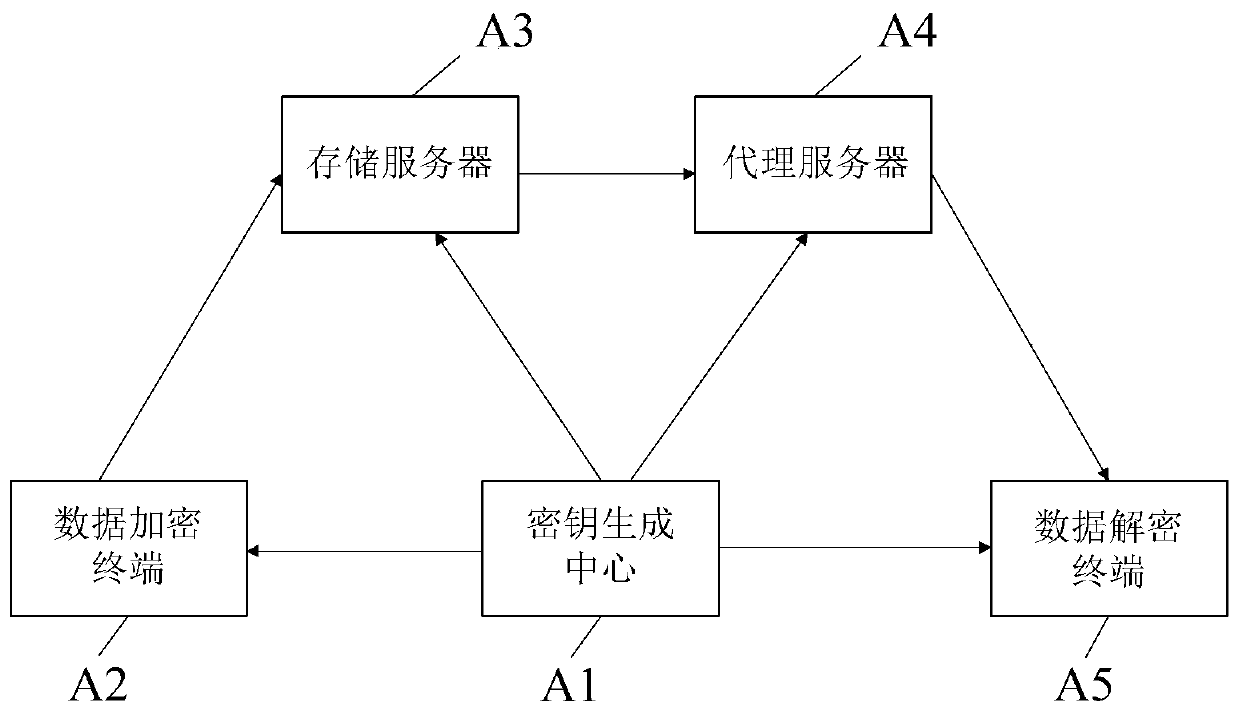

Outsourcing revocation method and system in attribute-based encryption system

Inactive | CN110855613A | Reduce access overhead | Reduce overhead | Key distribution for secure communication | Third party | Ciphertext

The invention provides an outsourcing revocation method and system in an attribute-based encryption system. The method comprises the following steps: generating a latest system version vector based on a public and private key pair of the attribute-based encryption system, and publishing a list of revoked users; generating a latest user outsourcing key for each unrevoked user based on the latest system version vector; and updating the ciphertext of the attribute-based encryption system based on the latest system version vector to generate a latest ciphertext. According to the invention, the calculation operations required for user revocation in the attribute-based encryption system are outsourced to a third party for execution, so that the key generation center only needs to execute a very small amount of calculation and the terminal user does not need to execute any calculation, thereby greatly improving the user revocation efficiency of the attribute-based encryption system.

Owner: HUNAN UNIV

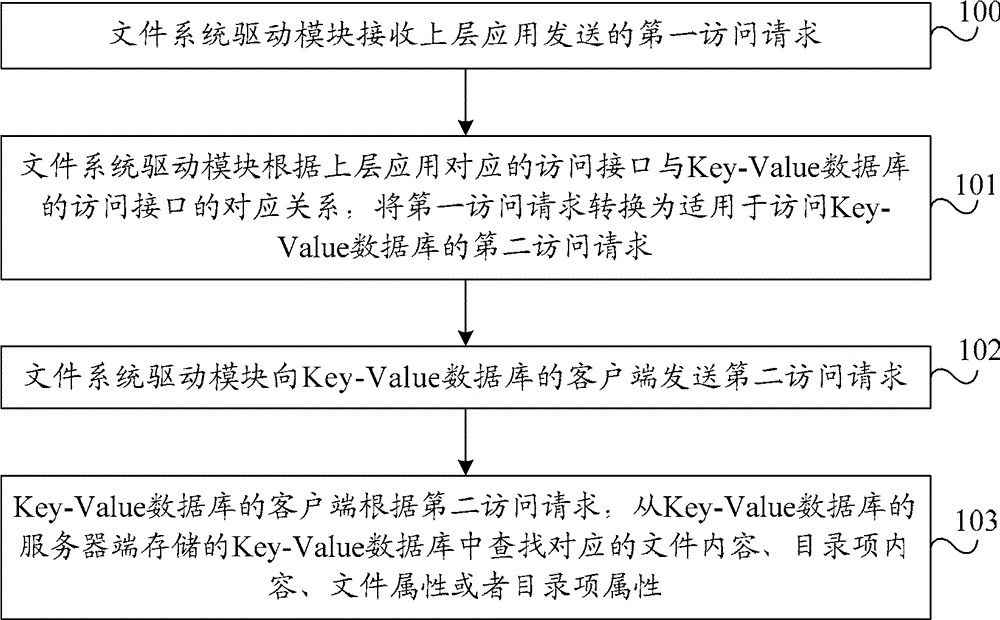

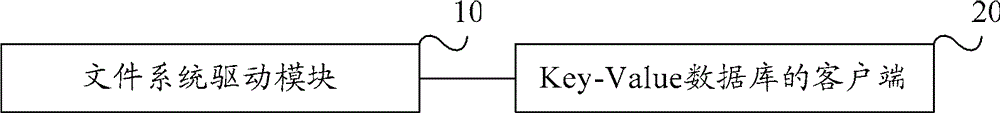

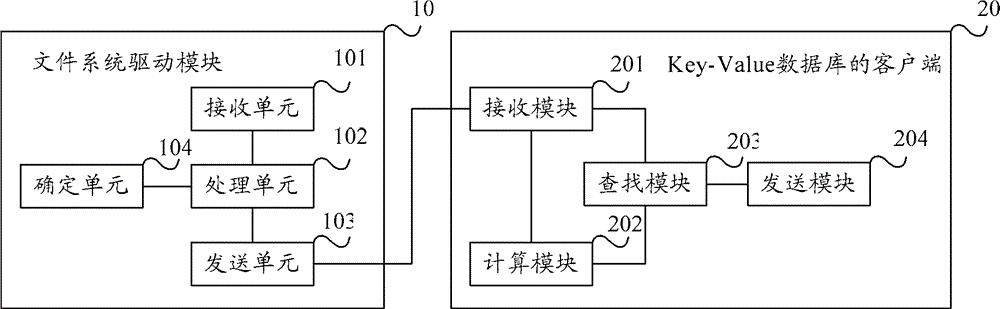

Method and system of file access

Active | CN102725755B | Reduce access overhead | Improve access efficiency | Database querying | Special data processing applications | Access method | File system

Provided are a file access method and system. The method includes: a file system driver module receiving a first access request sent by an upper layer application; the file system driver module converting the first access request into a second access request suitable for accessing a key value database, according to the correlation between the access interface corresponding to the upper layer application and the access interface of the key value database; the file system driver module sending the second access request to a client of the key value database; and the client of the key value database searching, according to the second access request, for the corresponding file content, directory entry content, file attribute or directory entry attribute in a key value database stored at a server end of the key value database. Compared with the prior-art solution in which a POSIX file system accesses metadata layer by layer, the technical solution in the embodiments of the present invention has lower access overhead and higher access efficiency.

Owner: HUAWEI CLOUD COMPUTING TECH CO LTD
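
The request conversion in the abstract above can be pictured with a small Python sketch in which a file-style request is translated into a single key lookup. Everything concrete here is hypothetical: the class names `KeyValueClient` and `FileSystemDriver`, the operation names, the `kind:path` key layout, and the in-memory dictionary standing in for the key value database server.

```python
class KeyValueClient:
    """Stand-in for the key value database client (backed by a dict here)."""

    def __init__(self, store: dict):
        self.store = store

    def get(self, key: str):
        return self.store.get(key)


class FileSystemDriver:
    """Converts file-style requests into key value requests."""

    # Hypothetical mapping from file operation to the kind of value stored.
    KIND = {"read": "content", "stat": "attr", "readdir": "dirent"}

    def __init__(self, client: KeyValueClient):
        self.client = client

    def handle(self, op: str, path: str):
        # The full path is part of the key, so one lookup replaces a
        # layer-by-layer walk through directory metadata.
        key = f"{self.KIND[op]}:{path}"
        return self.client.get(key)


store = {
    "content:/docs/a.txt": b"hello",
    "attr:/docs/a.txt": {"size": 5},
    "dirent:/docs": ["a.txt"],
}
driver = FileSystemDriver(KeyValueClient(store))
data = driver.handle("read", "/docs/a.txt")
```

The design choice illustrated is that lookup cost no longer depends on directory depth, which is the contrast with layer-by-layer metadata access that the abstract draws.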

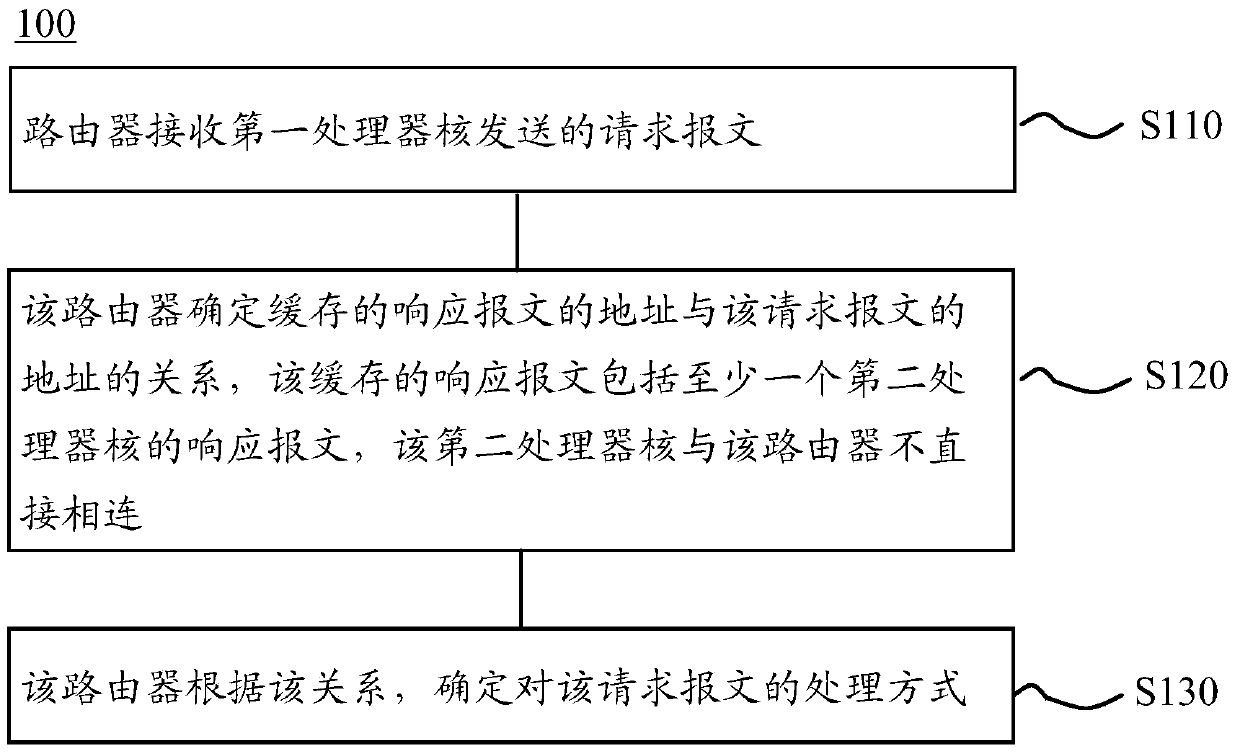

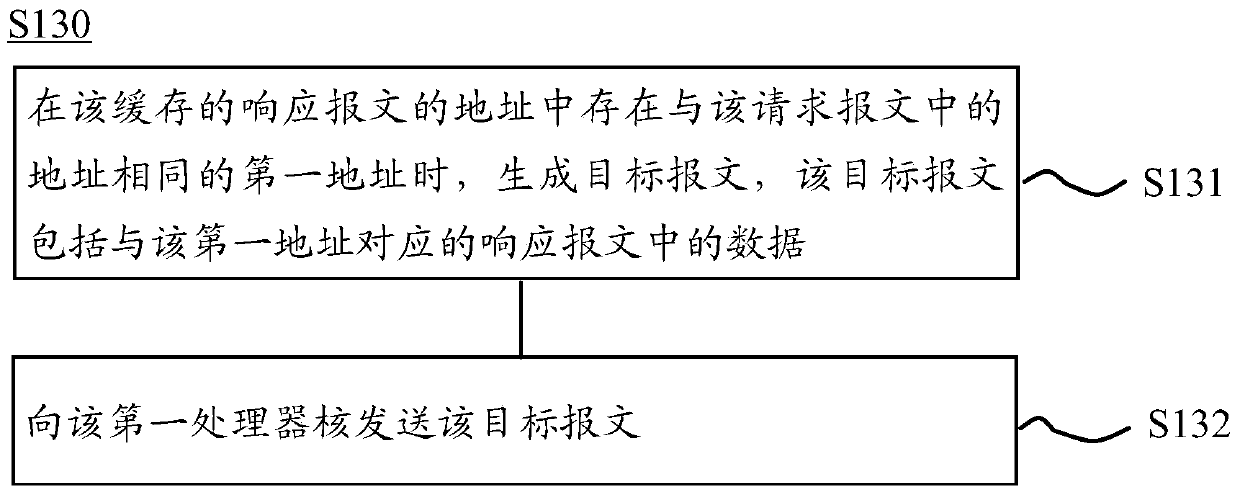

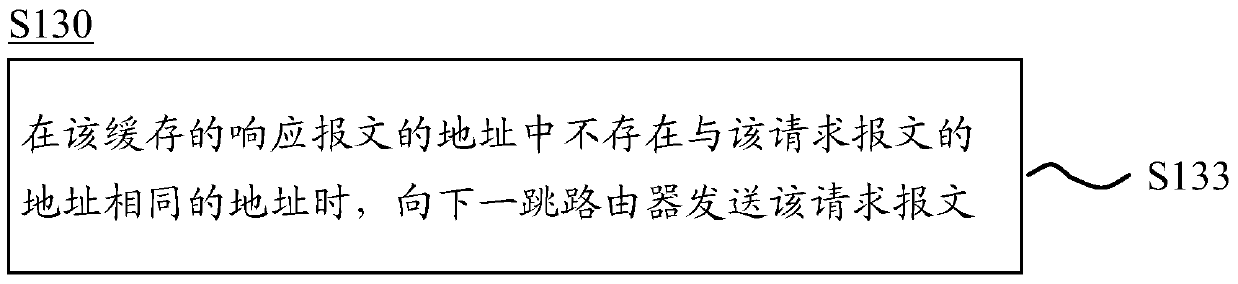

Method and router for processing packets in network on chip

Active | CN106302259B | Reduce access overhead | Improve performance | Data switching networks | Computer network | Computer architecture

The invention provides a method for processing a message in a network-on-chip, and a router. The method comprises the steps that: the router receives a request message sent by a first processor core; the router determines a relationship between an address of a cached response message and an address of the request message, wherein the cached response message comprises a response message of at least one second processor core which is not directly connected to the router; and the router determines a processing manner of the request message according to the relationship. Therefore, the memory access time delay can be reduced, the overall performance of a processor can be improved, and network access overhead can be reduced by utilizing data sharing opportunities of the different processor cores.

Owner:HUAWEI TECH CO LTD

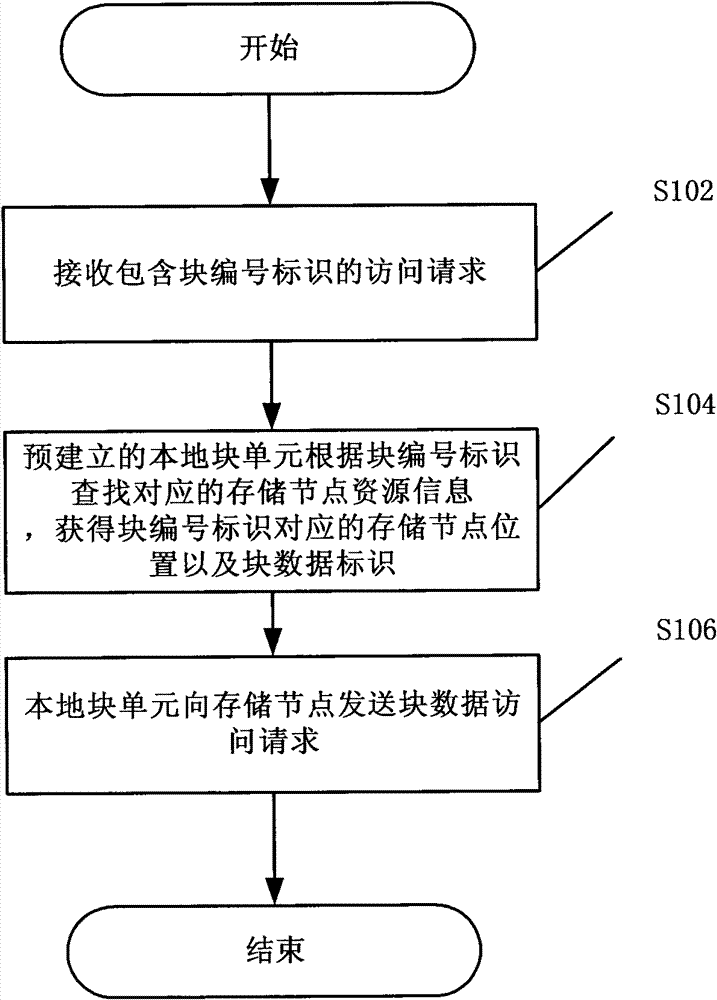

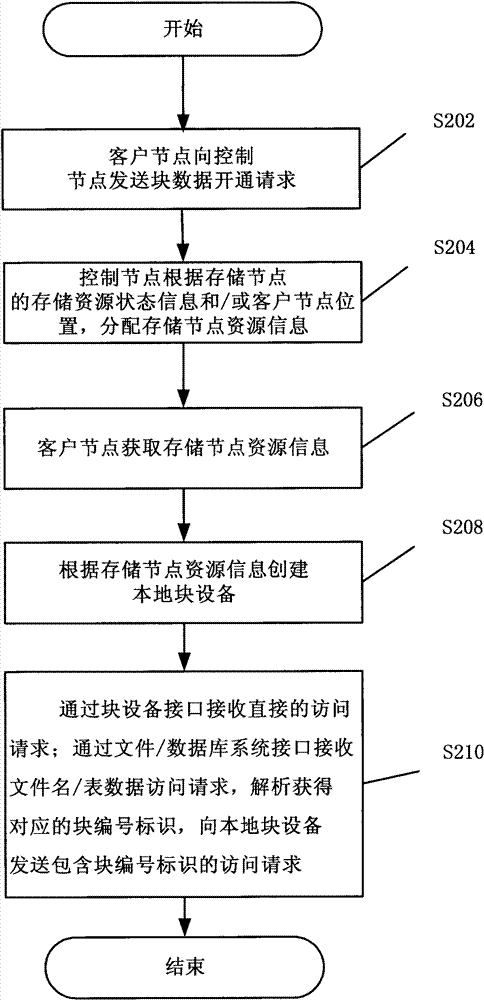

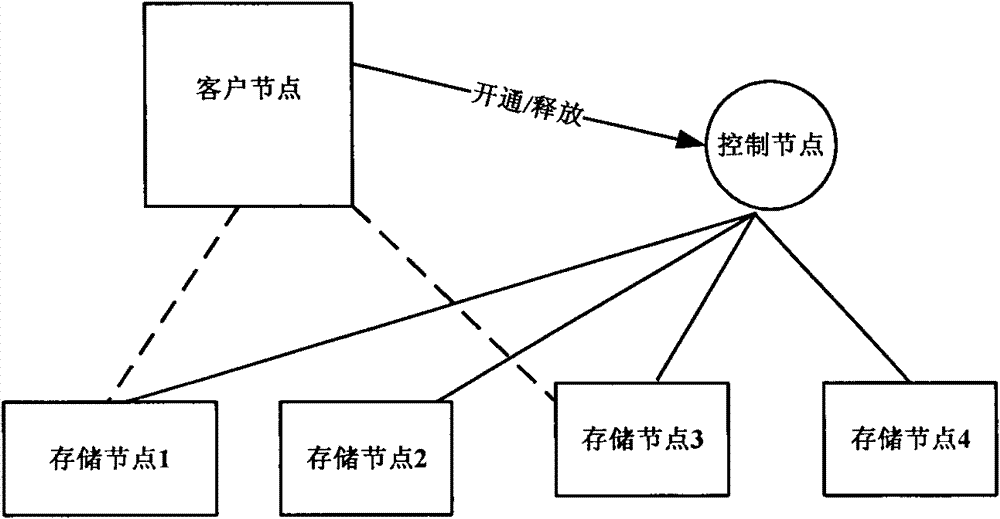

Data access method, node and system

Active · CN102594852B · Reduce access overhead · Improve access efficiency · Transmission · Access method · Distributed File System

The invention discloses a data access method, device, and system. The method comprises the following steps: an access request containing a block number identifier is received; a pre-established local block unit looks up, by block number, the correspondence between its block numbers and the resource information of the storage nodes providing storage services, obtaining the storage node position and the block data identifier corresponding to the block number identifier; and the local block unit sends the data access request to the storage node according to that storage node position and block data identifier. The storage node resource information comprises the storage node position information and the block number identifier of a storage node. The method, device, and system address the problem that current distributed file systems must address through the metadata server on every access, which makes the metadata server a system bottleneck and lowers access efficiency.
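The lookup performed by the local block unit can be sketched in Python as a local table consulted instead of a metadata server. This is only an illustration under stated assumptions: the `LocalBlockUnit` class, its method names, and the shape of the returned request are hypothetical and not taken from the patent.

```python
class LocalBlockUnit:
    """Local table mapping block numbers to storage node resource information."""

    def __init__(self):
        # block number -> (storage node position, block data identifier)
        self._block_map = {}

    def register(self, block_number, node_position, block_data_id):
        """Record which storage node holds a block (done when the unit is established)."""
        self._block_map[block_number] = (node_position, block_data_id)

    def route(self, block_number):
        """Build the request to send straight to the storage node."""
        if block_number not in self._block_map:
            raise KeyError(f"unknown block number: {block_number}")
        node_position, block_data_id = self._block_map[block_number]
        # No metadata server round trip: the target comes from the local table.
        return {"node": node_position, "block_data_id": block_data_id}
```

For example, after `register(7, "10.0.0.5:9000", "blk-0007")`, calling `route(7)` yields a request addressed to that node directly; the address and identifier strings here are placeholders.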

Owner:CHINA MOBILE COMM GRP CO LTD

Unified Bit Width Conversion Method for Cache and Bus Interface in System Chip

Active · CN104375962B · Improve compatibility · Reduce system performance · Memory addressing/allocation/relocation · Logic cell · Assembly line

The invention discloses a unified bit width conversion structure and method for the cache and bus interface of a system chip. The conversion structure comprises a processor core and a plurality of IP cores that exchange data with the processor core through an on-chip bus, and a memory controller IP communicates with an off-chip main memory. The processor core comprises an instruction pipeline and a hit judgment logic unit that receives operation instructions from the instruction pipeline. An access bit width judgment unit and a bit width / address conversion unit are arranged between the hit judgment logic unit and the cache bus interface; the hit judgment logic unit sends its judgment result to the instruction pipeline, and the processor core is connected to the on-chip bus through the cache bus interface. According to the conversion method, for a byte or half-word read access, if a cache miss occurs and the accessed space belongs to the cacheable area, the bit width / address conversion unit converts the byte or half-word read access into a one-word access, and the memory access is completed over the bus. The original update strategy is not affected, and flexibility is retained.
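The bit width / address conversion step can be approximated with the Python sketch below, which widens a narrow read into a single aligned bus access and then selects the requested bytes. The 32-bit bus width, natural alignment, and little-endian byte order are assumptions made for illustration; the abstract states none of them, and the function names are hypothetical.

```python
WORD_BYTES = 4  # assumed bus access width in bytes


def convert_narrow_read(address, size):
    """Map a 1- or 2-byte read to (aligned word address, byte offset in that word)."""
    if size not in (1, 2):
        raise ValueError("only 1-byte and 2-byte reads are converted")
    if address % size != 0:
        raise ValueError("narrow read must be naturally aligned")
    aligned_address = address & ~(WORD_BYTES - 1)  # clear the low address bits
    return aligned_address, address - aligned_address


def extract(word, offset, size):
    """Select `size` bytes at `offset` from a little-endian 32-bit word."""
    mask = (1 << (8 * size)) - 1
    return (word >> (8 * offset)) & mask
```

Under these assumptions, a 2-byte read at `0x1006` becomes one bus access at `0x1004` with offset 2, and `extract(0xAABBCCDD, 2, 2)` picks out `0xAABB` from the returned word.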

Owner:NO 771 INST OF NO 9 RES INST CHINA AEROSPACE SCI & TECH
