127 results about how to "Reduce system performance" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

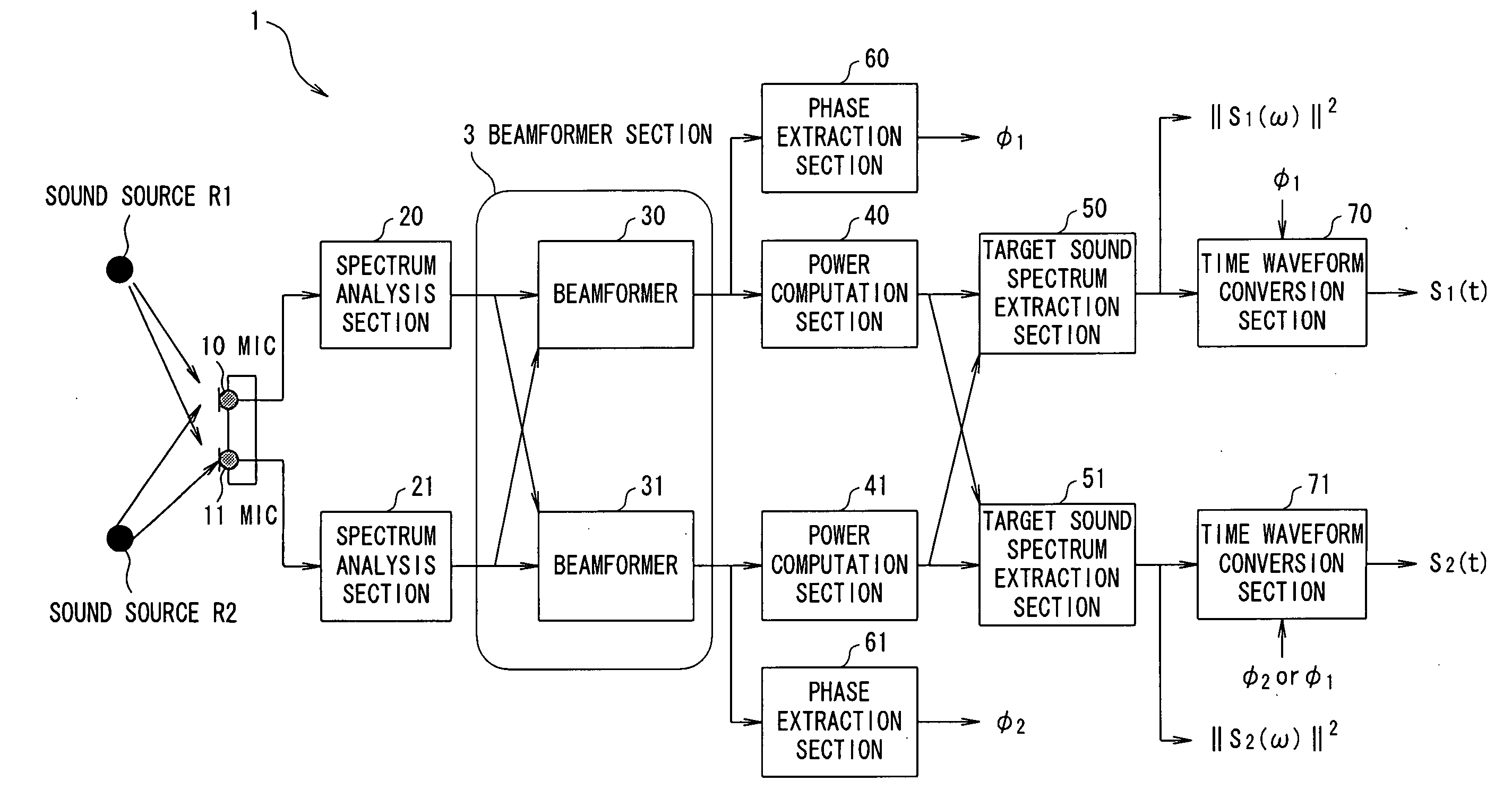

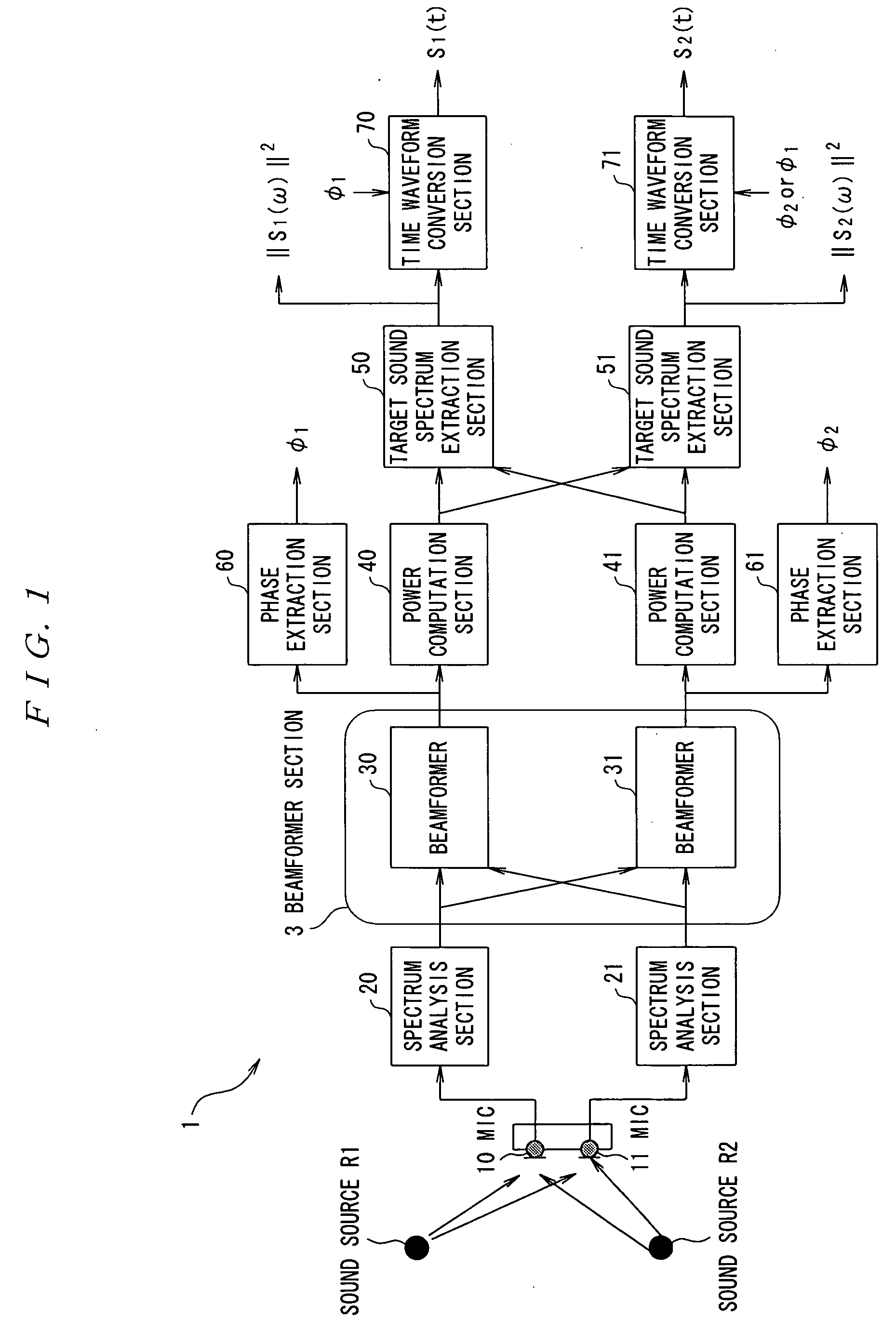

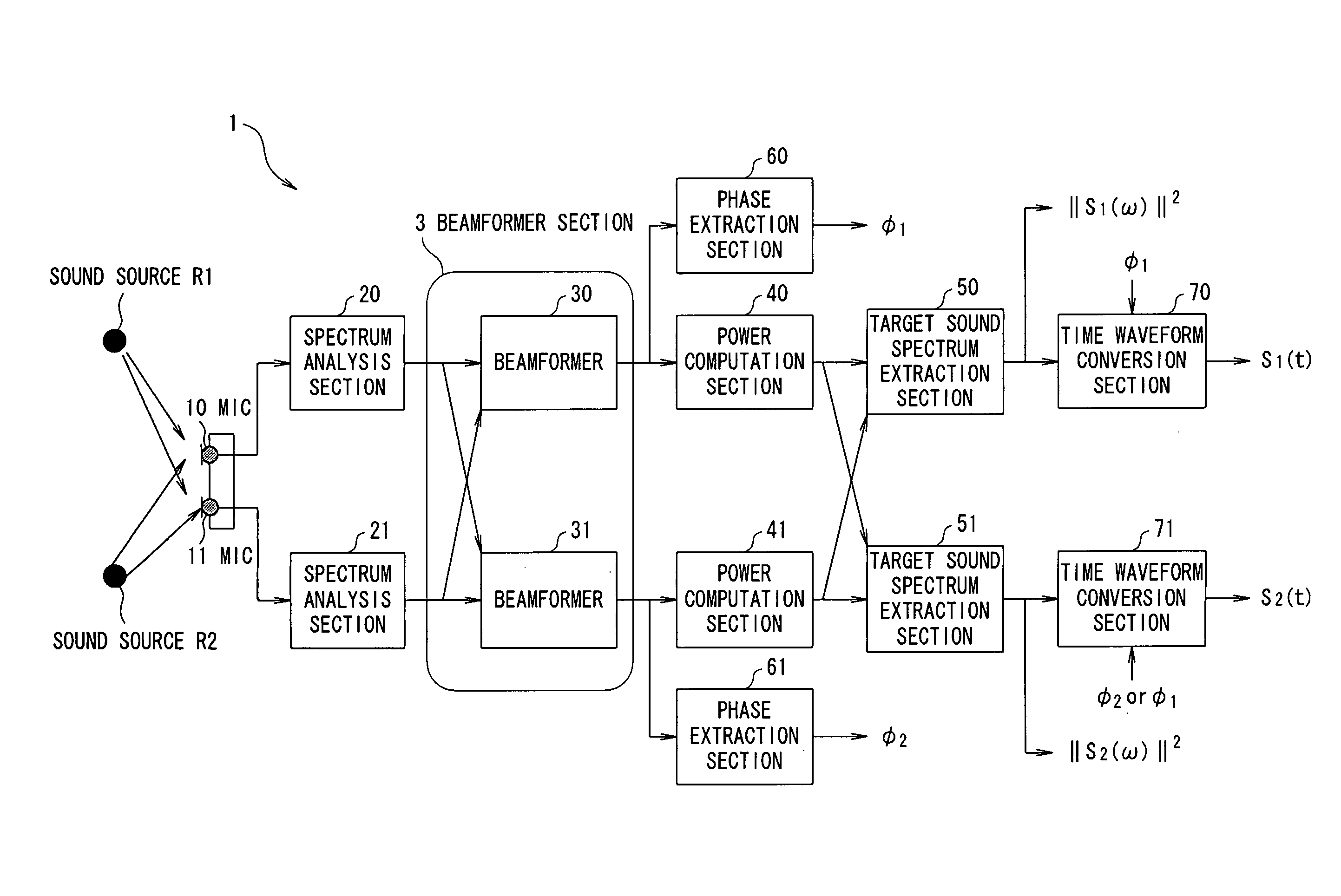

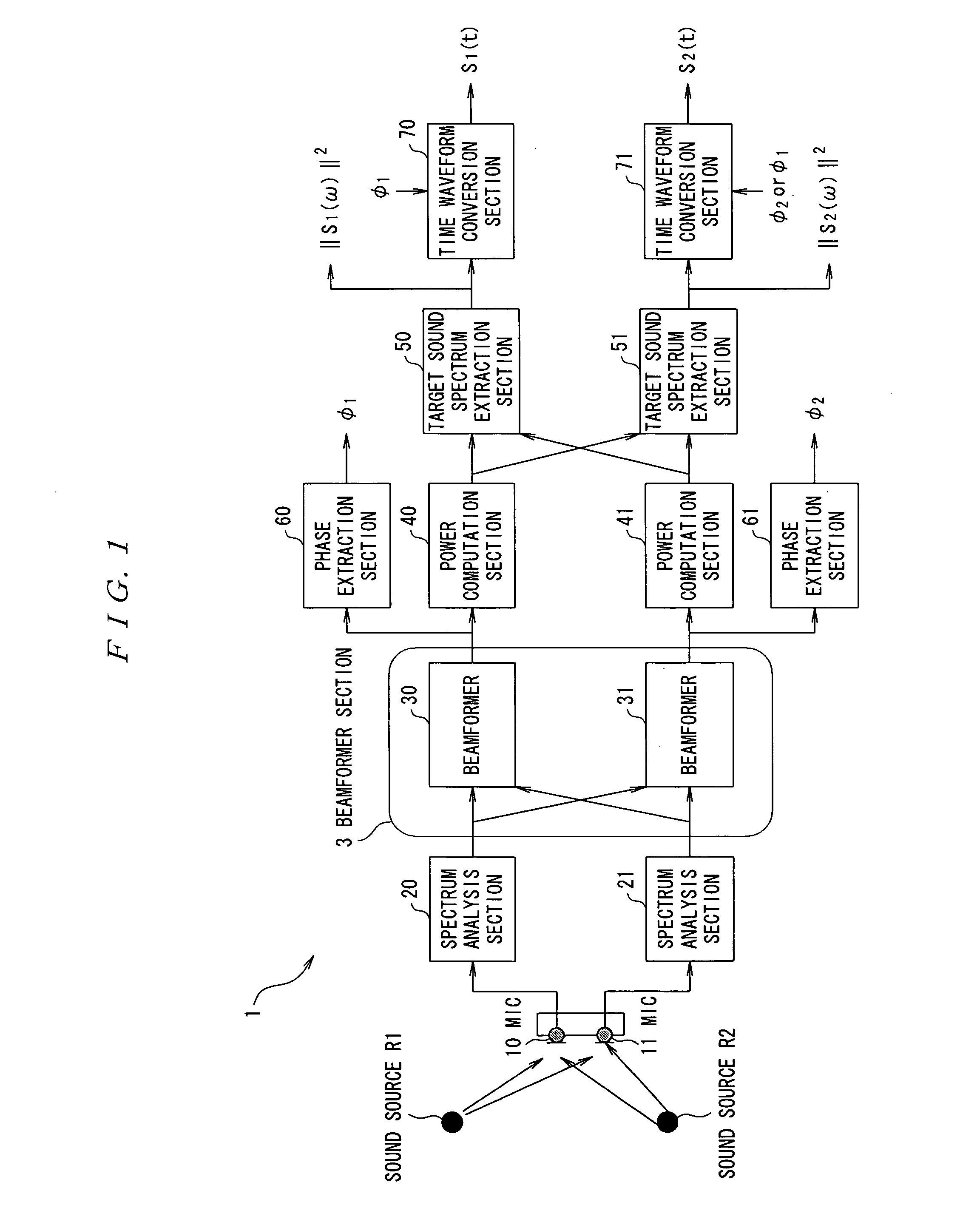

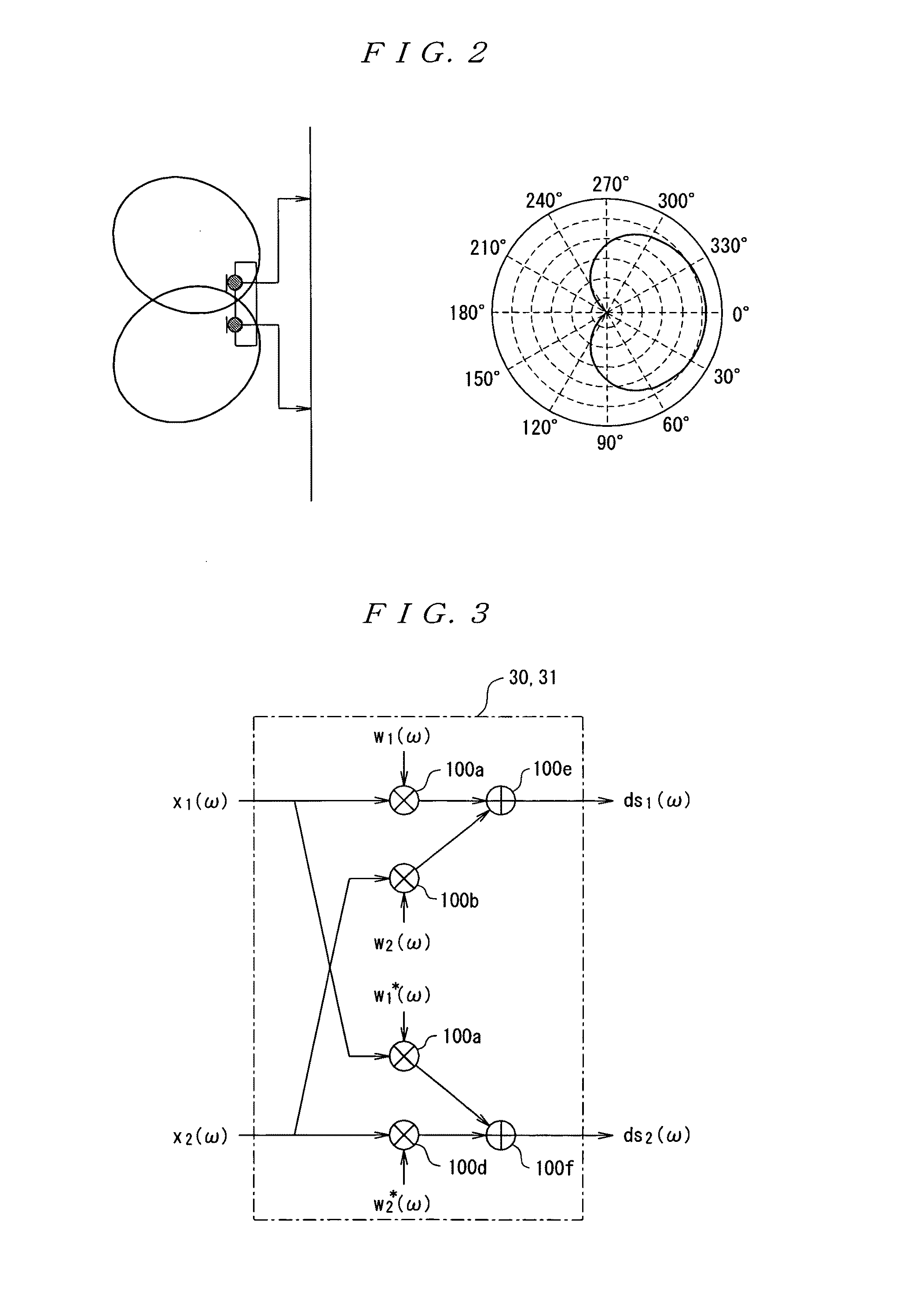

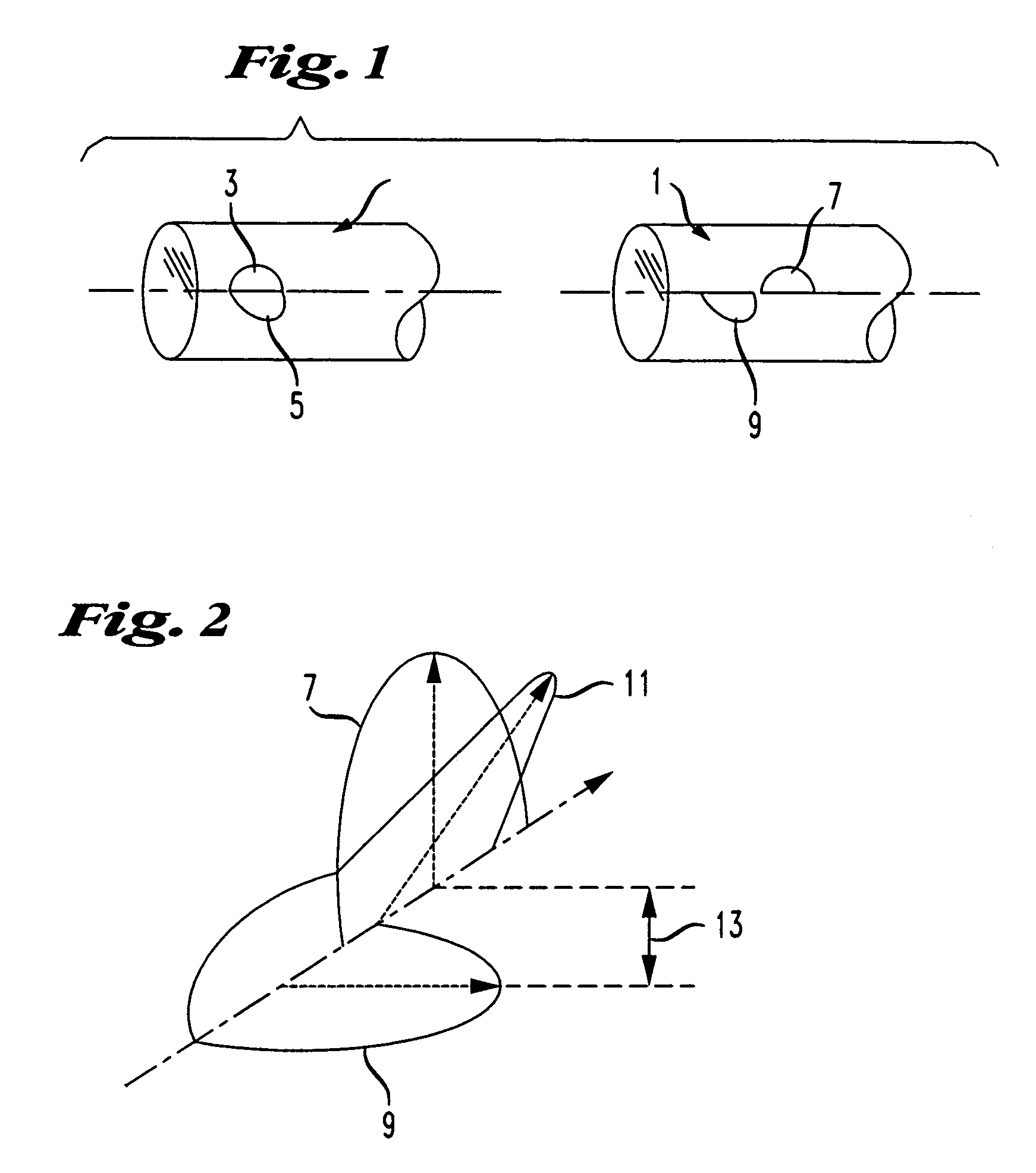

Sound Source Separation Device, Speech Recognition Device, Mobile Telephone, Sound Source Separation Method, and Program

Active | US20090055170A1 | Reduce system performance, Signal processing, Speech recognition, Sound source separation, Frequency spectrum

The device separates the sound source signal of a target sound source from a mixed sound consisting of signals emitted by a plurality of sound sources, without being affected by uneven sensitivity among the microphone elements. A beamformer section 3 of a source separation device 1 performs beamforming that respectively attenuates sound source signals arriving from directions symmetric with respect to the perpendicular to the straight line connecting two microphones 10 and 11. It does so by multiplying the spectrum-analyzed output signals of microphones 10 and 11 by weighting coefficients that are complex conjugates of each other. Power computation sections 40 and 41 compute power spectrum information, and target sound spectrum extraction sections 50 and 51 extract the spectrum information of the target sound source from the difference between the two sets of power spectrum information.

Owner: ASAHI KASEI KK
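The conjugate-weight beamforming and power-difference extraction described in this entry can be sketched for a single frequency bin. This is a minimal illustration under stated assumptions, not the patented implementation: a far-field plane wave, two omnidirectional microphones `mic_spacing_m` apart, a speed of sound of 343 m/s, angles measured from the perpendicular to the microphone axis, and a hypothetical extraction rule that clips the power difference at zero. All function names are hypothetical.

```python
import cmath
import math

SPEED_OF_SOUND_M_S = 343.0  # assumed speed of sound in air


def simulate_bin(amplitude, angle_rad, freq_hz, mic_spacing_m):
    """Spectrum values (X0, X1) of a far-field plane wave at one frequency bin.

    Microphone 1 lags microphone 0 by d*sin(angle)/c.
    """
    tau = mic_spacing_m * math.sin(angle_rad) / SPEED_OF_SOUND_M_S
    return amplitude, amplitude * cmath.exp(-2j * math.pi * freq_hz * tau)


def conjugate_beam_powers(x0, x1, freq_hz, mic_spacing_m, null_angle_rad):
    """Powers of two beams whose weights are complex conjugates of each other.

    Beam A uses weights (1, w1) and nulls a source at +null_angle_rad;
    beam B uses (1, conj(w1)) and nulls the mirror direction -null_angle_rad.
    """
    tau0 = mic_spacing_m * math.sin(null_angle_rad) / SPEED_OF_SOUND_M_S
    w1 = -cmath.exp(2j * math.pi * freq_hz * tau0)
    y_a = x0 + w1 * x1
    y_b = x0 + w1.conjugate() * x1
    return abs(y_a) ** 2, abs(y_b) ** 2


def extract_target_power(x0, x1, freq_hz, mic_spacing_m, null_angle_rad):
    """Power attributed to a source on the +null_angle side (difference clipped at 0)."""
    p_a, p_b = conjugate_beam_powers(x0, x1, freq_hz, mic_spacing_m, null_angle_rad)
    return max(p_b - p_a, 0.0)
```

A simulated source at +30° drives beam A's power to nearly zero and yields a positive extracted power, while the mirrored source at -30° extracts zero, which is the left/right separation the symmetric nulls are meant to provide.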

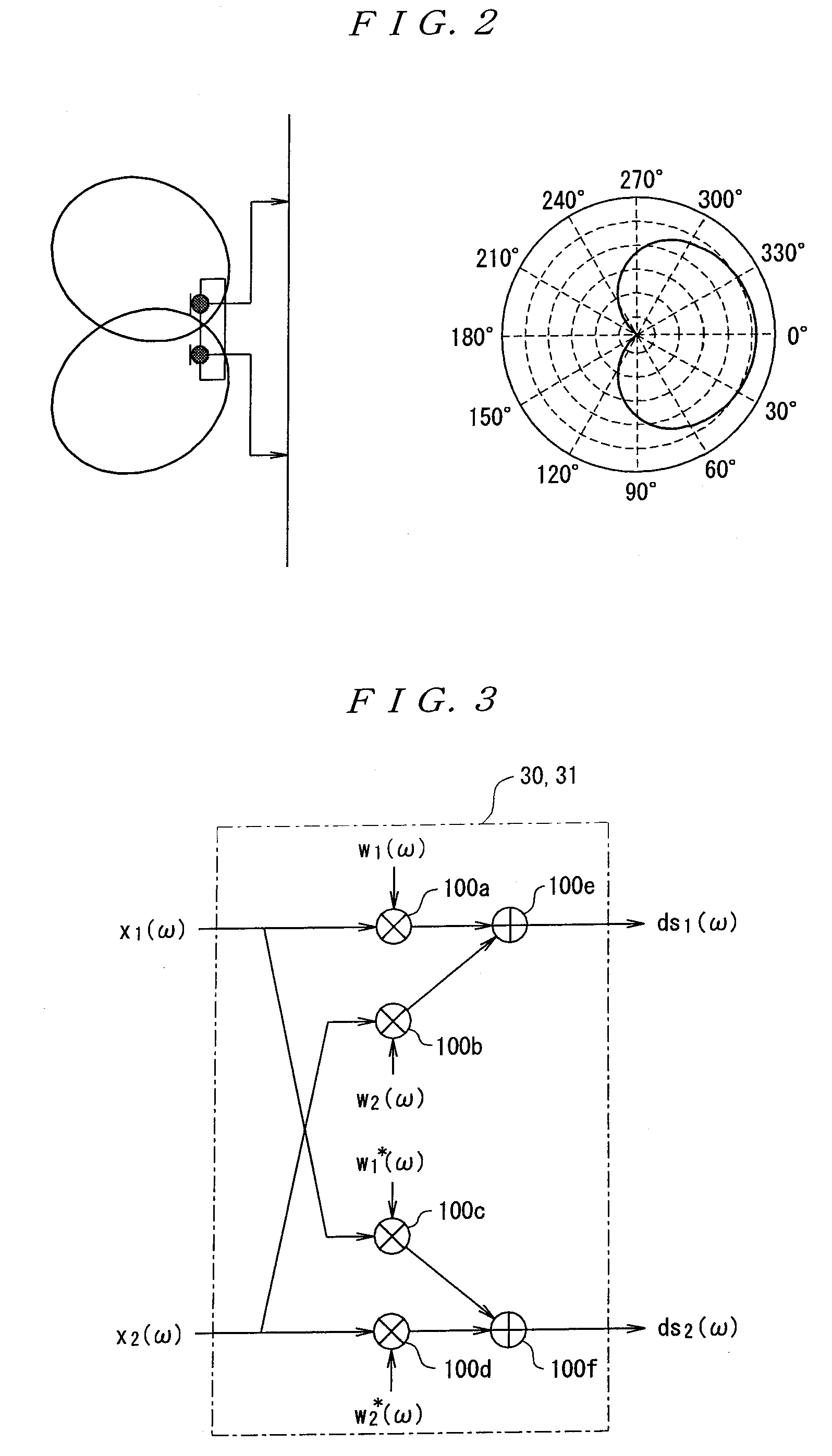

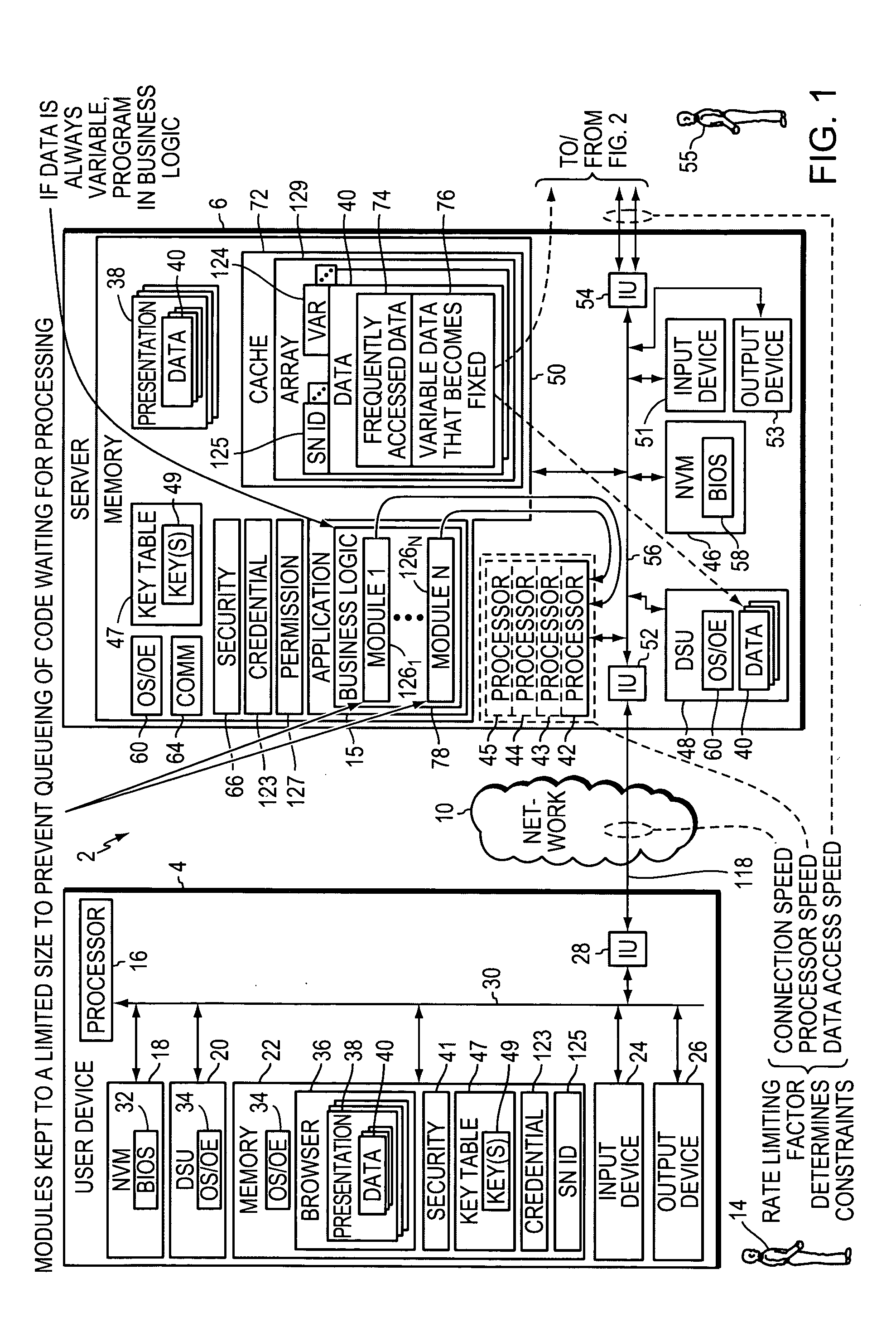

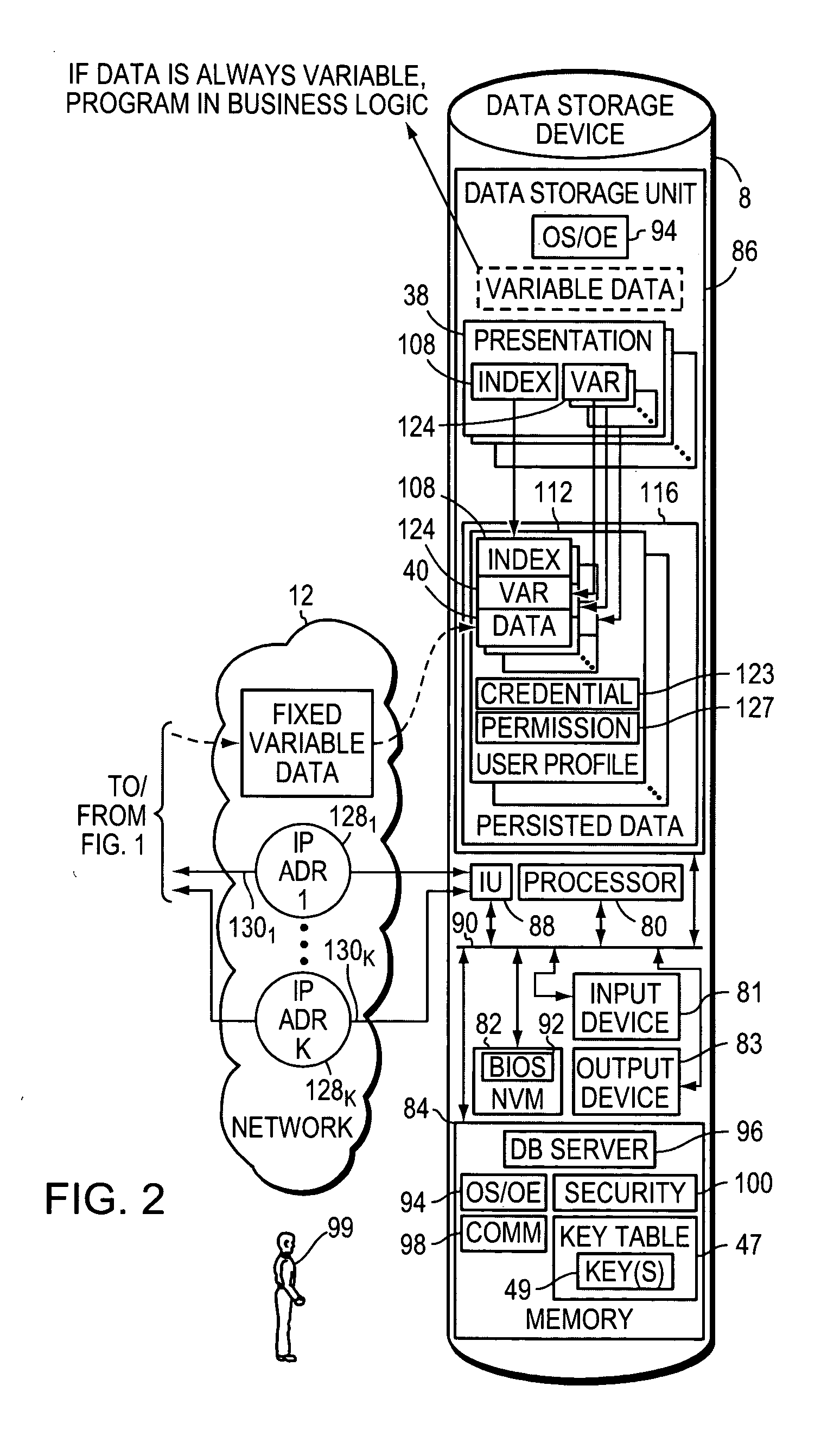

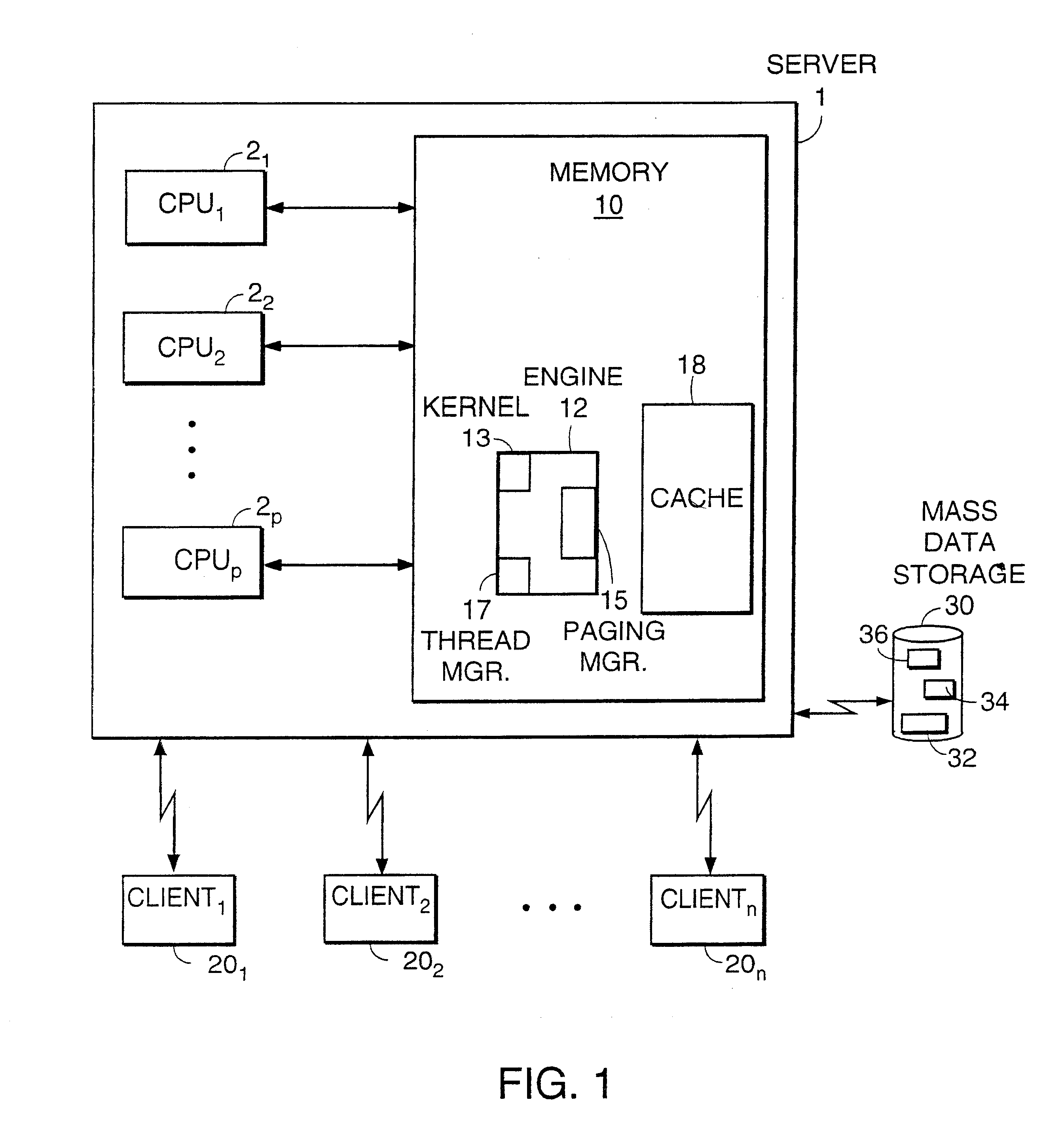

Methods, apparatuses, systems, and articles for determining and implementing an efficient computer network architecture

Inactive | US20050138198A1 | Reduce system performance, Avoid queuing, Multiple digital computer combinations, Transmission, Rate limiting, Network architecture

Methods, apparatuses, systems, and articles of the invention are used to enhance the efficiency of a computing environment and to improve its responsiveness to one or more users. In the invention, queuing of data or computer instructions is avoided, because queuing would detract from best-case performance for a network environment. The rate-limiting factor for the network is determined, along with all constraints that it imposes. Business logic is programmed in modules small enough to avoid queuing of instructions. Data is stored by frequency of access and persistence to increase responsiveness to user requests. Requests for data from a data storage device are fulfilled not only with the requested data but also with additional data that is likely to be requested in the navigational hierarchy of presentations to which the requested data belongs.

Owner: IT WORKS
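The prefetching idea in this abstract (answering a request with the requested data plus data likely to be requested in the same navigational hierarchy), together with access-frequency tracking, can be sketched as follows. The class name, the `children` map, and the choice of "direct children in the hierarchy" as the likely-next data are assumptions for illustration only.

```python
from collections import Counter


class PrefetchingStore:
    """Serves a requested record plus its children in a navigation hierarchy."""

    def __init__(self, records, children):
        self._records = records         # key -> stored data
        self._children = children       # key -> keys one level down the hierarchy
        self.access_counts = Counter()  # how often each key has been requested

    def fetch(self, key):
        """Return the requested record together with its likely-next records."""
        self.access_counts[key] += 1
        bundle = {key: self._records[key]}
        for child in self._children.get(key, []):
            bundle[child] = self._records[child]
        return bundle

    def hottest(self, n):
        """Keys ordered by access frequency, e.g. to decide storage placement."""
        return [key for key, _ in self.access_counts.most_common(n)]
```

Only explicitly requested keys are counted, so prefetched records do not inflate the frequency statistics used for placement.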

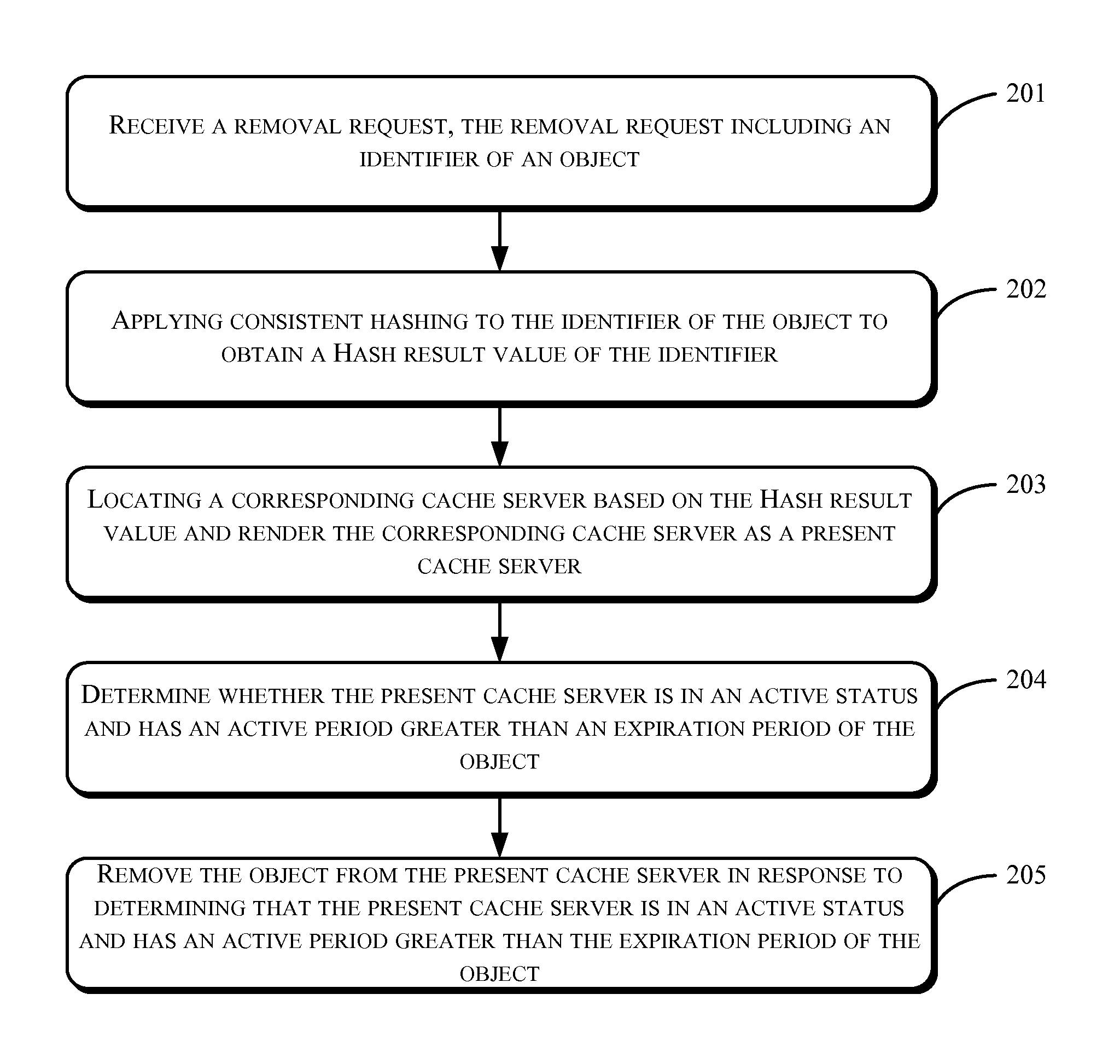

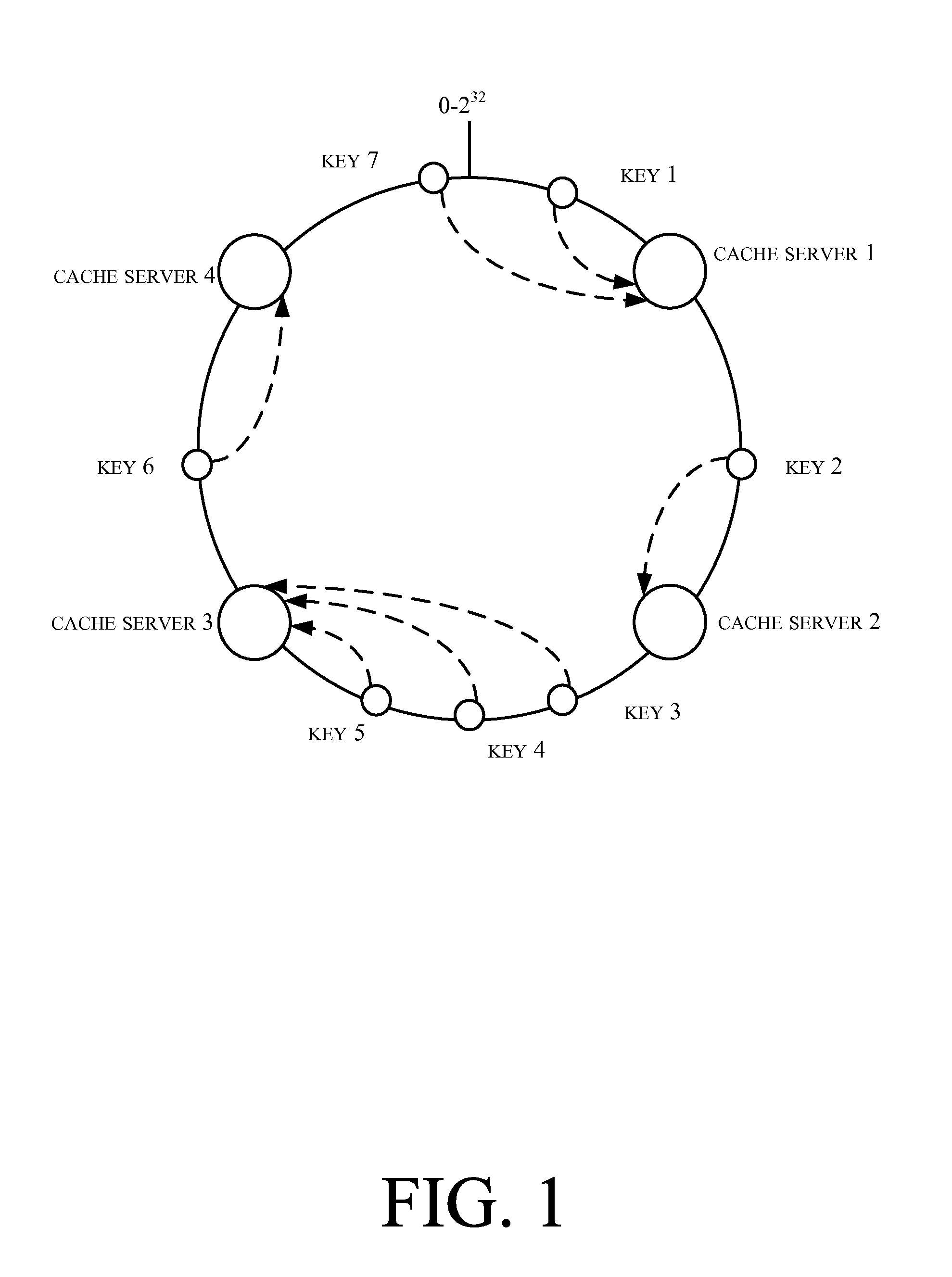

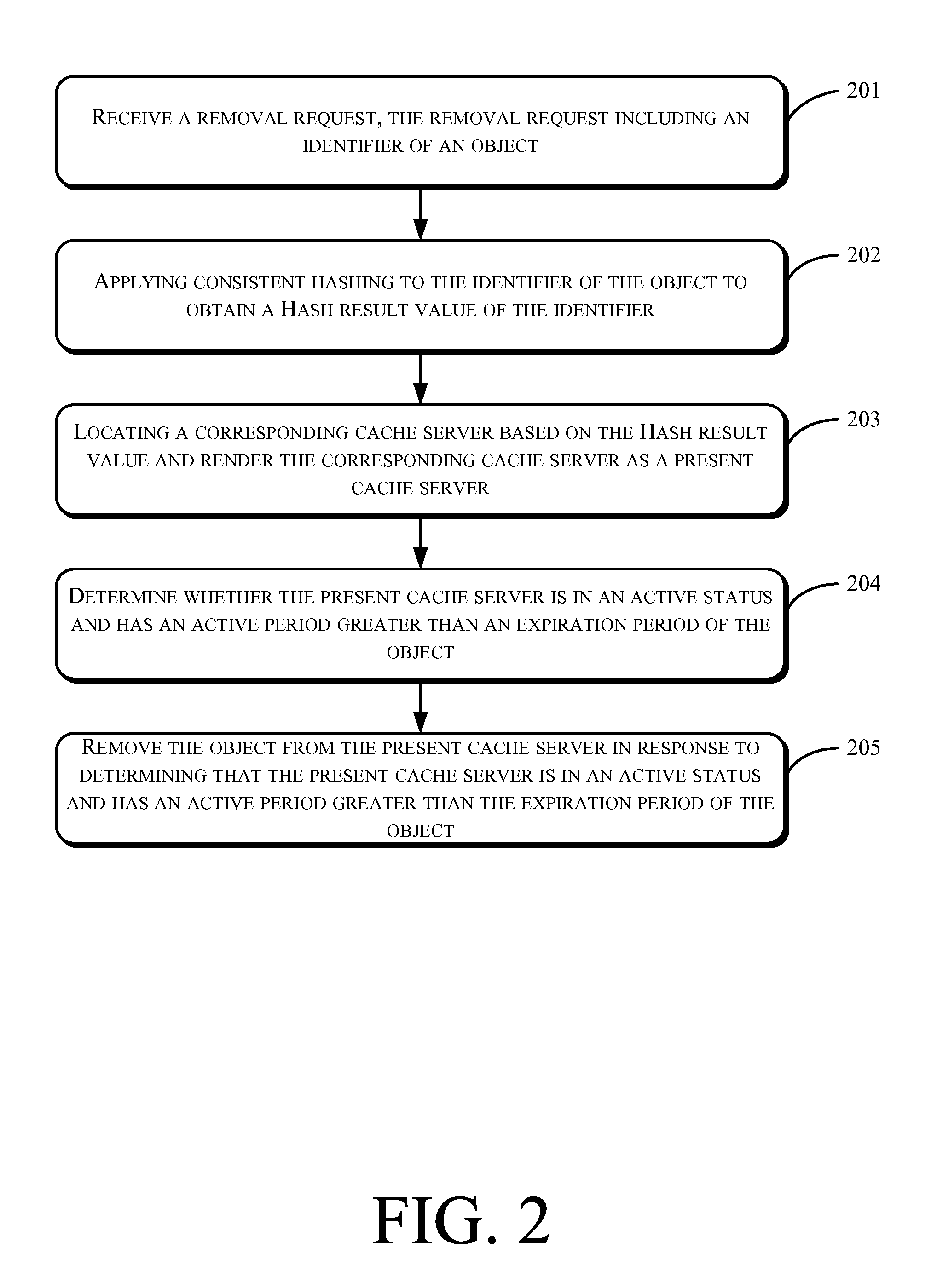

Method, System and Server of Removing a Distributed Caching Object

Active | US20130145099A1 | Waste of resource, Improve performance, Memory architecture accessing/allocation, Memory addressing/allocation/relocation, Cache server, Distributed cache

The present disclosure describes a method, a system, and a server for removing a distributed caching object. In one embodiment, the method receives a removal request that includes an identifier of an object. The method may further apply consistent hashing to the identifier to obtain a hash result value, locate the corresponding cache server based on the hash result value, and designate that server as the present cache server. In some embodiments, the method determines whether the present cache server is in an active status and has an active period greater than an expiration period associated with the object. If so, the method removes the object from the present cache server. By comparing the active period of the located cache server with the expiration period associated with the object, the exemplary embodiments precisely locate the cache server that holds the object to be removed and perform the removal there, which saves the other cache servers from wasting resources on removal operations and improves the overall performance of the distributed cache system.

Owner: ALIBABA GRP HLDG LTD
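The removal flow in this abstract (hash the identifier, locate a server on a consistent-hash ring, check its active status and active period, then remove) can be sketched as below. SHA-256 as the hash, 100 virtual points per server, and all class and function names are assumptions; the abstract does not say what happens when the check fails, so this sketch simply returns `False`.

```python
import bisect
import hashlib


def _hash(value):
    """Stable integer hash of a string (SHA-256 chosen here as an assumption)."""
    return int(hashlib.sha256(value.encode("utf-8")).hexdigest(), 16)


class CacheServer:
    def __init__(self, name, active=True, active_period_s=0.0):
        self.name = name
        self.active = active
        self.active_period_s = active_period_s  # how long the server has been up
        self.store = {}


class ConsistentHashRing:
    def __init__(self, servers, replicas=100):
        # Each server is placed at several virtual points to even out the load.
        points = [(_hash(f"{s.name}#{i}"), s) for s in servers for i in range(replicas)]
        points.sort(key=lambda point: point[0])
        self._keys = [h for h, _ in points]
        self._servers = [s for _, s in points]

    def locate(self, object_id):
        """First server clockwise from the object's position on the ring."""
        idx = bisect.bisect(self._keys, _hash(object_id)) % len(self._keys)
        return self._servers[idx]


def remove_object(ring, object_id, expiration_period_s):
    """Remove from the located server only if it is active and has been active
    longer than the object's expiration period; otherwise report failure."""
    server = ring.locate(object_id)
    if server.active and server.active_period_s > expiration_period_s:
        server.store.pop(object_id, None)
        return True
    return False
```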

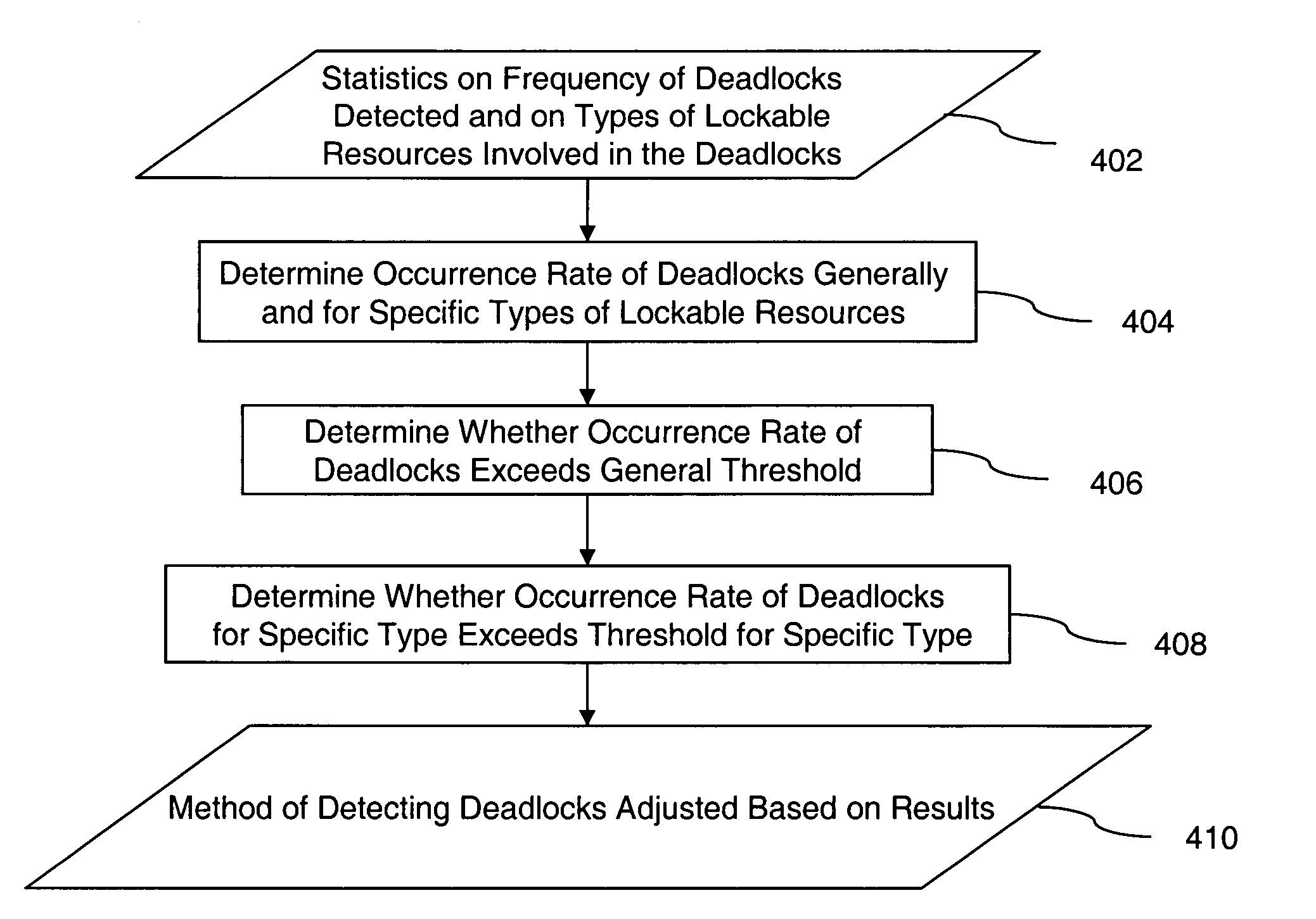

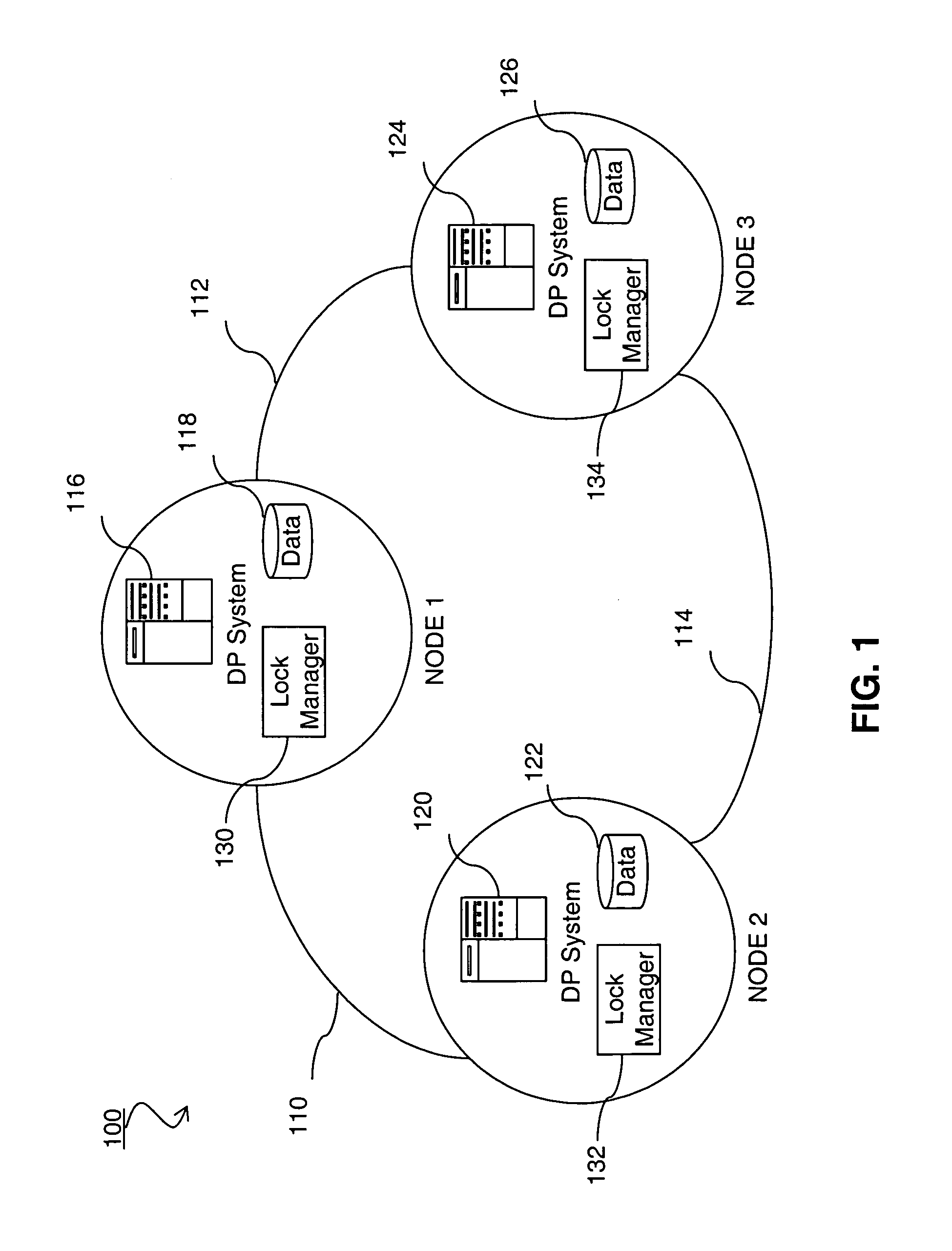

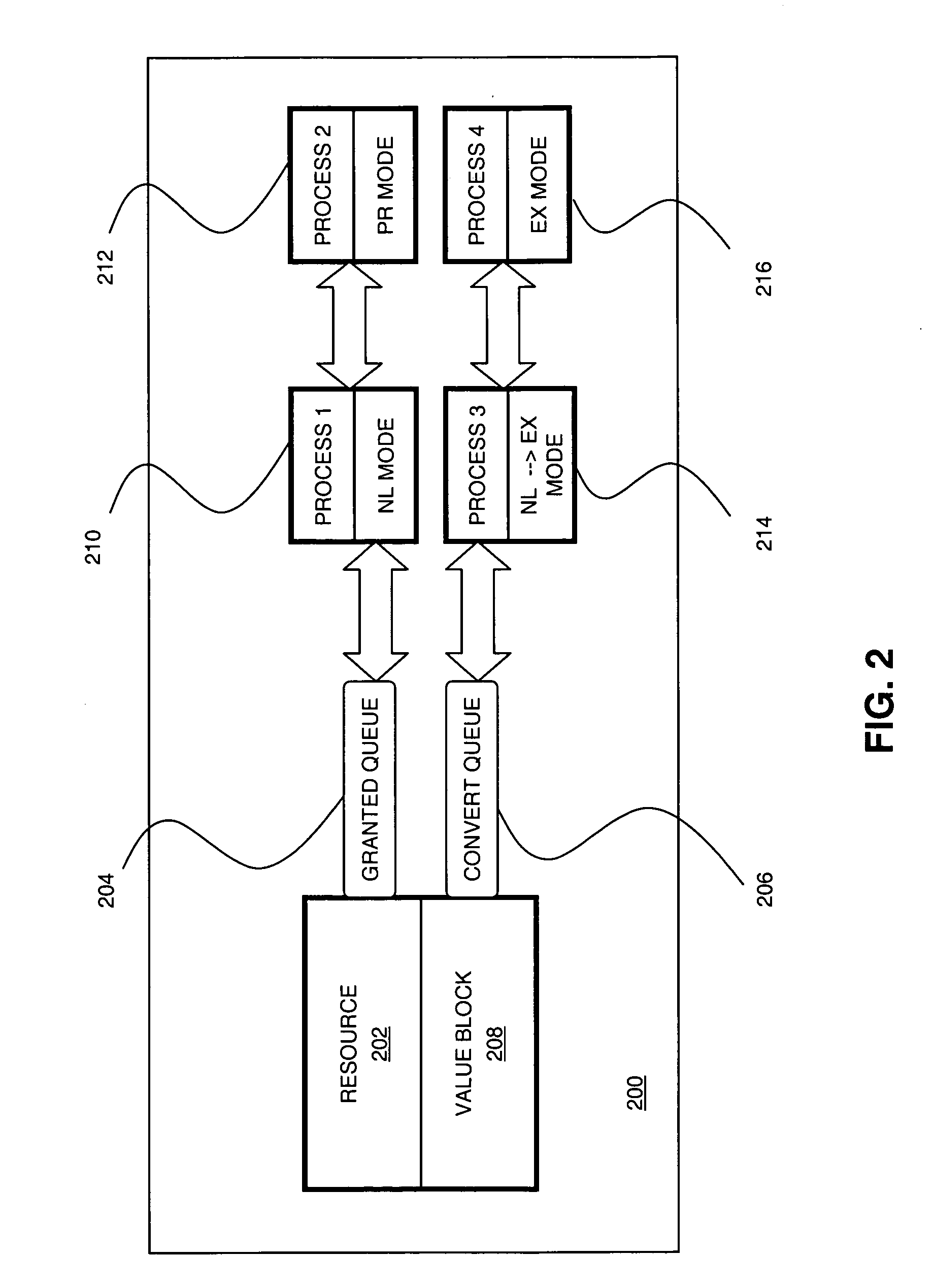

Method and system for deadlock detection in a distributed environment

Active | US20060206901A1 | Reduce processing time, Easy to detect, Unauthorized memory use protection, Multiprogramming arrangements, Deadlock, Real-time computing

A method of deadlock detection is disclosed that adjusts the detection technique based on statistics tracking the number of actual deadlocks detected in a distributed system and which types of locks are most frequently involved in deadlocks. When deadlocks occur rarely, deadlock detection may be tuned down, for example, by increasing a threshold value that determines timeouts for waiting lock requests. When actual deadlocks are detected frequently, the processing time for deadlock detection may be reduced, for example, by using parallel forward or backward search operations and/or by giving higher priority in deadlock detection processing to locks that are more likely to be involved in deadlocks.

Owner: ORACLE INT CORP
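The statistics-driven tuning in this abstract can be sketched as a small controller that lengthens the lock-wait timeout when deadlocks are rare and ranks lock types by how often they appear in deadlocks. The doubling/halving rule, the 10% "rare" cutoff, the timeout bounds, and shortening the timeout when deadlocks are frequent are all assumptions; the parallel forward/backward graph search mentioned in the abstract is not shown.

```python
from collections import Counter


class AdaptiveDeadlockTuner:
    """Adjusts the lock-wait timeout that triggers deadlock detection."""

    def __init__(self, timeout_s=1.0, min_timeout_s=0.5, max_timeout_s=60.0, rare_ratio=0.1):
        self.timeout_s = timeout_s
        self.min_timeout_s = min_timeout_s
        self.max_timeout_s = max_timeout_s
        self.rare_ratio = rare_ratio   # below this share, deadlocks count as "rare"
        self.checks = 0
        self.deadlocks = 0
        self.by_lock_type = Counter()  # lock type -> actual deadlocks involving it

    def record_check(self, found_deadlock, lock_type=None):
        """Record the outcome of one detection run and retune the timeout."""
        self.checks += 1
        if found_deadlock:
            self.deadlocks += 1
            if lock_type is not None:
                self.by_lock_type[lock_type] += 1
        if self.deadlocks / self.checks < self.rare_ratio:
            # Deadlocks are rare: wait longer before starting detection.
            self.timeout_s = min(self.timeout_s * 2, self.max_timeout_s)
        else:
            # Deadlocks are frequent: start detection sooner.
            self.timeout_s = max(self.timeout_s / 2, self.min_timeout_s)

    def priority_order(self, lock_types):
        """Lock types most often involved in deadlocks come first."""
        return sorted(lock_types, key=lambda t: -self.by_lock_type[t])
```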

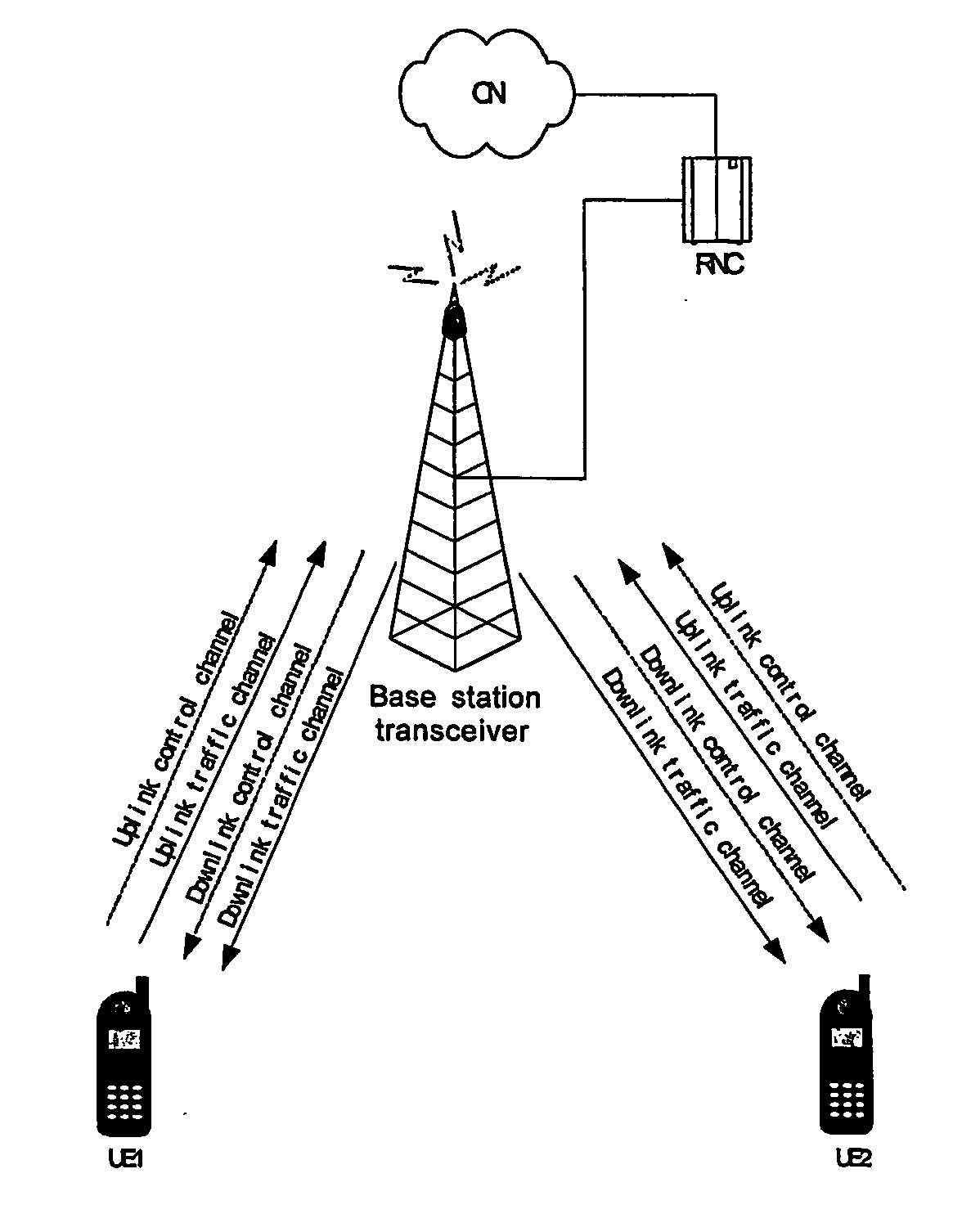

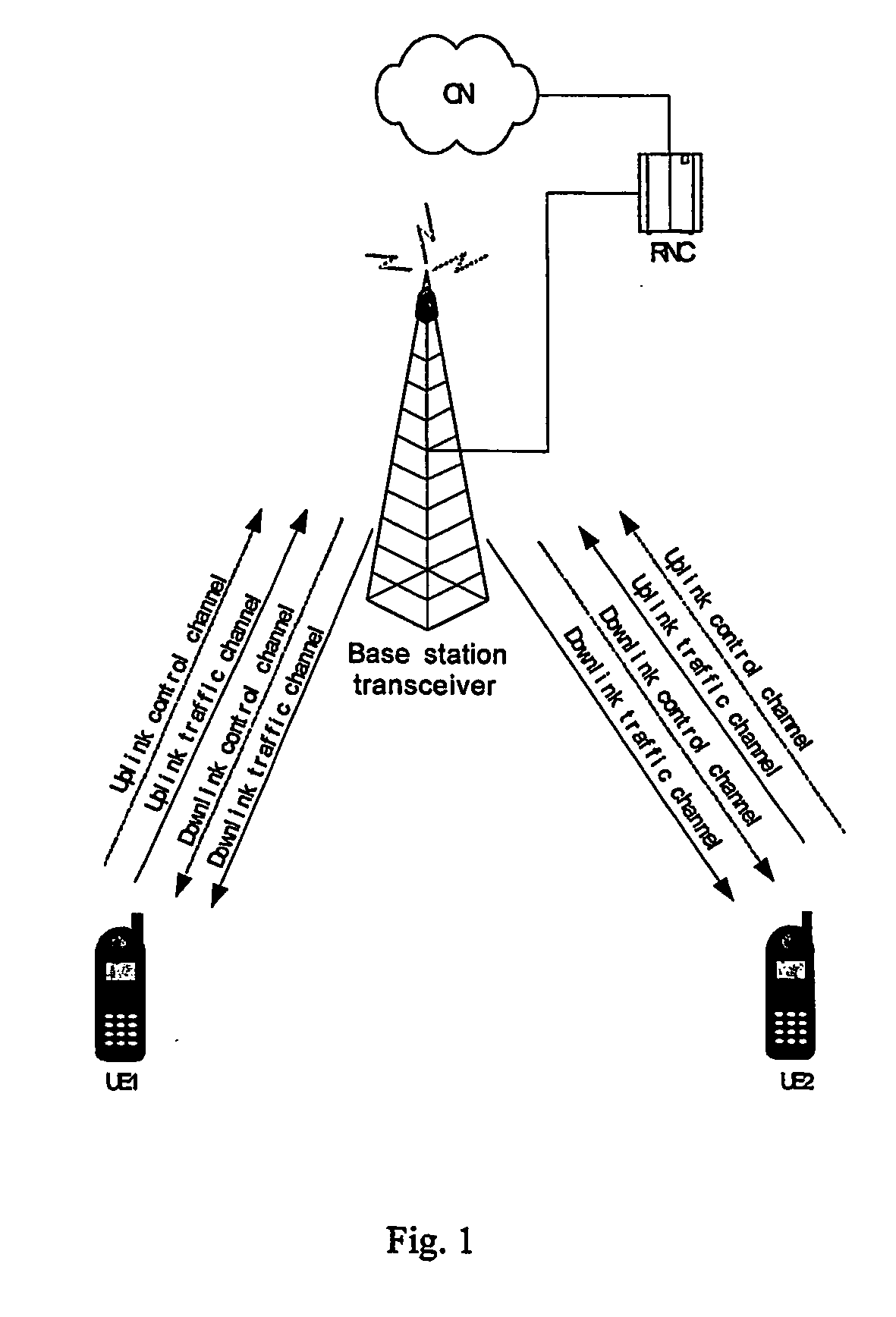

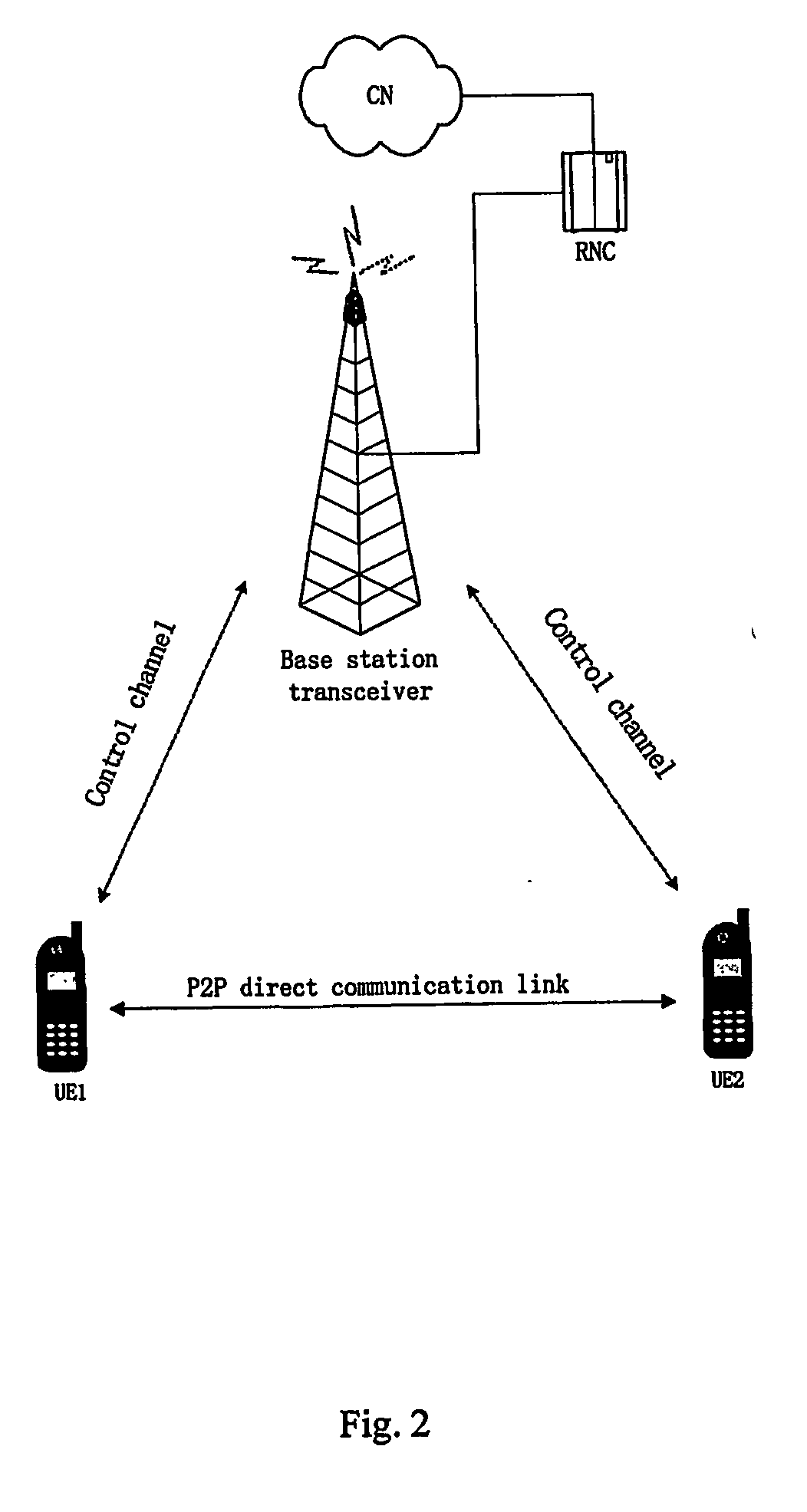

Method and apparatus for uplink synchronization maintenance with p2p communication in wireless communication networks

Inactive | US20060258383A1 | Increase transmit power, Reduce system performance, Power management, Synchronisation arrangement, Communications system, Networked system

A method for maintaining uplink synchronization in P2P (peer-to-peer) communication, performed by a user equipment in a wireless communication system, comprises: negotiating with the wireless communication network system, during the procedure of establishing the P2P direct link, the schedule and parameters for controlling the uplink synchronization of the user equipment; transmitting test signals to the network system via a customized uplink channel according to the negotiated parameters; receiving control information transmitted by the network system via a customized downlink channel according to the negotiated schedule; and maintaining uplink synchronization of the user equipment in P2P communication according to the control information. Because uplink synchronization is achieved through the customized channels, the invention avoids overloading the downlink common control channel, which would otherwise carry the uplink synchronization, and avoids the system performance degradation caused by increasing the user equipment's transmit power to send P2P signals.

Owner: KONINKLIJKE PHILIPS ELECTRONICS NV
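The user-equipment side of this procedure (send test signals on a negotiated schedule, then apply the timing corrections carried by the control information) can be sketched as below. The parameter names, the "every N frames" schedule, and the step-based timing command format are hypothetical; the abstract does not specify what the negotiated parameters or control information contain.

```python
from dataclasses import dataclass


@dataclass
class SyncParams:
    """Parameters negotiated while establishing the P2P direct link (hypothetical fields)."""
    test_period_frames: int  # send a test signal every N frames
    step_us: float           # timing change applied per adjustment step


class P2PUplinkSync:
    def __init__(self, params):
        self.params = params
        self.timing_offset_us = 0.0  # current uplink timing correction

    def should_send_test_signal(self, frame):
        """True on frames where the negotiated schedule calls for a test signal."""
        return frame % self.params.test_period_frames == 0

    def apply_control(self, adjust_steps):
        """Apply a timing command received on the customized downlink channel."""
        self.timing_offset_us += adjust_steps * self.params.step_us
```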

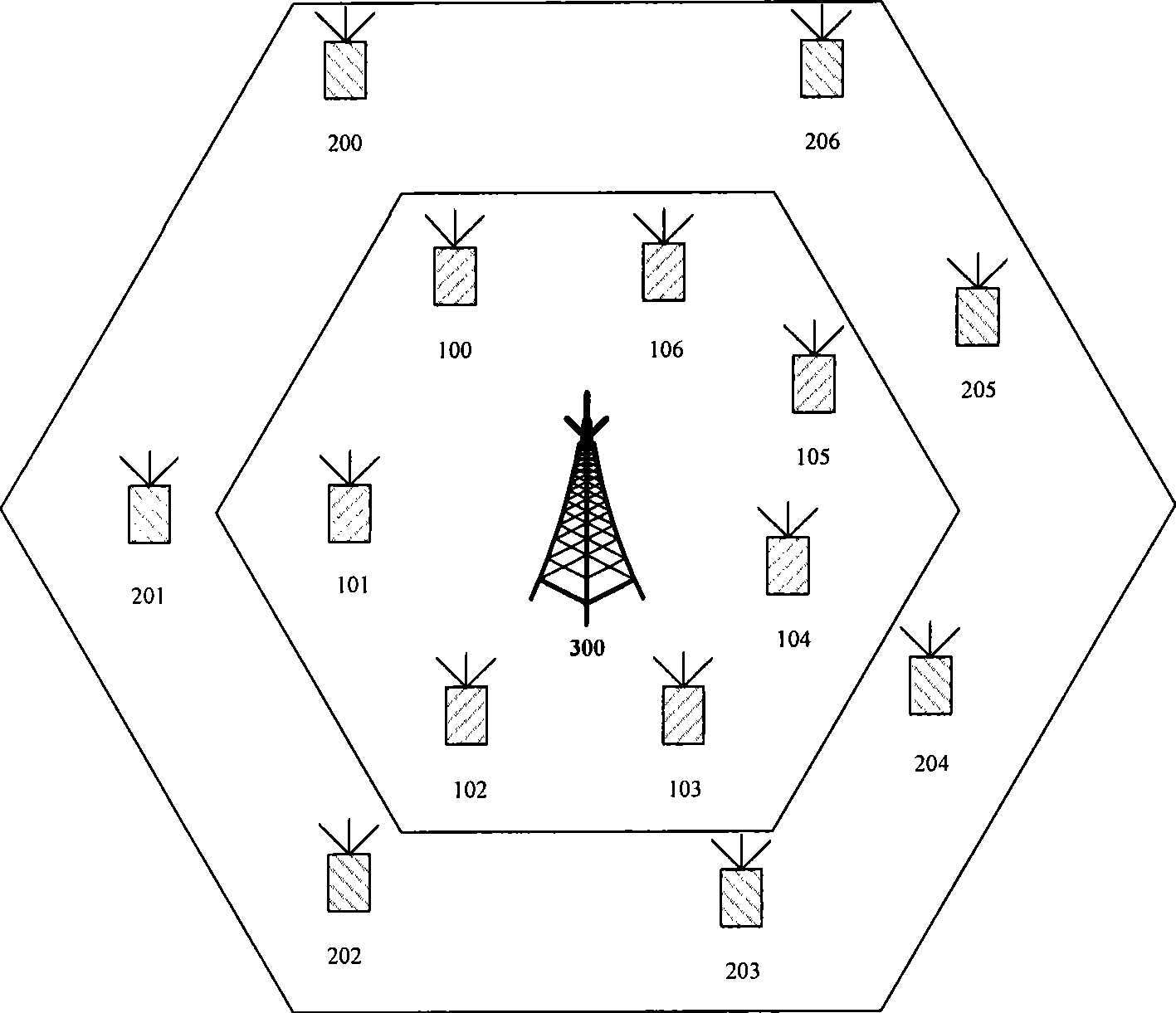

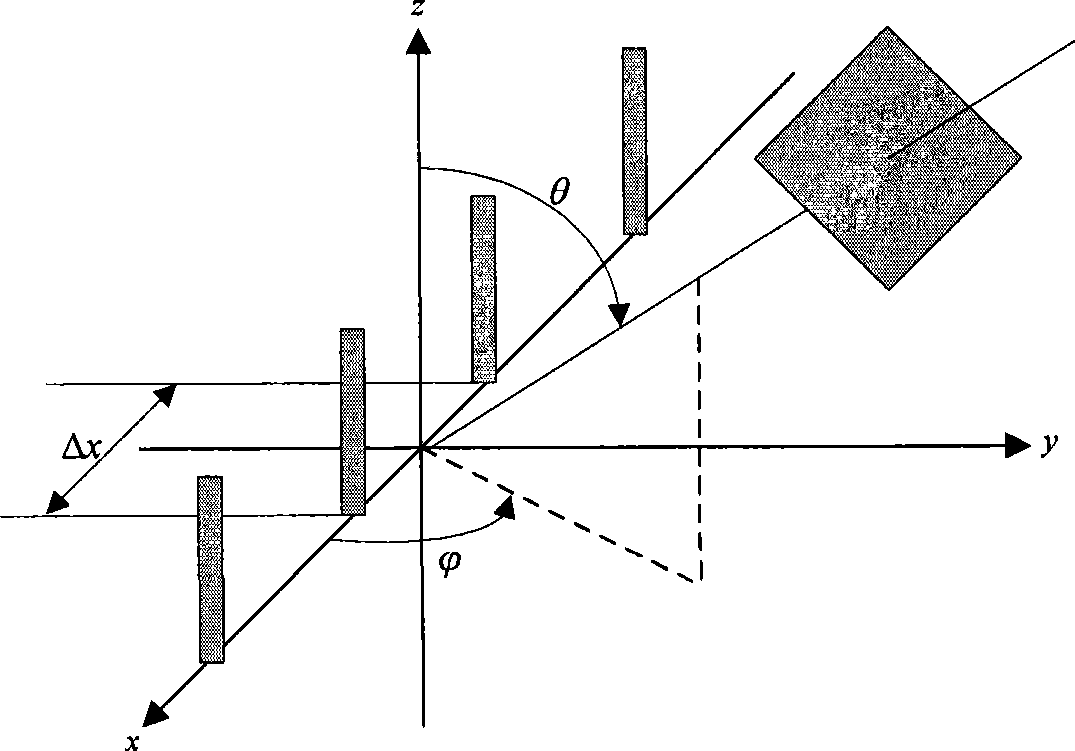

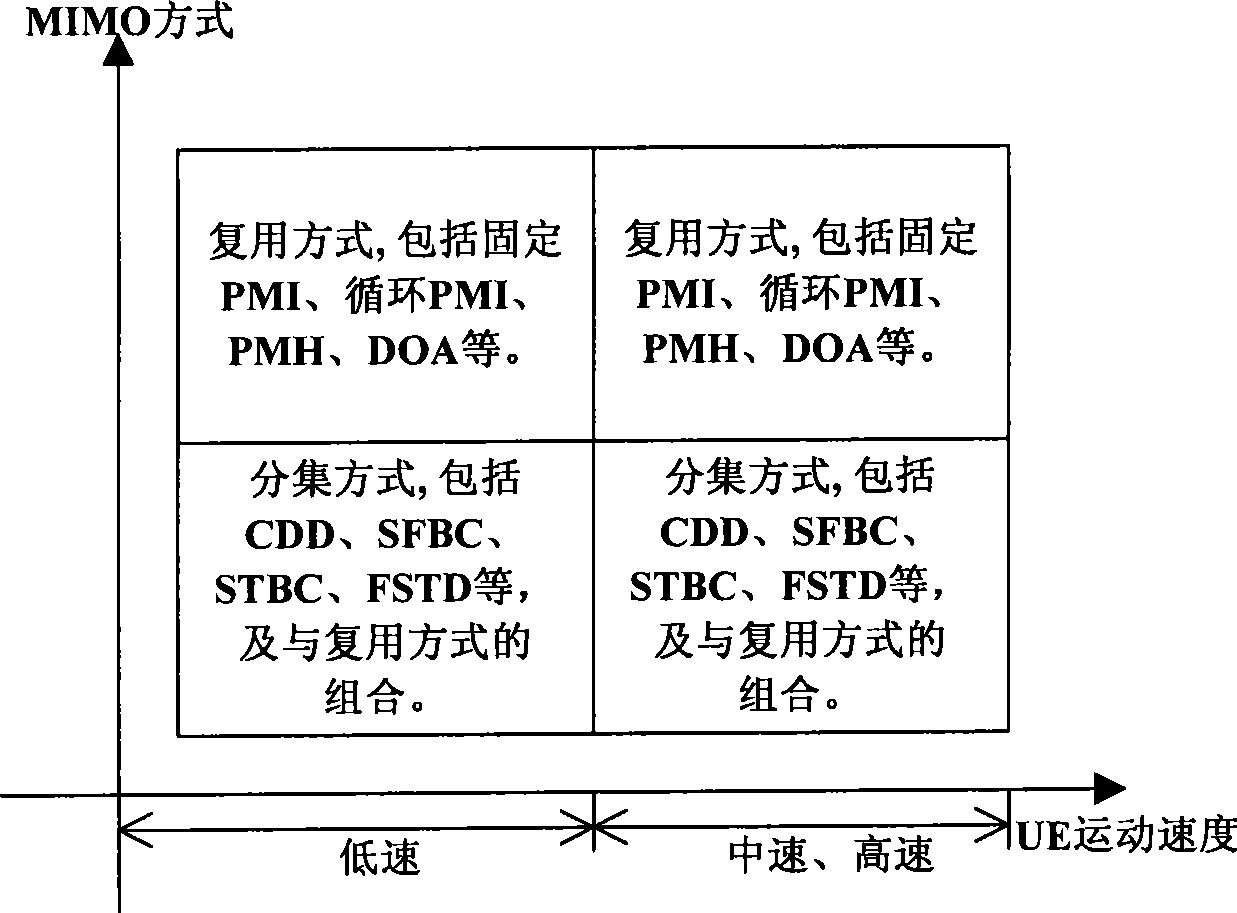

Open loop MIMO method, base station and user equipment based on direction of arrival

Inactive | CN101505205A | Reduce signaling overhead, Reduce system performance, Spatial transmit diversity, Wireless communication, Moving speed, Low speed

The invention discloses an open-loop MIMO method based on direction of arrival, together with a base station and user equipment, for use in wireless transmission. The method comprises the following steps: user equipment is classified as low-, middle-, or high-speed according to its moving speed; low-speed user equipment is set to use a closed-loop MIMO mode, and middle- and high-speed user equipment is set to use an open-loop MIMO mode; the sending end then measures the direction of arrival of the feedback link and estimates the precoding matrix index of the sending link from the measured direction of arrival; the rank of the MIMO system is decided; a MIMO sending mode is decided according to the rank and the precoding matrix index; the MIMO mode and the rank are then sent to the receiving end, which receives them; and finally, the receiving end provides feedback according to the received information. For middle- and high-speed user equipment, the invention provides an open-loop MIMO system and device based on direction of arrival, with a simple design and good system performance.

Owner: SHARP KK
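Two steps of this method, the speed-based mode selection and the estimation of a precoding matrix index from a measured direction of arrival, can be sketched as follows. The speed thresholds, the half-wavelength uniform linear array, the DFT beam codebook, and the assumption that the feedback-link direction of arrival also applies to the sending link are all illustrative choices, not details taken from the patent. Rank decision is not shown.

```python
import cmath
import math

# Hypothetical speed thresholds (km/h); the abstract does not give values.
LOW_SPEED_MAX_KMH = 15.0
MID_SPEED_MAX_KMH = 60.0


def speed_class(speed_kmh):
    if speed_kmh < LOW_SPEED_MAX_KMH:
        return "low"
    return "middle" if speed_kmh < MID_SPEED_MAX_KMH else "high"


def mimo_mode(speed_kmh):
    """Low-speed UEs use closed-loop MIMO; middle- and high-speed UEs use open-loop."""
    return "closed-loop" if speed_class(speed_kmh) == "low" else "open-loop"


def steering_vector(theta_rad, n_antennas, spacing_wavelengths=0.5):
    """Response of a uniform linear array to a plane wave from angle theta."""
    phase = 2 * math.pi * spacing_wavelengths * math.sin(theta_rad)
    return [cmath.exp(1j * phase * k) for k in range(n_antennas)]


def dft_codebook(n_antennas, size):
    """Hypothetical DFT beam codebook; entry i has phase step 2*pi*i/size."""
    return [[cmath.exp(2j * math.pi * i * k / size) / math.sqrt(n_antennas)
             for k in range(n_antennas)] for i in range(size)]


def pmi_from_doa(theta_rad, codebook):
    """Index of the codeword best aligned with the measured direction of arrival."""
    a = steering_vector(theta_rad, len(codebook[0]))
    gains = [abs(sum(ak.conjugate() * wk for ak, wk in zip(a, w))) for w in codebook]
    return max(range(len(codebook)), key=gains.__getitem__)
```

With 4 antennas and an 8-entry codebook, a source at 30° maps to index 2, because the array's per-antenna phase step (π·sin 30° = π/2) equals codeword 2's step of 2π·2/8.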

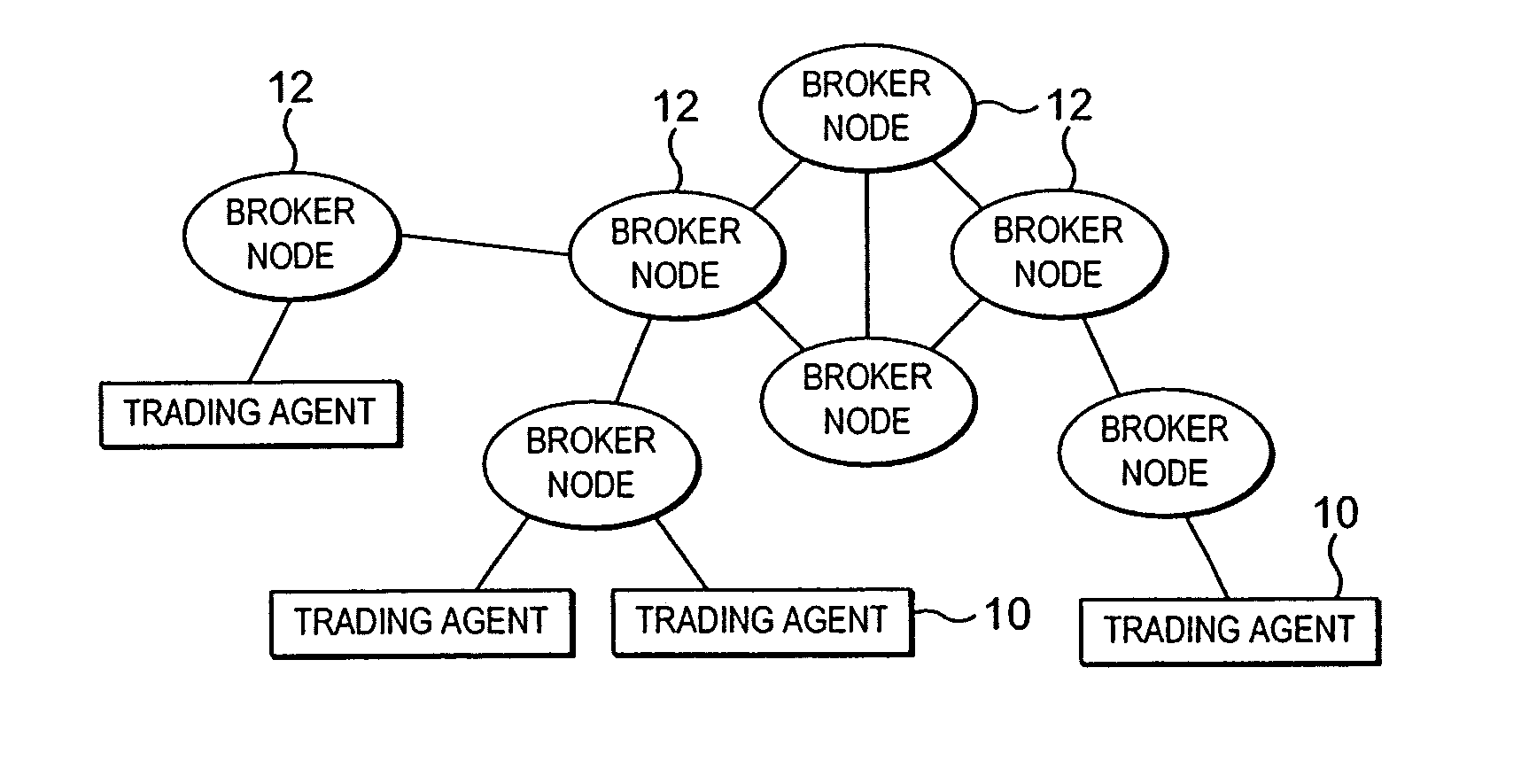

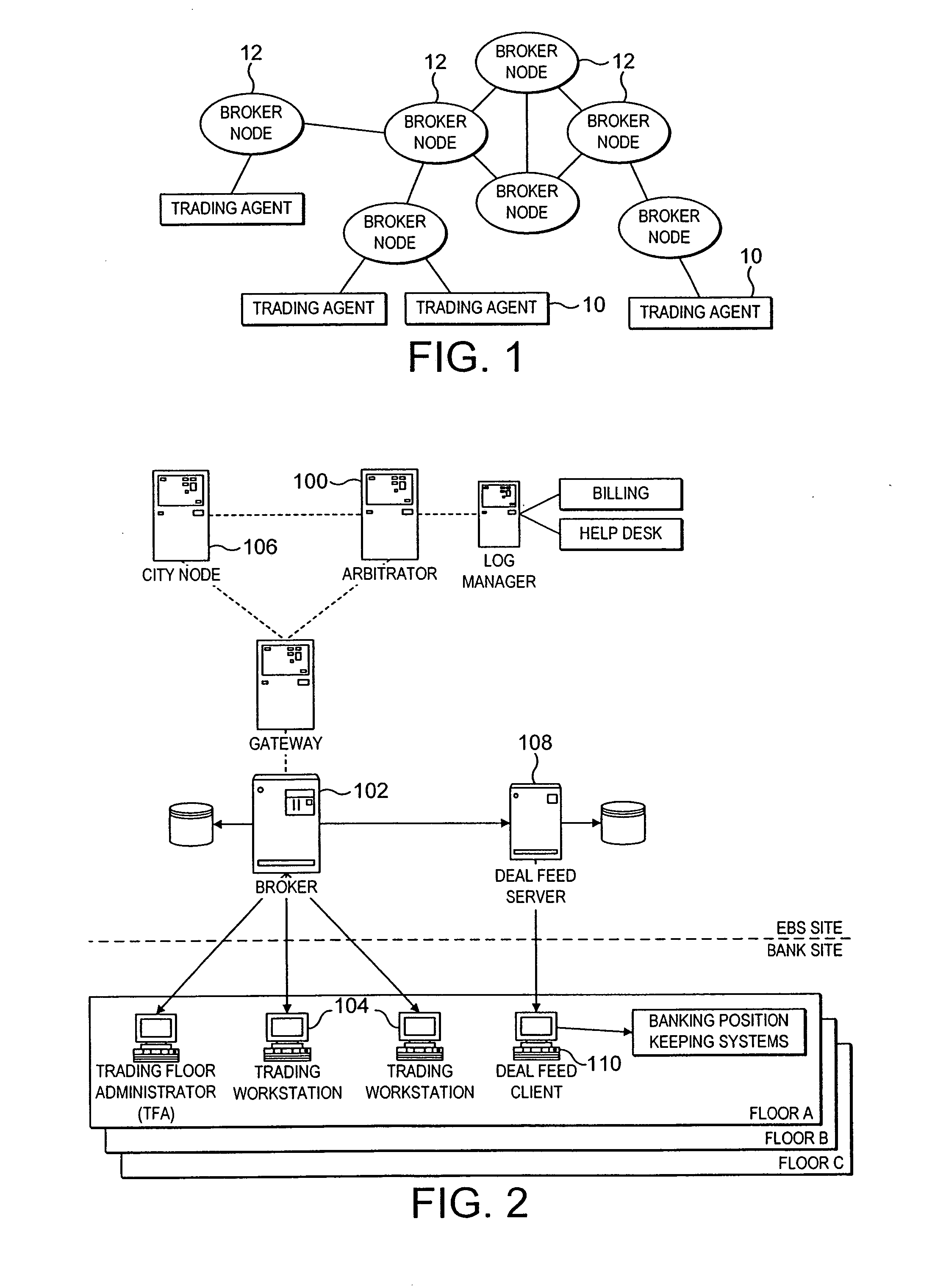

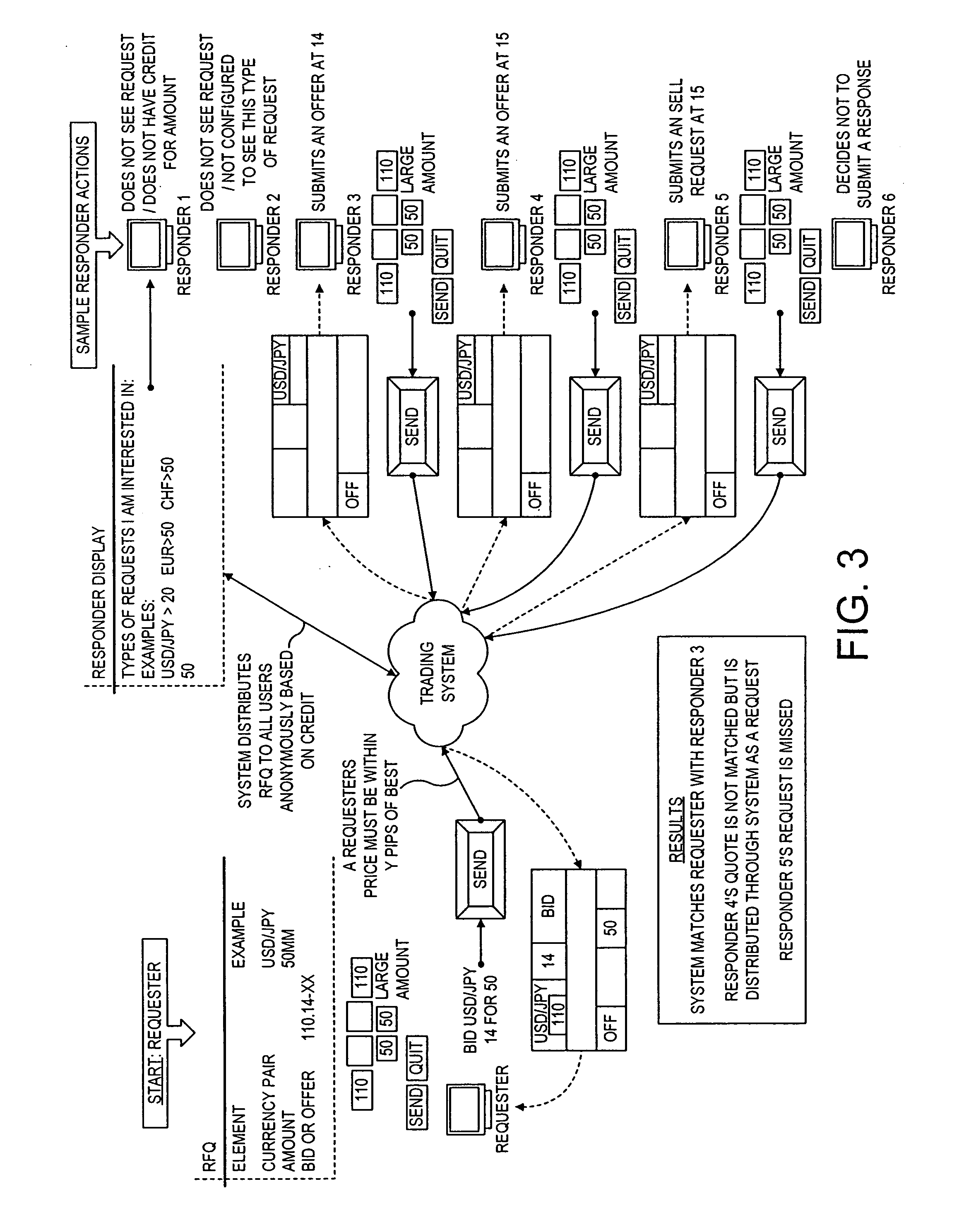

Price display in an anonymous trading system

Inactive | US20050283426A1 | Avoiding unnecessary message, Reduce system performance, Finance, Special data processing applications, Request for quotation, Computer science

An anonymous trading system comprises an interconnected network of broking nodes arranged in cliques, which receive buy and sell orders from trader terminals via connected trading engines, match persistent orders, execute deals, and distribute price information to trader terminals. The system lets a trader call out for a quote (request for quote, RFQ) anonymously. RFQs are transmitted to potential counterparties only if they are within a predetermined price range. Each RFQ includes a price at which the requesting party is willing to deal, but this price is not communicated to potential counterparties. Counterparty responses are matched on a time and price basis, and non-matched responses may be matched with each other. Similarly, non-matched RFQs may be matched with each other.

Owner: EBS GROUP +1
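The RFQ handling in this abstract (forward only RFQs within a predetermined price range, keep the requester's price hidden, and match counterparty responses against it) can be sketched as below. The `reference_price` and `max_deviation` band, and ordering matches by best price and then earliest time, are assumptions; the abstract says only that matching is on a time and price basis.

```python
from dataclasses import dataclass


@dataclass
class RFQ:
    side: str           # "buy" or "sell", from the requester's point of view
    limit_price: float  # price the requester will deal at; never shown to counterparties


@dataclass
class Response:
    counterparty: str
    price: float
    timestamp: float


def should_forward(rfq, reference_price, max_deviation):
    """Forward an RFQ only if its price lies within a predetermined band."""
    return abs(rfq.limit_price - reference_price) <= max_deviation


def match_responses(rfq, responses):
    """Responses acceptable at the hidden limit price, best price first, then earliest."""
    if rfq.side == "buy":
        eligible = [r for r in responses if r.price <= rfq.limit_price]
        return sorted(eligible, key=lambda r: (r.price, r.timestamp))
    eligible = [r for r in responses if r.price >= rfq.limit_price]
    return sorted(eligible, key=lambda r: (-r.price, r.timestamp))
```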

Sound source separation device, speech recognition device, mobile telephone, sound source separation method, and program

Active | US8112272B2 | Reduce system performance, Signal processing, Transducer details, Sound source separation, Frequency spectrum

The device separates the sound source signal of a target sound source from a mixed sound consisting of signals emitted by a plurality of sound sources, without being affected by uneven sensitivity among the microphone elements. A beamformer section 3 of a source separation device 1 performs beamforming that respectively attenuates sound source signals arriving from directions symmetric with respect to the perpendicular to the straight line connecting two microphones 10 and 11. It does so by multiplying the spectrum-analyzed output signals of microphones 10 and 11 by weighting coefficients that are complex conjugates of each other. Power computation sections 40 and 41 compute power spectrum information, and target sound spectrum extraction sections 50 and 51 extract the spectrum information of the target sound source from the difference between the two sets of power spectrum information.

Owner: ASAHI KASEI KK

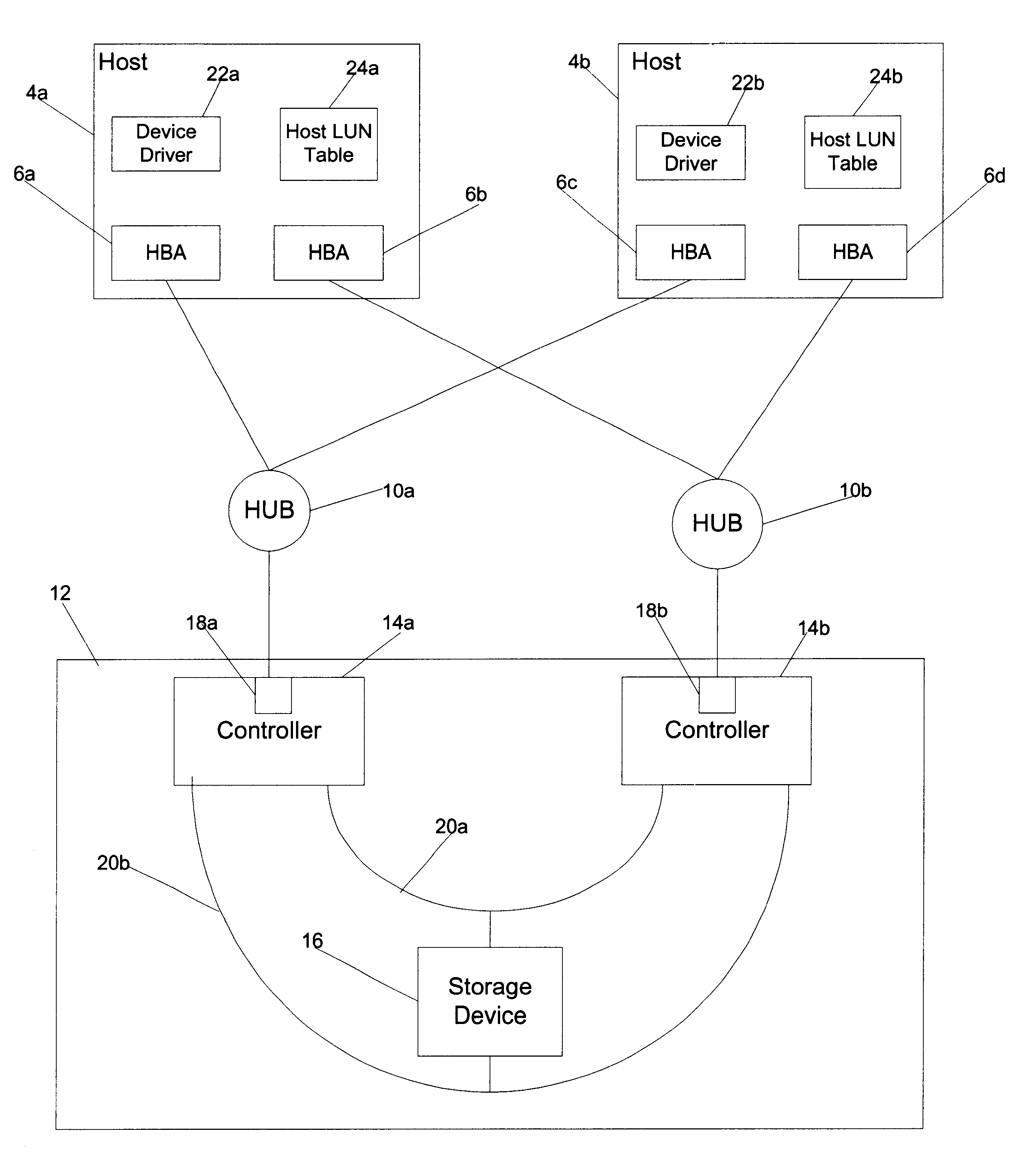

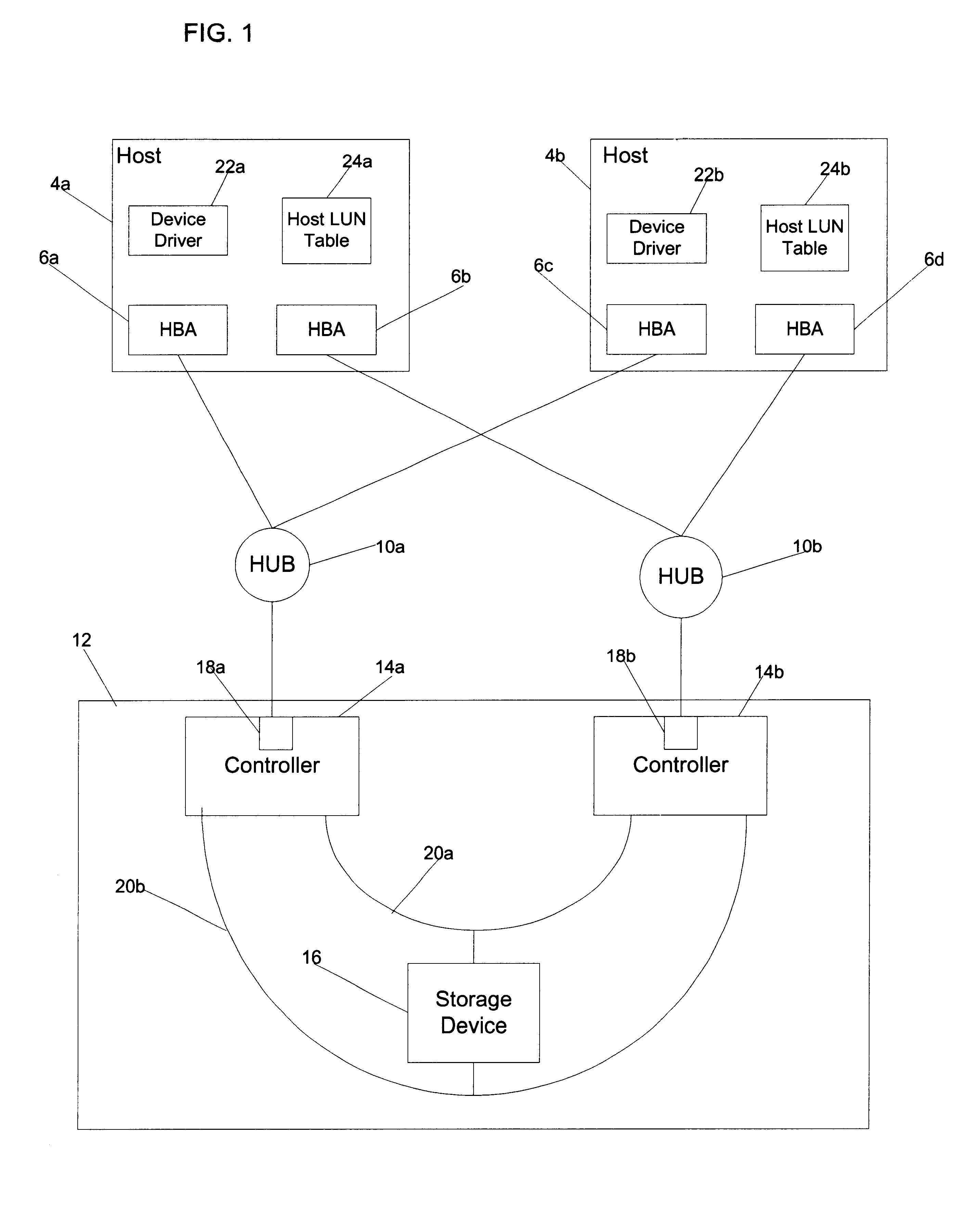

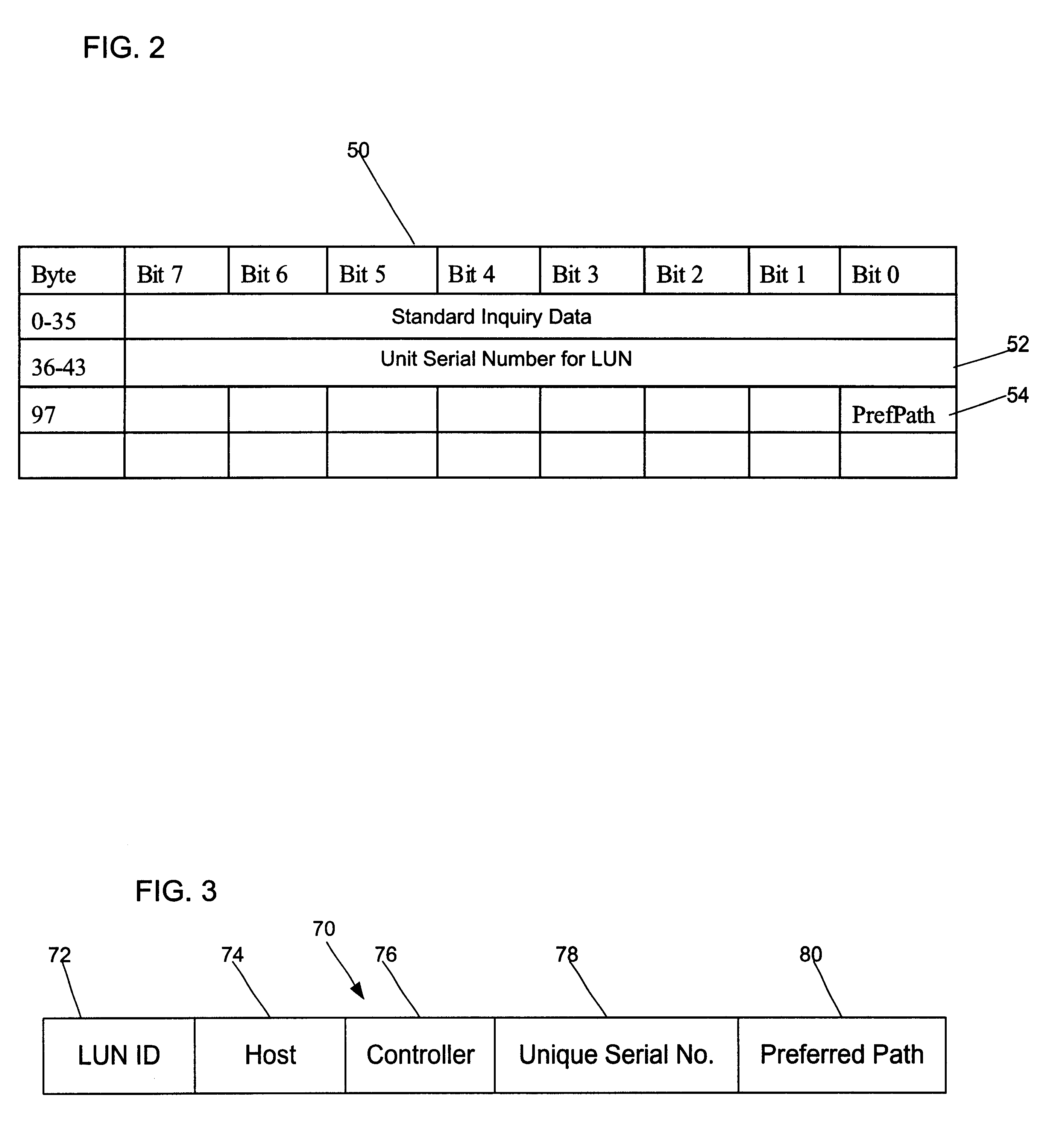

Method, system, and program for modifying preferred path assignments to a storage device

Inactive | US6393535B1 | Increase overhead, Reduce system performance, Input/output to record carriers, Error detection/correction, Computer hardware, Data structure

Disclosed is a method, system, program, and data structure for defining paths for a computer to use to send commands to execute with respect to storage regions in a storage device that are accessible through at least two controllers. For each storage region, one controller is designated as a preferred controller and another as a non-preferred controller. The computer initially sends a command to be executed with respect to a target storage region to the preferred controller for the target storage region and sends the command to the non-preferred controller for the target storage region if the preferred controller cannot execute the command against the target storage region. In response to the non-preferred controller receiving at least one command for the target storage region, the designation is modified to make a current preferred controller the non-preferred controller for the target storage region and a current non-preferred controller the preferred controller for the target storage region.

Owner: IBM CORP
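The failover-and-swap rule in this abstract can be sketched as a per-region path table: commands go to the preferred controller first, fall back to the non-preferred one, and a command reaching the non-preferred controller swaps the two designations for that region. The `can_execute` callback standing in for actual command execution, and all names, are hypothetical; the case where both controllers fail is not handled.

```python
class PathTable:
    """Per-storage-region preferred / non-preferred controller designations."""

    def __init__(self):
        self._paths = {}  # region -> [preferred, non_preferred]

    def assign(self, region, preferred, non_preferred):
        self._paths[region] = [preferred, non_preferred]

    def preferred(self, region):
        return self._paths[region][0]

    def send(self, region, can_execute):
        """Send to the preferred controller first, falling back to the other one.

        When the non-preferred controller receives the command, the two
        designations are swapped for that region.
        """
        preferred, non_preferred = self._paths[region]
        if can_execute(preferred, region):
            return preferred
        self._paths[region] = [non_preferred, preferred]
        return non_preferred
```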

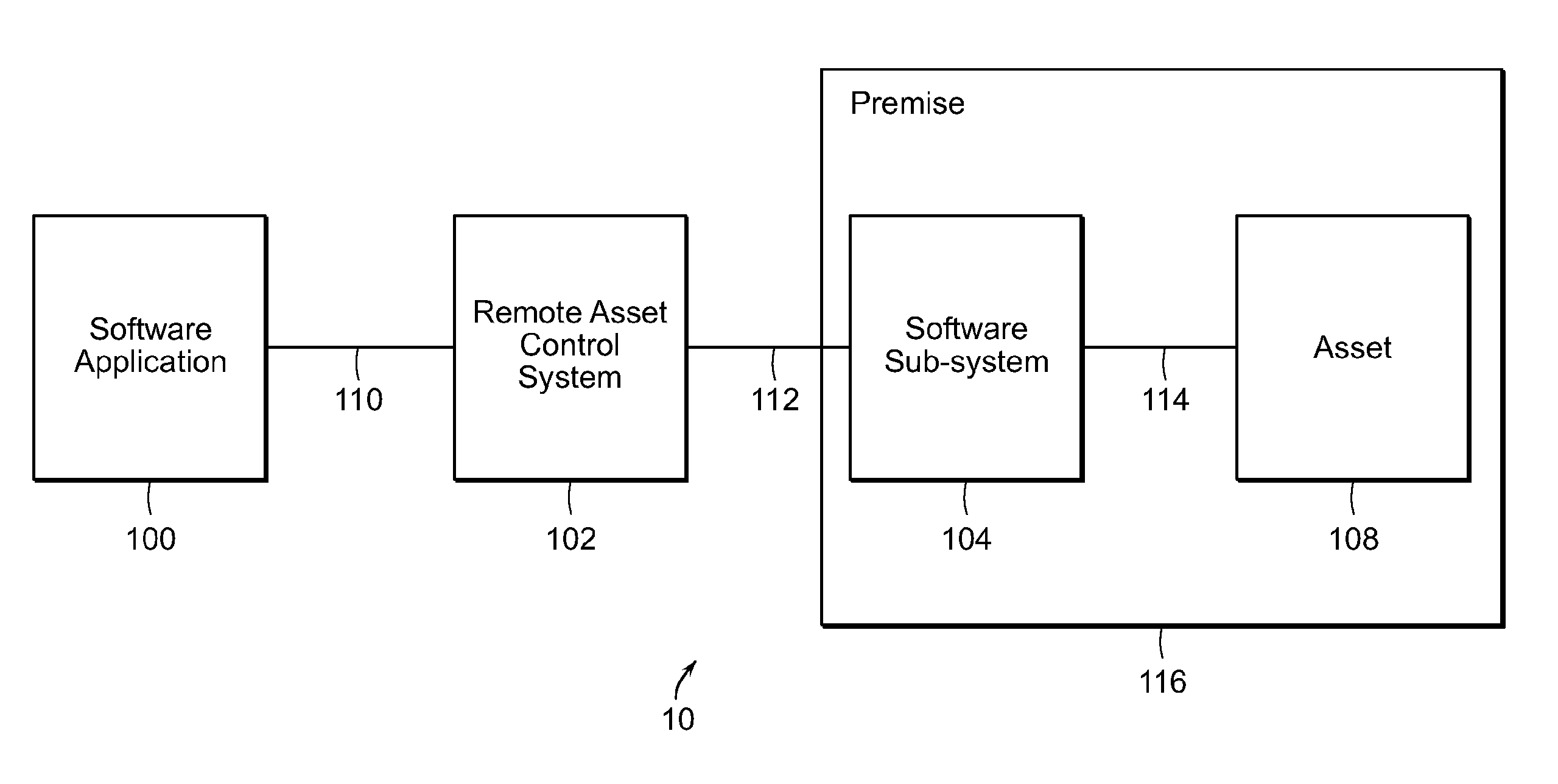

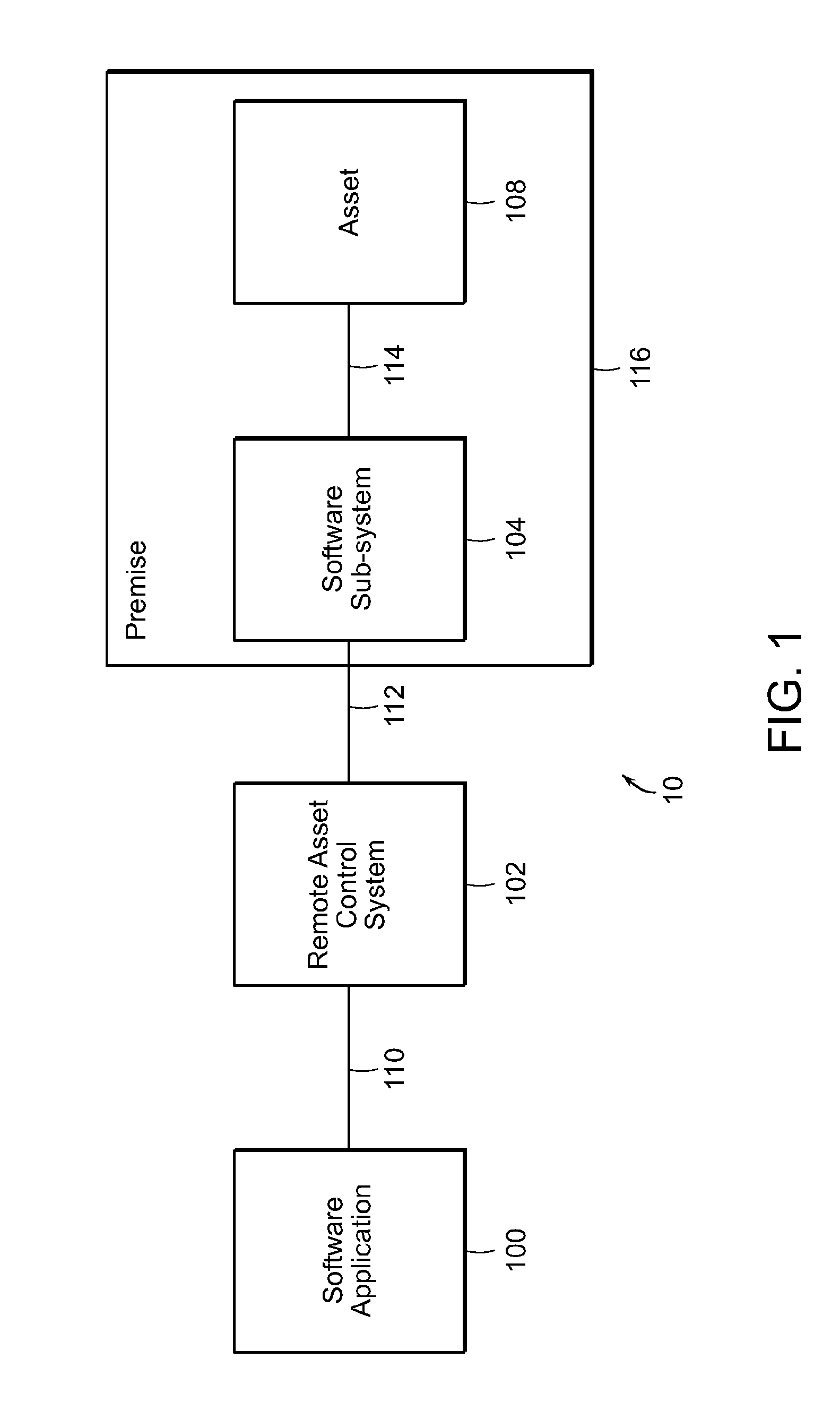

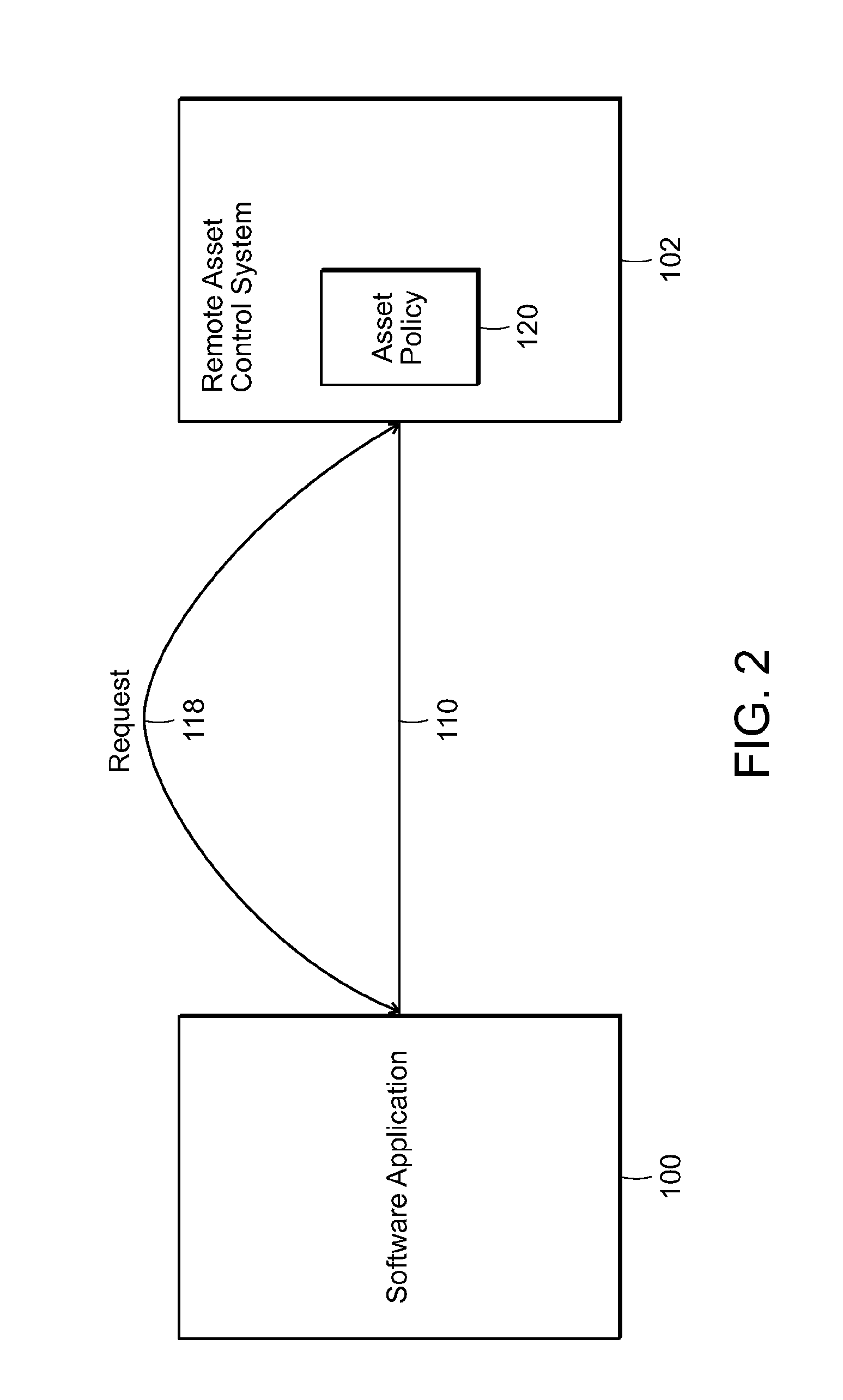

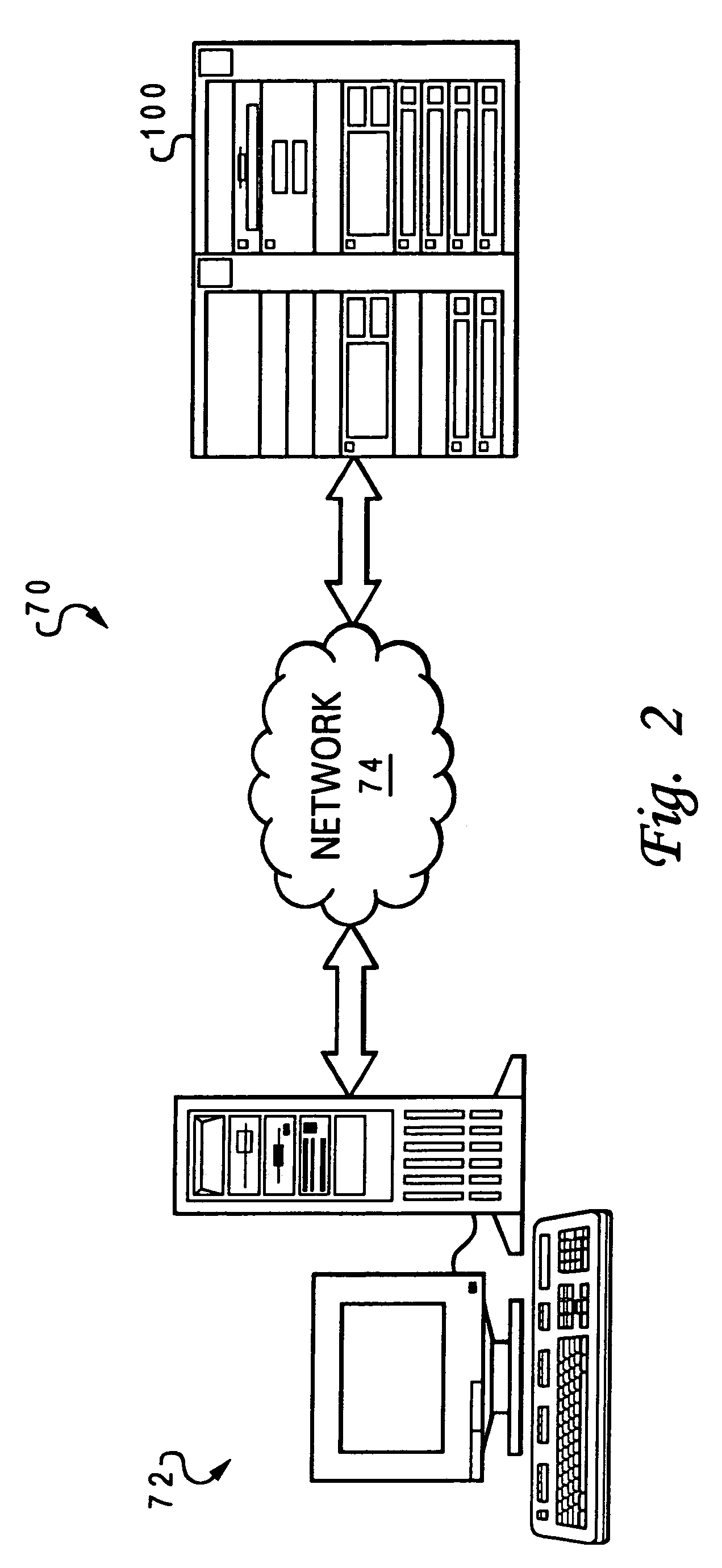

Remote asset control systems and methods

Inactive | US20130325997A1 | Minimize potential, Simple and reliable process, Transmission, System maintenance, Network communication

A remote asset control system optimizes asset performance under a variety of circumstances, such as network communication path failures, software maintenance, software faults, hardware faults, hardware maintenance, computer system maintenance, computer system failure, undetected data errors, configuration errors, human error, power outages, and malicious network attacks. The system provides: a means to create, modify, and delete asset policies; an object-oriented asset policy inheritance scheme that generates composite asset policies; an asset policy transference and caching scheme; condition-driven asset policy enforcement; a permission-based asset policy mechanism for throttling energy or water consumption; simplified asset replacement; a query capability to enumerate actual asset deviance from the currently enforced composite asset policy; real-time control asset policies; atomic activation and deactivation of asset policies that are part of the policy inheritance hierarchy, ensuring composite policy integrity; and multi-tiered telemetry caching and transference.

Owner: ALEKTRONA CORP
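Two of the listed features, policy inheritance producing a composite policy and a query that enumerates deviance from it, can be sketched with Python's standard `collections.ChainMap`, where lookups fall through from the most specific level to its parents. The three-level site/building/asset hierarchy and the setting names are hypothetical.

```python
from collections import ChainMap


def composite_policy(*levels):
    """Merge policy levels, most specific first; a child setting overrides its parents."""
    return ChainMap(*levels)


def deviance(actual, policy):
    """Settings whose observed value differs from the enforced composite policy."""
    return {key: (actual.get(key), wanted)
            for key, wanted in policy.items() if actual.get(key) != wanted}
```

For example, a building-level power cap of 30 kW overrides a site-level cap of 50 kW, while the site's water cap still applies to the asset.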

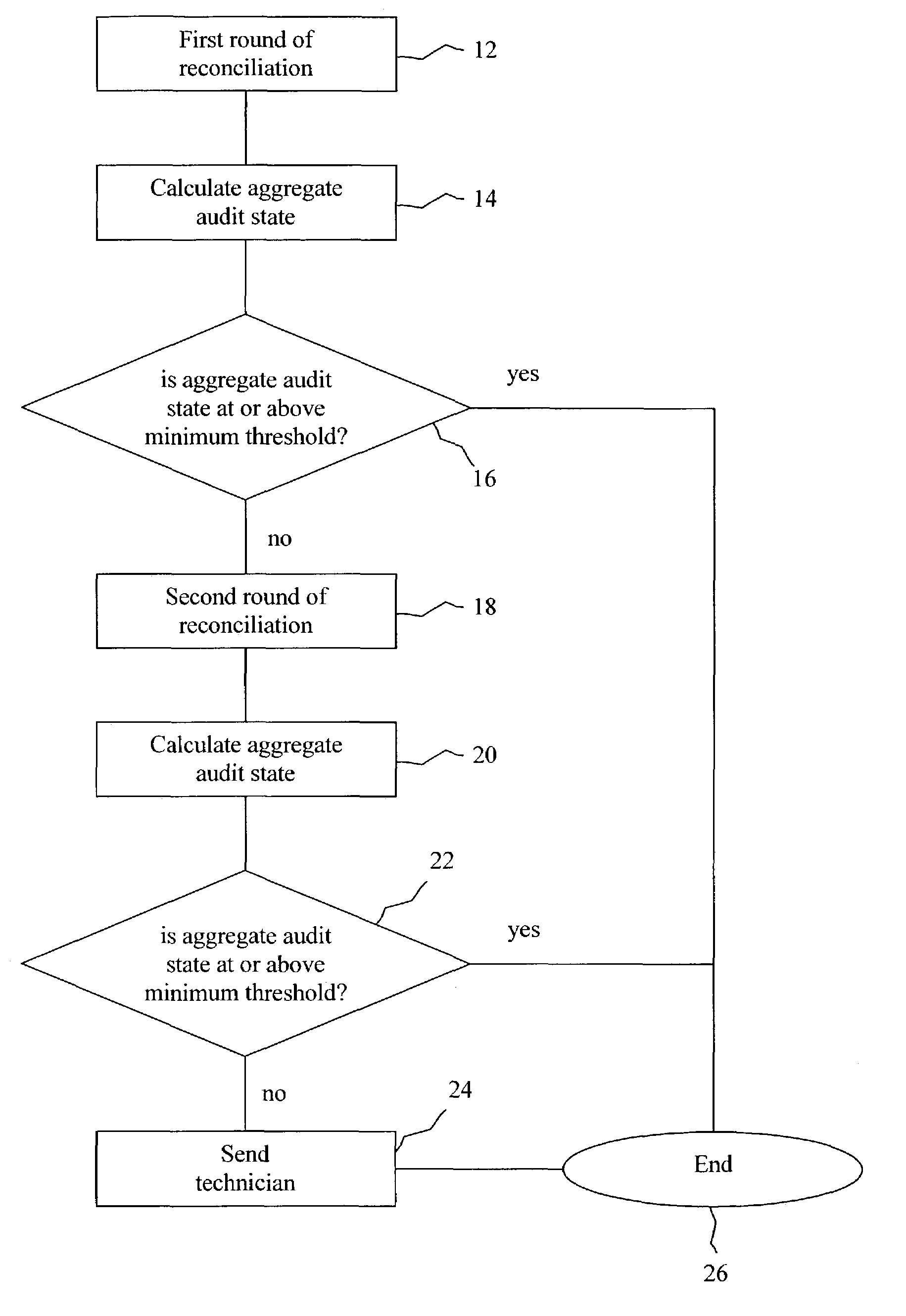

Network model audit and reconciliation using state analysis

Inactive | US7058861B1 | Reduced availability, Reduce system performance, Error detection/correction, Digital computer details, Network model, Data store

A method for auditing and reconciliation of a network with a network model that includes identifying an audit state for each network resource included in the network model, storing the audit state for each resource, coupling the stored audit state information with information regarding the resource, reporting a calculated value reflecting the aggregate audit states of the resources, monitoring the calculated value, and triggering a reconciliation process when the calculated value drops below a defined threshold. The calculated value can be a best-case aggregate accuracy percentage, worst-case aggregate accuracy percentage, or presumed average aggregate accuracy percentage. The audit state can be stored as an additional field in the primary data store for the network model or in a separate data store that is associated with the primary data store. The audit state can be unconfirmed, confirmed, or suspect. The audit state is used to trigger and/or measure audit and reconciliation processes.

Owner: SPRINT CORPORATION
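The three aggregate accuracy values and the threshold trigger can be sketched as below. The abstract names the values but not their formulas, so the definitions here are assumptions: confirmed resources count as accurate and suspect ones as inaccurate; the best case treats every unconfirmed resource as accurate, the worst case treats none as accurate, and the presumed average assumes unconfirmed resources are accurate at the same rate as audited ones.

```python
from collections import Counter

AUDIT_STATES = ("confirmed", "unconfirmed", "suspect")


def aggregate_accuracy(states):
    """Best-case, worst-case and presumed-average accuracy (assumed definitions).

    states must be a non-empty sequence of audit states.
    """
    counts = Counter(states)
    unknown = set(counts) - set(AUDIT_STATES)
    if unknown:
        raise ValueError(f"unknown audit states: {sorted(unknown)}")
    total = sum(counts.values())
    confirmed, unconfirmed, suspect = (counts[s] for s in AUDIT_STATES)
    audited = confirmed + suspect
    # If nothing has been audited yet, the presumed average falls back to the best case.
    rate = confirmed / audited if audited else 1.0
    return {
        "best": (confirmed + unconfirmed) / total,
        "worst": confirmed / total,
        "presumed": (confirmed + rate * unconfirmed) / total,
    }


def needs_reconciliation(accuracy, threshold):
    """Trigger reconciliation when the monitored value drops below the threshold."""
    return accuracy < threshold
```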

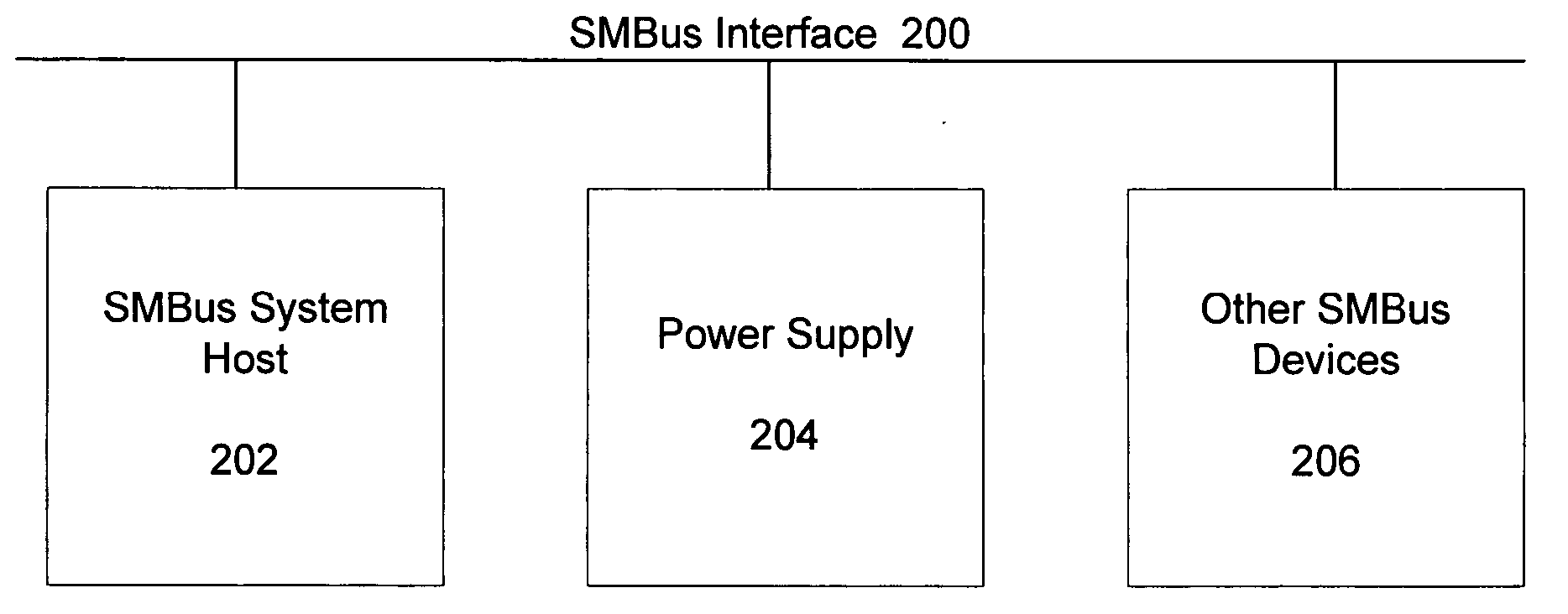

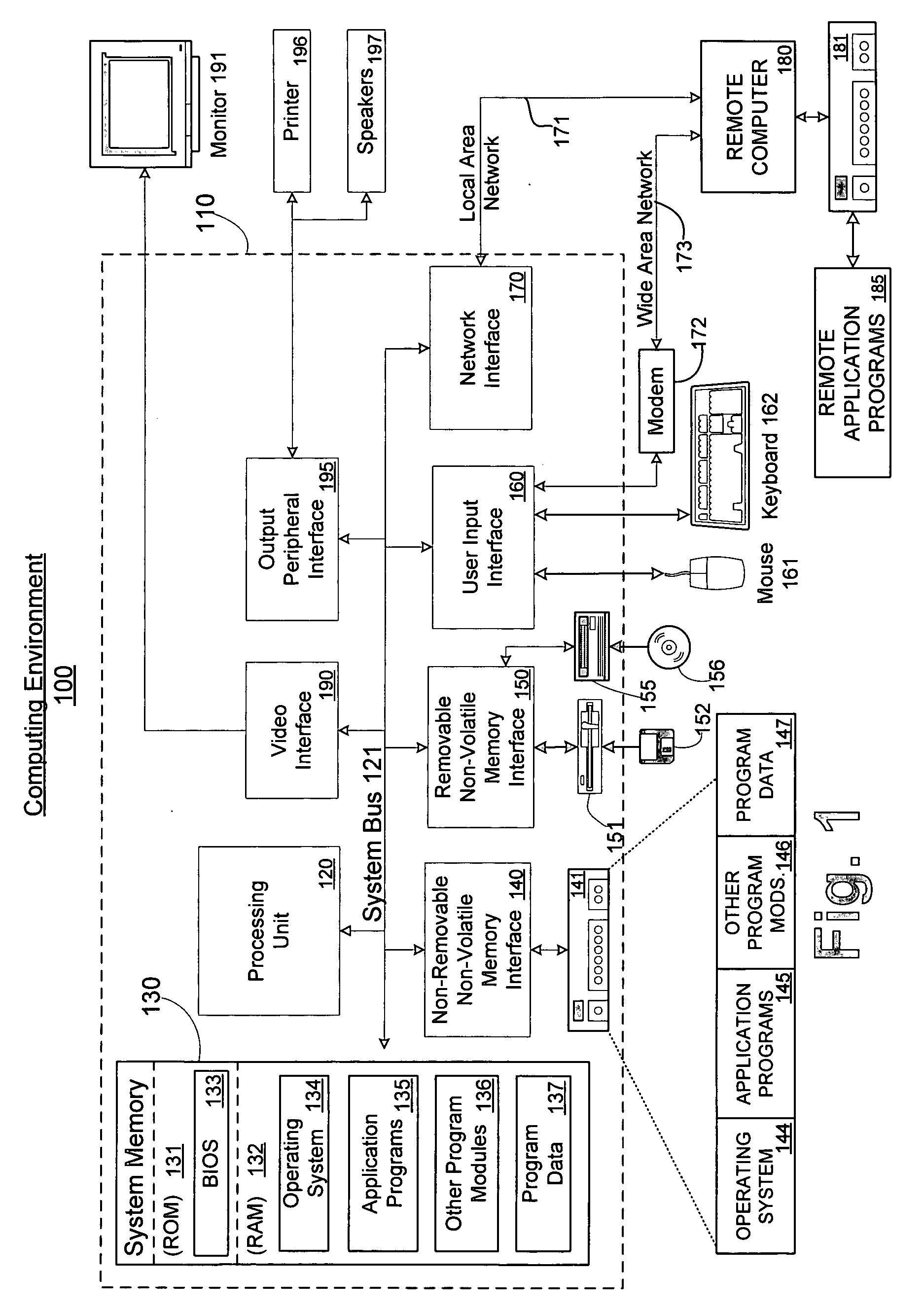

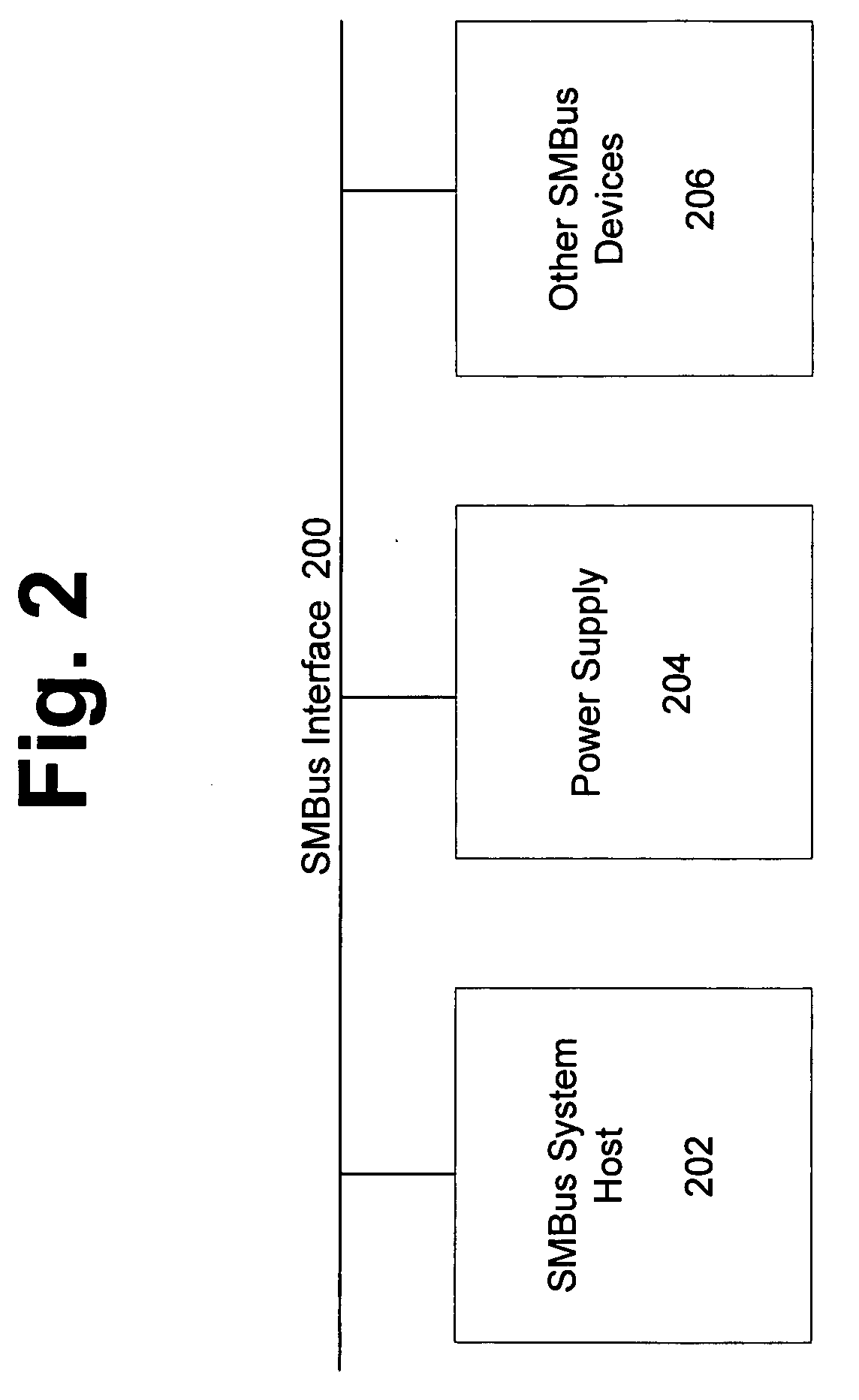

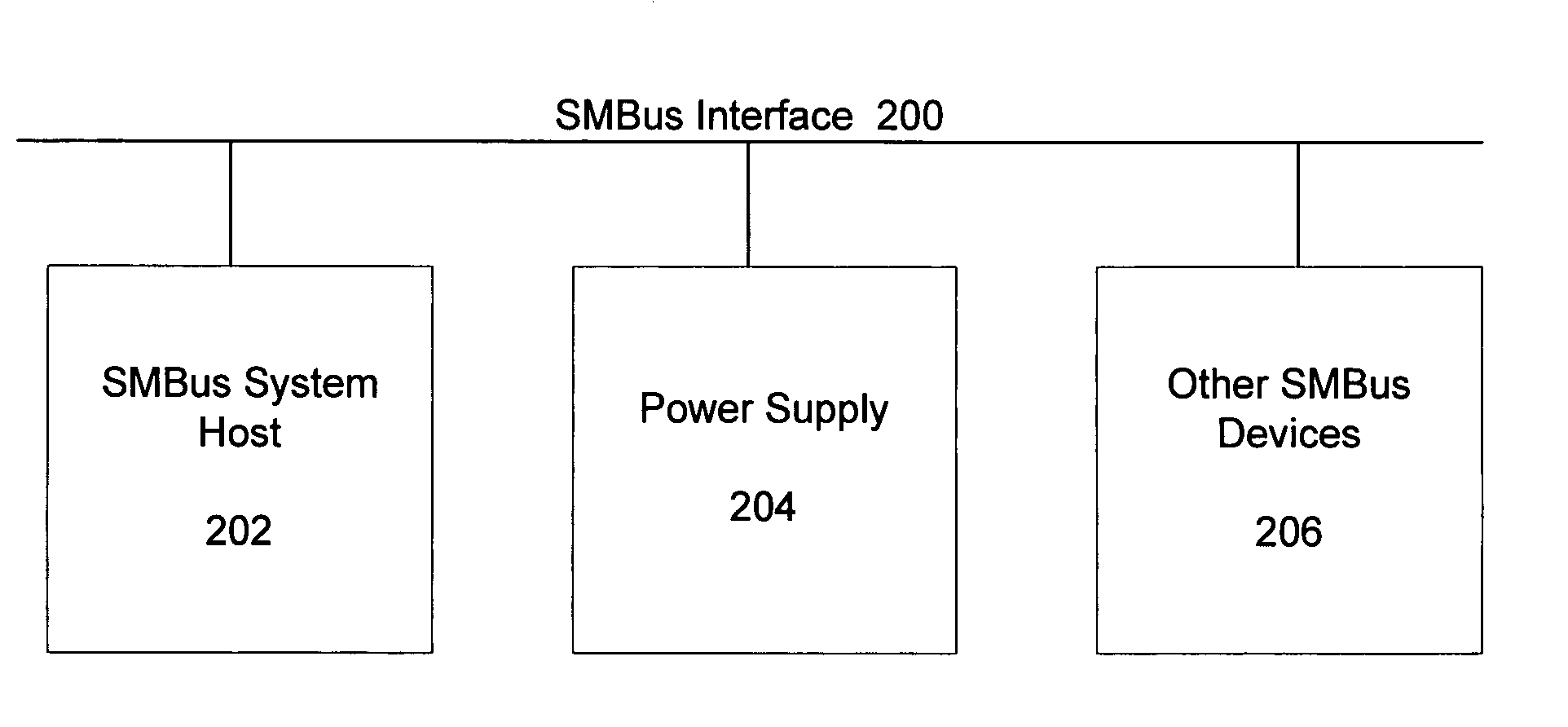

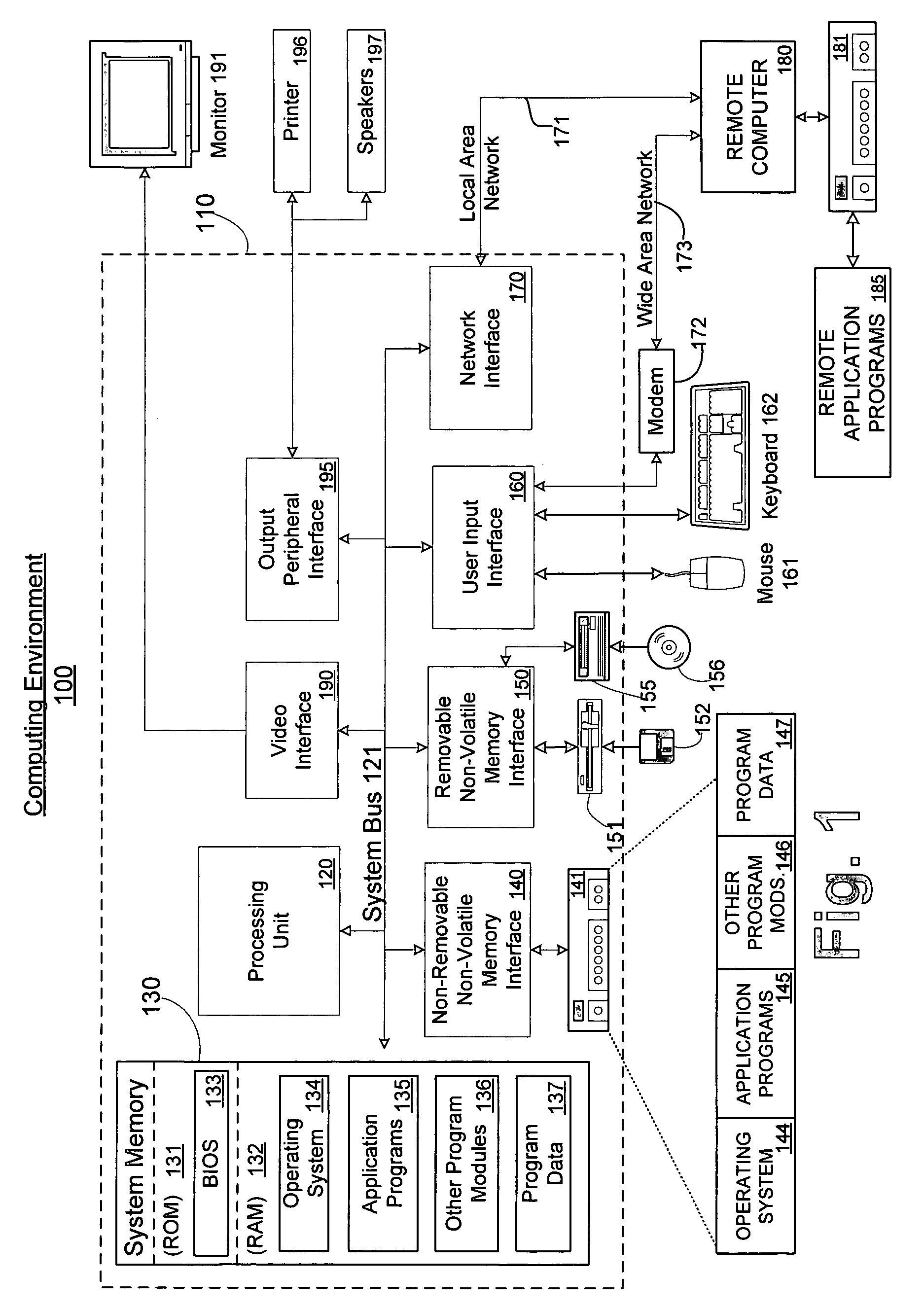

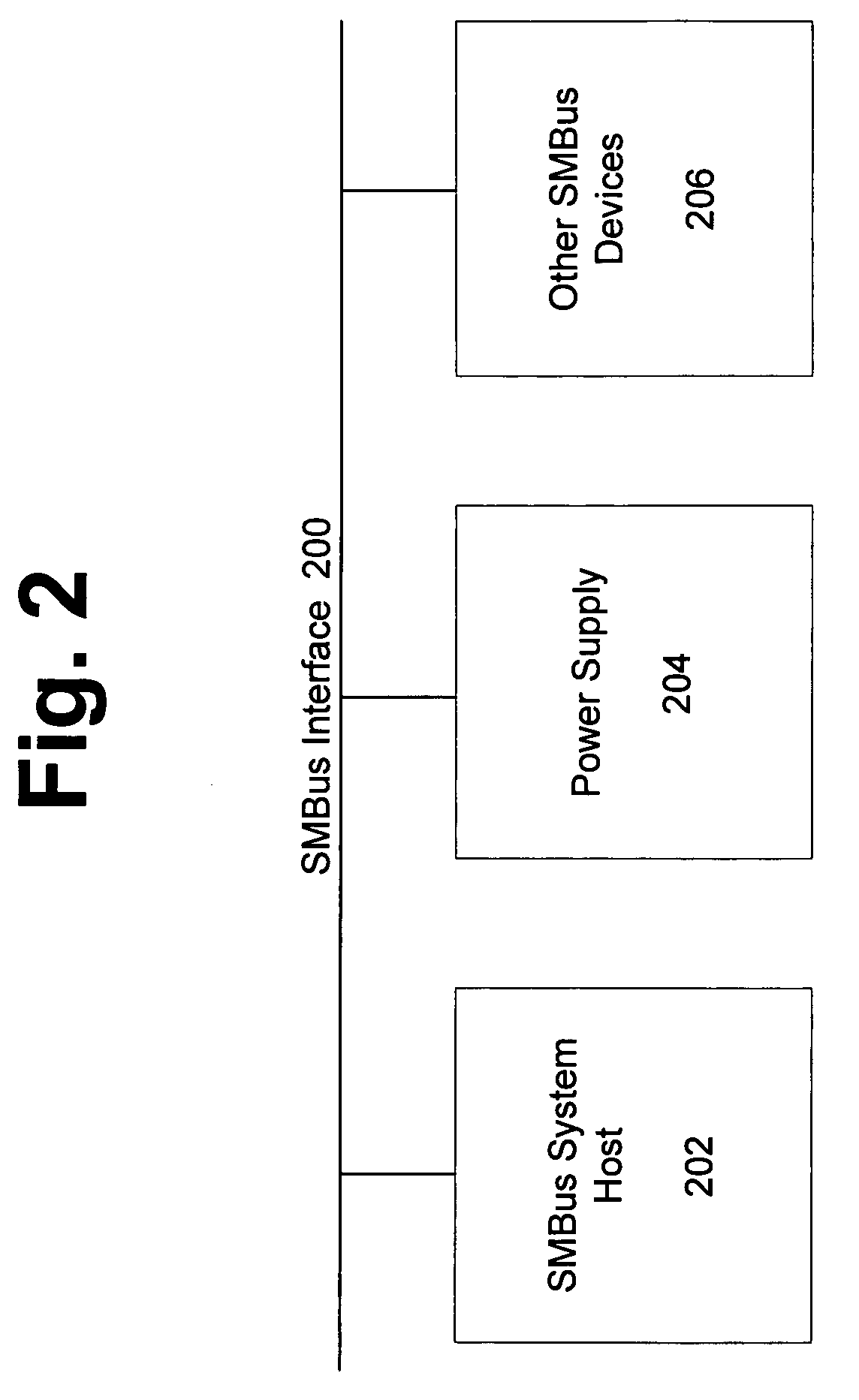

Method for making power supplies smaller and more efficient for high-power PCs

Active | US20050182986A1 | Capacity of power supply, Reduce system performance, Energy efficient ICT, Volume/mass flow measurement, Low power dissipation, Software

Systems and methods for reducing the size of a power supply in a computing device by monitoring activities to maintain the load on the power supply below a predetermined threshold. Through a set of software drivers, various components are placed into low power states in order to reduce the load on the power supply. The methods allow manufacturers to utilize smaller power supplies that are better fitted for the actual operating conditions of the computing device, rather than a large power supply designed for worst case conditions.

Owner: MICROSOFT TECH LICENSING LLC
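The load-capping idea (placing components into low-power states so the projected load stays below the supply's threshold) can be sketched as a greedy planner. The per-component full/low power figures and the "lowest priority number is throttled first" policy are hypothetical; the abstract does not describe how the drivers choose which components to throttle. If every component is throttled and the load is still too high, the returned load simply remains above the budget.

```python
def plan_power_states(components, budget_w):
    """Choose components to put into low-power states until the projected load
    fits under the supply budget.

    components: list of (name, full_power_w, low_power_w, priority); lower
    priority numbers are throttled first (a hypothetical policy).
    Returns (names placed in a low-power state, projected load in watts).
    """
    load = sum(full for _, full, _, _ in components)
    throttled = []
    for name, full, low, _ in sorted(components, key=lambda c: c[3]):
        if load <= budget_w:
            break
        load -= full - low
        throttled.append(name)
    return throttled, load
```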

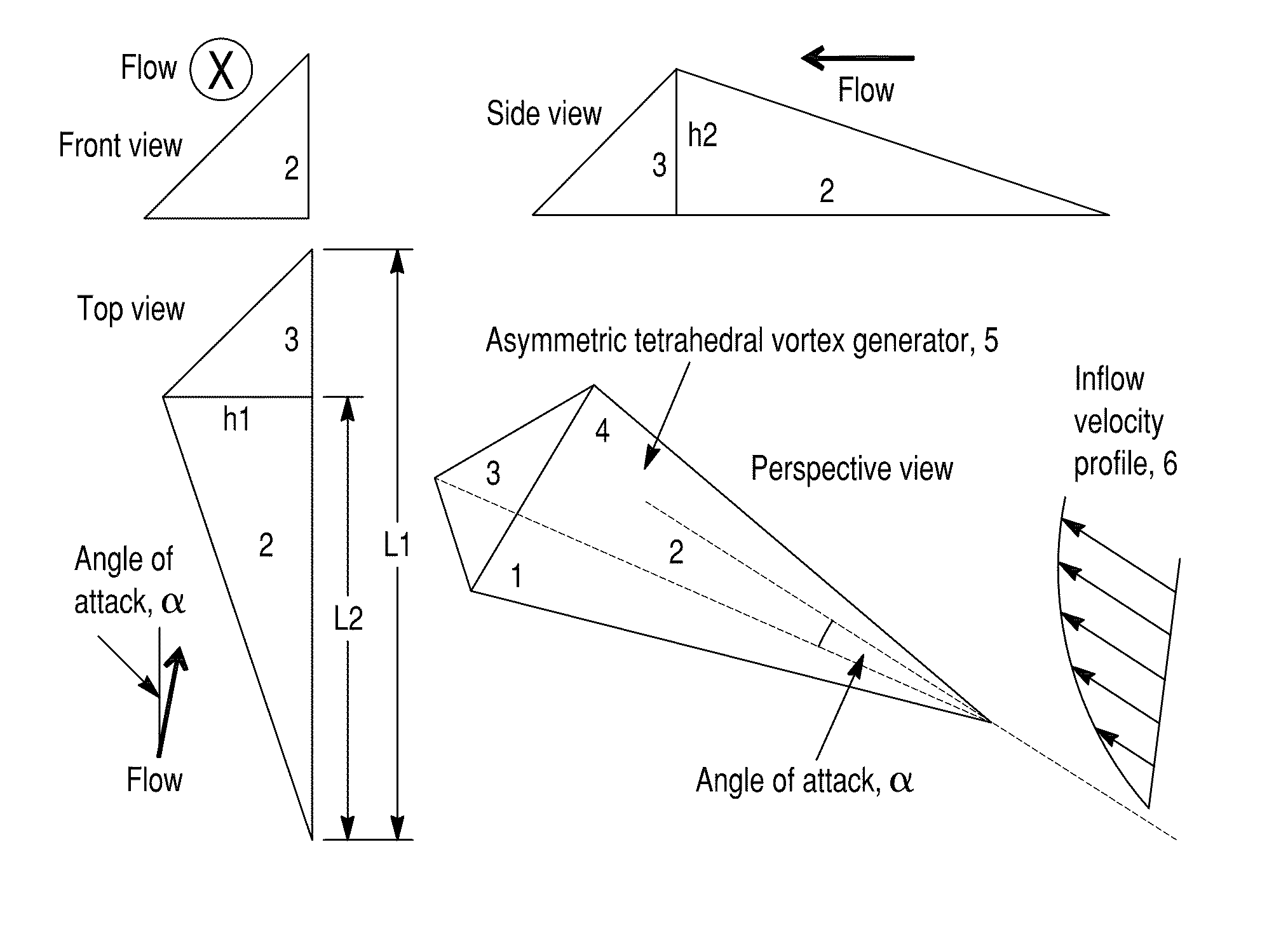

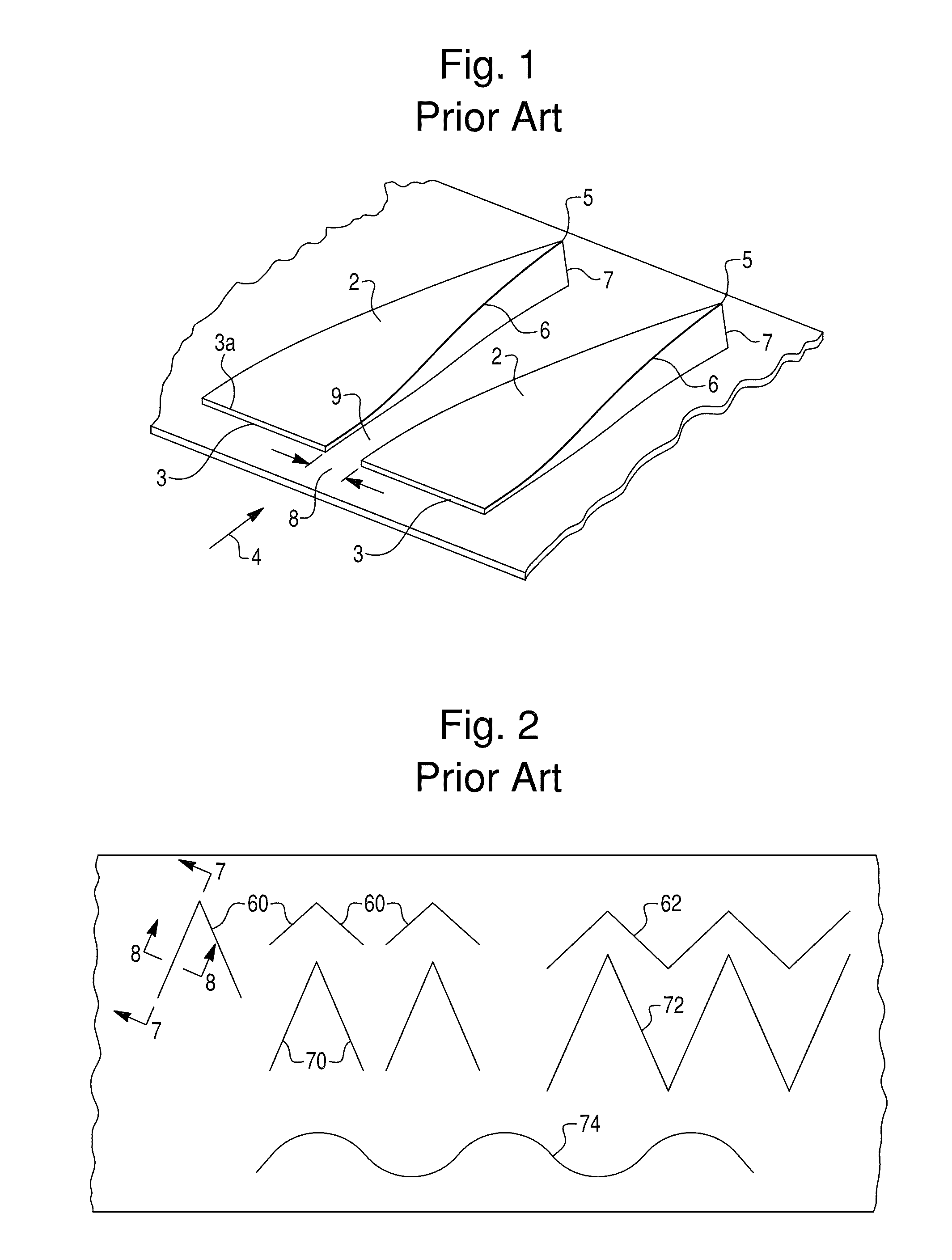

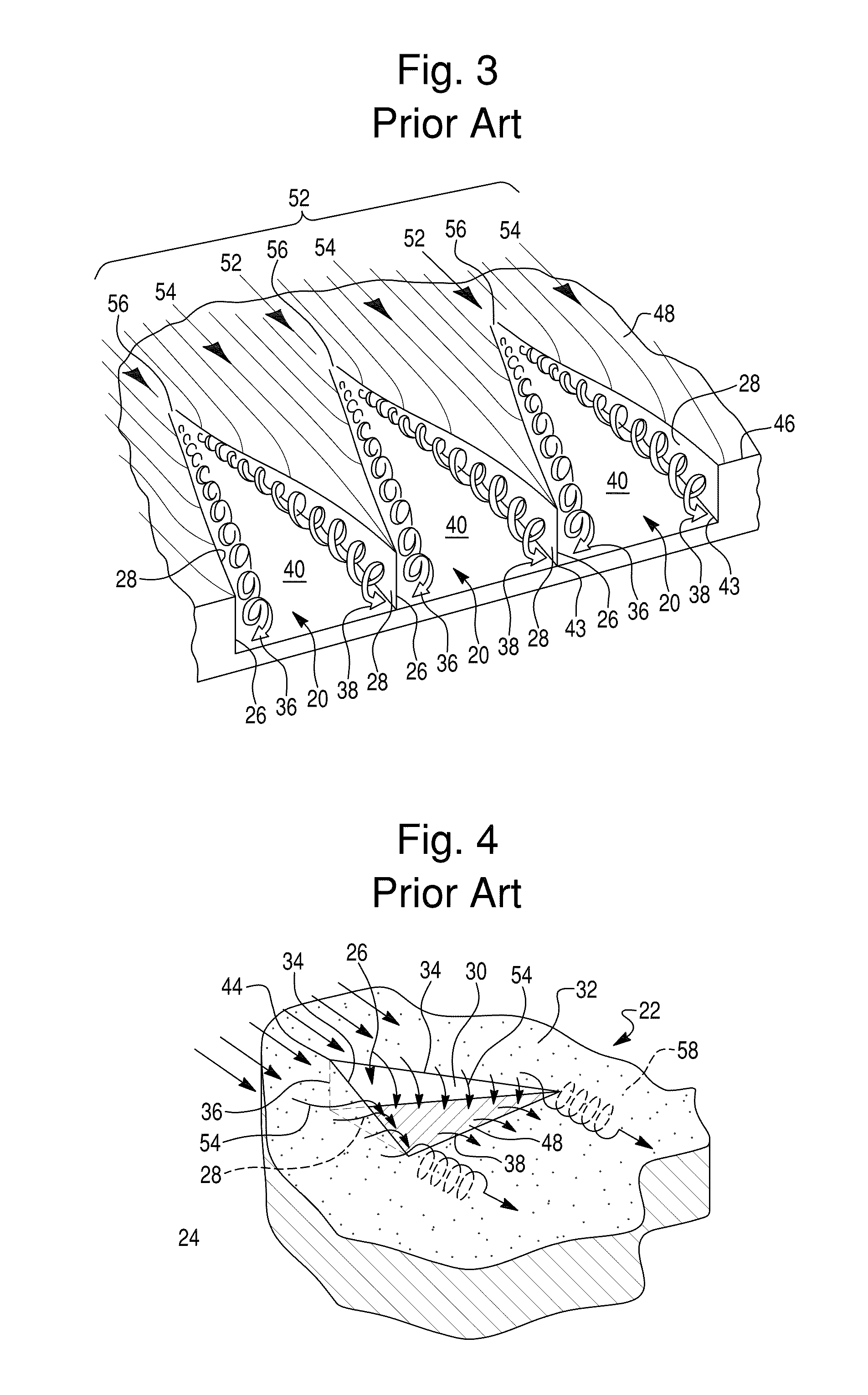

Low drag asymmetric tetrahedral vortex generators

Active | US20110315248A1 | Reduce resistance, Preventing local scour, Flow mixers, Servomotor components, Hydraulic structure, Engineering

An asymmetric tetrahedral vortex generator controls three-dimensional flow separation over an underlying surface by using the generated vortex to bring high-momentum outer-region flow to the wall of the structure. The energized near-wall flow remains attached to the structure surface significantly further downstream. The device produces a swirling flow with a single stream-wise rotation direction that migrates span-wise. When optimized, the device produces very low base drag on structures by keeping the flow attached on their leeside surface. This device can: on hydraulic structures, prevent local scour, deflect debris, and reduce drag; improve heat transfer between a flow and an adjacent surface, e.g., in a heat exchanger or an air conditioner; reduce drag, flow separation, and associated acoustic noise on airfoils, hydrofoils, cars, boats, submarines, rotors, etc., under subsonic or supersonic conditions; and reduce radar signatures by using faceted edges with angles amenable to stealth technologies.

Owner: APPLIED UNIV RES

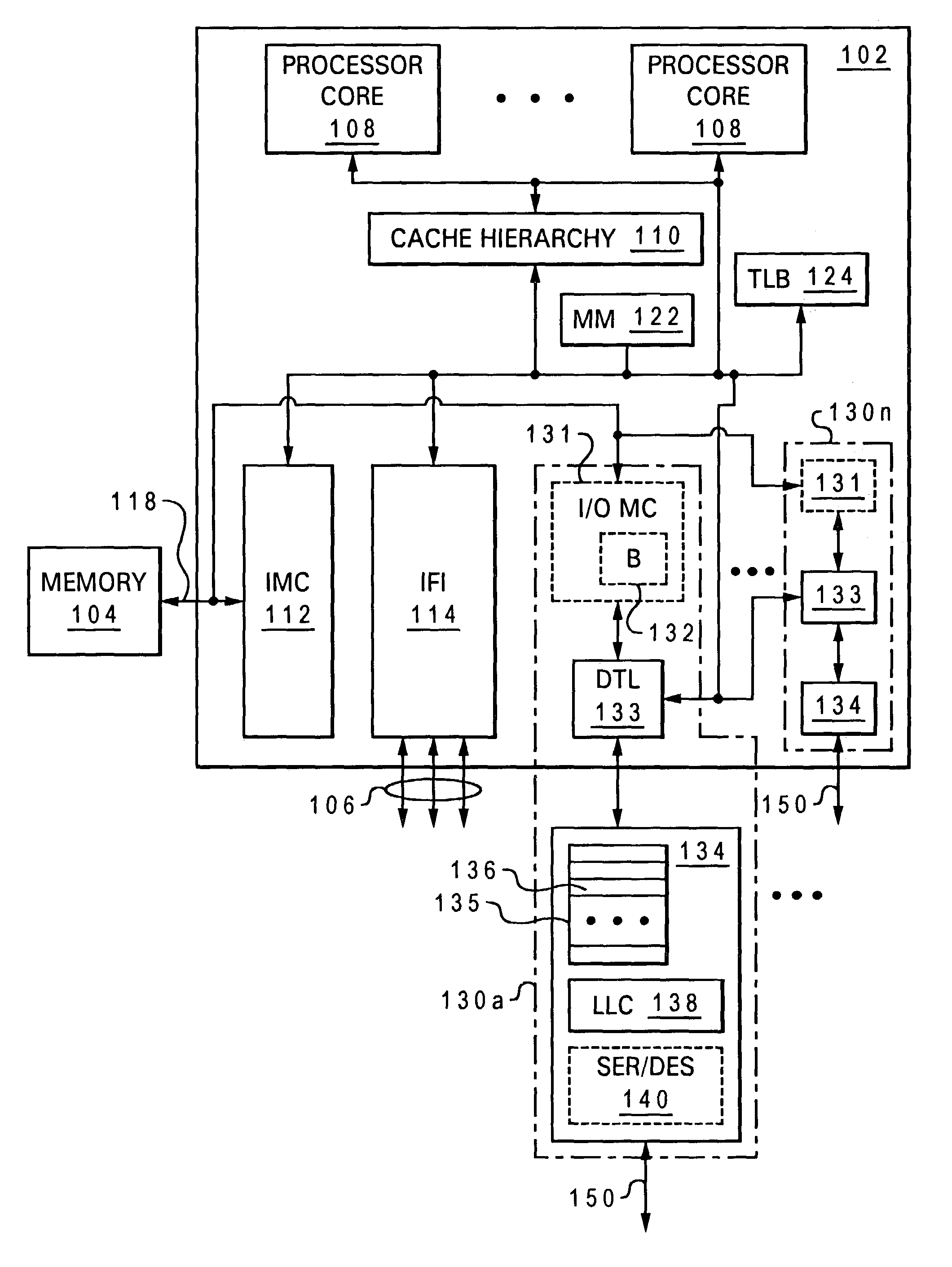

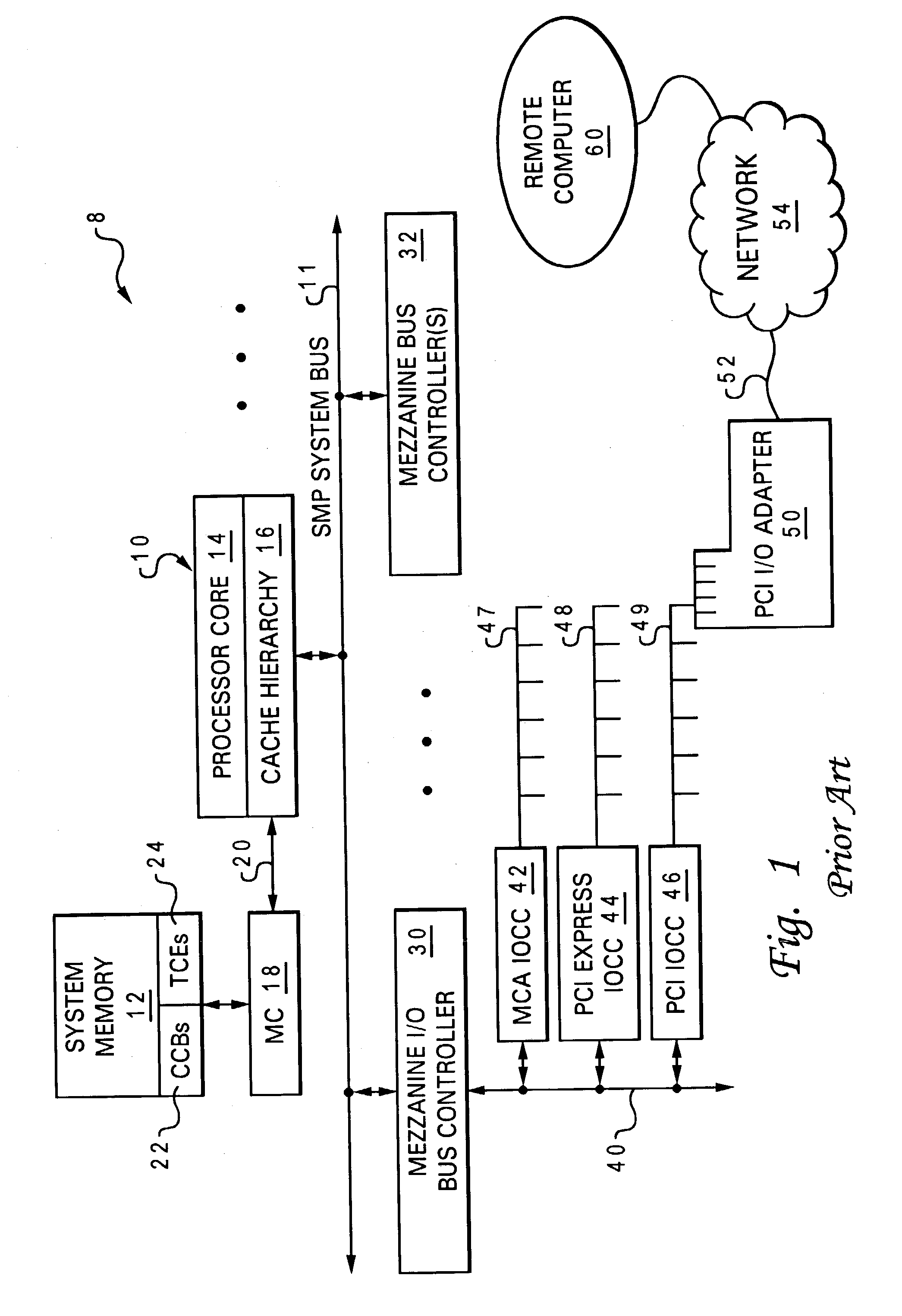

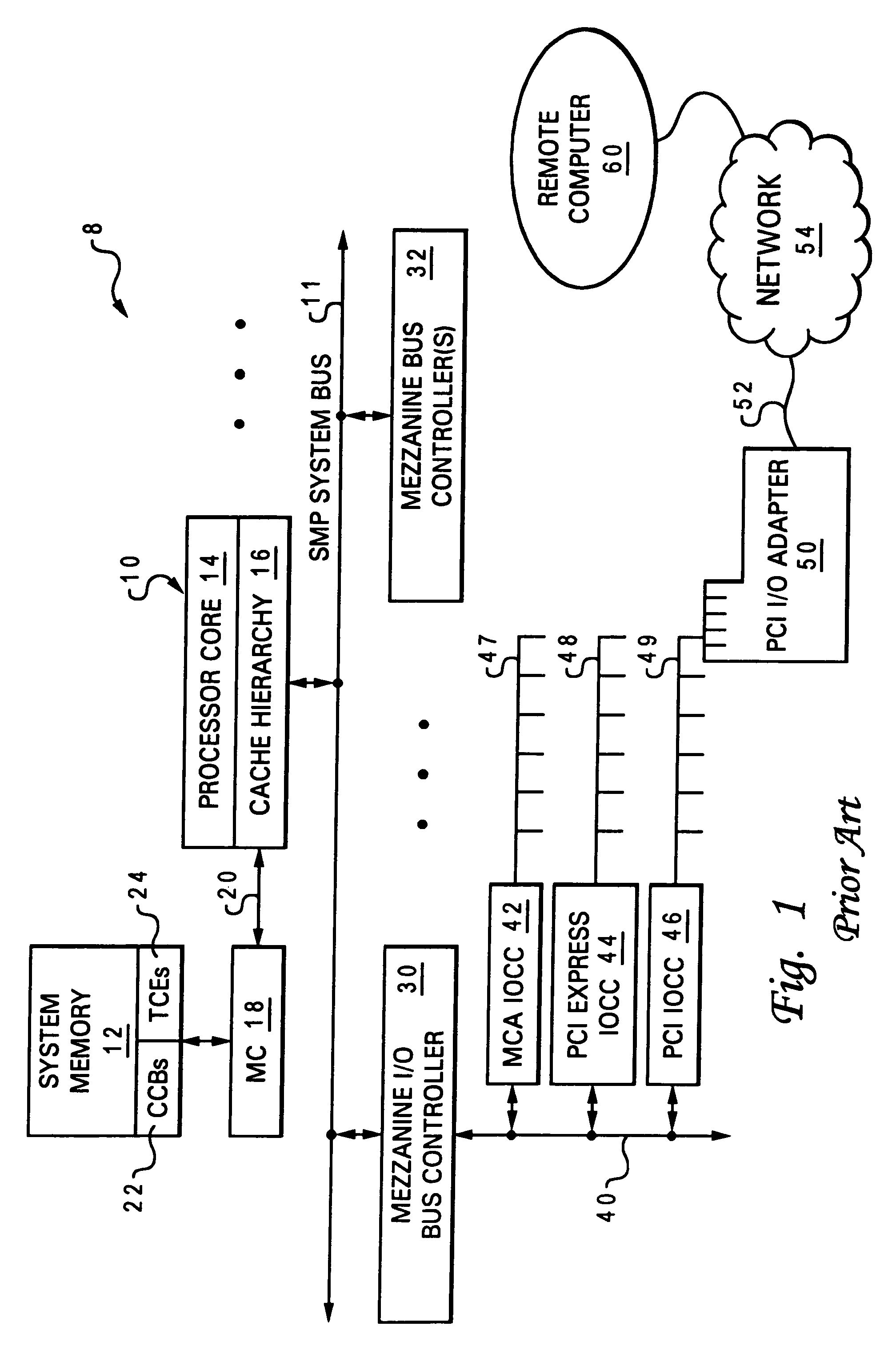

Data processing system providing hardware acceleration of input/output (I/O) communication

Active | US7047320B2 | Improve latency, Reduce system performance, Memory addressing/allocation/relocation, Digital computer details, Data processing system, Telecommunications link

An integrated circuit, such as a processing unit, includes a substrate and integrated circuitry formed in the substrate. The integrated circuitry includes a processor core that executes instructions, an interconnect interface, coupled to the processor core, that supports communication between the processor core and a system interconnect external to the integrated circuit, and at least a portion of an external communication adapter, coupled to the processor core, that supports input/output communication via an input/output communication link.

Owner: IBM CORP

Method for making power supplies smaller and more efficient for high-power PCs

Active | US7228448B2 | Reduce power level, Easy to operate, Energy efficient ICT, Volume/mass flow measurement, Operant conditioning, Software

Systems and methods for reducing the size of a power supply in a computing device by monitoring activities to maintain the load on the power supply below a predetermined threshold. Through a set of software drivers, various components are placed into low power states in order to reduce the load on the power supply. The methods allow manufacturers to utilize smaller power supplies that are better fitted for the actual operating conditions of the computing device, rather than a large power supply designed for worst case conditions.

Owner: MICROSOFT TECH LICENSING LLC

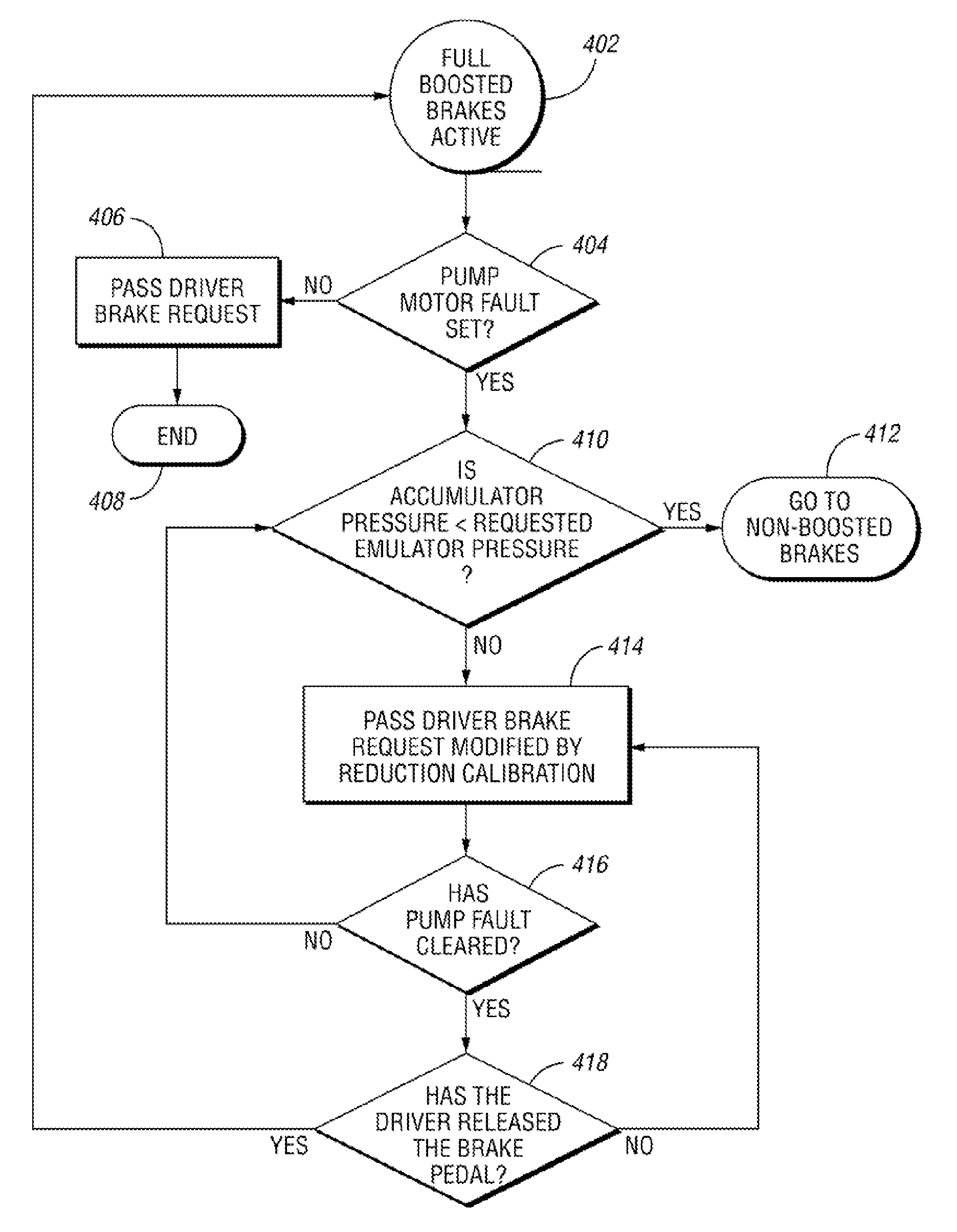

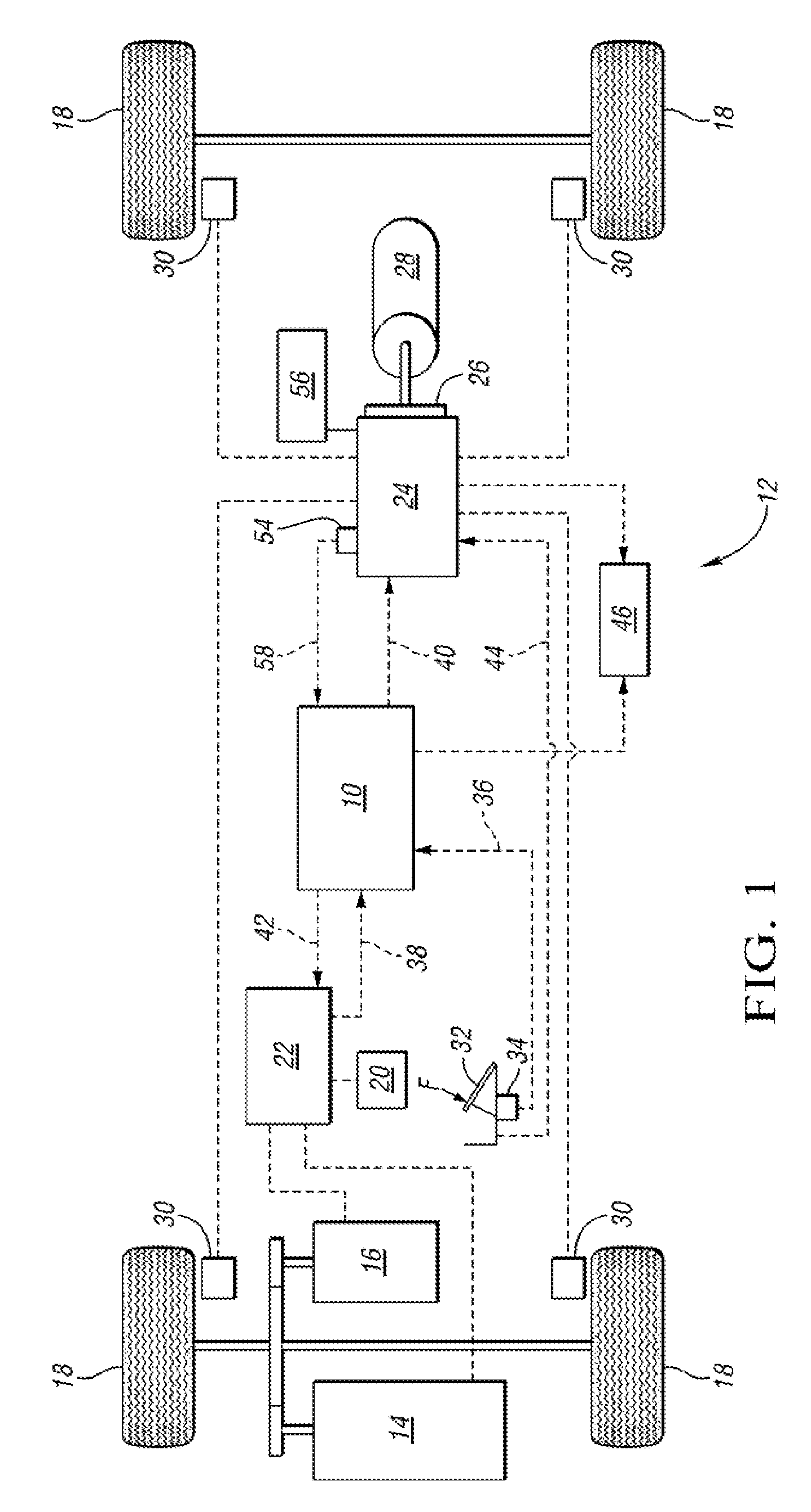

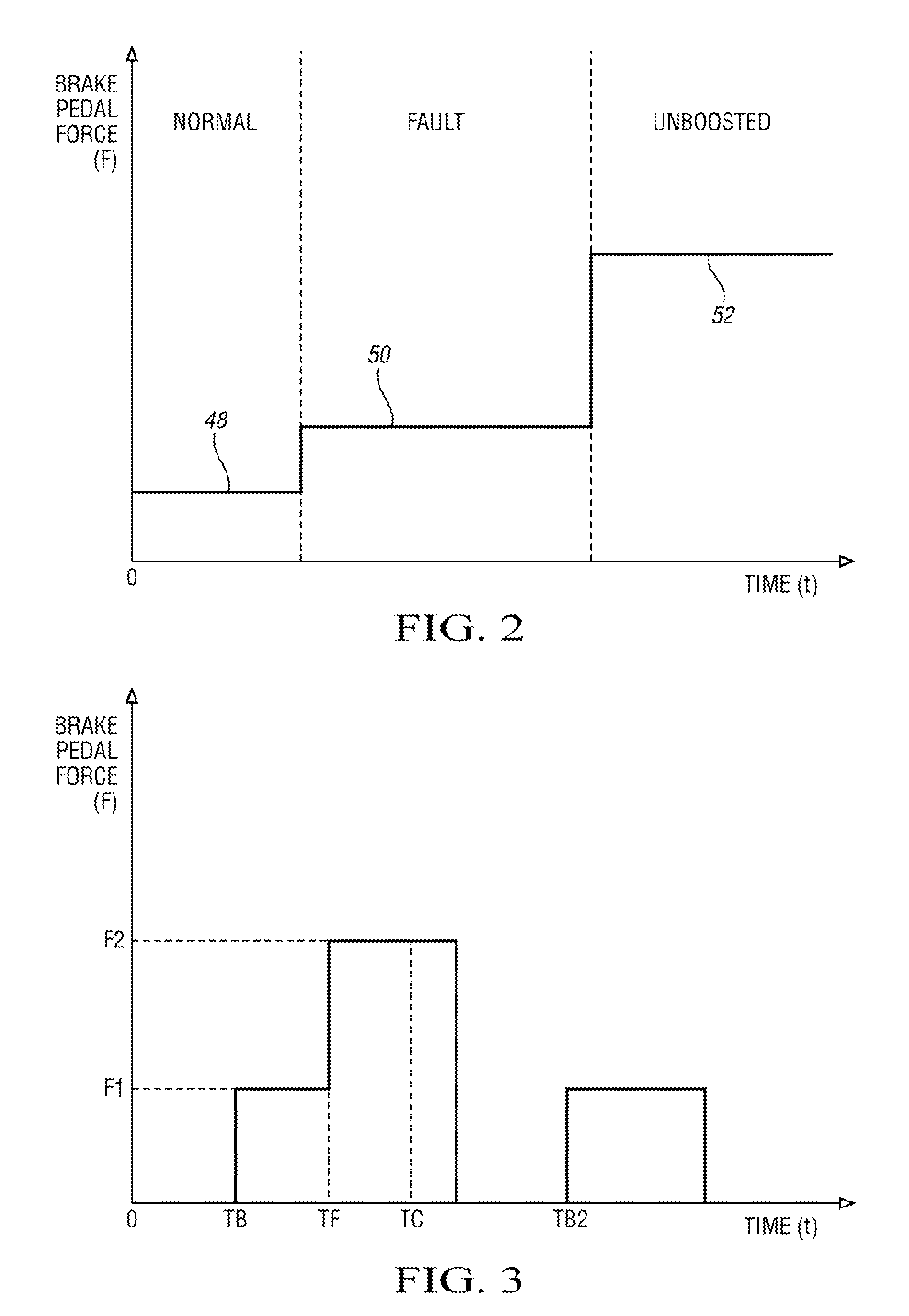

Brake System Fault Pedal Gain Change Method and System

Active | US20080265662A1 | Reduce system performance, System performance deteriorates, Hybrid vehicles, Analogue computers for traffic, Electric vehicle, System failure

A brake system fault pedal gain change method and system for brake pedal simulator equipped vehicles such as hybrid electric vehicles is provided. In the event of a brake system booster fault, the method alerts the driver by way of tactile feedback. Additionally, the disclosed method provides a controlled means to gradually increase required brake pedal force during a brake system fault to avoid an abrupt change in brake pedal force when the brake system boost is depleted.

Owner: GM GLOBAL TECH OPERATIONS LLC
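The "gradual increase" in this abstract can be illustrated with a linear ramp of the pedal simulator's force gain after a booster fault, so the driver feels a controlled rise rather than a step when boost is depleted. The linear shape, the gain values, and the ramp duration are hypothetical placeholders; the abstract gives no numbers or profile.

```python
def pedal_force_gain(t_since_fault_s, normal_gain=1.0, fault_gain=3.0, ramp_s=2.0):
    """Pedal-force gain of a brake pedal simulator after a booster fault.

    Instead of jumping straight to fault_gain, the gain rises linearly over
    ramp_s seconds. All numbers are hypothetical placeholders.
    """
    if t_since_fault_s <= 0:
        return normal_gain
    fraction = min(t_since_fault_s / ramp_s, 1.0)
    return normal_gain + (fault_gain - normal_gain) * fraction
```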

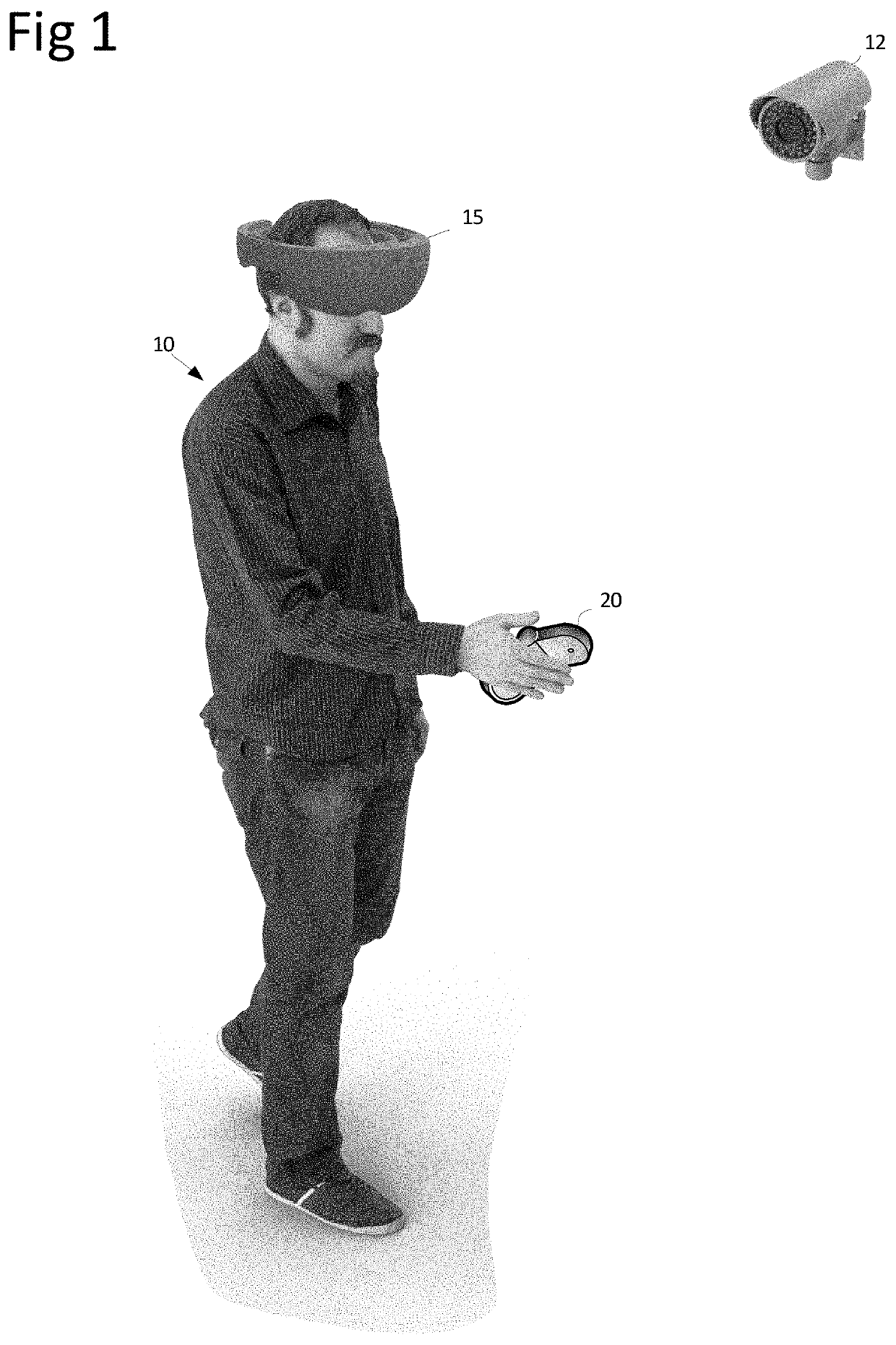

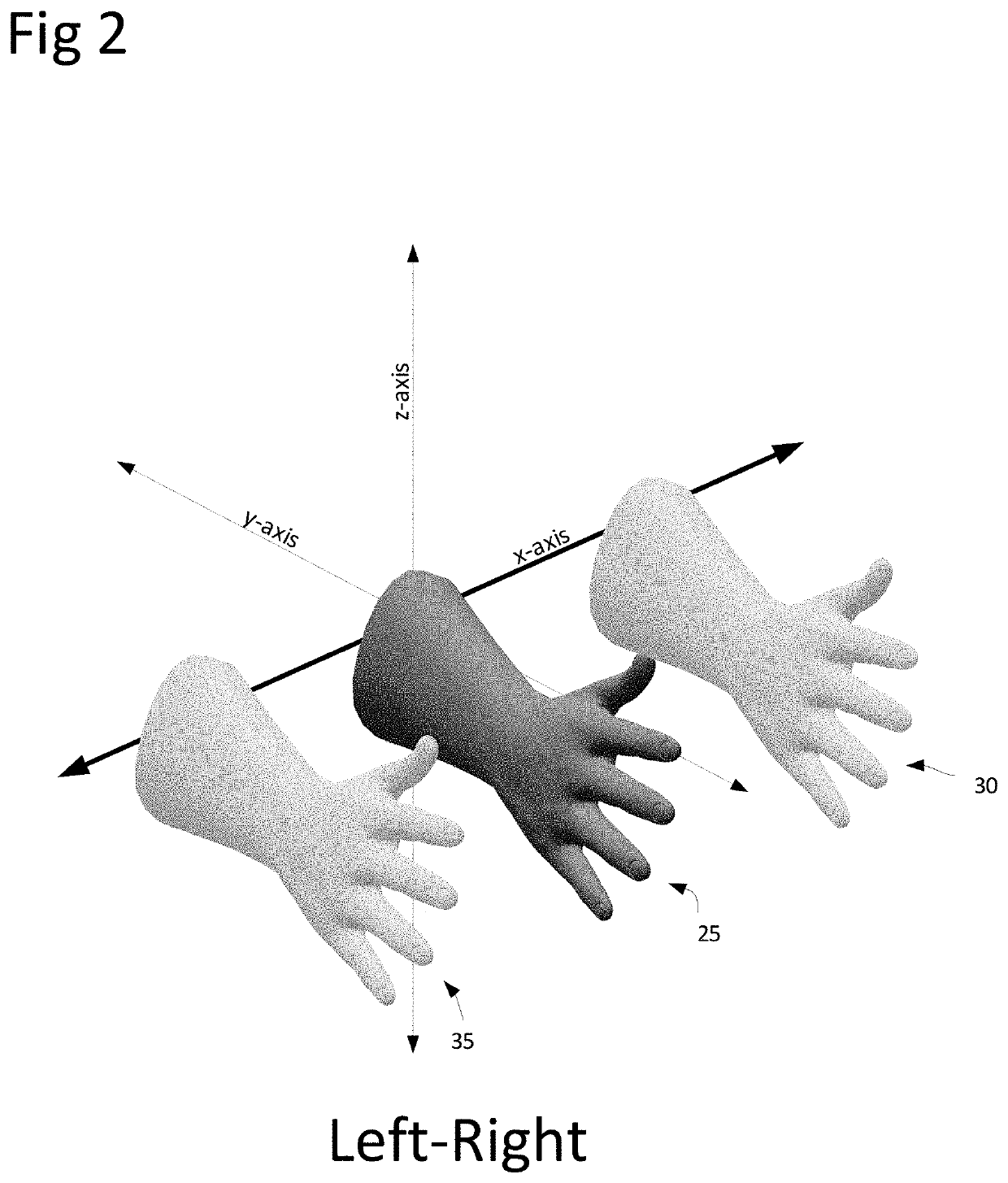

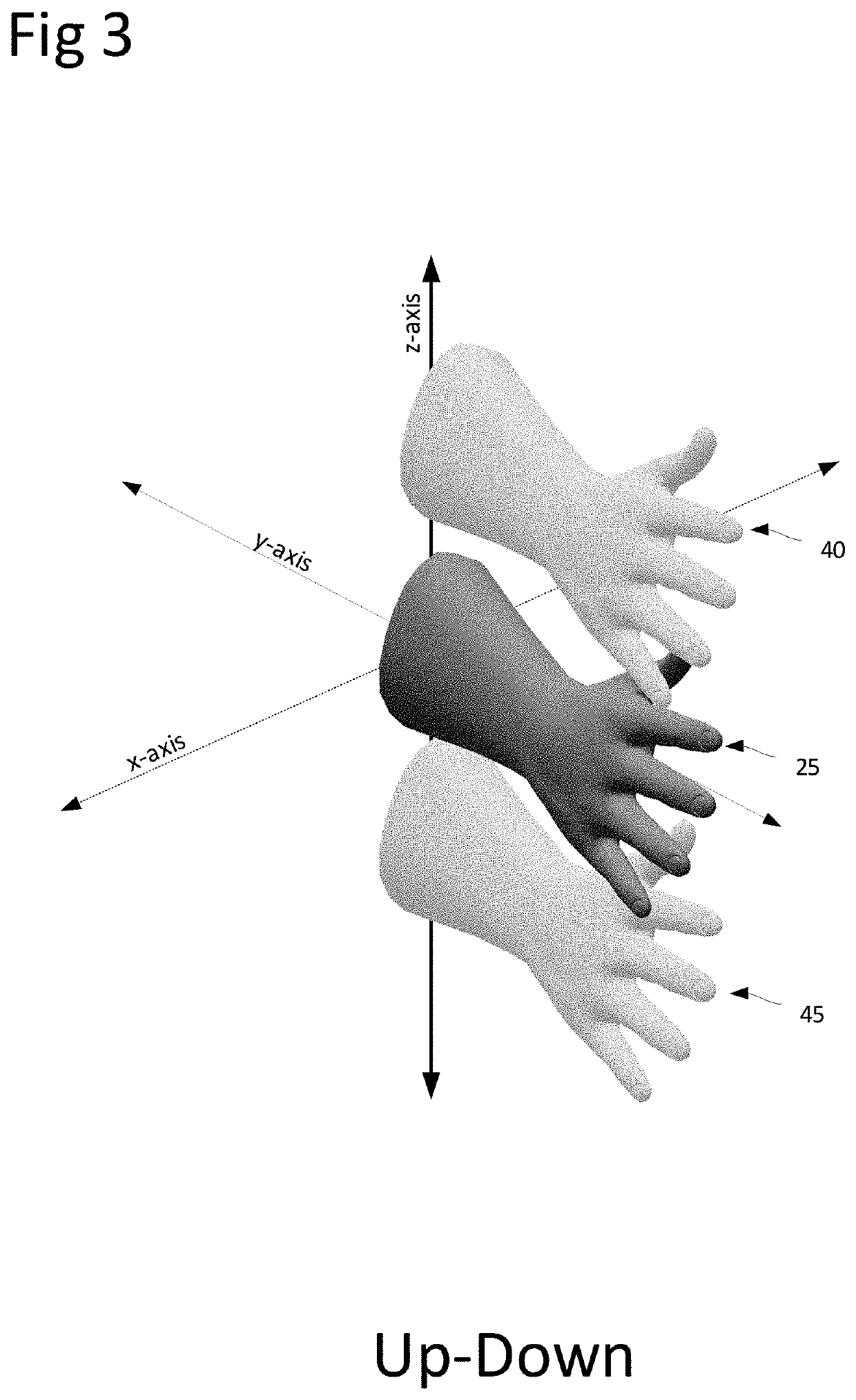

Relative pose data augmentation of tracked devices in virtual environments

Active | US11042028B1 | Reduce the possibility, Reduced and no observability, Image analysis, Location information based service, Hand held, Hand held devices

This invention relates to tracking user-worn and hand-held devices with respect to each other in circumstances where two or more users interact in the same shared space. It extends conventional global and body-relative approaches to "cooperatively" estimate the relative poses between all useful combinations of user-worn tracked devices, such as HMDs, and hand-held controllers worn (or held) by multiple users. For example, a first user's HMD estimates its absolute global pose in the coordinate frame associated with the externally mounted devices, as well as its relative pose with respect to all other HMDs, hand-held controllers, and other user-held or user-worn tracked devices in the environment. In this way, all HMDs (or as many as appropriate) are tracked with respect to each other, all HMDs are tracked with respect to all hand-held controllers, and all hand-held controllers are tracked with respect to all other hand-held controllers.

Owner: UNIV OF CENT FLORIDA RES FOUND INC
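As a baseline for the relative poses discussed above, the conventional global approach derives each pairwise relative pose from two global poses. The sketch below does this for planar (x, y, heading) poses over every device pair. It is a simplification for illustration: real HMD tracking is 6-DoF, and the patent's cooperative estimation, which fuses directly measured relative poses, is not shown. Device names are hypothetical.

```python
import math
from itertools import combinations


def relative_pose(a, b):
    """Pose of device b expressed in device a's frame.

    Poses are planar (x, y, heading) tuples in a shared global frame; the
    result's heading is wrapped to [-pi, pi).
    """
    ax, ay, a_heading = a
    bx, by, b_heading = b
    dx, dy = bx - ax, by - ay
    c, s = math.cos(a_heading), math.sin(a_heading)
    x = c * dx + s * dy   # rotate the global offset into a's frame
    y = -s * dx + c * dy
    heading = (b_heading - a_heading + math.pi) % (2 * math.pi) - math.pi
    return x, y, heading


def all_relative_poses(devices):
    """Relative pose for every pair of tracked devices (HMDs, controllers, ...)."""
    return {(i, j): relative_pose(devices[i], devices[j])
            for i, j in combinations(sorted(devices), 2)}
```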

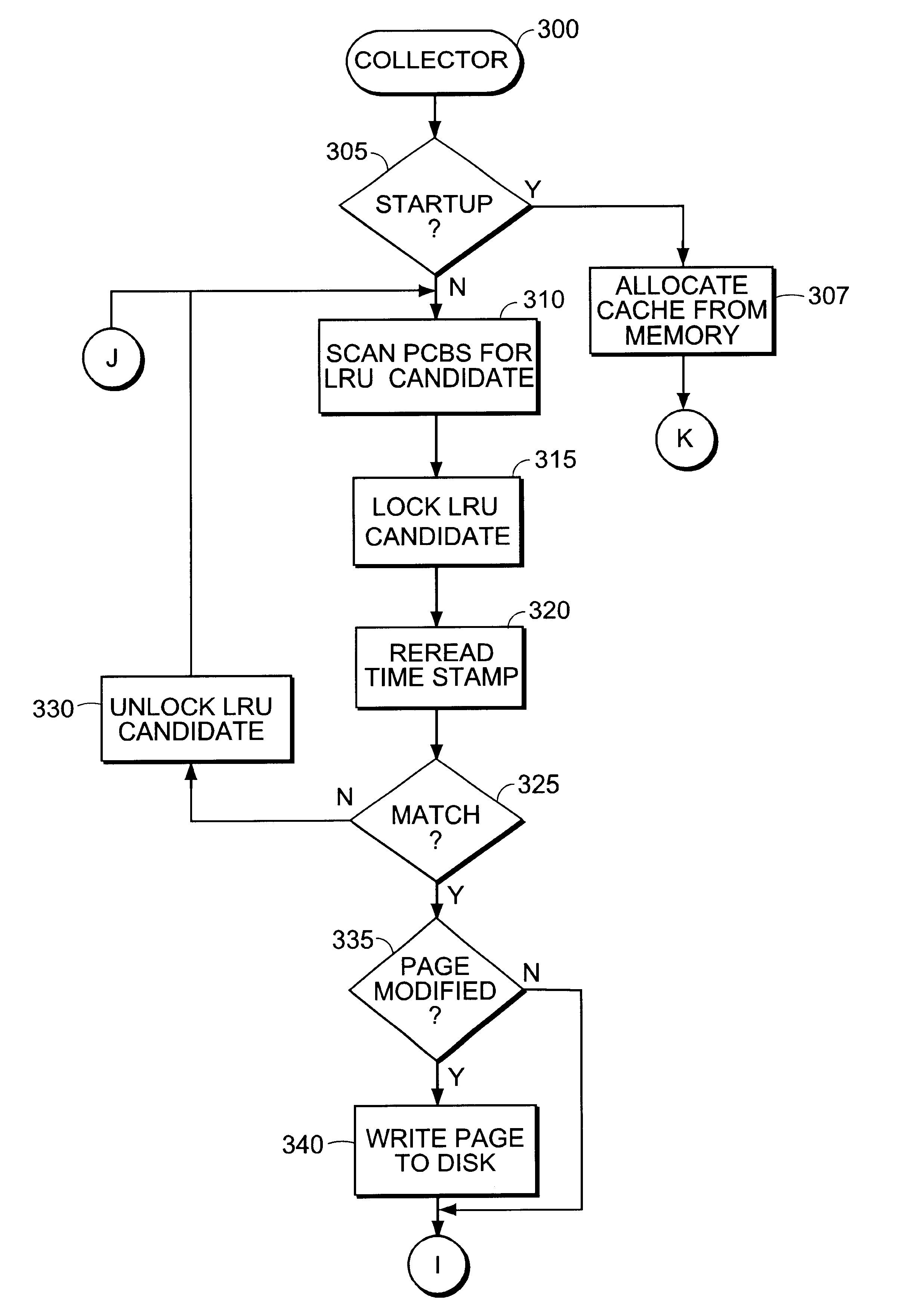

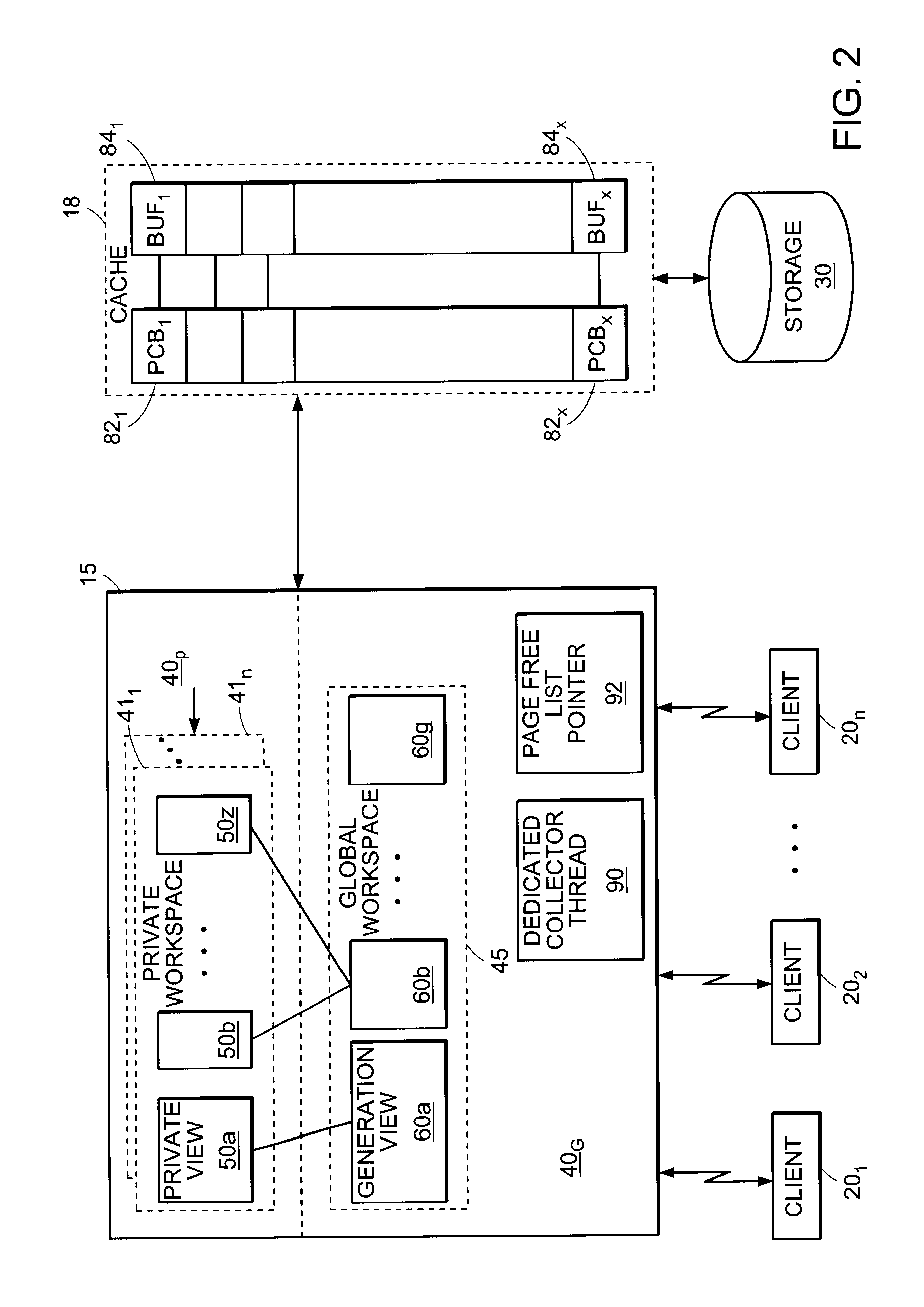

System for maintaining a buffer pool

Inactive | US6845430B2 | Avoids potential deadlock, Reduce memory requirements, Data processing applications, Memory addressing/allocation/relocation, Multi dimensional data, Multi dimensional

In a multi-threaded computing environment, a shared cache system reduces the amount of redundant information stored in memory. A cache memory area provides both global readable data and private writable data to processing threads. A particular processing thread accesses data by first checking its private views of modified data and then its global views of read-only data. Uncached data is read into a cache buffer for global access. If write access is required by the processing thread, the data is copied into a new cache buffer, which is assigned to the processing thread's private view. The particular shared cache system supports generational views of data. The system is particularly useful in on-line analytical processing of multi-dimensional databases. In one embodiment, a dedicated collector reclaims cache memory blocks for the processing threads. By utilizing a dedicated collector thread, any processing penalty encountered during the reclamation process is absorbed by the dedicated collector. Thus the user session threads continue to operate normally, making the reclaiming of cache memory blocks by the dedicated collector task thread transparent to the user session threads. In an alternative embodiment, the process for reclaiming page buffers is distributed amongst user processes sharing the shared memory. Each of the user processes includes a user thread collector for reclaiming a page buffer as needed and multiple user processes can concurrently reclaim page buffers.

Owner: ORACLE INT CORP
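The lookup order in this abstract (a thread's private view of modified data first, then the global read-only view, loading uncached data into a global buffer, and copying data into a new private buffer before writing) can be sketched as below. This single-threaded sketch omits locking, generational views, and the collector that reclaims buffers; names and the `transform` callback are hypothetical.

```python
class SharedCache:
    """Global read-only buffers plus per-thread private copies for writes."""

    def __init__(self, backing_store):
        self._store = backing_store  # e.g. pages on disk: key -> bytes
        self._global = {}            # shared, read-only cached pages
        self._private = {}           # thread id -> {key: privately modified page}

    def read(self, thread_id, key):
        # 1. The thread's own modified copy wins.
        private = self._private.get(thread_id, {})
        if key in private:
            return private[key]
        # 2. Otherwise use (or load) the shared global copy.
        if key not in self._global:
            self._global[key] = self._store[key]
        return self._global[key]

    def write(self, thread_id, key, transform):
        """Copy the page into a new private buffer, then modify only that copy."""
        page = bytearray(self.read(thread_id, key))
        self._private.setdefault(thread_id, {})[key] = bytes(transform(page))
```

Other threads keep seeing the unmodified global page, which is what lets the global copy stay shared and read-only.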

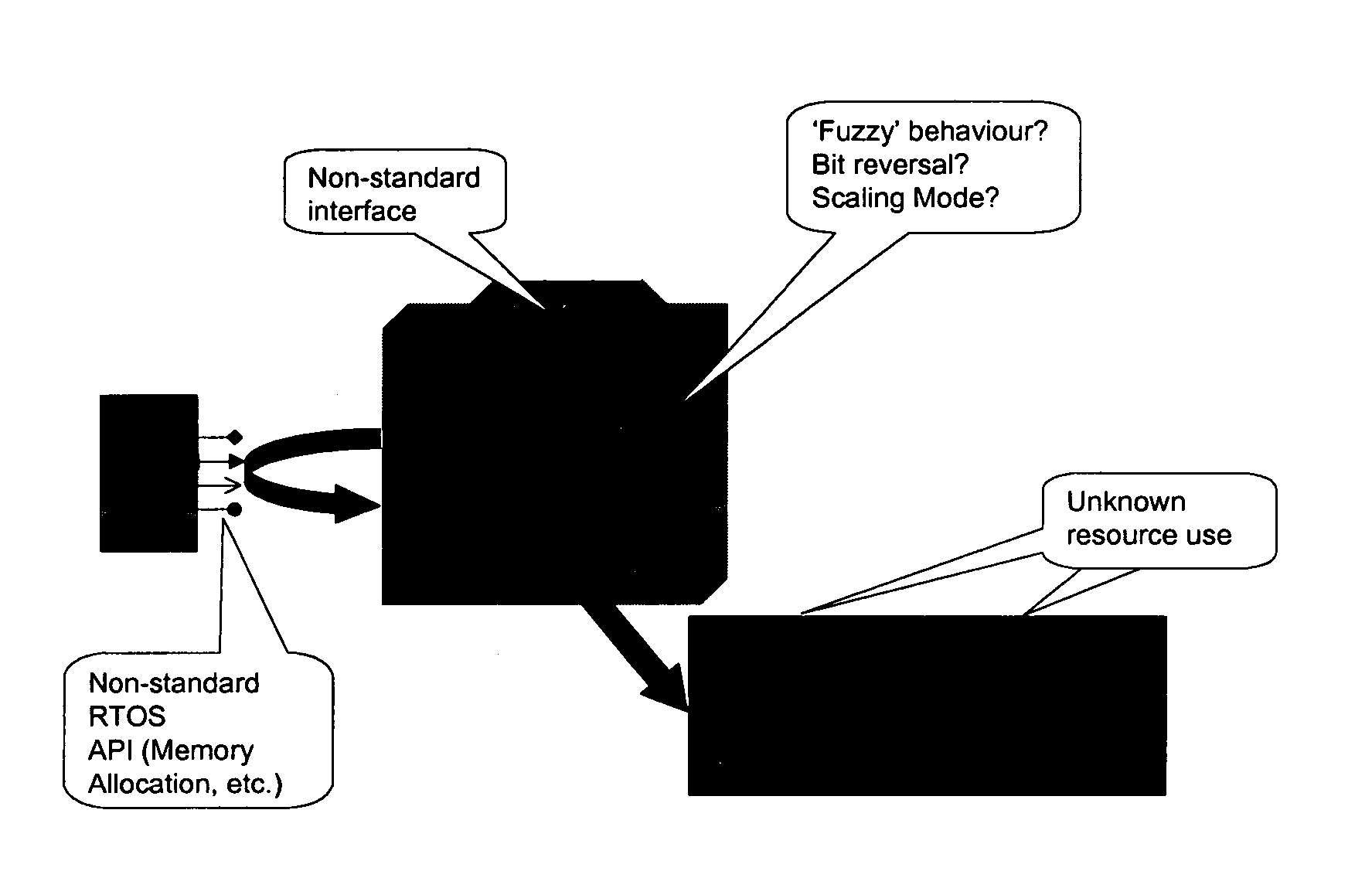

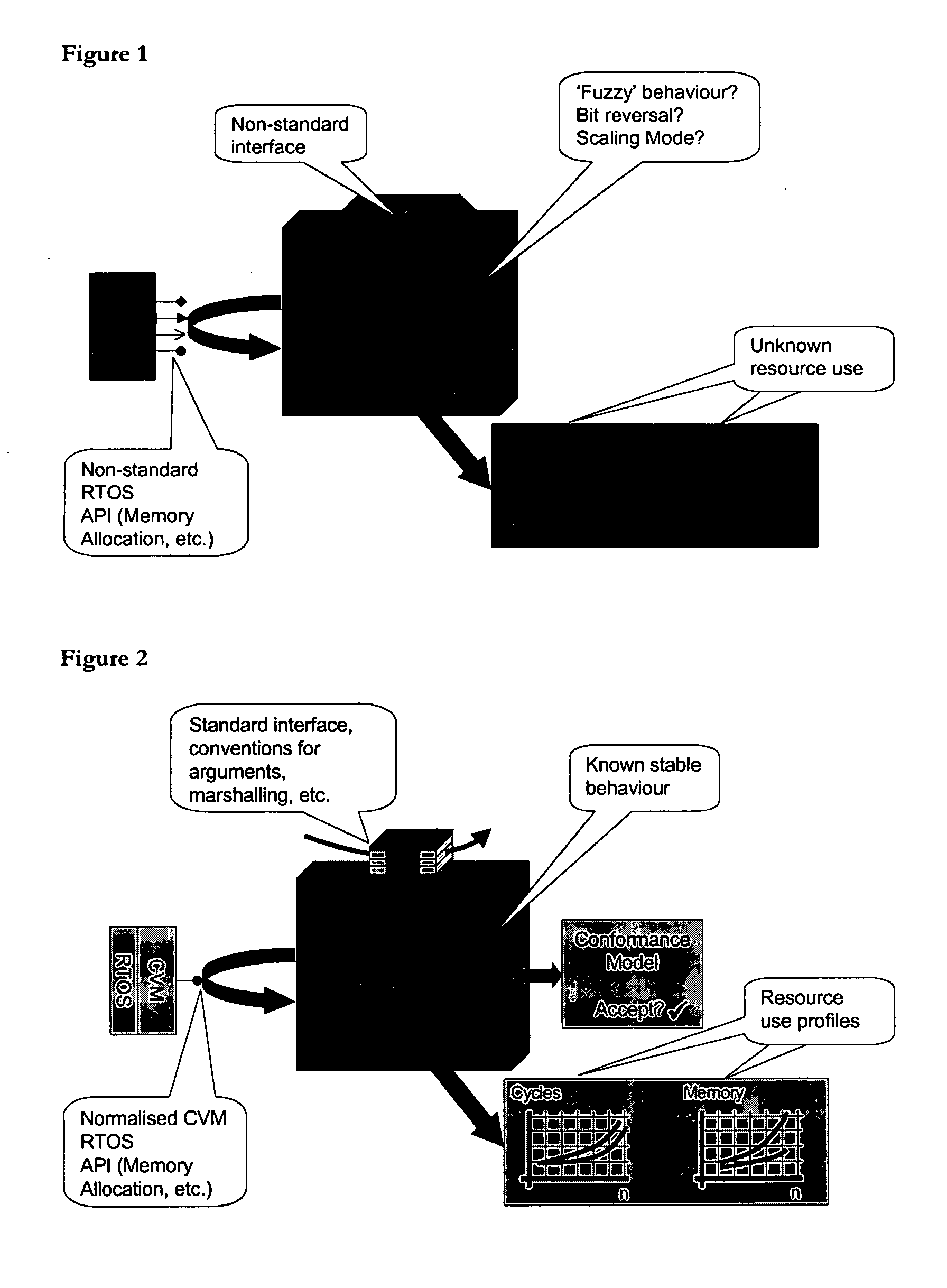

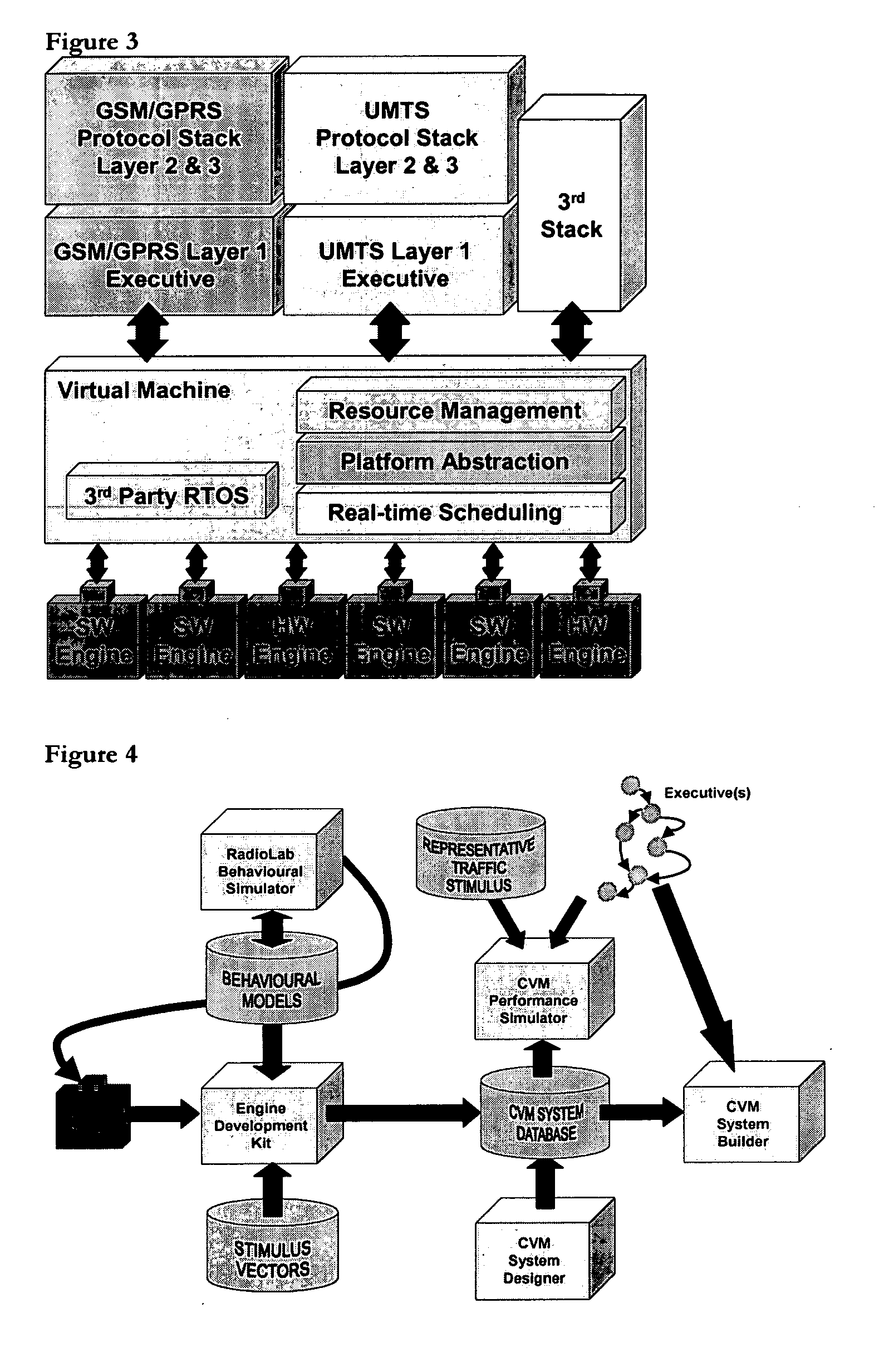

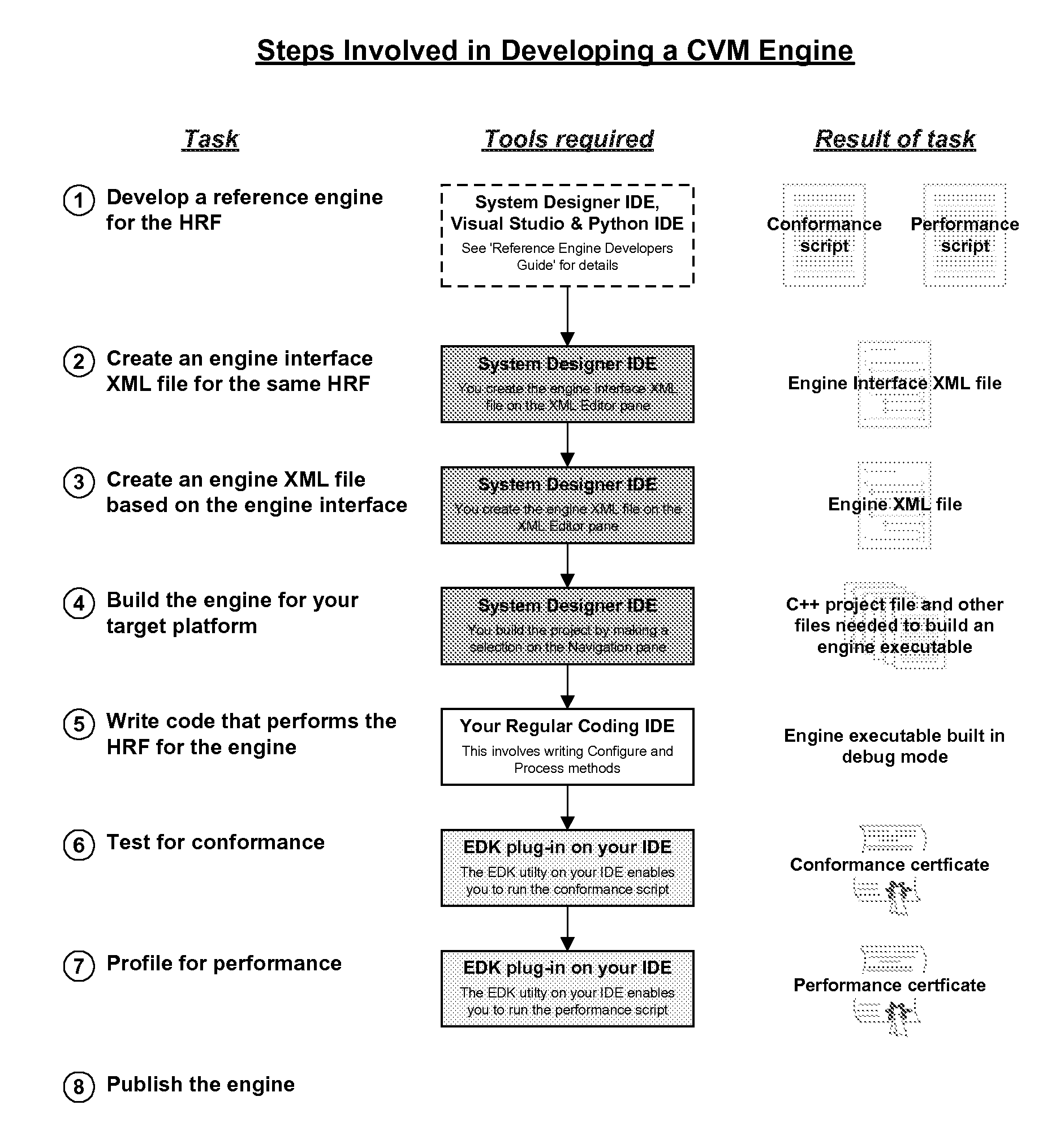

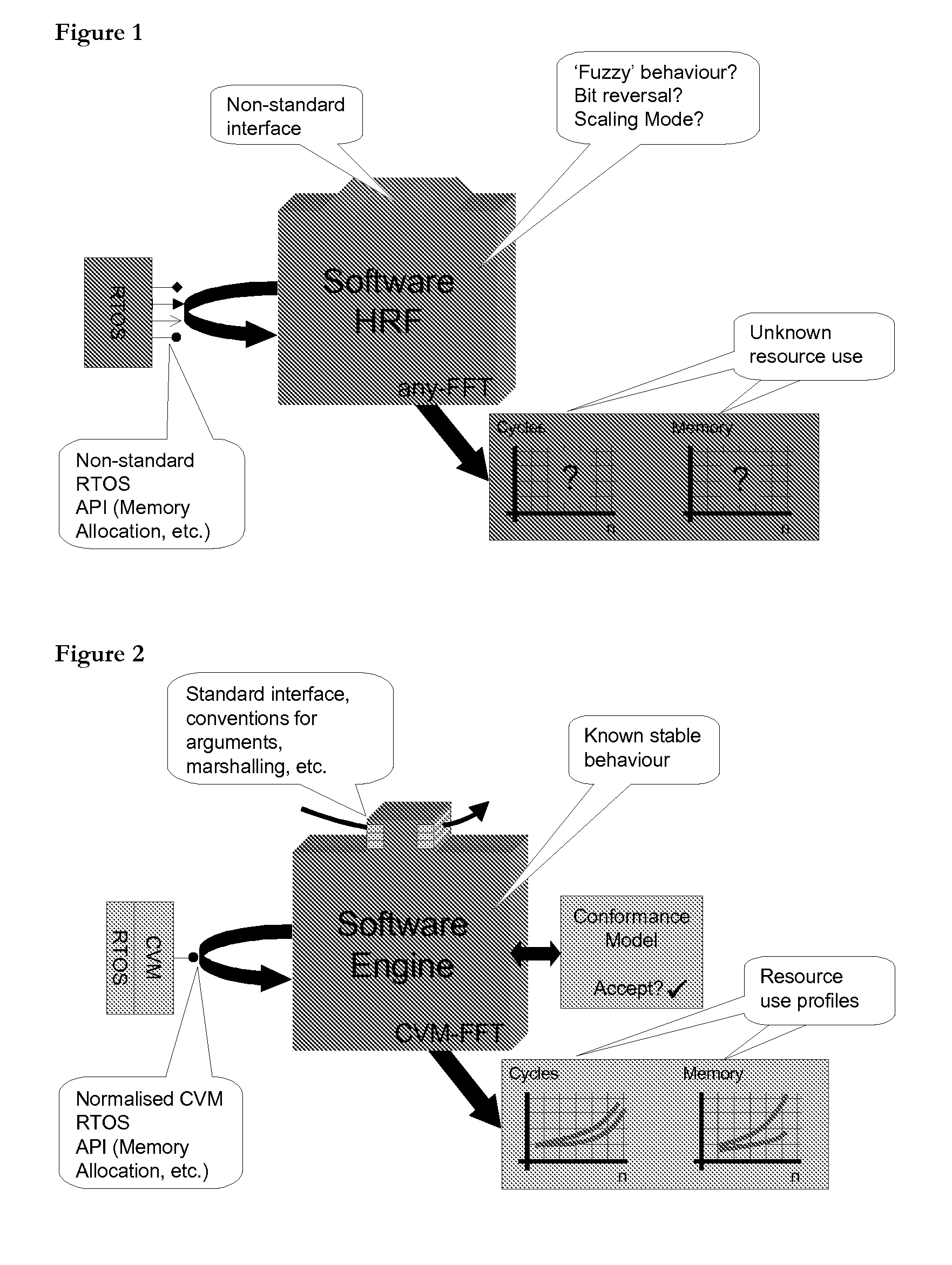

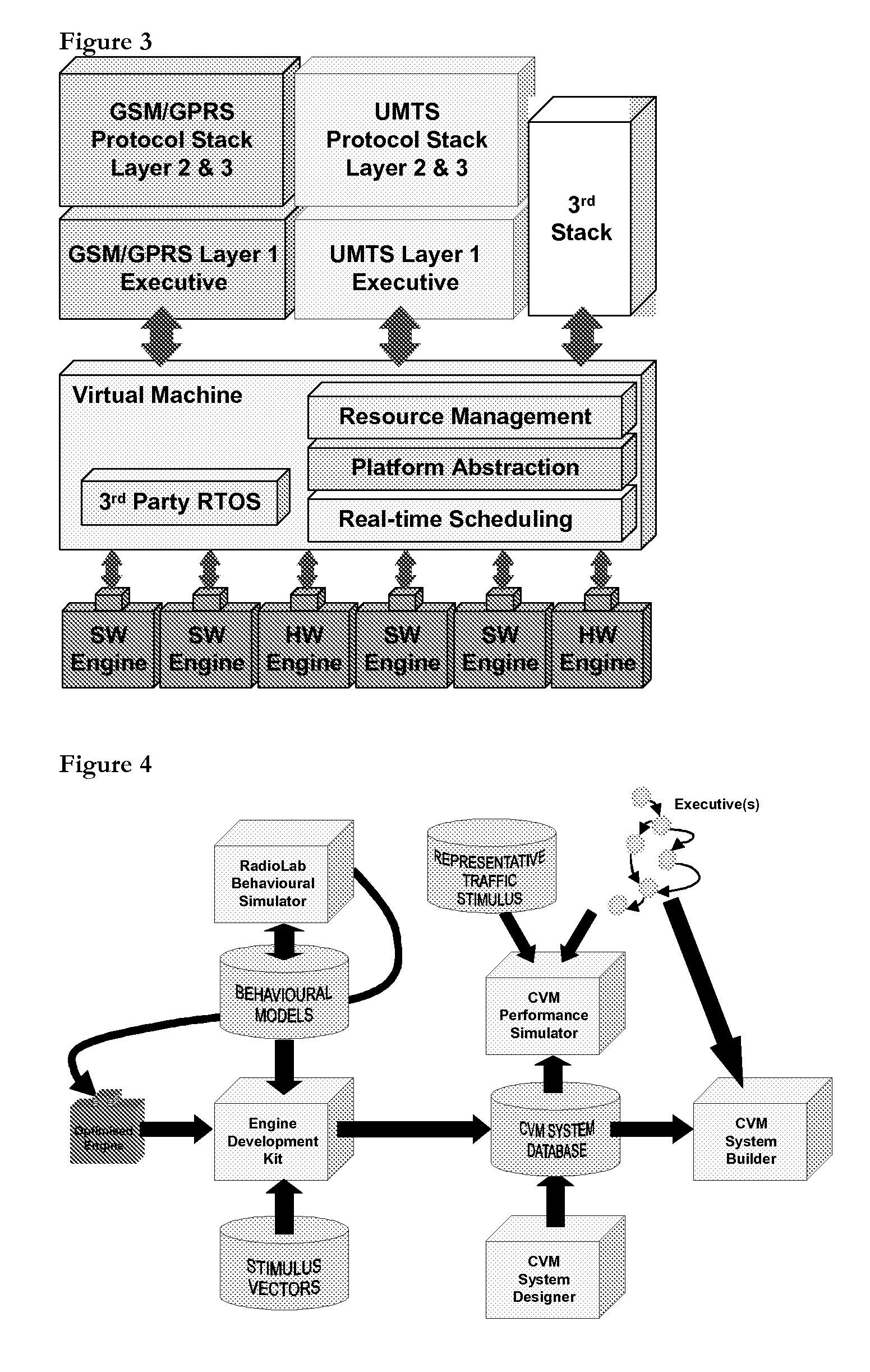

Device comprising a communications stack with a scheduler

Inactive | US20050223191A1 | Degrade system performance, Efficient solution, Program initiation/switching, General purpose stored program computer, Execution control, Time schedule

Owner: RADIOSCAPE

Method and apparatus for uplink coordinated multi-point transmission of user data

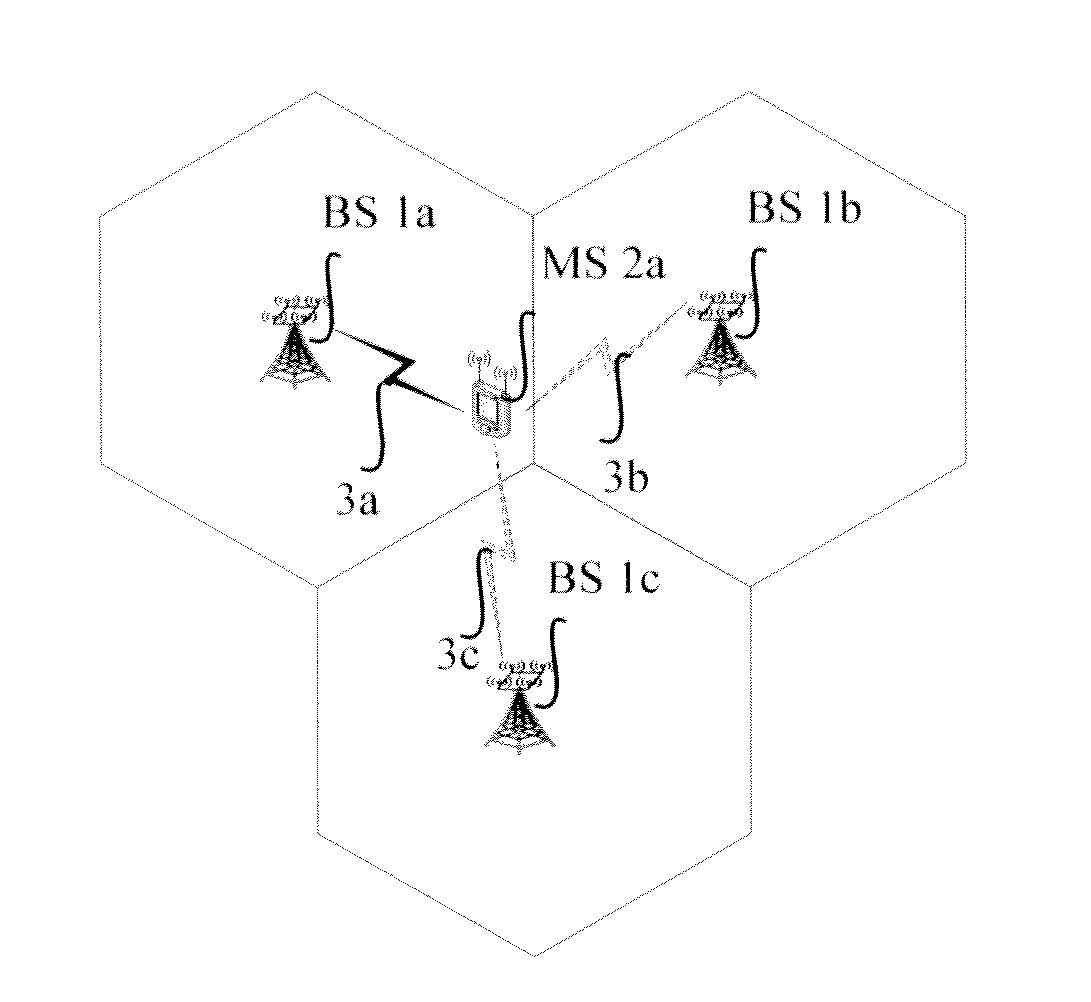

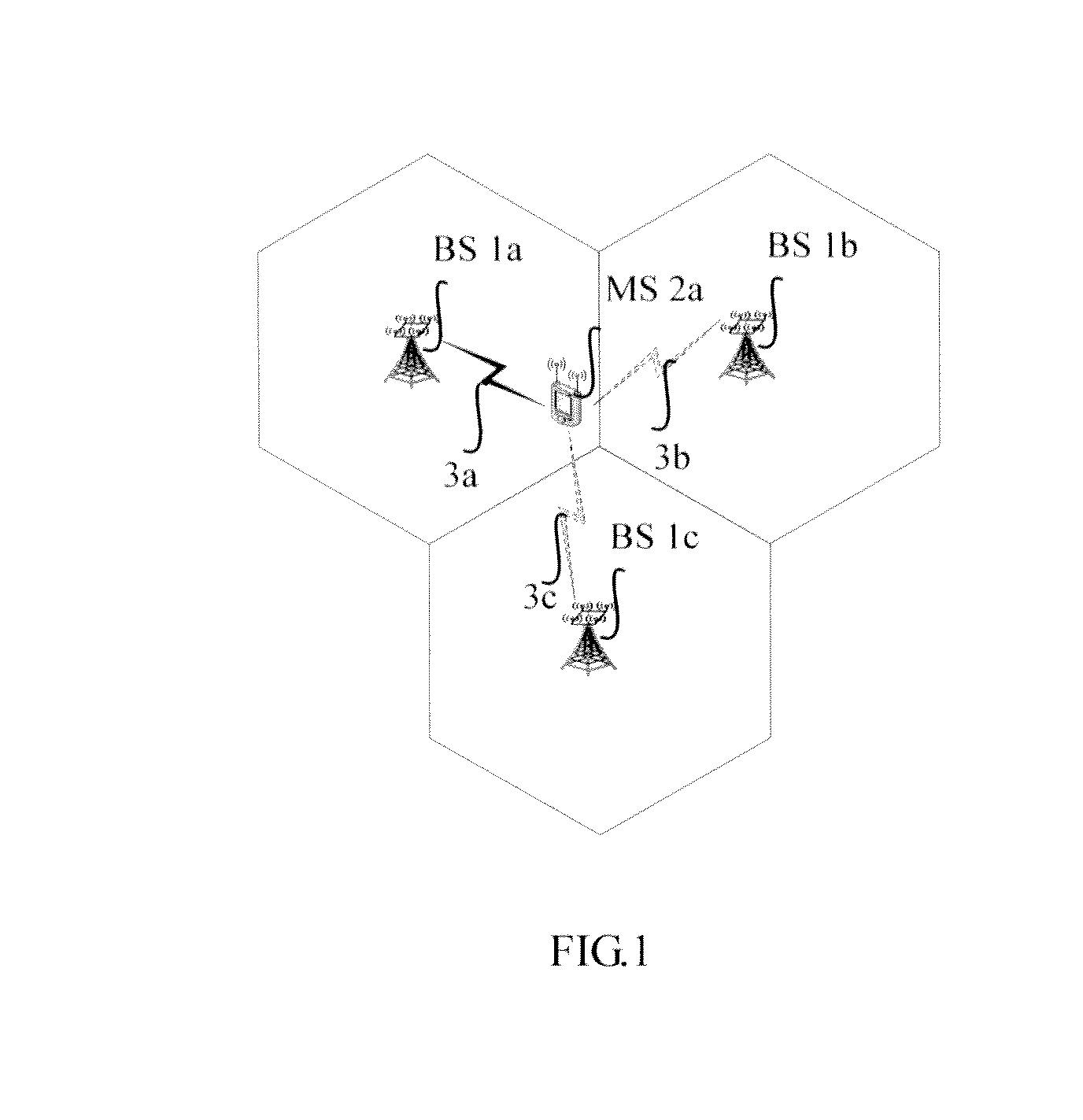

Inactive | US20120002611A1 | Reduce system performance, Reduce need, Site diversity, Wireless communication services, Multi point, Base station

The present invention provides an uplink progressive coordinated multi-point (CoMP) MIMO processing scheme. Coordinated processing management equipment determines whether it needs to send an ACK or NACK message to neighboring base stations in the same CoMP cluster. When an ACK message is needed, it is sent to all neighboring base stations in the cluster that have not participated in joint detection, informing each of them that it does not need to send user data to the coordinated processing management equipment. When a NACK message is needed, it is sent to at least one neighboring base station in the cluster that has not participated in joint detection, instructing that base station to send user data to the coordinated processing management equipment. Using the user data obtained by the base station and the user data from the at least one neighboring base station, the coordinated processing management equipment performs joint detection and combining. Applying the invention saves backhaul cost and reduces the complexity of the system implementation.

Owner: ALCATEL LUCENT SAS
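The progressive ACK/NACK logic can be sketched as a loop in the coordinated processing equipment: if the serving base station decodes on its own, every neighbor gets an ACK; otherwise neighbors are sent NACKs one at a time and their user data is added to joint detection until decoding succeeds, after which the remaining neighbors are ACKed. Asking one neighbor per step, the neighbor ordering, and the `decode_with` callback are assumptions made for illustration.

```python
def progressive_uplink_comp(serving_decoded_ok, neighbors, decode_with):
    """Progressive uplink CoMP at the coordinated processing equipment.

    neighbors: neighboring base stations in the CoMP cluster, in the order they
    would be asked for data (the ordering is an assumption, e.g. by link quality).
    decode_with: callback that retries joint detection using the user data
    forwarded by the given neighbors and returns True on success.
    Returns (neighbors sent NACK and asked for data, neighbors sent ACK).
    """
    if serving_decoded_ok:
        return [], list(neighbors)     # ACK everyone: nobody forwards user data
    participating = []
    for station in neighbors:
        participating.append(station)  # NACK: this station forwards its user data
        if decode_with(participating):
            break
    acked = [s for s in neighbors if s not in participating]
    return participating, acked
```

Only as many neighbors as needed forward user data, which is where the backhaul saving comes from.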

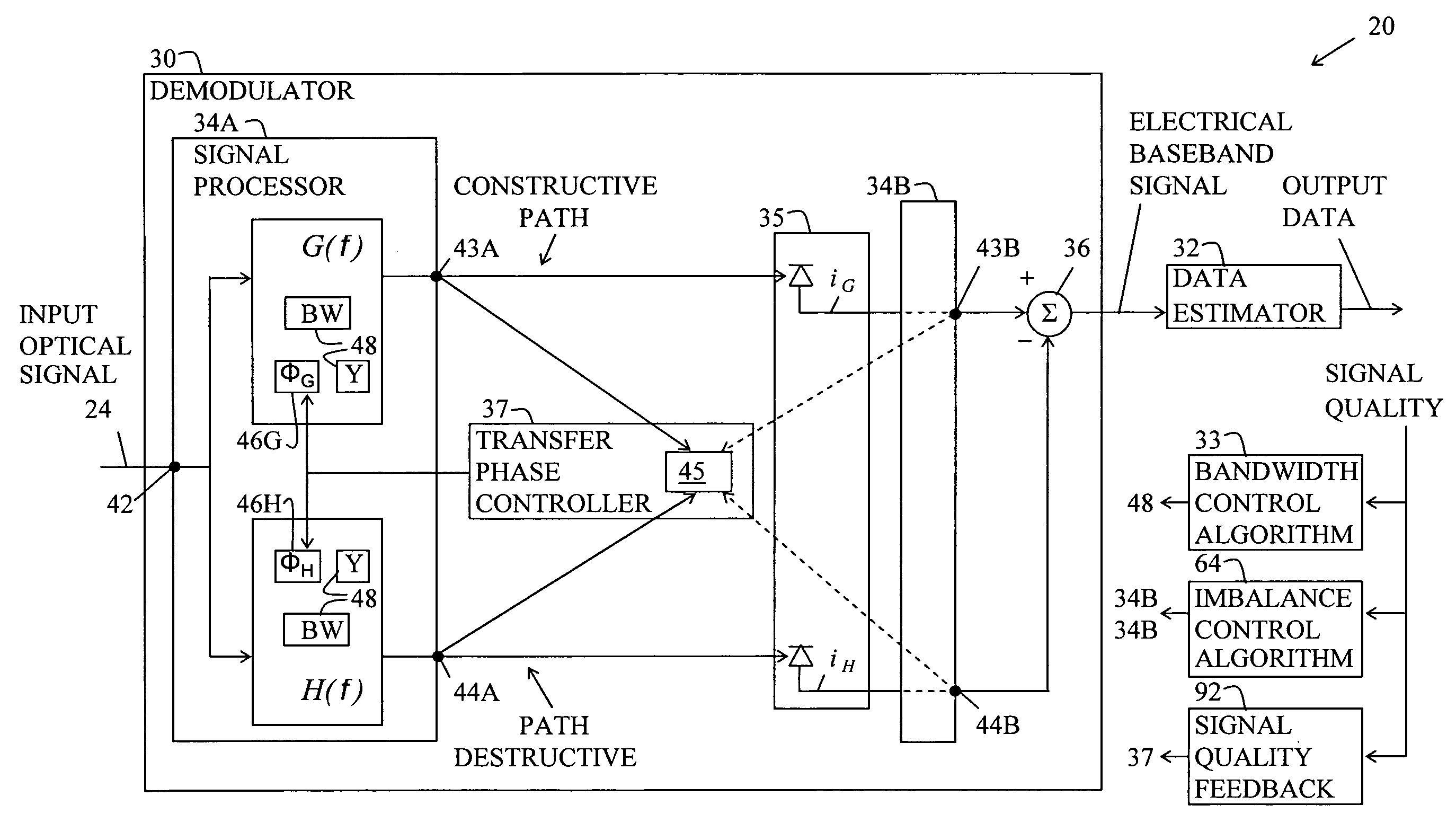

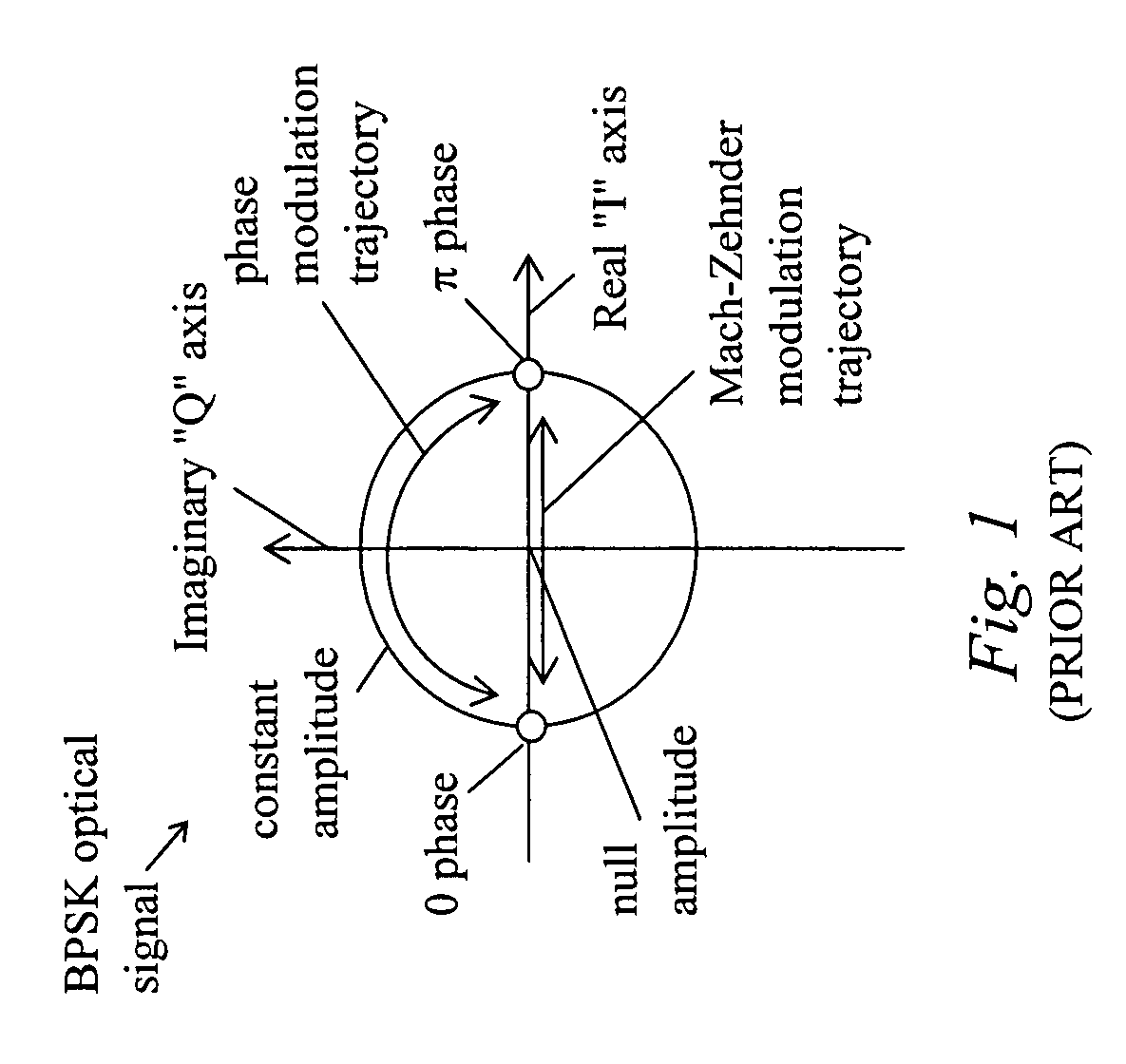

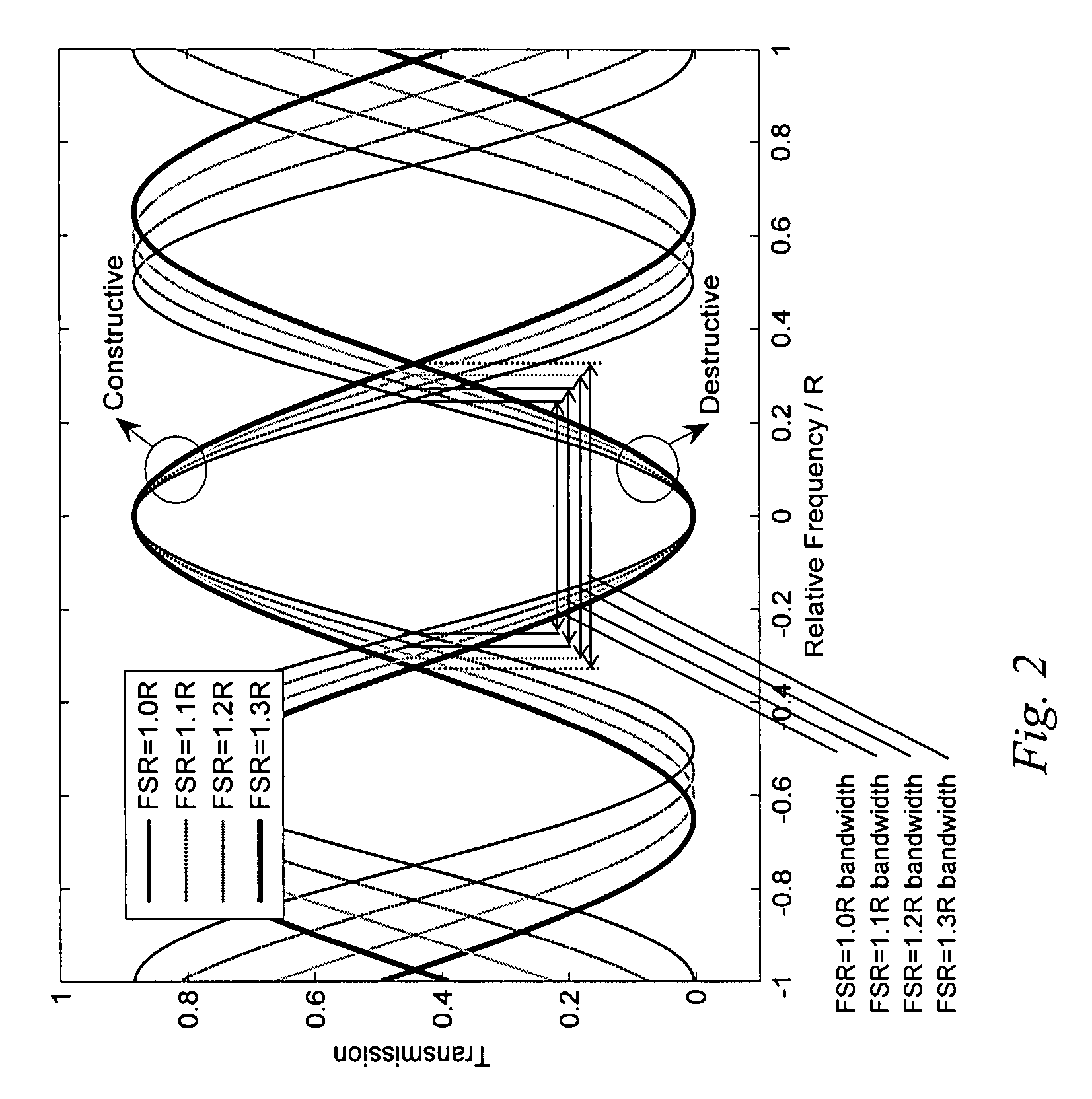

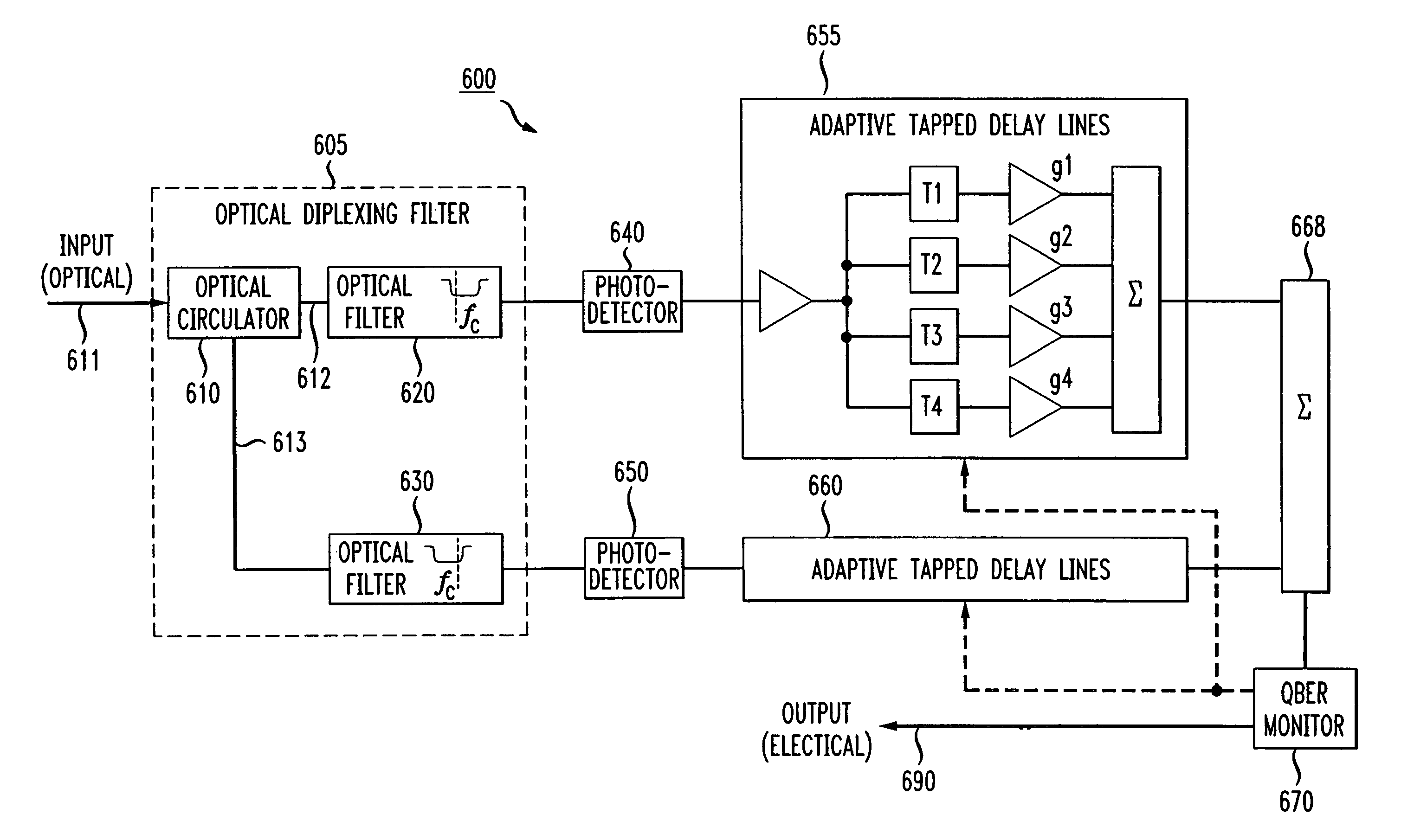

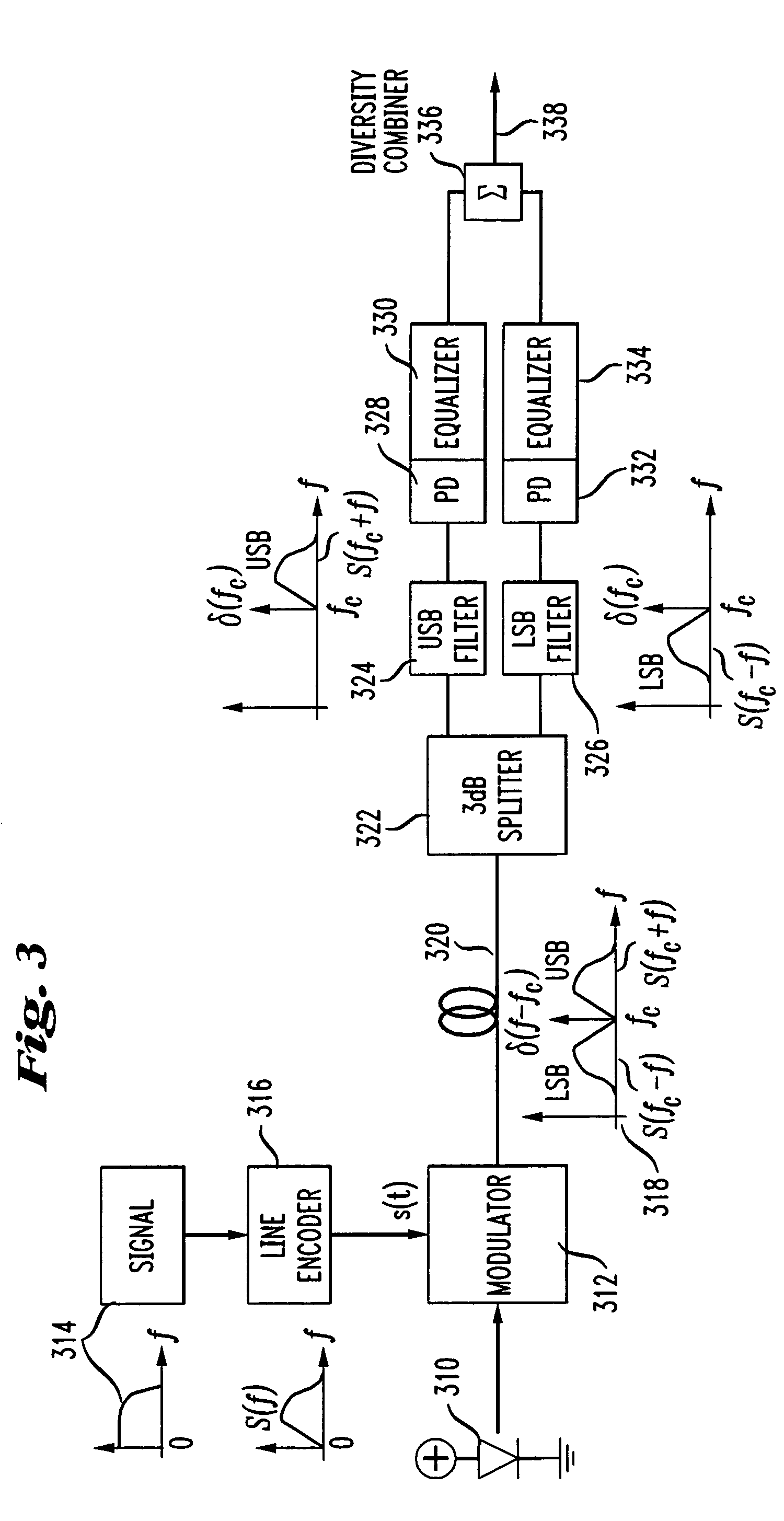

GT decoder having bandwidth control for ISI compensation

Active | US20080226306A1 | Improve system performance, Less data, Coupling light guides, Distortion/dispersion elimination, Signal transfer function, Engineering

An optical receiver apparatus and methods for mitigating intersymbol interference (ISI) in a differentially-encoded modulation transmission system by controlling constructive and destructive transfer functions. The receiver includes a bandwidth control element for controlling transfer function bandwidth, a transfer phase controller for controlling transfer function phase, and/or an imbalancer for imbalancing the transfer functions, for compensating for intersymbol interference and optimizing the quality of the received optical signal.

Owner: LUMENTUM FIBER OPTICS INC

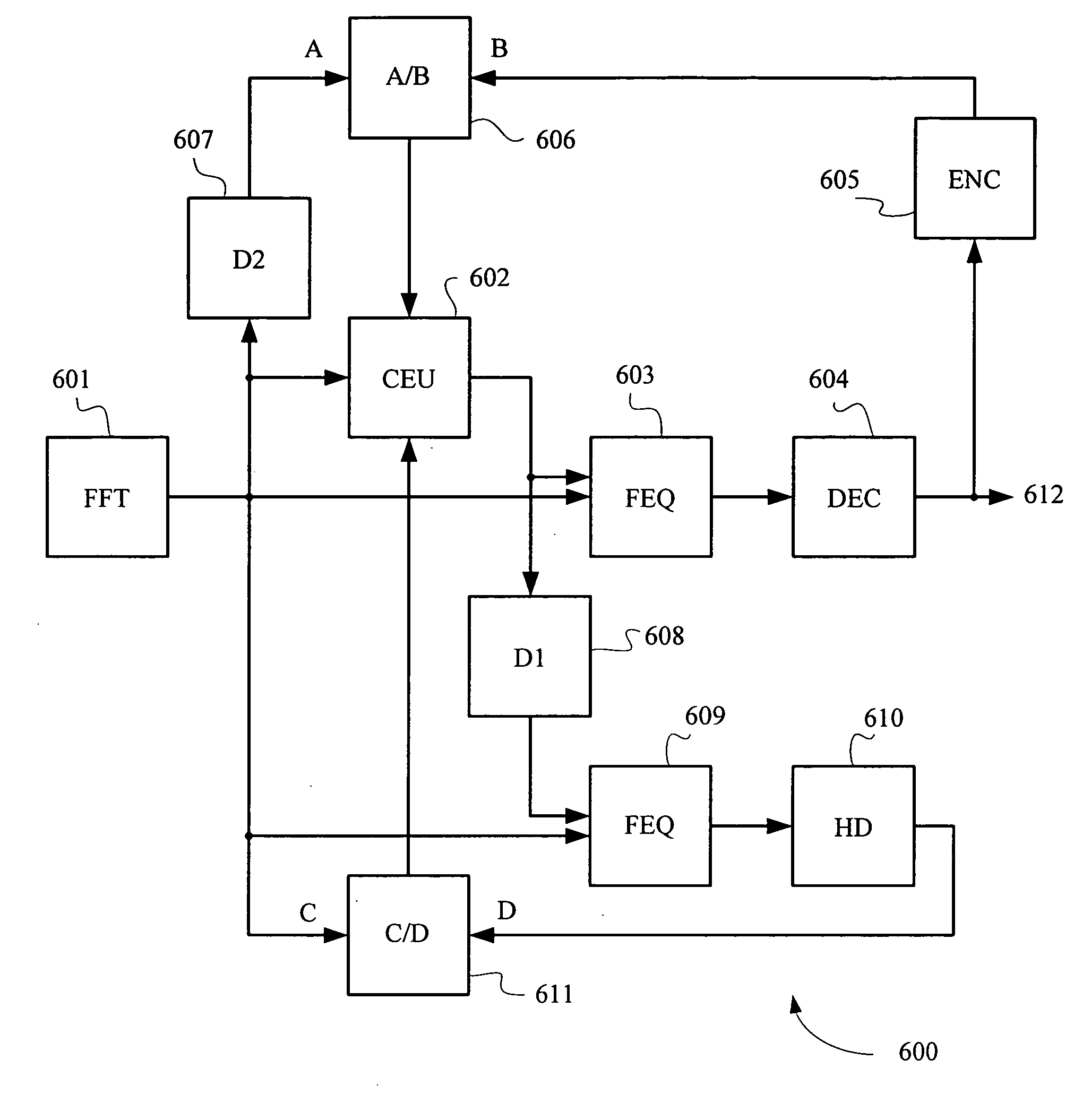

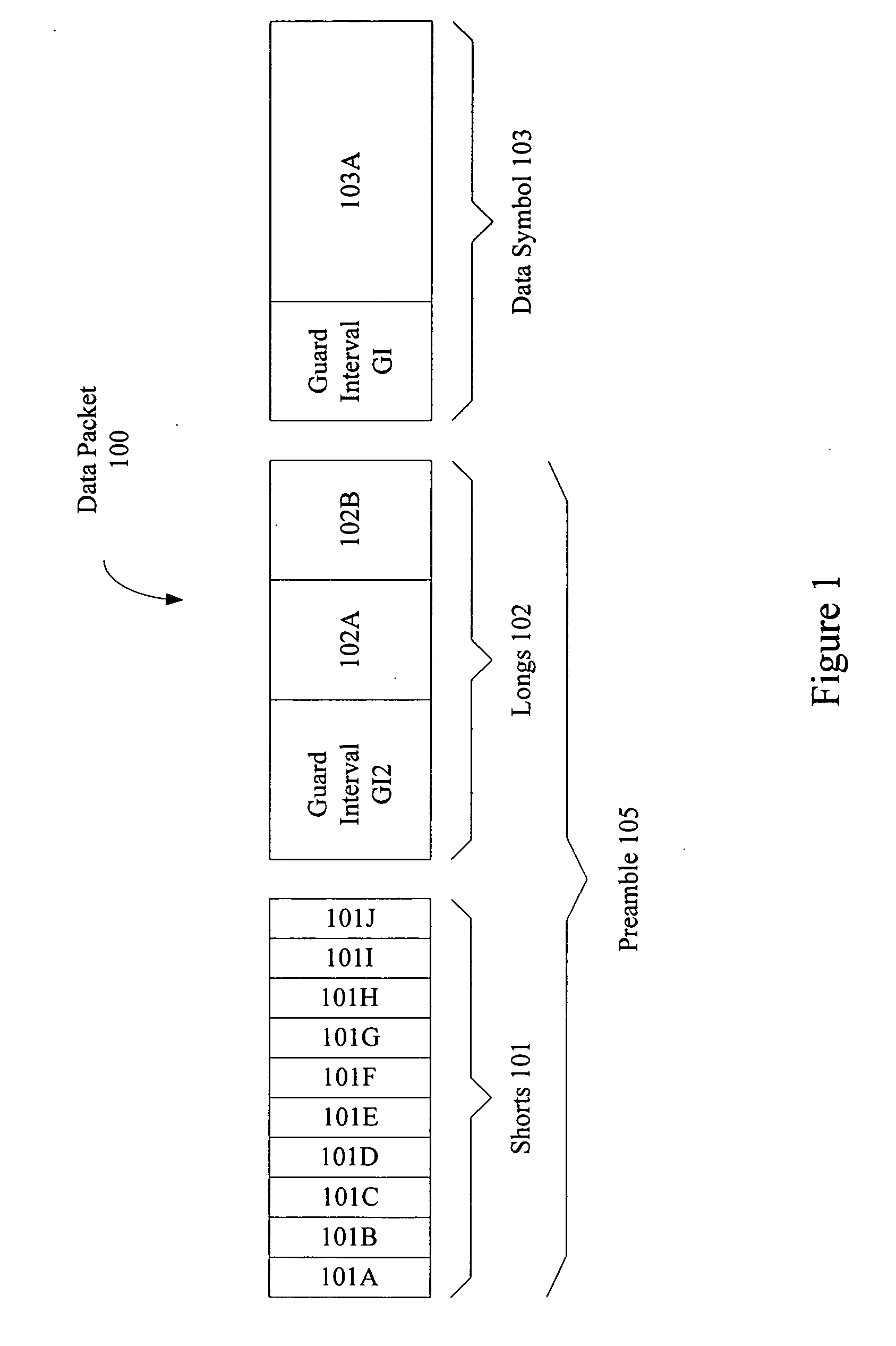

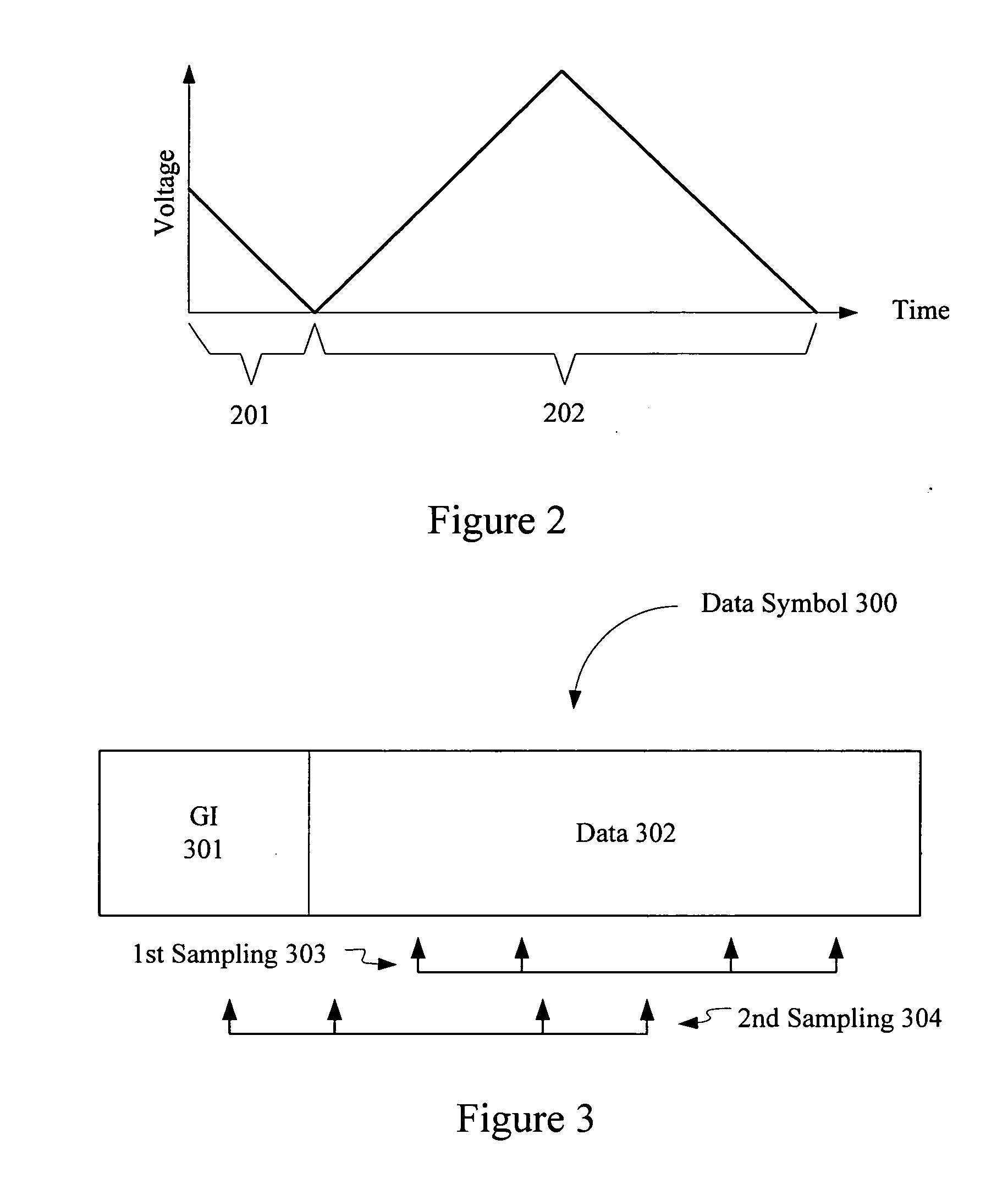

Decision feedback channel estimation and pilot tracking for OFDM systems

Inactive | US20050226341A1 | Degrade system performance, Improve channel estimation, Error prevention, Transmission systems, Preamble, Aerospace engineering

Owner: QUALCOMM INC

Device Comprising a Communications Stick With A Scheduler

Inactive | US20080209425A1 | Reduce system performance, Program initiation/switching, CAD circuit design, Time schedule, Running time

Owner: RADIOSCAPE

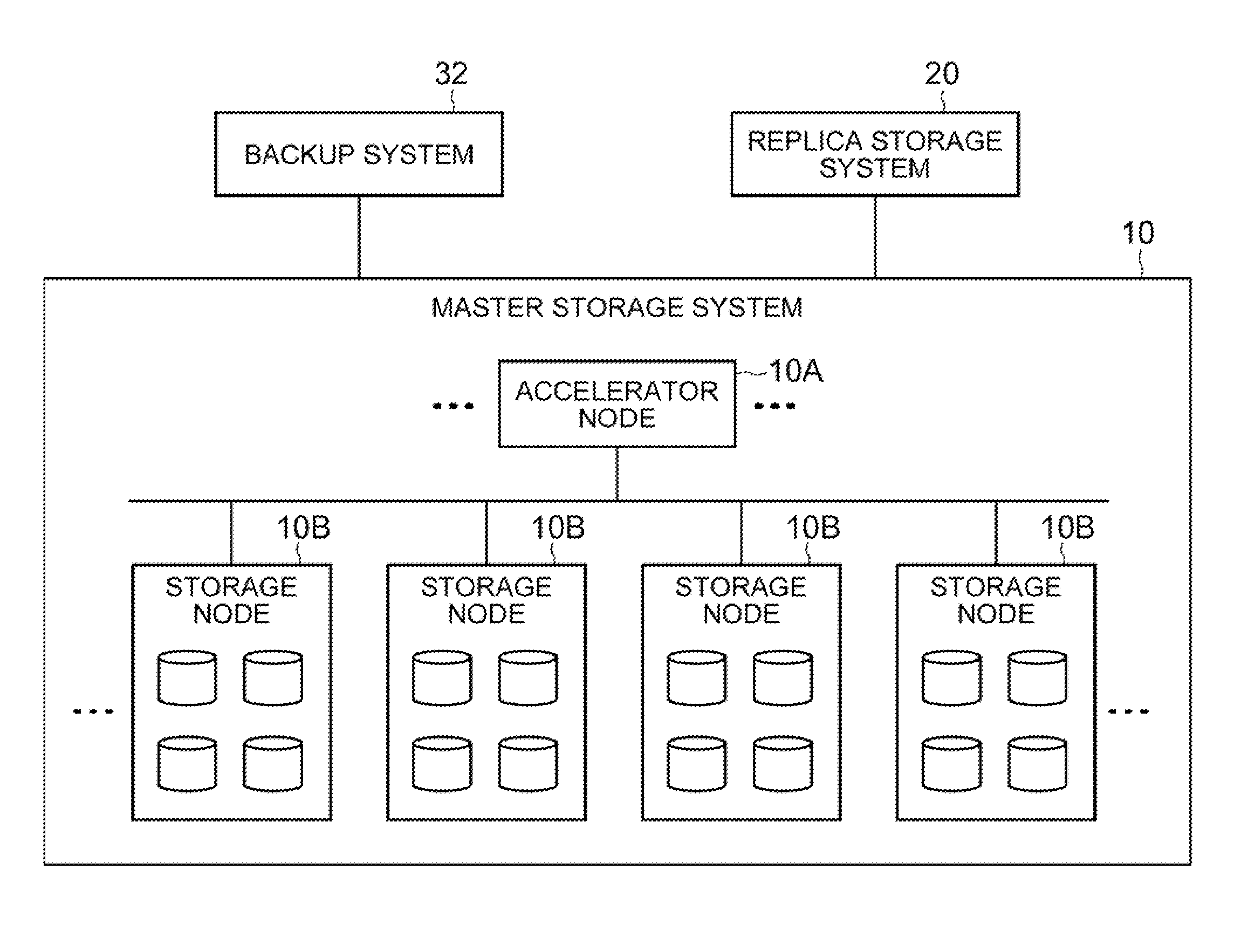

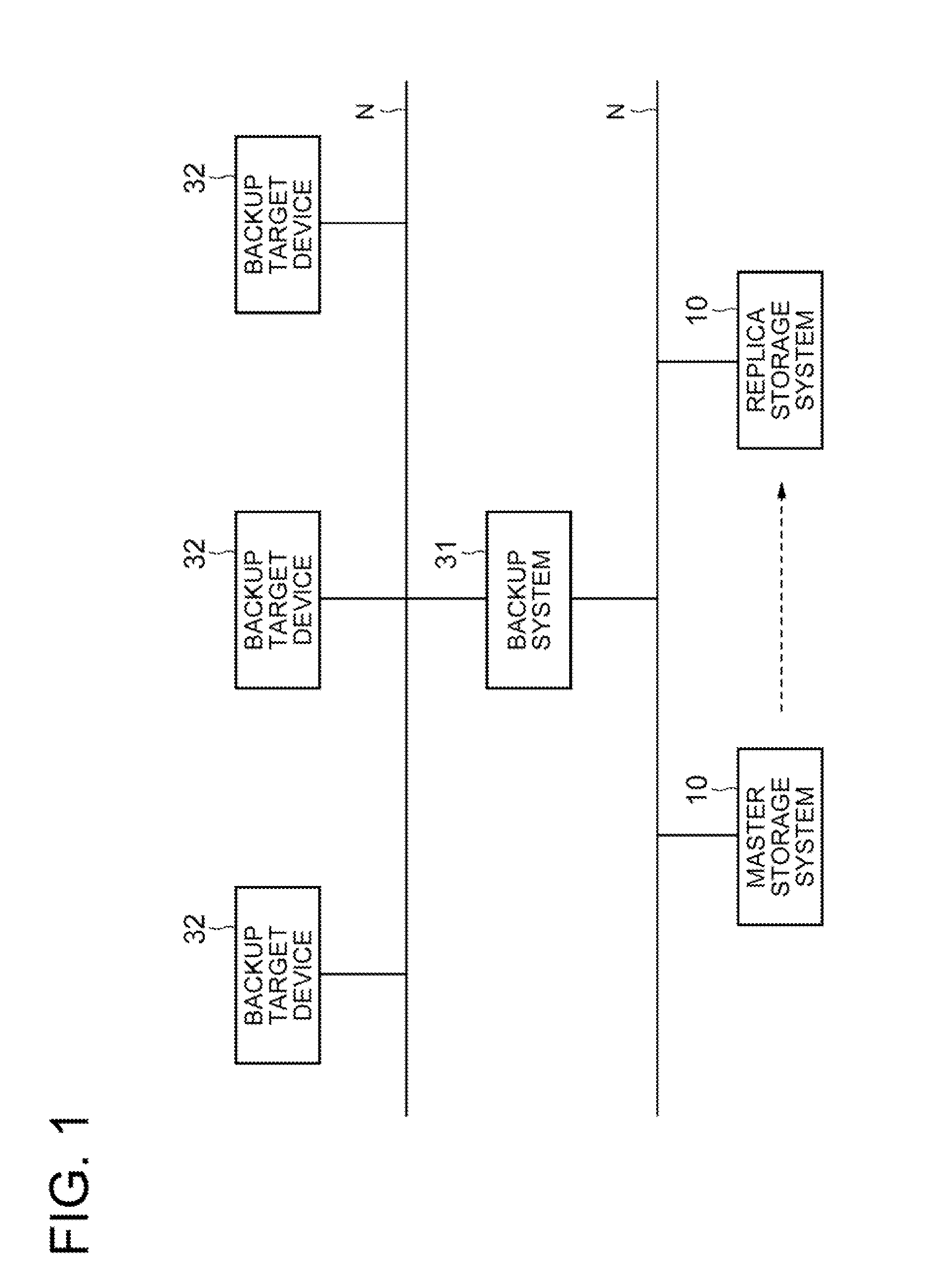

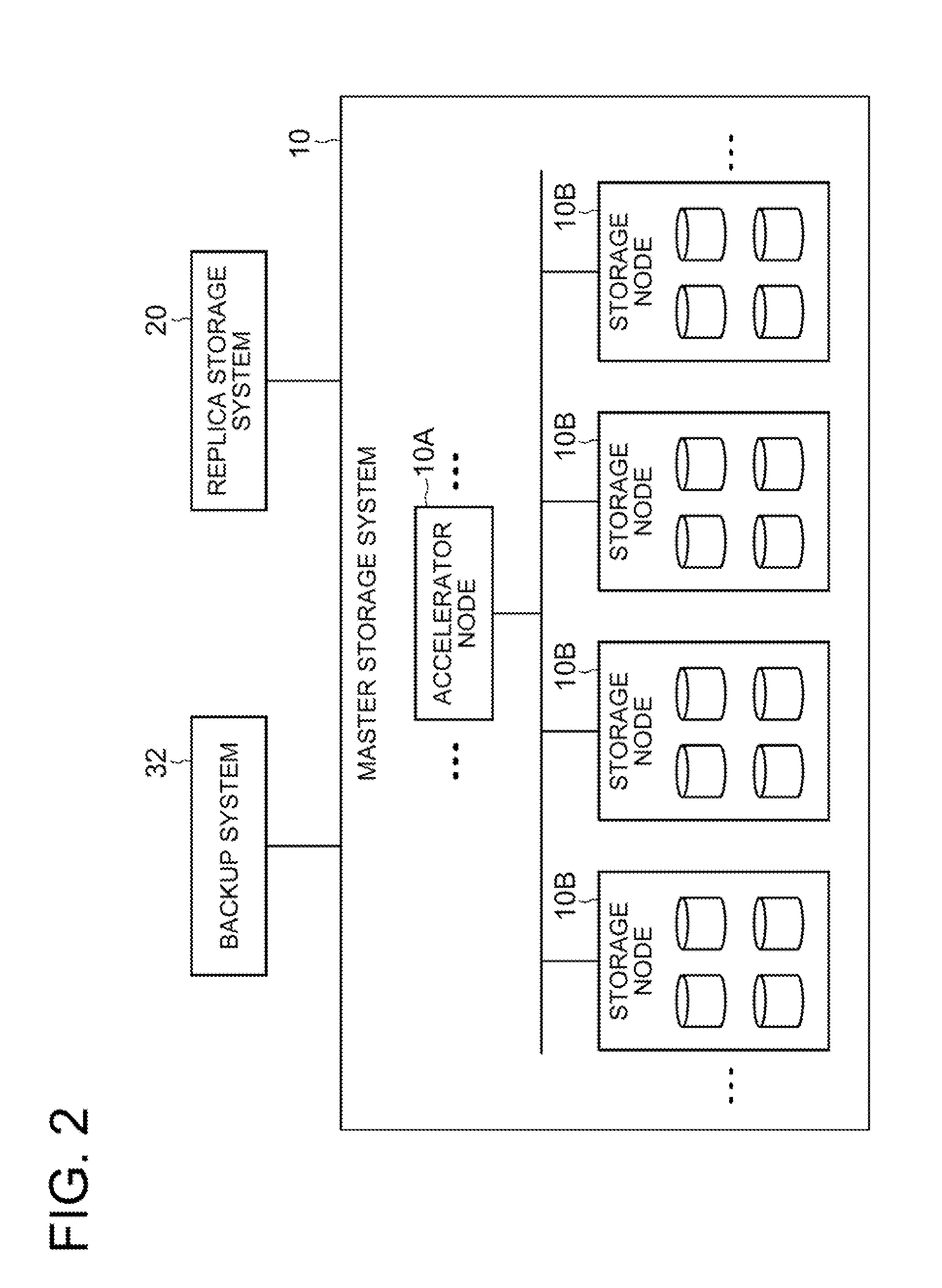

Storage system

Inactive | US20110313971A1 | Inhibits decrease of performance of system, Reduce system performance, Digital data processing details, Special data processing applications, File system, Data storing

A system includes: a copy processing means configured to copy a copy source file system, which includes storage data and key data that refers to the storage data and is unique to that data, from a copy source storage system storing the copy source file system into a copy destination storage system, thereby forming a copy destination file system; and an update data specifying means configured to compare the key data in the copy source file system with the key data in the copy destination file system and to specify, as update data, the storage data in the copy source file system that is referred to by the copy source key data but does not exist in the copy destination file system. The copy processing means is configured to copy the update data stored in the copy source file system into the copy destination file system.

Owner: NEC CORP +1
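Because the key data is unique to the stored data, finding the update data reduces to a set difference on keys. The sketch below models each file system as a dictionary from content key to data and uses SHA-256 as the content key; both are assumptions, since the abstract does not say how the keys are derived.

```python
import hashlib


def content_key(data):
    """Key that is unique to the stored data (SHA-256 chosen as an assumption)."""
    return hashlib.sha256(data).hexdigest()


def find_update_data(source, destination):
    """Storage data referenced by source keys that the destination does not hold."""
    return {key: data for key, data in source.items() if key not in destination}


def incremental_copy(source, destination):
    """Copy only the update data; returns how many items were transferred."""
    updates = find_update_data(source, destination)
    destination.update(updates)
    return len(updates)
```

After a first full copy, later runs transfer only data whose keys the destination lacks, so repeating the copy with no changes transfers nothing.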

Diversity receiver for mitigating the effects of fiber dispersion by separate detection of the two transmitted sidebands

Inactive | US6959154B1 | Reduce the impact, Improve spectral efficiency, Transmission monitoring, Distortion/dispersion elimination, Fiber chromatic dispersion, Engineering

Owner: AMERICAN TELEPHONE & TELEGRAPH CO

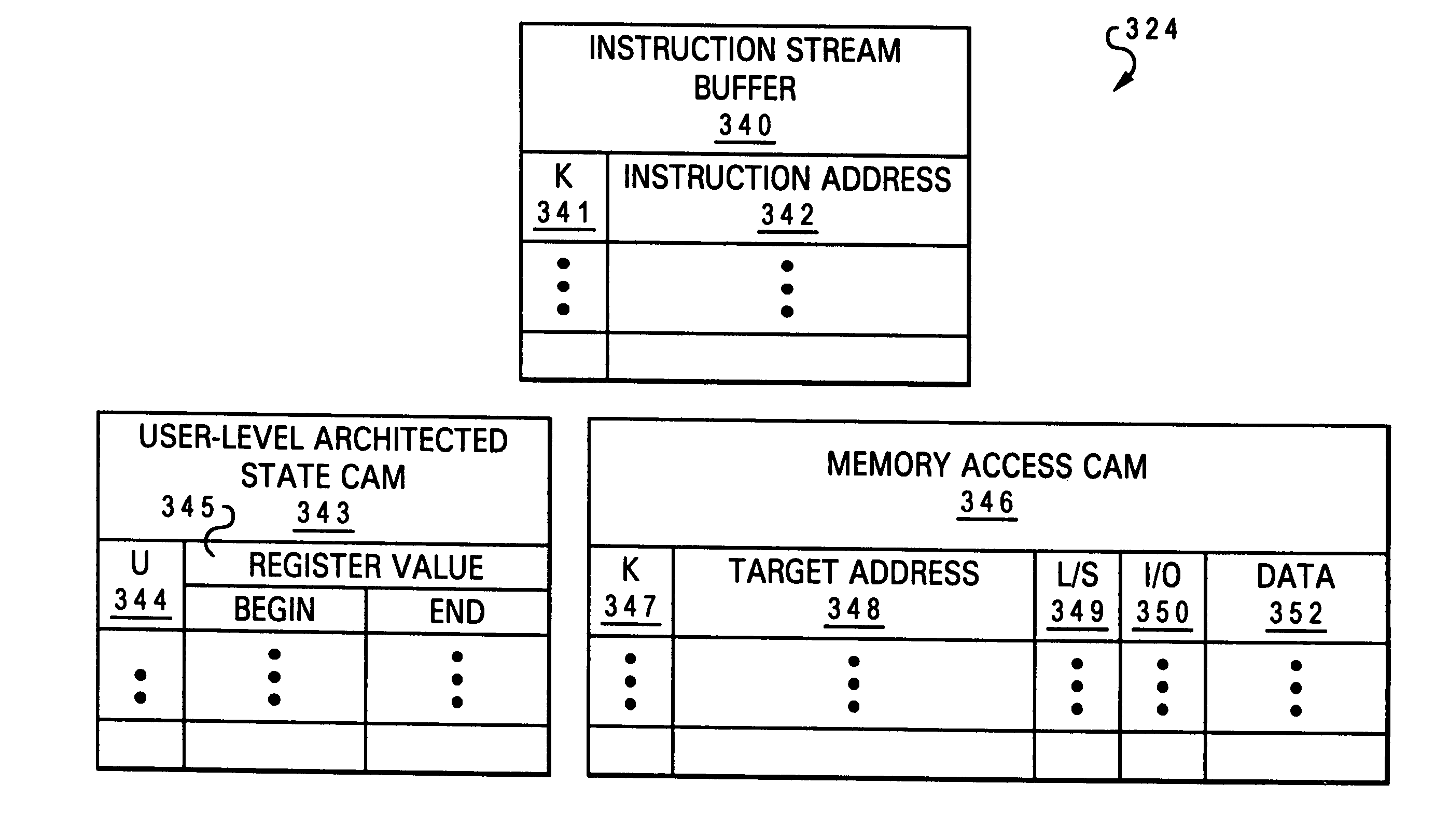

Acceleration of input/output (I/O) communication through improved address translation

Inactive | US6976148B2 | Improve latency, Reduce system performance, Memory addressing/allocation/relocation, Micro-instruction address formation, Computer science, Address space

An I/O communication adapter receives from a processor core an I/O command referencing an effective address within an effective address space of the processor core that identifies a storage location. In response to receipt of the I/O command, the I/O communication adapter translates the effective address into a real address by reference to a translation data structure. The I/O communication adapter then accesses the storage location utilizing the real address to perform an I/O data transfer specified by the I/O command.

Owner: INT BUSINESS MASCH CORP
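The translation step performed by the adapter can be sketched as a page-table lookup: split the effective address into a page number and an offset, map the page number to a real page, and keep the offset. The 4 KiB page size, the flat dictionary standing in for the translation data structure, and the exception name are assumptions.

```python
PAGE_SIZE = 4096  # assumed page size


class TranslationFault(Exception):
    """Raised when an effective page has no entry in the translation table."""


def effective_to_real(effective_addr, translation_table):
    """Translate an effective address before performing the I/O data transfer.

    translation_table maps effective page numbers to real page numbers; the
    byte offset within the page is carried over unchanged.
    """
    page, offset = divmod(effective_addr, PAGE_SIZE)
    try:
        real_page = translation_table[page]
    except KeyError:
        raise TranslationFault(hex(effective_addr)) from None
    return real_page * PAGE_SIZE + offset
```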

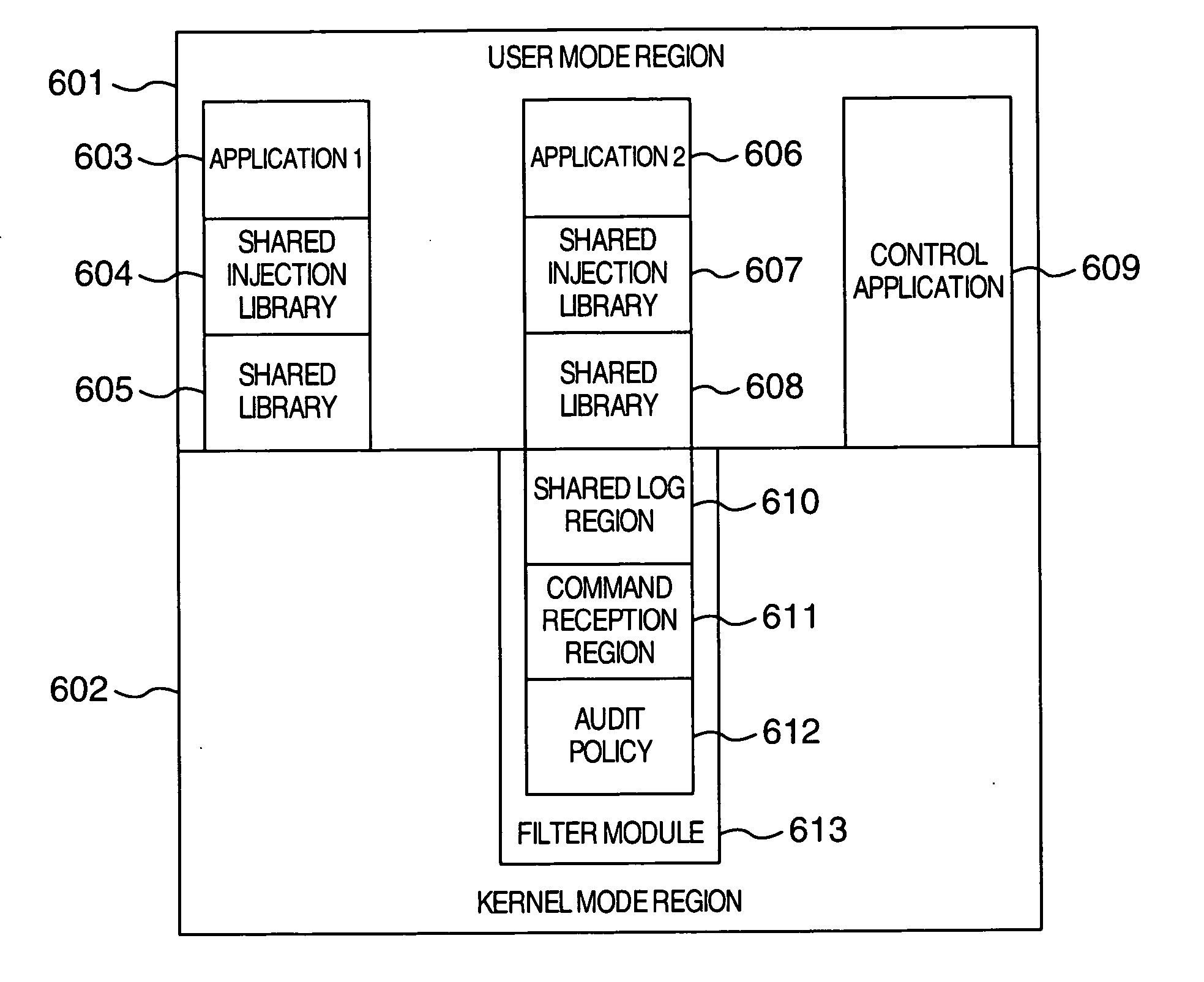

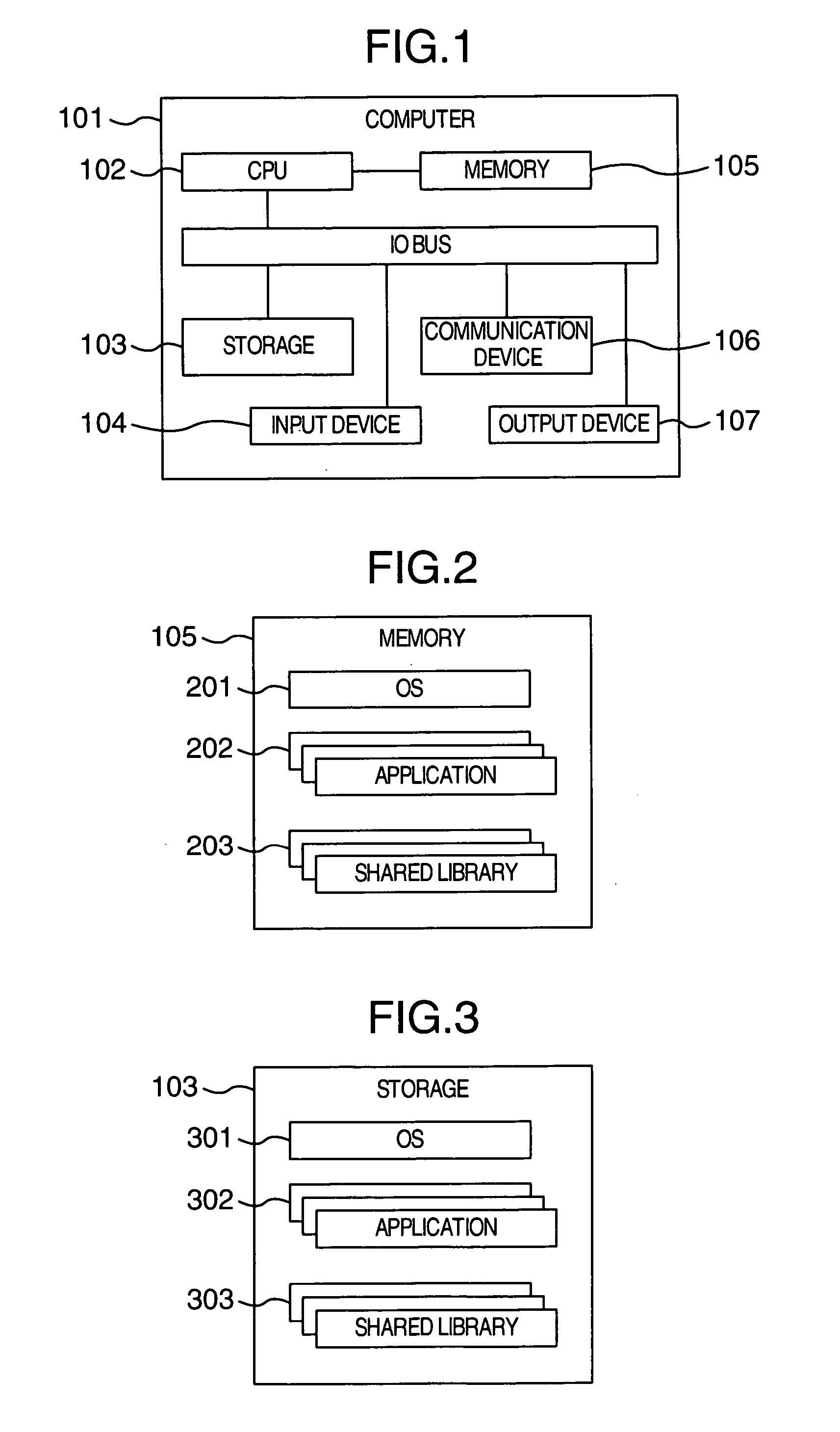

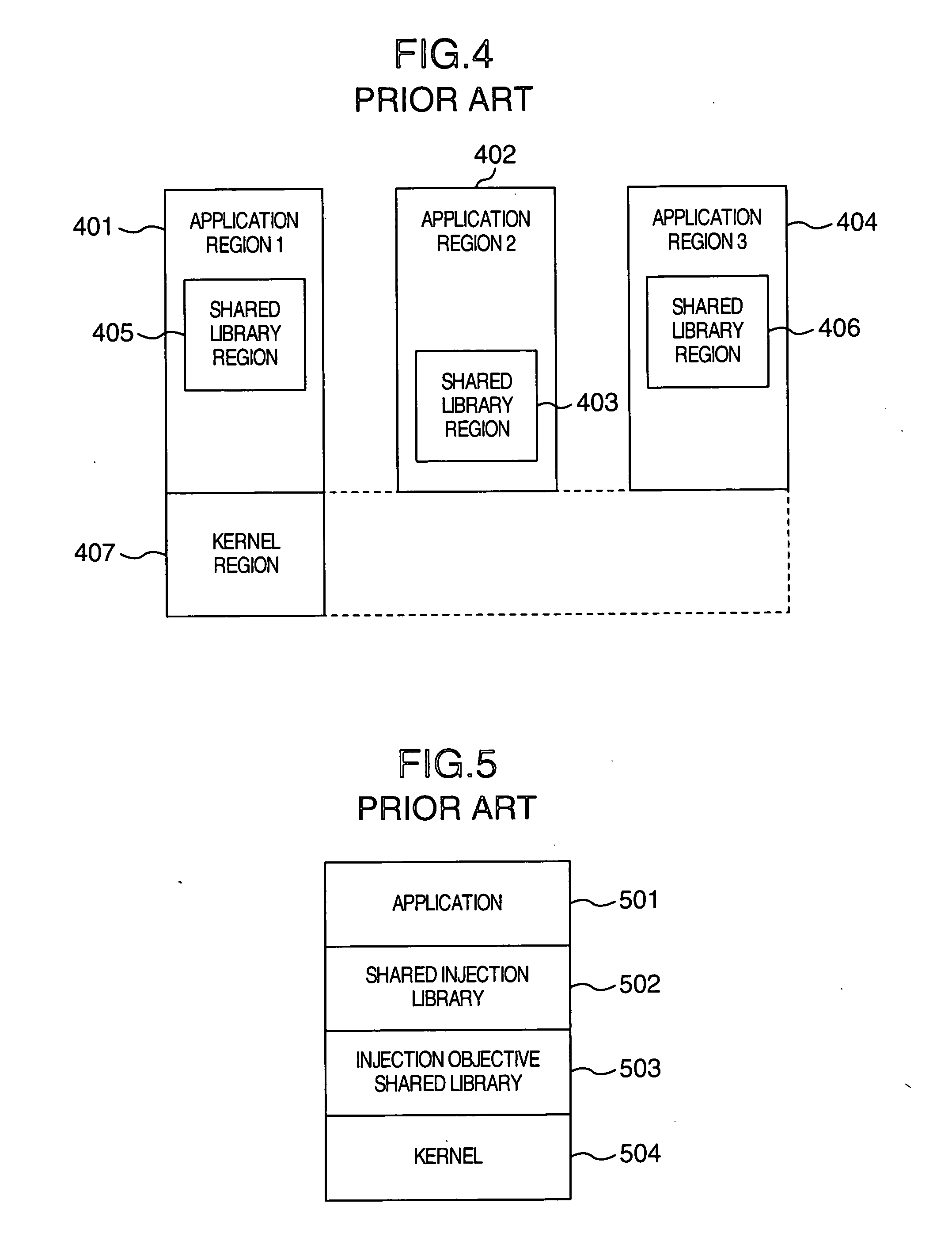

Method of calling an export function stored in a shared library

Inactive | US20050108721A1 | Reduce system performance, Low reliability, Error detection/correction, Multiprogramming arrangements, Library science

A method for efficiently auditing a call to an export function in a shared library from an application program executed by a computer and the computer executing the method are provided. The call method hooks a shared library call by using an injection shared library for hooking the export function in the shared library, records shared library call information and audits the shared library call. It is therefore possible to filter a shared library call without modifying the shared library and to generate consistent records at high speed.

Owner: HITACHI OMRON TERMINAL SOLUTIONS CORP
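The hook-record-filter pattern in this abstract can be illustrated with a Python analogue. The patent hooks export functions at the shared-library level through an injection library; this sketch instead replaces a module attribute with a wrapper that records each call, applies an optional allow filter, and then delegates to the original function. The target module is left unmodified apart from the attribute swap. `install_audit_hook` and its parameters are hypothetical.

```python
import functools
import time


def install_audit_hook(namespace, name, audit_log, allow=lambda args, kwargs: True):
    """Replace namespace.name with a wrapper that records and filters each call.

    Returns the original function so the caller can restore it.
    """
    original = getattr(namespace, name)

    @functools.wraps(original)
    def hooked(*args, **kwargs):
        allowed = allow(args, kwargs)
        audit_log.append({"function": name, "args": args,
                          "allowed": allowed, "time": time.time()})
        if not allowed:
            raise PermissionError(f"call to {name} blocked by audit filter")
        return original(*args, **kwargs)

    setattr(namespace, name, hooked)
    return original
```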

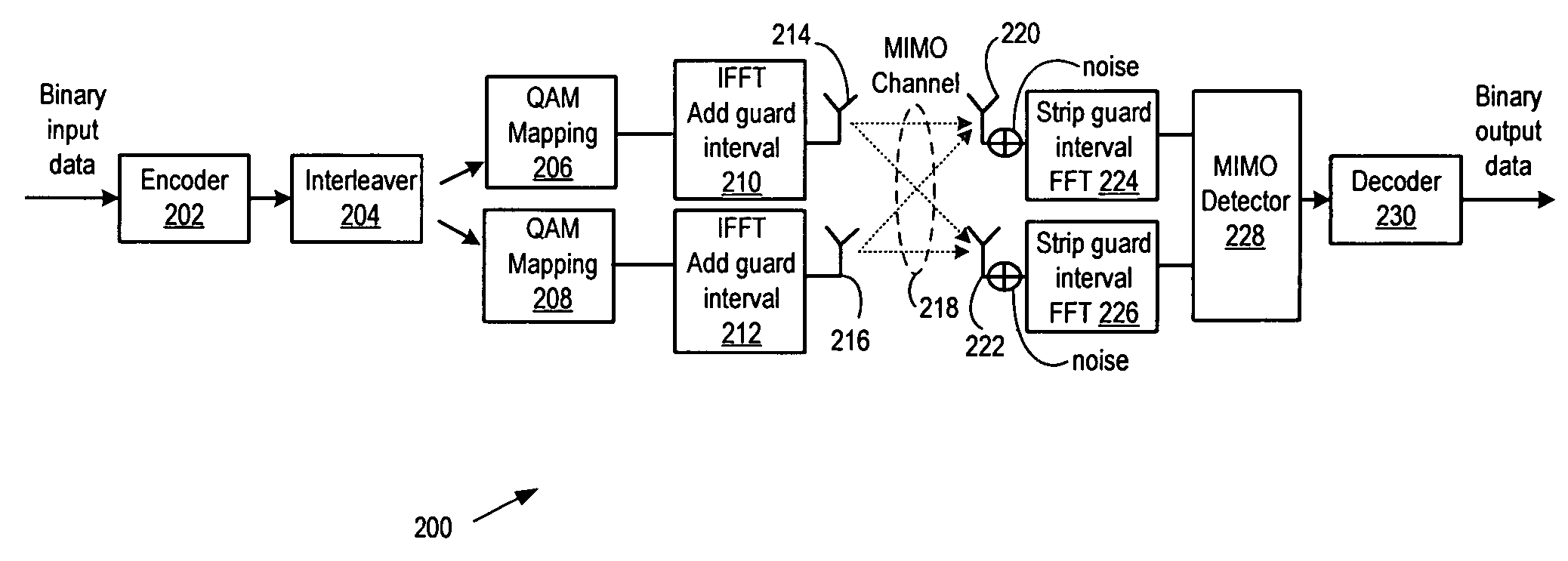

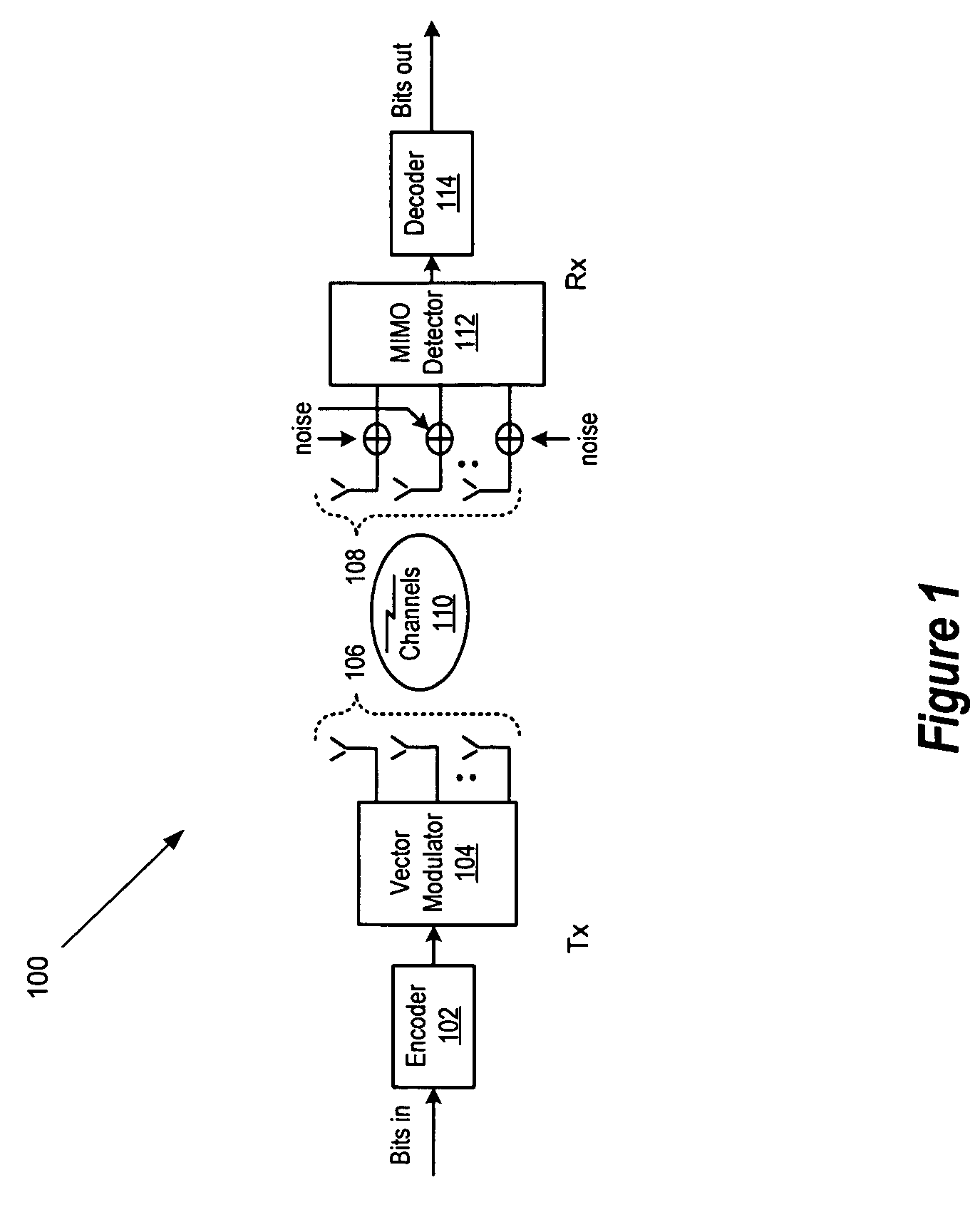

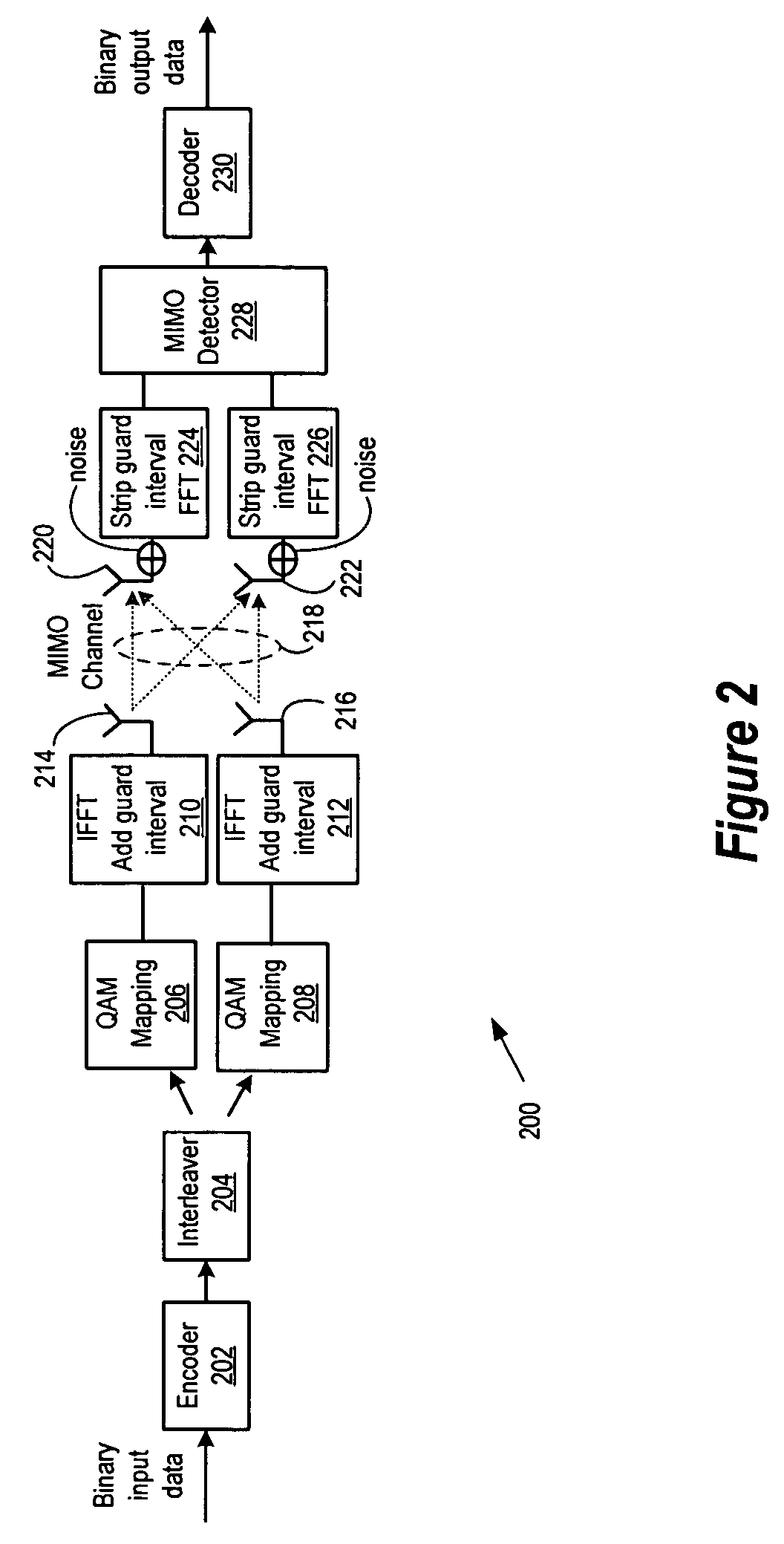

Reduced complexity detector for multiple-antenna systems

Inactive | US20060146950A1 | Fast processing, Reduce system performance, Multiple-port networks, Data representation error detection/correction, Communications system, Round complexity

A reduced-complexity maximum-likelihood detector that provides a high degree of signal detection accuracy while maintaining high processing speeds. A communication system implementing the present invention comprises a plurality of transmit sources operable to transmit a plurality of symbols over a plurality of channels, and a detector operable to receive symbols corresponding to the transmitted symbols. The detector processes the received symbols to obtain initial estimates of the transmitted symbols and then uses the initial estimates to generate a plurality of reduced search sets. The reduced search sets are then used to generate decisions for detecting the transmitted symbols. In various embodiments of the invention, the decisions for detecting the symbols can be hard decisions or soft decisions. Furthermore, in various embodiments of the invention, the initial estimates can be obtained using linear equalization techniques such as zero-forcing equalization and minimum-mean-squared-error equalization. The initial estimates can also be obtained by nulling and canceling techniques. In various embodiments of the invention, the data output corresponding to the transmitted symbols can be obtained using a log-likelihood ratio. The method and apparatus of the present invention can be applied to any communication system with multiple transmit streams.

Owner: AVAGO TECH WIRELESS IP SINGAPORE PTE
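The detection flow in this abstract (initial estimates from linear equalization, reduced search sets around them, then a maximum-likelihood search over only those sets) can be sketched for a 2x2 system. Assumptions: QPSK symbols, zero-forcing for the initial estimates (the MMSE and nulling-and-canceling alternatives are not shown), the `k` nearest constellation points per stream as the reduced set, and hard decisions only.

```python
import itertools

QPSK = [complex(i, q) for i in (-1, 1) for q in (-1, 1)]


def zero_forcing(H, y):
    """Initial estimates x = H^-1 y for a 2x2 channel matrix."""
    (a, b), (c, d) = H
    det = a * d - b * c
    return [(d * y[0] - b * y[1]) / det, (-c * y[0] + a * y[1]) / det]


def reduced_set(estimate, constellation, k):
    """The k constellation points closest to an initial estimate."""
    return sorted(constellation, key=lambda s: abs(s - estimate))[:k]


def reduced_ml_detect(H, y, constellation=QPSK, k=2):
    """Hard-decision ML search restricted to the reduced candidate sets."""
    candidates = [reduced_set(e, constellation, k) for e in zero_forcing(H, y)]

    def distance(x):
        # ||y - Hx||^2, the maximum-likelihood metric under Gaussian noise
        return sum(abs(y[r] - H[r][0] * x[0] - H[r][1] * x[1]) ** 2 for r in range(2))

    return list(min(itertools.product(*candidates), key=distance))
```

With `k=2` the search evaluates 2 × 2 = 4 candidate vectors instead of the 4 × 4 = 16 of a full QPSK search, which is the complexity saving the reduced sets provide.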

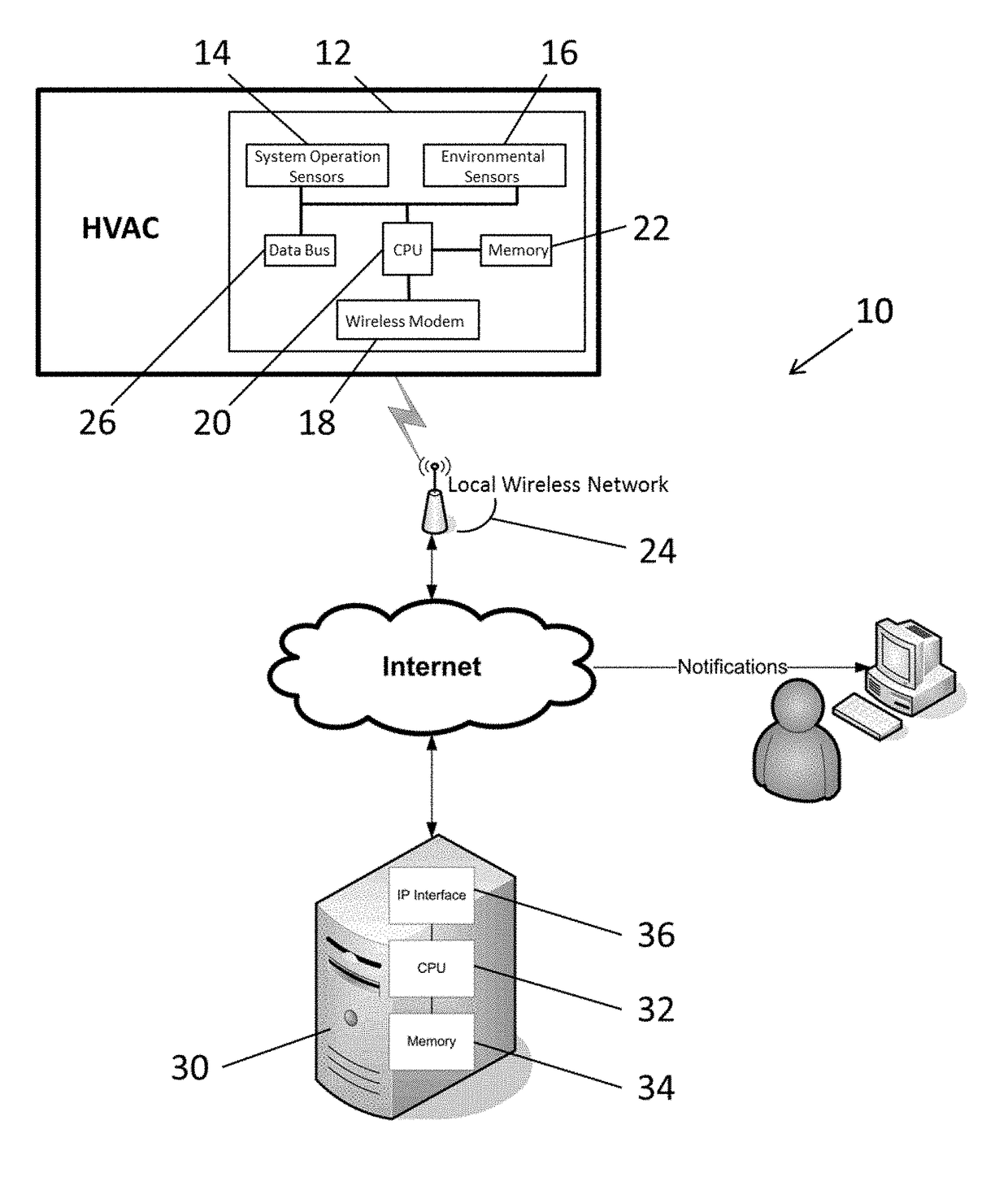

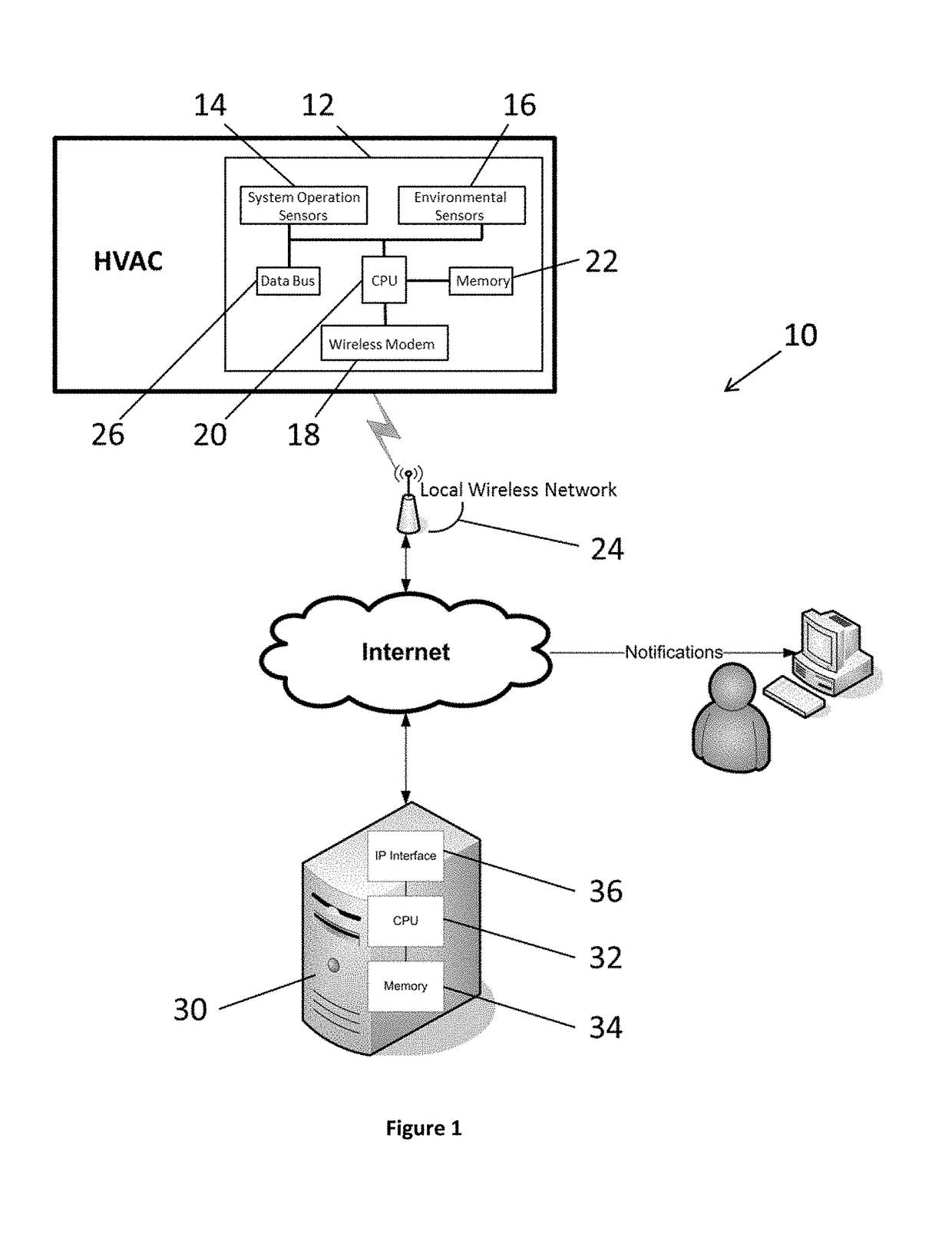

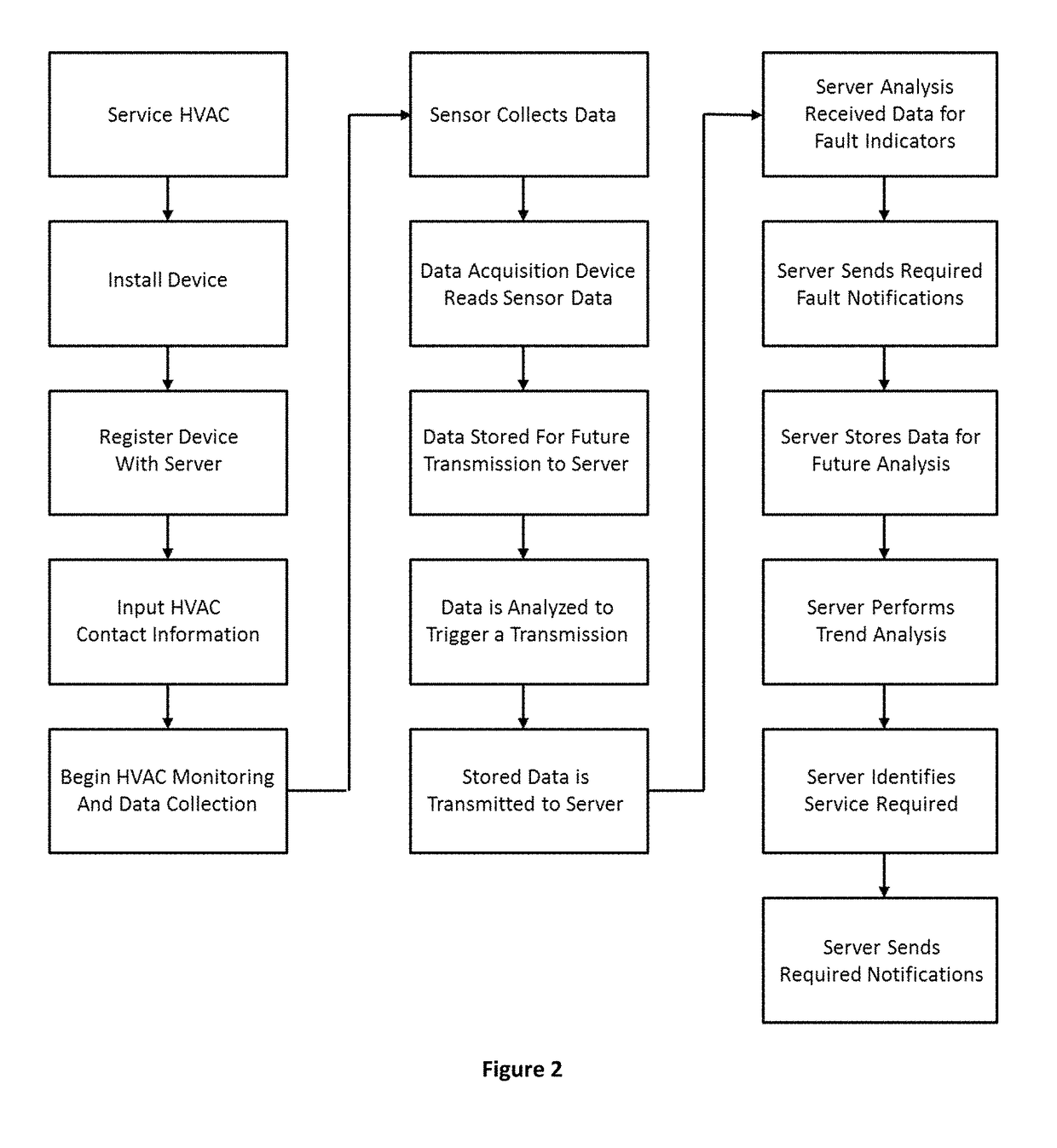

HVAC condition based maintenance system and method

Inactive | US9696056B1 | Easy and efficient to manufacture, Durable and reliable construction, Programme control, Mechanical apparatus, Multi unit, Data transmission

The HVAC condition-based maintenance system and method reduce operating costs by replacing existing time-based scheduled maintenance with on-condition maintenance: condition data is continuously monitored and acquired from an operating HVAC system and transmitted to a remote server for storage, analysis, and trending; reductions in operational performance are recognized by comparing current trends with historical data; and notifications of failures and corrective actions are triggered based on recognition of routine, impending, and immediate problems. Additionally, the acquired data is used to derive the actual heat load characteristics of the building; to identify HVAC system deficiencies, building deficiencies, installation issues, and load imbalance in multi-unit systems; and to provide direction for system improvements.

Owner: SYST PROWORKS INC
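The comparison of current trends with historical data can be sketched as a drift test on one monitored metric: measure how many historical standard deviations the recent mean has moved, then map that to the routine, impending, and immediate levels named in the abstract. The z-score approach and the 2σ/4σ thresholds are assumptions for illustration; the abstract does not say how degradation is recognized.

```python
from statistics import mean, stdev


def classify_condition(history, recent, impending_sigma=2.0, immediate_sigma=4.0):
    """Compare recent readings of one HVAC metric against its historical trend.

    Returns "routine", "impending" or "immediate" depending on how many
    historical standard deviations the recent mean has drifted (thresholds
    are hypothetical). history needs at least two readings.
    """
    baseline, spread = mean(history), stdev(history)
    if spread == 0:
        return "routine" if mean(recent) == baseline else "immediate"
    z = abs(mean(recent) - baseline) / spread
    if z >= immediate_sigma:
        return "immediate"
    if z >= impending_sigma:
        return "impending"
    return "routine"
```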

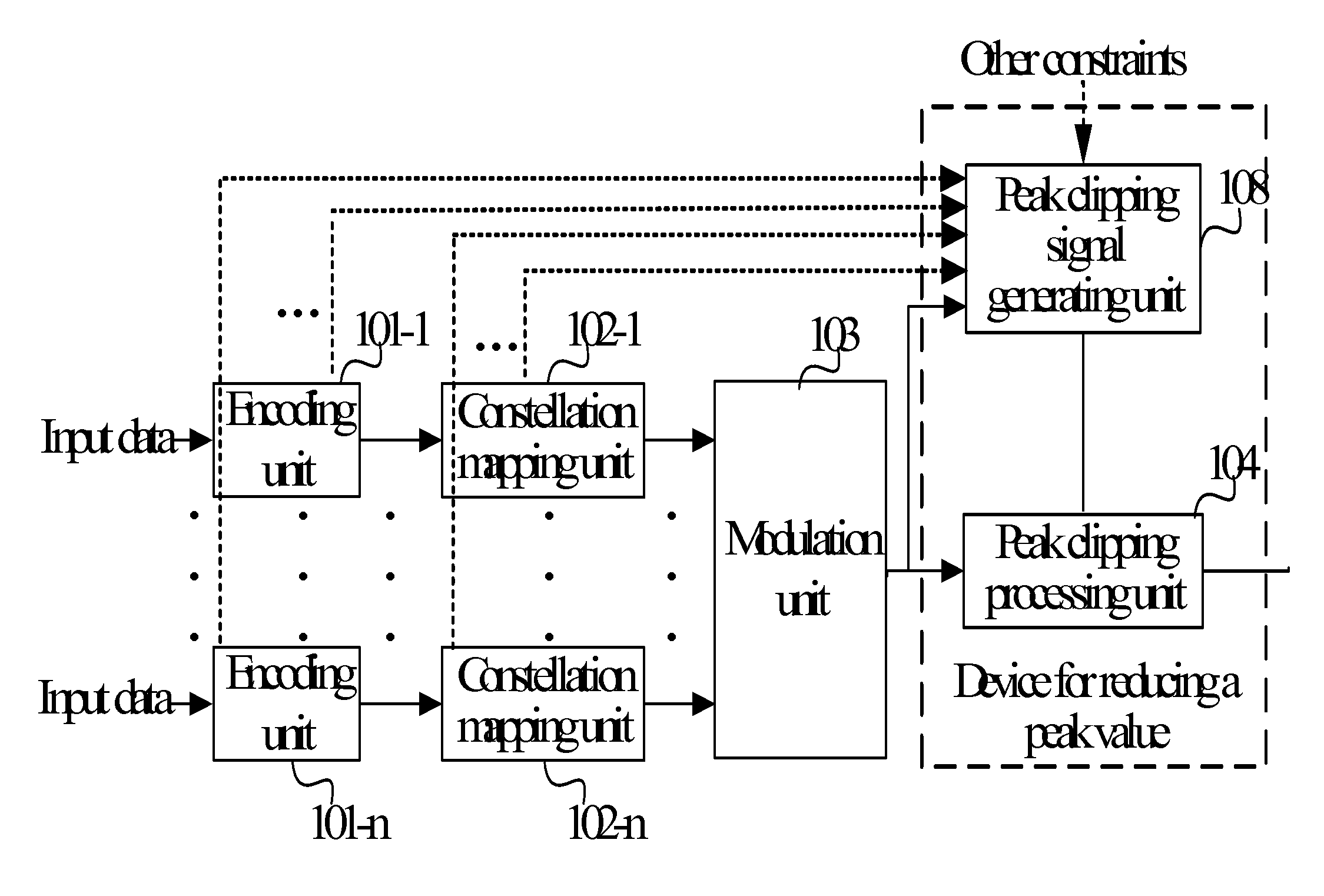

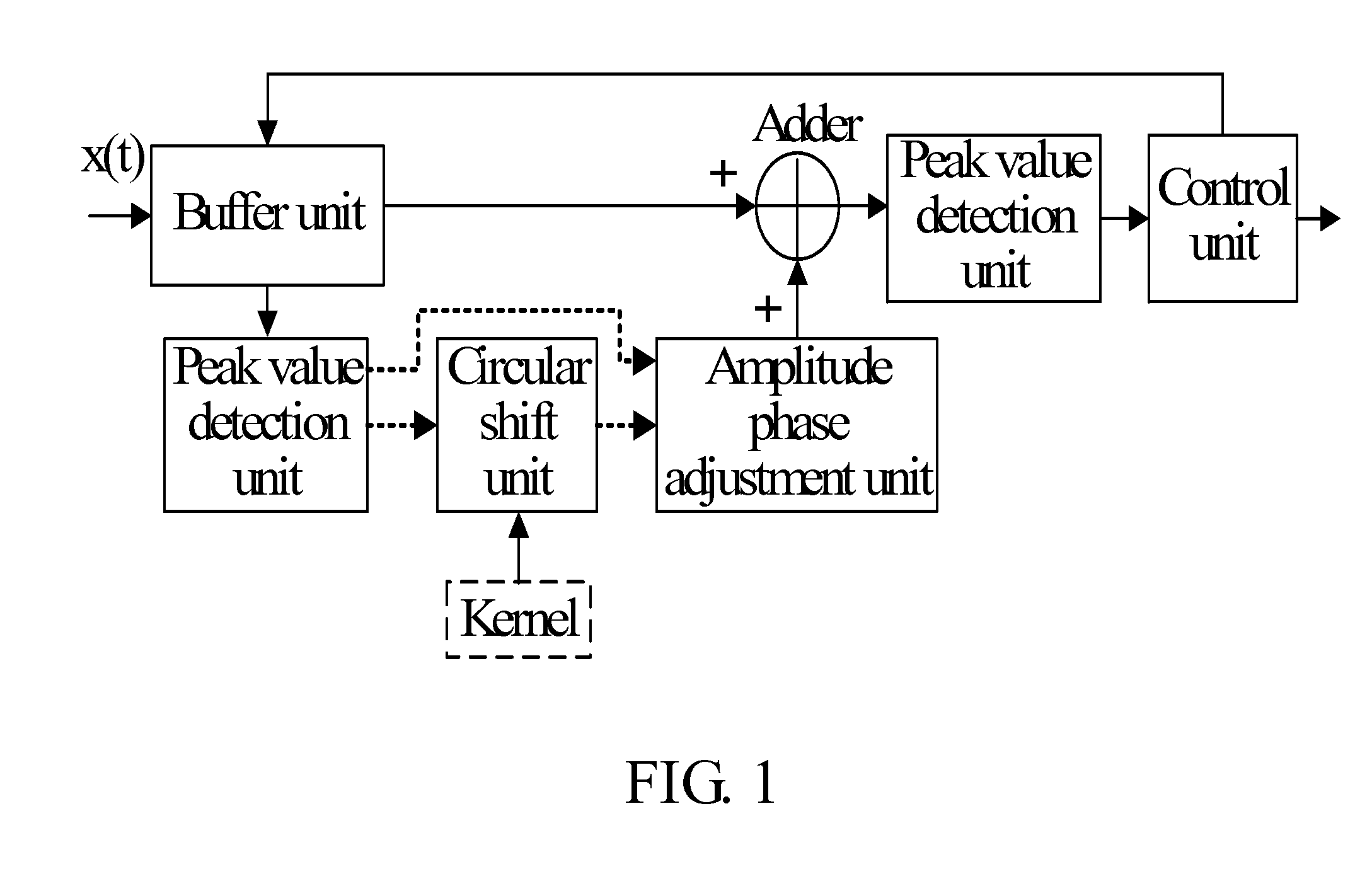

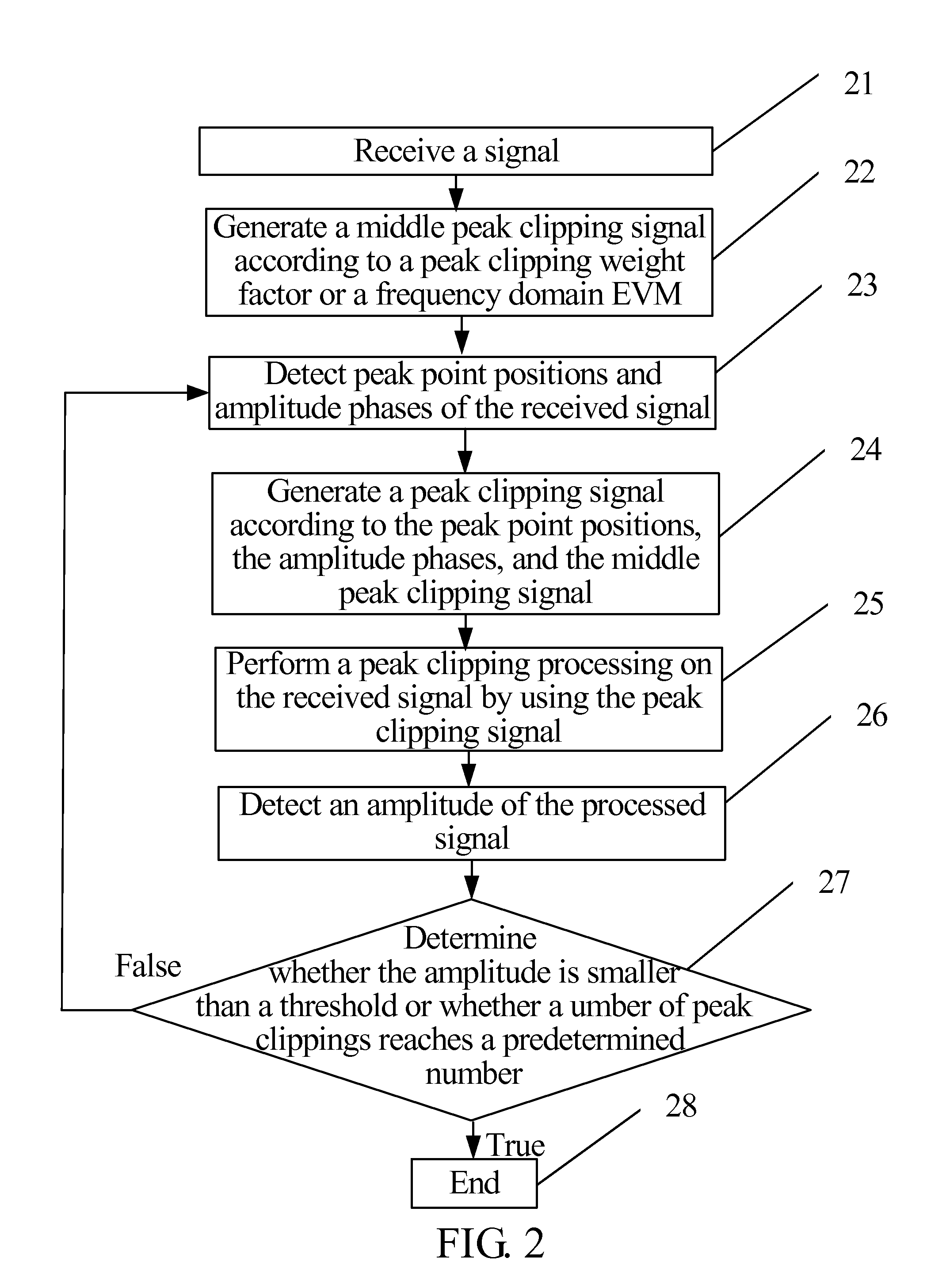

Method and device for reducing signal peak value and transmitting device

Inactive | US20100020895A1 | Improve system performance, Improve anti-interference ability, Modulated-carrier systems, Pulse demodulator, Carrier signal, Error vector magnitude

A method and a device for reducing a signal peak value address a problem in which the overall performance of a system is significantly degraded because the same weight is allocated to every sub-carrier, so that the peak clipping noise is distributed evenly across all sub-carriers. The method includes: receiving a signal (21); and performing peak clipping on the received signal by using a peak clipping signal (25). The peak clipping signal is formed according to a peak clipping weight factor and the received signal, or according to a frequency-domain error vector magnitude (EVM) and the received signal. During peak clipping, the weight of each sub-carrier is set according to the peak clipping weight factor or the frequency-domain EVM, thereby improving the overall performance of the system.

Owner: HUAWEI TECH CO LTD
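The per-sub-carrier weighting can be sketched as follows: compute the clipping noise (the part of each sample above a magnitude threshold), shape its spectrum with one weight per sub-carrier, and subtract the shaped signal from the input. With all weights equal to 1 this reduces to ordinary hard clipping; a weight of 0 leaves that sub-carrier untouched. How the weights are derived from a weight factor or an EVM target is not given in the abstract and is not modelled here, and a single pass does not guarantee the threshold is met when some weights are below 1. A naive O(N²) DFT keeps the sketch self-contained.

```python
import cmath


def dft(x):
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(spectrum):
    n = len(spectrum)
    return [sum(spectrum[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]


def weighted_peak_clip(x, threshold, weights):
    """Subtract a peak-clipping signal whose spectrum is shaped per sub-carrier.

    weights[k] scales how much clipping noise sub-carrier k receives
    (1 = full share, 0 = none).
    """
    # Clipping noise: the part of each sample's magnitude above the threshold.
    noise = [(abs(s) - threshold) * s / abs(s) if abs(s) > threshold else 0j for s in x]
    shaped = idft([w * c for w, c in zip(weights, dft(noise))])
    return [s - n for s, n in zip(x, shaped)]
```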

© 2025 PatSnap. All rights reserved.