112 results about how to "Improve memory access efficiency" patented technology

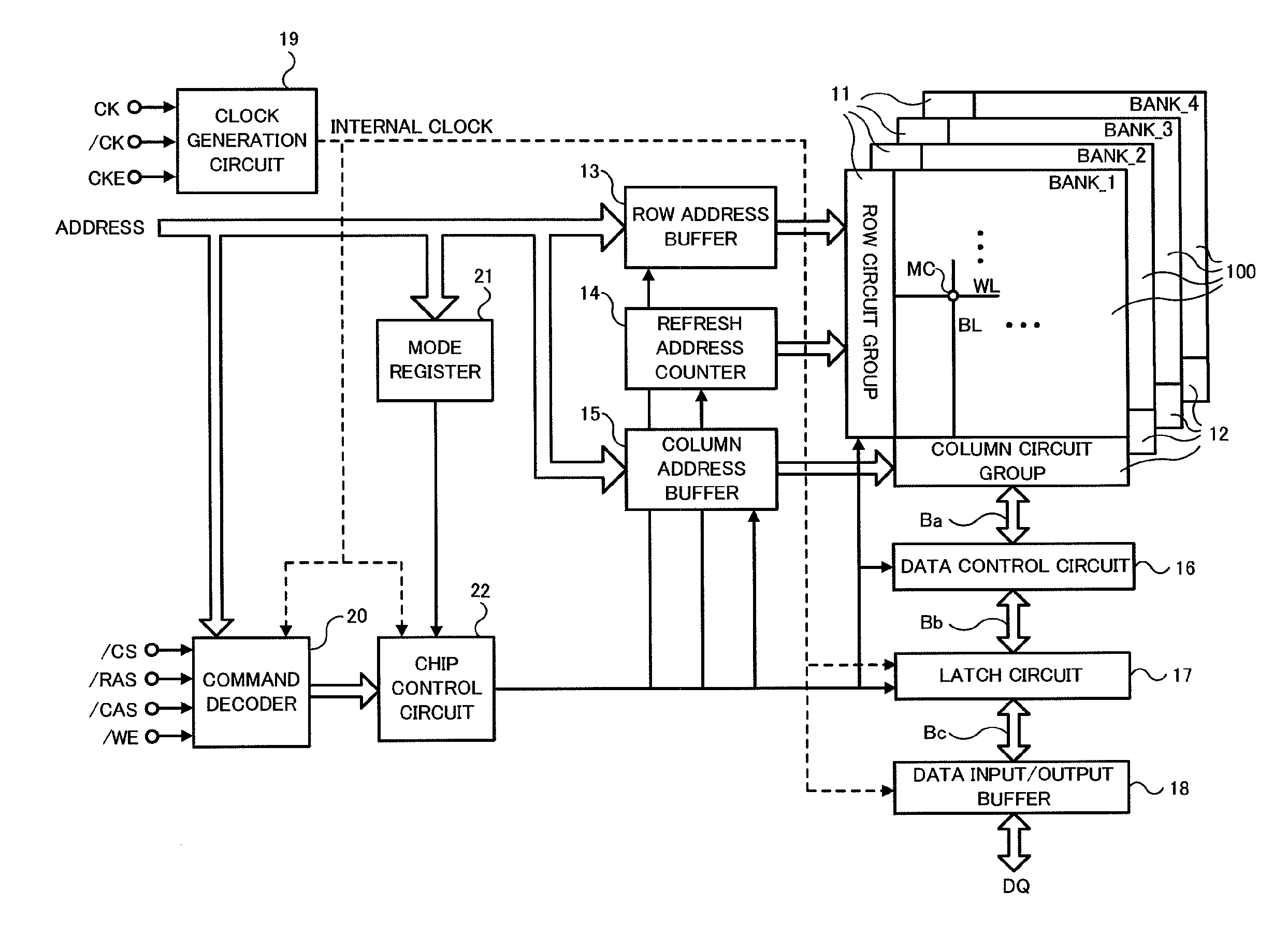

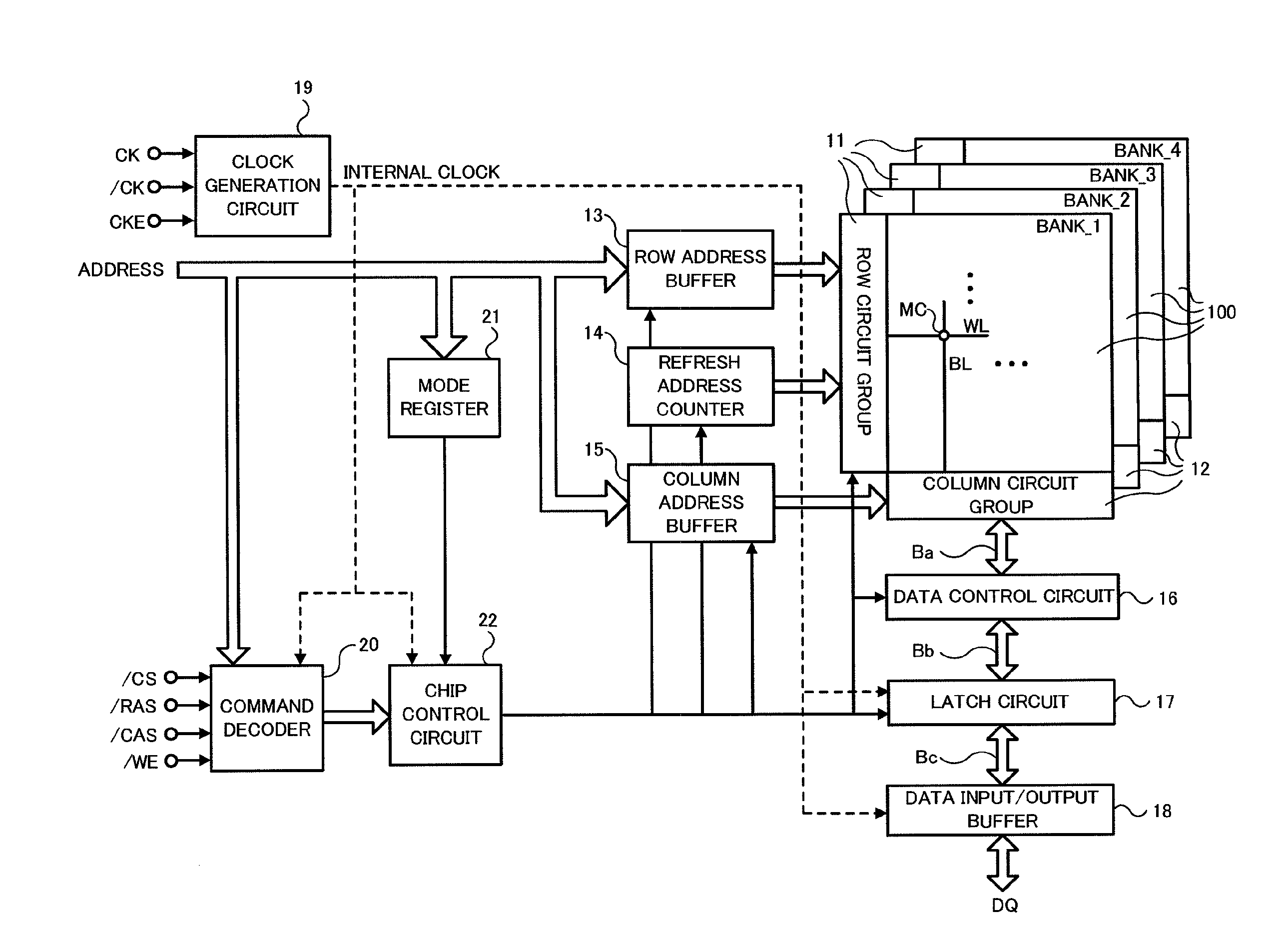

Semiconductor device, refresh control method thereof and computer system

Inactive | US20110225355A1 | Promote quick completion | Growth inhibition | Digital storage | Memory systems | Bit line | Processor register

A semiconductor device comprises a first memory cell array, a register storing information of whether or not one of the word lines in an active state exists in a unit area and storing address information, and a control circuit controlling a refresh operation for a refresh word line based on the information in the register when receiving a refresh request. When the one of the word lines in an active state does not exist, memory cells connected to the refresh word line are refreshed. When the one of the word lines in an active state exists, the one of the word lines in an active state is set into an inactive state temporarily and the memory cells connected to the refresh word line are refreshed after precharging bit lines of the memory cells.

Owner:PS4 LUXCO SARL

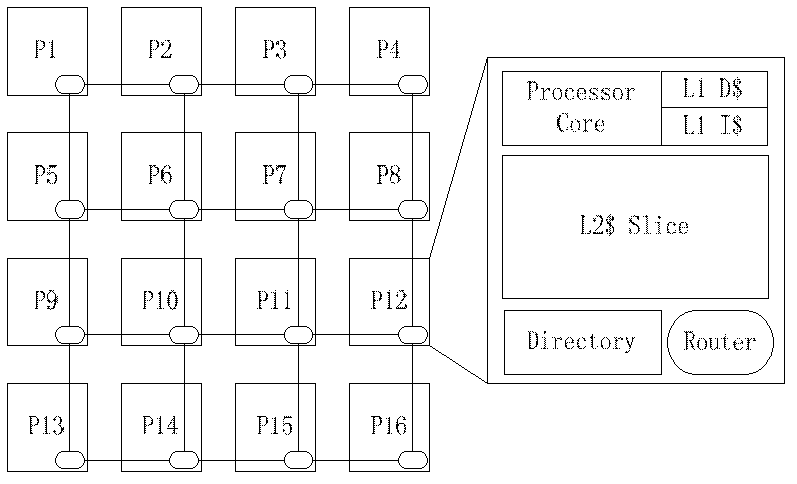

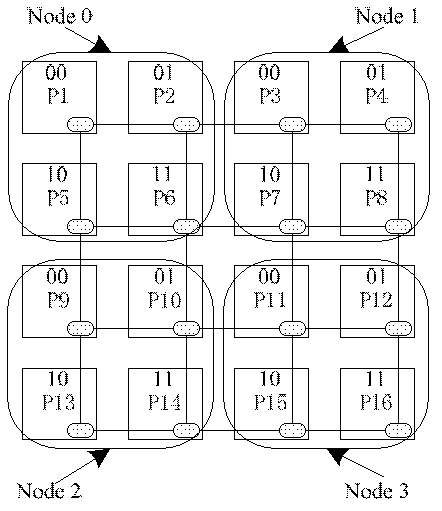

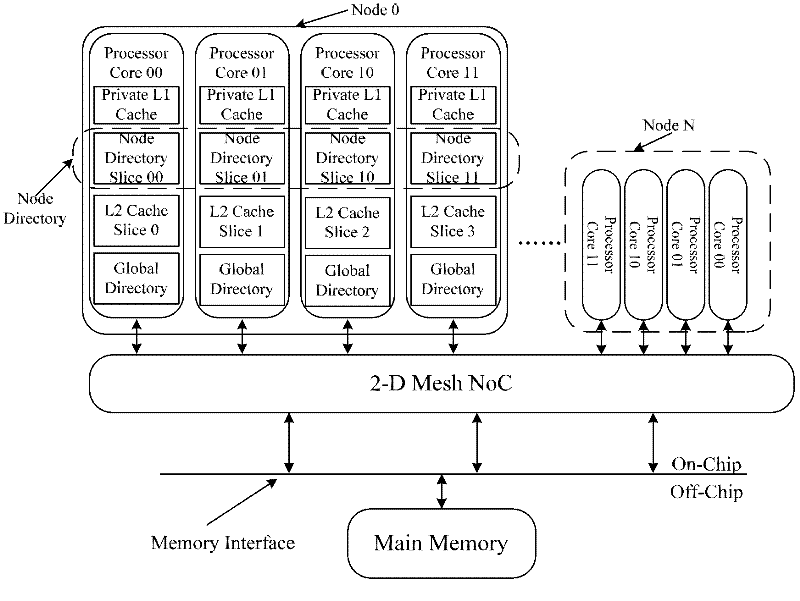

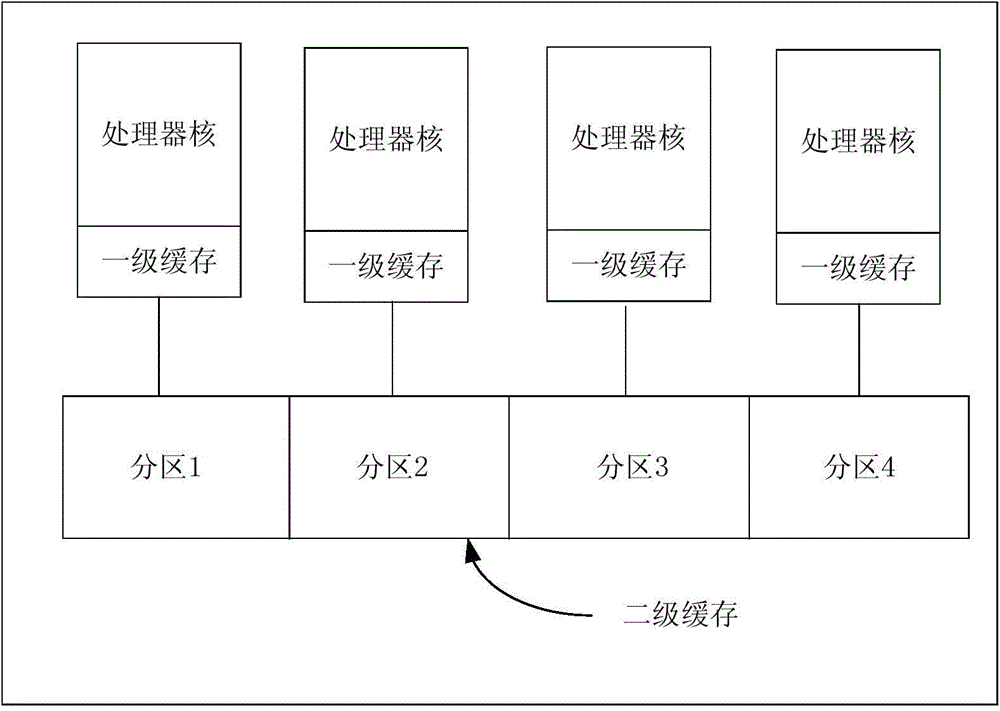

Consistency maintenance device for multi-kernel processor and consistency interaction method

Inactive | CN102346714A | Reduce storage overhead | Improve memory access efficiency | Memory addressing/allocation/relocation | Linear growth | Data access

The invention discloses a consistency maintenance device for a multi-kernel processor and a consistency interaction method, mainly solving the technical problem of large directory access delay when the Cache consistency protocol of a traditional multi-kernel processor handles read misses and write misses. According to the invention, all kernels of the multi-kernel processor are divided into a plurality of nodes in parallel relation, wherein each node comprises a plurality of kernels. When a read miss or a write miss occurs, the node holding a valid data copy closest to the kernel that incurred the miss is predicted and accessed directly according to a node prediction Cache, and the directory update step is deferred until the data access is finished, so that directory access delay is completely hidden and the access efficiency is increased; a double-layer directory structure converts the growth of directory storage overhead from exponential to linear, so that better scalability is achieved; and because prediction is coarse-grained, with the node as the unit, the storage overhead for prediction information is lower than for fine-grained prediction in which the kernel is the unit.

Owner:XI AN JIAOTONG UNIV

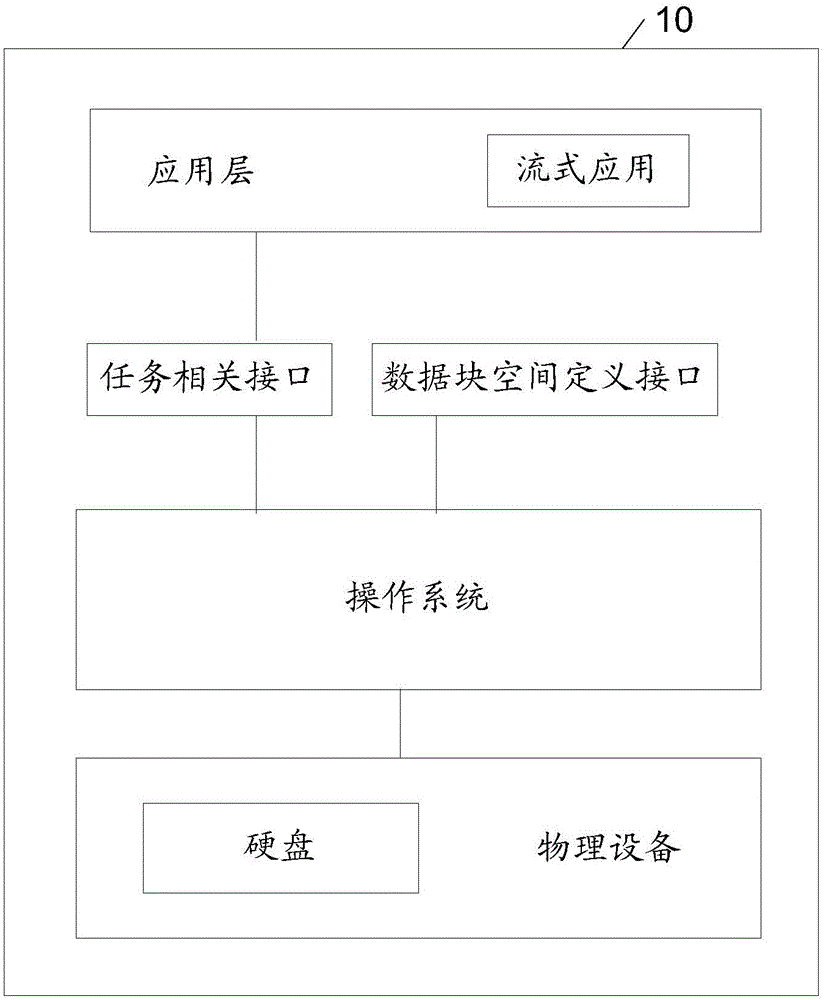

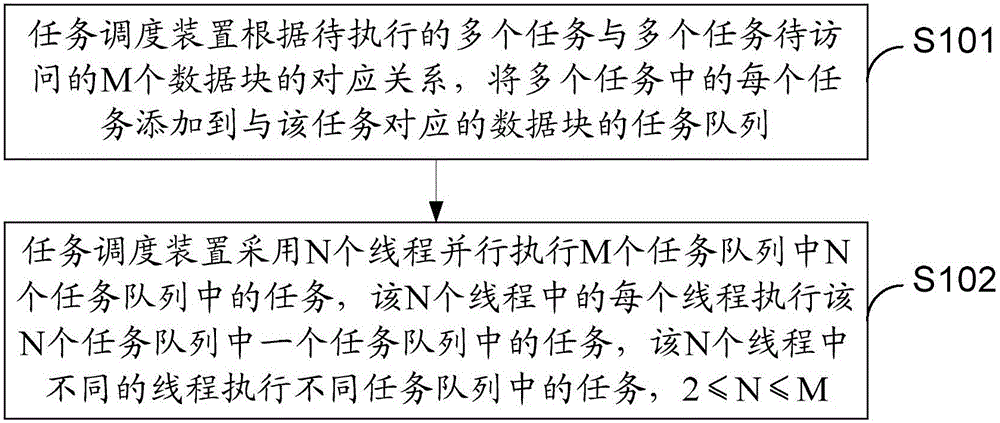

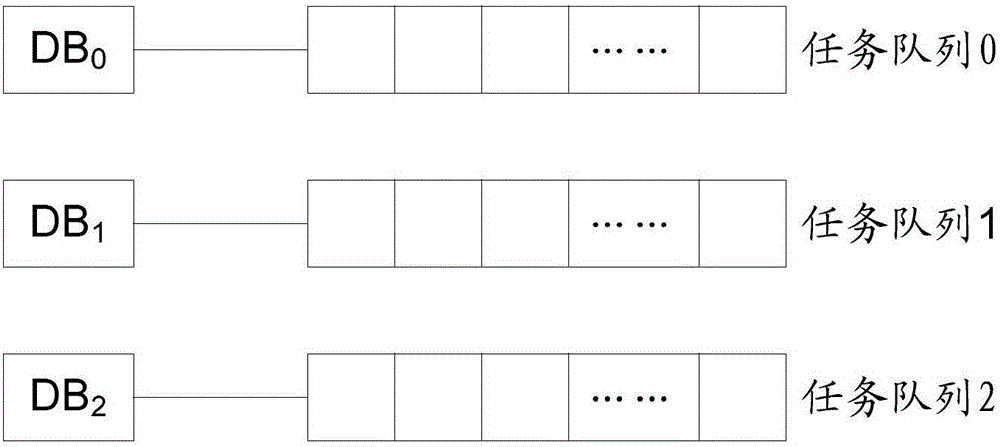

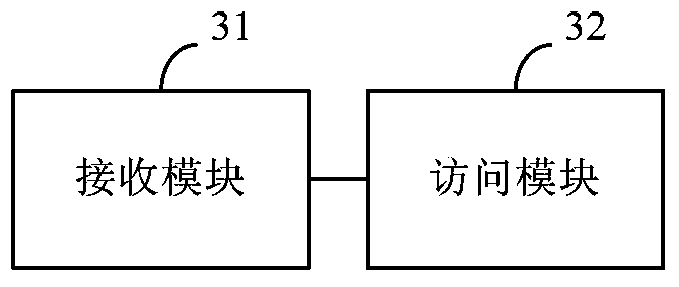

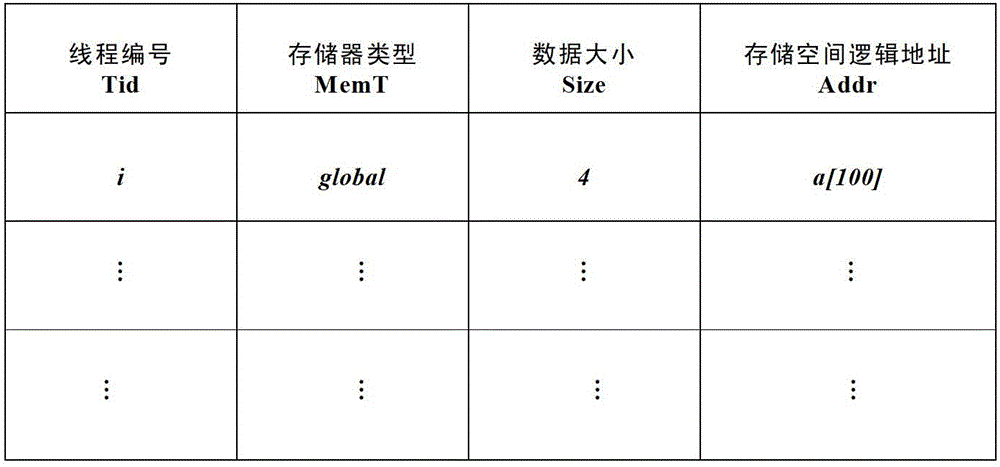

Task scheduling method and device

Active | CN105893126A | No lock problem | Improve performance | Program initiation/switching | Parallel computing | Core system

The embodiment of the invention discloses a task scheduling method and device and relates to the technical field of computers. The method avoids the data races that arise when multiple threads executing tasks in parallel access the same data block at the same time, avoids the additional performance overhead of introducing a lock, and makes concurrency errors easier to detect and debug. Specifically, according to the correspondence between multiple to-be-executed tasks and the M data blocks they access, each task is added to the task queue of its corresponding data block; N threads then execute, in parallel, the tasks in N of the M task queues, with each of the N threads executing the tasks in one of the N task queues and different threads executing tasks in different task queues, where N is larger than or equal to 2 and smaller than or equal to M. The method and device are used for task scheduling in a multi-core system.

Owner:HUAWEI TECH CO LTD +1
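
The queue-per-data-block idea in this abstract can be illustrated with a minimal Python sketch. It is not the patented implementation: the function name `schedule`, the `(block_id, callable)` task format, and the use of a thread pool to assign queues to threads are all assumptions made for illustration.

```python
from collections import defaultdict, deque
from concurrent.futures import ThreadPoolExecutor


def schedule(tasks, num_threads):
    """Run tasks grouped by the data block they access (hypothetical helper).

    tasks: iterable of (block_id, callable) pairs.
    Returns {block_id: [results in submission order]}.
    """
    # One task queue per data block.
    queues = defaultdict(deque)
    for block_id, fn in tasks:
        queues[block_id].append(fn)

    def drain(block_id):
        # A queue is drained by exactly one worker, so two threads never
        # touch the same data block and the block itself needs no lock.
        return block_id, [fn() for fn in queues[block_id]]

    # Assumption: a pool of num_threads workers picks up whole queues.
    with ThreadPoolExecutor(max_workers=num_threads) as pool:
        return dict(pool.map(drain, list(queues)))
```

Tasks that target the same block run sequentially in submission order, while tasks on different blocks may run concurrently.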

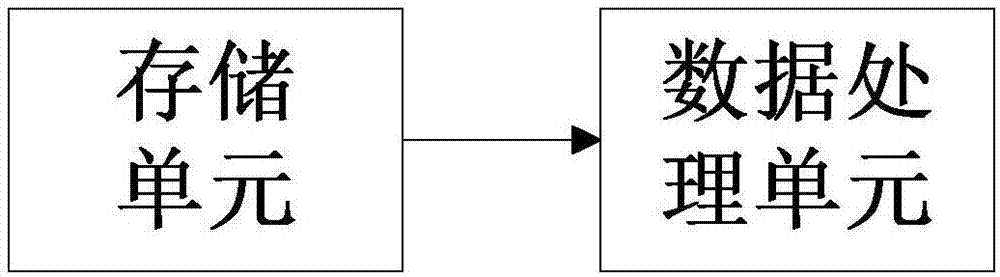

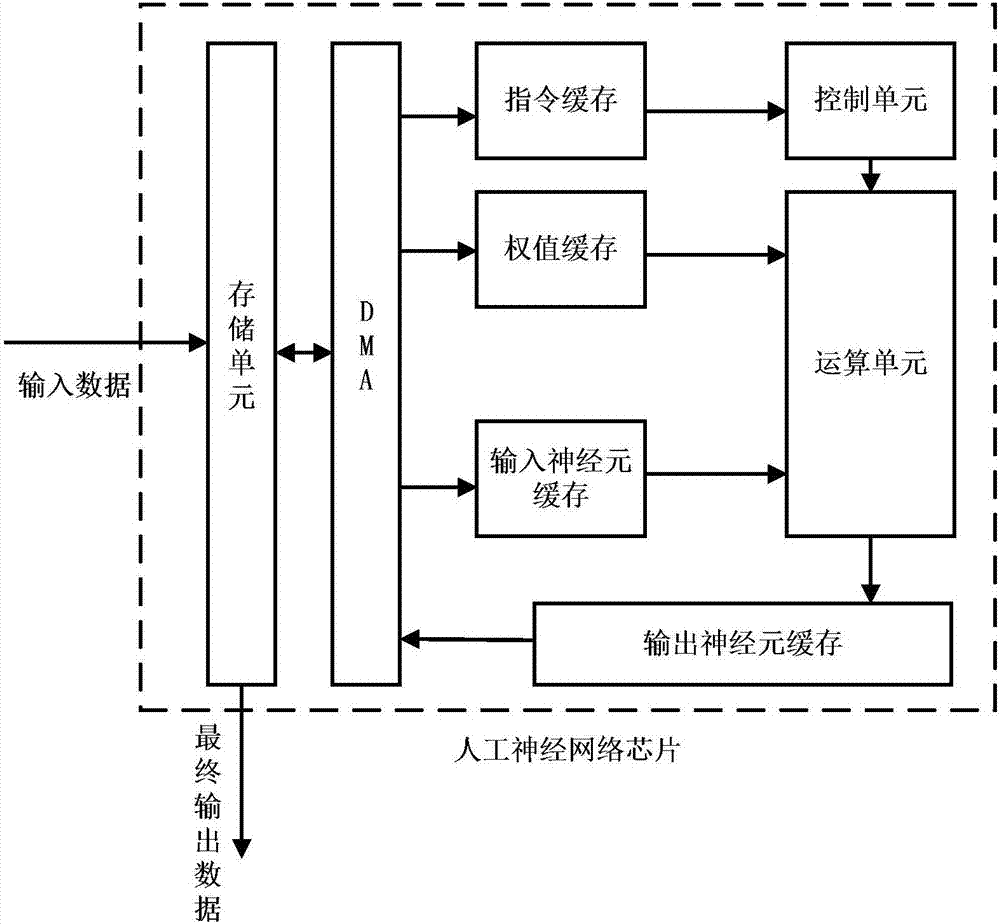

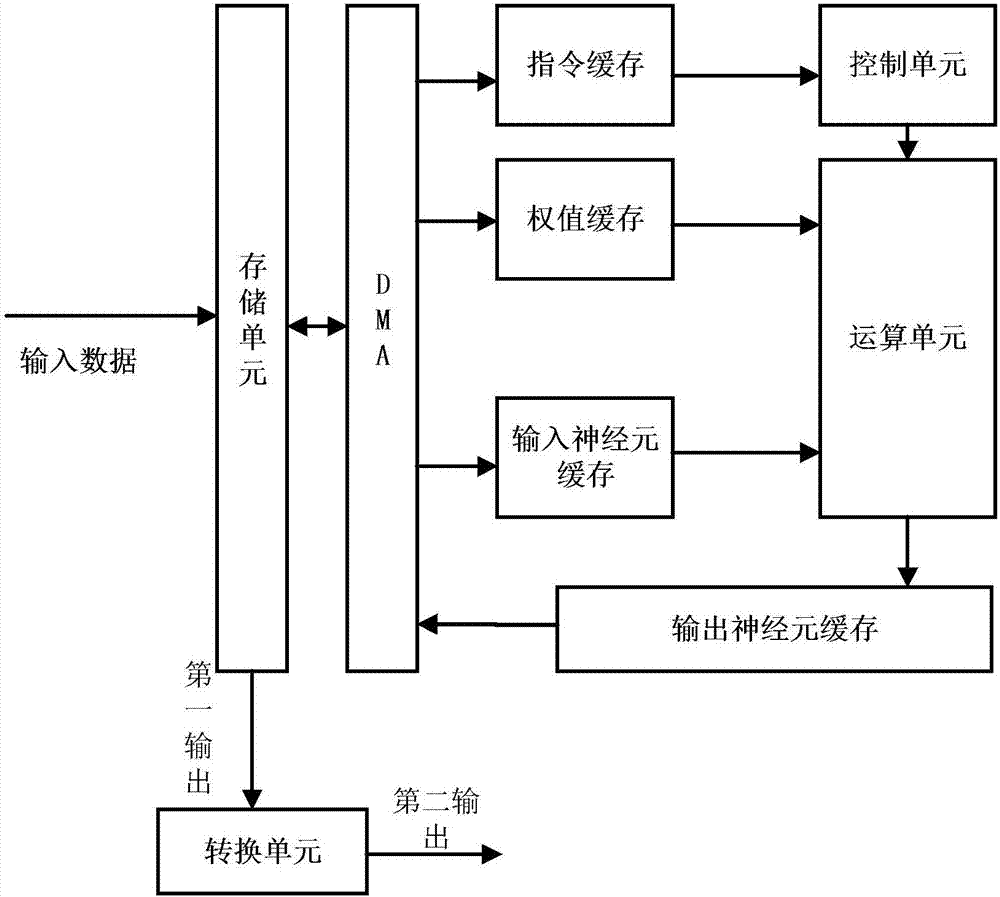

Information processing device and information processing method

Active | CN107578014A | Accurate identification | Quick identification | Digital data processing details | Architecture with single central processing unit | Information processing | Computer terminal

The invention provides an information processing device. The information processing device comprises a storage unit and a data processing unit. The storage unit is used for receiving and storing data and commands. The data processing unit is connected with the storage unit, and is used for receiving the data and the commands sent by the storage unit, extracting and calculating key features included in the data, and generating multi-dimensional vectors according to the calculation results. The invention further provides an information processing method. The information processing device and the information processing method have the following advantages: human faces are recognized accurately and rapidly; offline neural network operation is supported, so human face recognition and the corresponding control work can be carried out when user terminals / front ends are offline, without assisted calculation by cloud servers; and high portability is achieved, so the device and method can be applied to various application scenes and equipment, and design cost is greatly reduced.

Owner:SHANGHAI CAMBRICON INFORMATION TECH CO LTD

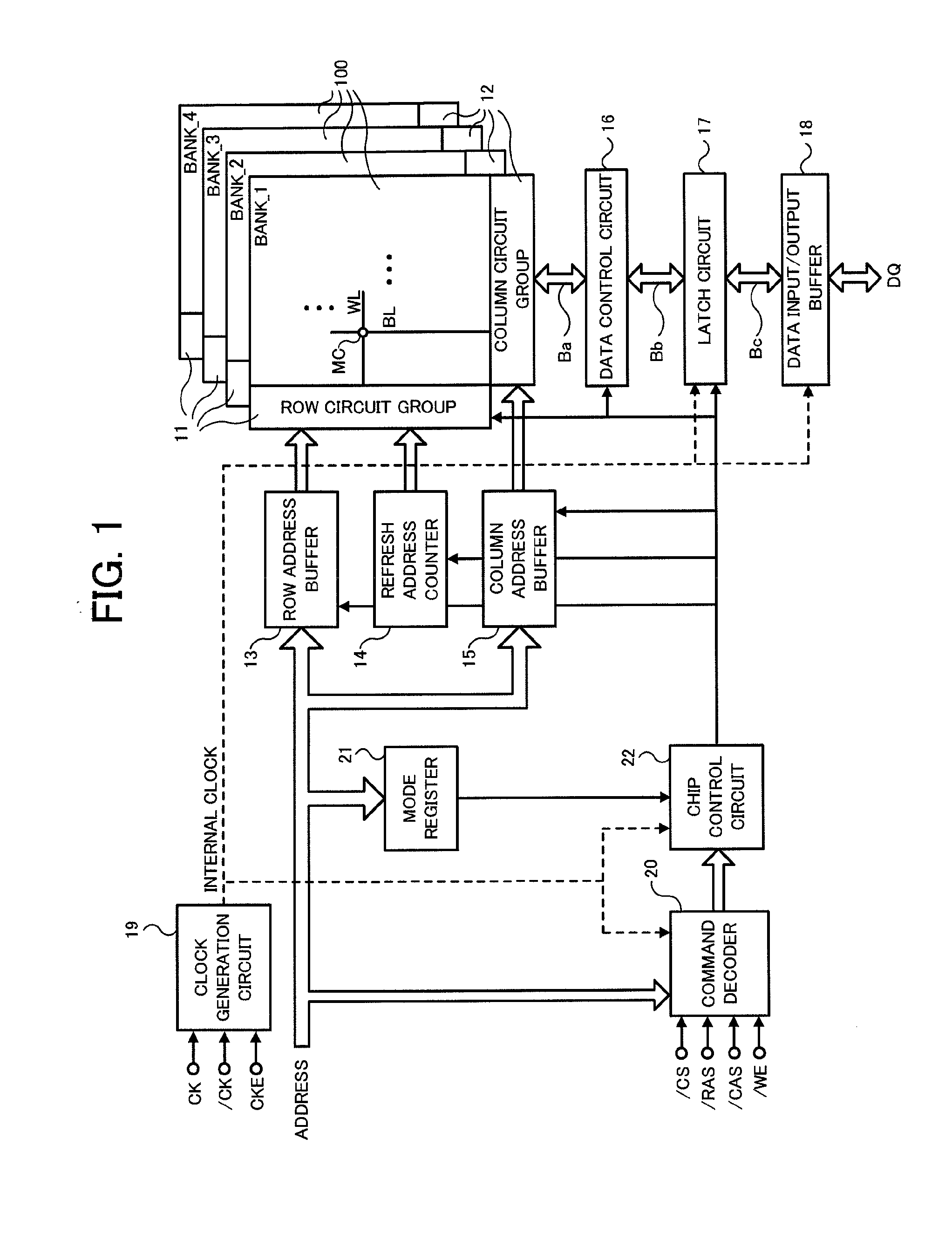

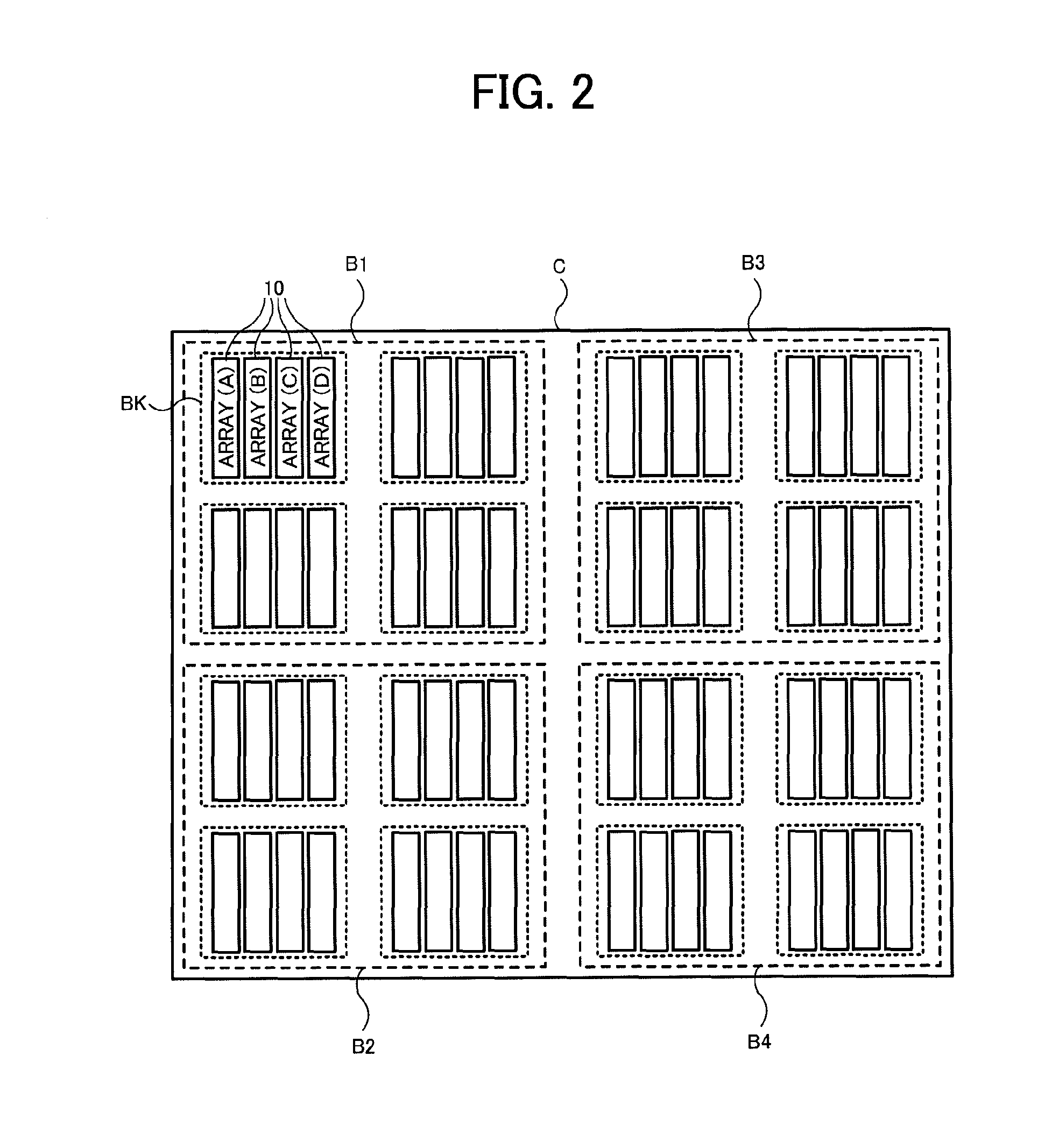

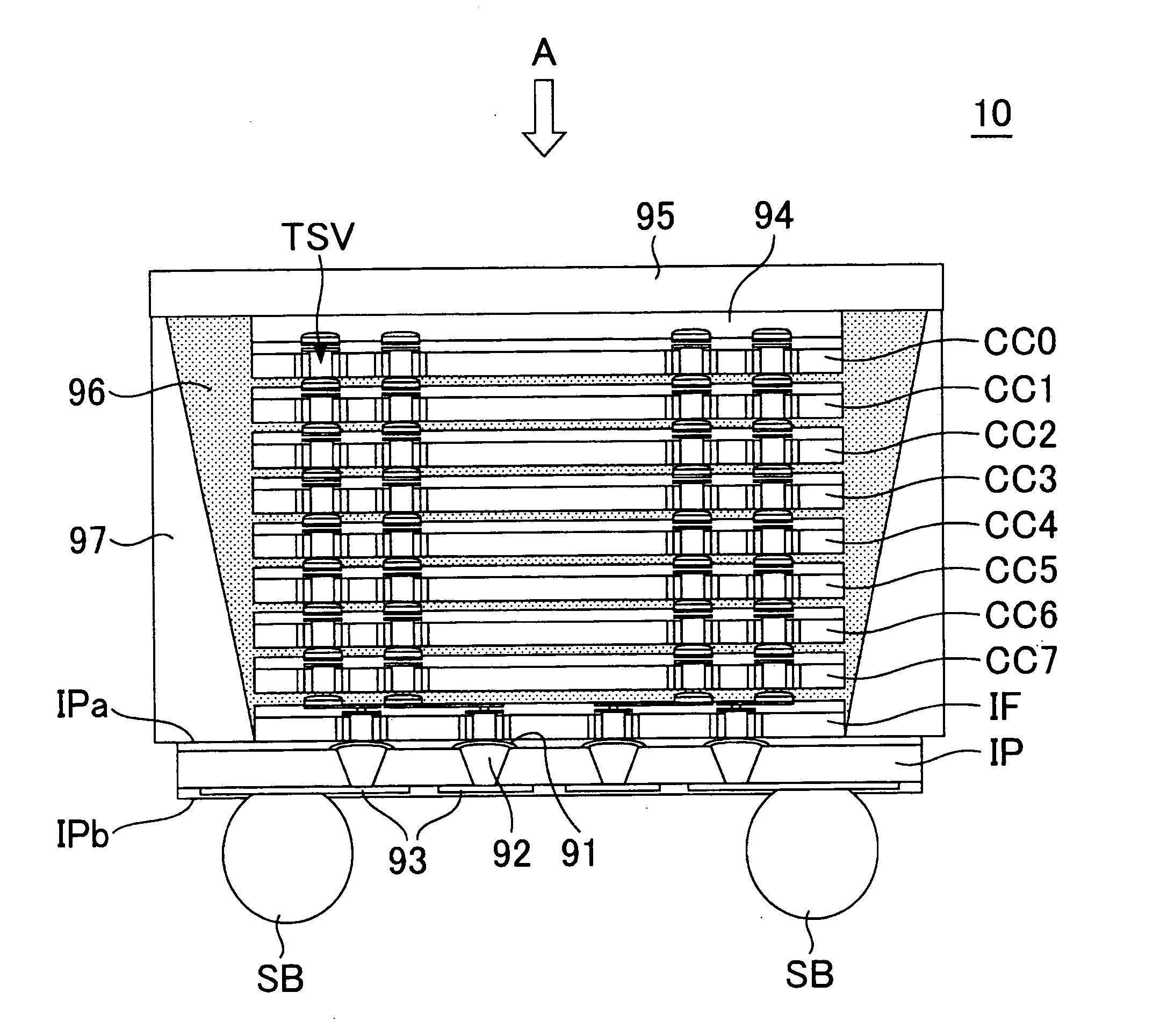

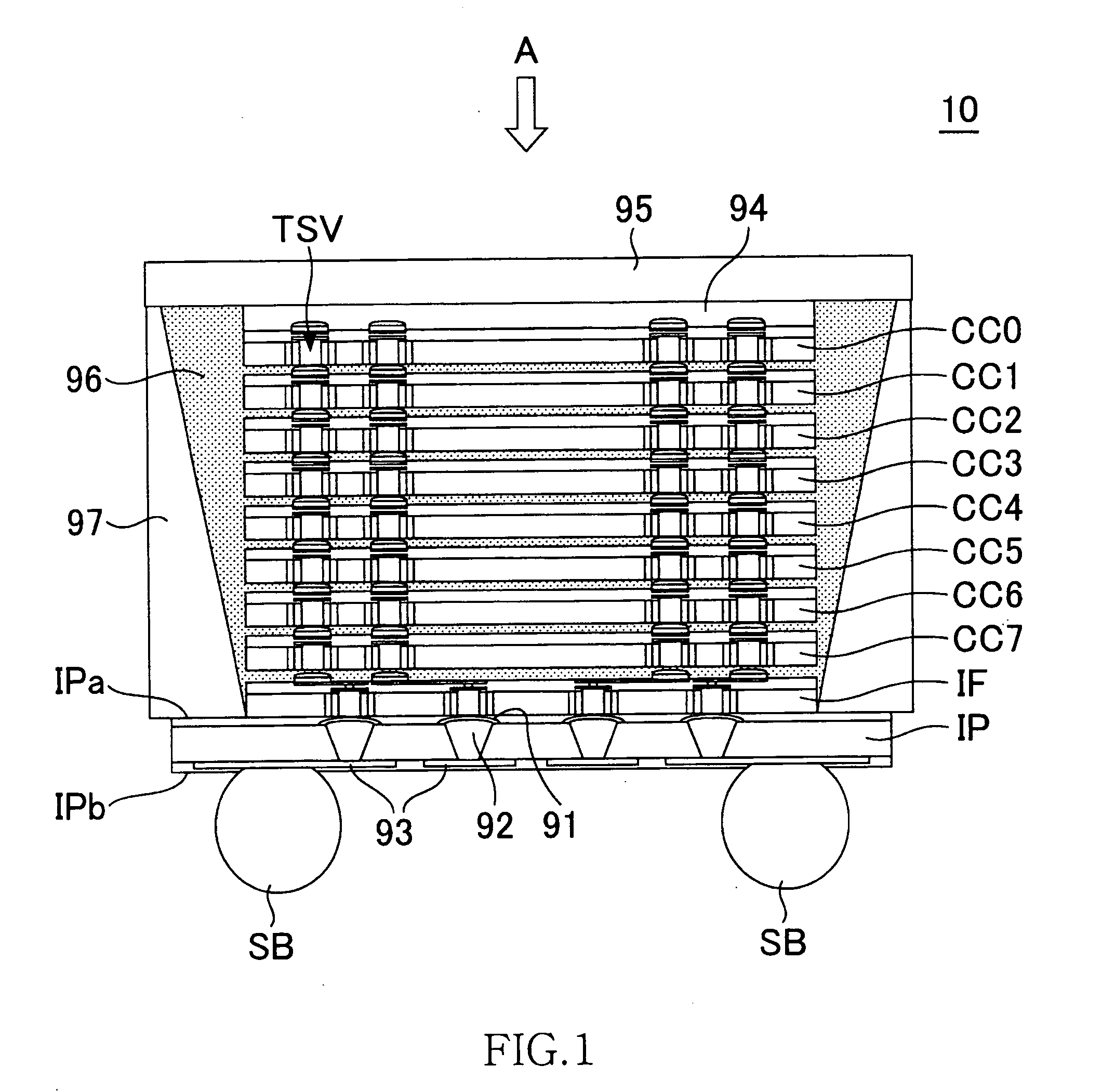

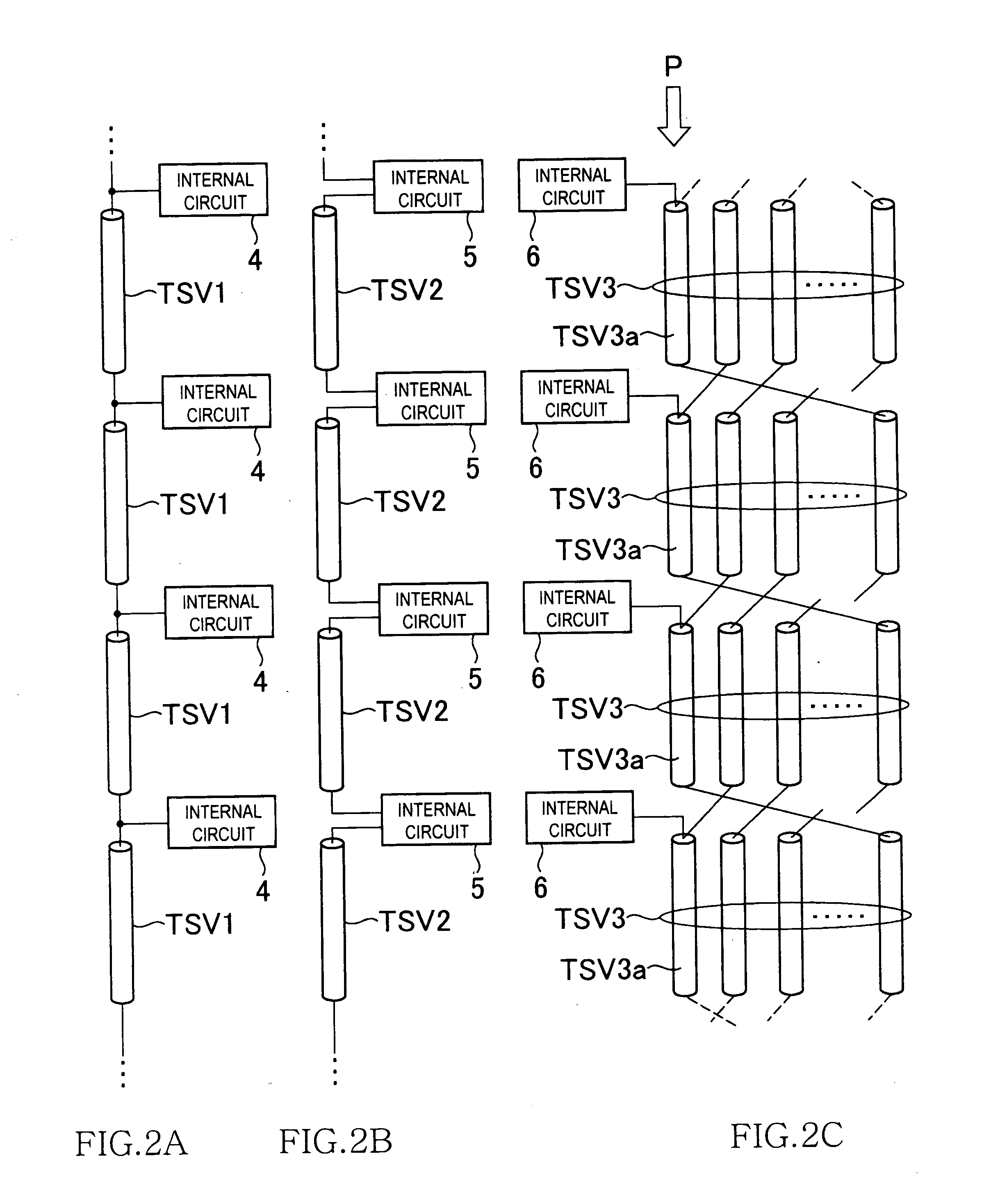

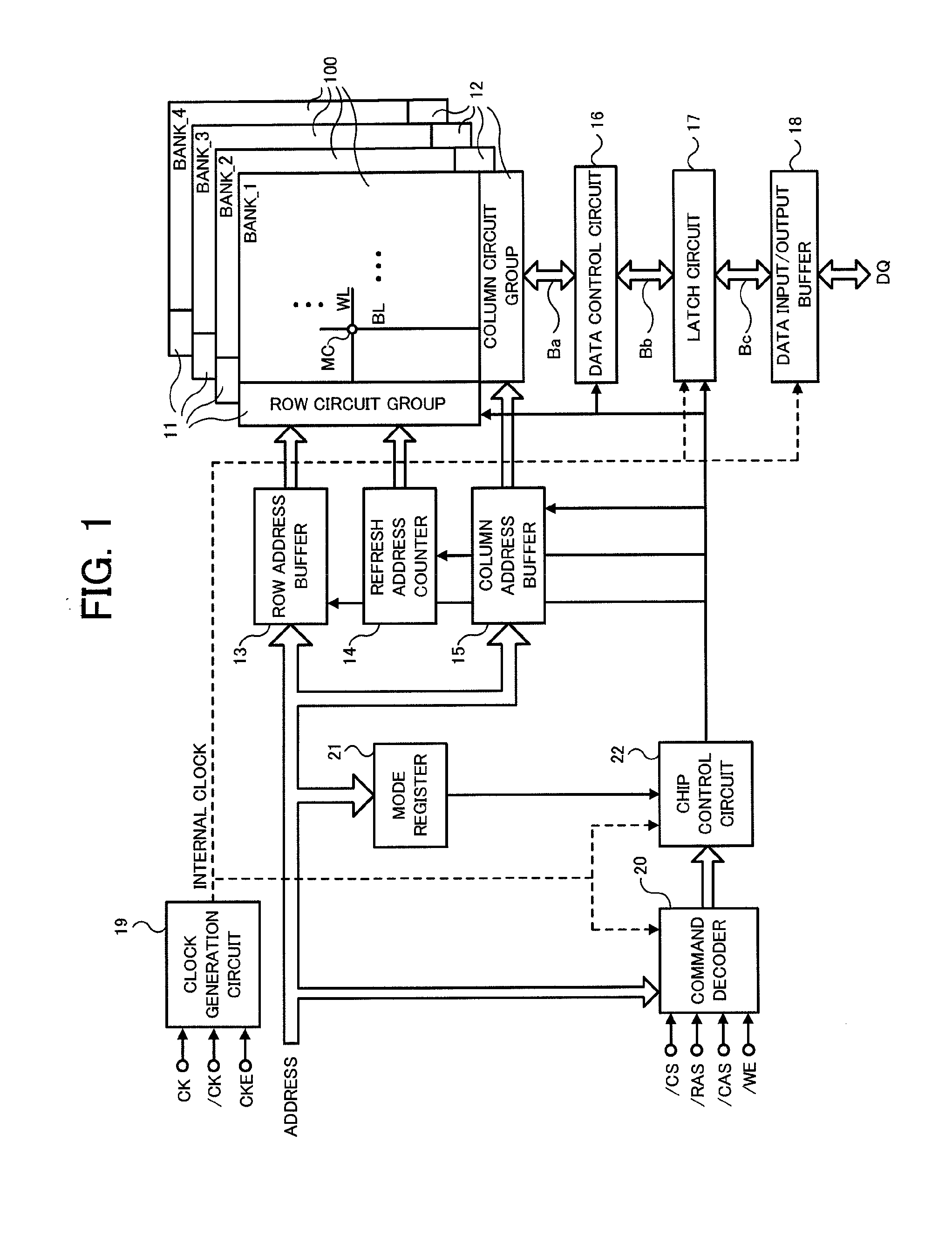

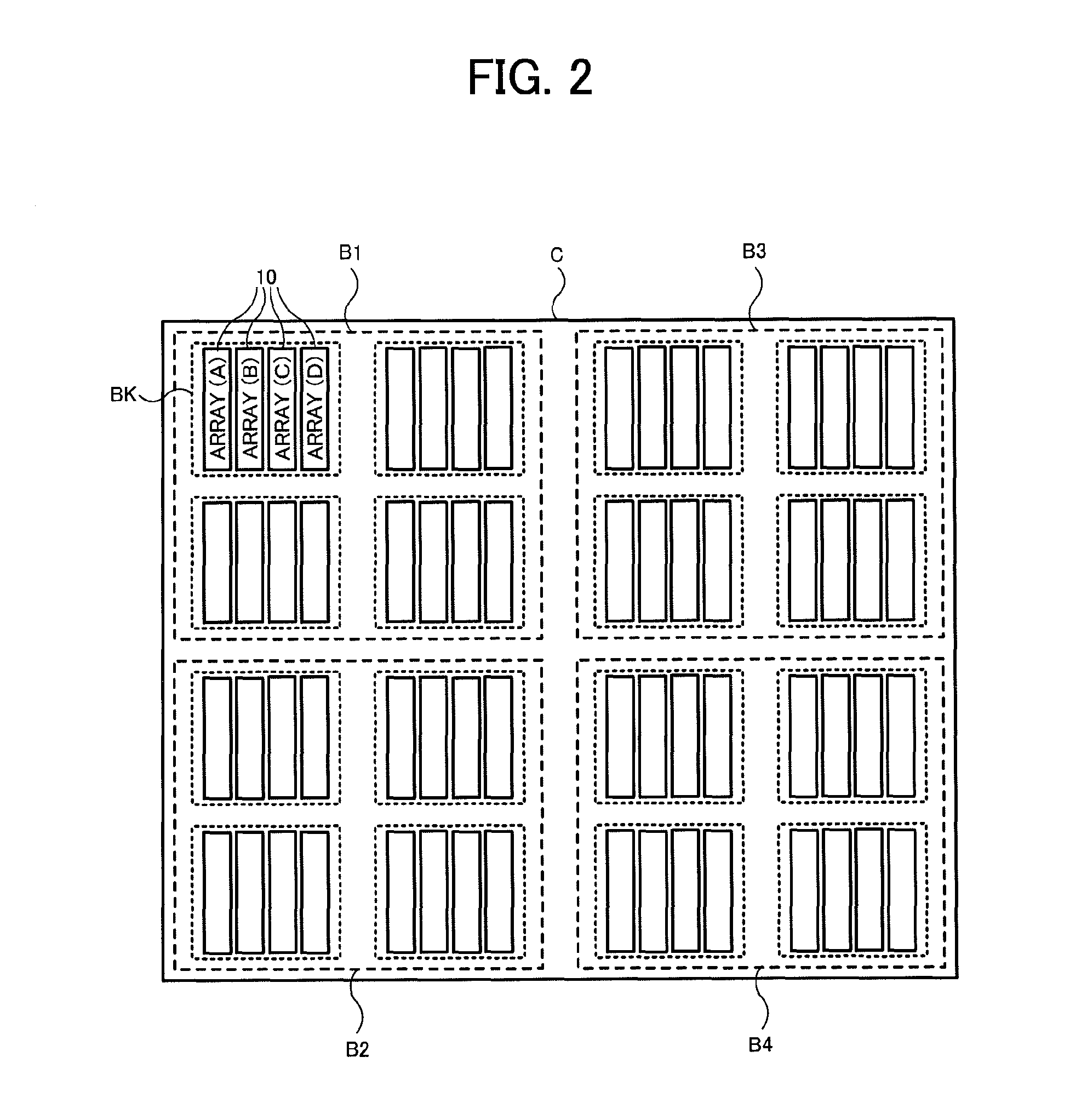

Semiconductor device, information processing system including same, and controller for controlling semiconductor device

Inactive | US20110211411A1 | Increase in bank number | Improve memory access efficiency | Solid-state devices | Digital storage | Memory chip | Information handling system

To improve the access efficiency of a semiconductor memory that includes a plurality of memory chips. Based on a layer address, a bank address, and a row address received in synchronization with a row command, and a layer address, a bank address, and a column address received in synchronization with a column command, a memory cell selected by the row address and column address in a bank selected by the bank address included in a core chip selected by the chip address is accessed. This can increase the number of banks recognizable to a controller, thereby improving the memory access efficiency of the semiconductor device which includes the plurality of memory chips.

Owner:LONGITUDE SEMICON S A R L

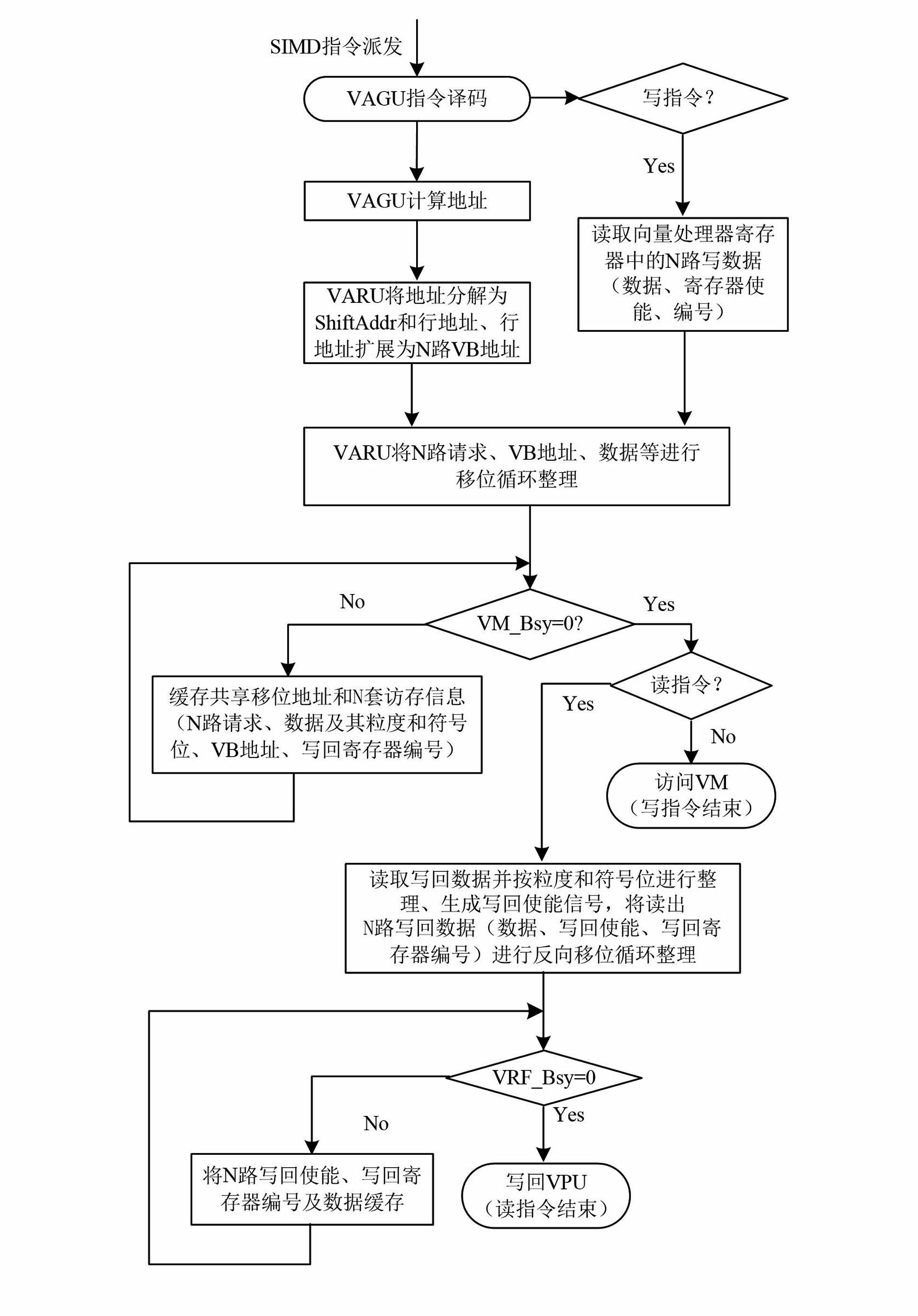

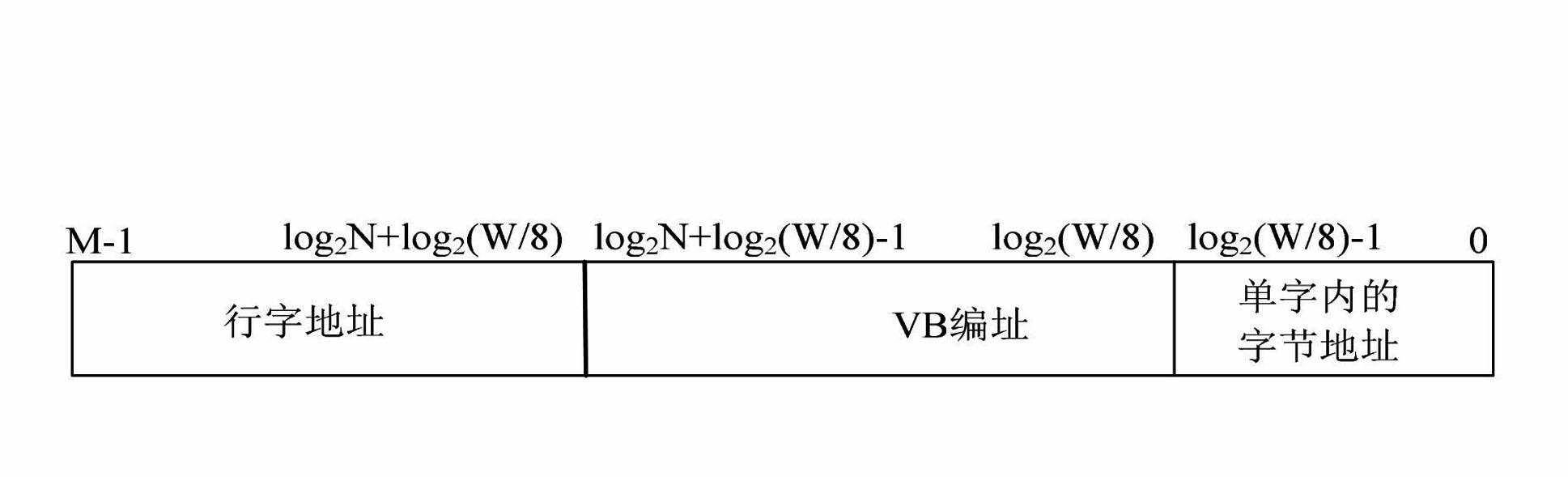

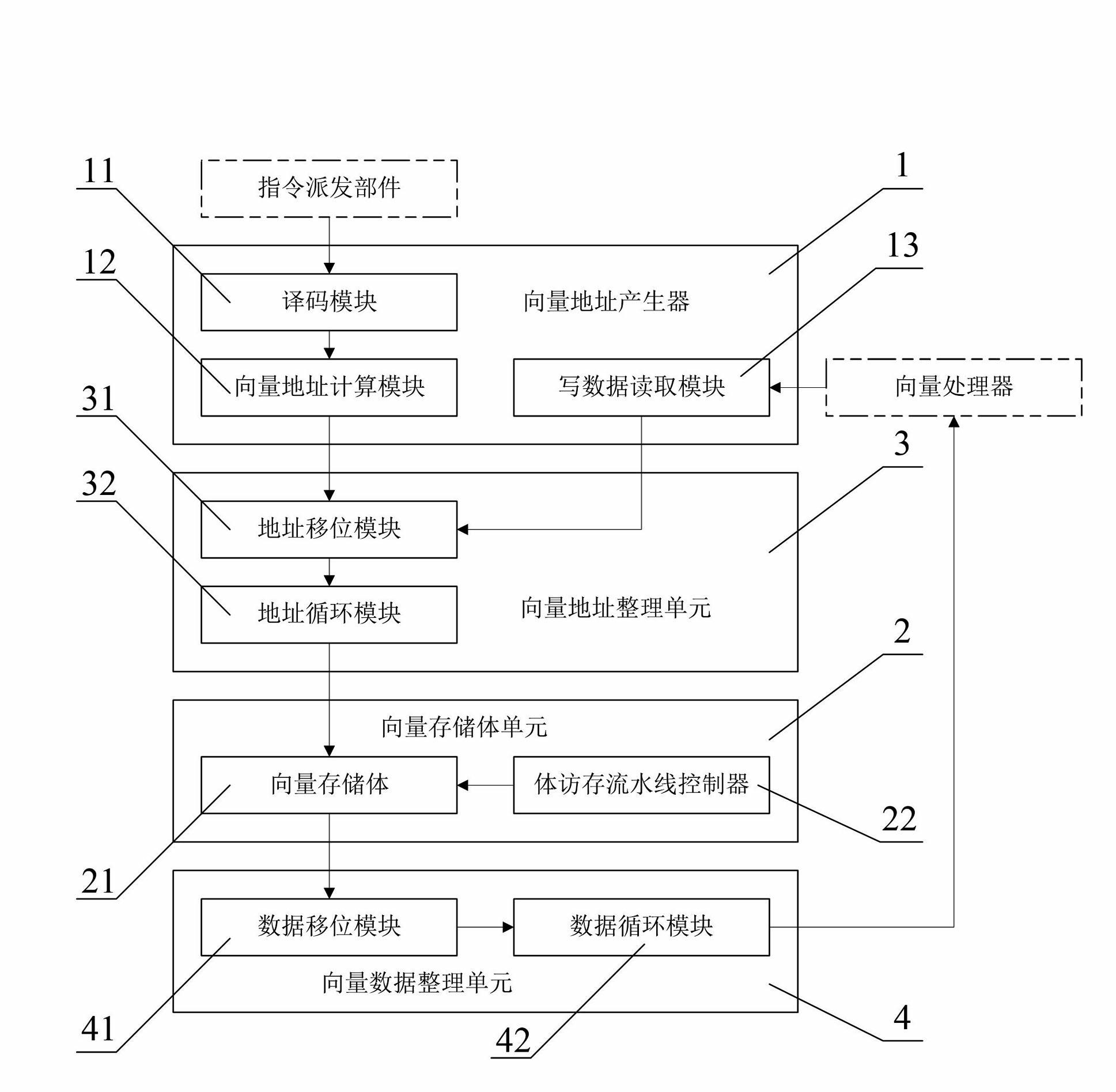

Vector data access control method and vector memory that support limited sharing

Active | CN102279818A | Support for unaligned access | Low hardware cost | Concurrent instruction execution | Data access control | Memory bank

The invention discloses a vector data access and storage control method supporting limited sharing and a vector memory. The method comprises the following steps of: 1) uniformly addressing the vector memory; 2) acquiring the access and storage information; performing the decomposition, expansion and displacement circular arrangement on the vector address in the access and storage information so as to generate N sets of access and storage information; and 3) respectively sending the N sets of access and storage information to the access and storage flow line of the vector memory; and if the current vector access and storage command is the reading command, performing the opposite displacement circular arrangement on the N paths of writing-back data according to the shared displacement address to obtain the N sets of writing-back data and send the data to the corresponding vector processing unit in the vector processor. The vector memory comprises a vector address generator, a vector memory unit and an access and storage management control unit; and the access and storage management control unit comprises a vector address arrangement unit and a vector data arrangement unit. The method has the advantages of realizing the hardware at low cost, and supporting the limited sharing of the vector data and the non-aligned access.

Owner:NAT UNIV OF DEFENSE TECH

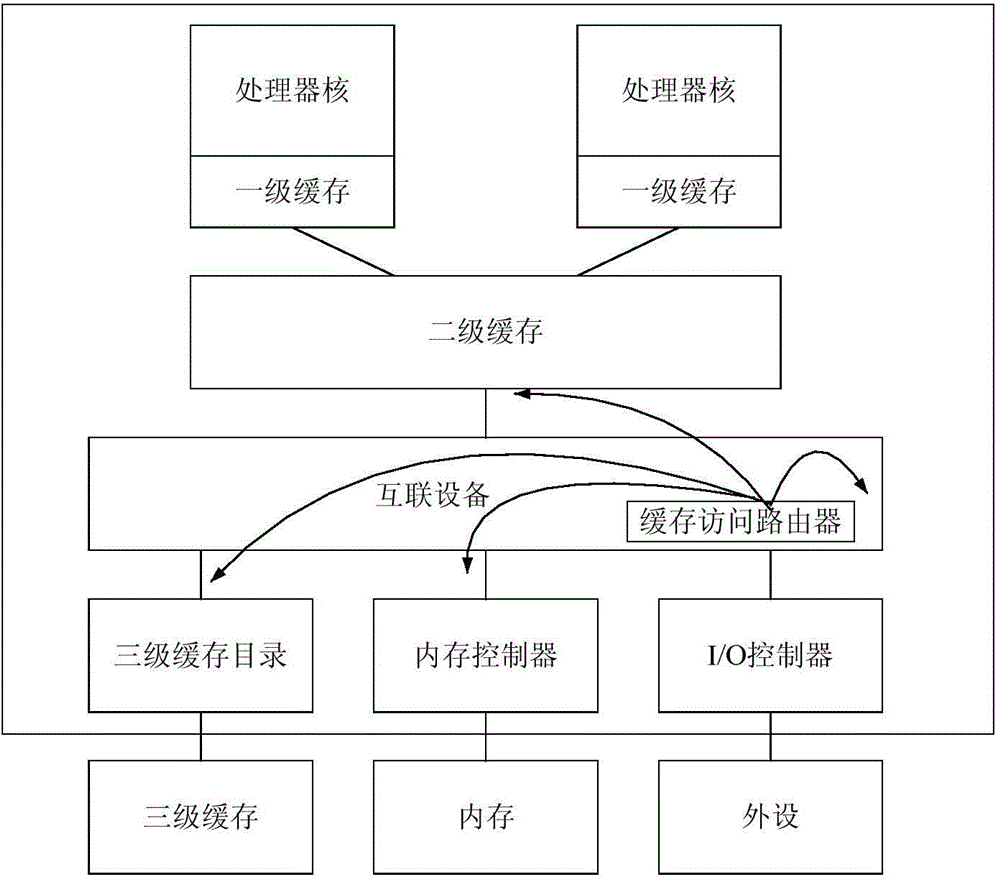

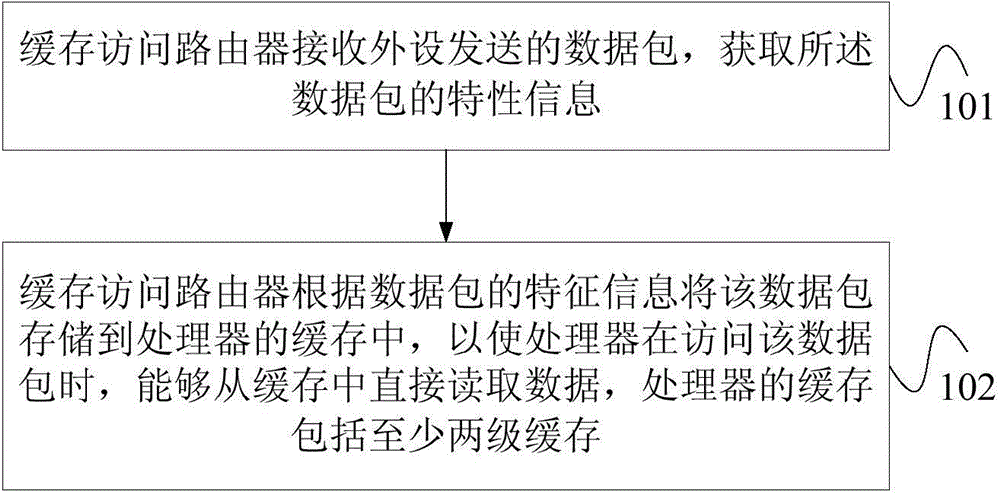

Cache access method, cache access router and computer system

Active | CN105095109A | Improve hit rate | Reduce visits | Memory addressing/allocation/relocation | Cache access | Access method

The embodiment of the invention provides a cache access method, a cache access router and a computer system. The cache access method comprises the following steps: the cache access router receives a data packet sent by peripheral equipment and obtains the feature information of the data packet, wherein the feature information includes any one of, or a combination of, the following: data packet size, packet interval and hotness information; and the cache access router stores the data packet into a cache of the processor according to the feature information of the data packet, wherein the cache of the processor comprises at least two levels of cache. Because the data packet is stored directly into the cache, the processor can read the data directly from the cache, the number of memory accesses is reduced and the cache hit rate is improved, so that the memory access efficiency is improved. Because the cache of the processor comprises at least two levels, the cache access router can store the data packet into the appropriate level according to the feature information of the data packet, which further improves the memory access efficiency.

Owner:HUAWEI TECH CO LTD +1

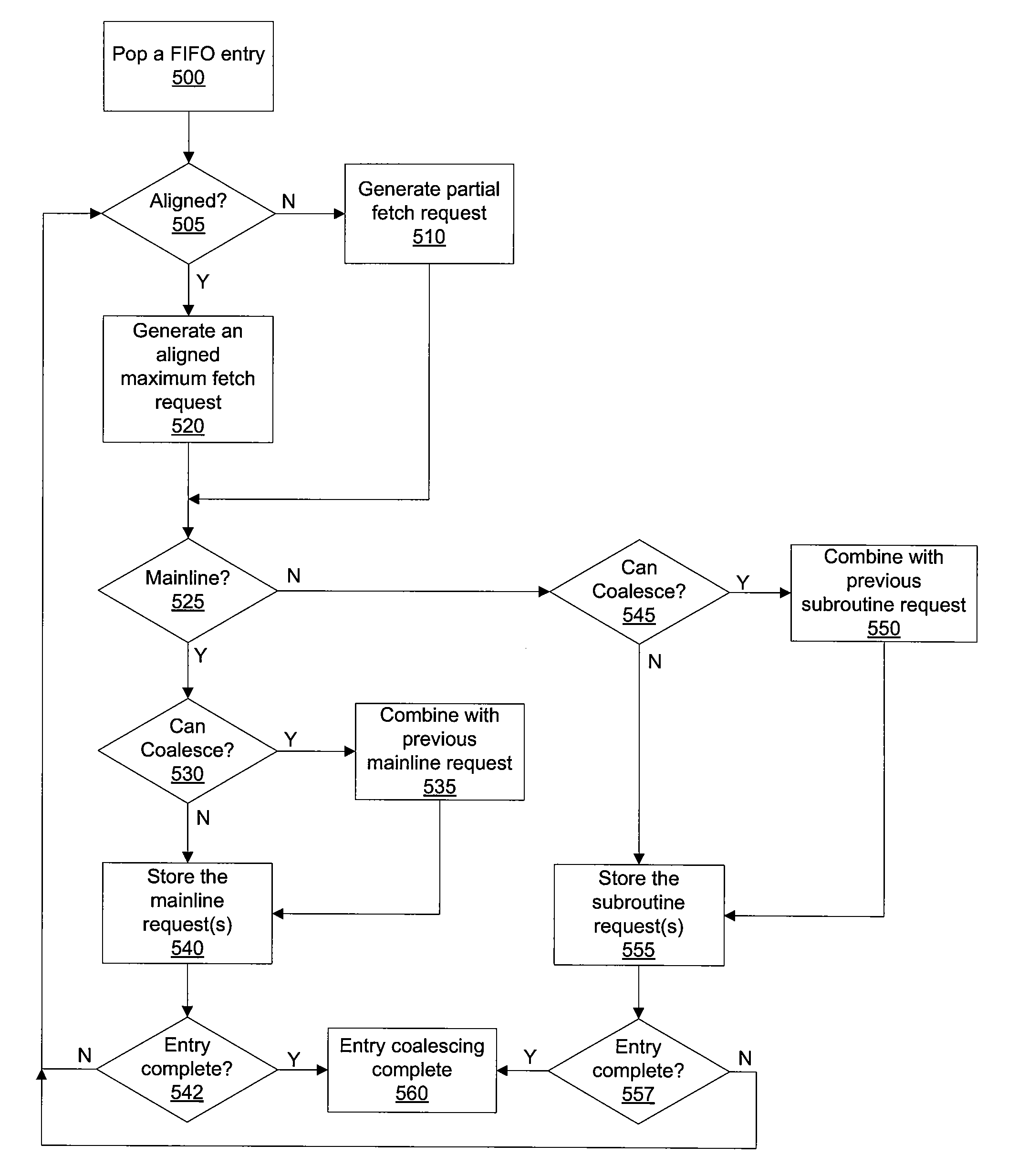

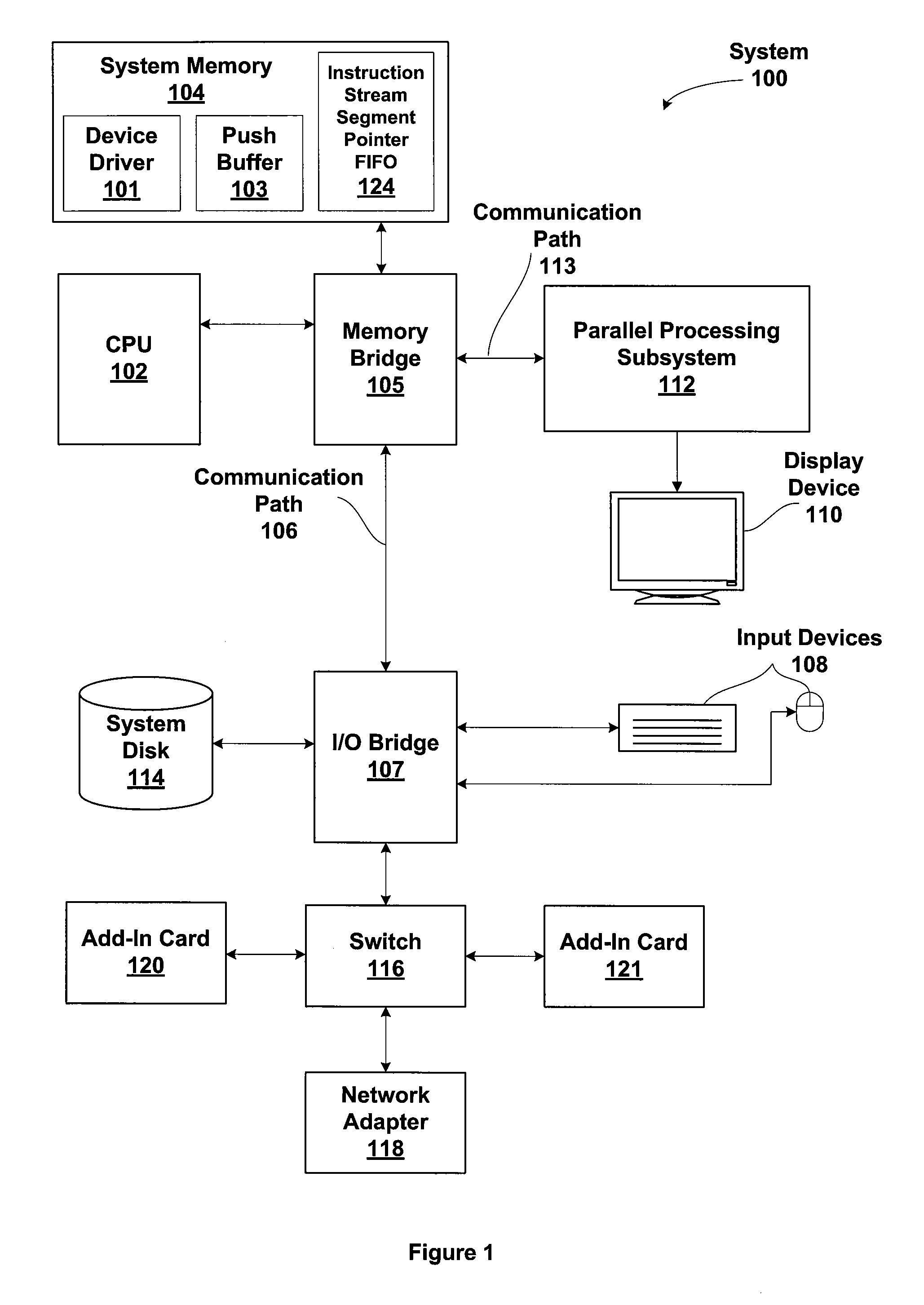

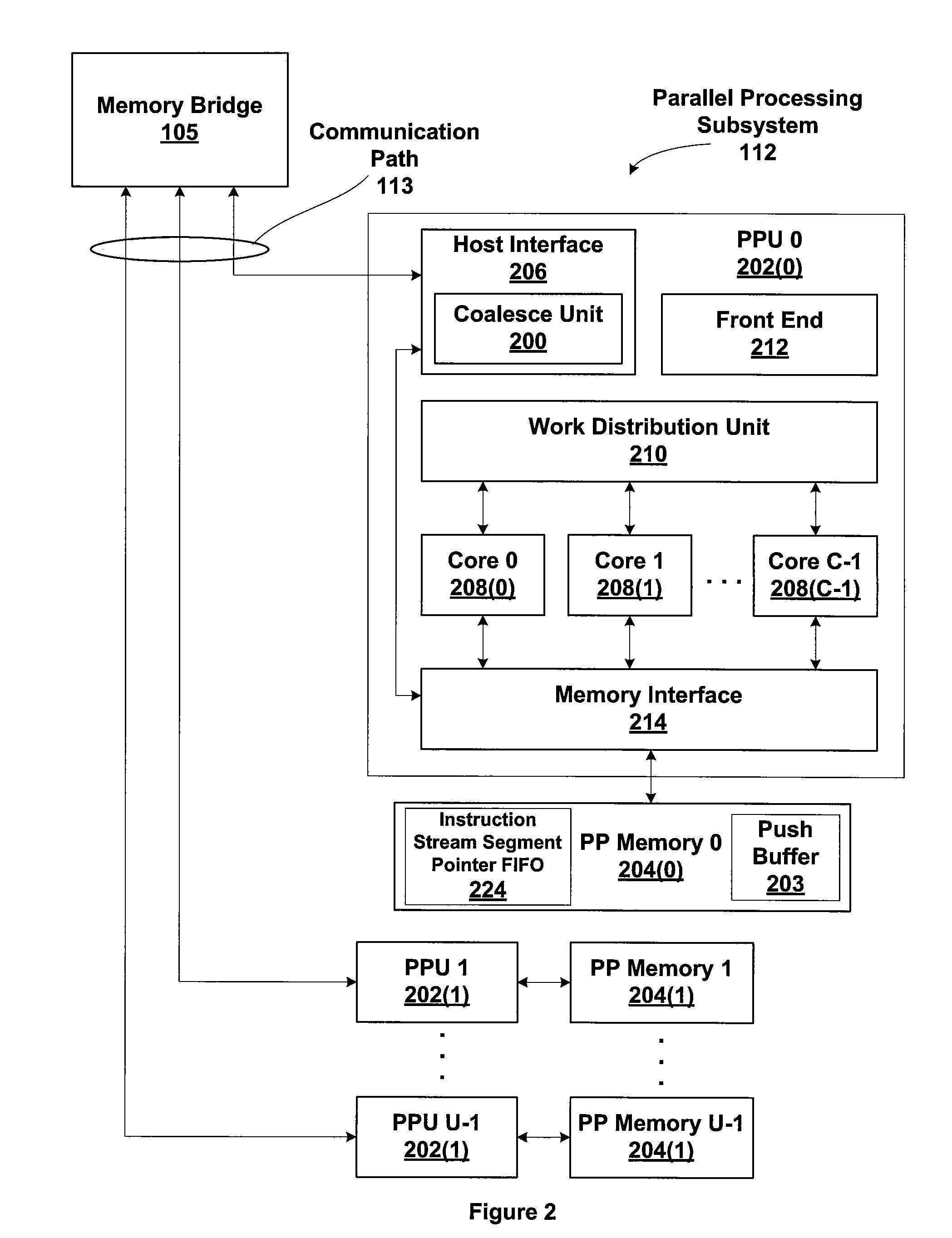

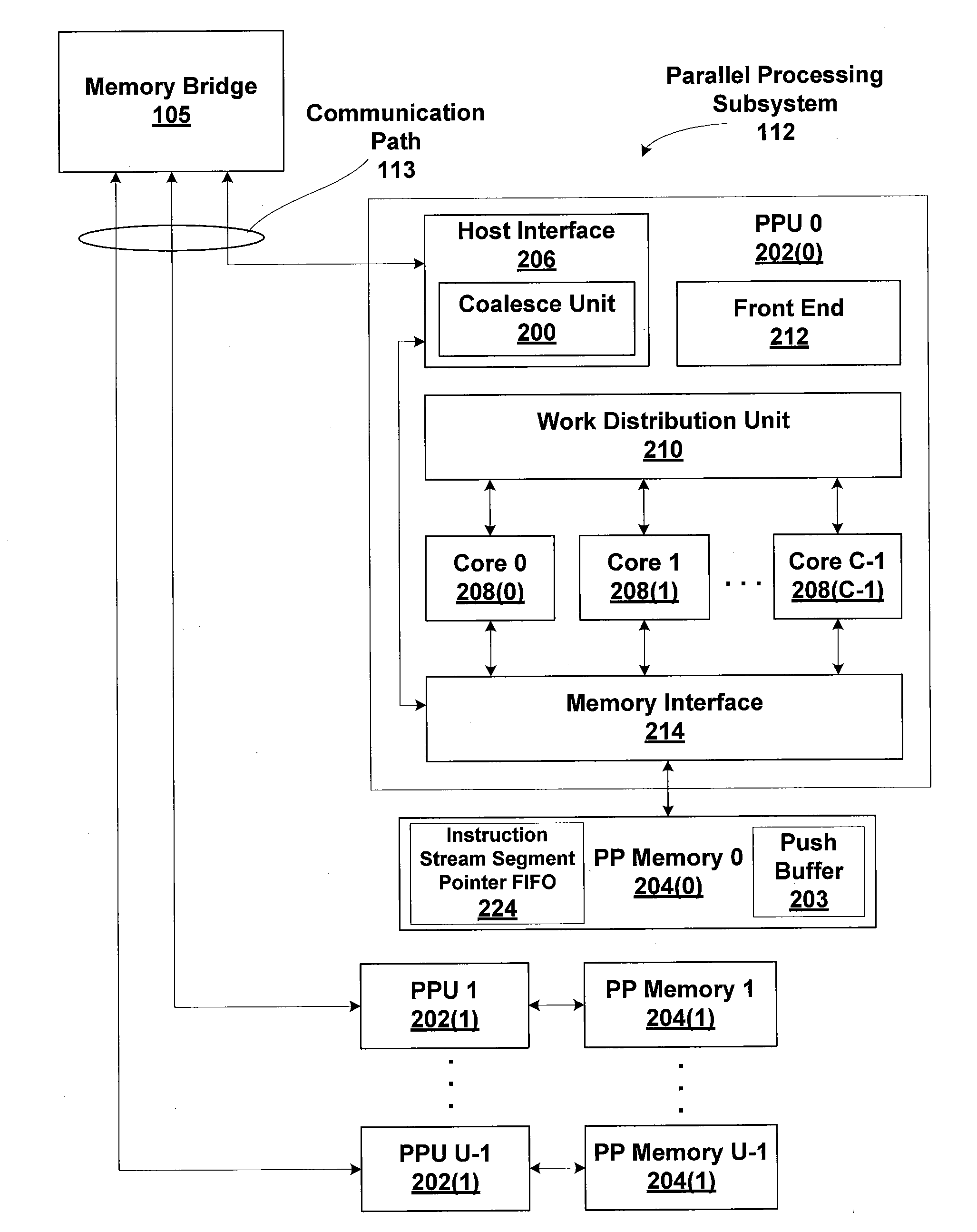

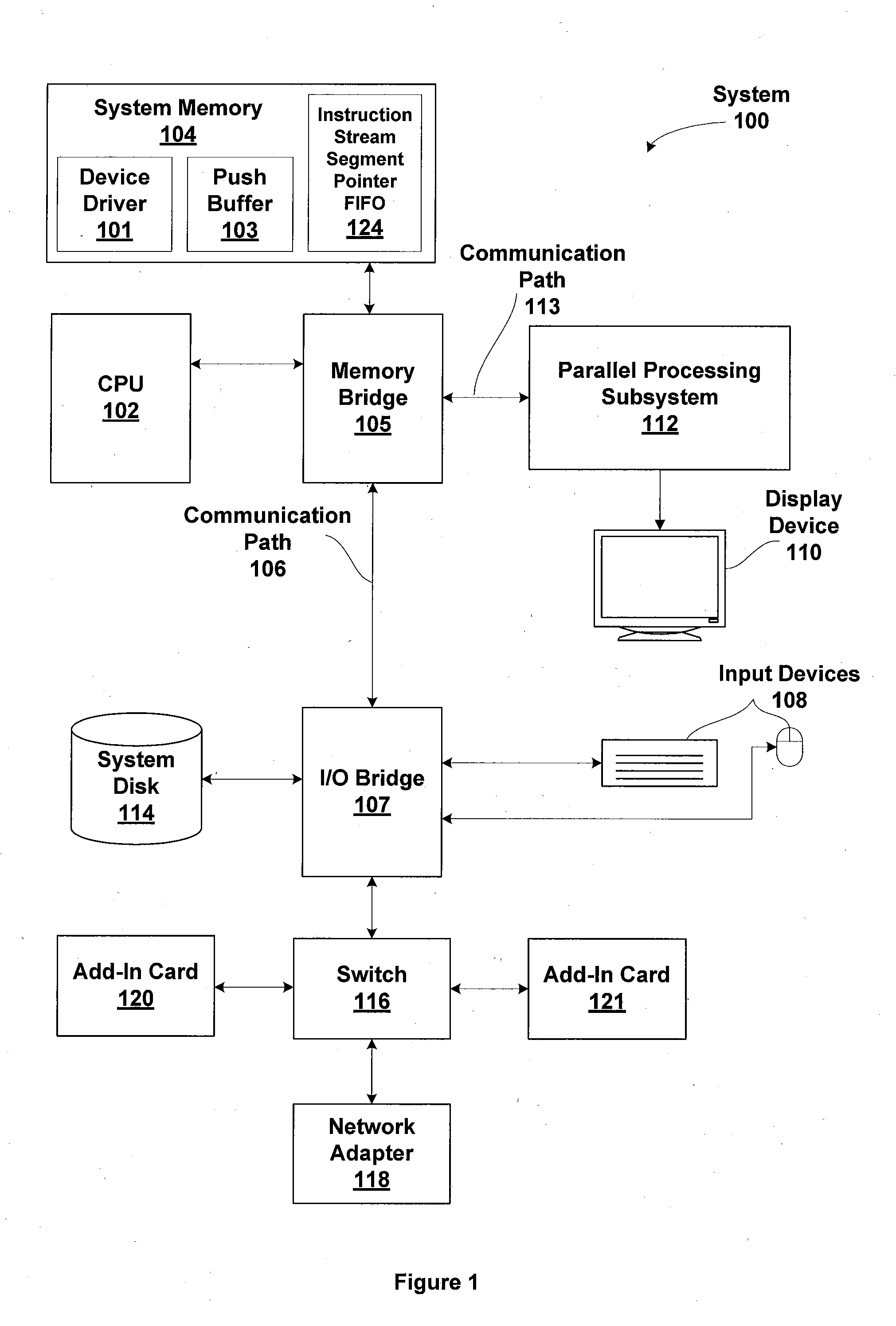

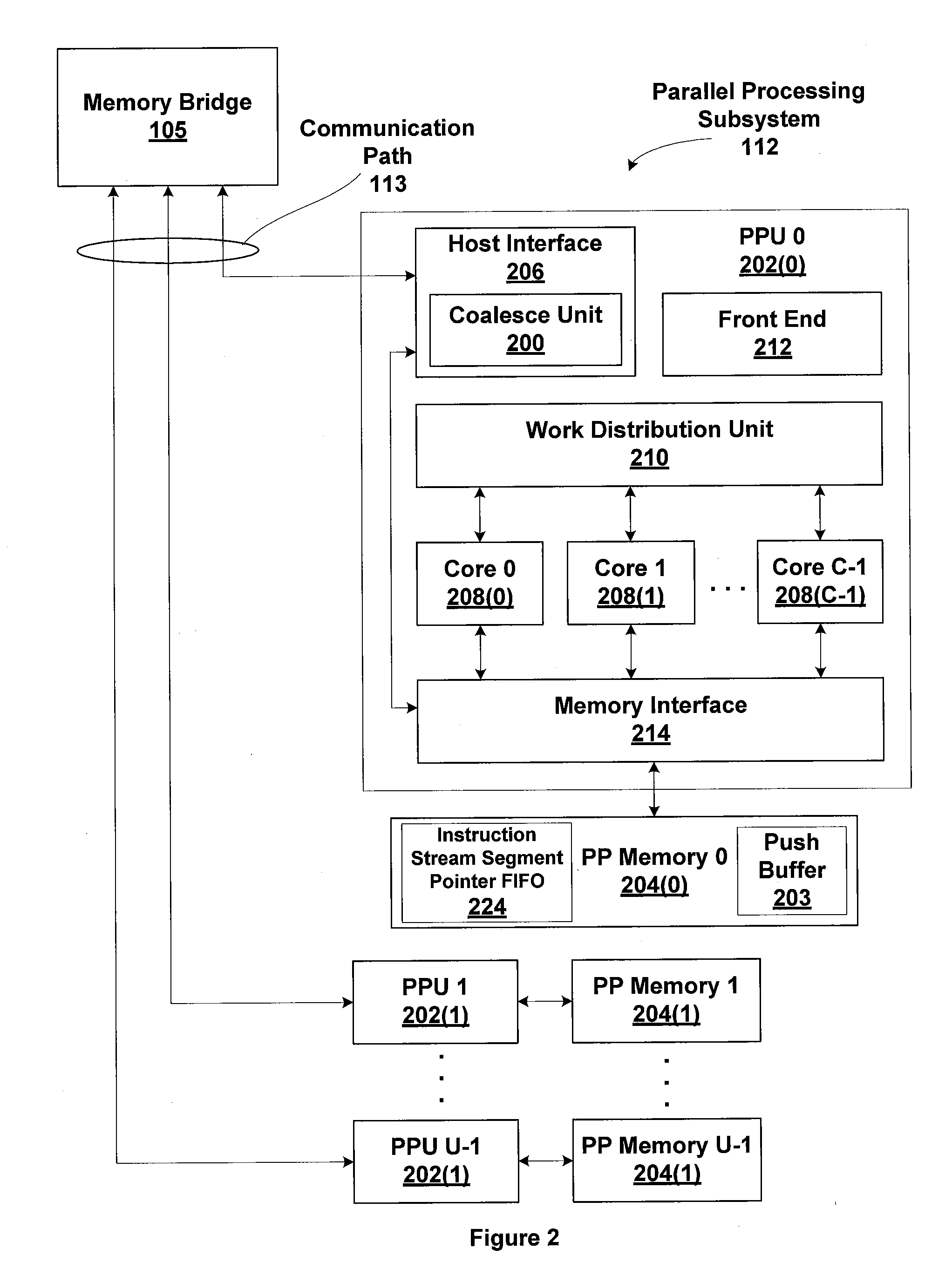

Request coalescing for instruction streams

Active | US8219786B1 | Improve memory access efficiency | Digital computer details | Memory systems | Memory interface | Instruction stream

Sequential fetch requests from a set of fetch requests are combined into longer coalesced requests that match the width of a system memory interface in order to improve memory access efficiency for reading the data specified by the fetch requests. The fetch requests may be of different classes and each data class is coalesced separately, even when intervening fetch requests are of a different class. Data read from memory is ordered according to the order of the set of fetch requests to produce an instruction stream that includes the fetch requests for the different classes.

Owner:NVIDIA CORP
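
A rough Python sketch of per-class coalescing, as the abstract describes it, follows. The request tuple layout `(cls, addr, size)`, the function name `coalesce`, and the rule that a coalesced request may not exceed `width` bytes are assumptions for illustration; the patent itself does not specify them in this abstract.

```python
def coalesce(requests, width):
    """Merge address-sequential fetch requests of the same class (sketch).

    requests: list of (cls, addr, size) tuples in original order.
    width:    assumed memory-interface width in bytes (upper bound on a
              coalesced request).
    Returns a list of (cls, start, size, member_indices).
    """
    open_by_class = {}  # cls -> index in `out` of the request still growing
    out = []
    for i, (cls, addr, size) in enumerate(requests):
        j = open_by_class.get(cls)
        if j is not None:
            c_cls, start, c_size, members = out[j]
            # Extend only if this request continues the open one and the
            # merged request still fits the interface width. Requests of
            # other classes in between do not break the run.
            if addr == start + c_size and c_size + size <= width:
                out[j] = (c_cls, start, c_size + size, members + [i])
                continue
        open_by_class[cls] = len(out)
        out.append((cls, addr, size, [i]))
    return out
```

The `member_indices` field records which original requests each coalesced request covers, so returned data can be put back into the order of the original fetch requests.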

Semiconductor device, refresh control method thereof and computer system

Active | US20140133255A1 | Promote quick completion | Growth inhibition | Digital storage | Bit line | Device material

Owner:LONGITUDE LICENSING LTD

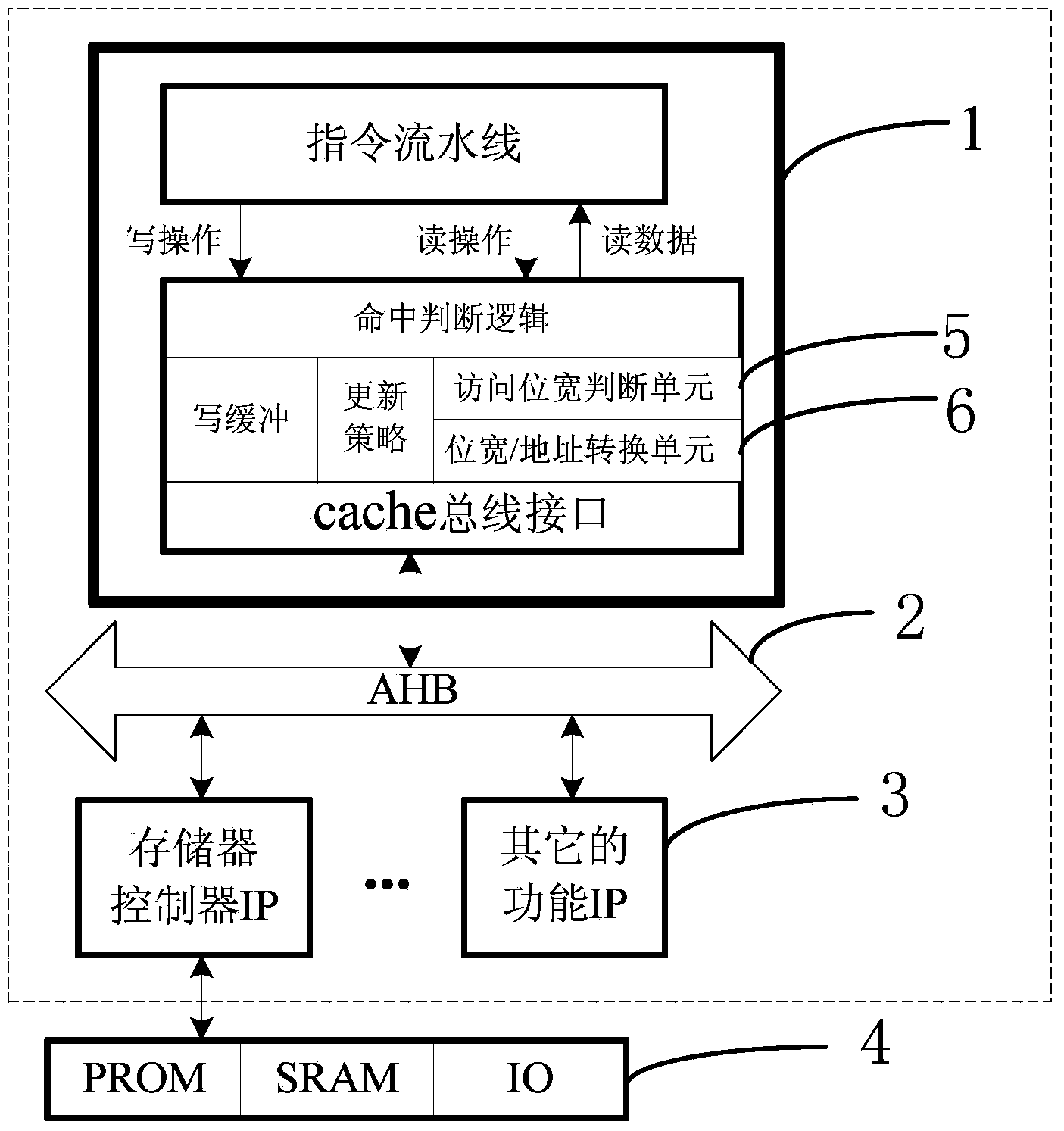

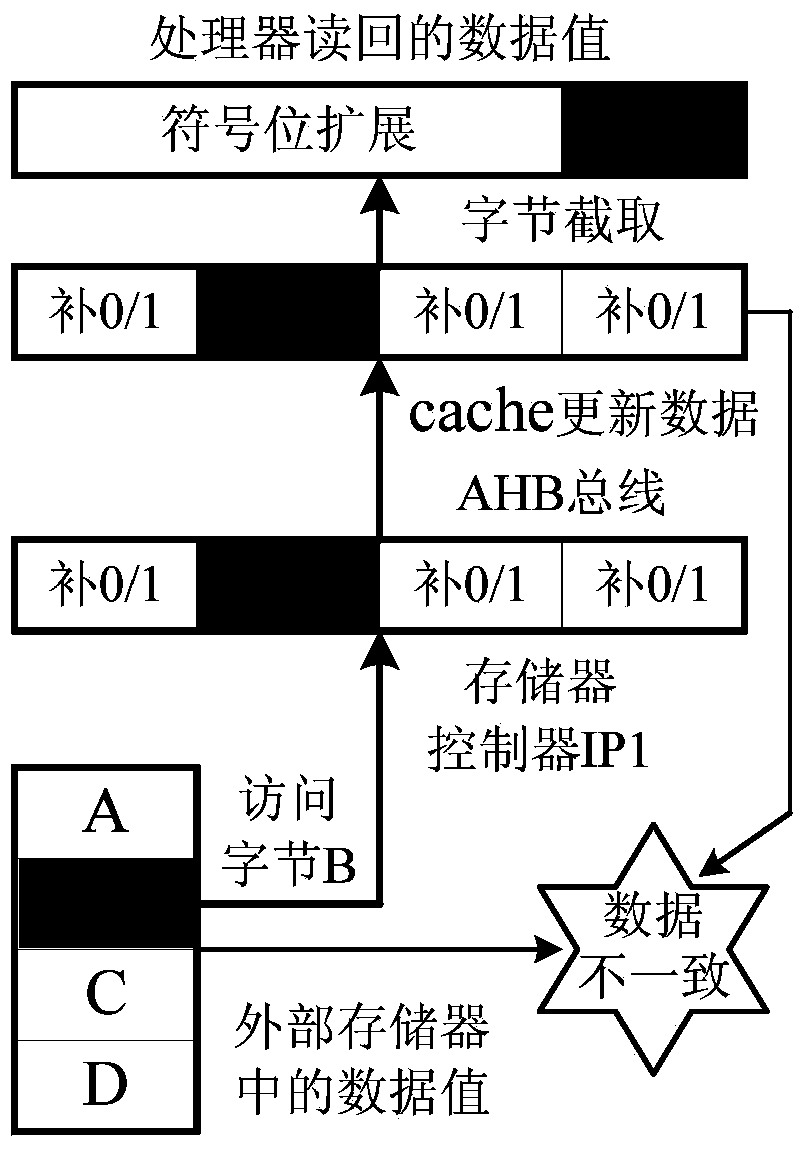

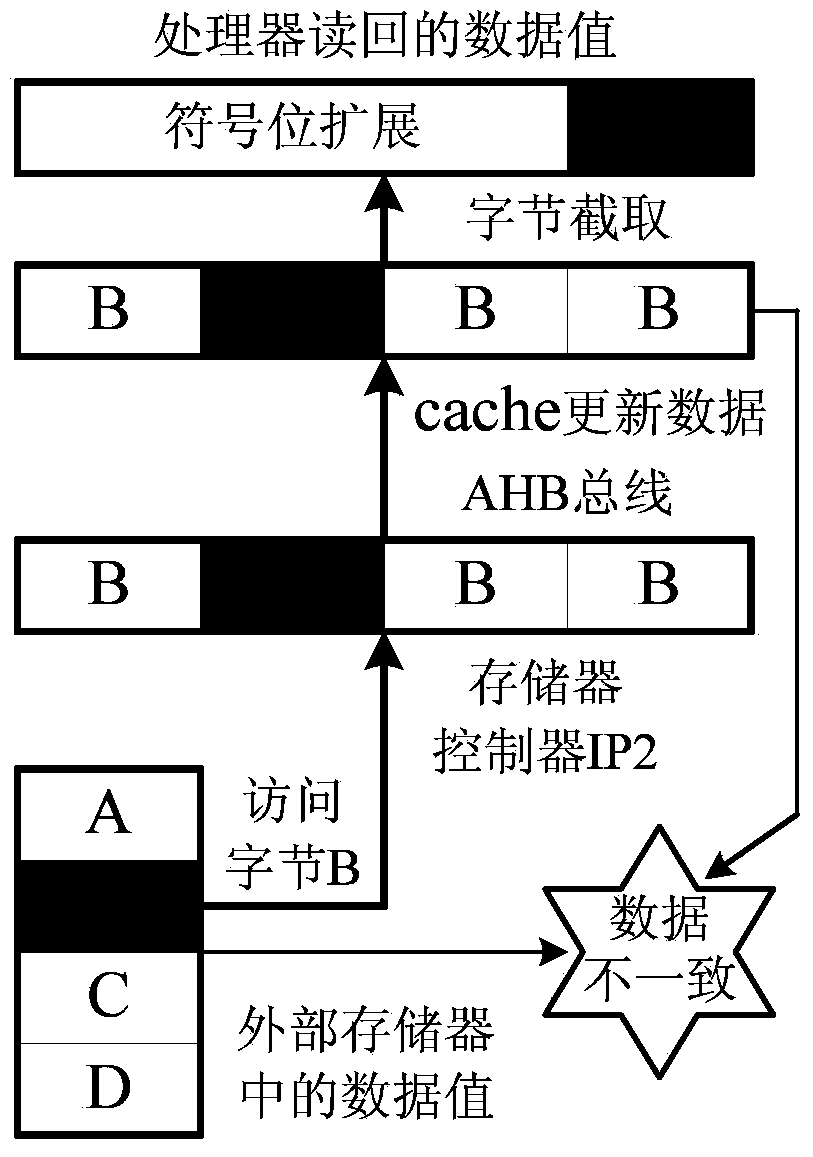

Unified bit width converting structure and method in cache and bus interface of system chip

Active | CN104375962A | Improve compatibility | Ensure consistency | Memory addressing/allocation/relocation | Logic cell | Bus interface

The invention discloses a unified bit width converting structure and method in a cache and a bus interface of a system chip. The converting structure comprises a processor core and a plurality of IP cores carrying out data interaction with the processor core through an on-chip bus, and a memory controller IP communicates with an off-chip main memory. The processor core comprises an instruction pipeline and a hit judgment logic unit receiving operation instructions from the instruction pipeline. An access bit width judgment unit and a bit width / address converting unit are arranged between the hit judgment logic unit and the cache bus interface, the hit judgment logic unit sends a judgment result to the instruction pipeline, and the processor core is connected with the on-chip bus through the cache bus interface. According to the converting method, for the read access of a byte or a half byte, if a cache miss happens and the accessed space belongs to the cache area, the bit width / address converting unit converts the read access of the byte or the half byte into one-byte access, the memory access is completed through the bus, the original update strategy is not affected, and flexibility is retained.

Owner:NO 771 INST OF NO 9 RES INST CHINA AEROSPACE SCI & TECH

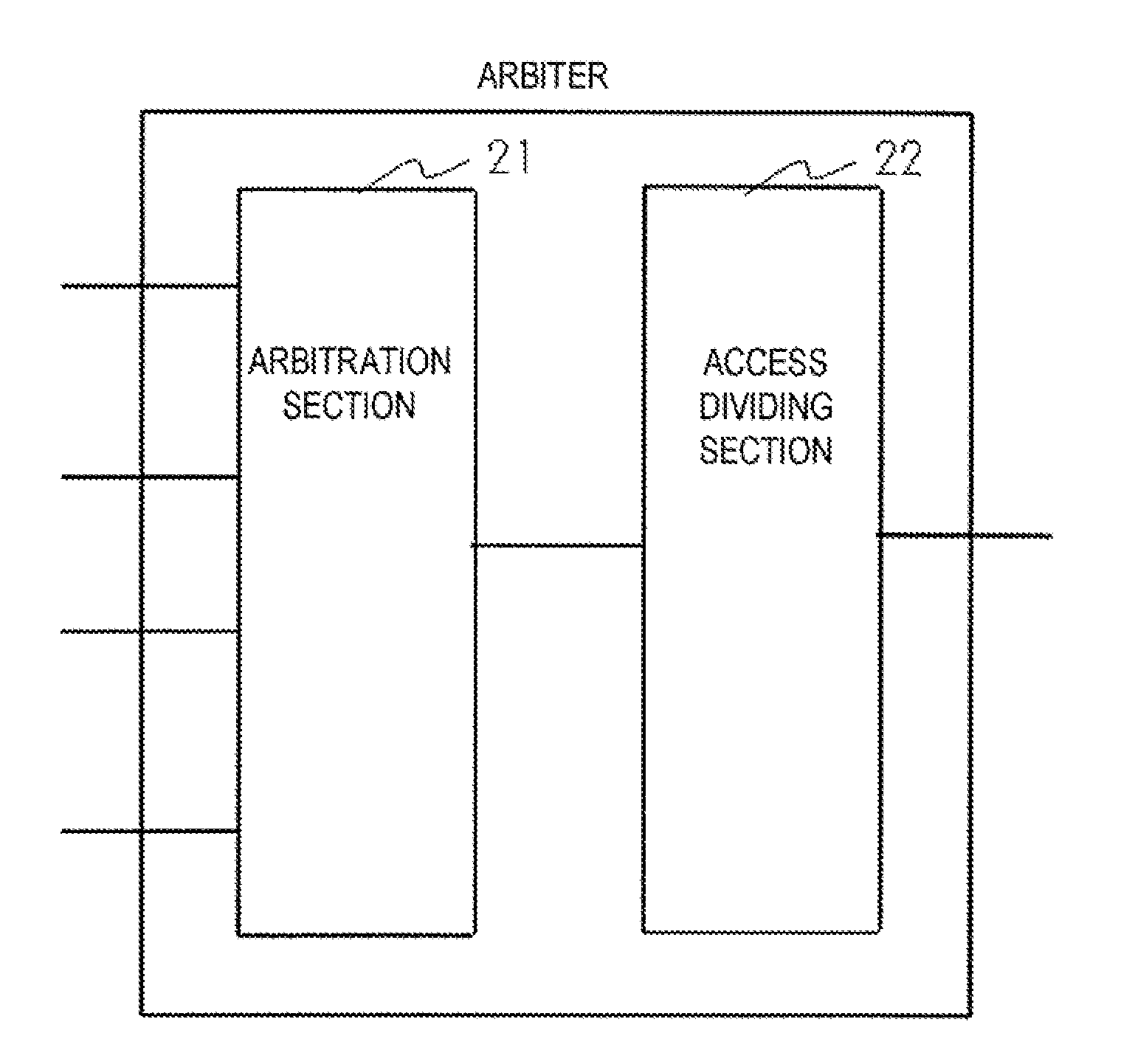

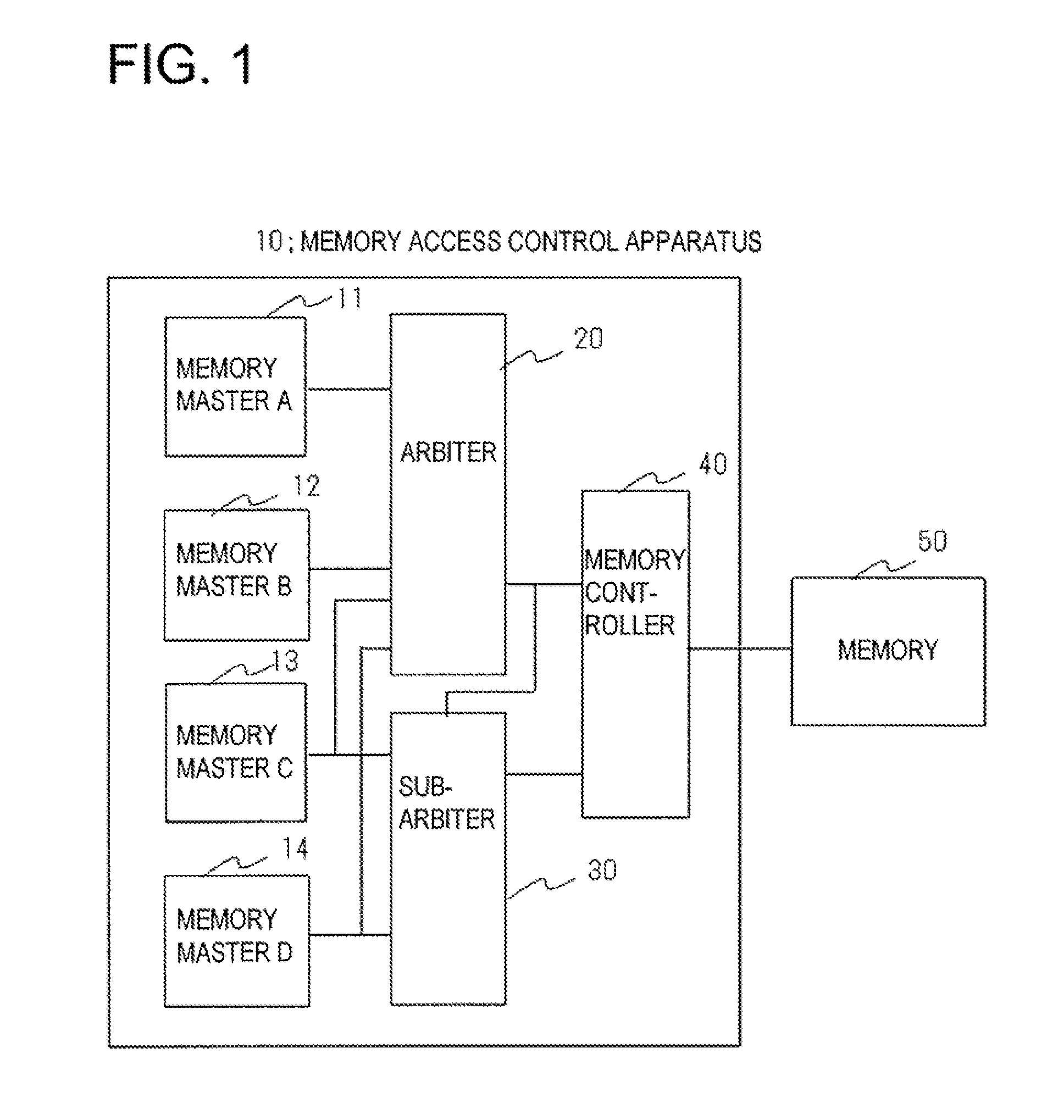

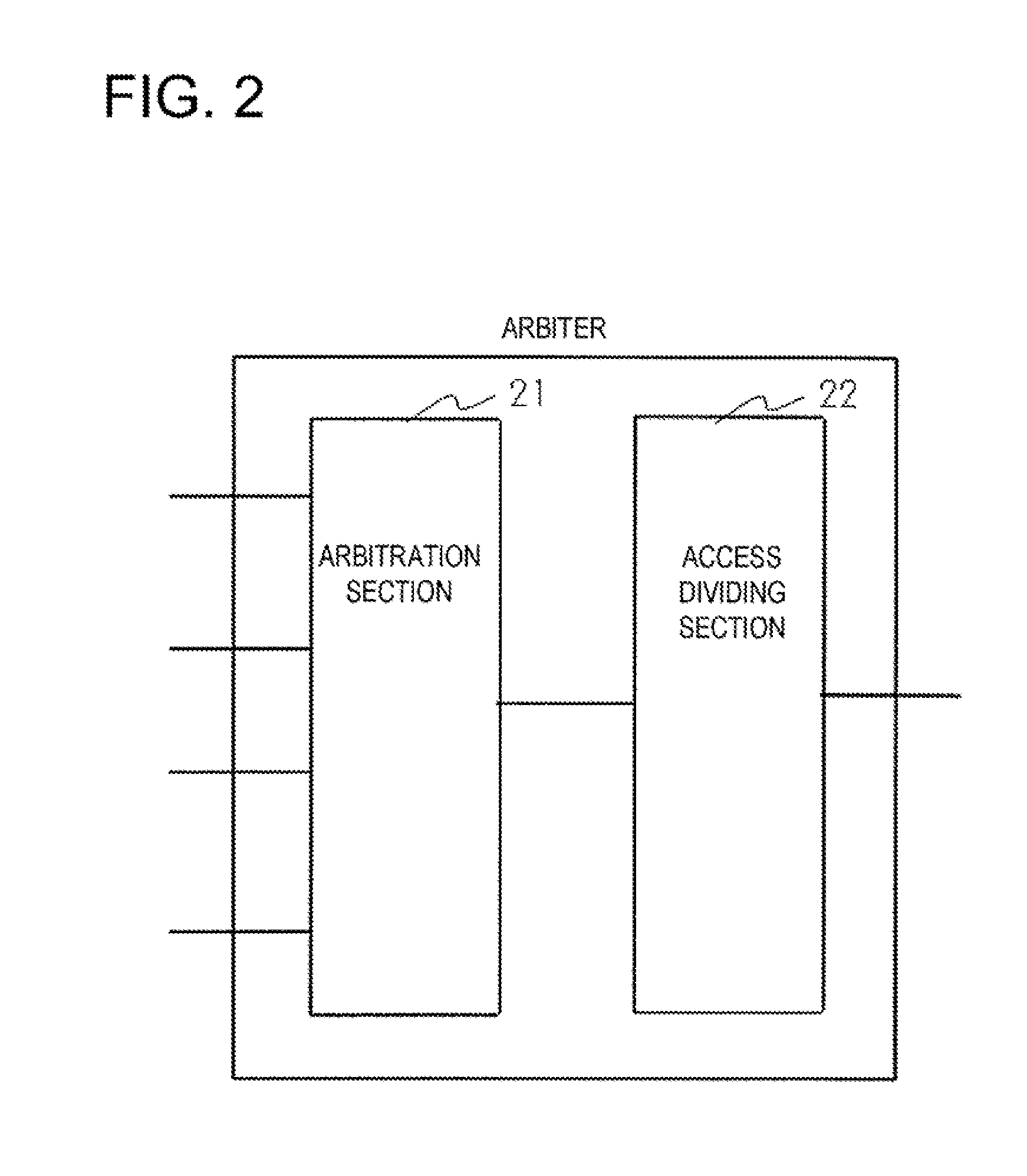

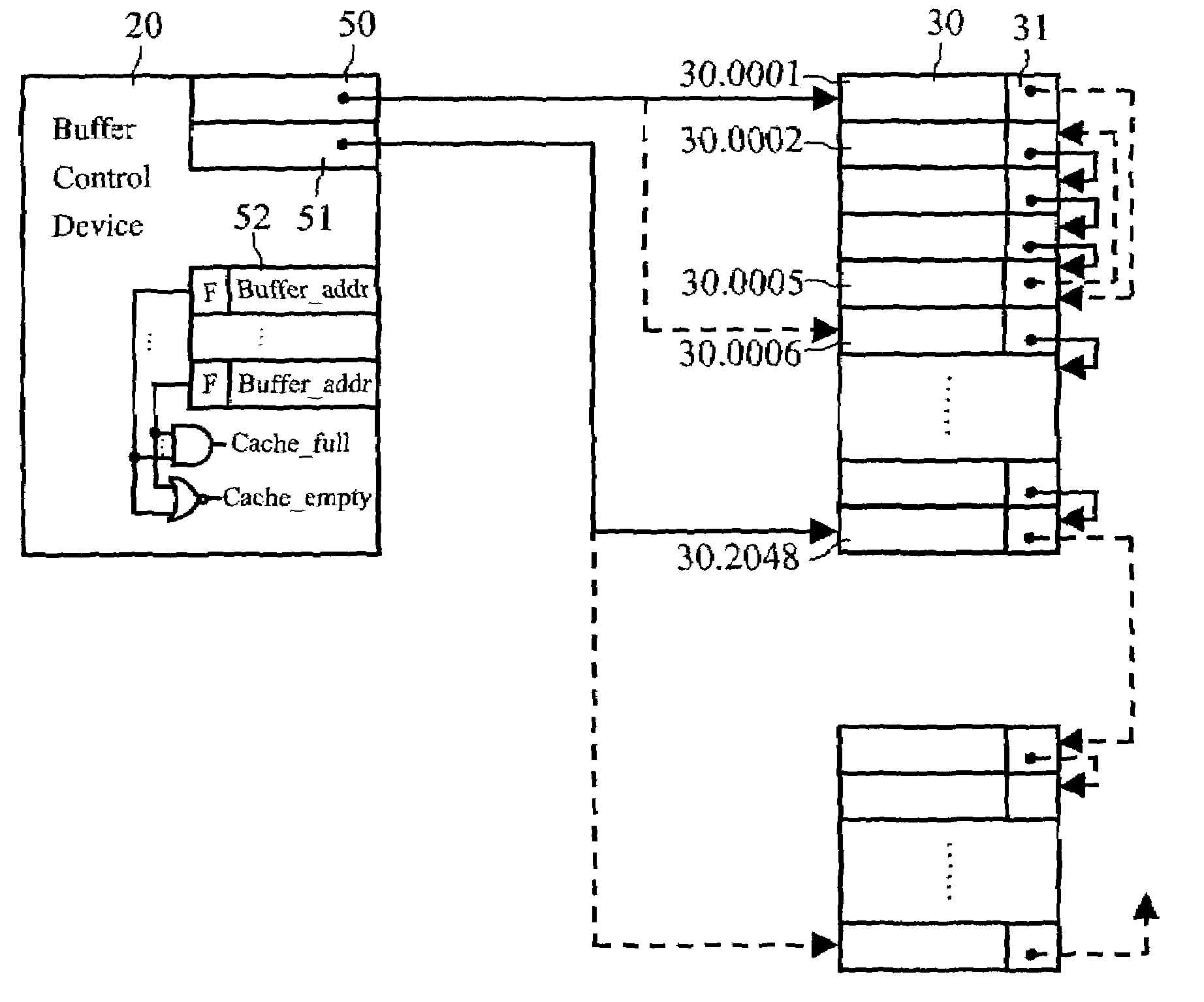

Memory access control apparatus

Inactive | US20100082877A1 | Improve memory access efficiency | Increase latency of memory access | Memory addressing/allocation/relocation | Memory controller | Access control

A memory access control apparatus includes an arbiter and a sub-arbiter receiving and arbitrating access requests from a plurality of memory masters; a memory controller; and a memory having a plurality of banks. When a bank of the memory used by an access request allowed by the arbiter and currently being executed and a bank of the memory to be accessed by an access request by the sub-arbiter are different and the type of access request allowed by the arbiter and currently being executed and the type of memory access to be performed by the sub-arbiter are identical, then it is decided that access efficiency will not decline, memory access by the arbiter is suspended and memory access by the sub-arbiter is allowed to squeeze in (FIG. 1).

Owner:NEC CORP
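
The decision rule stated in this abstract (different bank, same access type) reduces to a single predicate. The sketch below is only an illustration; the `Access` type and the function name are hypothetical and do not come from the patent.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Access:
    bank: int   # memory bank targeted by the request
    kind: str   # "read" or "write"


def sub_arbiter_may_interrupt(current: Access, pending: Access) -> bool:
    """Return True when the sub-arbiter's request may squeeze in.

    Per the abstract: the banks must differ and the access types must be
    identical, in which case access efficiency is judged not to decline.
    """
    return current.bank != pending.bank and current.kind == pending.kind
```

For example, a pending read to bank 2 may interrupt a read in progress on bank 0, but a pending write to bank 2 may not.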

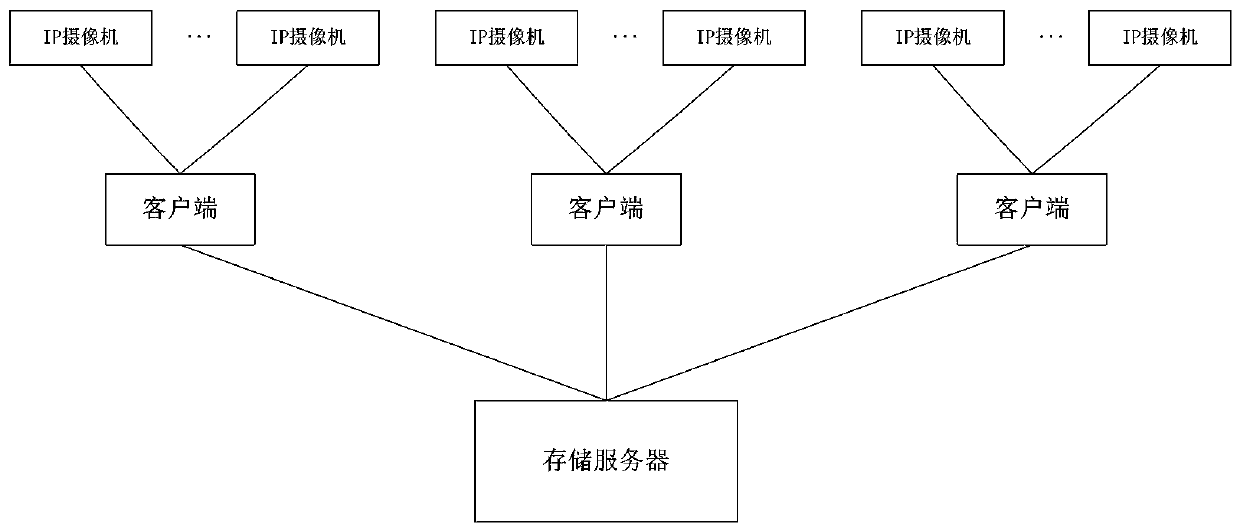

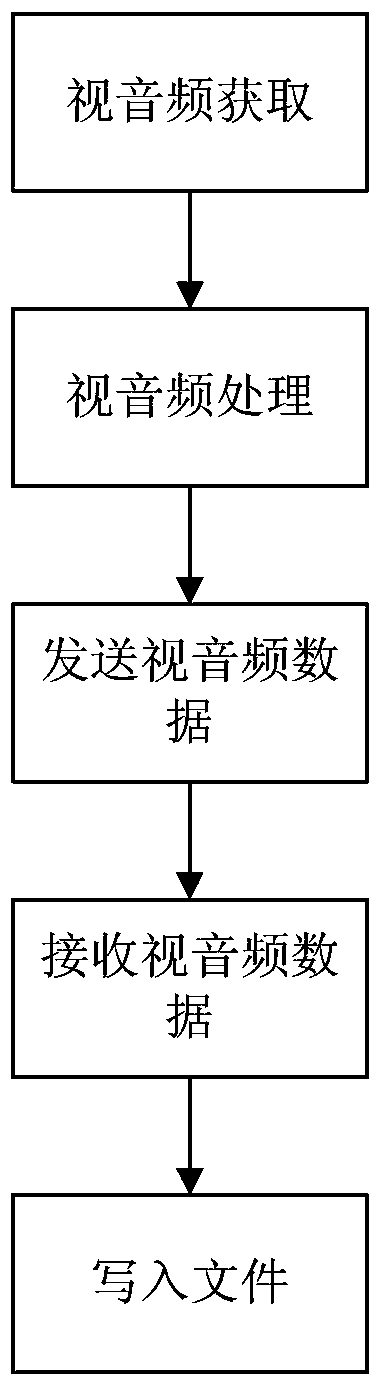

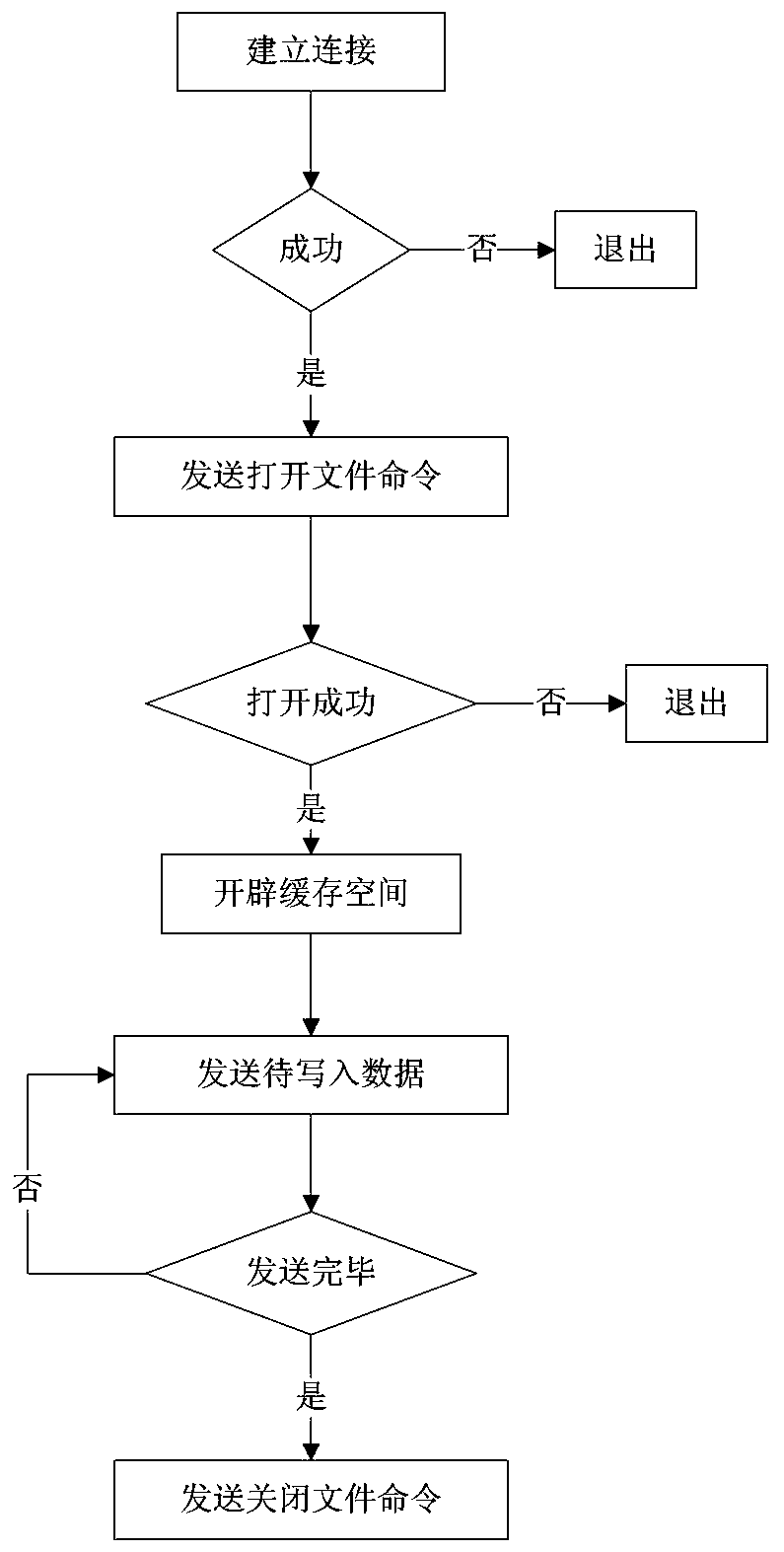

Method for quickly receiving and storing data in shared file system

Active | CN107749893A | Reduce page faults | Improve memory access efficiency | File access structures | Transmission | Client-side | User state

The invention relates to a method for quickly receiving and storing data in a shared file system. The method comprises the steps of: obtaining videos and audios; processing the videos and audios; sending video and audio data; receiving the video and audio data; and writing the data to a file. By using large-page memory, a user-mode network card driver technology and a data and metadata separation technology, a storage server can quickly receive multiple data streams sent by multiple clients and write them to the file, so that hundreds of video streams can be stored at the same time. The method effectively increases the concurrent access efficiency of the shared file system, and the more files are accessed, the more pronounced the effect; for the common education recording or video monitoring industries, the video storage efficiency is greatly increased.

Owner:BEIJING JINGYI QIANGYUAN TECH

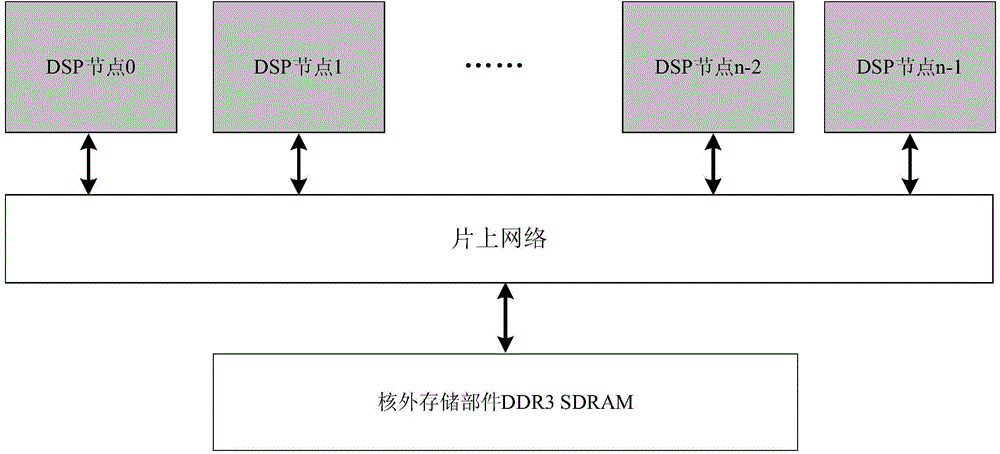

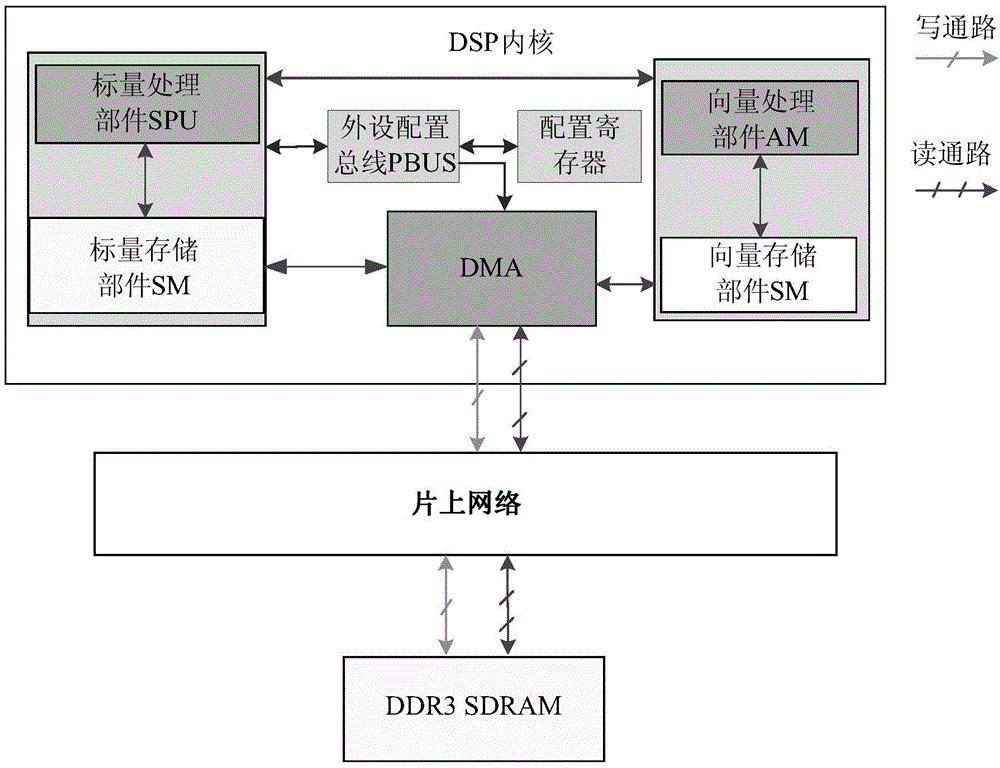

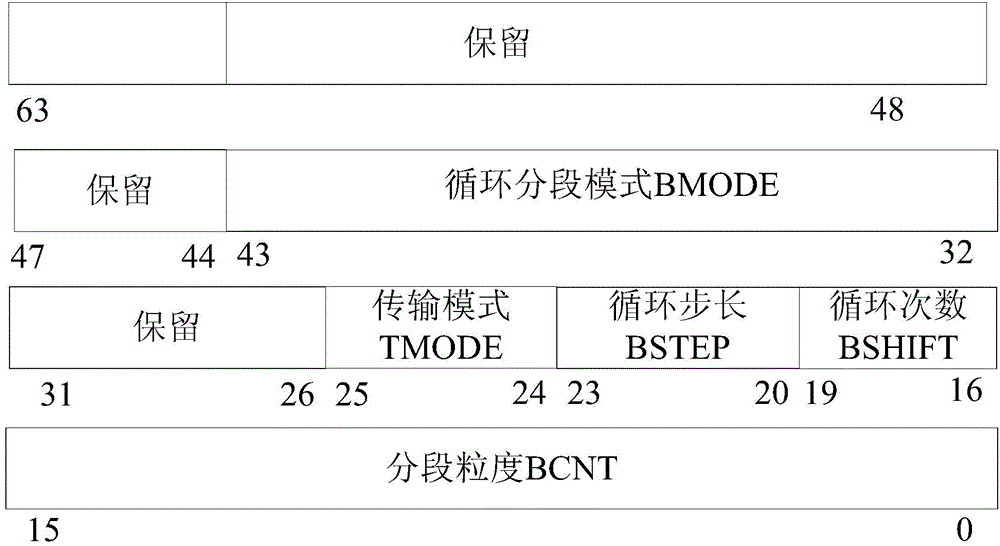

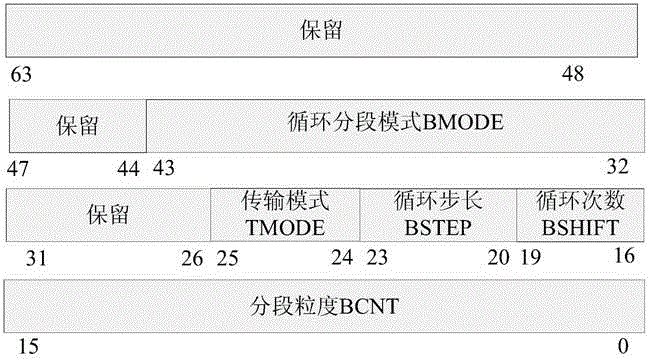

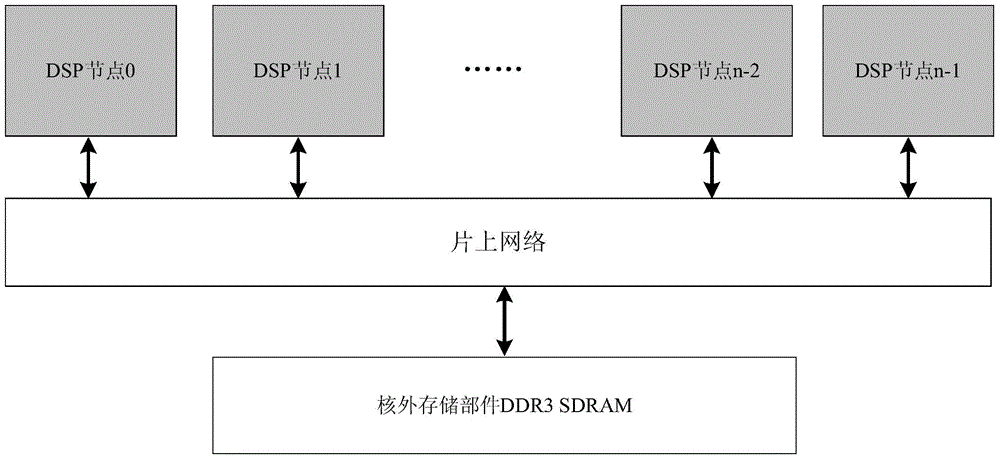

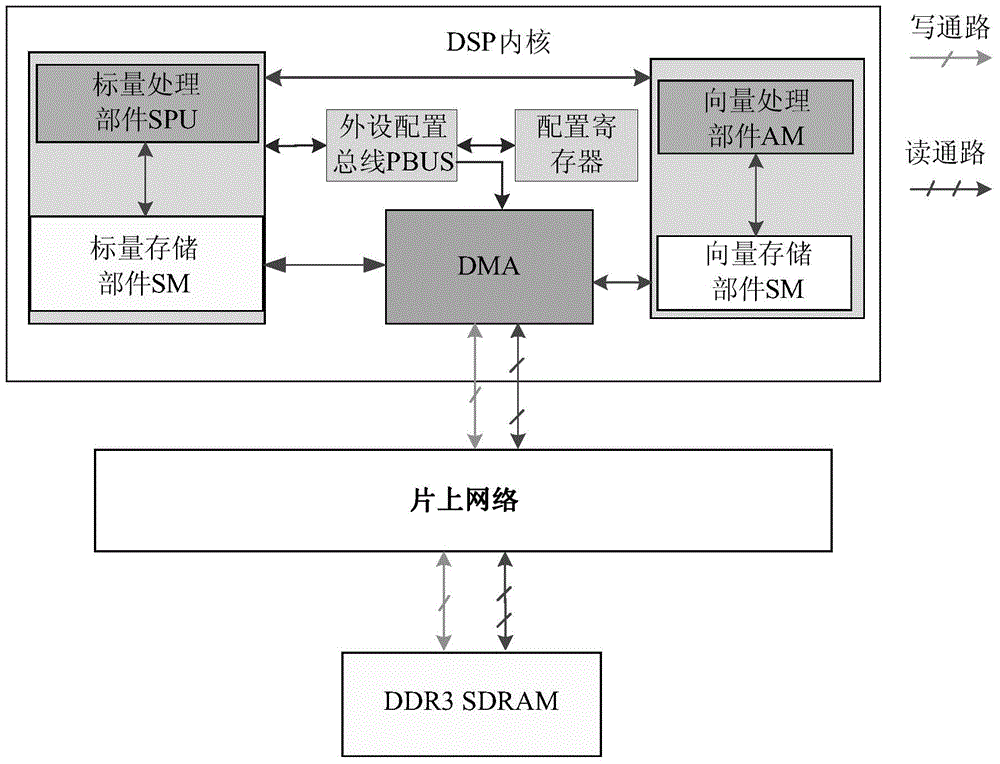

Multi-core DMA (direct memory access) subsection data transmission method used for GPDSP (general purpose digital signal processor) and adopting slave counting

Active | CN104679689A | Lighten the load on the on-chip network | Improve memory access efficiency | Electric digital data processing | Data transmission | Core Storage

The invention discloses a multi-core DMA (direct memory access) subsection data transmission method used for a GPDSP (general purpose digital signal processor) and adopting slave counting. The method includes: (1) the host DMA starts and generates subsection data transmission requests according to configuration parameters, and each subsection data transmission read request sent by the DMA carries a return data selection vector that indicates the target DSP cores of the returned data; (2) the data returned by the off-core storage part carries the return data selection vector corresponding to the read request, and the on-chip network interprets this signal field and sends the data to the DSP cores corresponding to the valid bits of the vector; (3) after receiving the returned data, the DMA of each DSP core forwards the data to the in-core storage part AM or SM and counts at the same time; (4) after counting is completed, the service-complete identifier register is set. The method has the advantages of simple principle, convenient operation, flexible configuration, higher memory access efficiency and the like.

Owner:NAT UNIV OF DEFENSE TECH

Multi-core DMA (direct memory access) subsection data transmission method used for GPDSP and adopting host counting

Active | CN104679691A | Reduce the amount of access requests | Effective perception of memory access characteristics | Electric digital data processing | Direct memory access | Transfer procedure

The invention discloses a multi-core DMA (direct memory access) subsection data transmission method used for GPDSP and adopting host counting. In the method, the host DMA starts and generates subsection data transmission requests according to configuration parameters, and each subsection data transmission read request sent by the host DMA carries a return data selection vector marking the target nodes of the returned data; each bit of the return data selection vector indicates whether or not the corresponding core is a target node of the returned read data. When the data corresponding to the read request are returned, the on-chip network distributes the data to the corresponding DMAs according to the return data selection vector. The host DMA counts the transmitted data; after counting is completed, the host DMA sends signals for emptying the receive cache to all slave DMAs taking part in the transmission service; after the slave DMAs empty their receive caches, the data transmission service is completed. The method has the advantages of simple principle, convenient operation, flexible configuration, high memory access efficiency and the like.

Owner:NAT UNIV OF DEFENSE TECH

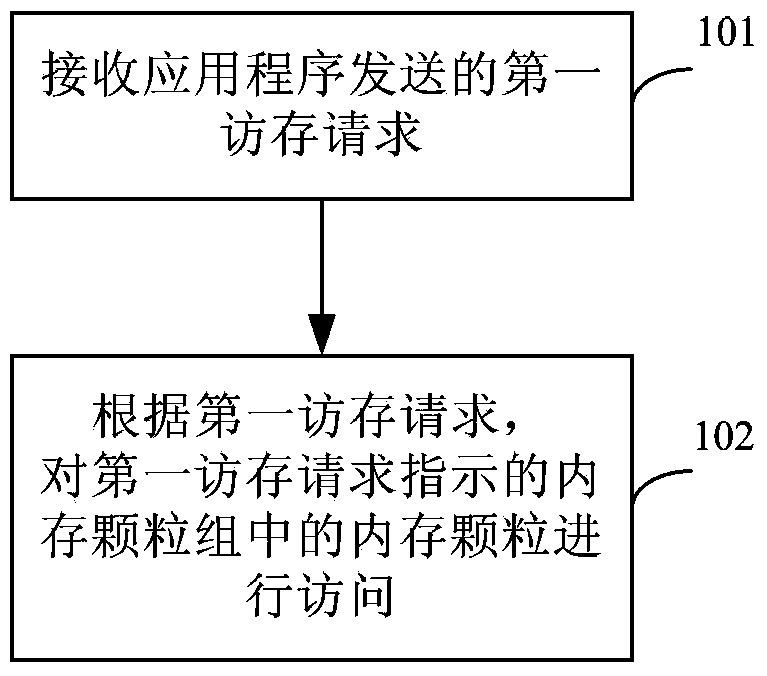

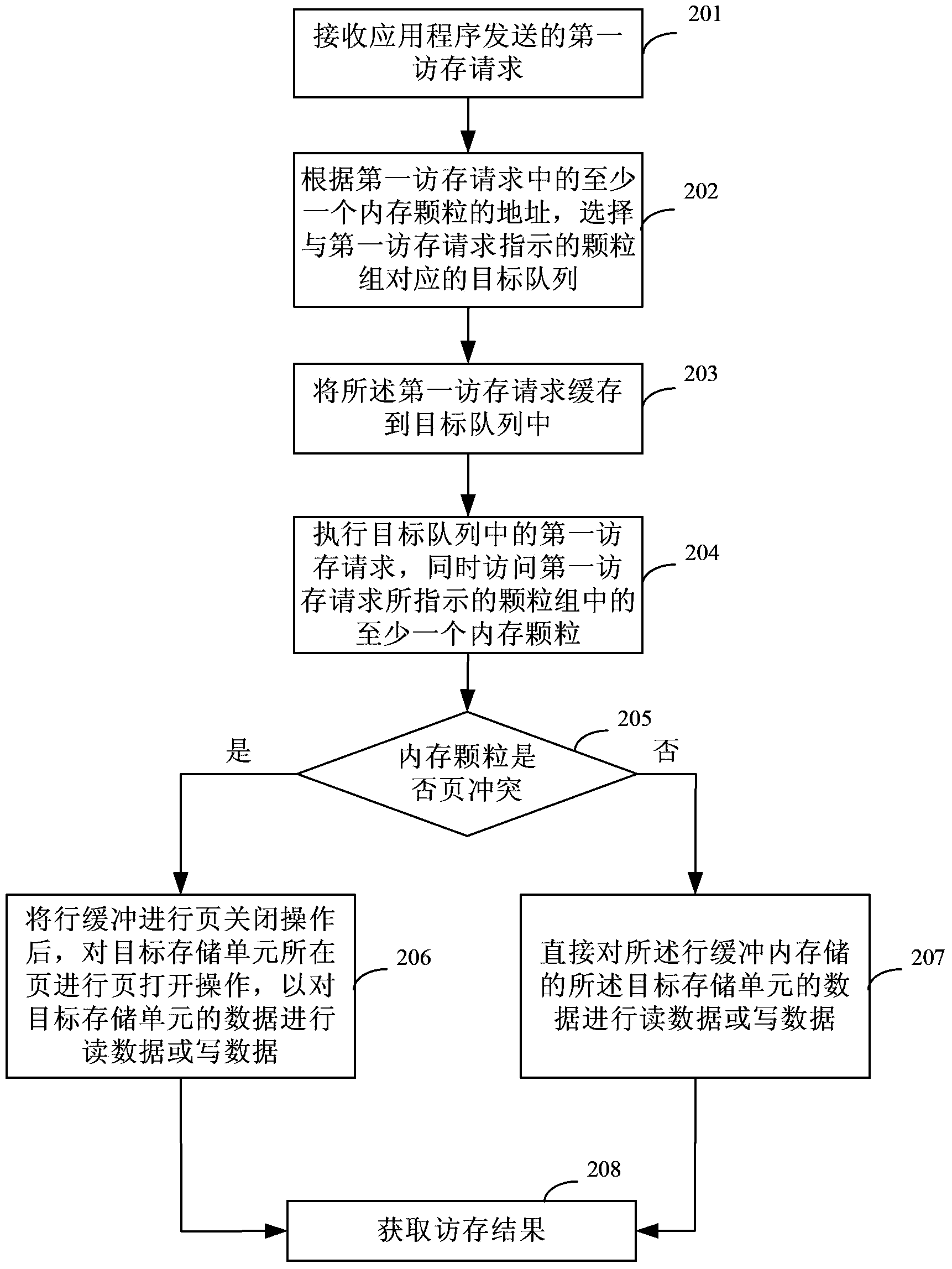

Memory access method and memory controller

Inactive | CN104252422A | Reduce the possibility | Improve efficiency | Memory addressing/allocation/relocation | Program control | Memory controller | Memory chip

The invention provides a memory access method and a memory controller. A memory access request is sent according to an application program, and at least one memory chip comprised by a memory chip set indicated by the memory access request is accessed; the memory chip in the memory chip set is determined by the application program according to the memory access bandwidth requirement, and different application programs have different memory access bandwidth requirements, therefore, memory access requests sent by different application programs indicate at least one of different memory chips, so that different memory chips can be respectively accessed by different application programs, and thus a line buffer memory in each memory chip has pages with different page addresses, the page conflicts in all memory chips are avoided, the possibility of page conflicts is reduced, and the efficiency of memory access is improved.

Owner:HUAWEI TECH CO LTD +1

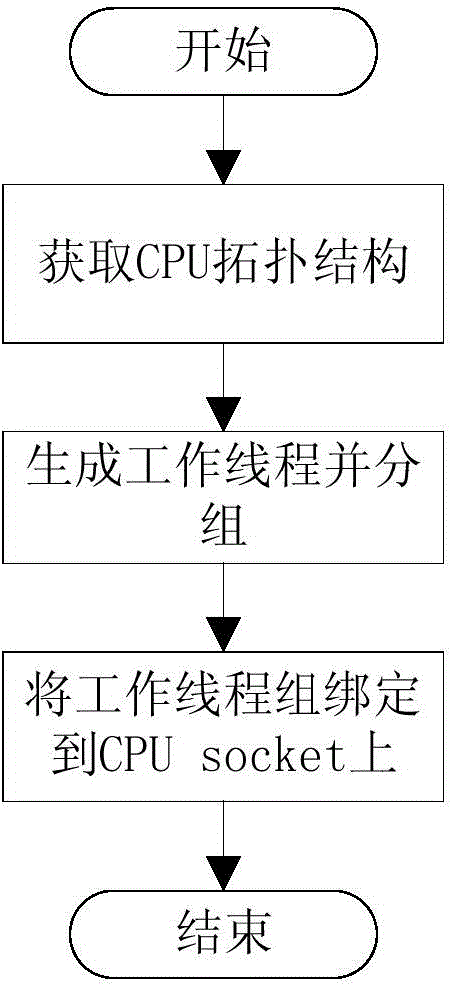

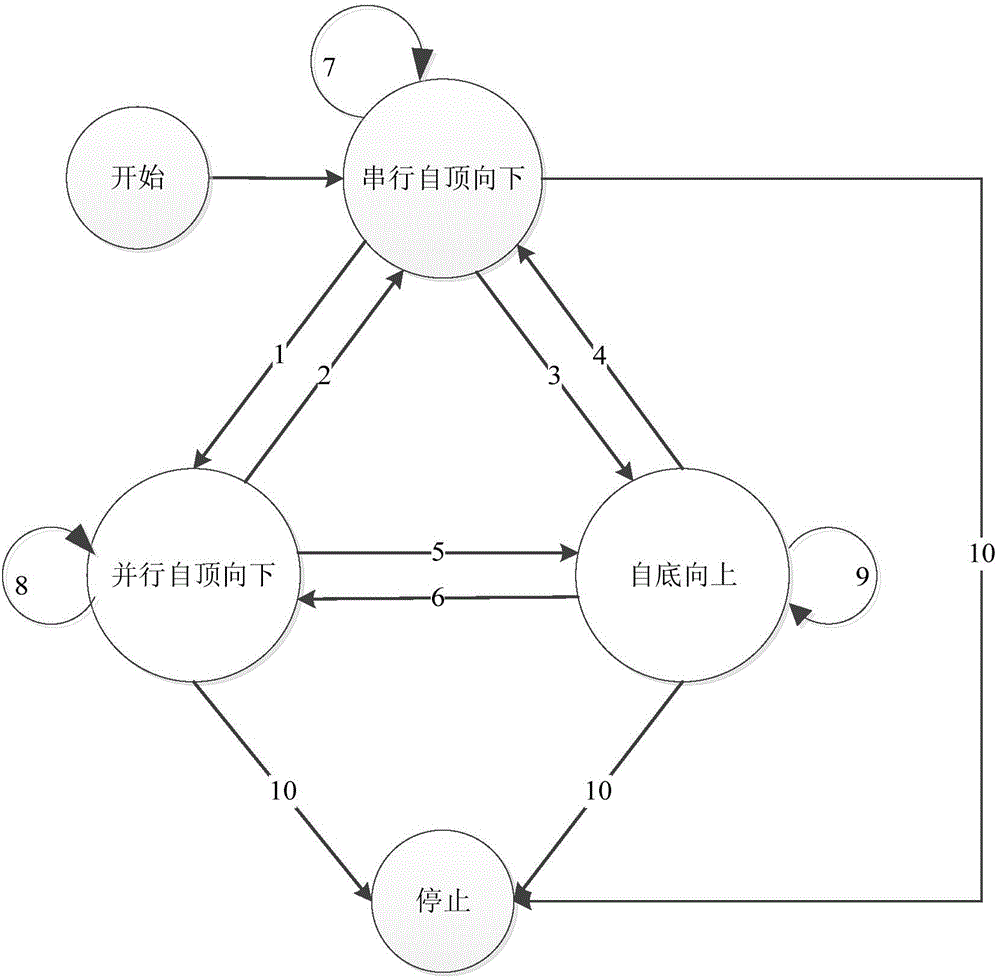

Parallel breadth-first search method based on shared memory system structure

Inactive | CN106033442A | Reduce access conflicts | Improve memory access efficiency | Special data processing applications | CPU socket | Shared memory architecture

The invention discloses a parallel breadth-first search method based on a shared memory system structure. The method comprises a worker thread grouping and binding process, a data interval partition process, and a task execution process. Specifically, the method comprises: obtaining the CPU topology of the operating environment, and partitioning and binding worker thread groups; partitioning the data into intervals and mapping the data intervals to the worker thread groups; and executing the task with a level-by-level traversal, in which each level automatically selects the appropriate execution state from a serial top-down state, a parallel top-down state and a bottom-up state, and task execution ends when the count of active vertices in a level is zero. The method reduces communication among CPU sockets through task partitioning, eliminates data consistency maintenance among the CPU sockets, and improves data reading efficiency through data binding and automatic state switching, so as to improve the operating efficiency of the program.

Owner:PEKING UNIV

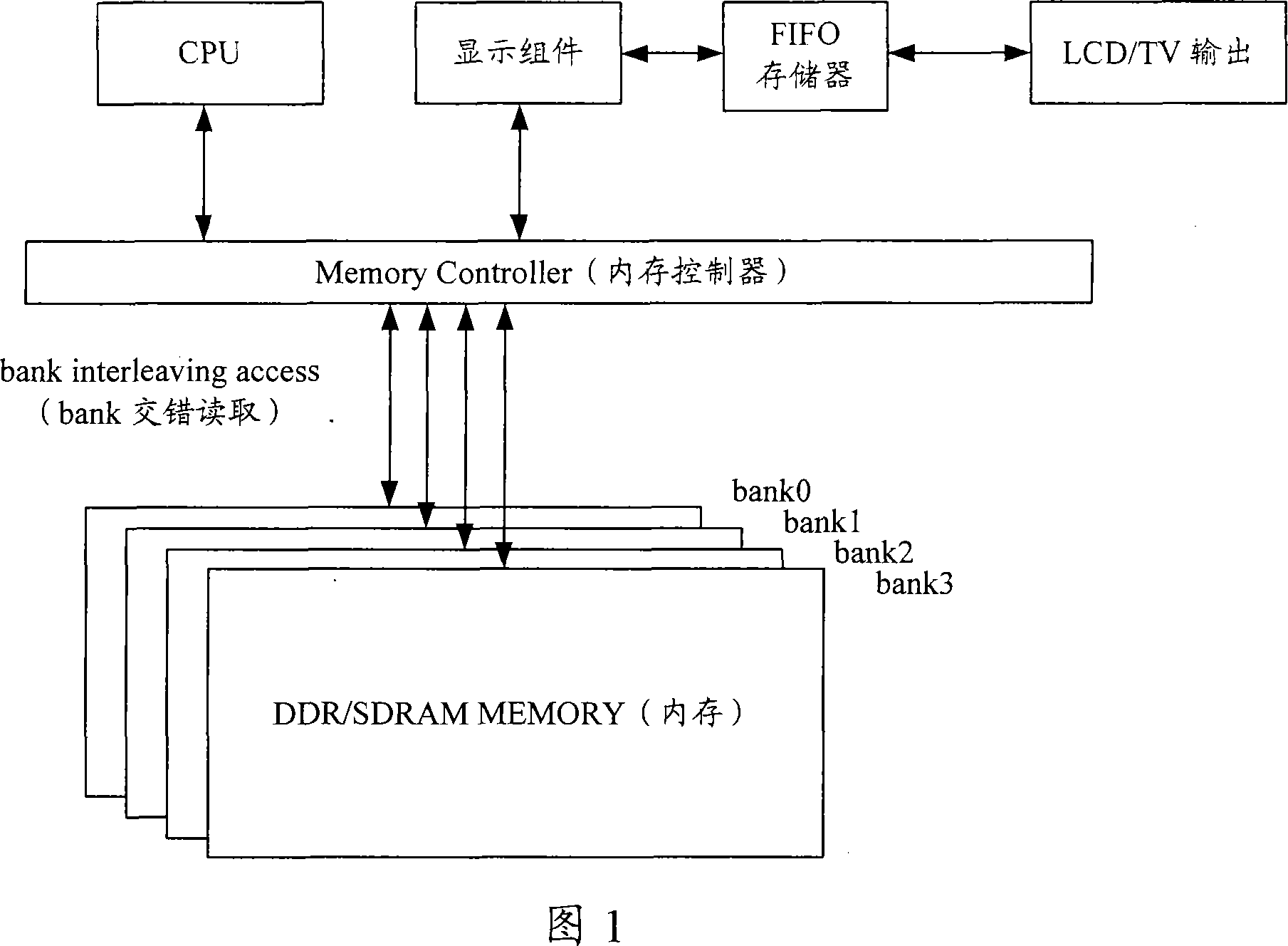

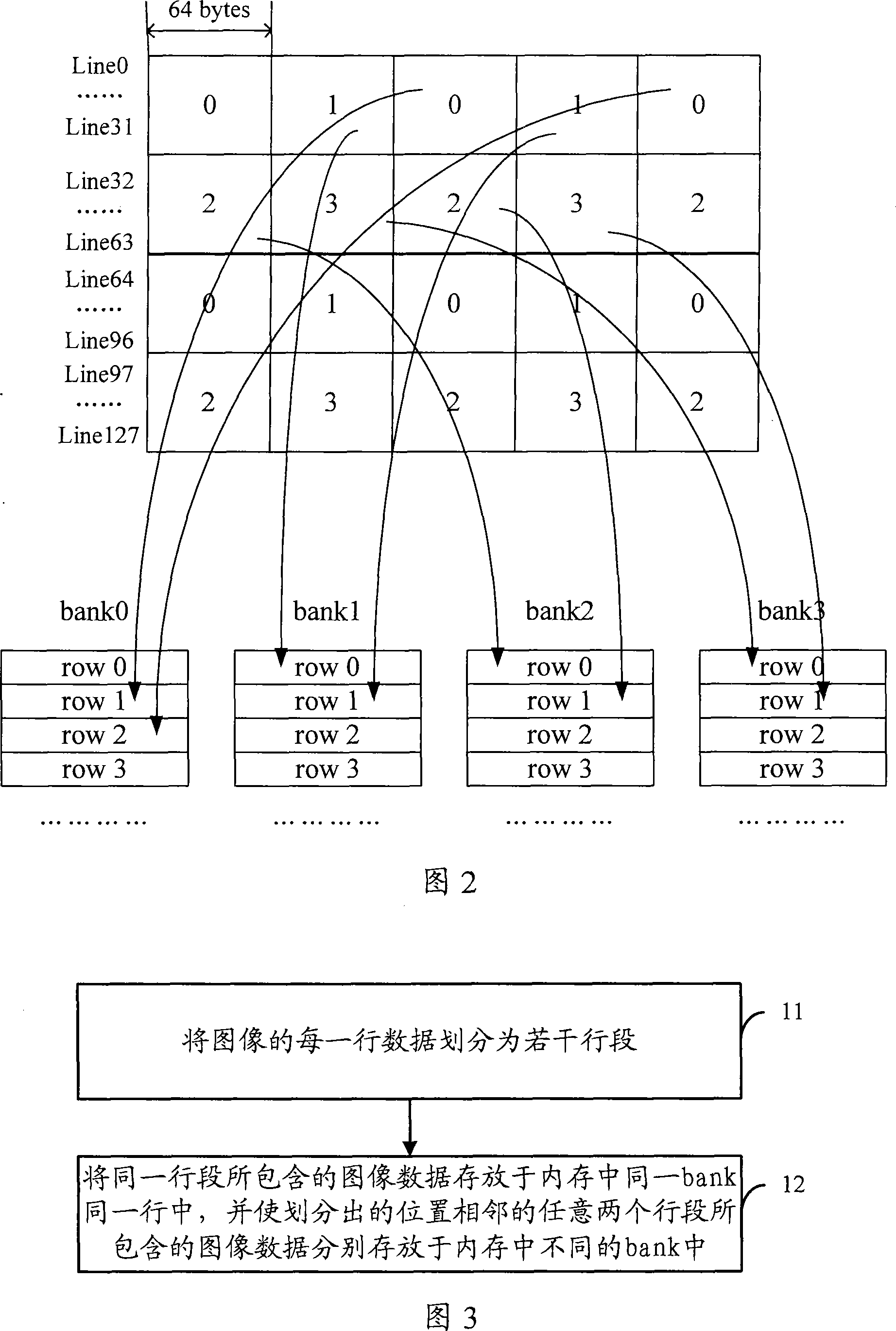

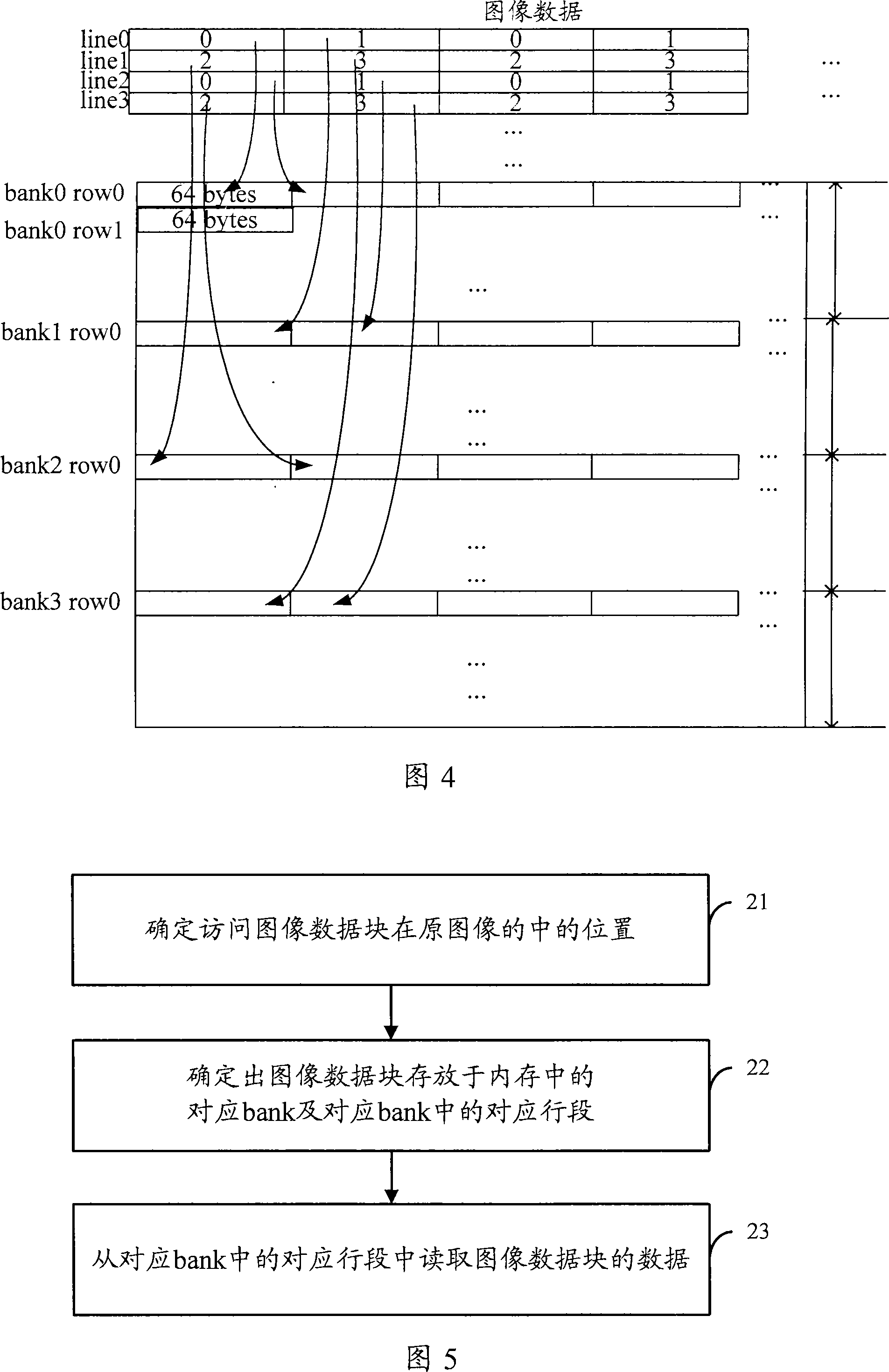

An image data memory mapping method, access method and device

Inactive | CN101216933A | Low memory frequency | Reduce power consumption | Image memory management | Television systems | Access method | Data memory

The invention discloses a memory mapping method, an access method and a device. The mapping method comprises: dividing each row of the image data of an image into a plurality of row sections, storing the image data of the same row section in the same row of the same bank in a memory, and storing the image data of any two adjacent image rows in different banks of the memory. Accessing an image block in the memory comprises: first determining the position of the image block in the image, then determining the bank, and the row section within that bank, in which the image data block is stored, and reading the data of the image data block from that row section of that bank. When image data accesses run concurrently with other real-time memory accesses, the scheme provided by the embodiment can still hide the preparation time of memory accesses by switching between banks, and significantly improves the memory access efficiency.

Owner:ACTIONS SEMICONDUCTOR
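
One possible address mapping that satisfies the two constraints in this abstract (a row section lives in one row of one bank; adjacent image rows land in different banks) is sketched below. The round-robin bank choice, the parameter names, and the memory-row numbering are assumptions for illustration, not the mapping claimed in the patent.

```python
def map_pixel(x, y, image_width, segment_len, num_banks):
    """Map image coordinate (x, y) to (bank, memory_row, column) (sketch).

    segment_len: assumed number of pixels that fit in one memory row.
    num_banks:   number of banks; must be at least 2 so that adjacent
                 image rows fall into different banks.
    """
    segments_per_row = -(-image_width // segment_len)  # ceiling division
    bank = y % num_banks            # adjacent image rows alternate banks
    segment = x // segment_len      # which row section of the image row
    # Each (image row, row section) pair gets its own memory row in its bank.
    memory_row = (y // num_banks) * segments_per_row + segment
    column = x % segment_len
    return bank, memory_row, column
```

With this layout, a block that spans consecutive image rows touches a different bank on each row, so the activation of the next bank's row can overlap the data transfer from the current bank.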

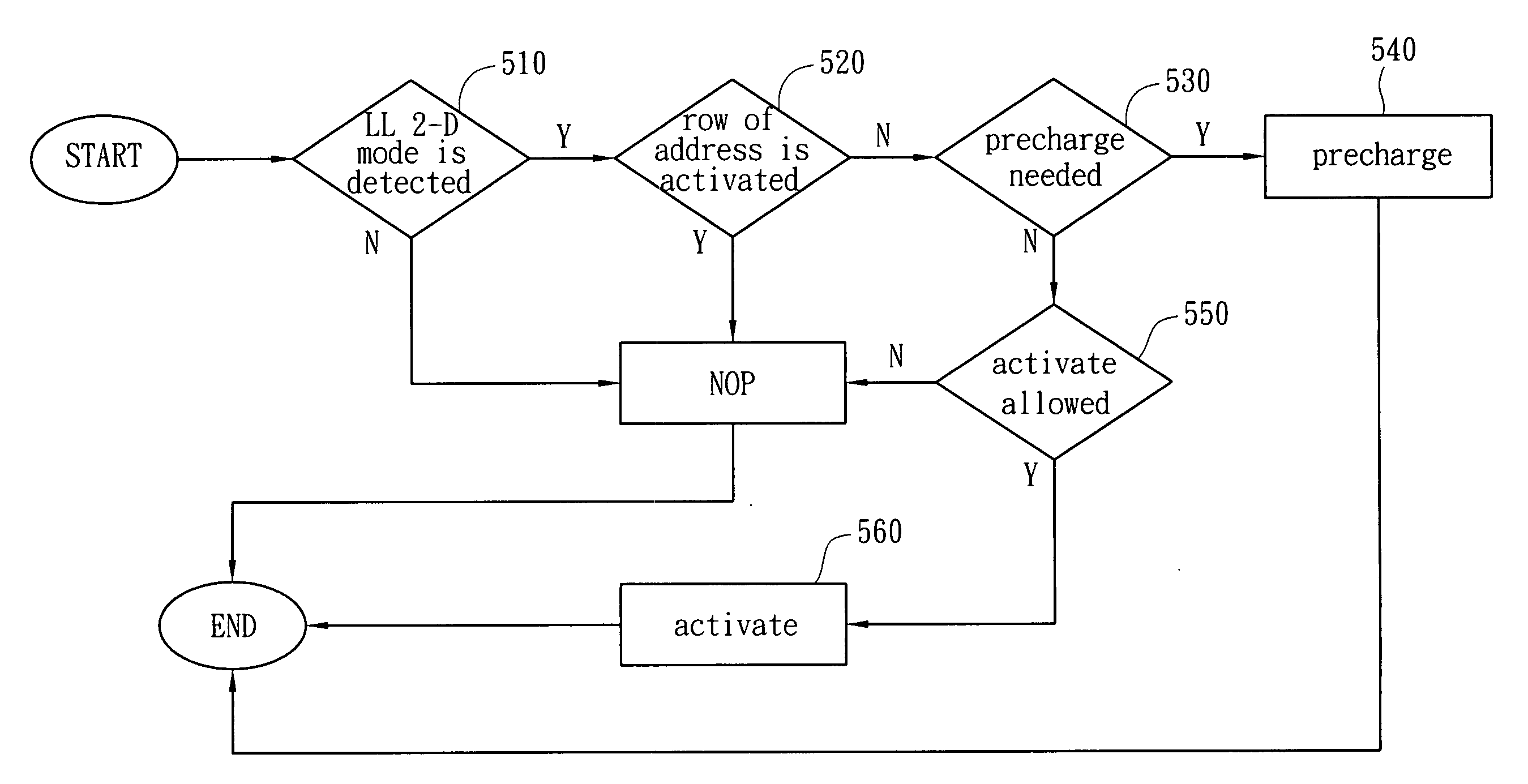

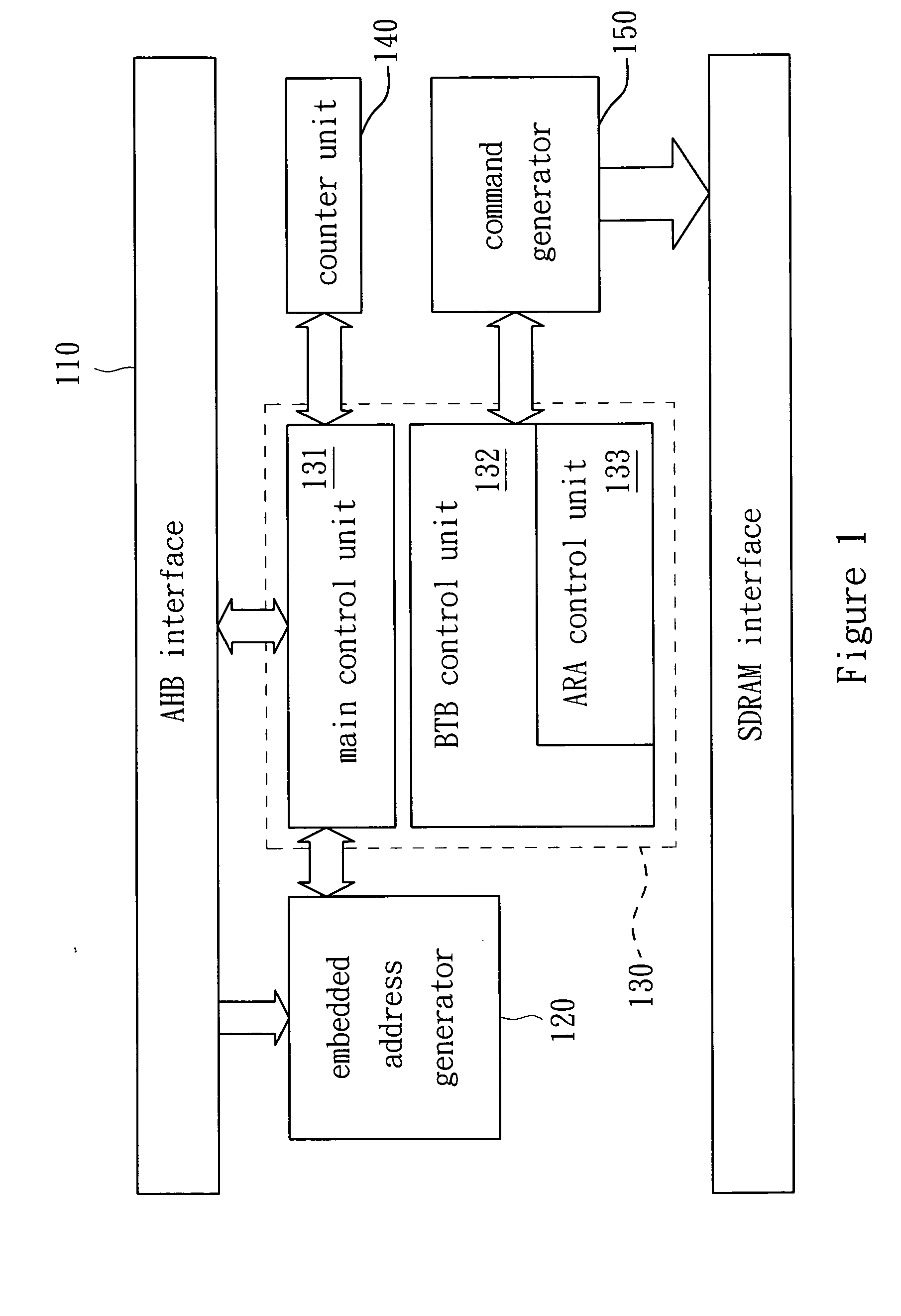

Memory controlling method

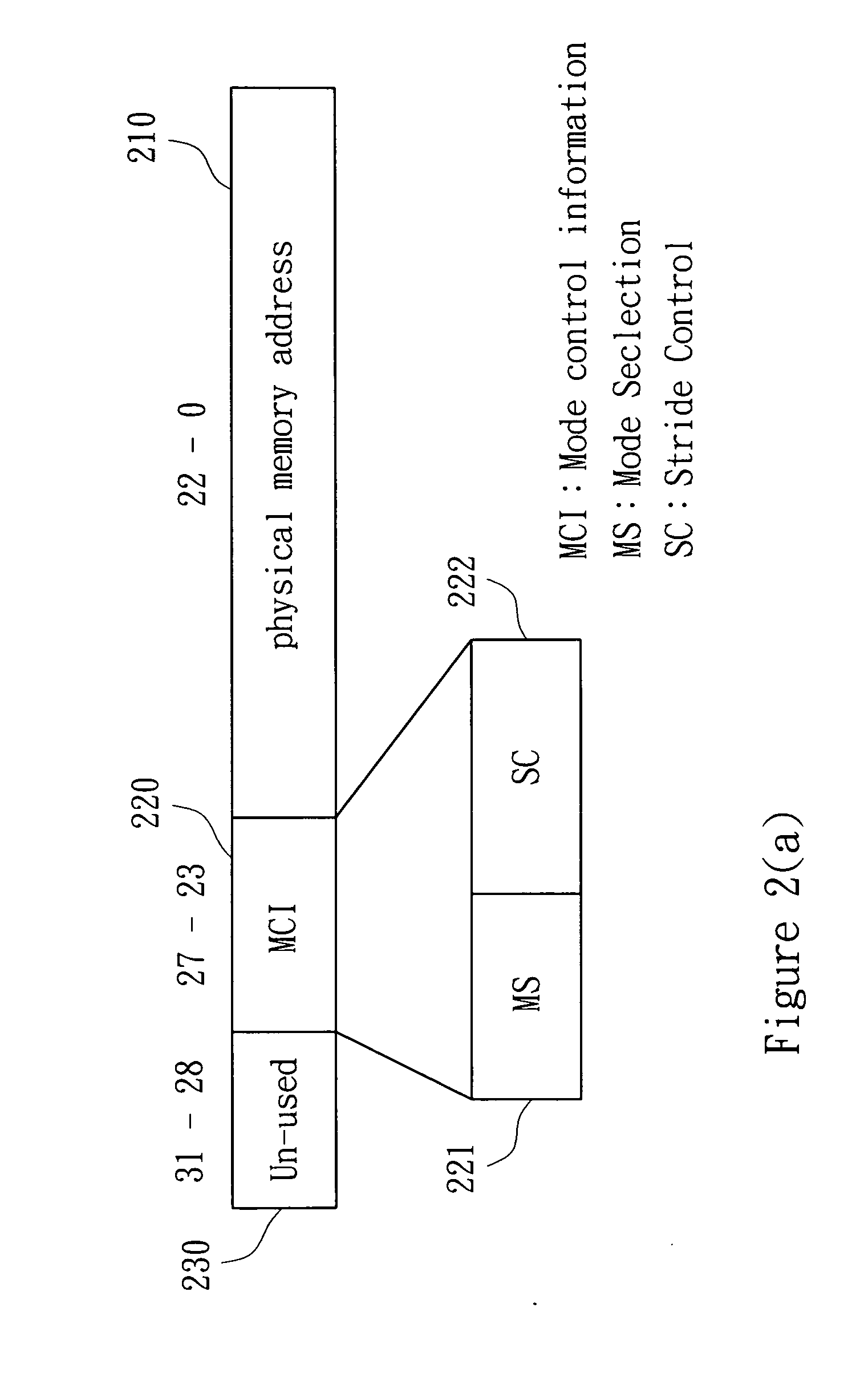

Inactive | US20080065819A1 | Lower latency | Improve memory access efficiency | Energy efficient ICT | Memory addressing/allocation/relocation | Bus interface | Address generator

A method for memory controlling is disclosed. It includes an embedded address generator and a burst-terminates-burst controlling scheme, which can eliminate the latency caused by the bus interface during access to non-continuous addresses. Moreover, it includes an anticipative row activating controlling scheme, which can reduce the latency incurred when data accesses cross different rows of memory. The method can improve the access efficiency and power consumption of memory.

Owner:NATIONAL CHUNG CHENG UNIV

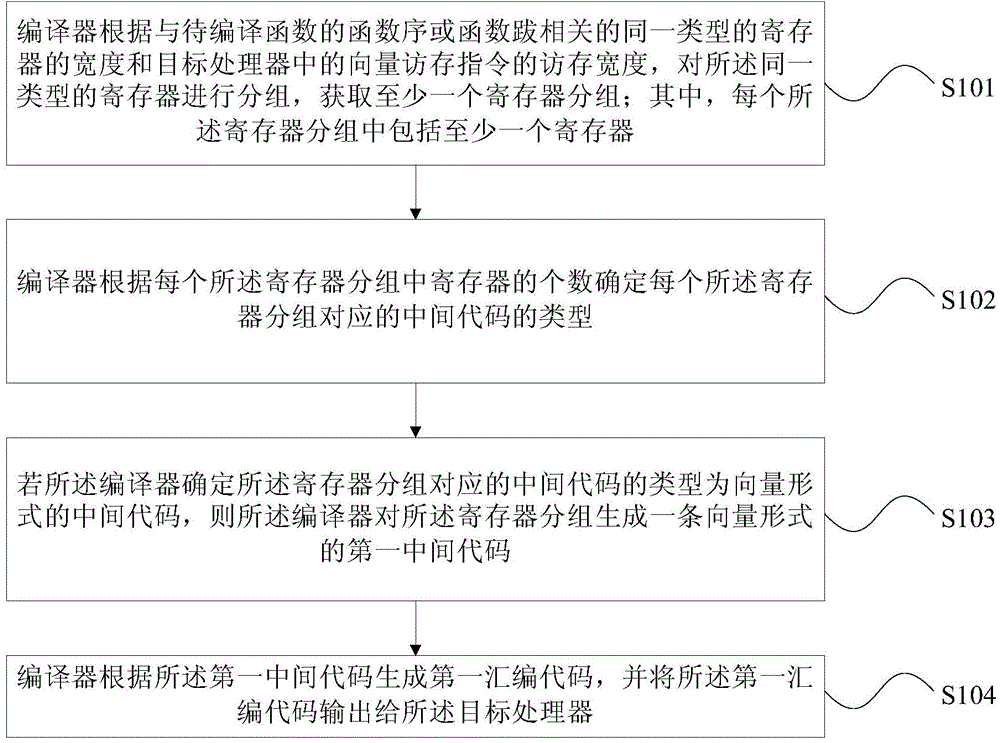

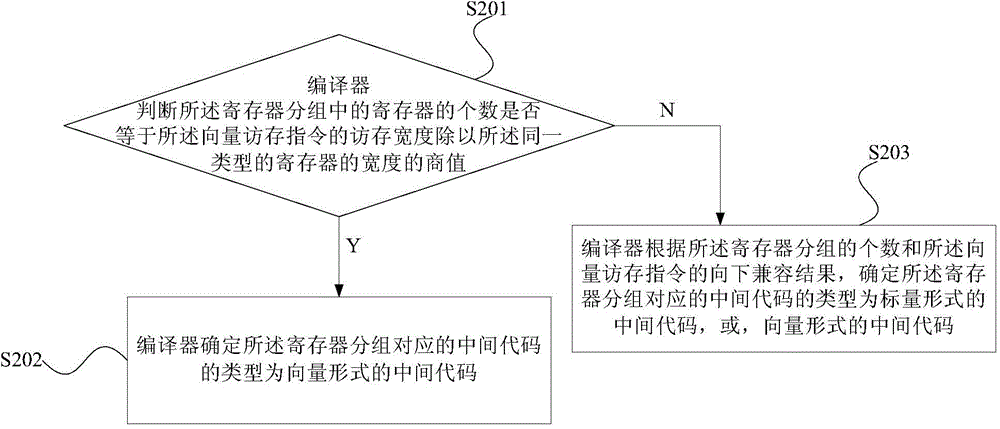

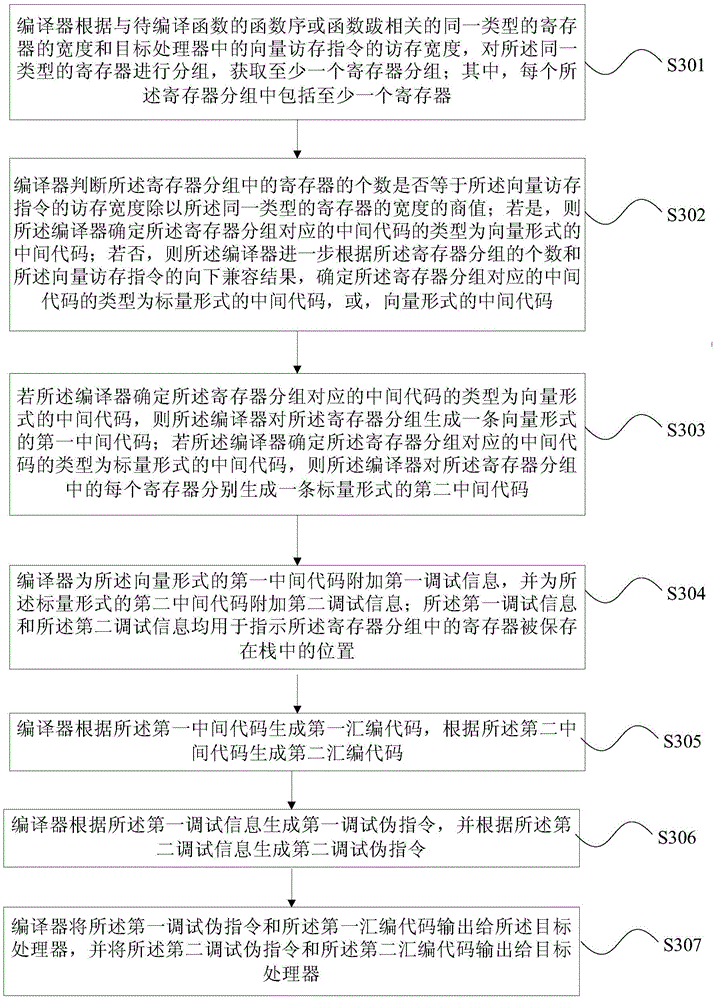

Access optimization compiling method and device for functions

Active | CN106201641A | Relieve pressure | Reduce in quantity | Program control | Memory systems | Fist | Processor register

The invention provides an access optimization compiling method and device for functions. The method comprises the following steps: a compiler groups the registers of the same type involved in the function prologue or function epilogue of a to-be-compiled function according to the widths of those registers and the access width of a vector access instruction in a target processor, so as to obtain register groups, and determines the type of the intermediate code corresponding to each register group according to the number of registers in that group; if the type of the intermediate code corresponding to a register group is an intermediate code in vector form, a first intermediate code in vector form is generated for the register group; and the compiler generates a first assembly code according to the first intermediate code and outputs the first assembly code to the target processor. According to the method provided by the embodiments of the invention, the number of instructions in the function prologue or function epilogue is greatly decreased, the space for storing the instructions is saved, the memory access efficiency of a computer is improved and the register pressure is decreased.

Owner:LOONGSON TECH CORP

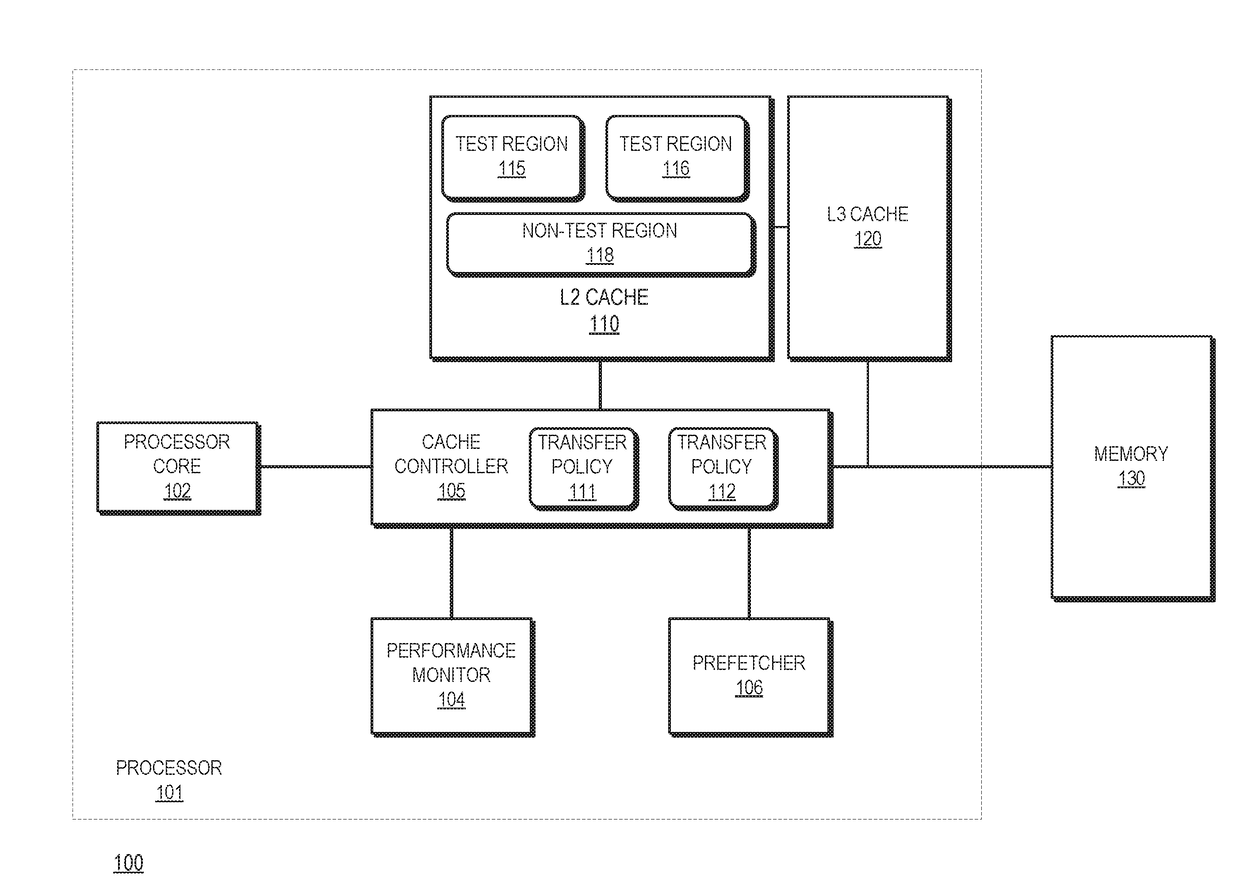

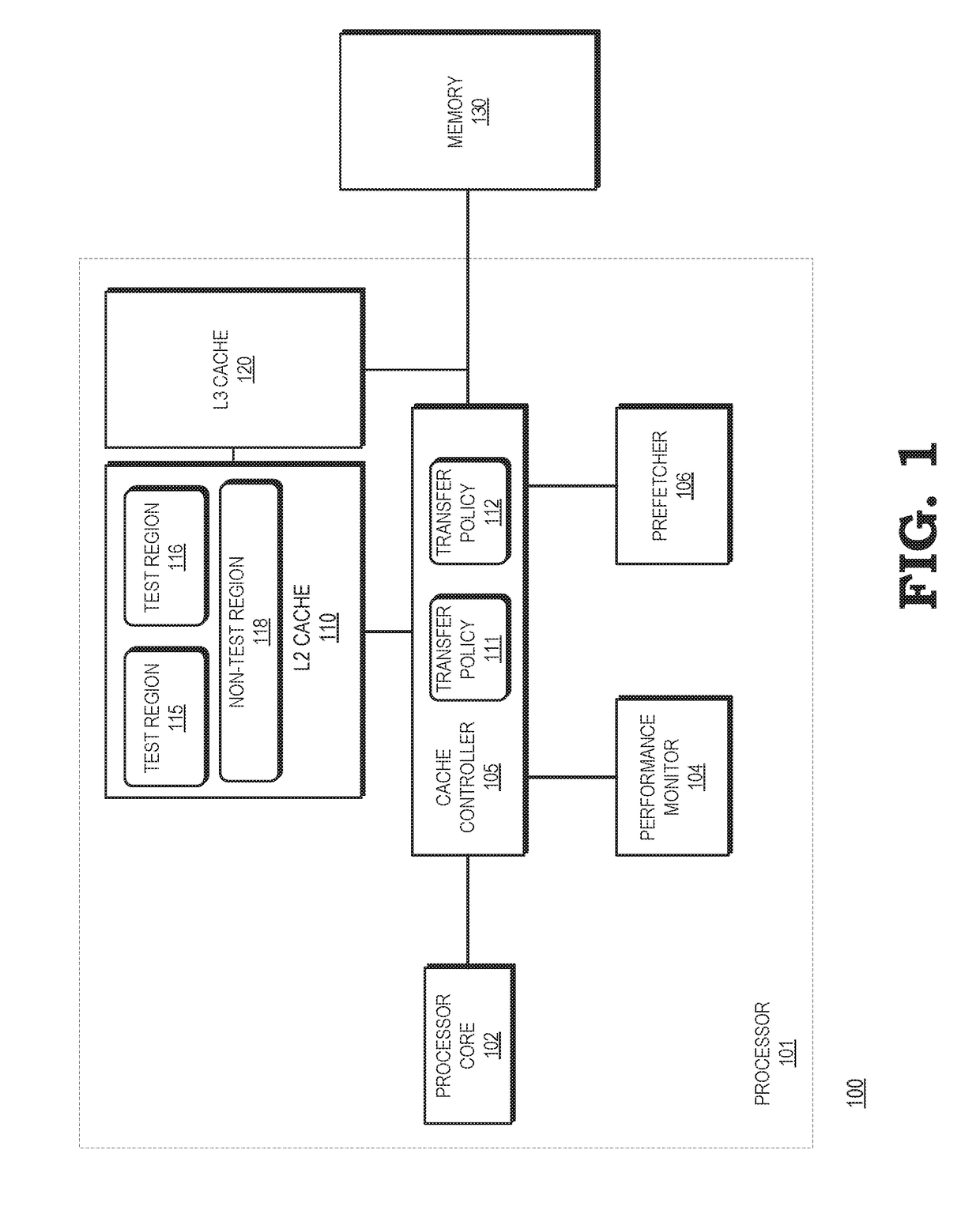

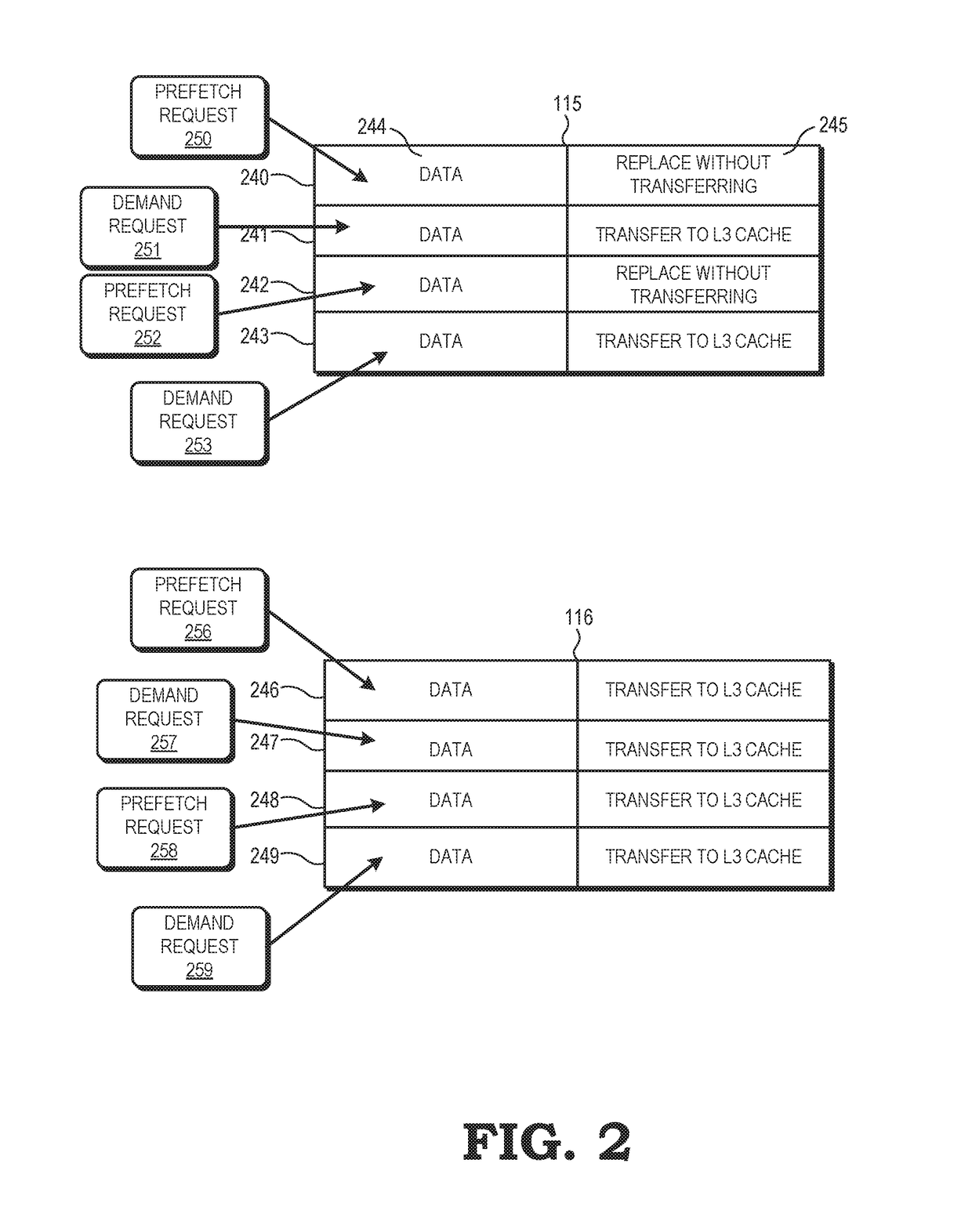

Selecting cache transfer policy for prefetched data based on cache test regions

Active | US20180024931A1 | Improve memory access efficiency | Low efficiency | Memory architecture accessing/allocation | Memory systems | Cache hierarchy | Invalid Data

A processor applies a transfer policy to a portion of a cache based on access metrics for different test regions of the cache, wherein each test region applies a different transfer policy for data in cache entries that were stored in response to a prefetch request but were not the subject of demand requests. One test region applies a transfer policy under which unused prefetches are transferred to a higher level cache in a cache hierarchy upon eviction from the test region of the cache. The other test region applies a transfer policy under which unused prefetches are replaced without being transferred to a higher level cache (or are transferred to the higher level cache but stored as invalid data) upon eviction from the test region of the cache.

Owner:ADVANCED MICRO DEVICES INC

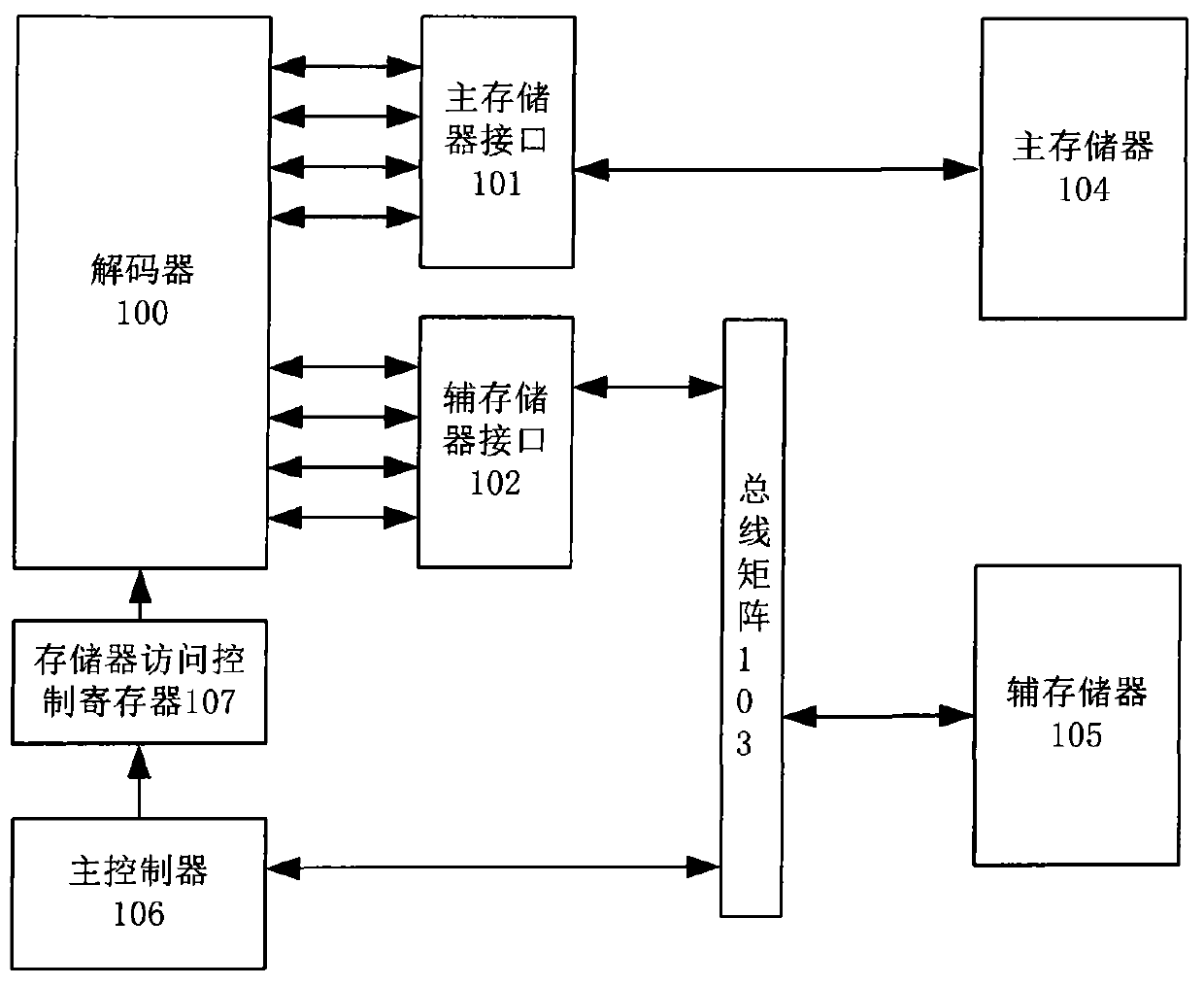

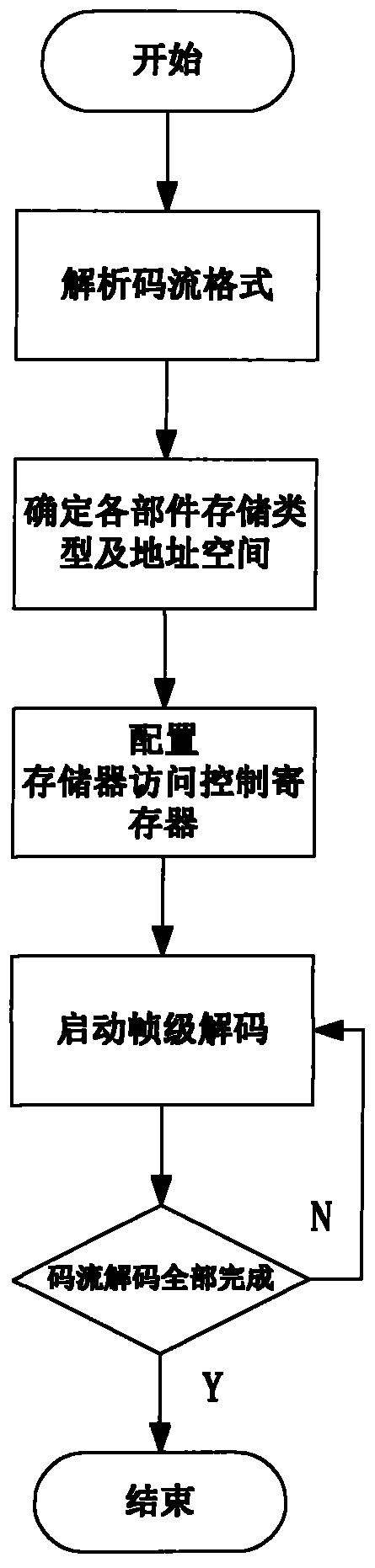

Video decoder of dual-buffer-memory structure and control method

Inactive | CN103957419A | Reduce bandwidth requirements | Flexible allocation | Digital video signal modification | Processor register | Memory interface

The invention provides a video decoder of a dual-buffer-memory structure. The video decoder comprises a storage access control register, a main storage interface, an auxiliary storage interface, a bus matrix, a main storage, an auxiliary storage and a decoder body. According to the format of the input compressed video streams, a main controller configures, through the storage access control register, the storages used by the hardware functional modules of the decoder body, and sets the corresponding memory access addresses and spaces. After the decoder body receives a decoding start command sent by the main controller, the hardware functional modules in the decoder body are started and execute concurrently, and each sends its memory access requests to the main storage interface or the auxiliary storage interface according to the setting of the storage access control register. The main storage interface and the auxiliary storage interface receive the memory access requests of the hardware functional modules of the decoder body and access the corresponding storage after arbitration. The method and circuit adopt a centralized storage management mode, in which different storage access modes are configured for the hardware functional modules of the decoder body according to video streams of multi-standard compressed encoding formats, so that storage area is saved and the bandwidth requirements of the system are effectively reduced.

Owner:CHINA AGRI UNIV

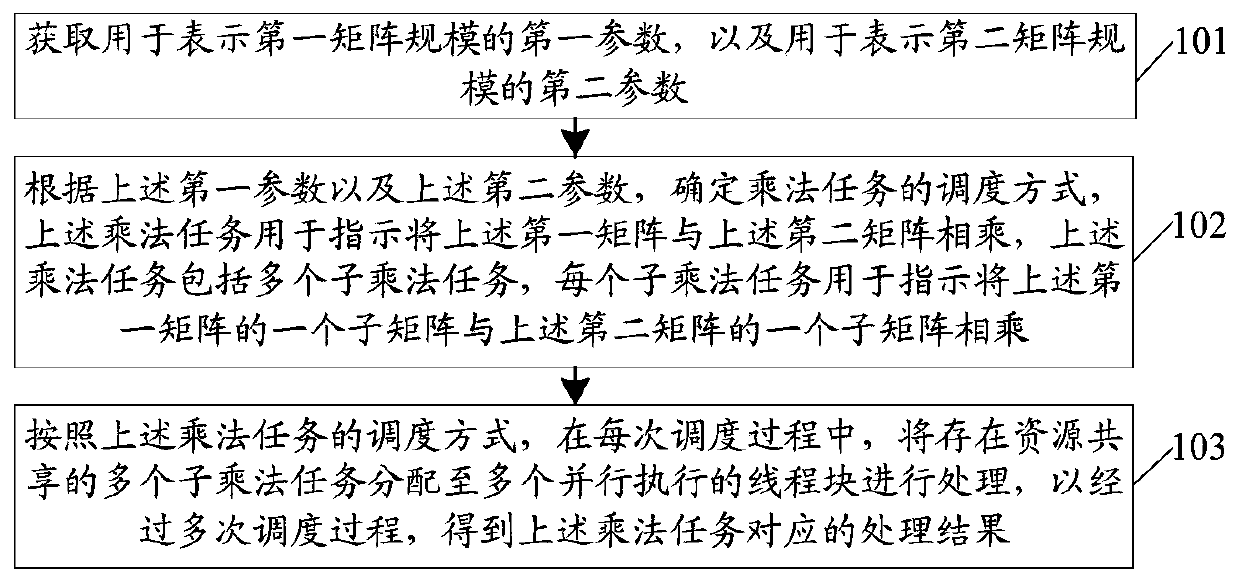

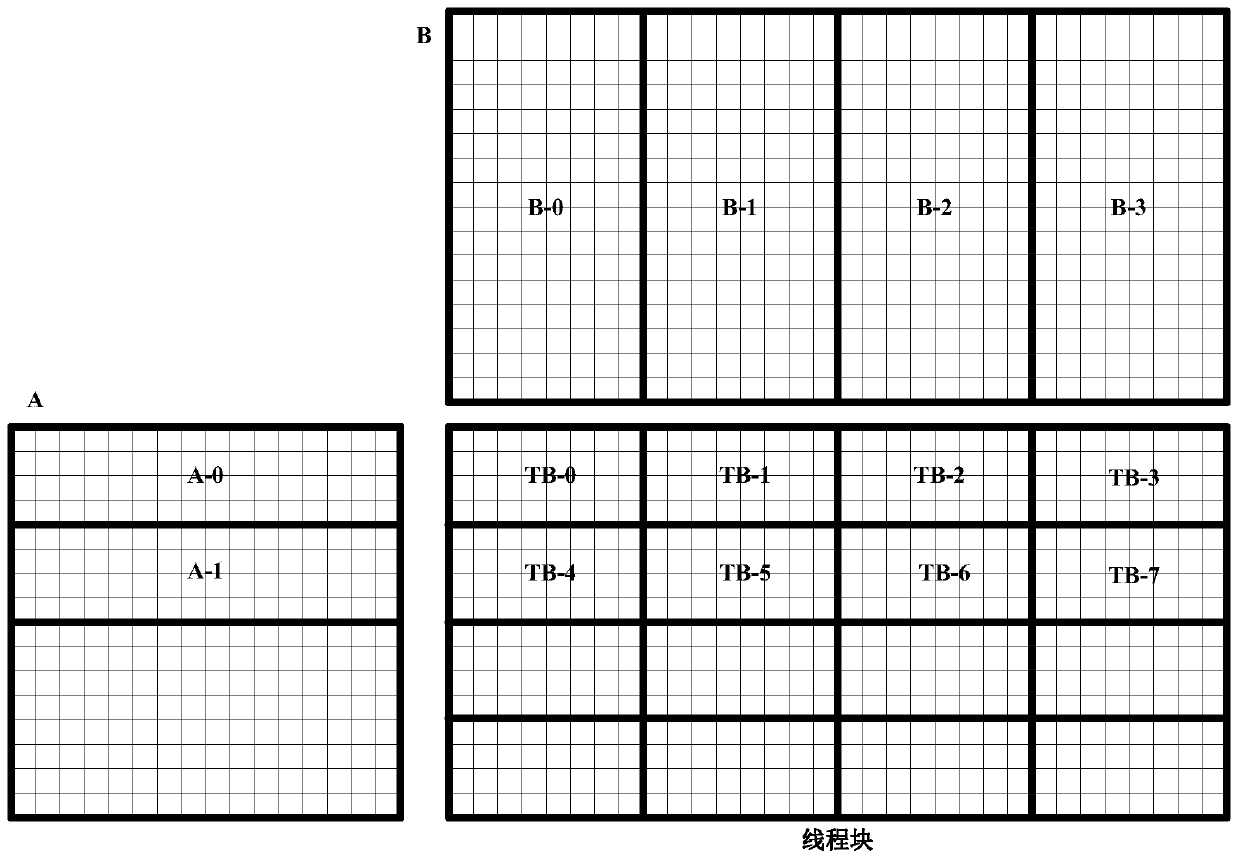

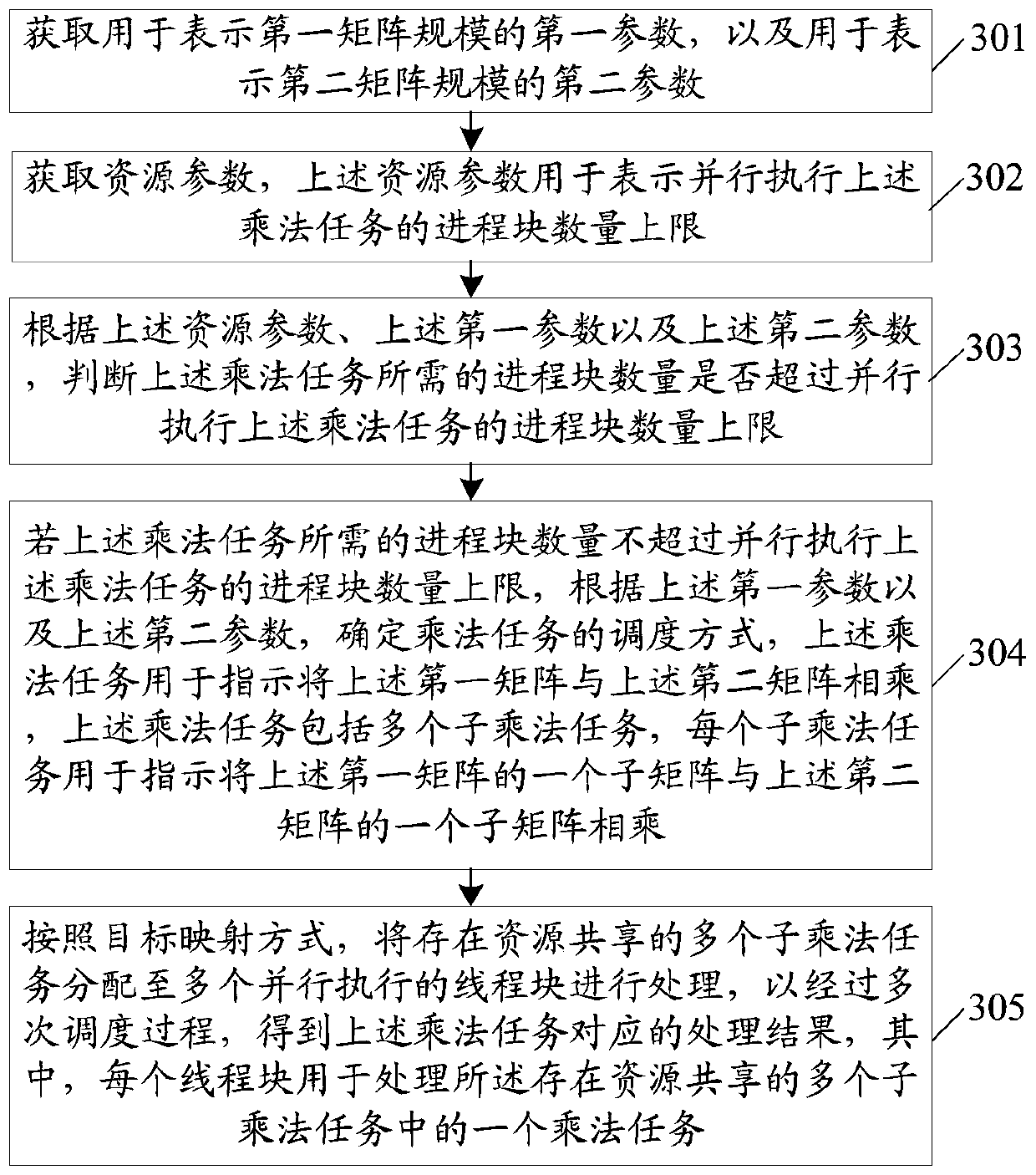

Data processing method and device, electronic equipment and storage medium

Pending | CN111158874A | Improve efficiency | Increase calculation rate | Program initiation/switching | Complex mathematical operations | Computer hardware | Parallel computing

The embodiment of the invention discloses a data processing method and device, electronic equipment and a storage medium. The method comprises the steps of: acquiring a first parameter used for representing the scale of a first matrix and a second parameter used for representing the scale of a second matrix; and determining a scheduling mode of a multiplication task according to the first parameter and the second parameter. The multiplication task is used for indicating multiplication of the first matrix and the second matrix, the multiplication task comprises a plurality of submultiplication tasks, and each submultiplication task is used for indicating multiplication of one submatrix of the first matrix and one submatrix of the second matrix.

Owner:SHENZHEN SENSETIME TECH CO LTD
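
The decomposition of one multiplication task into submatrix multiplications can be shown with a small pure-Python sketch. It covers only the decomposition; how the scheduling mode is chosen from the two scale parameters is not stated in the abstract and is not modelled here. The names `block_tasks`, `run_task`, `matmul_blocked` and the block size `bs` are hypothetical.

```python
def block_tasks(m, k, n, bs):
    """Yield the origin (i0, j0, p0) of every sub-multiplication task."""
    for i0 in range(0, m, bs):
        for j0 in range(0, n, bs):
            for p0 in range(0, k, bs):
                yield i0, j0, p0


def run_task(A, B, C, task, bs):
    """Multiply one submatrix of A by one submatrix of B, accumulating into C."""
    i0, j0, p0 = task
    for i in range(i0, min(i0 + bs, len(A))):
        for j in range(j0, min(j0 + bs, len(B[0]))):
            C[i][j] += sum(
                A[i][p] * B[p][j] for p in range(p0, min(p0 + bs, len(B)))
            )


def matmul_blocked(A, B, bs=2):
    """Compute A @ B as a sequence of submatrix multiplication tasks."""
    m, k, n = len(A), len(B), len(B[0])
    C = [[0] * n for _ in range(m)]
    # Assumption: tasks run one after another; a real scheduler could
    # dispatch them to different compute units instead.
    for task in block_tasks(m, k, n, bs):
        run_task(A, B, C, task, bs)
    return C
```

Tasks that share the same `(i0, j0)` accumulate into the same output block, so a parallel scheduler would have to serialize or reduce them.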

Request coalescing for instruction streams

Active | US20120272043A1 | Improve memory access efficiency | Digital computer details | Memory systems | Data class | Memory interface

Sequential fetch requests from a set of fetch requests are combined into longer coalesced requests that match the width of a system memory interface in order to improve memory access efficiency for reading the data specified by the fetch requests. The fetch requests may be of different classes and each data class is coalesced separately, even when intervening fetch requests are of a different class. Data read from memory is ordered according to the order of the set of fetch requests to produce an instruction stream that includes the fetch requests for the different classes.

Owner:NVIDIA CORP

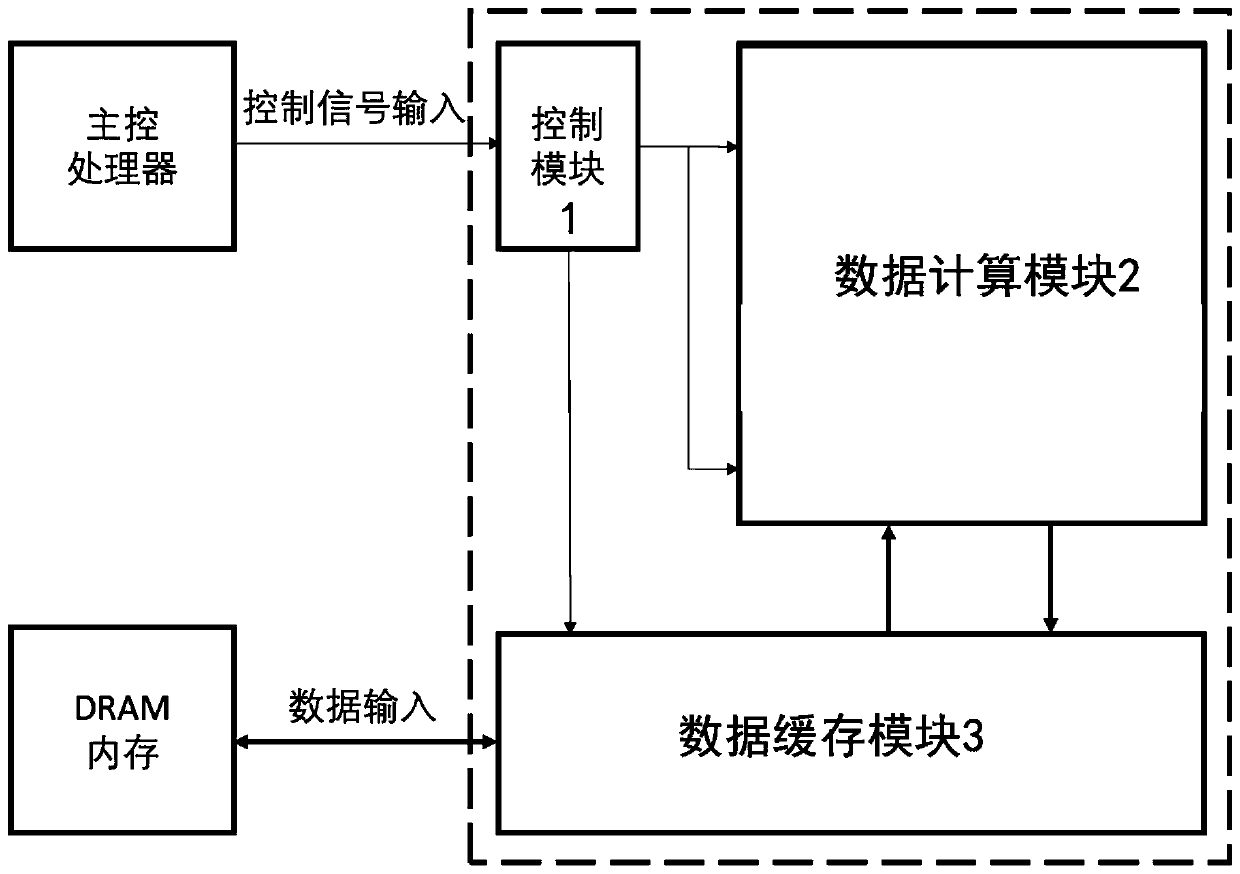

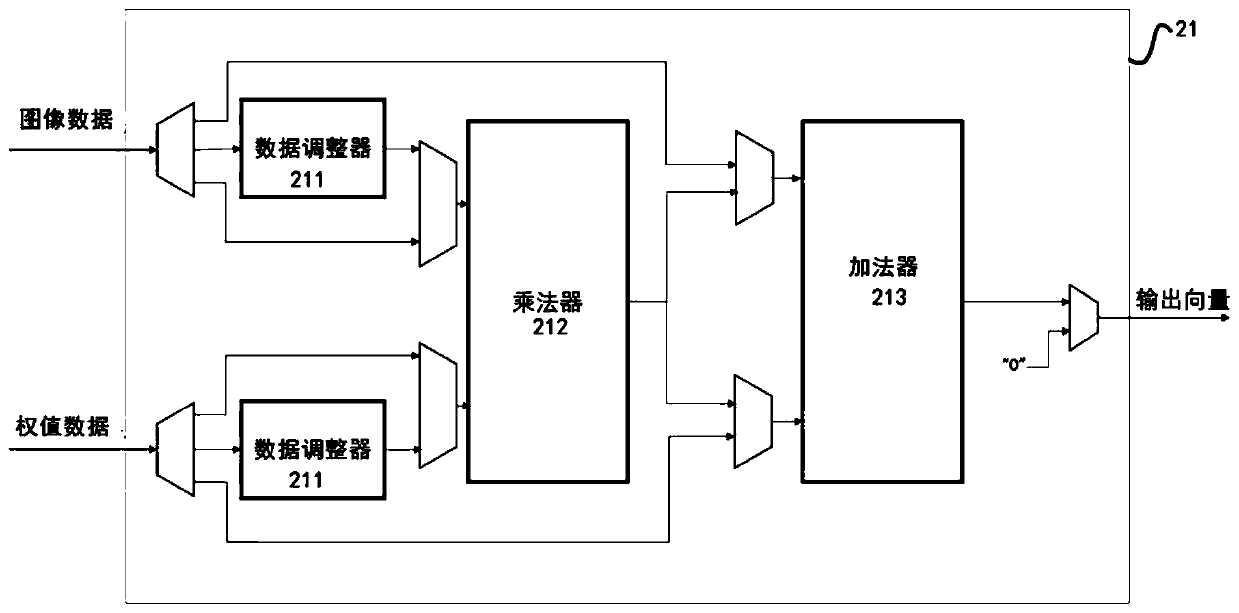

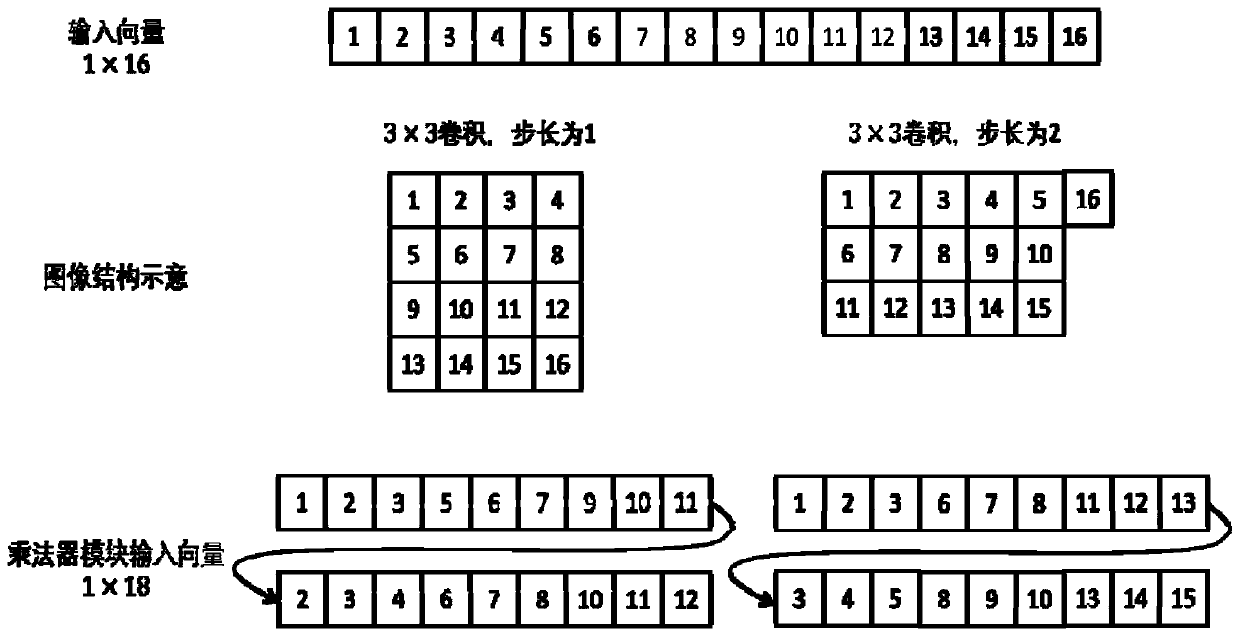

Deep learning accelerator suitable for stacked hourglass network

Active | CN109993293A | Achieve Computational Acceleration Performance | Calculation speed | Neural architectures | Physical realisation | Time delays | Data acquisition

The invention discloses a deep learning accelerator suitable for a stacked hourglass network. A parallel computing layer computing unit improves the computing parallelism, and a data caching module improves the utilization rate of data loaded into the accelerator while accelerating the computing speed; meanwhile, a data adjuster in the accelerator can adaptively change the data arrangement order according to different calculation layer operations, so that the integrity of acquired data can be improved, the data acquisition efficiency is improved, and the time delay of the memory access process is reduced. Therefore, according to the accelerator, the memory bandwidth is effectively reduced by reducing the number of memory accesses and improving the memory access efficiency while the algorithm calculation speed is increased, so that the overall calculation acceleration performance of the accelerator is realized.

Owner:SUN YAT SEN UNIV

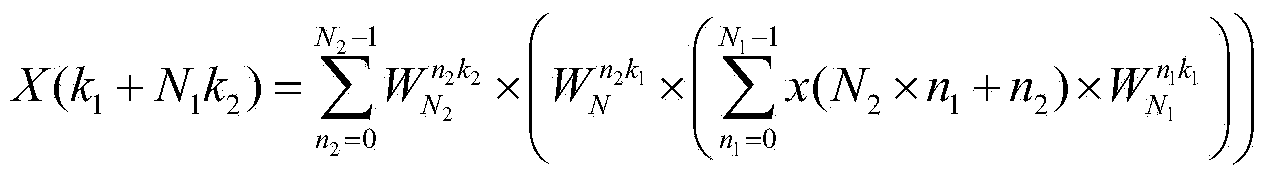

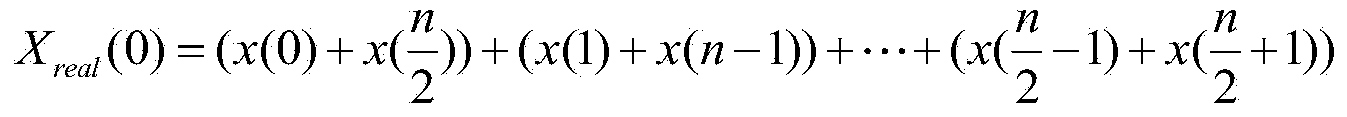

FFTW3 optimization method based on Loongson 3B processor

Active | CN103902506A | Improve performance | Reduce the number of memory accesses | Complex mathematical operations | Parallel computing | Discrete Fourier transform

The invention discloses an FFTW3 optimization method based on a Loongson 3B processor. The FFTW3 optimization method is characterized by comprising the steps of utilizing a vector instruction method and a Cooley-Tukey algorithm for optimization in complex number discrete Fourier transform with the calculation scale being a sum, and utilizing the vector instruction method and a method of processing the real part and imaginary part separately for optimization in real number discrete Fourier transform calculation. According to the FFTW3 optimization method based on the Loongson 3B processor, the running performance of FFTW3 on the Loongson 3B processor can be effectively improved, so that FFTW3 can run efficiently on the Loongson 3B processor.

Owner:INST OF ADVANCED TECH UNIV OF SCI & TECH OF CHINA
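
The Cooley-Tukey algorithm named in the abstract above splits a transform of composite length n = n1 × n2 into n1 smaller transforms of length n2 and recombines them with twiddle factors. The following is a minimal scalar sketch of that decomposition, not the patented vectorized implementation and not FFTW3's code; the function names `smallest_factor`, `naive_dft` and `cooley_tukey` are hypothetical and chosen only for illustration.

```python
import cmath


def smallest_factor(n):
    """Return the smallest factor of n greater than 1 (n itself if n is prime or < 2)."""
    f = 2
    while f * f <= n:
        if n % f == 0:
            return f
        f += 1
    return n


def naive_dft(x):
    """Direct O(n^2) DFT, used as the base case and as a reference."""
    n = len(x)
    return [
        sum(x[j] * cmath.exp(-2j * cmath.pi * j * k / n) for j in range(n))
        for k in range(n)
    ]


def cooley_tukey(x):
    """Mixed-radix decimation-in-time DFT for composite lengths (illustrative only)."""
    n = len(x)
    n1 = smallest_factor(n)
    if n1 == n:
        # Prime (or trivial) length: no factorization available, fall back.
        return naive_dft(x)
    n2 = n // n1
    # Split into n1 interleaved sub-sequences of length n2 and transform each.
    subs = [cooley_tukey(x[r::n1]) for r in range(n1)]
    out = []
    for k in range(n):
        acc = 0j
        for r in range(n1):
            twiddle = cmath.exp(-2j * cmath.pi * r * k / n)
            acc += twiddle * subs[r][k % n2]
        out.append(acc)
    return out
```

A production library would replace the recursion with precomputed plans and SIMD kernels; this sketch only shows where the composite factorization enters.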

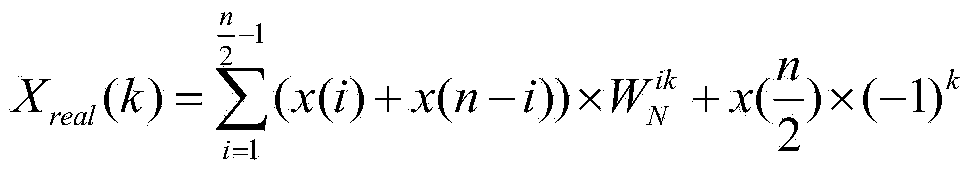

Buffer controller and management method thereof

ActiveUS7000073B2Improve memory access efficiencySimplify hardware designMemory adressing/allocation/relocationInput/output processes for data processingNetwork packetMemory buffer register

The invention provides a new linked structure for a buffer controller and a management method thereof, so that buffer memory allocation and release are handled more efficiently when the buffer controller processes data packets. In the linked structure, the link node of the first buffer register points to the last buffer register, and the link node of the last buffer register points to the second buffer register. The link node of each remaining buffer register points to the next buffer register in order, up to the last buffer register. This structure allows the buffer registers in a used linked list to be released to a free list efficiently.

Owner:VIA TECH INC
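
The link layout described in the abstract above (first buffer points to the last, last points to the second, the rest point to their successor) can be sketched with an index array. Because the head gives the tail in one step, a whole used chain can be spliced onto a free list in constant time. This is a rough illustration under stated assumptions: the `NIL`-terminated free list, the handling of one-buffer chains, and the names `build_chain`, `walk_chain`, `release_chain` and `walk_free` are all hypothetical and not taken from the patent.

```python
NIL = -1  # assumed end-of-list marker for the free list


def build_chain(link, buffers):
    """Link buffer indices so head -> tail, tail -> second, others -> next."""
    head, tail = buffers[0], buffers[-1]
    for cur, nxt in zip(buffers[1:], buffers[2:]):
        link[cur] = nxt
    link[head] = tail
    if len(buffers) > 1:
        link[tail] = buffers[1]
    return head


def walk_chain(link, head):
    """Yield the buffers of a used chain in their logical order."""
    yield head
    tail = link[head]
    if tail == head:
        return  # single-buffer chain (assumed to point at itself)
    node = link[tail]  # second buffer
    while node != tail:
        yield node
        node = link[node]
    yield tail


def release_chain(link, head, free_head):
    """Splice a whole used chain onto the front of the free list in O(1)."""
    tail = link[head]
    if tail != head:
        link[head] = link[tail]  # head now points to the second buffer
    link[tail] = free_head       # tail now points to the old free list
    return head                  # new head of the free list


def walk_free(link, free_head):
    """Yield the buffers of the NIL-terminated free list."""
    node = free_head
    while node != NIL:
        yield node
        node = link[node]
```

Only two link writes are needed to release a chain of any length, which is the property the abstract attributes to the structure.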

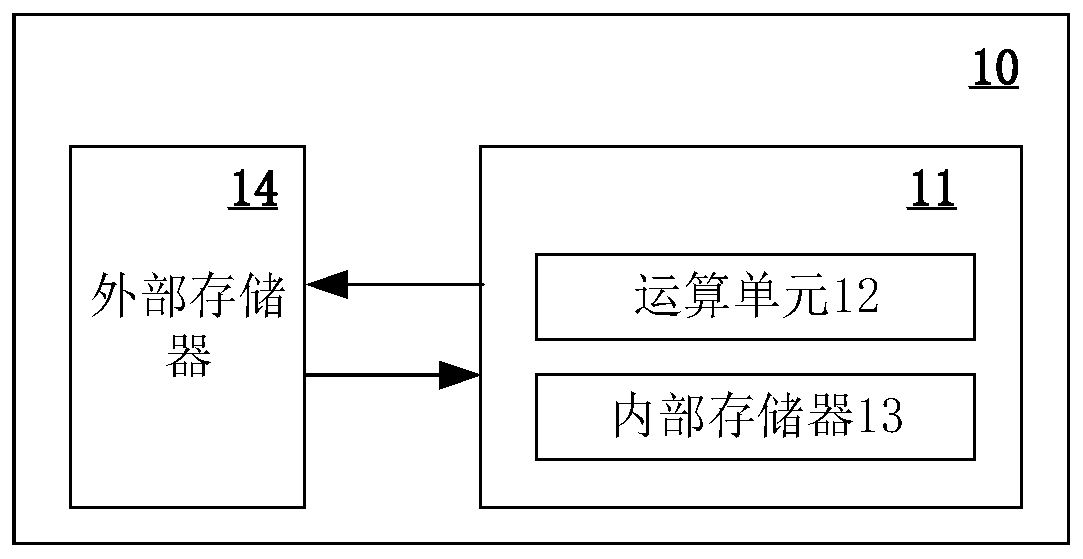

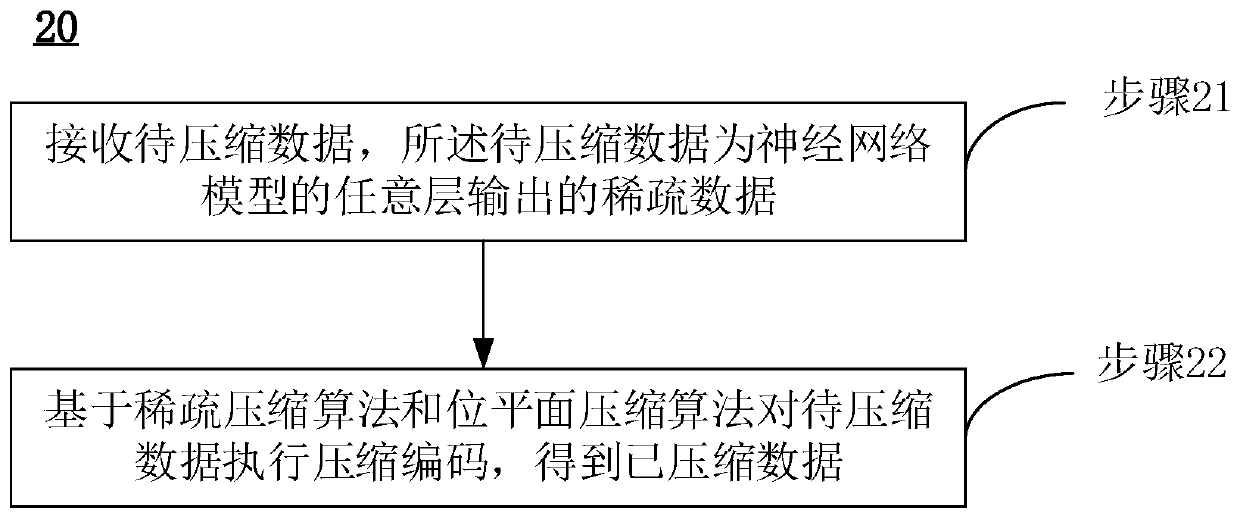

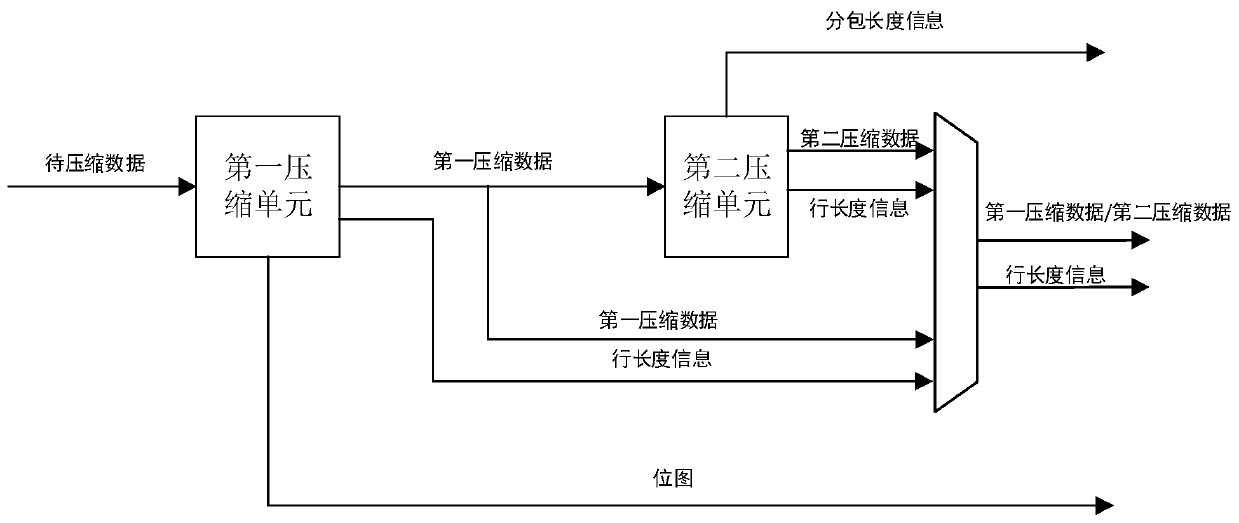

Data compression method and device, data decompression method and device and processing method and device based on data compression and decompression

ActiveCN110943744AImprove computing powerIncrease the compression ratioCode conversionPhysical realisationExternal storageNetwork model

The invention provides a data compression method and device, a data decompression method and device, and a processing method and device based on data compression and decompression. The data compression method comprises: receiving to-be-compressed data, which is sparse data output by any layer of a neural network model; and compressing the to-be-compressed data based on a sparse compression algorithm and a bit plane compression algorithm to obtain compressed data. The method achieves a relatively high compression ratio, which saves data transmission bandwidth and external memory storage space, improves memory access efficiency, and improves chip computing power.

Owner:CANAAN BRIGHT SIGHT CO LTD
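
To make the two stages named in the abstract above concrete, here is a simplified stand-in: a sparse step that keeps a nonzero bitmap plus the nonzero values, followed by a bit-plane step that stores only the bit planes containing at least one set bit. The patent's actual sparse and bit plane algorithms are not described in the abstract, so the encoding, the 8-bit unsigned value assumption, and the names `compress` and `decompress` are hypothetical.

```python
def compress(values, bits=8):
    """Sparse bitmap + bit-plane packing for non-negative ints below 2**bits."""
    mask = [1 if v else 0 for v in values]   # sparse step: where the nonzeros are
    nonzero = [v for v in values if v]       # sparse step: the nonzero payload
    planes = {}
    for b in range(bits):
        plane = [(v >> b) & 1 for v in nonzero]
        if any(plane):
            planes[b] = plane                # all-zero planes are simply omitted
    return {"mask": mask, "count": len(nonzero), "planes": planes}


def decompress(packed):
    """Invert compress(): rebuild nonzero values, then scatter them by the mask."""
    nonzero = [0] * packed["count"]
    for b, plane in packed["planes"].items():
        for i, bit in enumerate(plane):
            nonzero[i] |= bit << b
    it = iter(nonzero)
    return [next(it) if m else 0 for m in packed["mask"]]
```

Activations with small magnitudes leave the high bit planes empty, which is where a scheme of this kind would save space.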

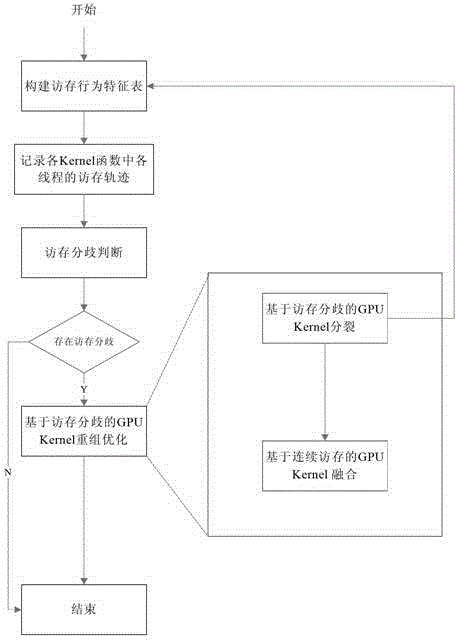

Memory access bifurcation-based GPU (Graphics Processing Unit) kernel program recombination optimization method

InactiveCN103150157ARelieve memory access pressureImprove memory access efficiencyResource allocationSpecific program execution arrangementsDirect memory accessMulti gpu

The invention discloses a memory access bifurcation-based GPU (Graphics Processing Unit) kernel program recombination optimization method, which aims to improve the execution efficiency and application performance of large-scale GPU kernels. In the technical scheme, a memory access behavior feature list is constructed using a Create method, and the memory access trace of each thread in each kernel function is recorded using a Record method. Next, the memory access addresses of the GPU threads in each kernel function are used to judge whether memory access bifurcation occurs among the threads of the same kernel function. Kernel recombination optimization is then performed in two steps: GPU kernel splitting based on memory access bifurcation, and GPU kernel fusion based on continuous memory access. The method addresses the low execution efficiency of large-scale GPU kernel applications and improves their execution efficiency and application performance.

Owner:NAT UNIV OF DEFENSE TECH
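
One way to picture the judgment step in the abstract above is to take the recorded per-thread addresses, group them into warps, and count how many memory segments each warp touches. The sketch below does this offline in Python. The criterion, the warp size of 32, the 128-byte segment, the 4-byte word, and the function names are all assumptions for illustration; the abstract does not state the patent's actual test.

```python
def segments_touched(addresses, segment_bytes=128):
    """Number of distinct aligned memory segments covered by the addresses."""
    return len({addr // segment_bytes for addr in addresses})


def divergent_warps(trace, warp_size=32, segment_bytes=128, word_bytes=4):
    """Flag each warp whose accesses span more segments than a contiguous access would.

    trace holds one byte address per thread, in thread-index order (assumed layout).
    """
    # Segments needed if the warp read warp_size consecutive, aligned words.
    ideal = -(-(warp_size * word_bytes) // segment_bytes)  # ceiling division
    flags = []
    for start in range(0, len(trace), warp_size):
        warp = trace[start:start + warp_size]
        flags.append(segments_touched(warp, segment_bytes) > ideal)
    return flags
```

Under these assumptions, kernels whose warps are flagged would be candidates for the splitting step, and kernels with contiguous accesses for the fusion step.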

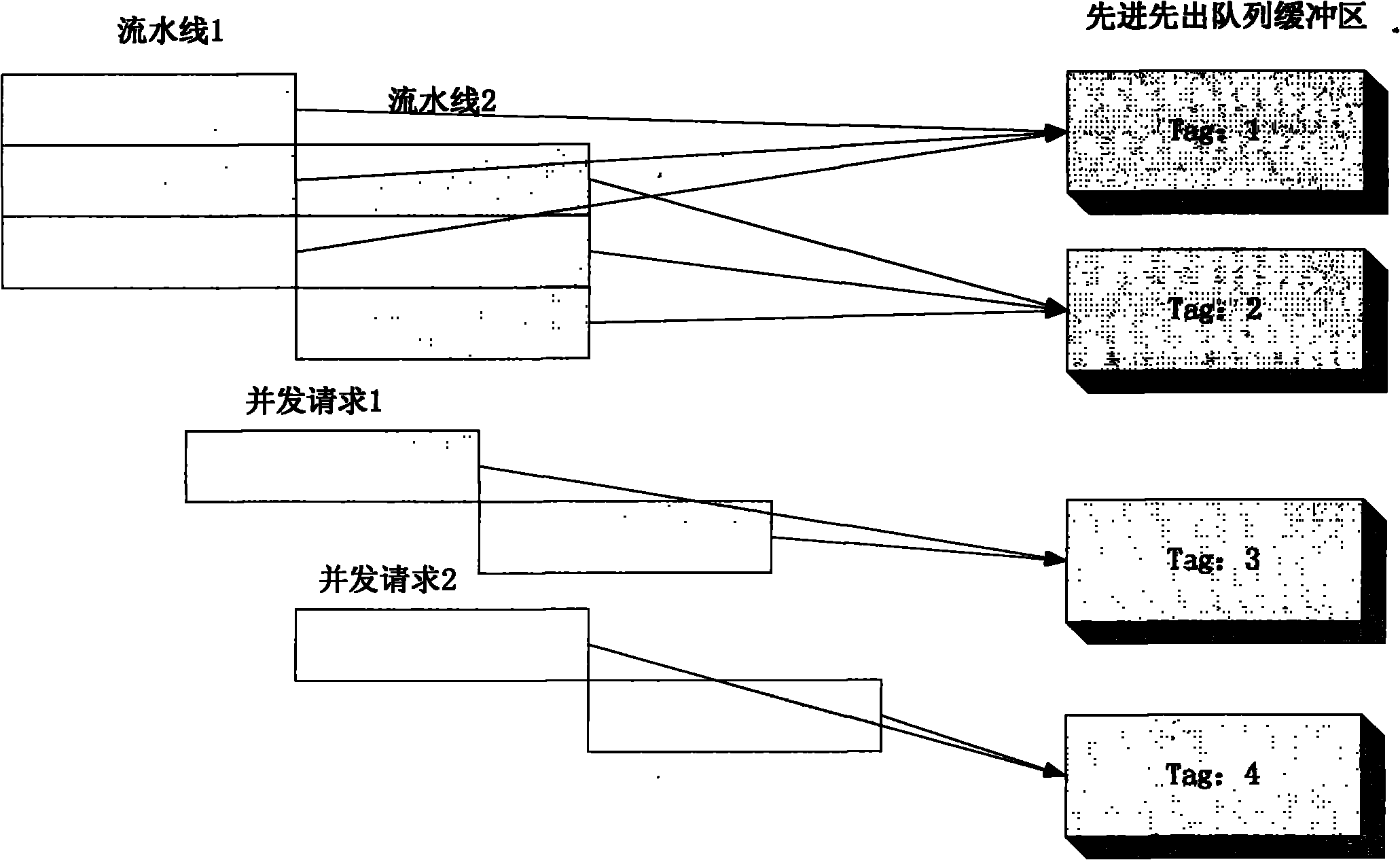

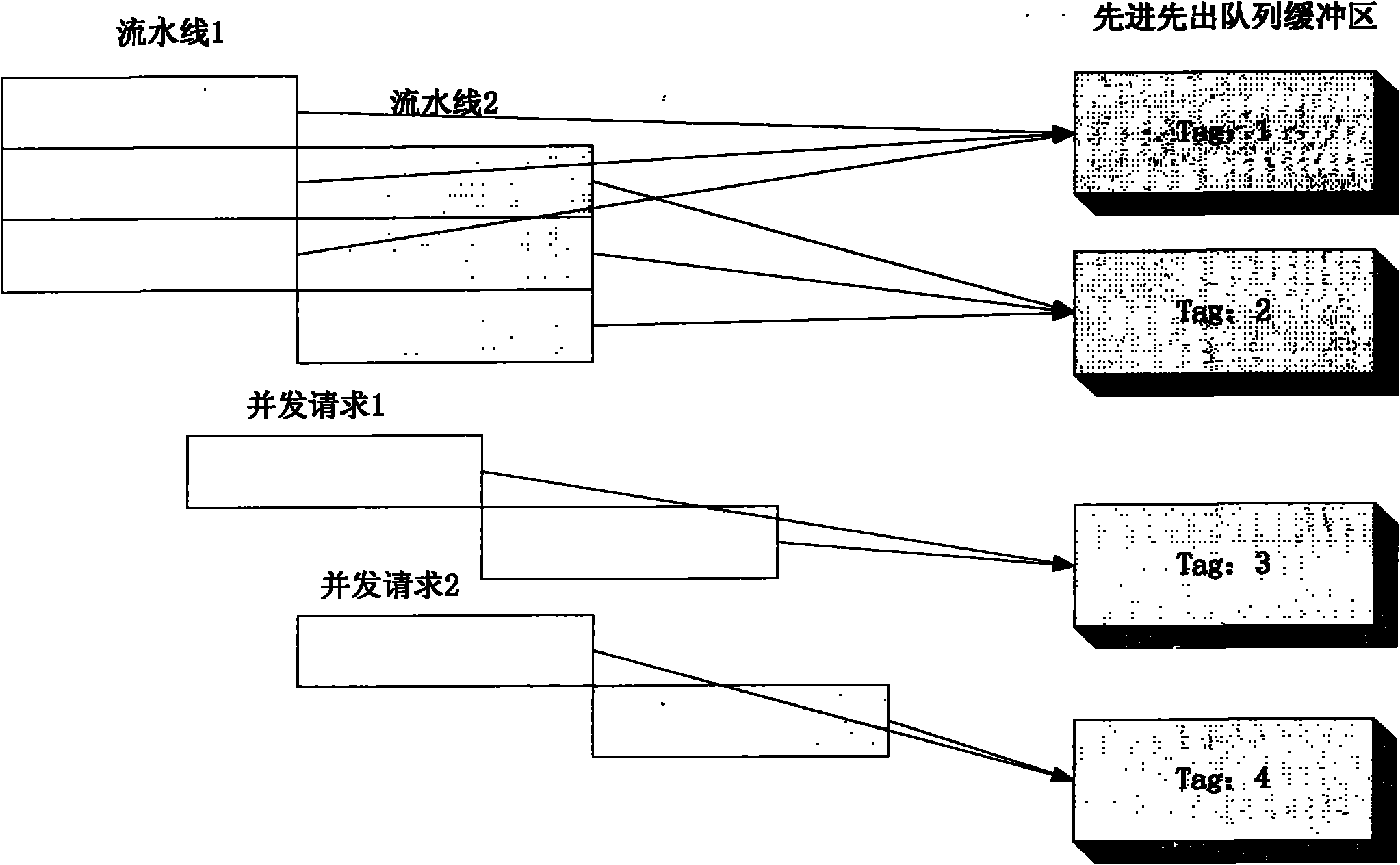

Method for improving memory efficiency by using stream processing

ActiveCN102103490AImprove memory access efficiencyConcurrent instruction executionProduction lineParallel processing

The invention provides a method for improving memory efficiency by using stream processing. A parallel processing unit sends requests simultaneously, and a tag number is added to each request address; the data returned by the memory is sorted into the corresponding buffer area according to the tag it carries; and the data is fetched from the corresponding buffer area when it is needed for data processing. By accessing memory in parallel in a pipelined manner, the method effectively improves memory access efficiency.

Owner:中科腾龙信息技术有限公司
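
The tagging scheme in the abstract above can be illustrated with a small software model: every request carries a tag, responses may come back in any order, and each response is filed into the buffer slot named by its tag so the consumer can read results in request order. This is a minimal sketch, assuming a toy memory that shuffles its responses; the class `TaggedMemoryPort` and the function `gather` are hypothetical names, not part of the patent.

```python
import random


class TaggedMemoryPort:
    """Toy memory port that returns responses out of order (assumption for illustration)."""

    def __init__(self, memory, seed=0):
        self.memory = memory
        self.in_flight = []
        self.rng = random.Random(seed)

    def request(self, tag, address):
        """Issue a read; the tag travels with the request."""
        self.in_flight.append((tag, address))

    def drain(self):
        """Return all outstanding responses as (tag, data), in shuffled order."""
        self.rng.shuffle(self.in_flight)
        responses = [(tag, self.memory[addr]) for tag, addr in self.in_flight]
        self.in_flight = []
        return responses


def gather(port, addresses):
    """Issue all reads at once, then reassemble results by tag."""
    for tag, addr in enumerate(addresses):
        port.request(tag, addr)
    buffers = {}
    for tag, data in port.drain():
        buffers[tag] = data  # file each response under the tag it carries
    return [buffers[tag] for tag in range(len(addresses))]
```

Because ordering is recovered from the tags, the requester never has to wait for one response before issuing the next request.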

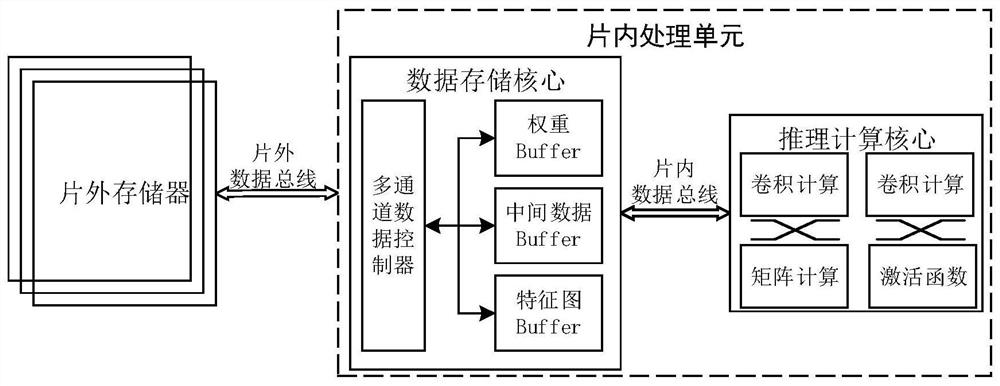

Hardware acceleration system for LSTM (Long Short Term Memory) network model

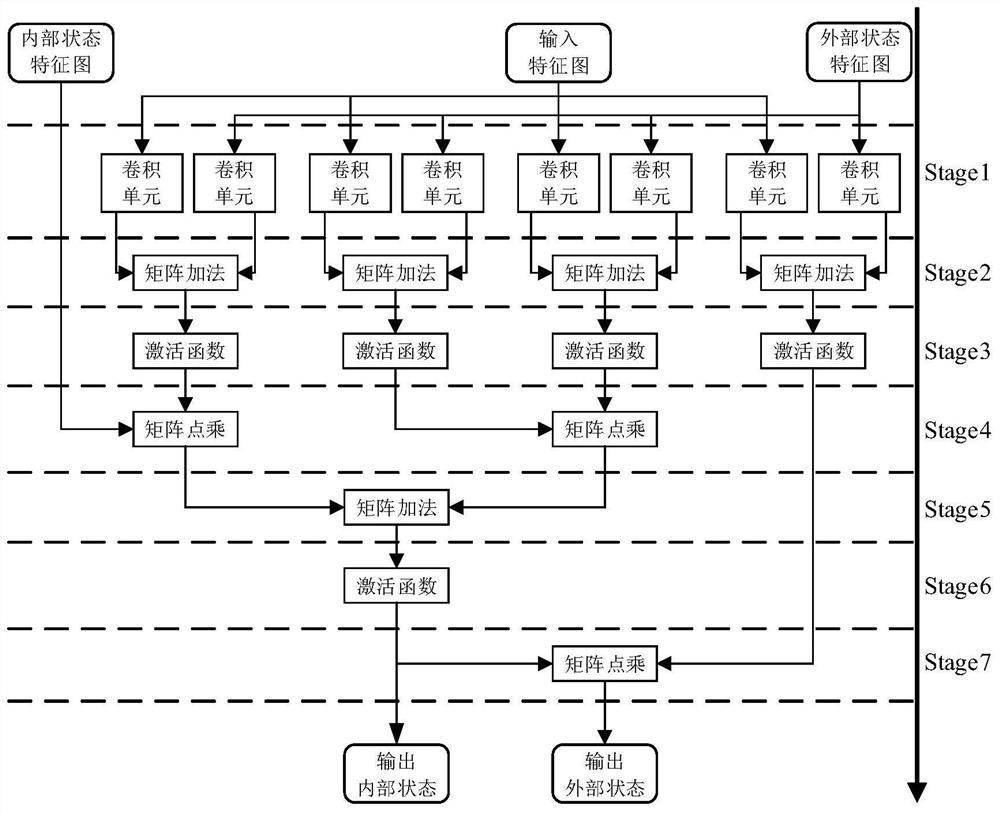

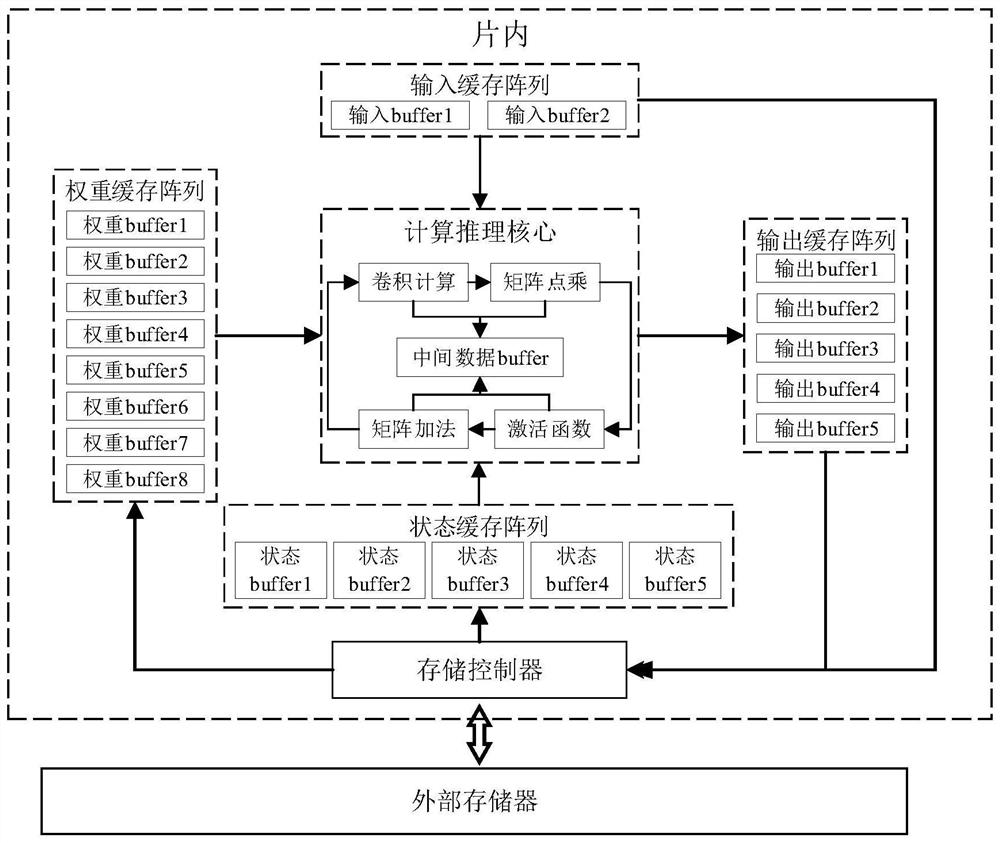

ActiveCN113191488AImprove parallelismReduce processing latencyNeural architecturesPhysical realisationActivation functionTerm memory

The invention discloses a hardware acceleration system for a deep learning long short-term memory (LSTM) network model, and belongs to the technical field of deep learning hardware acceleration. The hardware acceleration system comprises a network inference computing core and a network data storage core. The network inference computing core serves as the calculation accelerator of the LSTM network model: calculation units are deployed according to the network model, and operations such as convolution, matrix dot multiplication, matrix addition and the activation function are accelerated. The network data storage core serves as the data cache and interaction controller of the LSTM network model: it deploys an on-chip cache unit according to the network model and implements the data interaction link between the computing core and off-chip memory. The invention improves the calculation parallelism of the LSTM network model, reduces processing delay, shortens memory access time, and improves memory access efficiency.

Owner:HUAZHONG UNIV OF SCI & TECH
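
For orientation, the matrix multiplications, additions and activation functions that the abstract above assigns to the computing core are the operations of a standard LSTM cell step. The sketch below shows that textbook computation in plain Python. It is not the patent's hardware mapping and omits the convolution operation the abstract also mentions; the parameter layout (dictionaries keyed by gate name) and the function names are assumptions made for this example.

```python
import math


def sigmoid(z):
    """Logistic activation used by the input, forget and output gates."""
    return 1.0 / (1.0 + math.exp(-z))


def matvec(m, v):
    """Matrix-vector product on nested lists."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def lstm_step(x, h, c, W, U, b):
    """One standard LSTM cell step.

    W, U, b are dicts keyed by gate name: 'i' (input), 'f' (forget),
    'o' (output), 'g' (candidate). This layout is an illustrative assumption.
    """
    pre = {}
    for gate in "ifog":
        wx = matvec(W[gate], x)   # input contribution
        uh = matvec(U[gate], h)   # recurrent contribution
        pre[gate] = [p + q + r for p, q, r in zip(wx, uh, b[gate])]
    i = [sigmoid(z) for z in pre["i"]]
    f = [sigmoid(z) for z in pre["f"]]
    o = [sigmoid(z) for z in pre["o"]]
    g = [math.tanh(z) for z in pre["g"]]
    # New cell state: keep part of the old state, add part of the candidate.
    c_next = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c, i, g)]
    # New hidden state: gated, squashed cell state.
    h_next = [ok * math.tanh(ck) for ok, ck in zip(o, c_next)]
    return h_next, c_next
```

The four gate pre-activations are independent of one another, which is the kind of parallelism an accelerator can exploit.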


© 2025 PatSnap. All rights reserved.