47 results for patented technology related to "Shared memory architecture"

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

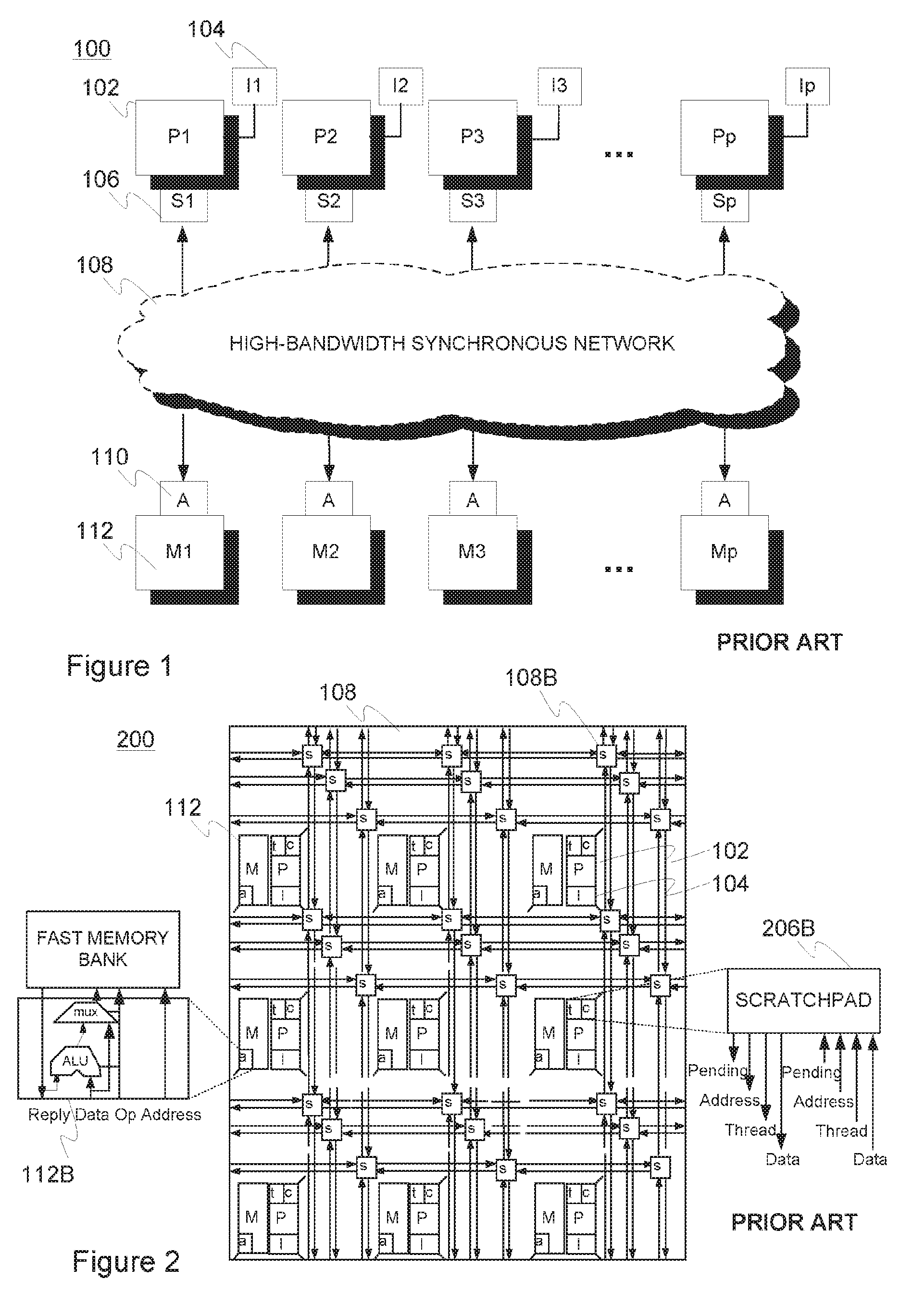

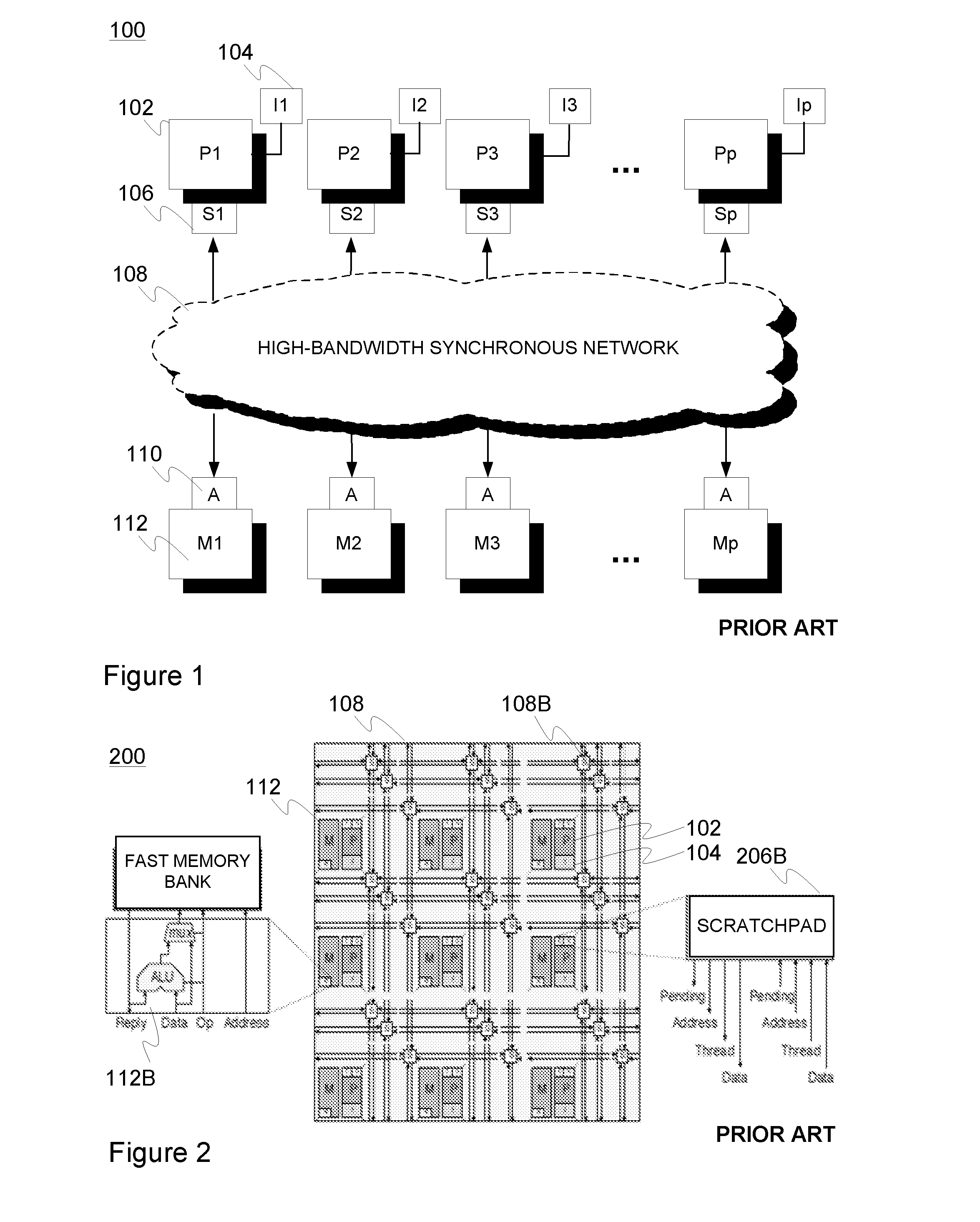

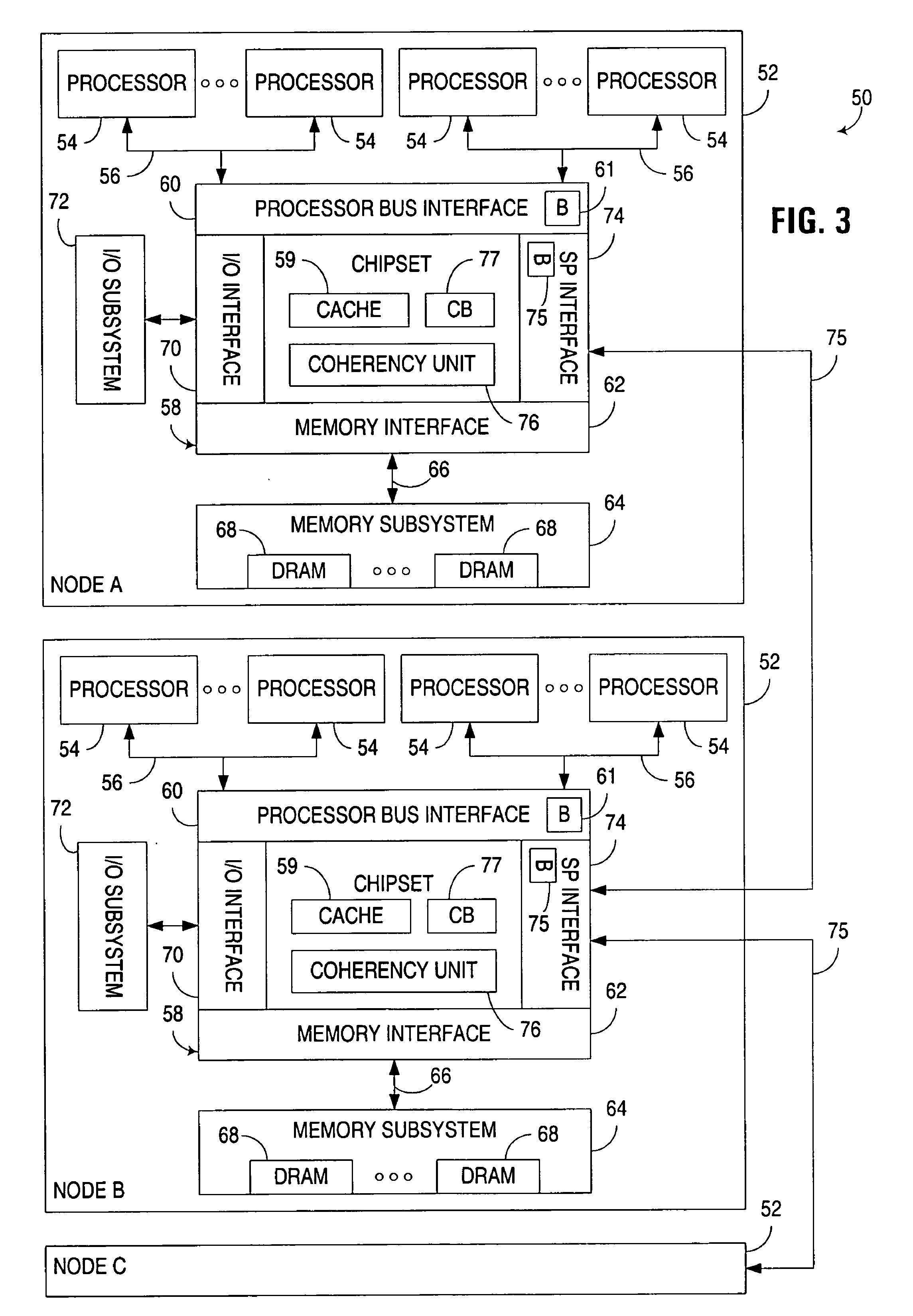

In computer architecture, a shared memory architecture (SMA) is a multiprocessing design in which several processors access a globally shared memory. Shared memory architectures may use:

- Uniform Memory Access (UMA): all processors share the physical memory uniformly.
- Non-Uniform Memory Access (NUMA): memory access time depends on the memory location relative to a processor.
- Cache-Only Memory Architecture (COMA): the local memories of the processors at each node are used as cache.

In an SMA system, processors communicate by reading and writing memory locations. The two key problems in scaling an SMA system are:

- Contention: performance degrades when several processors try to access the same memory location.
- Coherence: cached copies can go out of sync with the original values as modifications take place.
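
As a minimal sketch of the communication model described above, the snippet below uses threads as stand-ins for processors and a plain list as the globally shared memory. The lock shows one way to serialize contended accesses to the same location. The names `shared_memory`, `COUNTER_ADDR`, `processor`, and `run` are hypothetical, and caches (and therefore coherence) are not modeled.

```python
import threading

# Toy "globally shared memory": a list of words that every thread
# (standing in for a processor) reads and writes directly.
shared_memory = [0] * 4
memory_lock = threading.Lock()  # serializes conflicting accesses (contention)

COUNTER_ADDR = 0  # hypothetical address used as a shared counter


def processor(n_increments: int) -> None:
    """Each 'processor' communicates only by updating a shared location."""
    for _ in range(n_increments):
        # Without the lock, this read-modify-write could interleave with
        # another thread's and lose updates.
        with memory_lock:
            value = shared_memory[COUNTER_ADDR]
            shared_memory[COUNTER_ADDR] = value + 1


def run(n_processors: int = 4, n_increments: int = 10_000) -> int:
    """Start the 'processors', wait for them, and return the shared counter."""
    shared_memory[COUNTER_ADDR] = 0
    workers = [threading.Thread(target=processor, args=(n_increments,))
               for _ in range(n_processors)]
    for w in workers:
        w.start()
    for w in workers:
        w.join()
    return shared_memory[COUNTER_ADDR]


if __name__ == "__main__":
    print(run())  # 40000
```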

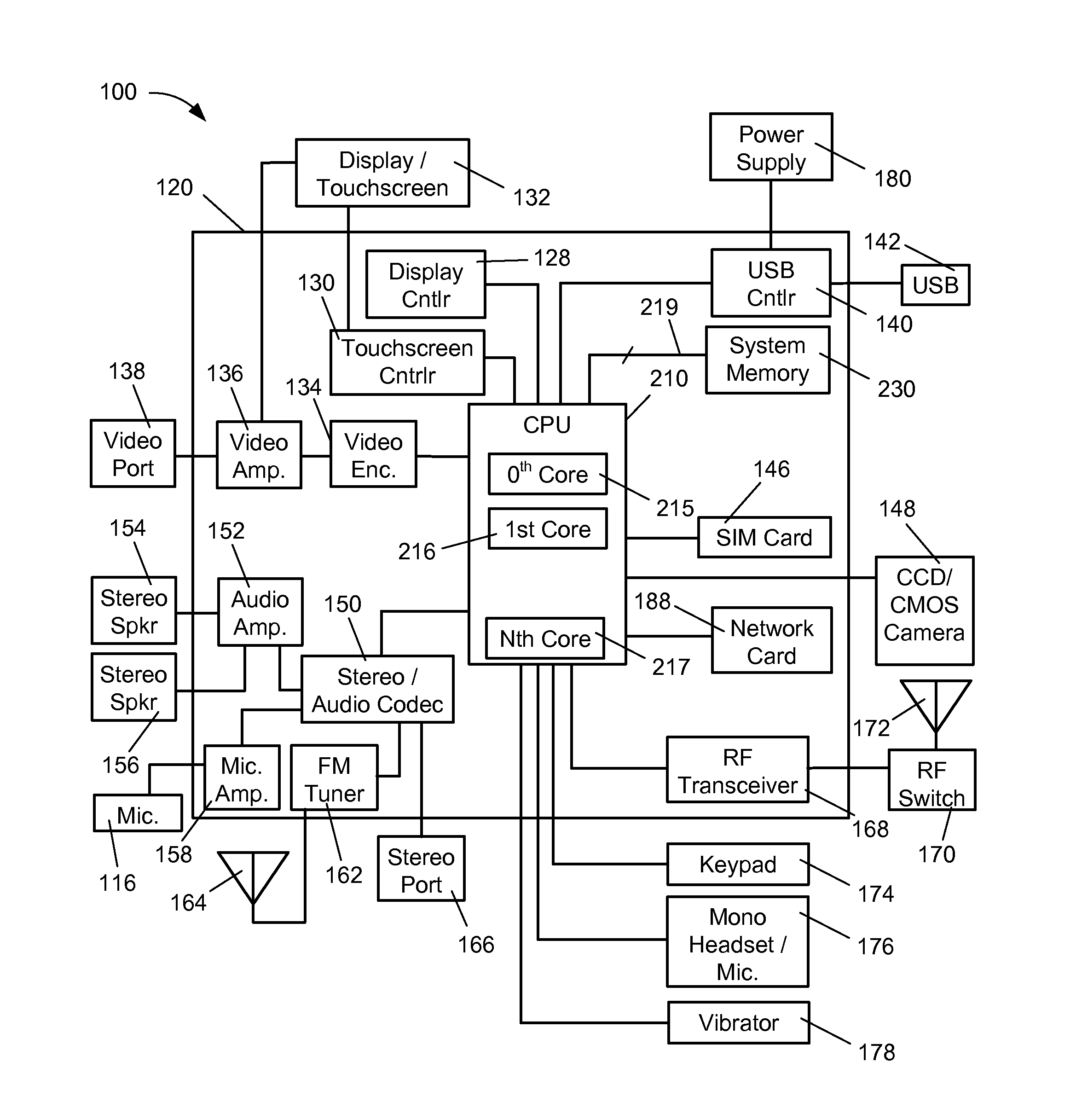

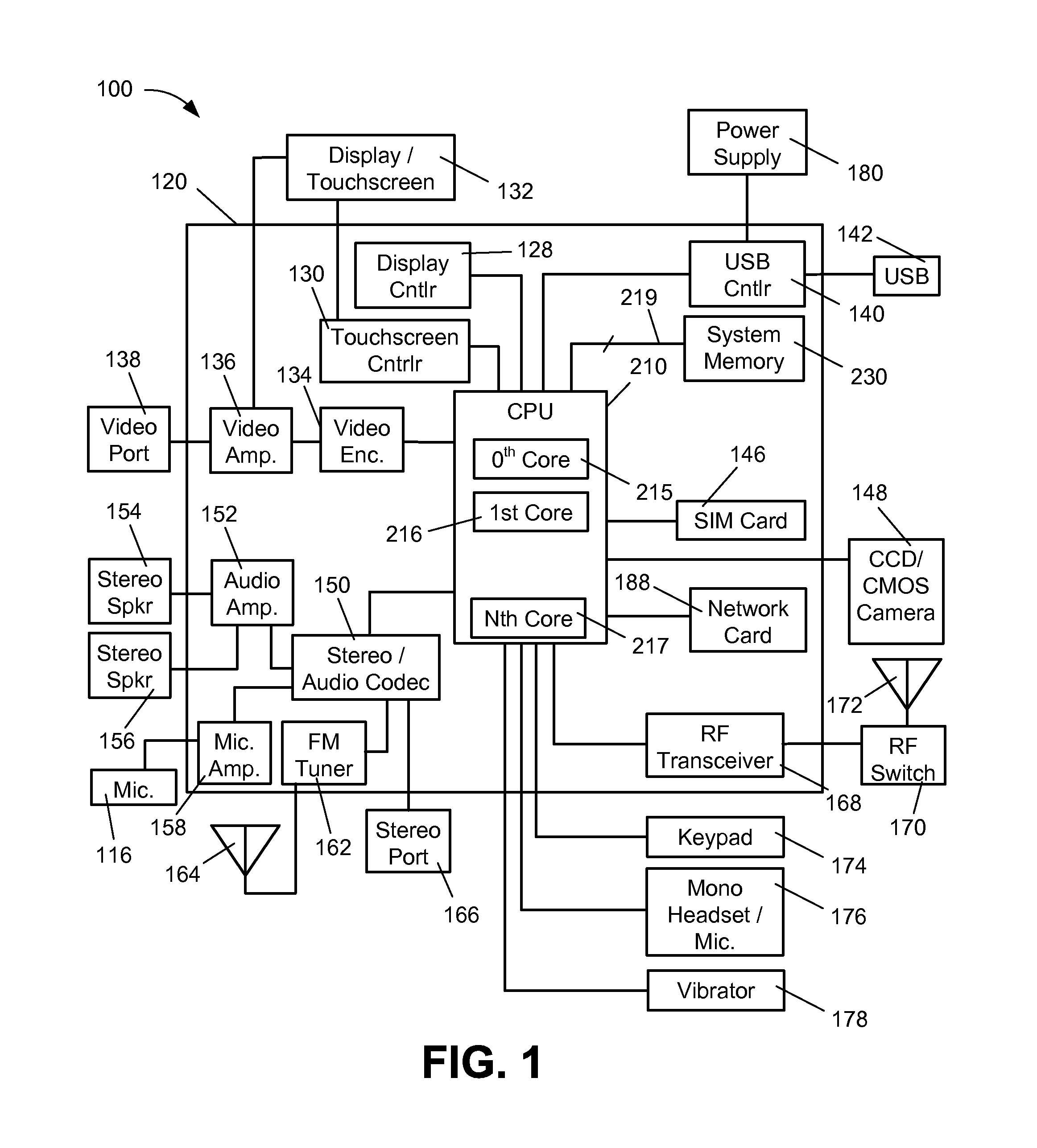

System and method for uniform interleaving of data across a multiple-channel memory architecture with asymmetric storage capacity

Status: Active | Publication: US20150100746A1 | Tags: Memory addressing/allocation/relocation, Shared memory architecture, Cache-only memory architecture

Systems and methods for uniformly interleaving memory accesses across physical channels of a memory space with a non-uniform storage capacity across the physical channels are disclosed. An interleaver is arranged in communication with one or more processors and a system memory. The interleaver identifies locations in a memory space supported by the memory channels and is responsive to logic that defines virtual sectors having a desired storage capacity. The interleaver accesses the asymmetric storage capacity uniformly across the virtual sectors in response to requests to access the memory space.

Owner:QUALCOMM INC
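
The abstract does not spell out how the virtual sectors are formed. One plausible scheme, sketched here under that assumption, cuts the address space at each distinct channel capacity so that every sector interleaves round-robin across all channels that still have capacity at that depth. Capacities are counted in interleave granules; `Sector`, `build_sectors`, and `translate` are hypothetical names, not the patented design.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sector:
    base: int            # first global granule index covered by this sector
    size: int            # number of granules in the sector
    channels: tuple      # channels this sector interleaves across
    channel_offset: int  # granule offset inside each channel where the sector starts


def build_sectors(capacities: list[int]) -> list[Sector]:
    """Split per-channel capacities (in granules) into virtual sectors.

    Within each sector, accesses rotate uniformly over every channel that
    still has capacity at that depth, so asymmetric channels are all used.
    """
    sectors = []
    base = 0
    prev_level = 0
    for level in sorted(set(capacities)):
        members = tuple(ch for ch, cap in enumerate(capacities) if cap >= level)
        size = (level - prev_level) * len(members)
        sectors.append(Sector(base, size, members, prev_level))
        base += size
        prev_level = level
    return sectors


def translate(addr: int, sectors: list[Sector]) -> tuple[int, int]:
    """Map a global granule index to (channel, granule offset in that channel)."""
    for s in sectors:
        if s.base <= addr < s.base + s.size:
            rel = addr - s.base
            channel = s.channels[rel % len(s.channels)]
            offset = s.channel_offset + rel // len(s.channels)
            return channel, offset
    raise ValueError(f"address {addr} is outside the memory space")
```

For capacities `[4, 4, 2]`, granules 0 to 5 rotate over all three channels and granules 6 to 9 rotate over channels 0 and 1 only, so every granule of every channel is used without leaving a hole.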

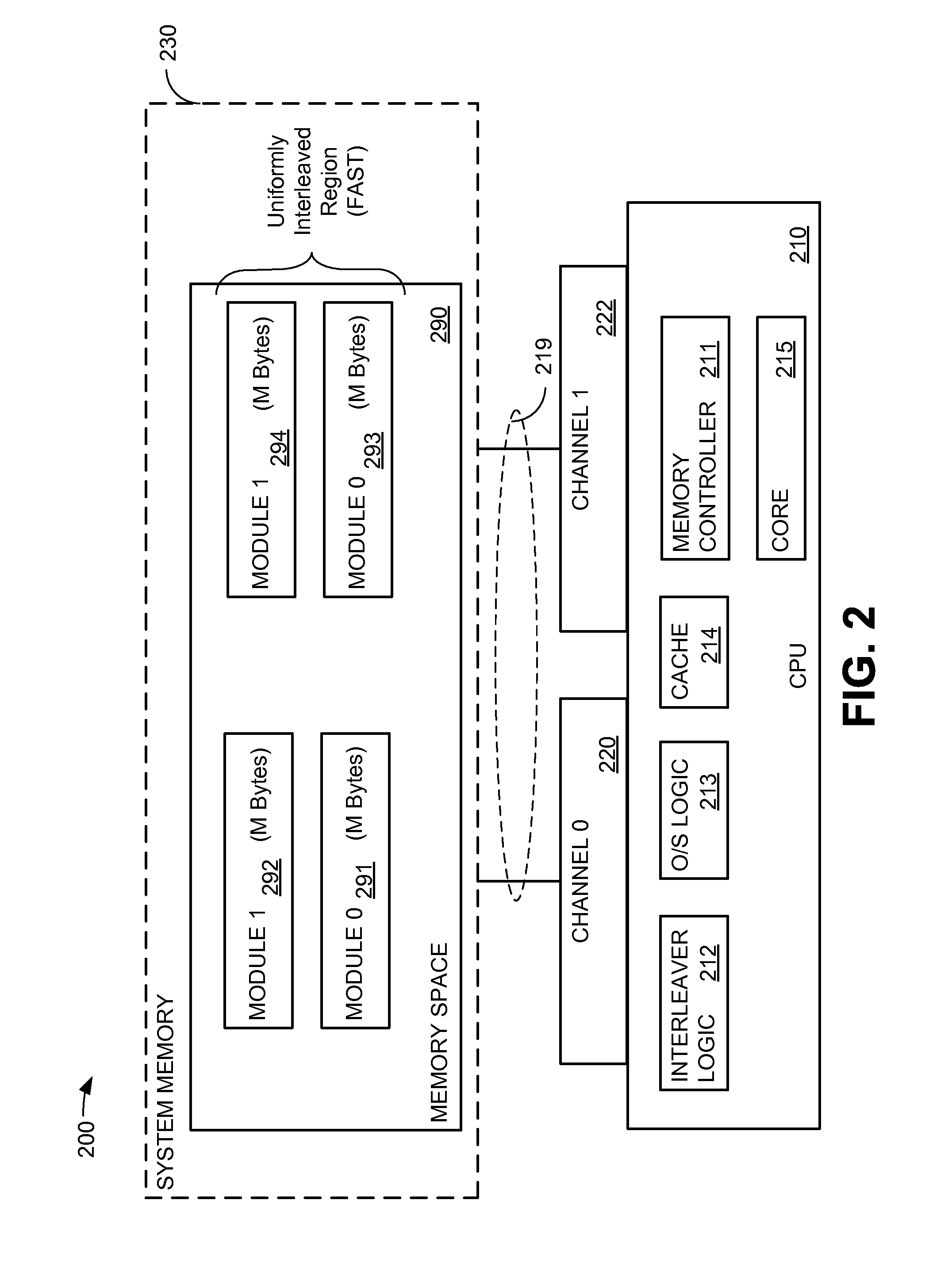

Method and apparatus for adjusting overflow buffers and flow control watermark levels

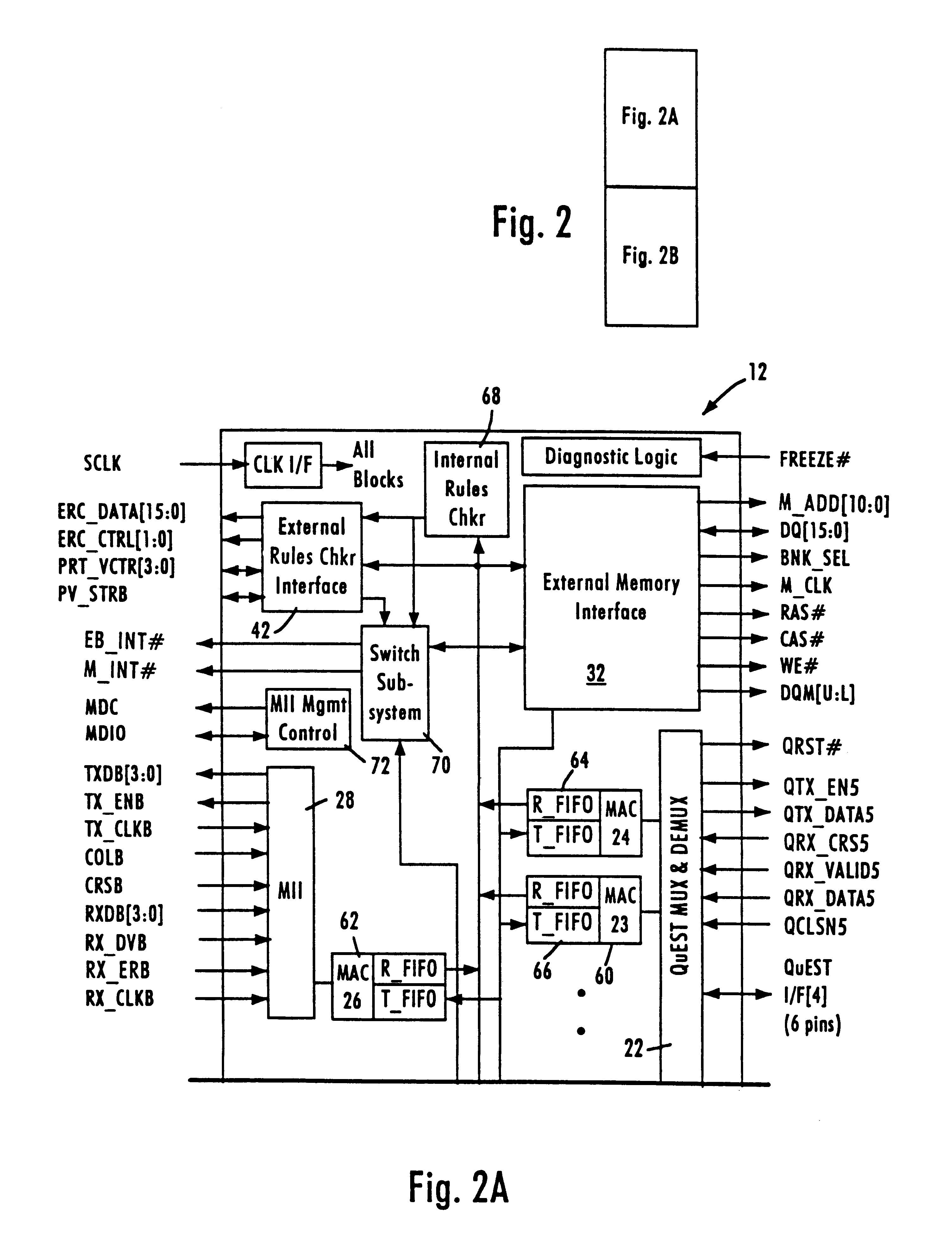

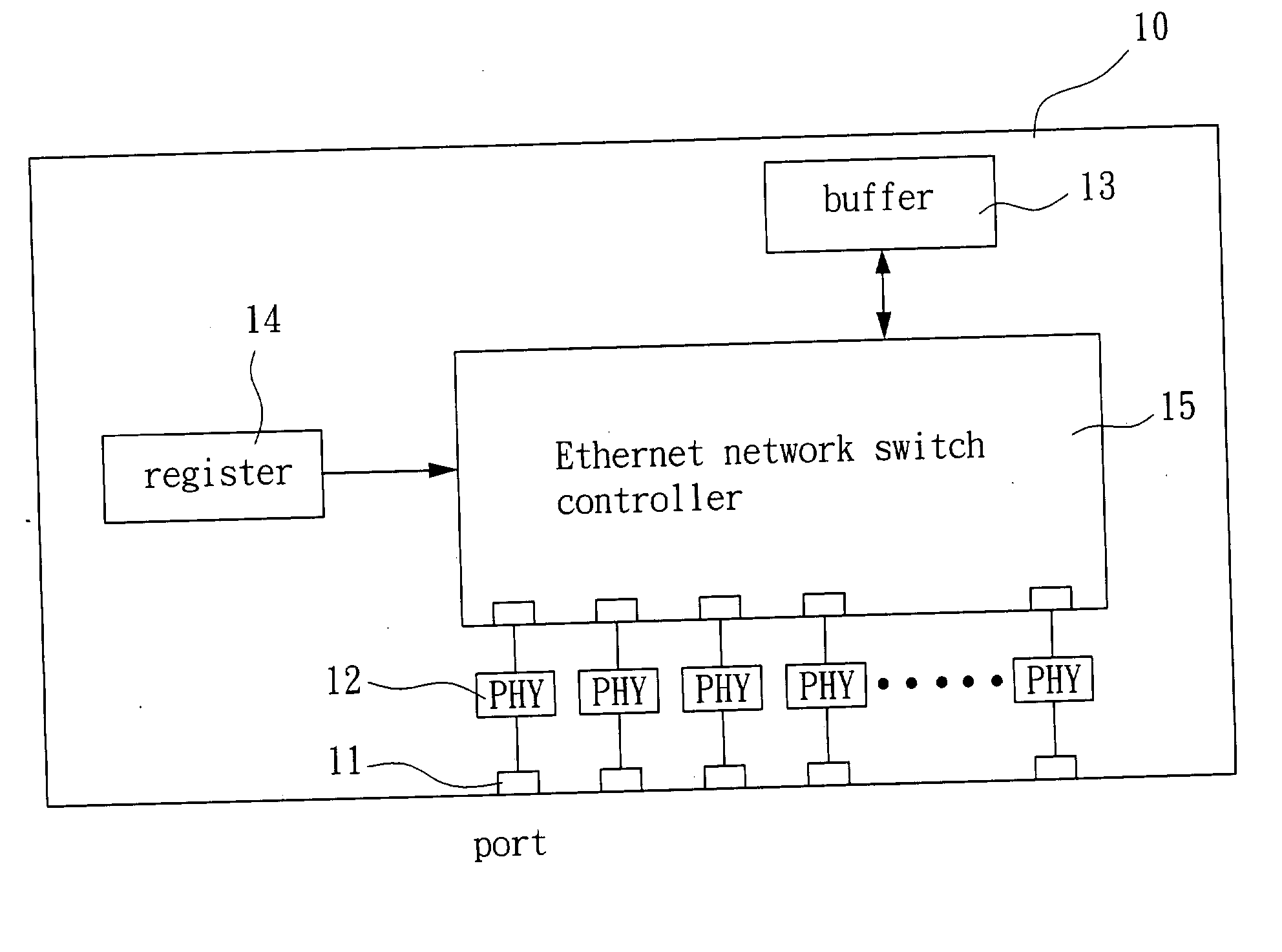

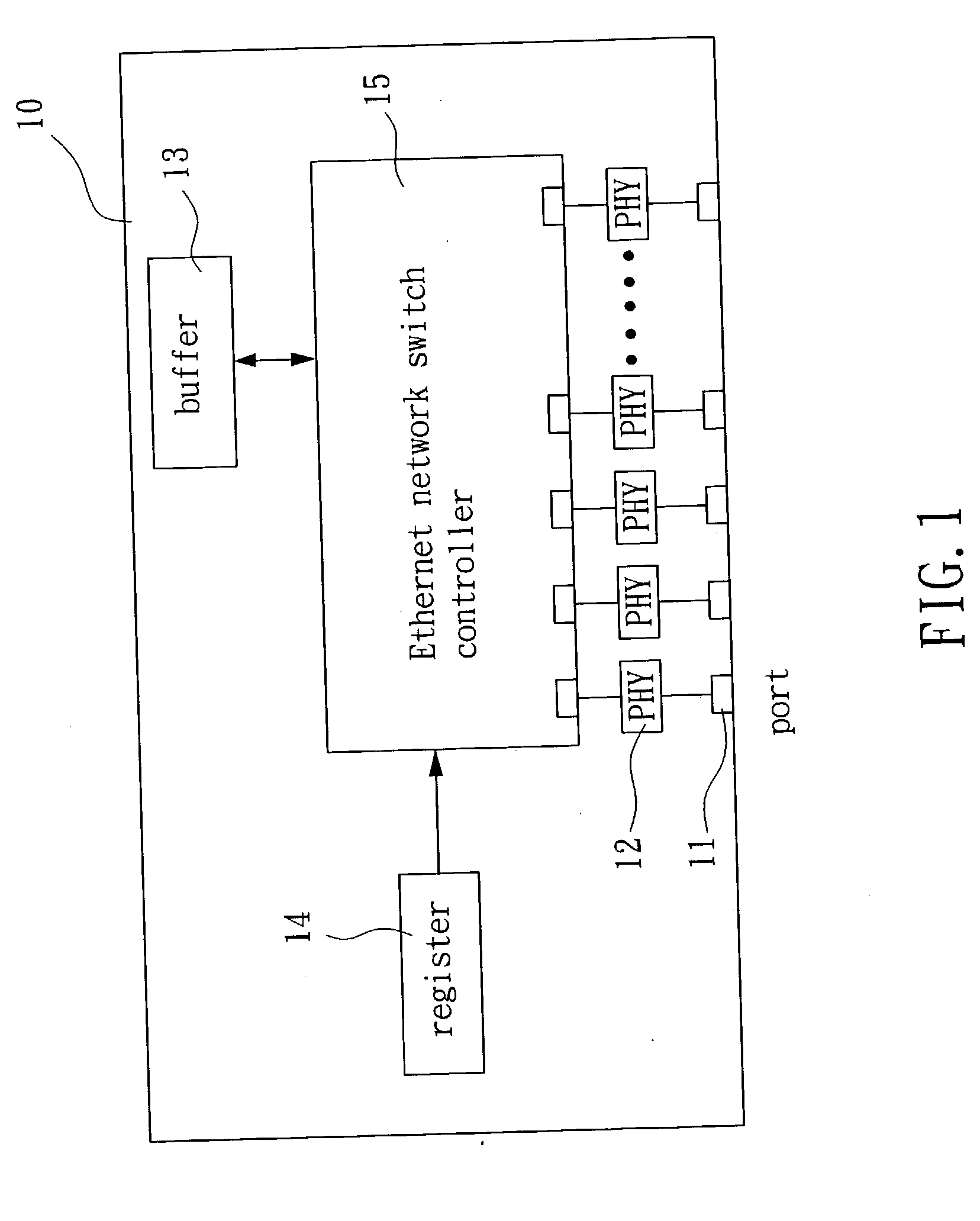

Status: Inactive | Publication: US6084856A | Tags: Reduce congestion, High traffic, Error prevention/detection by using return channel, Transmission systems, Shared memory architecture, Traffic capacity

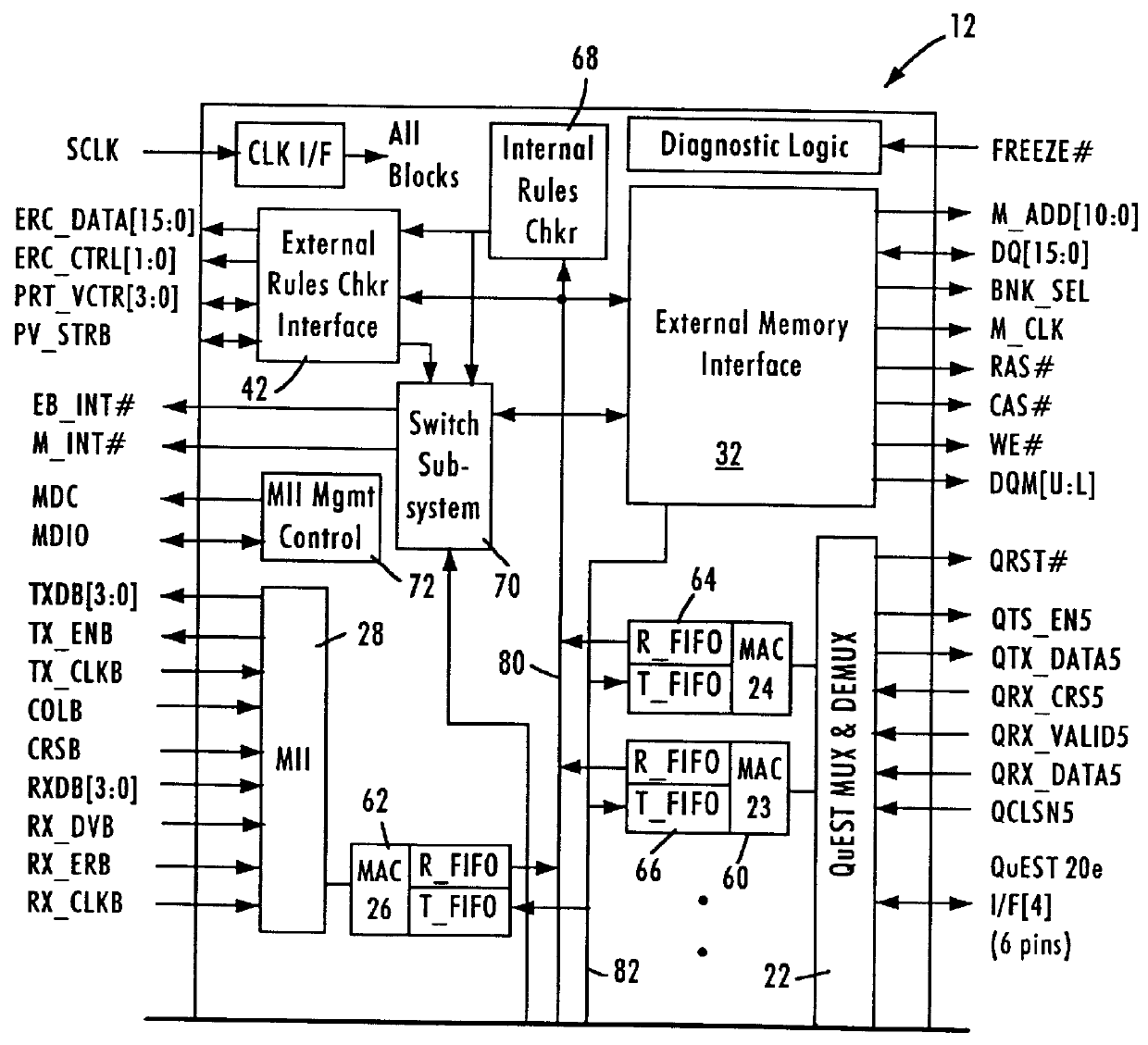

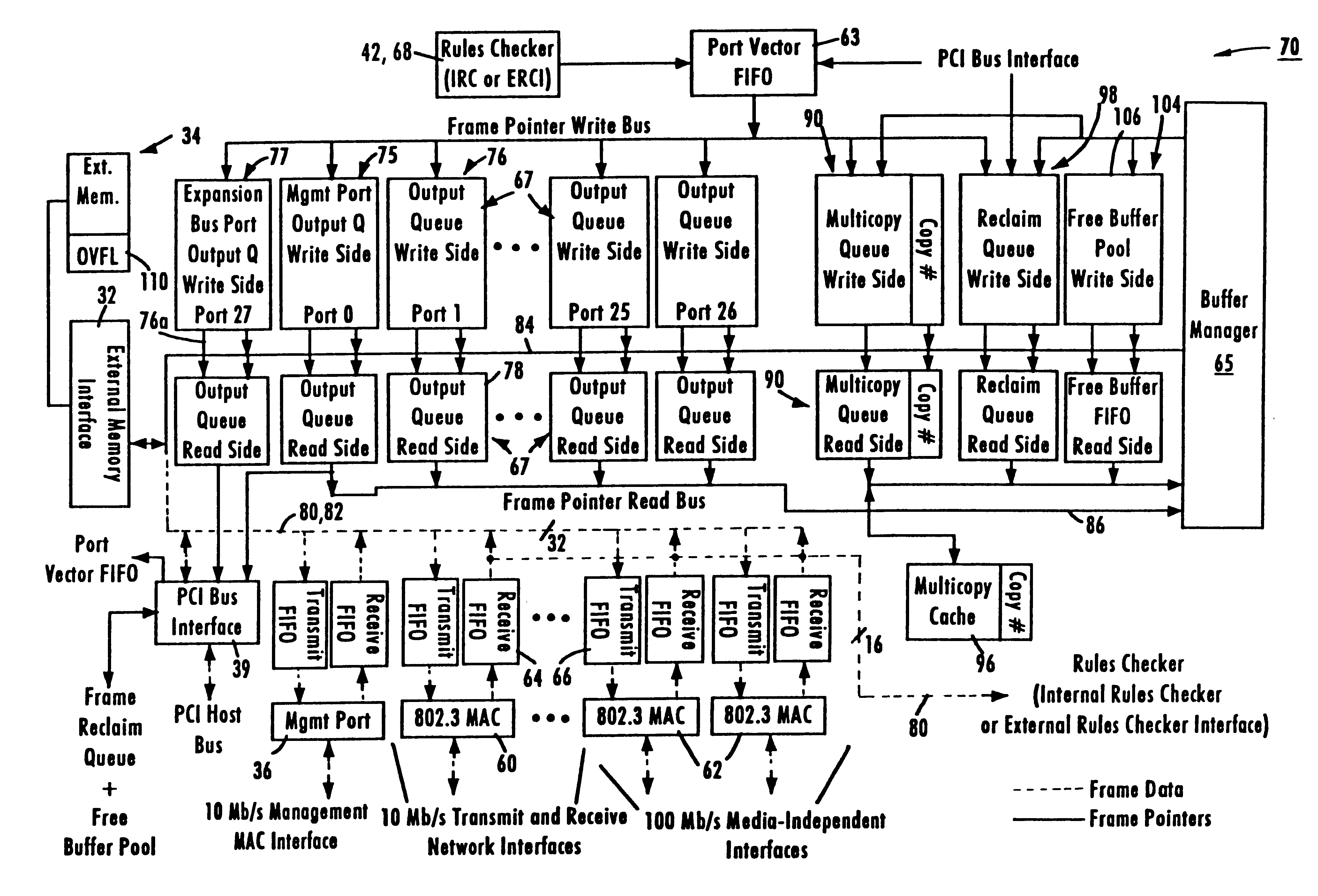

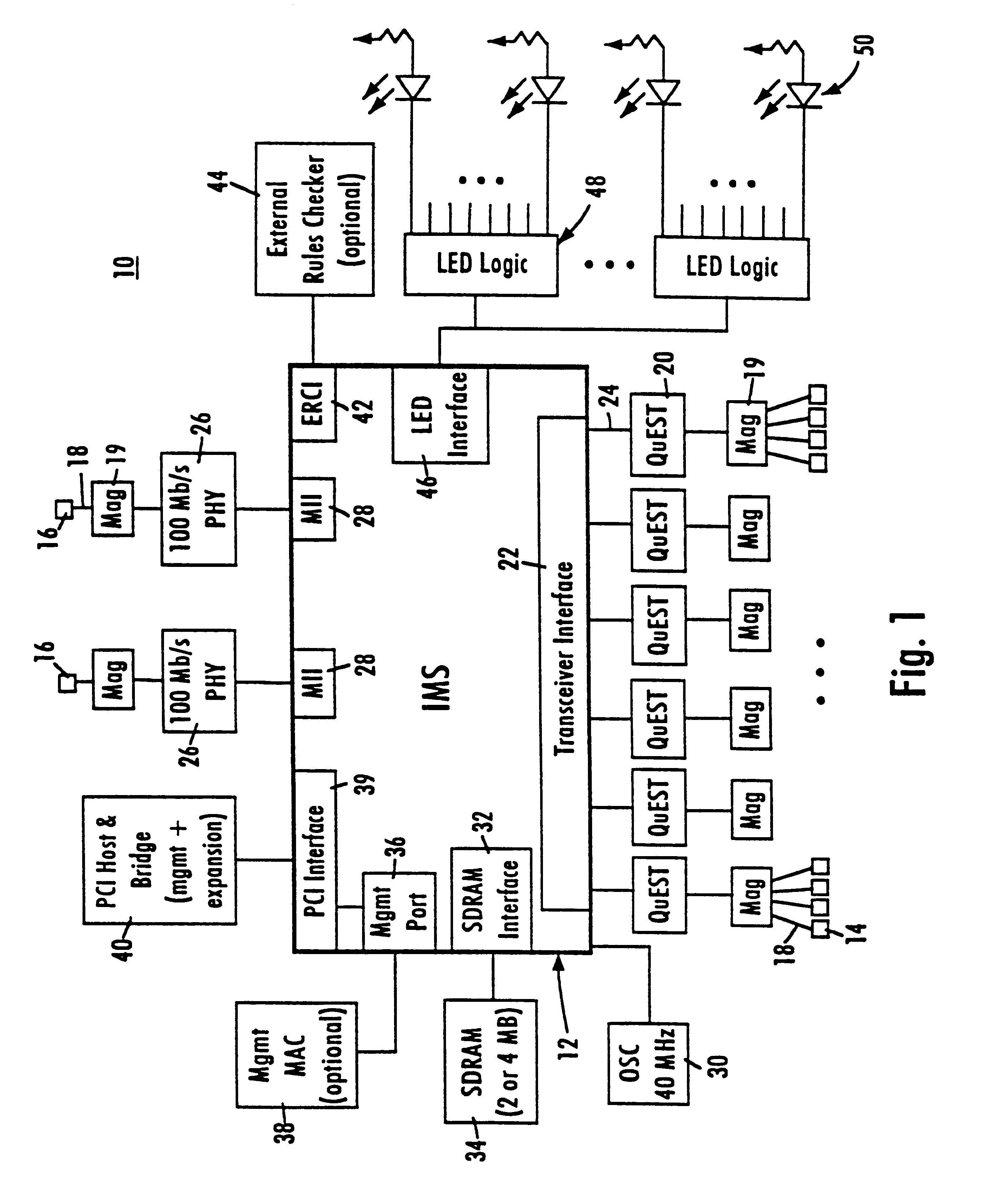

A network switch having a shared memory architecture for storing data frames includes configuration registers that are programmable by a host processor to adaptively adjust overflow buffer locations and flow control watermark levels based on monitored network traffic. The host processor allocates shared resources by setting memory configuration registers corresponding to an external memory, where the network switch uses the external memory for storing received data frames, Management Information Base (MIB) counter values, and output port overflow data. The host processor periodically reads the MIB counter values from the external memory, and adjusts flow control watermark levels or the memory configuration registers in the network switch based on rate-based network traffic derived from the MIB counter values. PCI burst transfers between the host processor and the external memory via the network switch ensure that stored data is not lost during reconfiguration of the external memory. The disclosed arrangement enables resources used by the network switch to be adjusted to minimize congestion by borrowing resources from network ports serving idle stations.

Owner:GLOBALFOUNDRIES INC

Method and apparatus providing programmable thresholds for half-duplex flow control in a network switch

Status: Inactive | Publication: US6192028B1 | Tags: Maximize throughput, Convenient Arrangement, Error prevention, Frequency-division multiplex details, Shared memory architecture, External storage

A network switch having a shared memory architecture for storing data frames has a set of programmable thresholds that specify when flow control should be initiated on a selected network port. The network switch includes a queue for storing free frame pointers, each specifying available memory locations in an external memory for storing data frames received from a network station. The network switch takes a frame pointer from a free buffer queue for each received data frame, and stores the received data frame in the location in external memory specified by the frame pointer while a decision making engine within the switch determines the appropriate destination ports. Flow control is initiated on a half-duplex network port by transmitting a phantom packet to a transmitting network station if the output buffer of a destination port exceeds a programmed high threshold, or if the output buffer of the destination port exceeds a low programmed threshold and the queue of free frame pointers falls below a low programmable threshold. The switch thus provides flexibility in providing selective collisions with transmitting stations to minimize the possibility of network congestion without wasting network bandwidth with unnecessary collisions.

Owner:GLOBALFOUNDRIES INC
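
The flow-control trigger in the abstract reduces to a small predicate. This sketch assumes output-buffer occupancy and the free-frame-pointer count are sampled as integers; `FlowControlThresholds` and `should_send_phantom_packet` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class FlowControlThresholds:
    # Hypothetical field names; the abstract only says these are programmable.
    output_high: int   # output-buffer level that always triggers flow control
    output_low: int    # level that triggers flow control when free pointers are scarce
    free_ptr_low: int  # free-frame-pointer queue level considered "low"


def should_send_phantom_packet(output_occupancy: int,
                               free_pointers: int,
                               t: FlowControlThresholds) -> bool:
    """Decide whether to start half-duplex flow control, i.e. send a phantom
    packet that causes a deliberate collision with the transmitting station."""
    if output_occupancy > t.output_high:
        return True
    return output_occupancy > t.output_low and free_pointers < t.free_ptr_low
```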

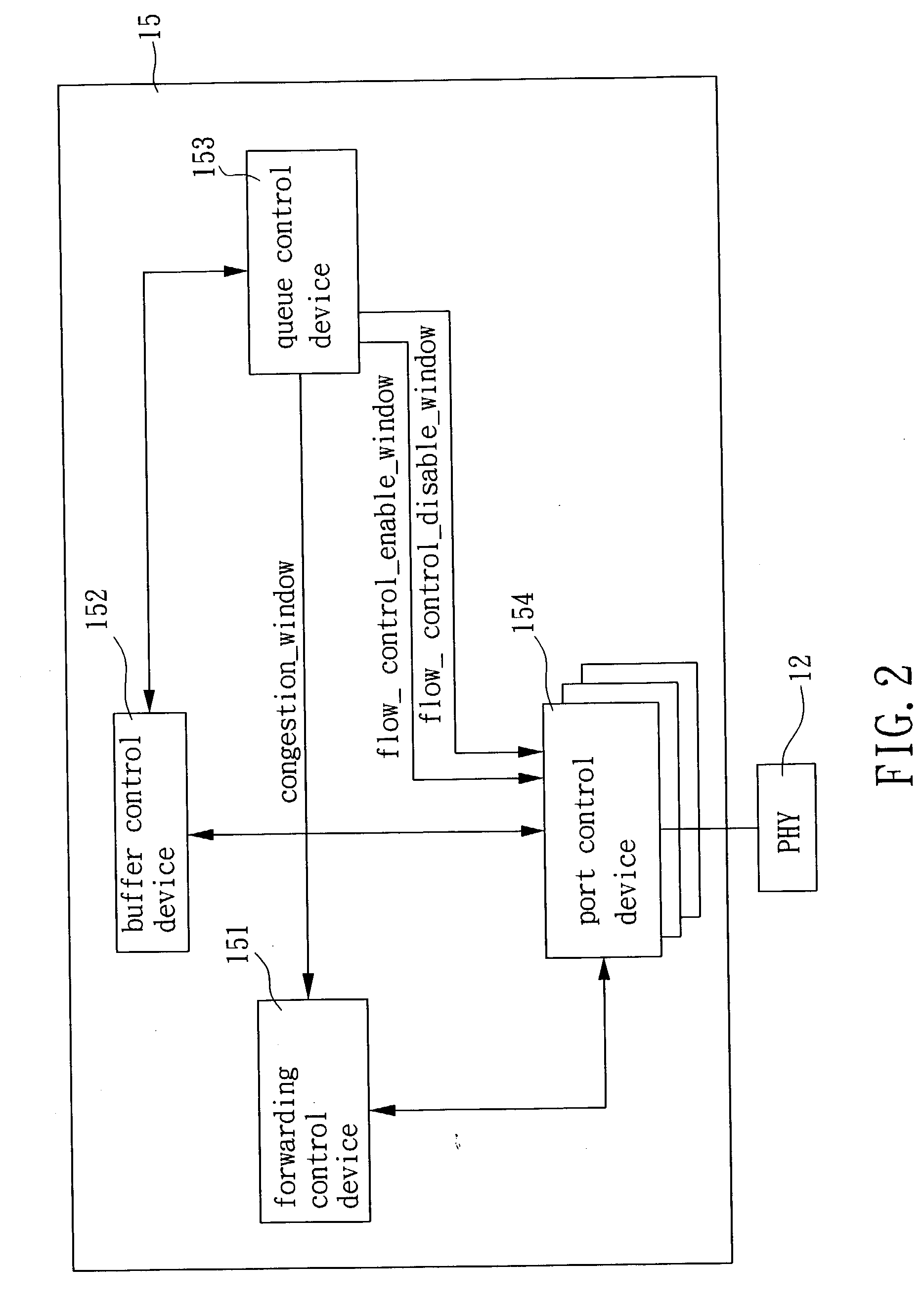

Method for congestion control and associated switch controller

Status: Inactive | Publication: US20030147347A1 | Tags: Improve network performance, Error prevention, Frequency-division multiplex details, Shared memory architecture, Data buffer

The present invention provides a method for congestion control and an associated switch controller. The switch controller operates in either a shared memory architecture or an equal memory partition structure, which limits the length of the queue for each port according to the available buffer space. When a queue exceeds a predetermined length, it enters a congested state. Preferably, if a source port then requests to establish a link to that queue, flow control is applied, and the queue length is limited because no further packets are received into it. Once the congestion clears, the switch controller returns to the shared memory architecture. This reduces unfairness when the network is congested while improving network performance.

Owner:VIA TECH INC
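
A toy model of the mode switching described above. Several details are assumptions not stated in the abstract: the "predetermined length" is a per-port `threshold`, refusing an enqueue stands in for flow control toward the source port, and a port leaves the congested state once its queue drains to its equal share of the buffer. The class and method names are hypothetical.

```python
class SwitchController:
    """Shared buffering by default; a port whose queue exceeds `threshold`
    becomes congested and is flow-controlled until it drains to its equal
    share of the buffer (assumed exit condition)."""

    def __init__(self, buffer_size: int, n_ports: int, threshold: int):
        self.buffer_size = buffer_size
        self.share = buffer_size // n_ports  # equal-partition share per port
        self.threshold = threshold           # the "predetermined length"
        self.queues = [0] * n_ports          # packets queued per output port
        self.congested = [False] * n_ports

    def enqueue(self, port: int) -> bool:
        """Accept one packet for `port`, or return False to signal that
        flow control was applied to the source port instead."""
        if self.congested[port] or sum(self.queues) >= self.buffer_size:
            return False
        self.queues[port] += 1
        if self.queues[port] > self.threshold:
            self.congested[port] = True
        return True

    def dequeue(self, port: int) -> None:
        """Transmit one packet from `port` and leave congestion once drained."""
        if self.queues[port]:
            self.queues[port] -= 1
        if self.congested[port] and self.queues[port] <= self.share:
            self.congested[port] = False  # back to shared-memory operation
```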

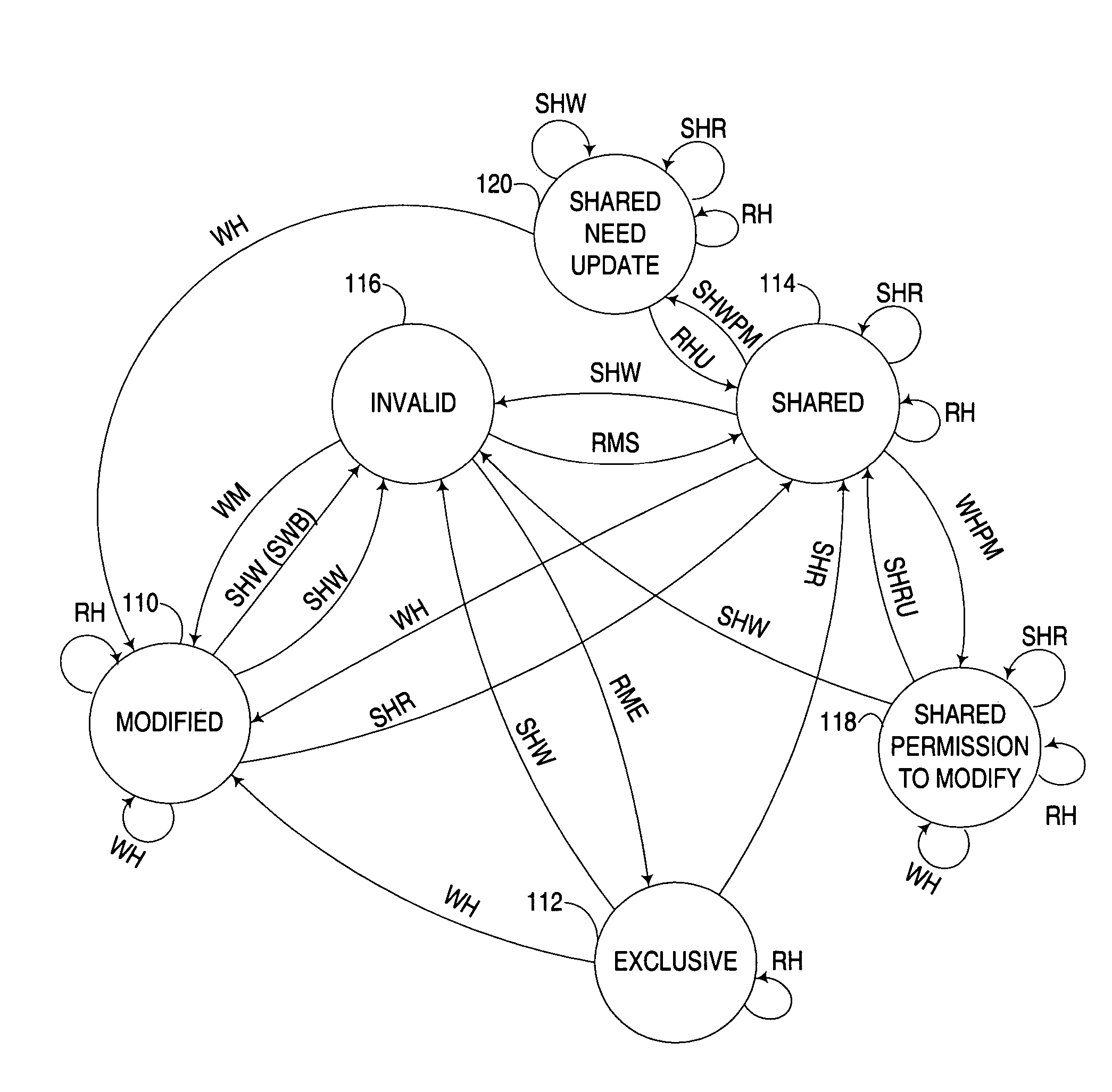

Concurrent read access and exclusive write access to data in shared memory architecture

Concurrent read access and exclusive write access are provided in a shared memory architecture to permit one or more devices in the shared memory architecture to maintain read access to a block of memory such as a cache line while one device has exclusive permission to modify that block of memory. By doing so, a device that has permission to modify may make updates to its copy of the block of memory without invalidating other copies of the block of memory, and potentially enabling other devices to continue to read data from their respective copies of the block of memory without having to retrieve the updated copy of the block of memory.

Owner:LENOVO GLOBAL TECH INT LTD

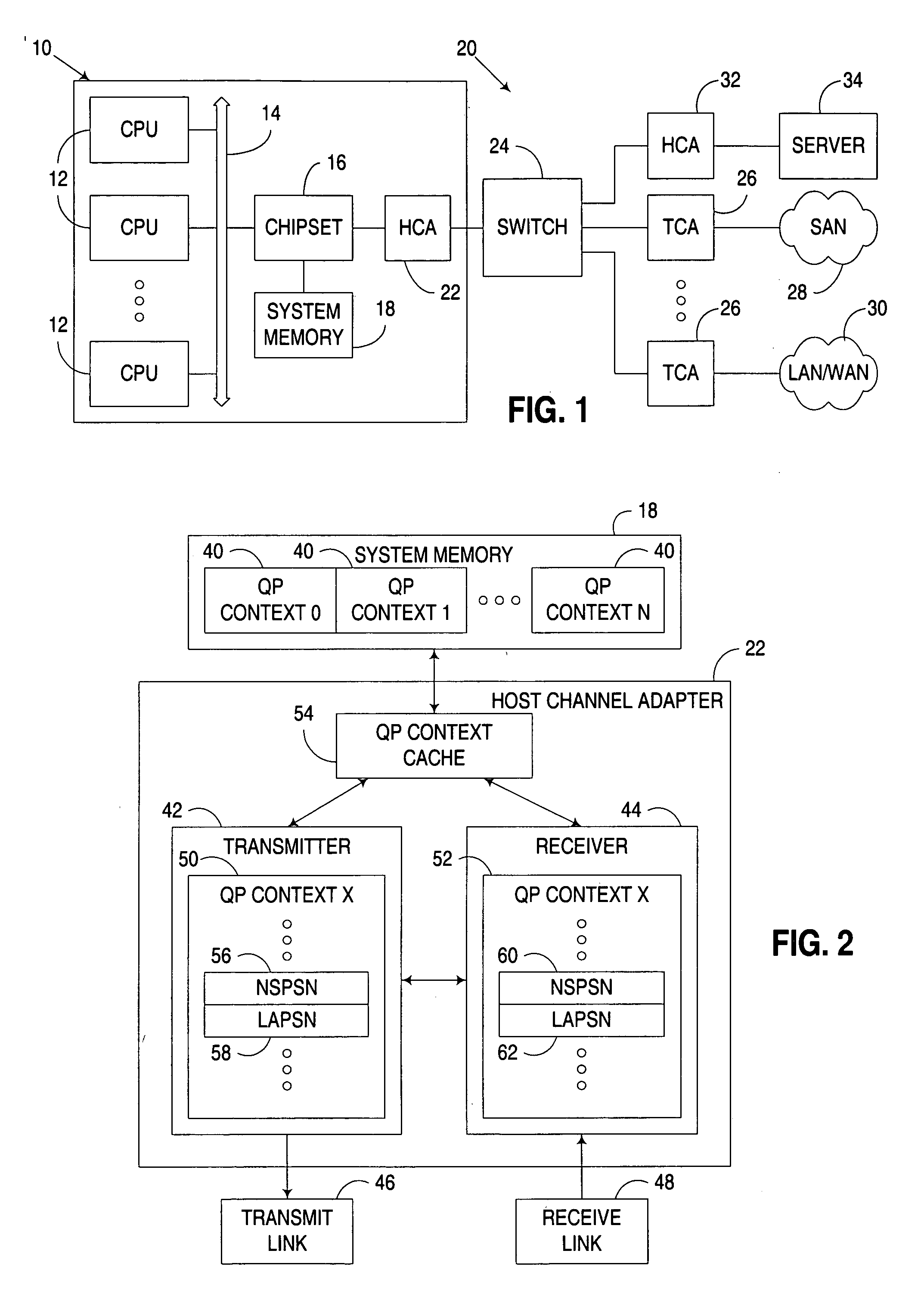

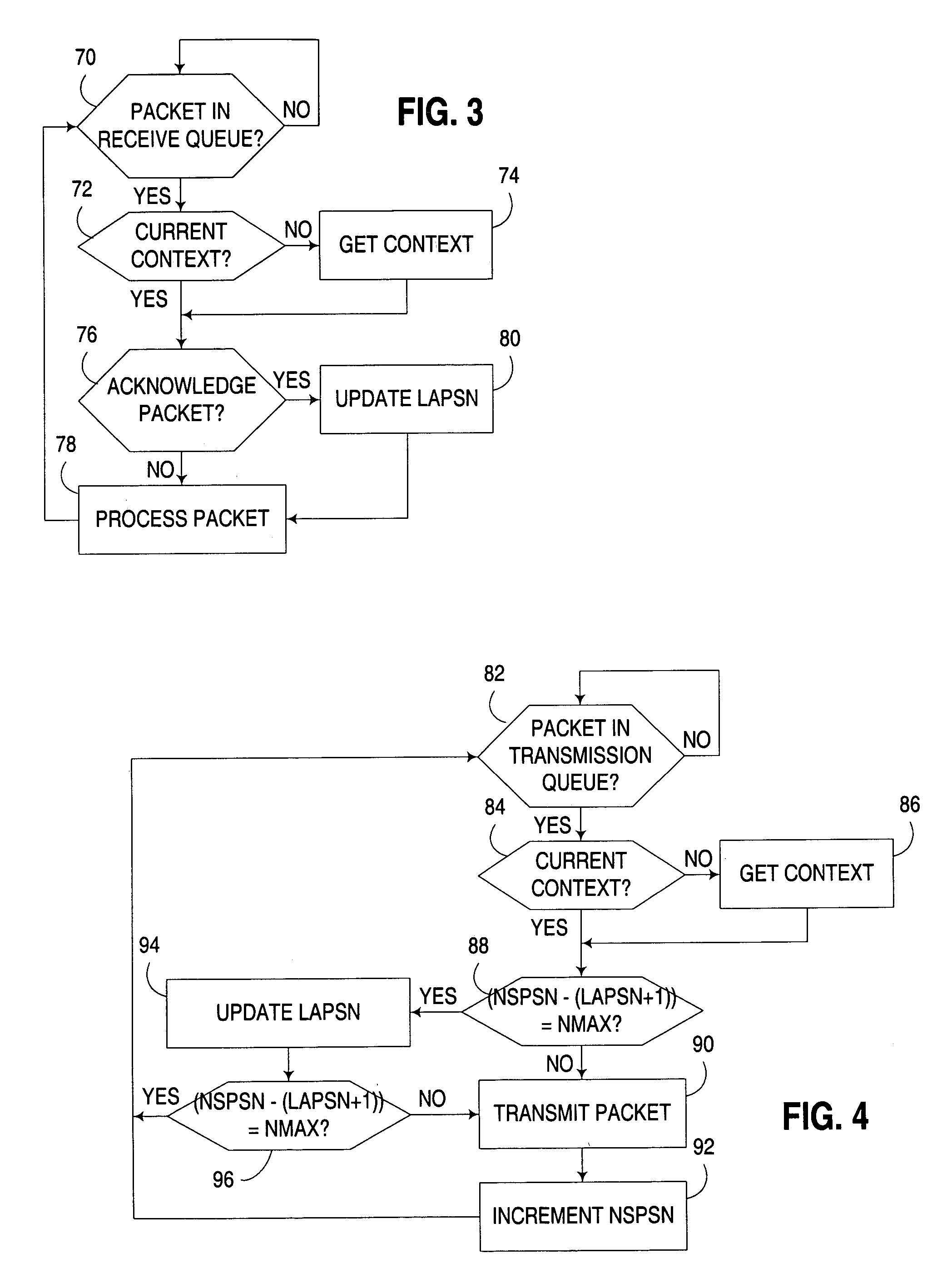

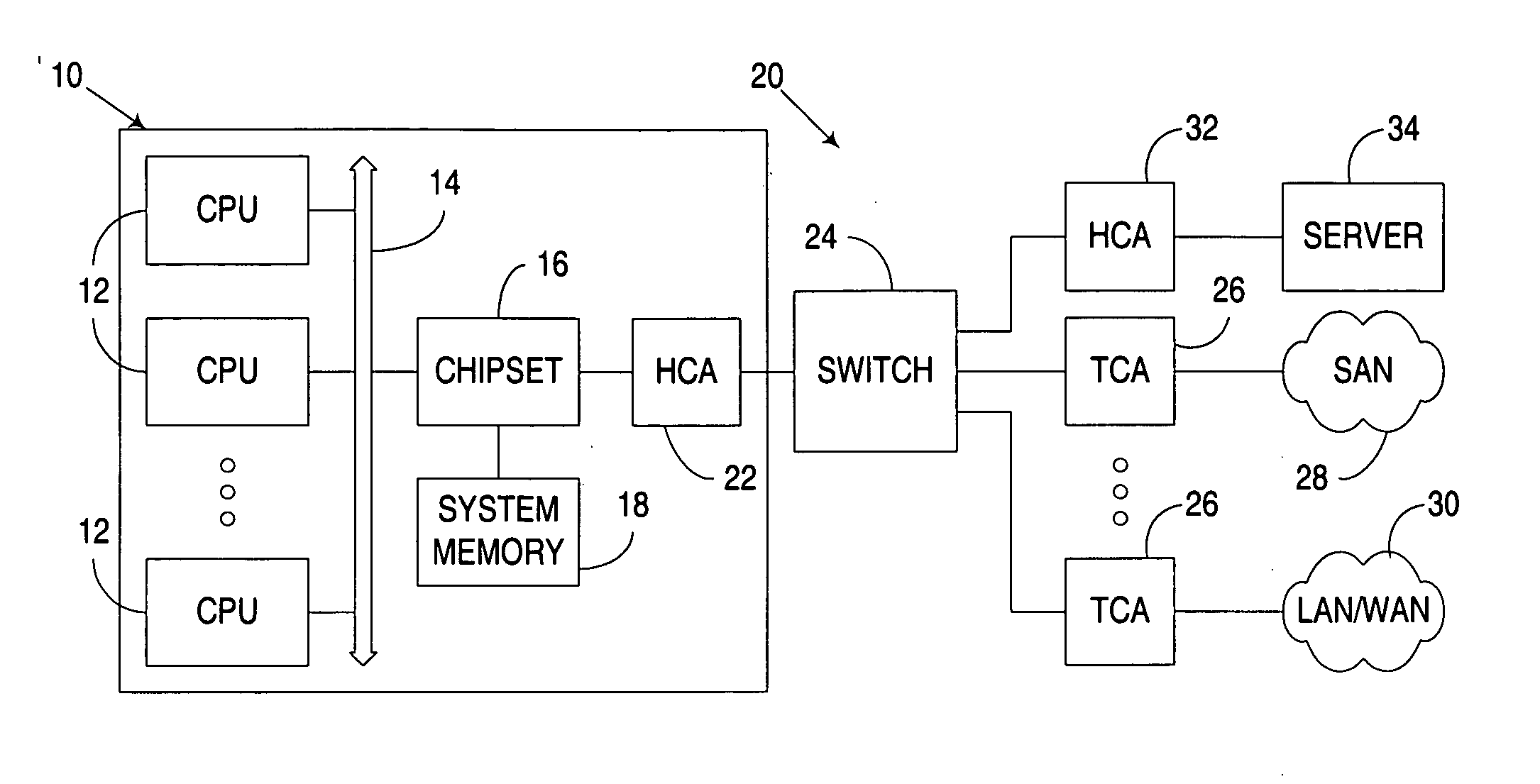

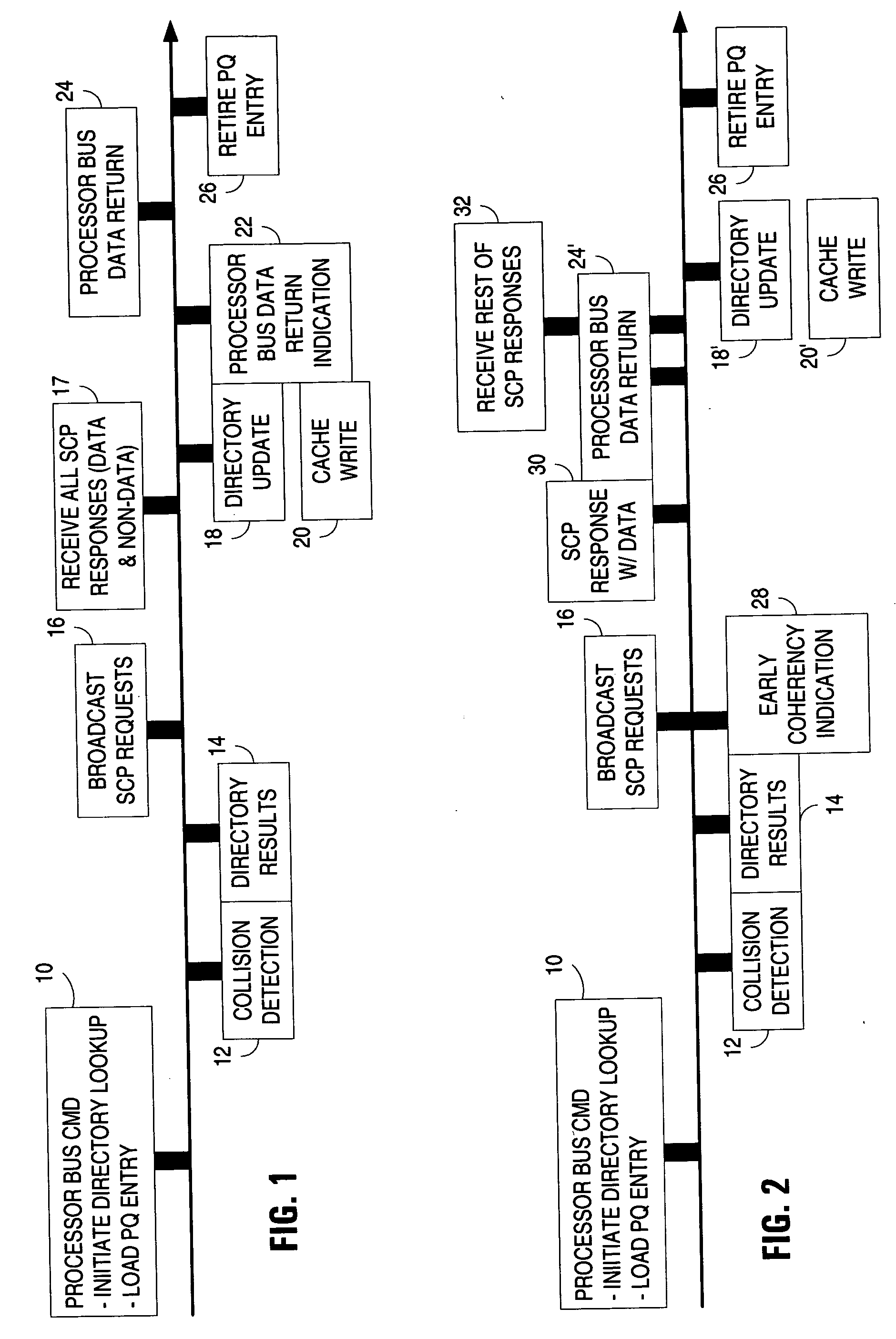

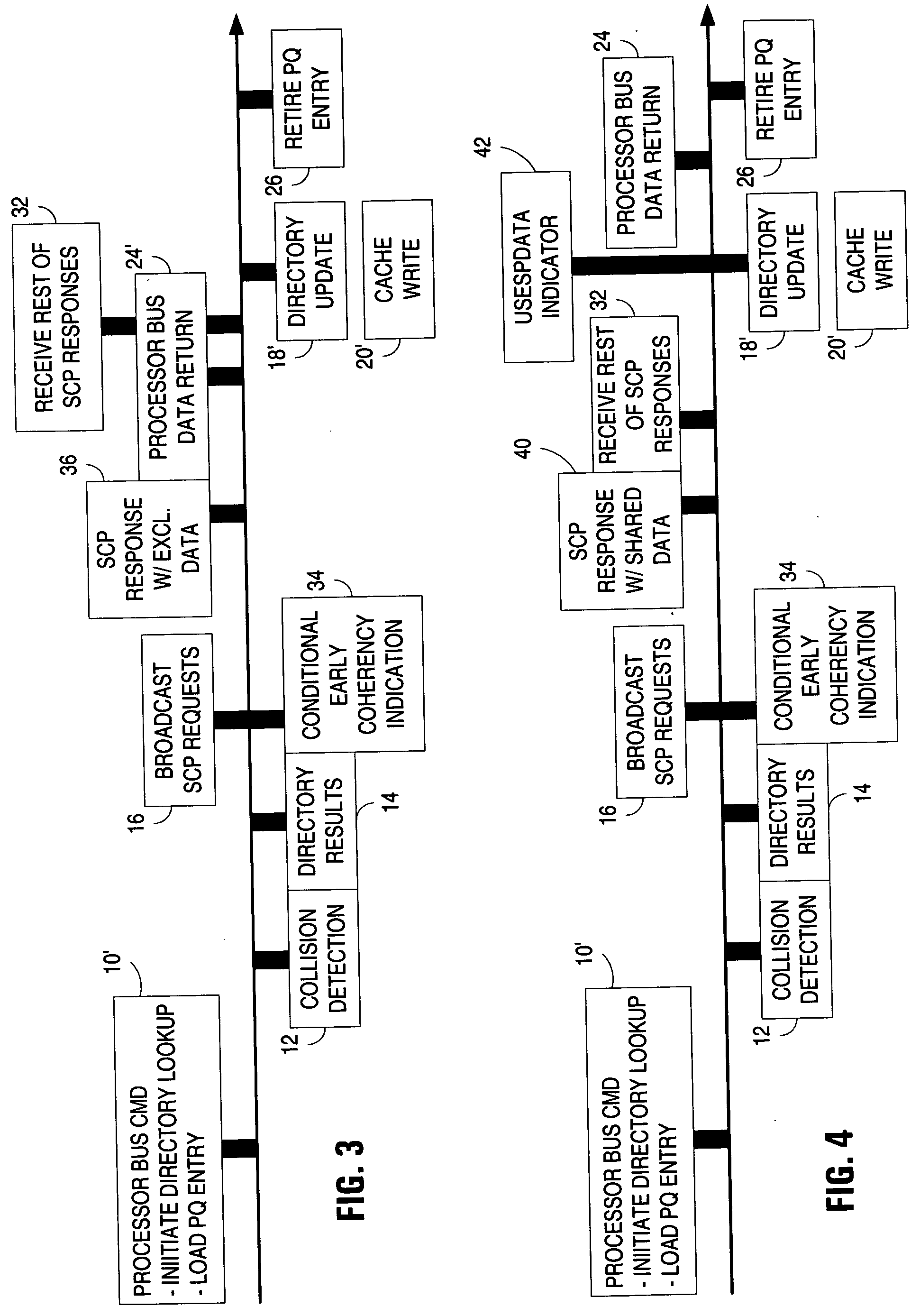

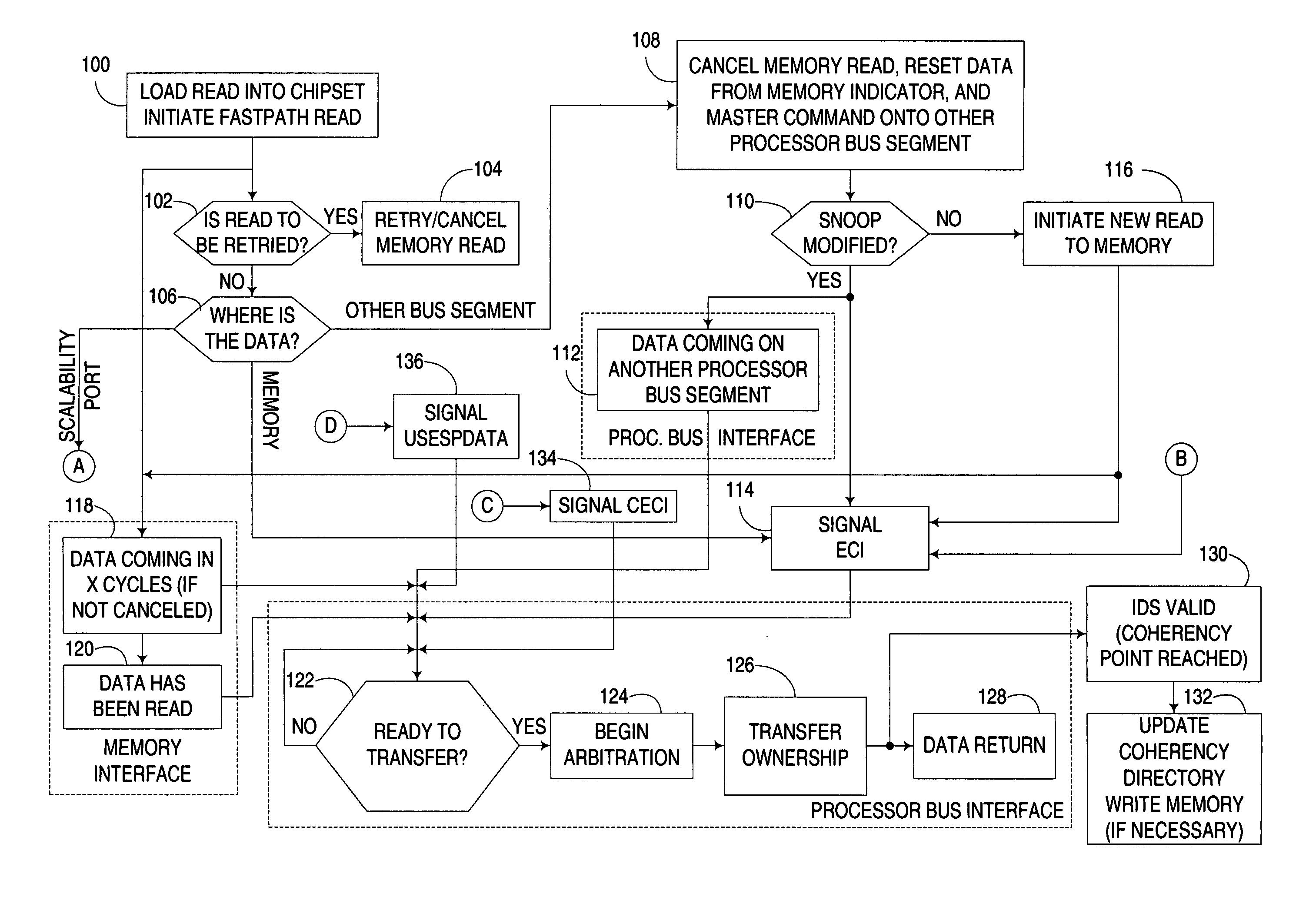

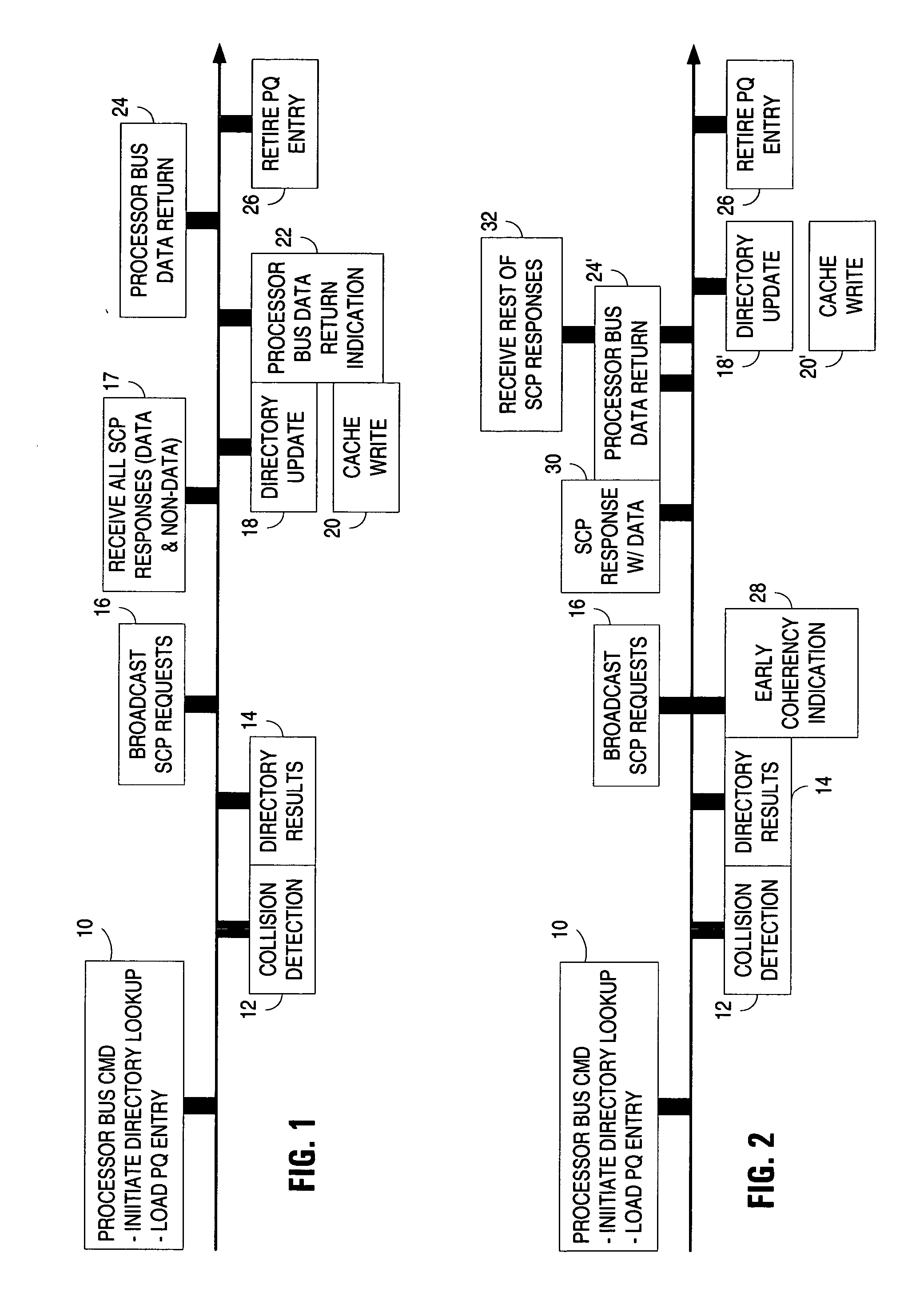

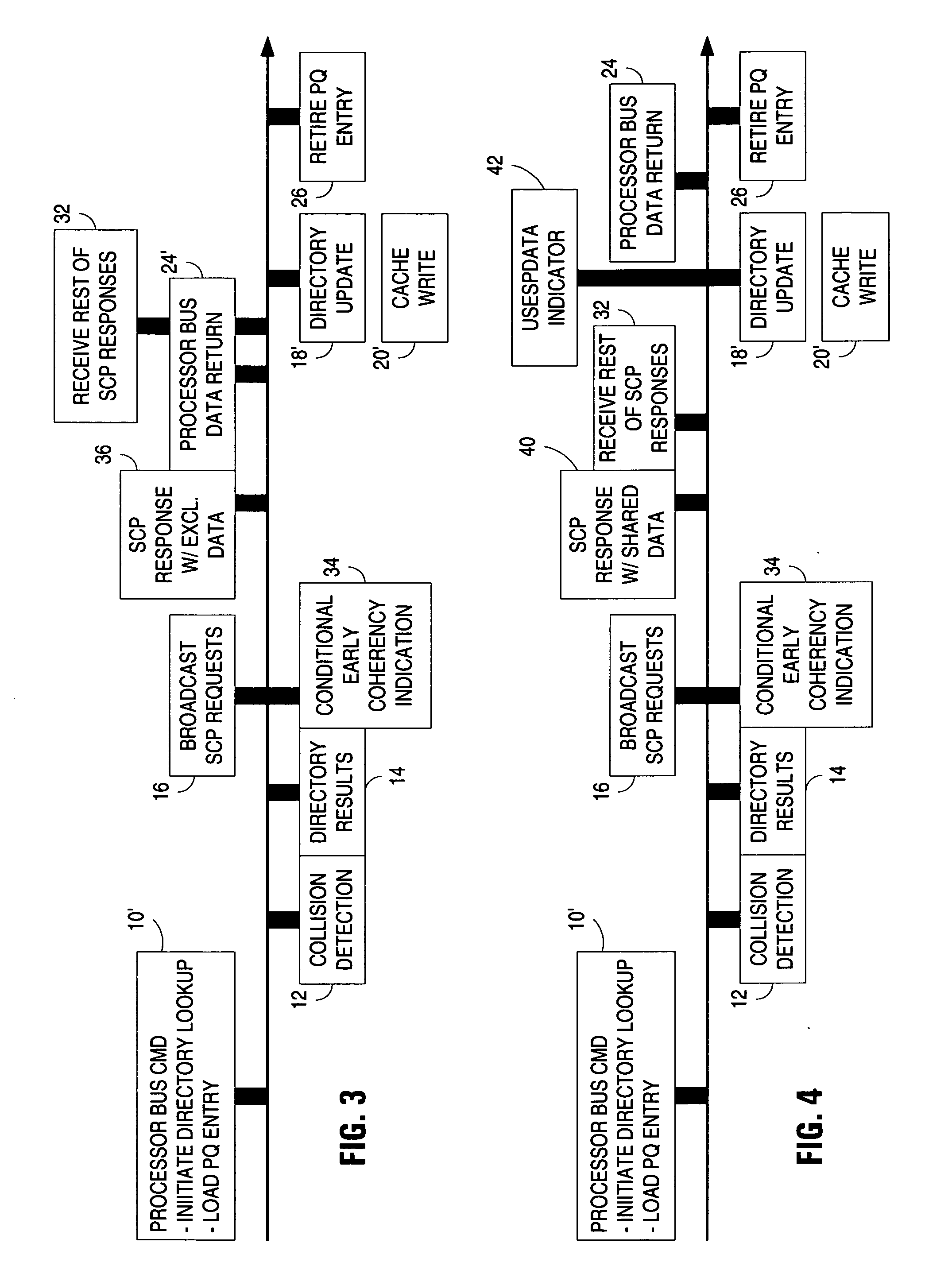

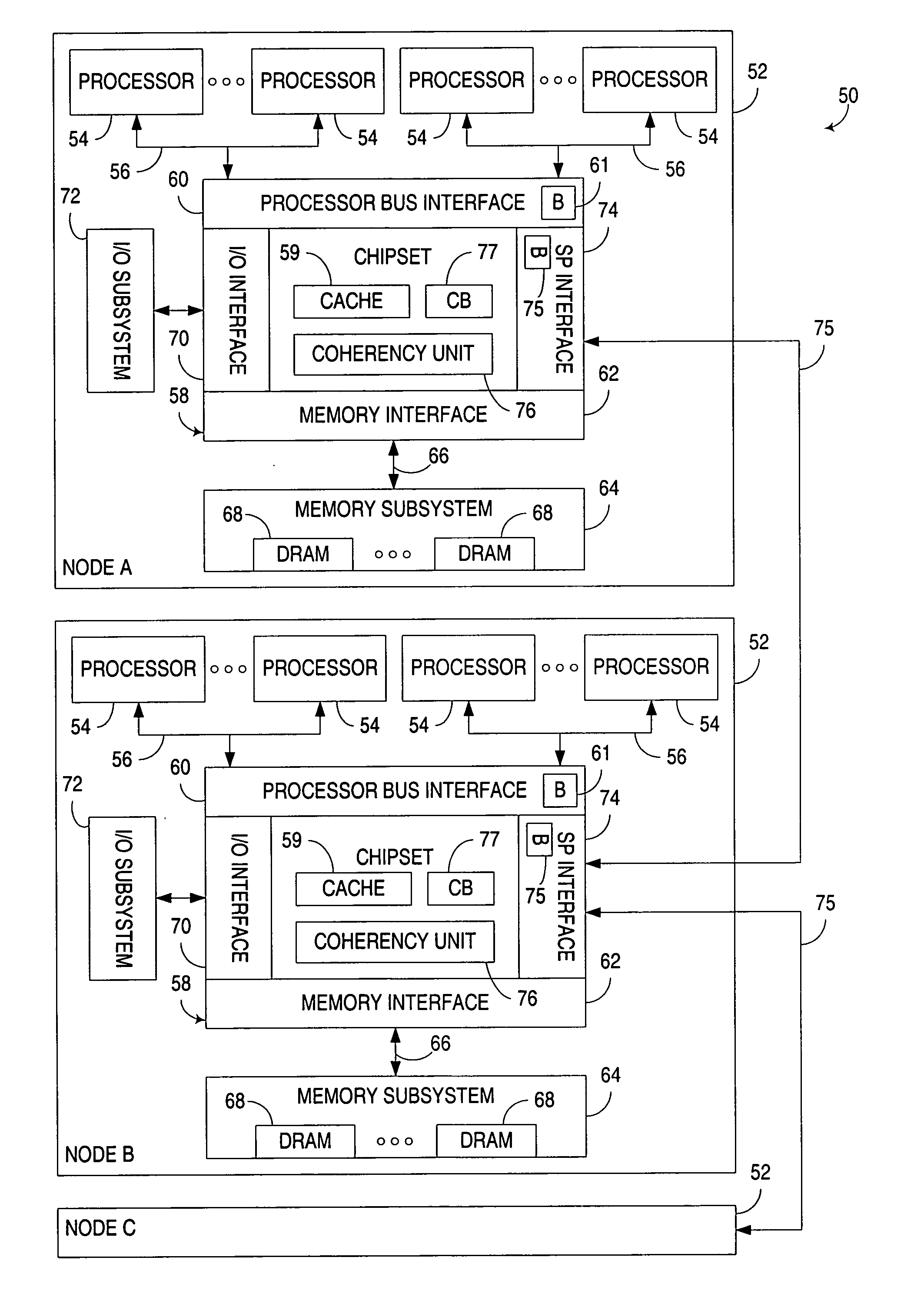

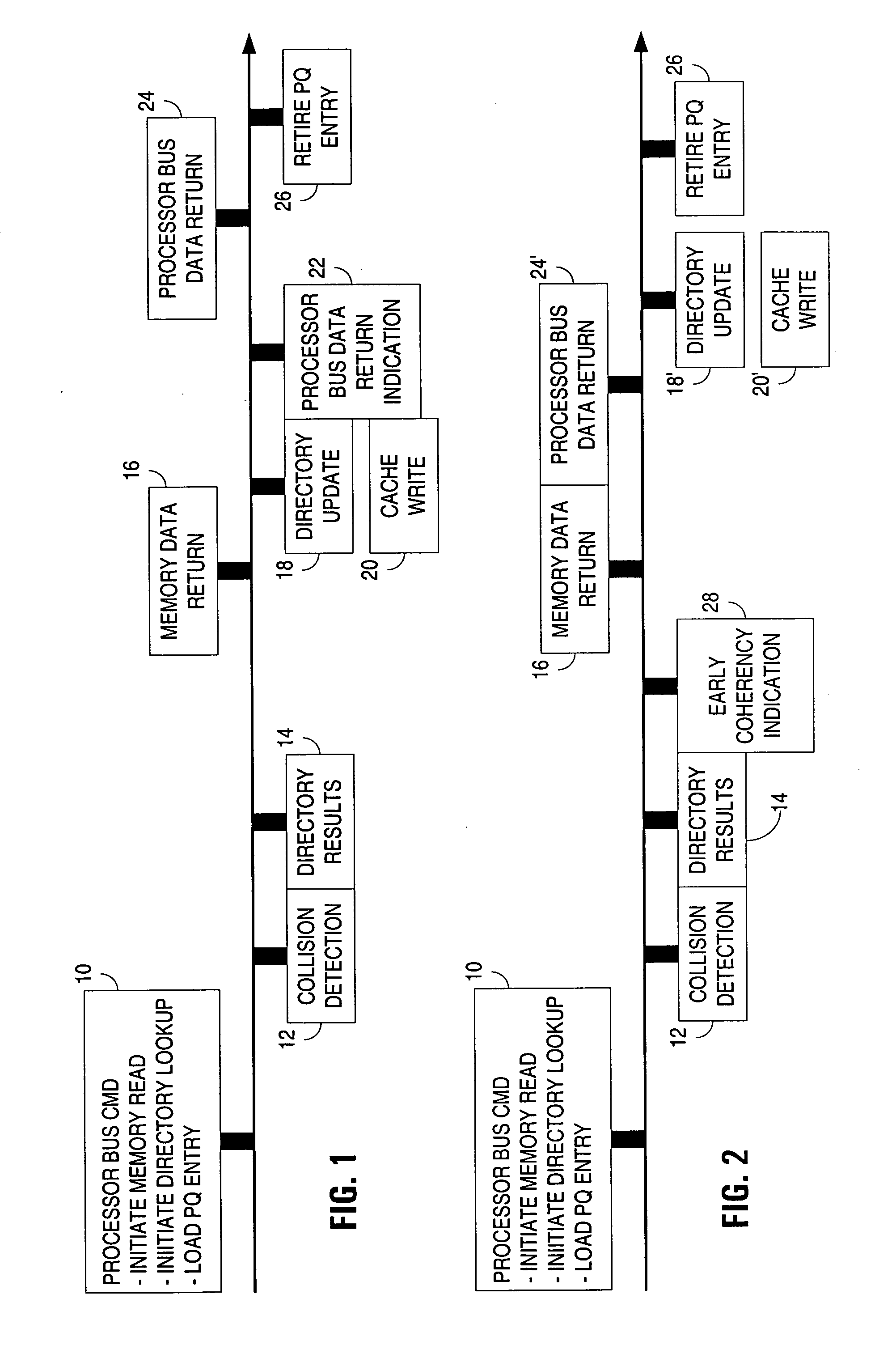

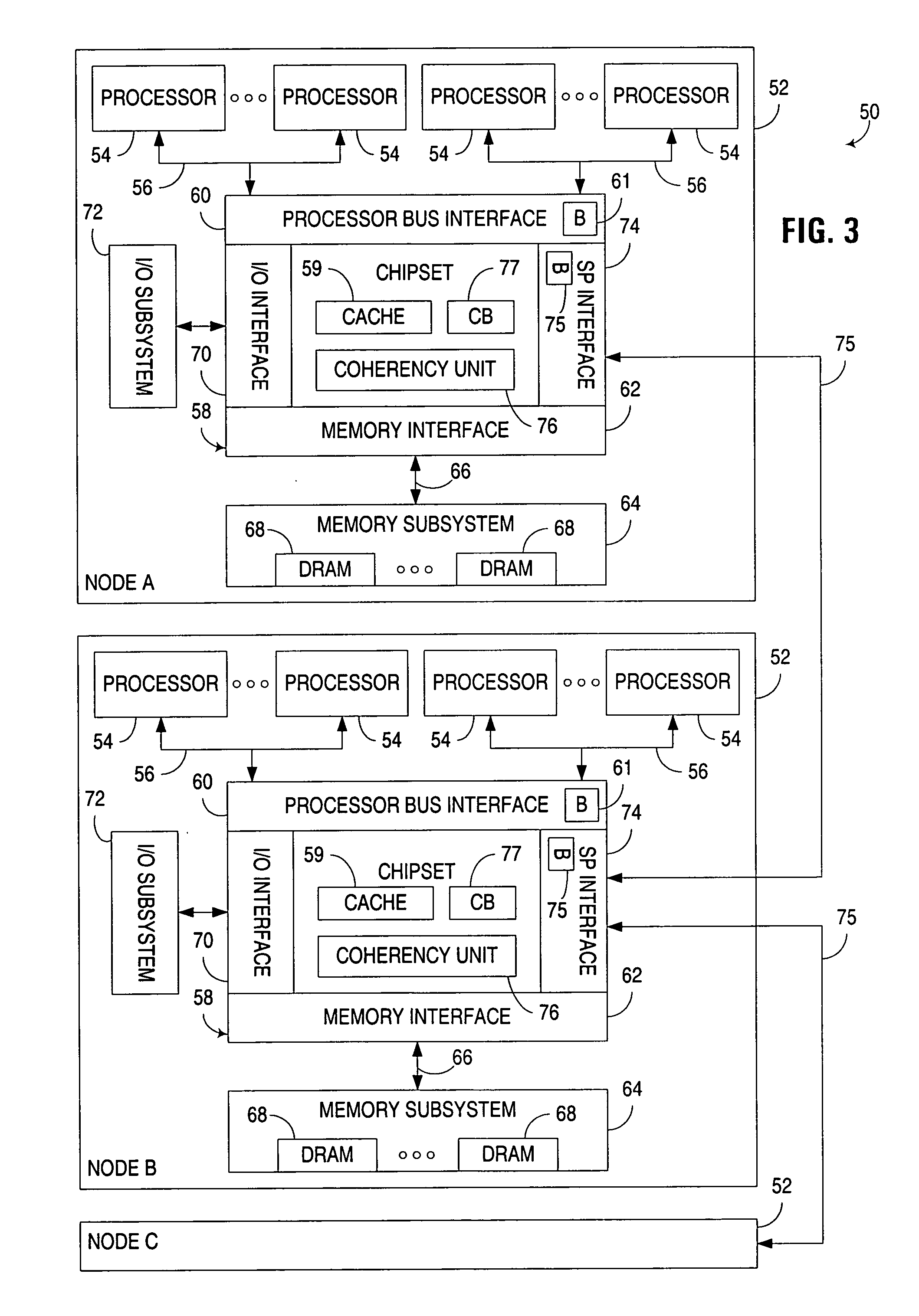

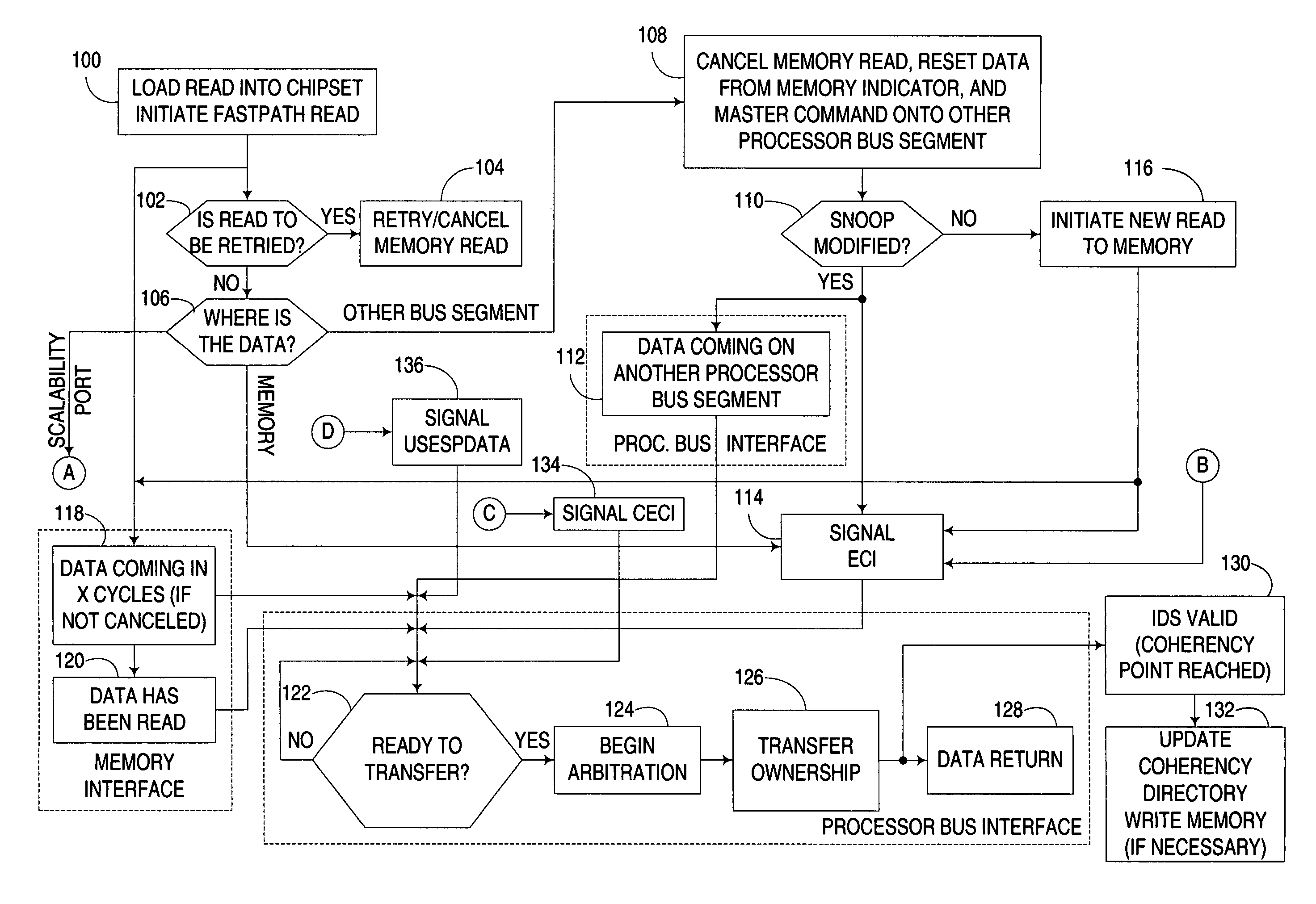

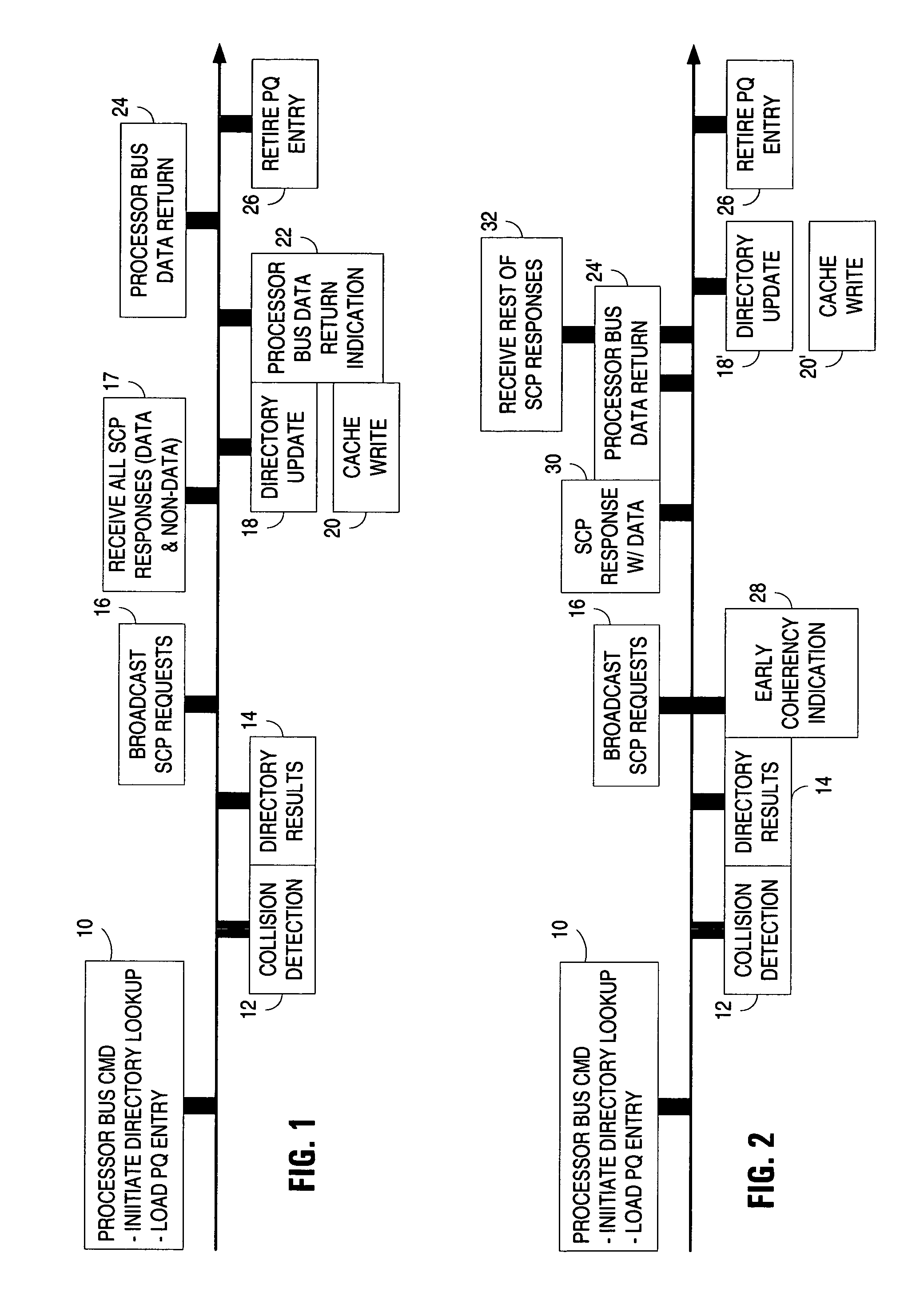

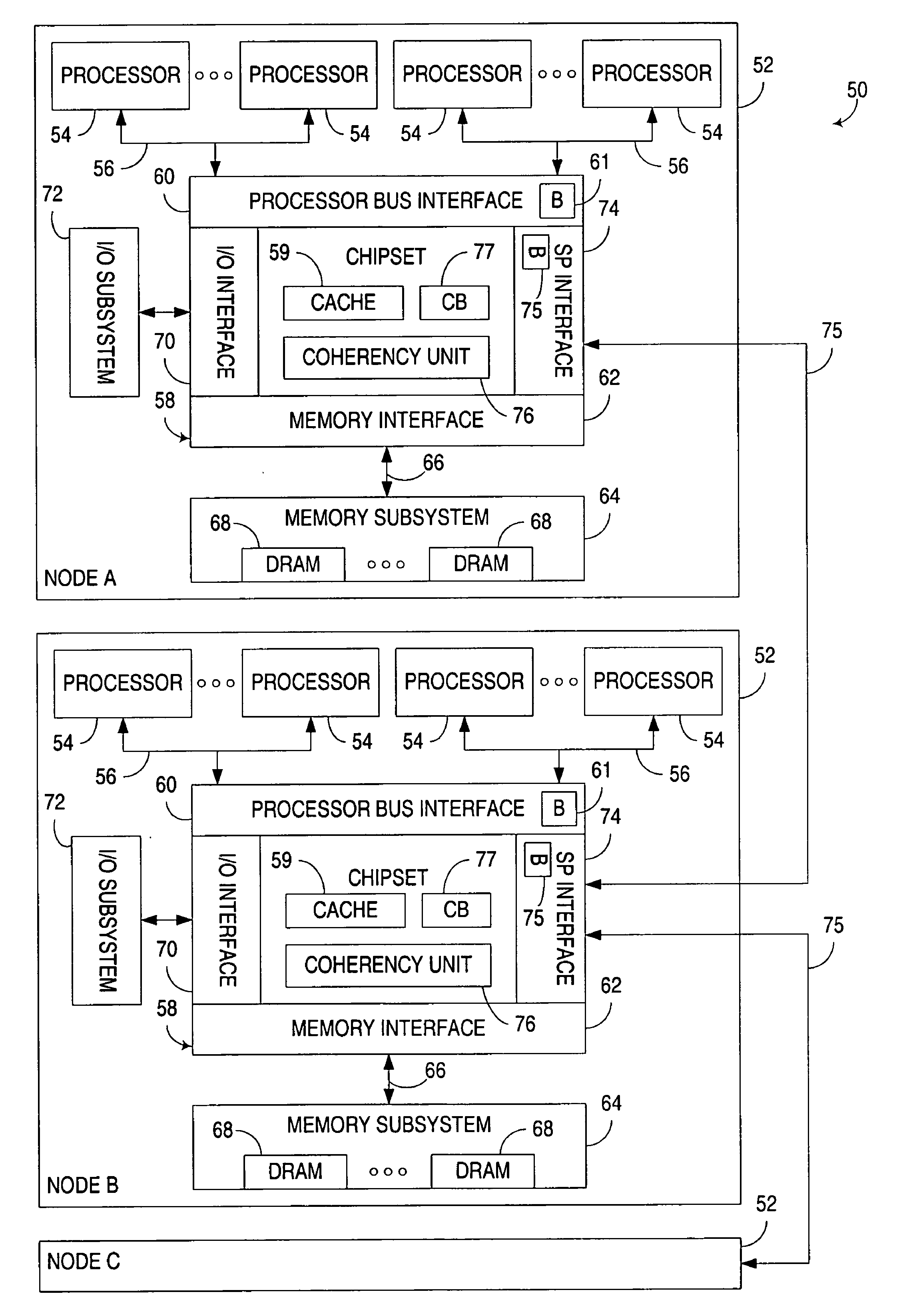

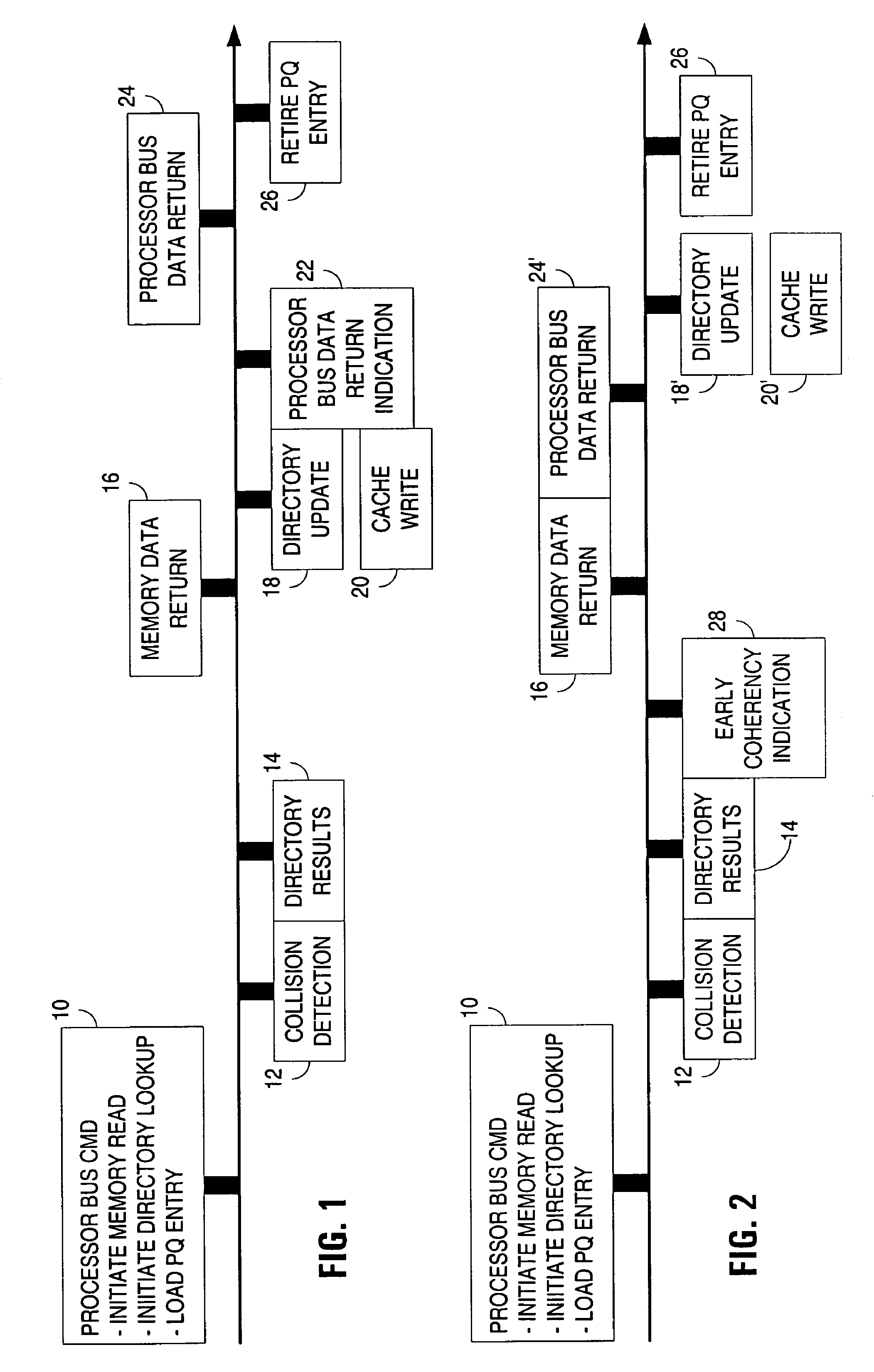

Early return indication for return data prior to receiving all responses in shared memory architecture

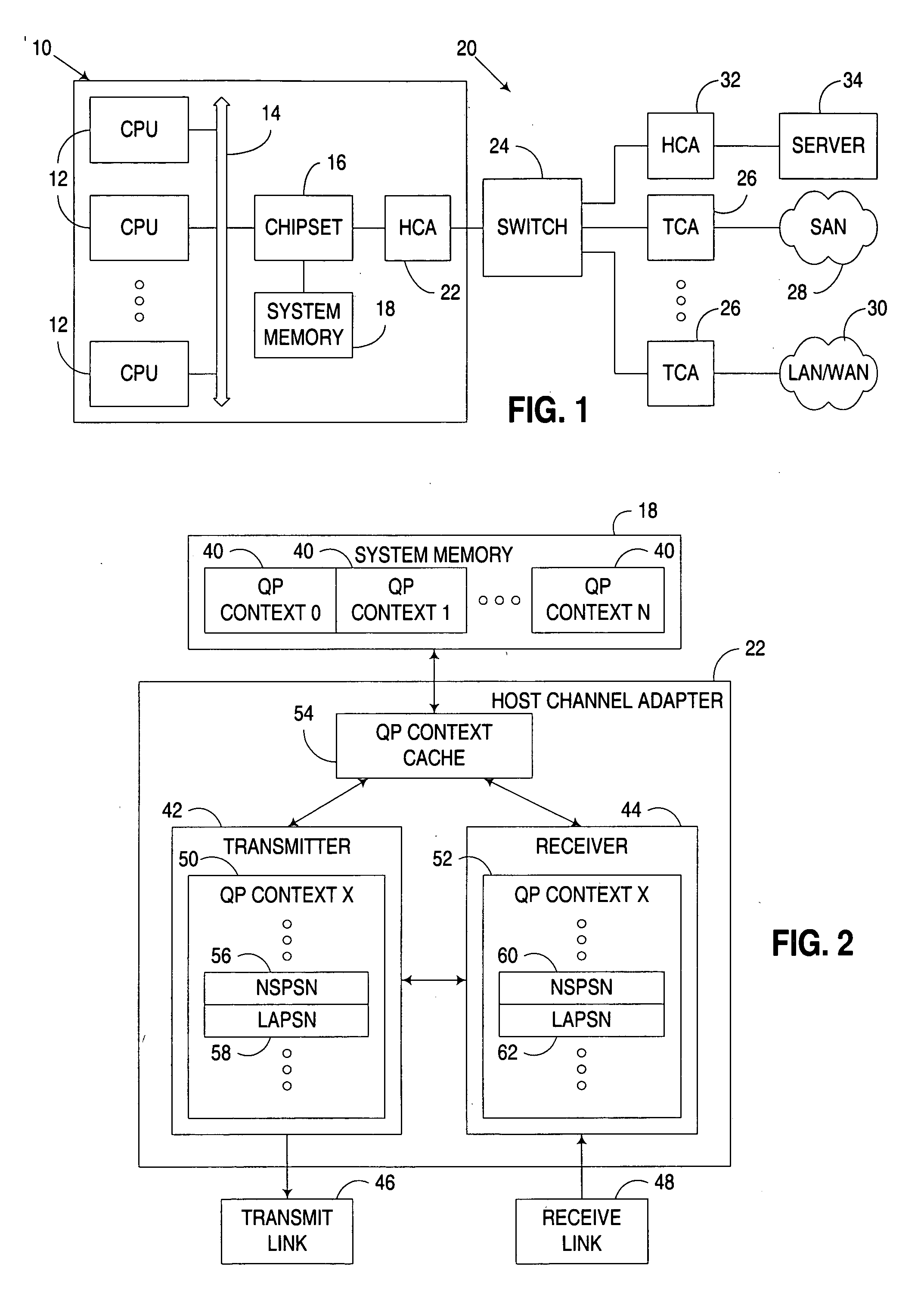

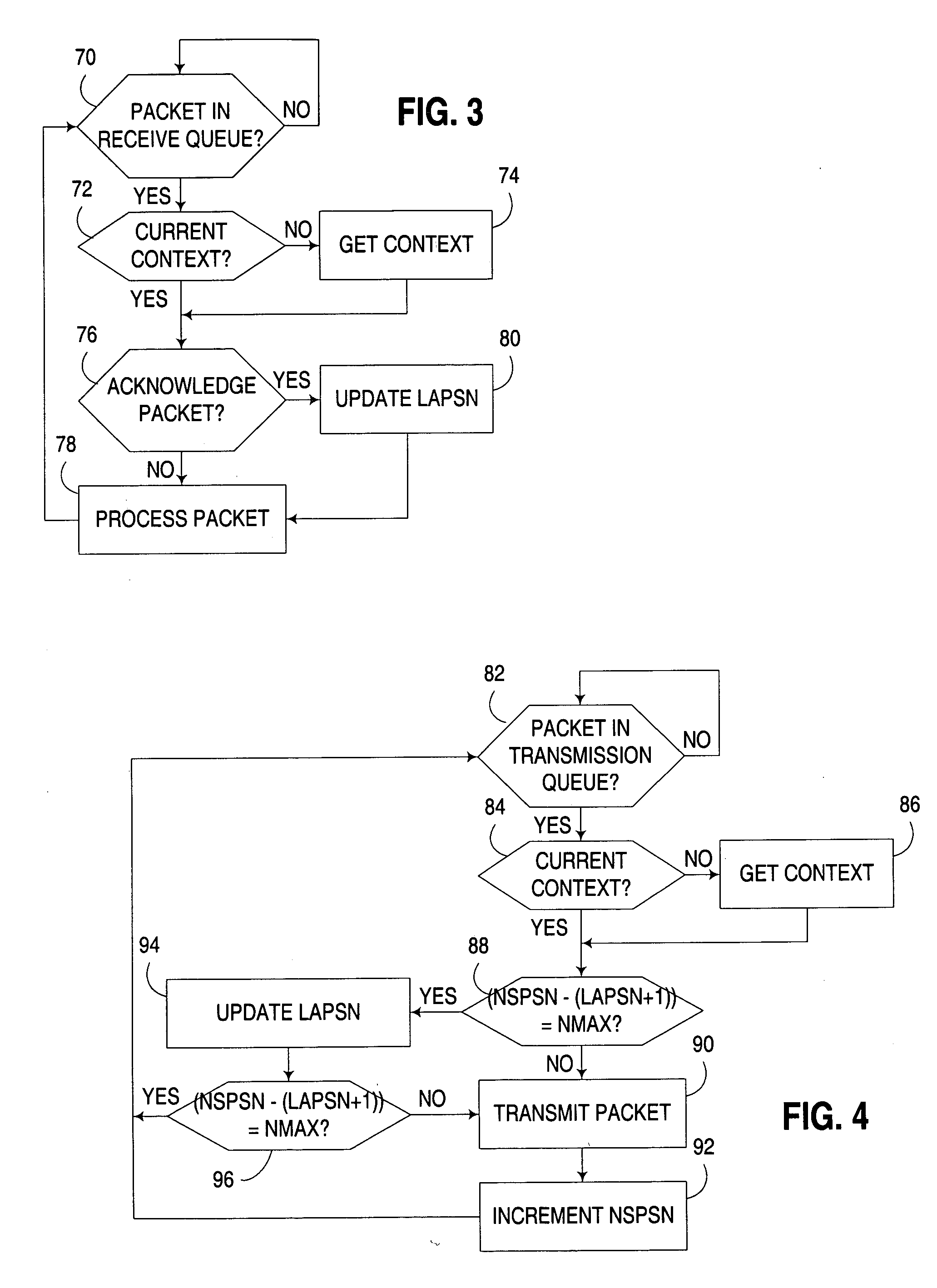

Status: Inactive | Publication: US20070083715A1 | Tags: Little and no latency, Little and latency, Memory systems, Shared memory architecture, Communication interface

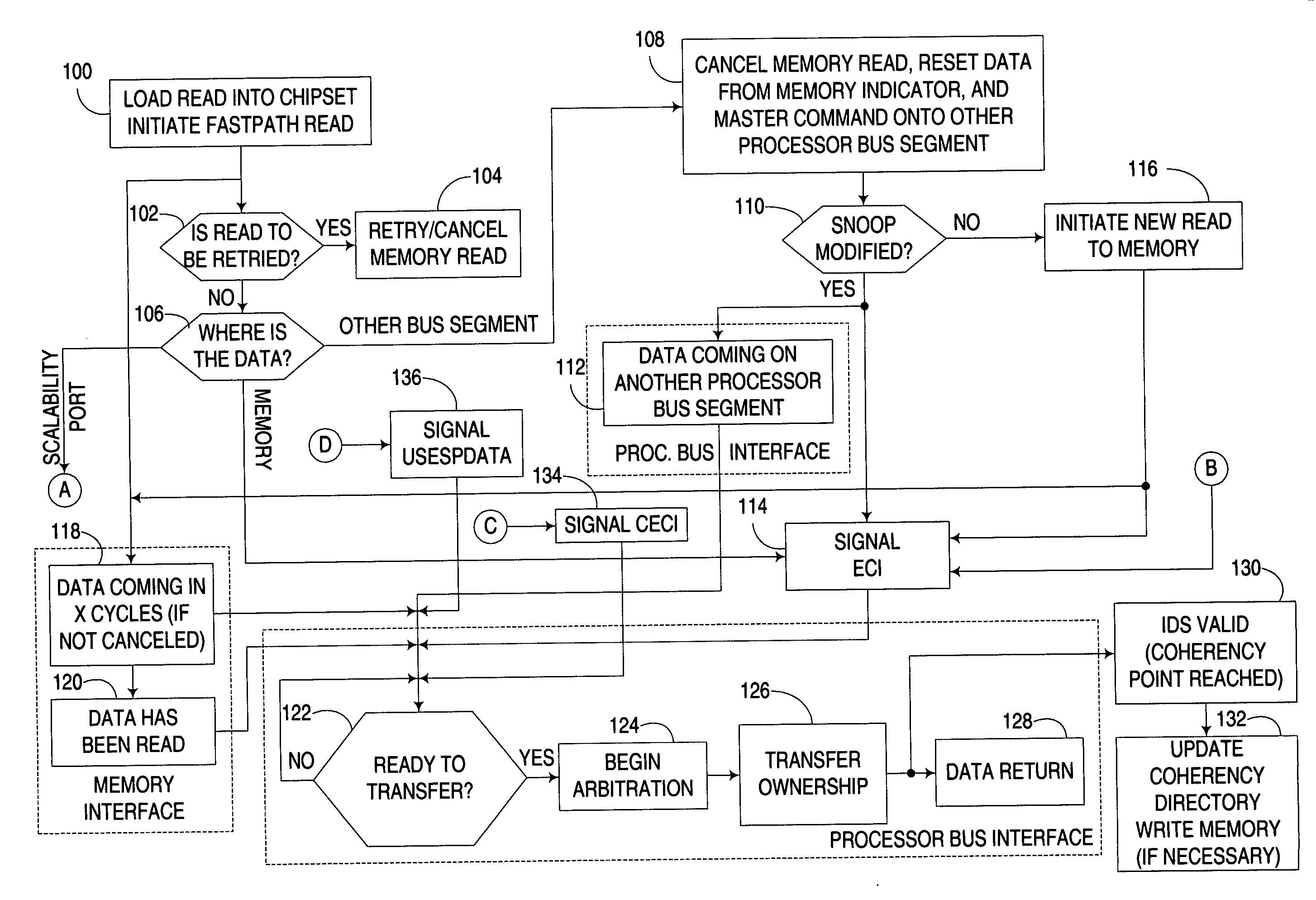

An early return indication is used to notify a first communications interface, prior to a response being received from any of a plurality of sources coupled to a second communications interface, that the return data can be used by the first communications interface when it is received thereby from a source of the return data. By doing so, the first communications interface can often prepare for forwarding the return data over its associated communication link such that the data can be forwarded with little or no latency once the data is retrieved from its source, and may be able to initiate the return of data over the communication link prior to all responses being received from the other sources. The early return indication may also serve as an early coherency indication in that the first communications interface is no longer required to wait for updating of a coherency directory to complete prior to forwarding the return data over the communication link.

Owner:IBM CORP

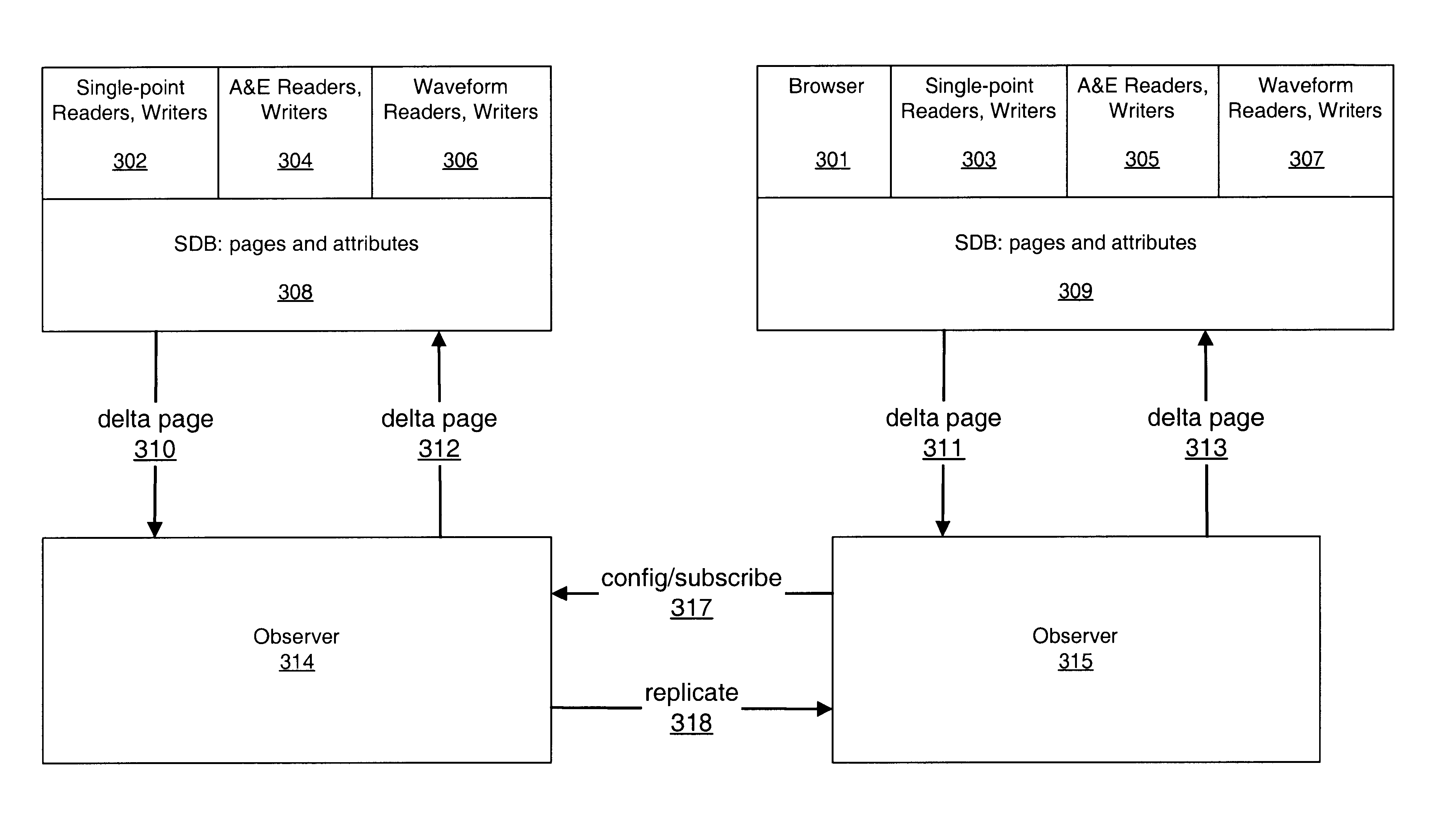

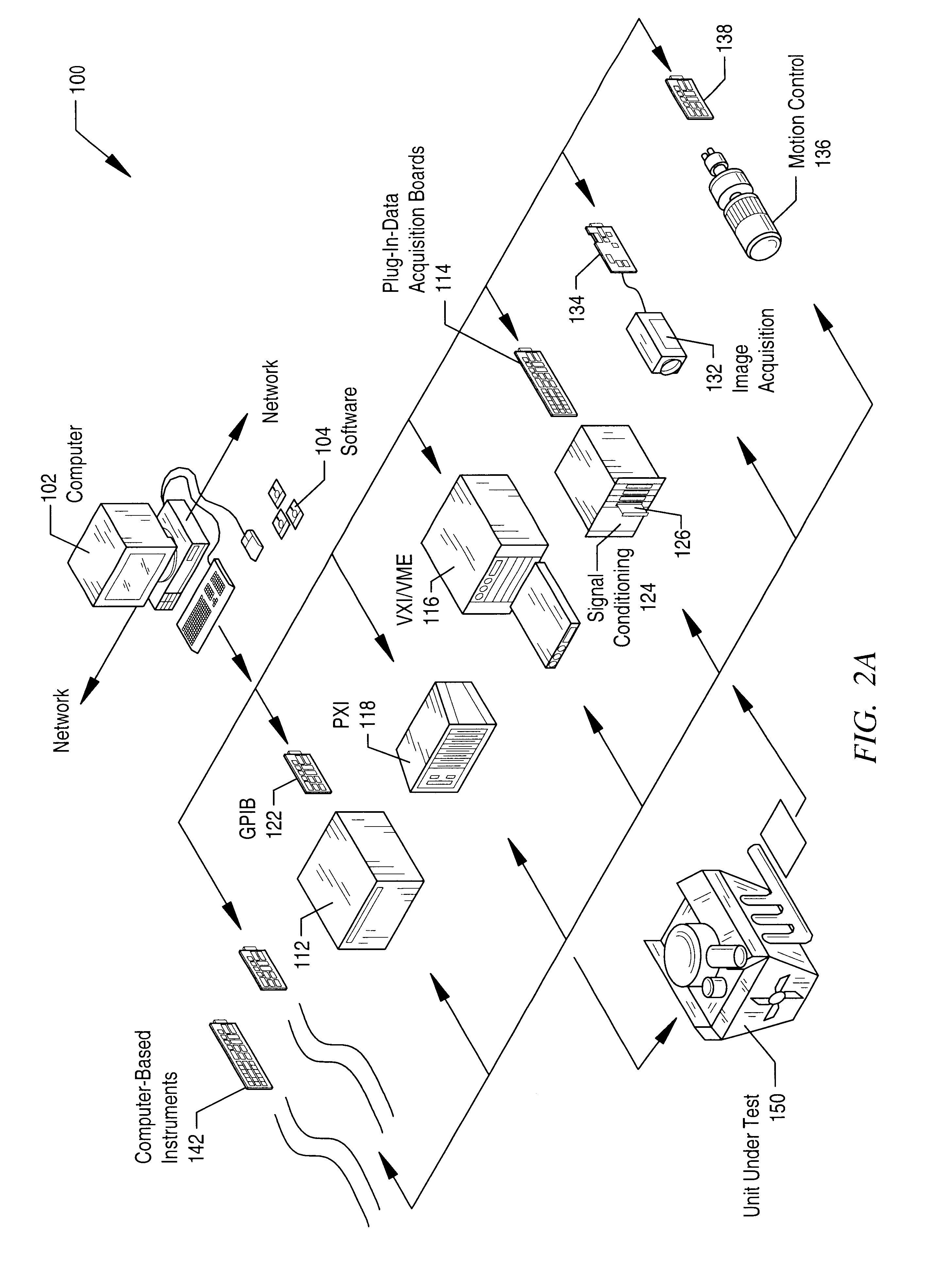

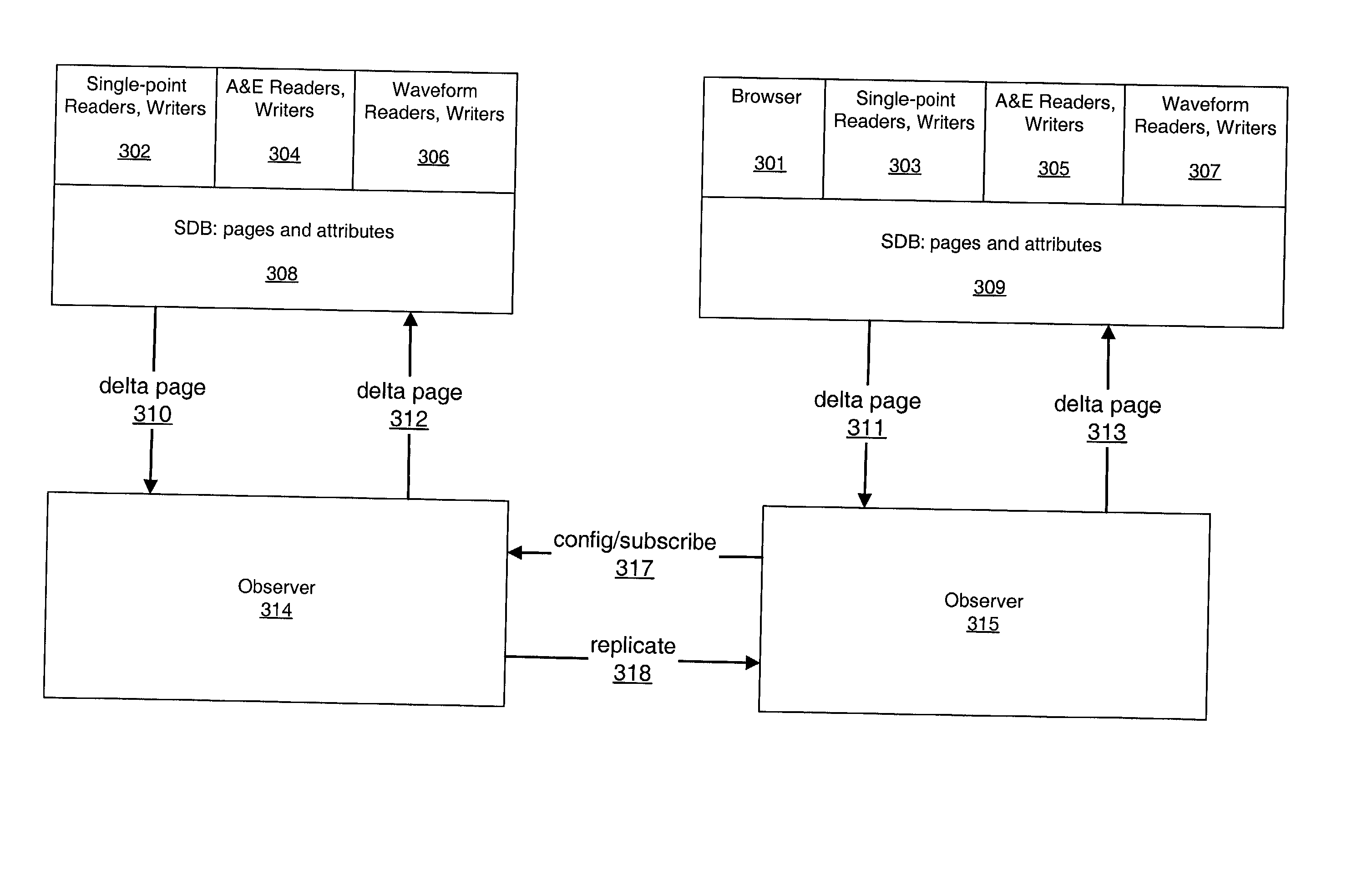

System and method for a shared memory architecture for high speed logging and trending

Status: Inactive | Publication: US6745175B2 | Tags: Data processing applications, Hardware monitoring, Shared memory architecture, Computerized system

An improved method and system for high speed logging and trending of data. Logging of data may include: receiving data; and storing the data, and an index to the data, in a shared memory location coupled to a database. Trending of data may include: retrieving requested data from the shared memory location using the index of the requested data, and displaying the retrieved data. User configurable frequencies may be provided for various actions on the data. The data may be measurement data, including a stream of data comprising a plurality of entries. The logging and trending may be: (1) performed substantially concurrently; (2) implemented using shared memory templates. The database may reside on a first computer system, and the logging and trending may be performed on the first computer system or on a second computer system coupled to the first computer system via a network.

Owner:NATIONAL INSTRUMENTS
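
A minimal sketch of logging measurement data together with an index in shared memory, using Python's `multiprocessing.shared_memory`. The fixed layout (a 64-bit entry count followed by a ring of float64 samples) and the class name `SharedLog` are assumptions for illustration. The sketch supports a single writer; another process could attach to the same block by passing its name (`log.shm.name`).

```python
import struct
from multiprocessing import shared_memory
from typing import Optional

# Hypothetical layout: [count: u64][entry 0: f64][entry 1: f64]...
HEADER = struct.Struct("<Q")
ENTRY = struct.Struct("<d")


class SharedLog:
    """Ring buffer of measurements stored in a named shared-memory block."""

    def __init__(self, capacity: int, name: Optional[str] = None):
        self.capacity = capacity
        if name is None:
            size = HEADER.size + ENTRY.size * capacity
            self.shm = shared_memory.SharedMemory(create=True, size=size)
            HEADER.pack_into(self.shm.buf, 0, 0)  # no entries yet
        else:
            # Attach to a block created elsewhere; capacity must match.
            self.shm = shared_memory.SharedMemory(name=name)

    def _count(self) -> int:
        return HEADER.unpack_from(self.shm.buf, 0)[0]

    def log(self, value: float) -> int:
        """Logging side: store a value and return its index."""
        index = self._count()
        slot = index % self.capacity
        ENTRY.pack_into(self.shm.buf, HEADER.size + slot * ENTRY.size, value)
        HEADER.pack_into(self.shm.buf, 0, index + 1)  # publish after the write
        return index

    def read(self, index: int) -> float:
        """Trending side: fetch a logged value by index if it is still in the ring."""
        count = self._count()
        if not (max(0, count - self.capacity) <= index < count):
            raise IndexError(index)
        slot = index % self.capacity
        return ENTRY.unpack_from(self.shm.buf, HEADER.size + slot * ENTRY.size)[0]

    def close(self, unlink: bool = False) -> None:
        self.shm.close()
        if unlink:
            self.shm.unlink()  # only the creating side should do this
```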

Early return indication for read exclusive requests in shared memory architecture

Status: Inactive | Publication: US20070061519A1 | Tags: Little and latency, Memory systems, Shared memory architecture, Communication interface

An early return indication is used to notify a first communications interface, prior to a response being received from any of a plurality of sources coupled to a second communications interface, that the return data can be used by the first communications interface when it is received thereby from a source of the return data if the source has an exclusive copy of the return data. By doing so, the first communications interface can often prepare for forwarding the return data over its associated communication link such that the data can be forwarded with little or no latency once the data is retrieved from its source, and may be able to initiate the return of data over the communication link prior to all responses being received from the other sources. The early return indication may also serve as an early coherency indication in that the first communications interface is no longer required to wait for updating of a coherency directory to complete prior to forwarding the return data over the communication link.

Owner:IBM CORP

Early coherency indication for return data in shared memory architecture

Status: Inactive | Publication: US20060143403A1 | Tags: Little and no latency, Lower latency, Memory architecture accessing/allocation, Multiple digital computer combinations, Shared memory architecture, Communication interface

In a shared memory architecture, early coherency indication is used to notify a communications interface, before the data for a memory request is returned and before a coherency directory is updated in response to the memory request, that the return data can be used by the communications interface when it is received thereby from a source of the return data. By doing so, the communications interface can often begin forwarding the return data over its associated communication link with little or no latency once the data is retrieved from its source. In addition, the communications interface is often no longer required to wait for updating of the coherency directory to complete prior to forwarding the return data over the communication link. As such, the overall latency for handling the memory request is typically reduced.

Owner:IBM CORP

Method and system for providing smart memory architecture

A smart memory system preferably includes a memory including one or more memory chips, and a processor including one or more memory processor chips. The processor includes a common address/data/control memory bus that is configured to provide an asynchronous handshaking interface between the memory array and the memory processor. The processor can offload error data from the memory chip for analysis, and can store poor-retention bit address information for memory refreshing in a non-volatile error retention memory. Program logic can also be included for memory address re-configuration. Power management logic can also be included, which can have a process-voltage-temperature compensation voltage generator for providing stable and constant read currents. An asynchronous handshaking interface is provided between the memory array and the memory processor. Write error tagging and write verification circuits can also be included.

Owner:SAMSUNG ELECTRONICS CO LTD

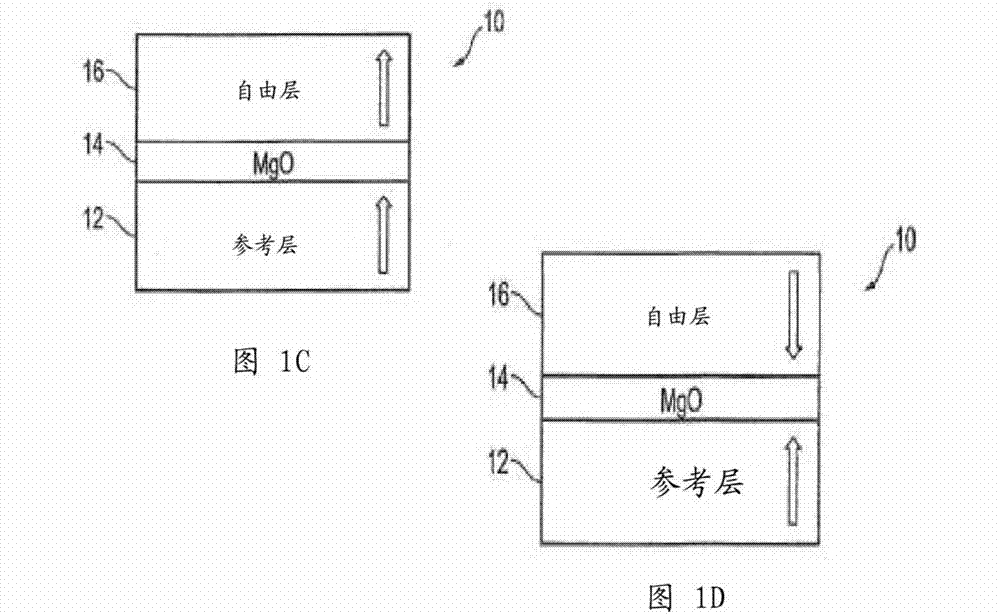

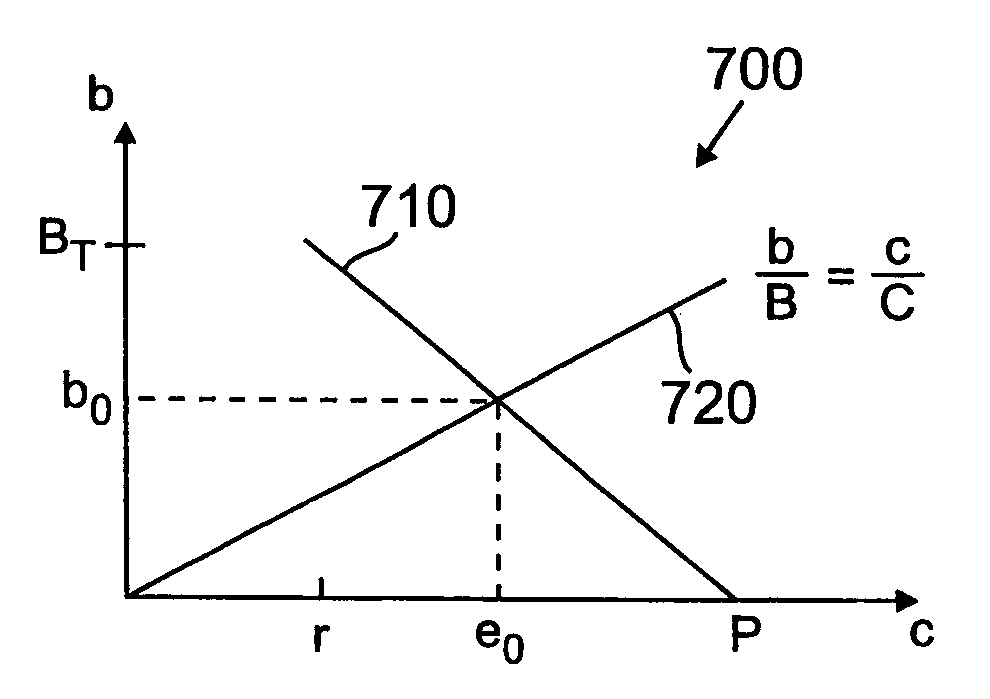

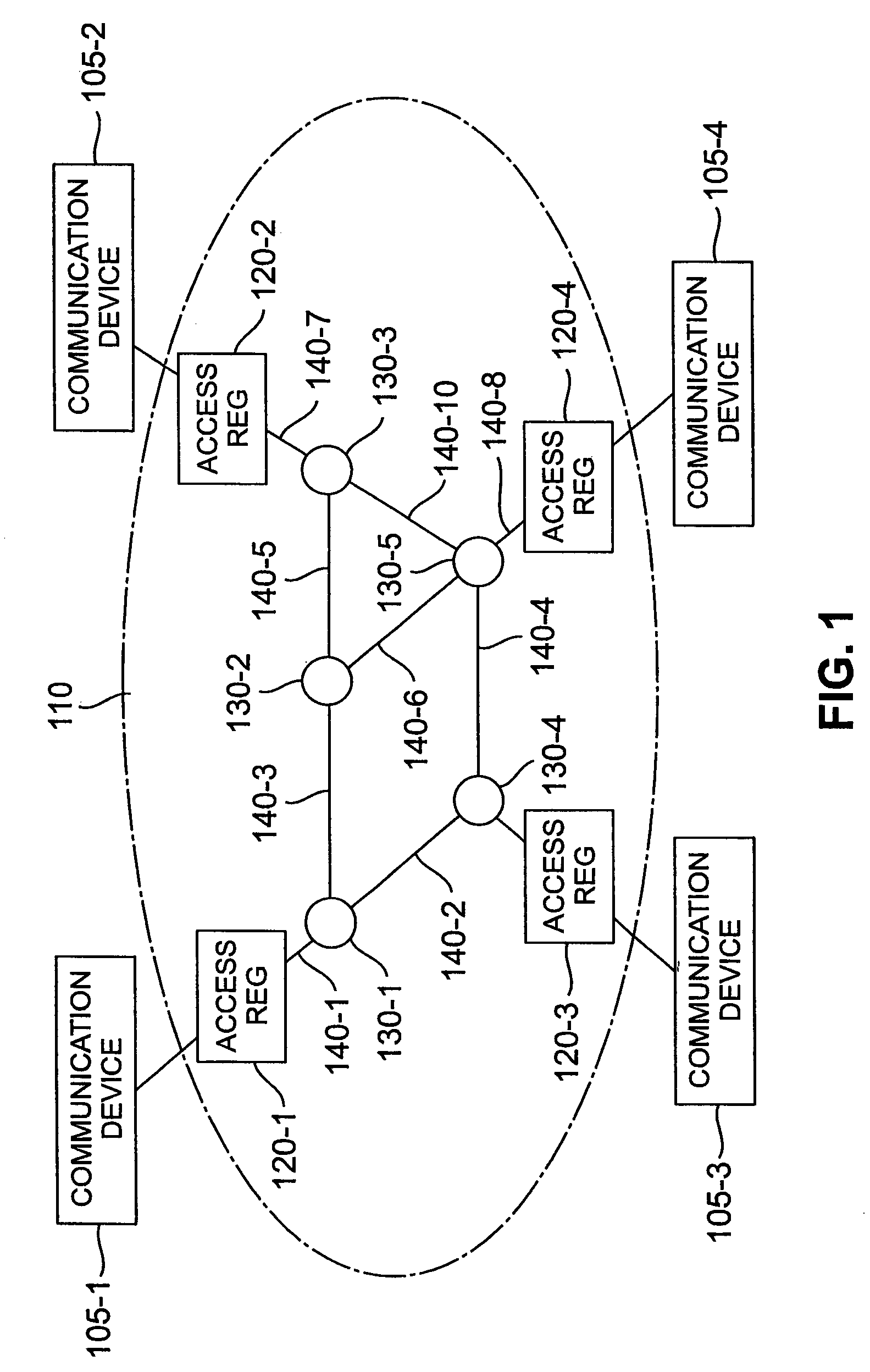

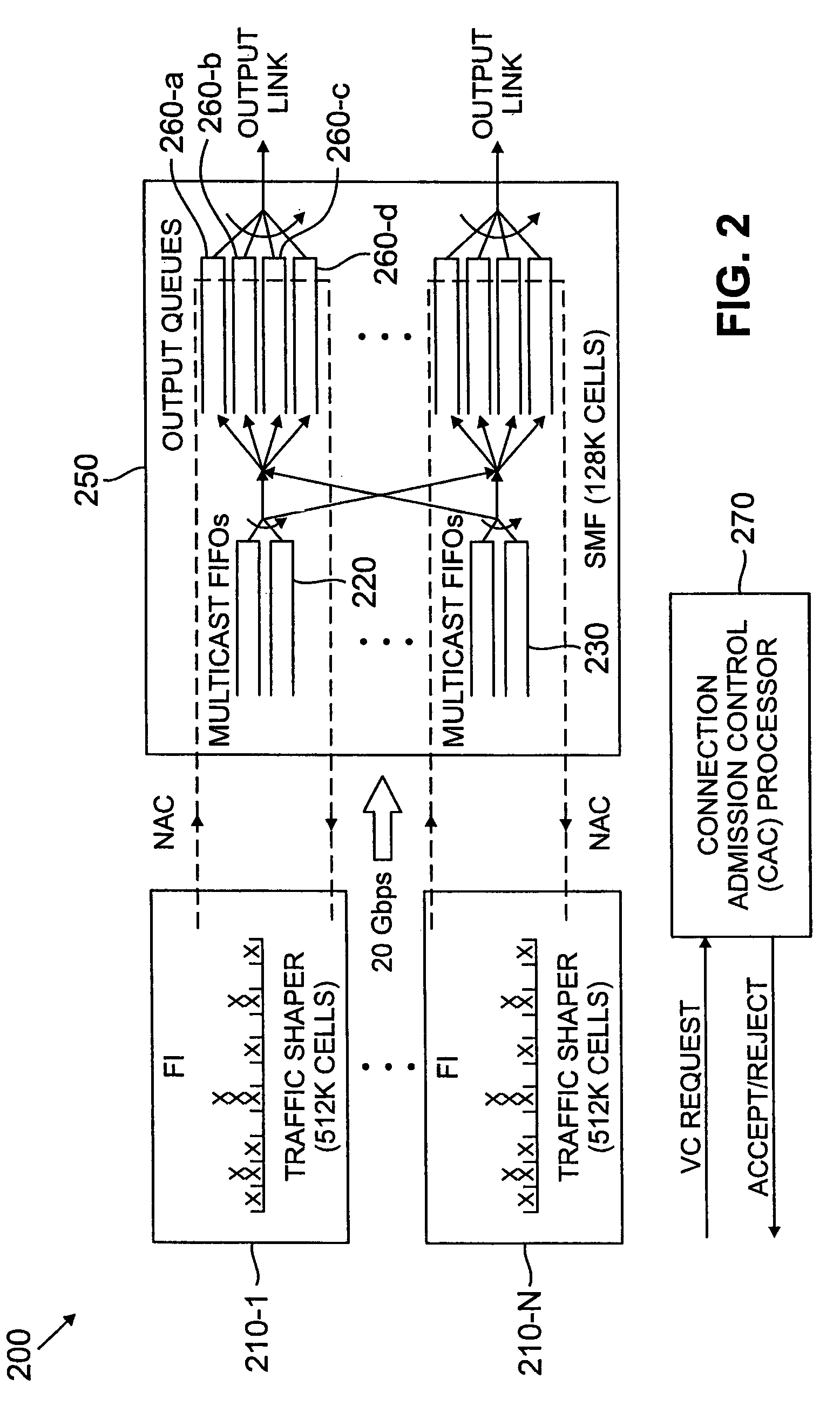

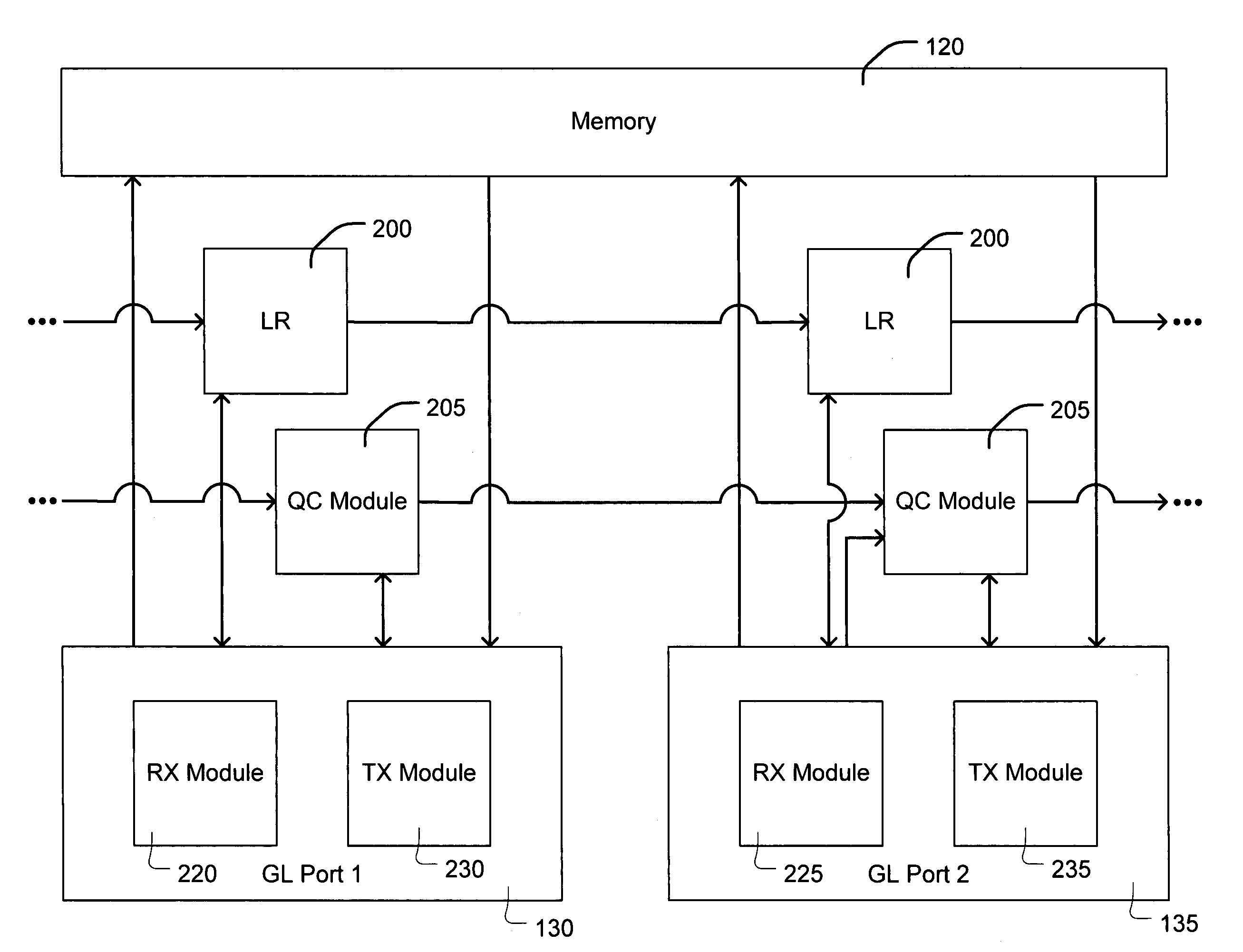

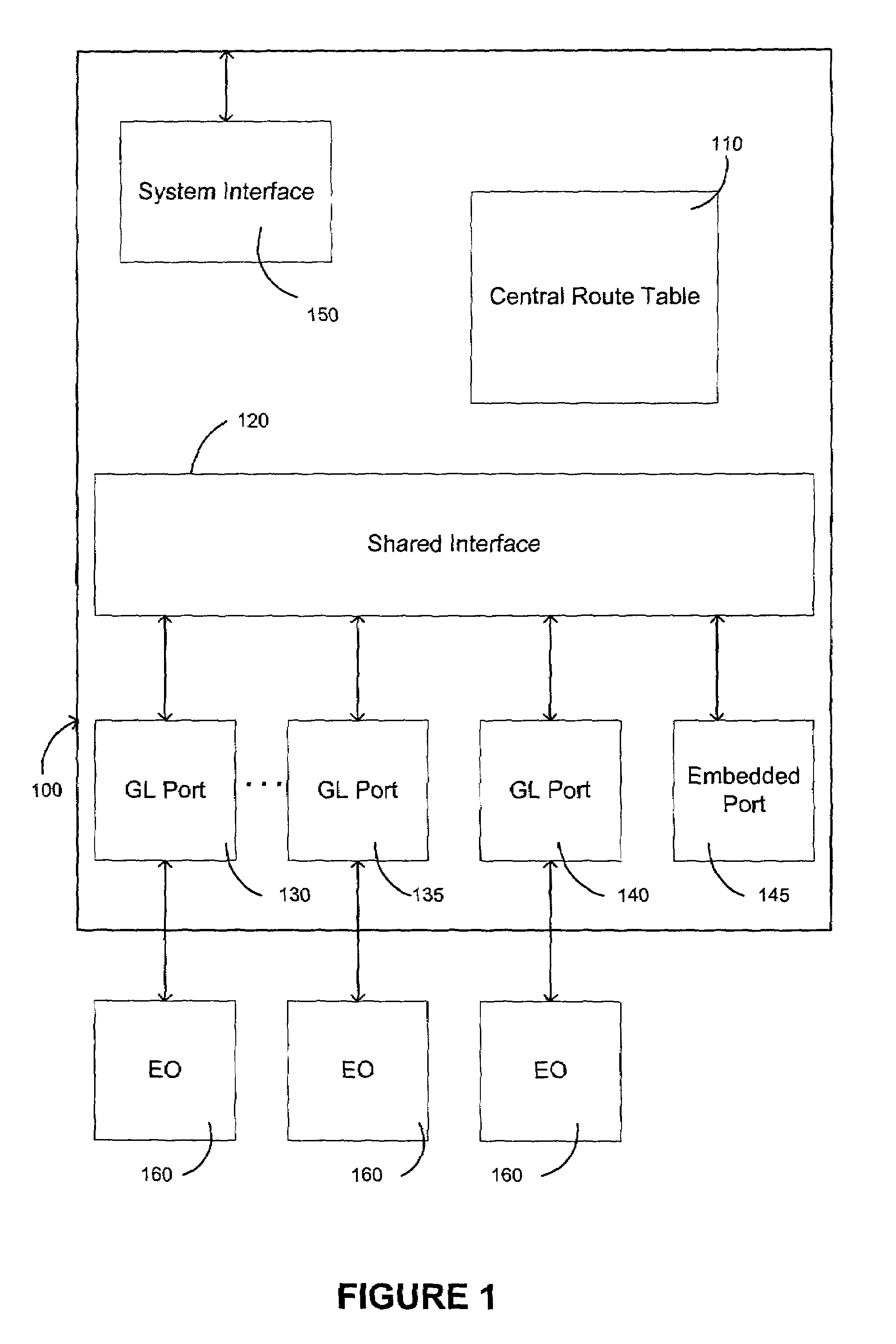

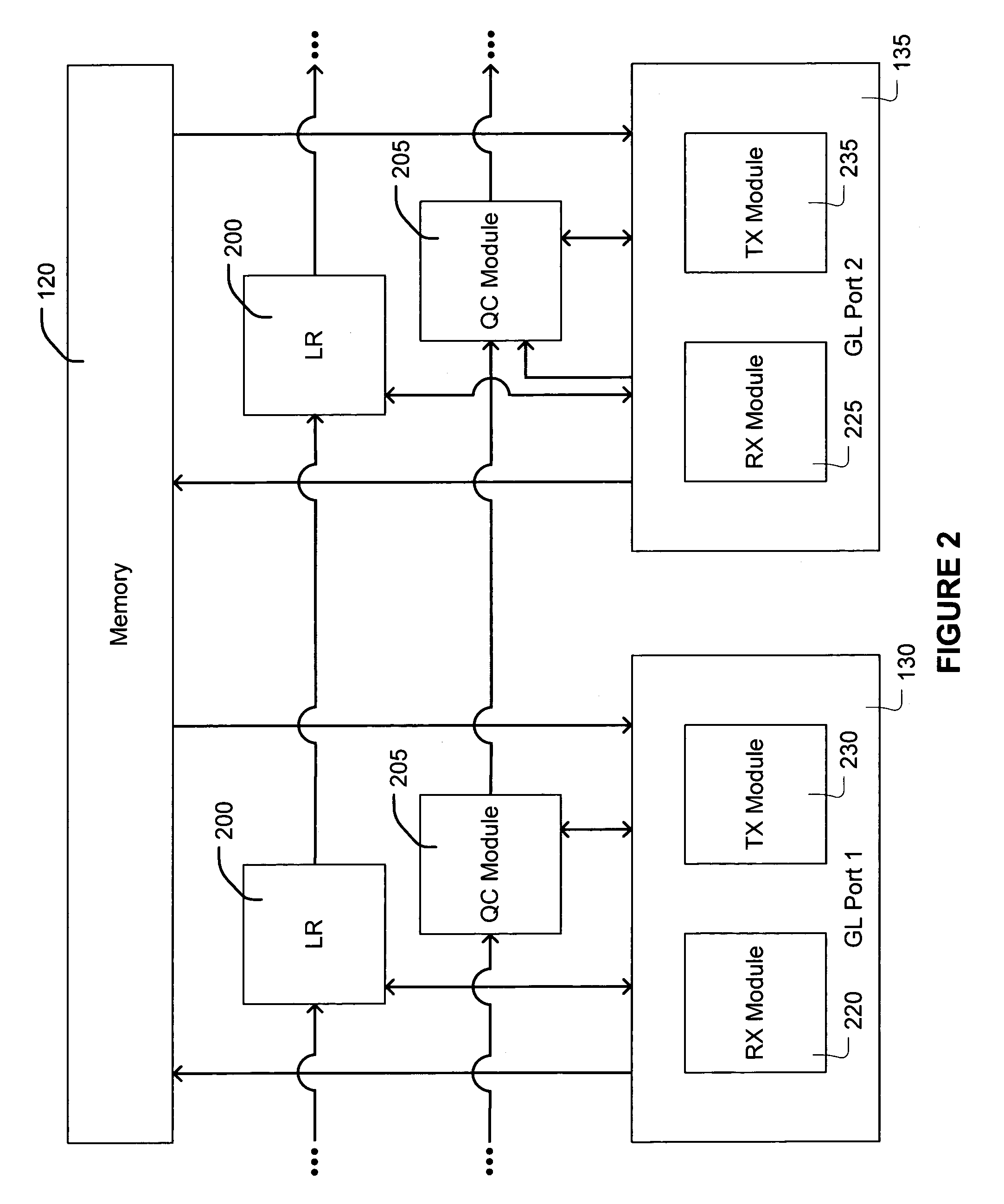

Connection admission control and routing by allocating resources in network nodes

A method and apparatus regulate the admission control of, and requests for routing of, virtual circuits in a network by determining network resource requirements for the virtual circuits. The method is generalized for the shared-memory architecture of a network node, to allocate a buffer size value, $B_i$, to an output port, $i$, for use in the effective bandwidth computation. A static allocation policy is utilized to allocate the available buffer, $B_{\mathrm{SMF}}$, to each output port, $i$. The allocated buffer is determined by selecting a value from a range having a lower bound obtained by partitioning the buffer, $B_{\mathrm{SMF}}$, to evenly divide the buffer space among all the output ports, such that $\sum_i B_i = B_{\mathrm{SMF}}$, and having an upper bound obtained by using the total available buffer, $B_{\mathrm{SMF}}$, for each port in its computation of effective bandwidth. The network resource requirements are based on a set of parameters used to control the flow of information from a communications device onto the virtual circuit. The requirements for network resources typically include buffer space requirements in network nodes and bandwidth requirements in network links, and the parameters used to control the flow of information are those associated with an access regulator, such as a leaky bucket regulator (LBR). The network resource determination is made both for the case where lossless performance in the network is required and for the case where statistical multiplexing with limited loss is allowed.

Owner:WSOU INVESTMENTS LLC
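
The abstract fixes only the two bounds for a port's buffer allocation: the even split of B_SMF across N output ports, and the full B_SMF. The helper below picks a point in that range with a blend factor `alpha`; the linear blend and the function name `allocate_port_buffer` are illustrative assumptions, not the patented selection rule.

```python
def allocate_port_buffer(total_buffer: float, n_ports: int, alpha: float) -> float:
    """Return a per-port buffer value between B_SMF / N and B_SMF.

    alpha = 0 gives the static even partition (per-port values sum to B_SMF);
    alpha = 1 lets every port assume the whole shared buffer.
    """
    if n_ports <= 0 or not 0.0 <= alpha <= 1.0:
        raise ValueError("need n_ports > 0 and 0 <= alpha <= 1")
    lower = total_buffer / n_ports  # even partition of the shared buffer
    upper = total_buffer            # whole shared buffer for this port
    return lower + alpha * (upper - lower)
```

Values near the lower bound are conservative, since the per-port allocations can never oversubscribe the shared buffer; values near the upper bound exploit the fact that ports rarely fill the shared memory at the same time.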

System and method for a shared memory architecture for high speed logging and trending

Status: Inactive | Publication: US20030028552A1 | Tags: Data processing applications, Hardware monitoring, Shared memory architecture, Computerized system

An improved method and system for high speed logging and trending of data. Logging of data may include: receiving data; and storing the data, and an index to the data, in a shared memory location coupled to a database. Trending of data may include: retrieving requested data from the shared memory location using the index of the requested data, and displaying the retrieved data. User configurable frequencies may be provided for various actions on the data. The data may be measurement data, including a stream of data comprising a plurality of entries. The logging and trending may be: (1) performed substantially concurrently; (2) implemented using shared memory templates. The database may reside on a first computer system, and the logging and trending may be performed on the first computer system or on a second computer system coupled to the first computer system via a network.

Owner:NATIONAL INSTRUMENTS

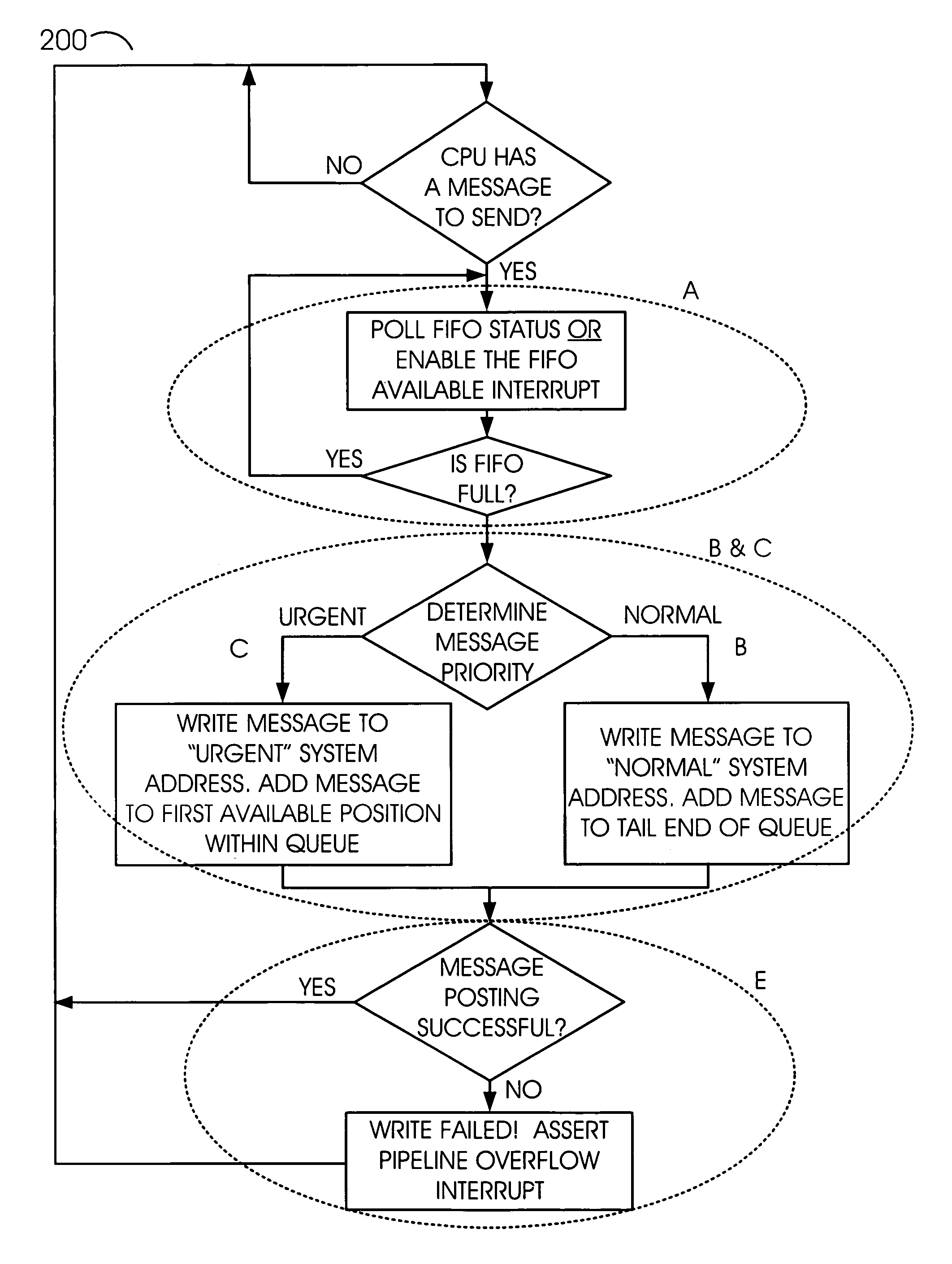

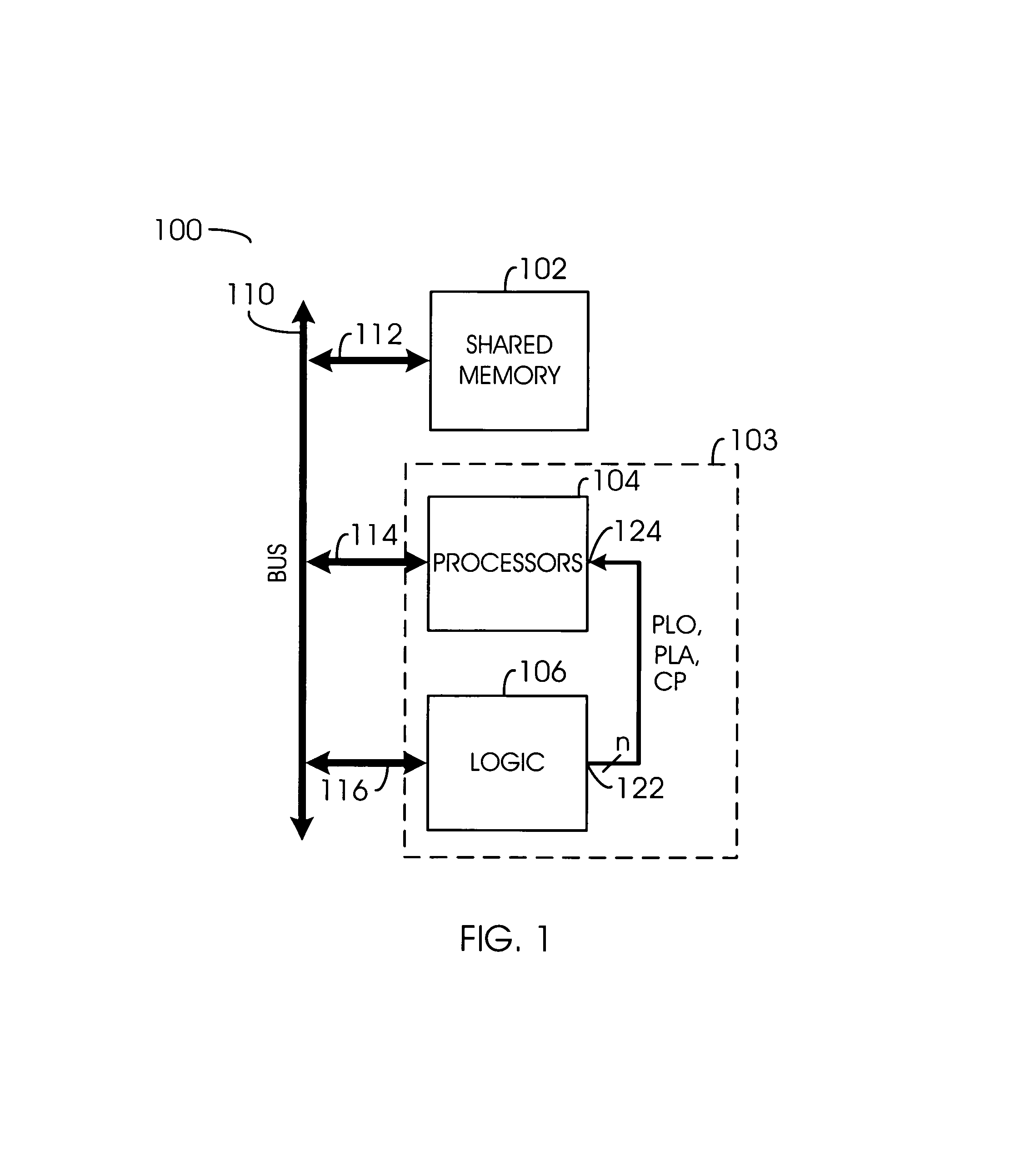

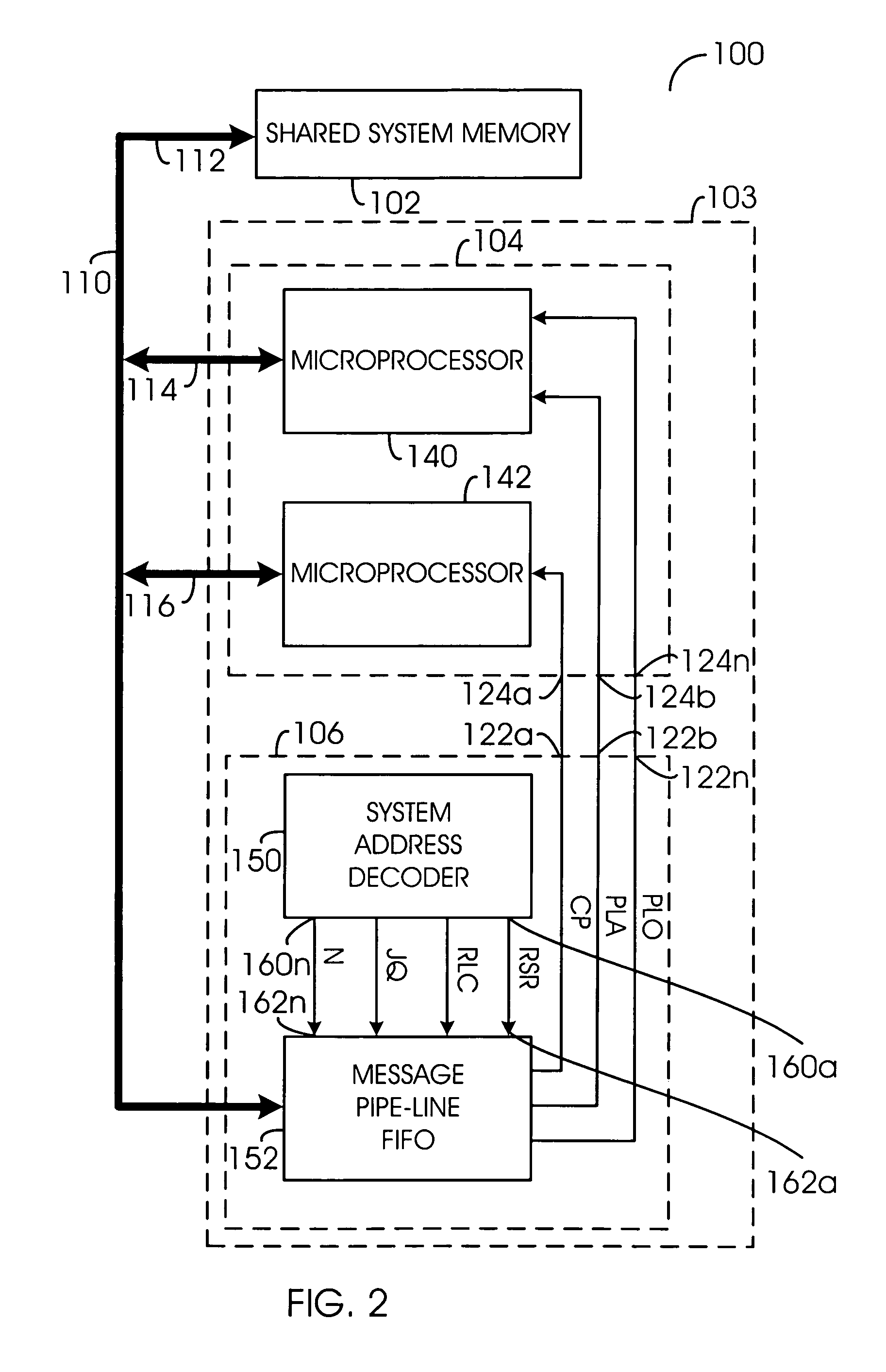

Method for multiprocessor communication within a shared memory architecture

Status: Inactive | Publication: US7035908B1 | Tags: Digital computer details, Multiprogramming arrangements, Shared memory architecture, Multi processor

An apparatus comprising a shared memory and a multiprocessor logic circuit. The shared memory may be configured to store data. The multiprocessor logic circuit may comprise a plurality of processors and a message circuit. The message circuit may be configured to pass messages between the processors.

Owner:AVAGO TECH INT SALES PTE LTD

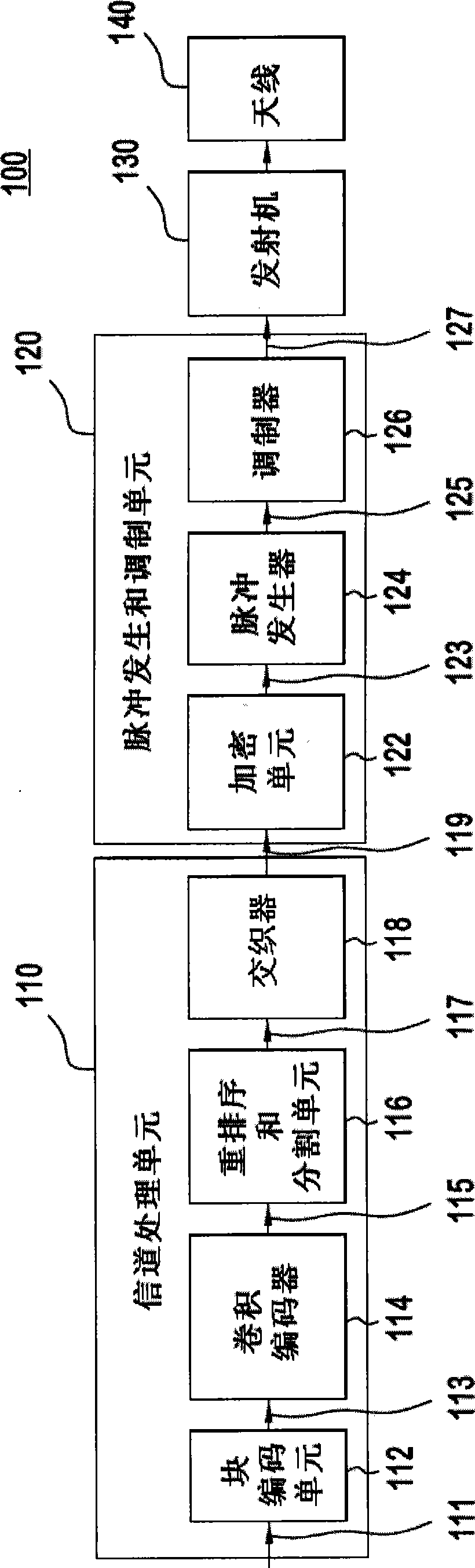

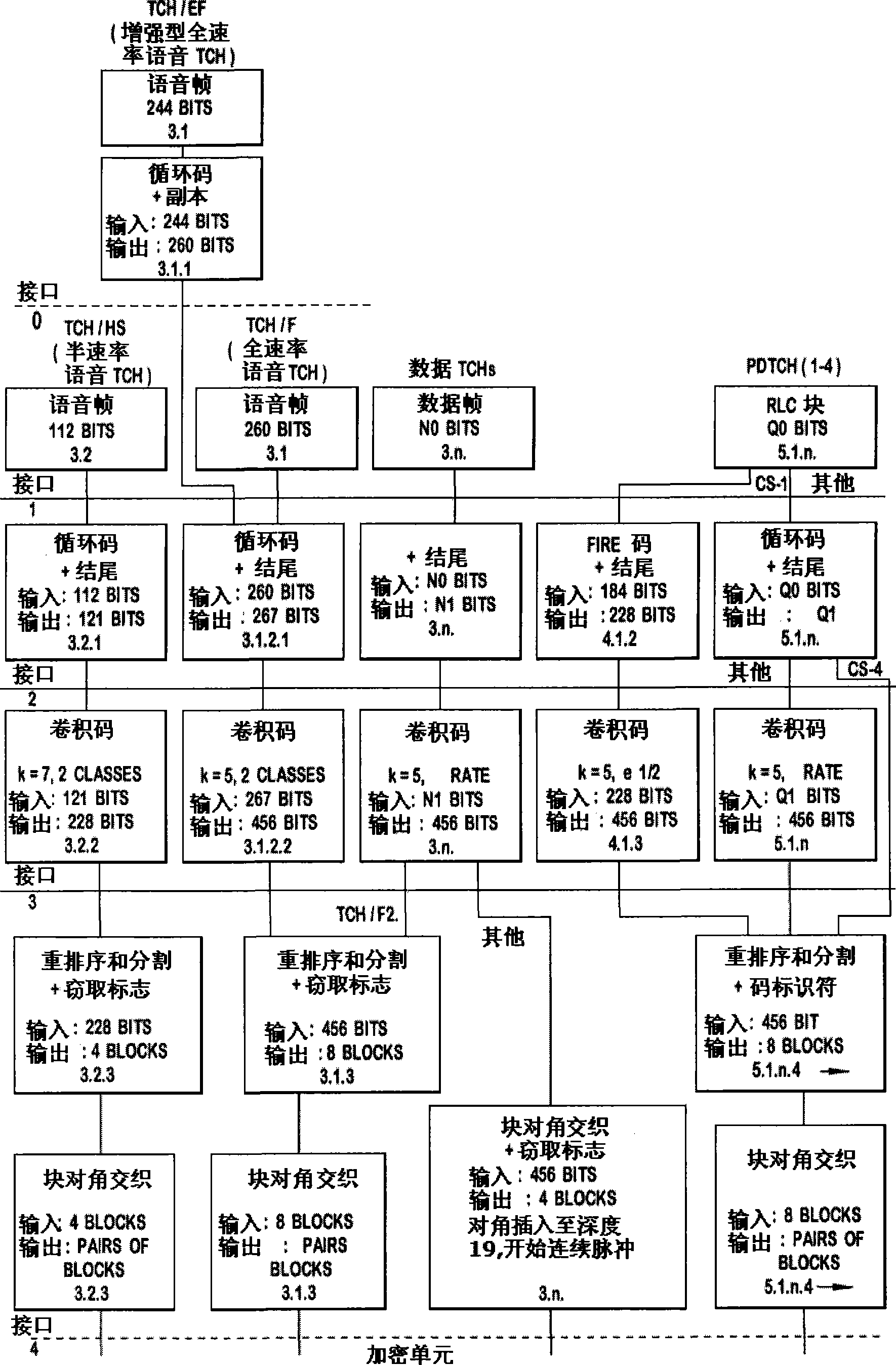

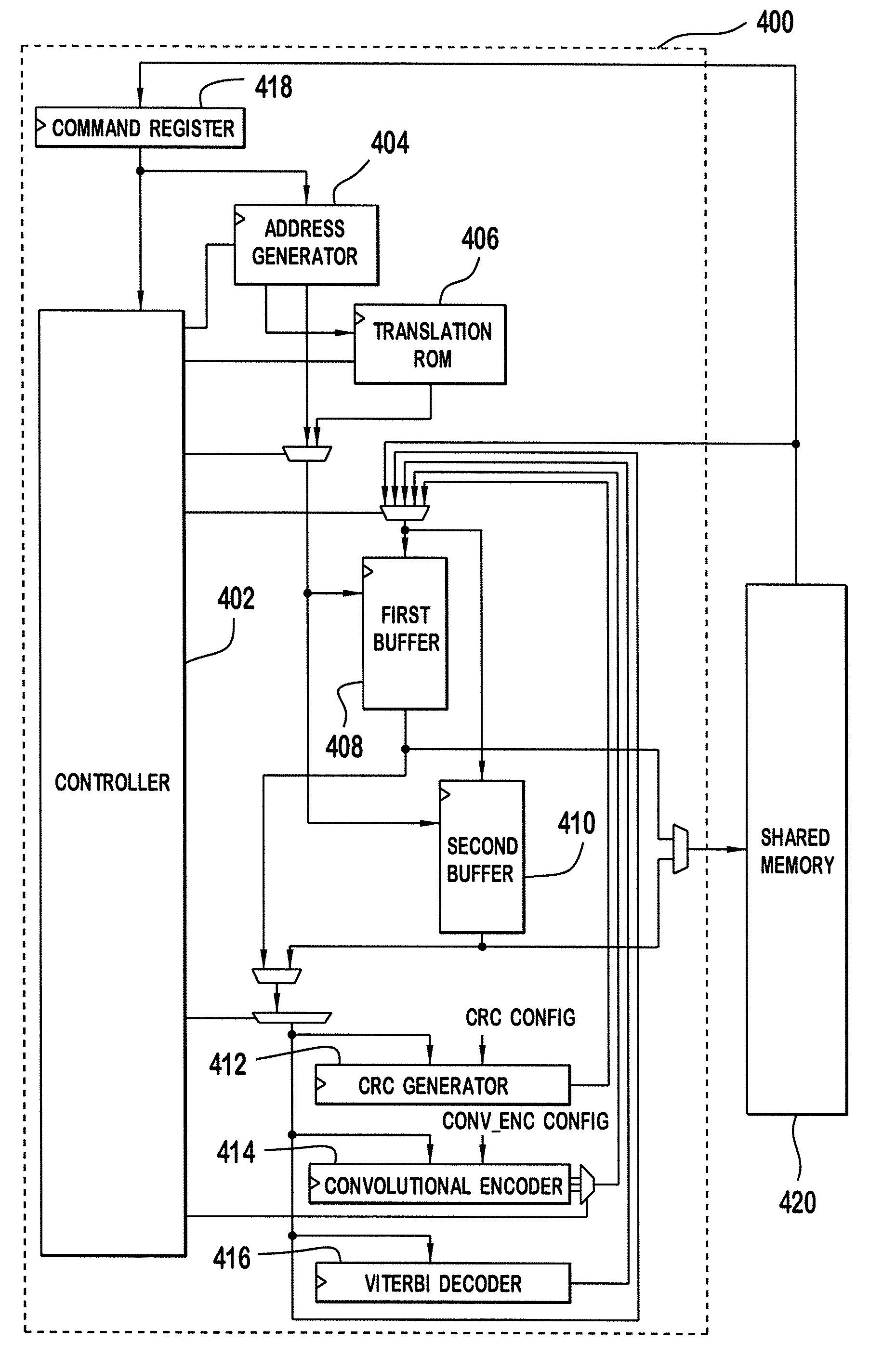

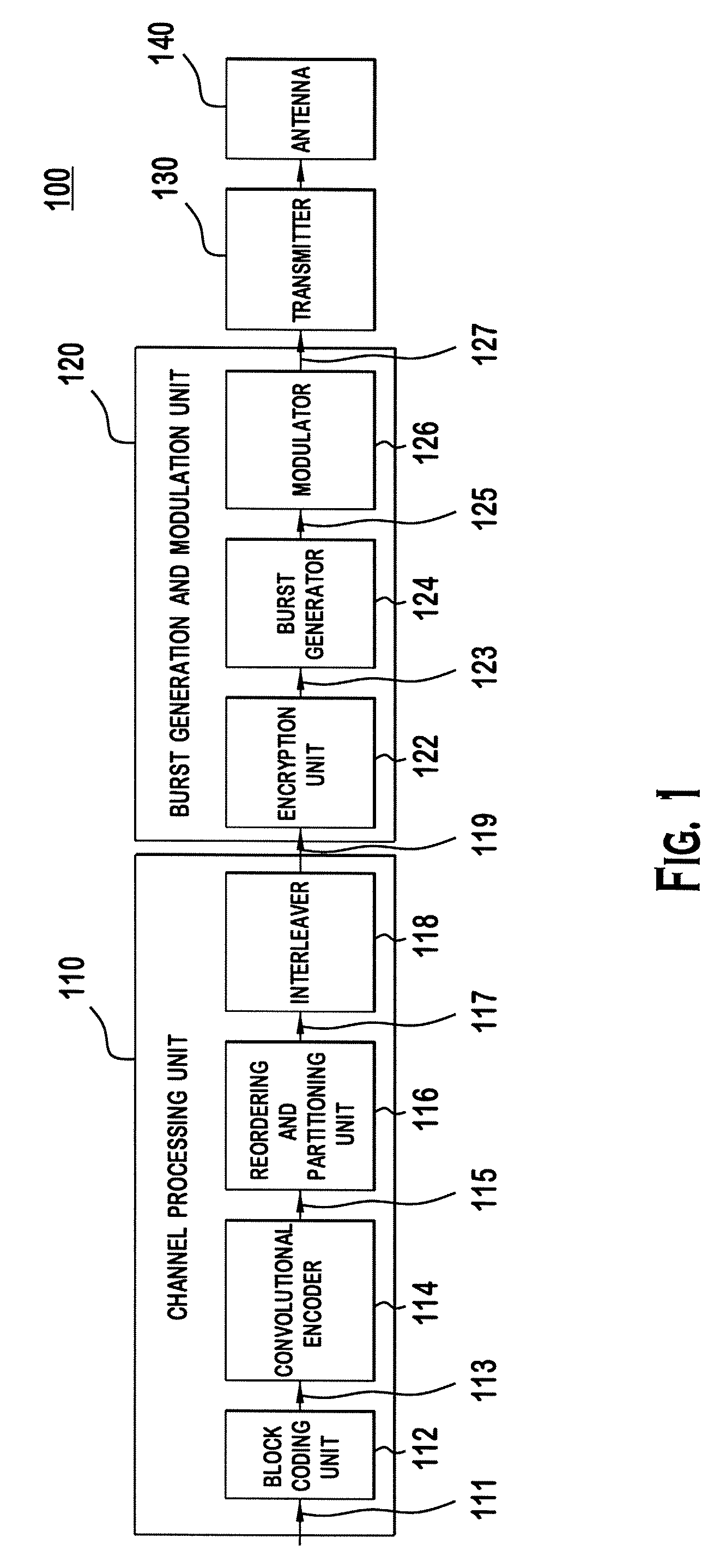

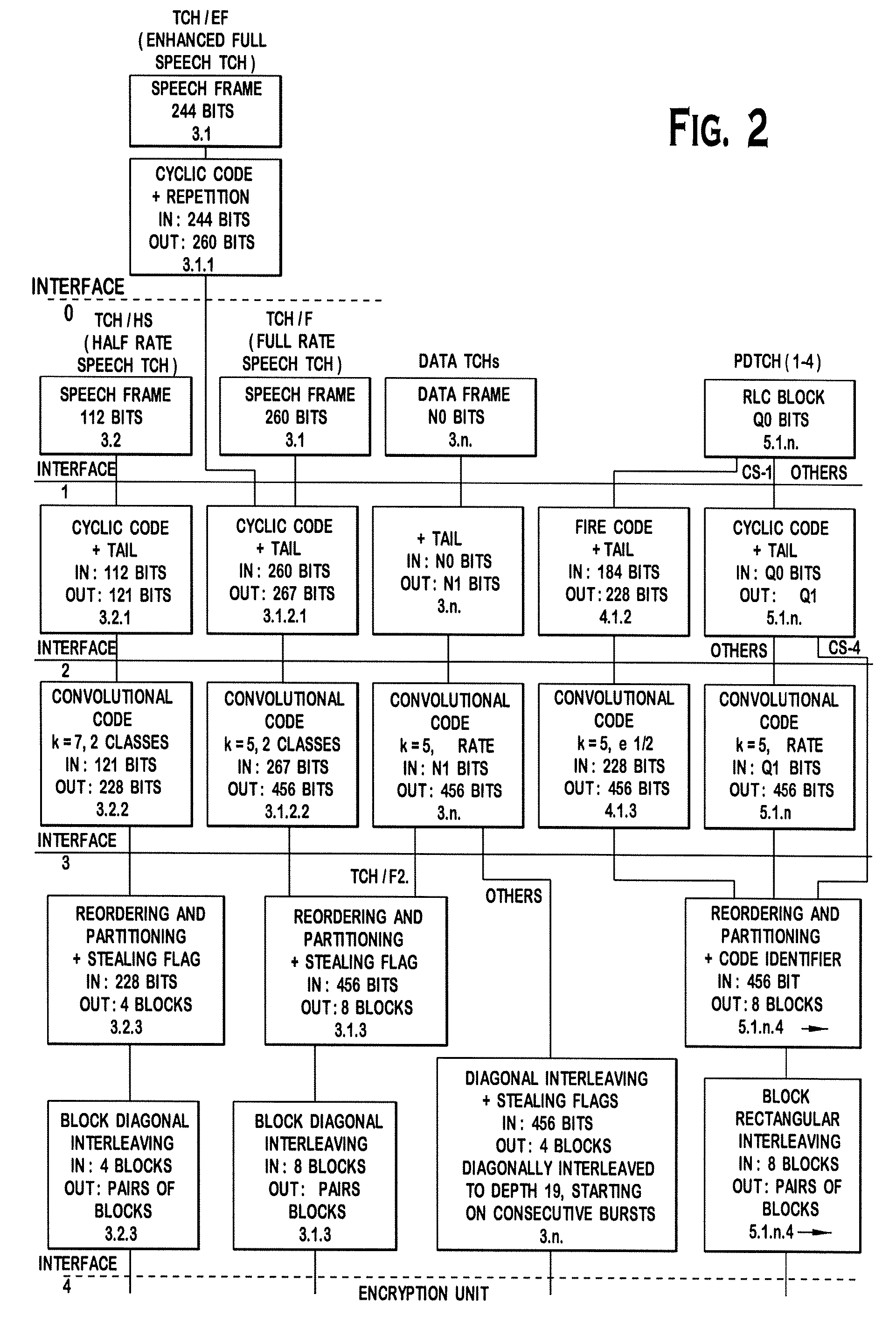

Symbol rate hardware accelerator

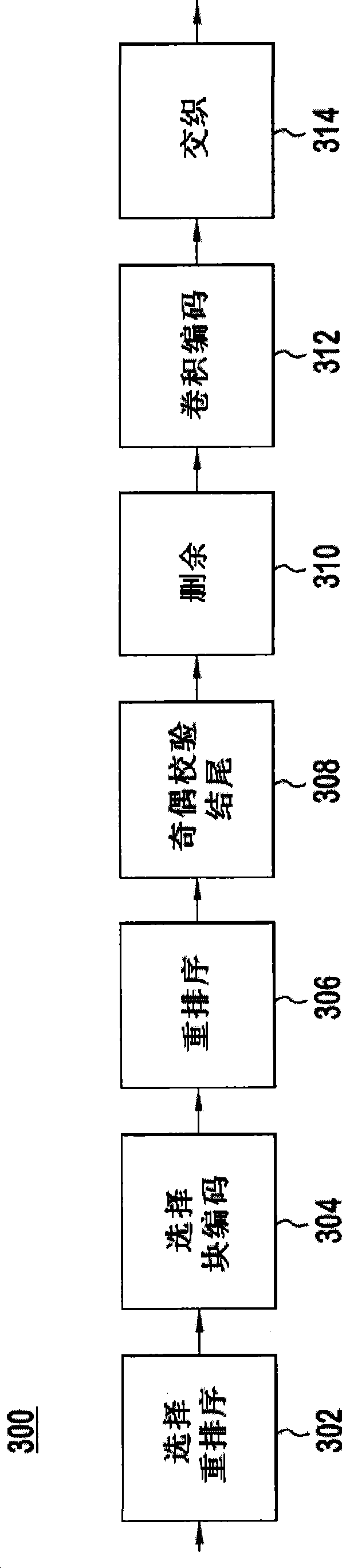

Status: Inactive | Publication: CN101490994A | Tags: Error detection/correction, Forward error control use, Shared memory architecture, Address generator

The invention relates to a hardware accelerator including a first buffer, a second buffer, address generator(s), a translation read-only memory (ROM), a cyclic redundancy check (CRC) generator, a convolutional encoder and a controller. The first and second buffers store information bits. The address generator(s) generate(s) an address for accessing the first buffer, the second buffer and a shared memory architecture (SMA). The translation ROM is used in generating a translated address for accessing the first buffer and the second buffer. The controller sets parameters for the CRC generator, the convolutional encoder and the address generator, and performs a predefined sequence of control commands for channel processing, such as reordering, block coding, parity tailing, puncturing, convolutional encoding, and interleaving, on the information bits by manipulating the information bits while moving the information bits among the first buffer, the second buffer, the SMA, the CRC generator, and the convolutional encoder.

Owner:INTERDIGITAL TECH CORP
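
To make one of the channel-processing stages concrete, here is a rate-1/2 feed-forward convolutional encoder with a zero tail. The abstract does not give the accelerator's polynomials; the textbook constraint-length-3 generators (7, 5) in octal are used as an assumption, and `conv_encode` is a hypothetical name.

```python
def conv_encode(bits, g1=0b111, g2=0b101, k=3, tail=True):
    """Rate-1/2 convolutional encoder: two output bits per input bit.

    Generator defaults are the common (7, 5) octal pair with constraint
    length k = 3; they are illustrative, not taken from the patent.
    """
    state = 0  # the previous k-1 input bits, most recent in the higher bit
    out = []
    data = list(bits) + ([0] * (k - 1) if tail else [])  # zero tail flushes the register
    for b in data:
        reg = (b << (k - 1)) | state              # newest bit enters at the MSB
        out.append(bin(reg & g1).count("1") % 2)  # parity of taps for generator 1
        out.append(bin(reg & g2).count("1") % 2)  # parity of taps for generator 2
        state = reg >> 1                          # shift: drop the oldest bit
    return out
```

Encoding `[1, 0, 1, 1]` yields the output pairs 11 10 00 01 01 11, the standard result for this code; the two tail pairs return the encoder to the all-zero state.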

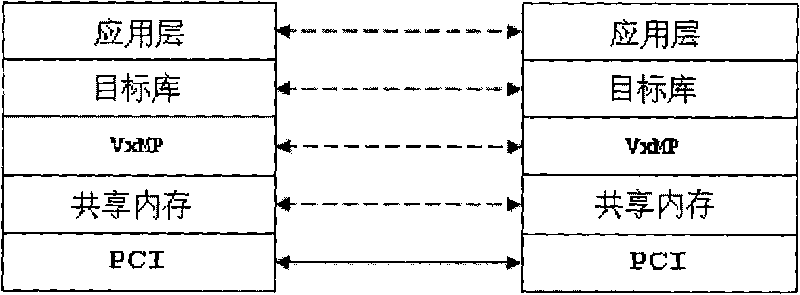

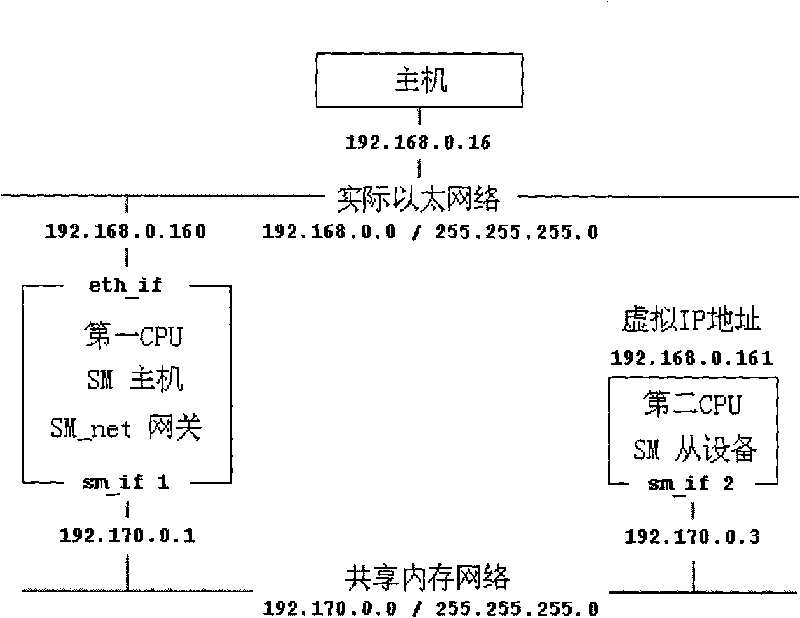

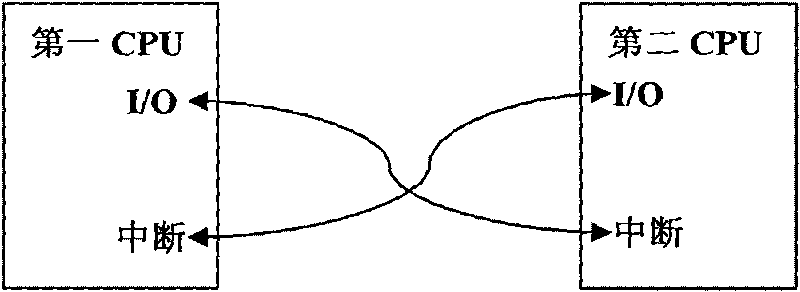

Shared memory architecture-based multiprocessor communication device and method thereof

Status: Inactive | Publication: CN101739380A | Tags: Data transmission is completely transparent, Digital computer details, Electric digital data processing, Operational system, Space mapping

The invention relates to a shared memory architecture-based multiprocessor communication device and a method thereof. The device comprises a first CPU and a second CPU, wherein a section of memory space on the first CPU is mapped to the PCI address space of the second CPU to form a linear shared memory space that both CPUs can access, enabling information sharing. An address space for transmitting Ethernet messages between the first CPU and the second CPU, and an address space for sharing objects among multiple tasks on the two CPUs, are arranged in the shared memory space to achieve information transfer. Drawing on the characteristics of a conventional tightly coupled mode and combining them with a commercial operating system, the device establishes a shared memory-based communication mechanism between the two CPUs and enables parallel processing of complex data while performing system control.

Owner:CHINESE AERONAUTICAL RADIO ELECTRONICS RES INST

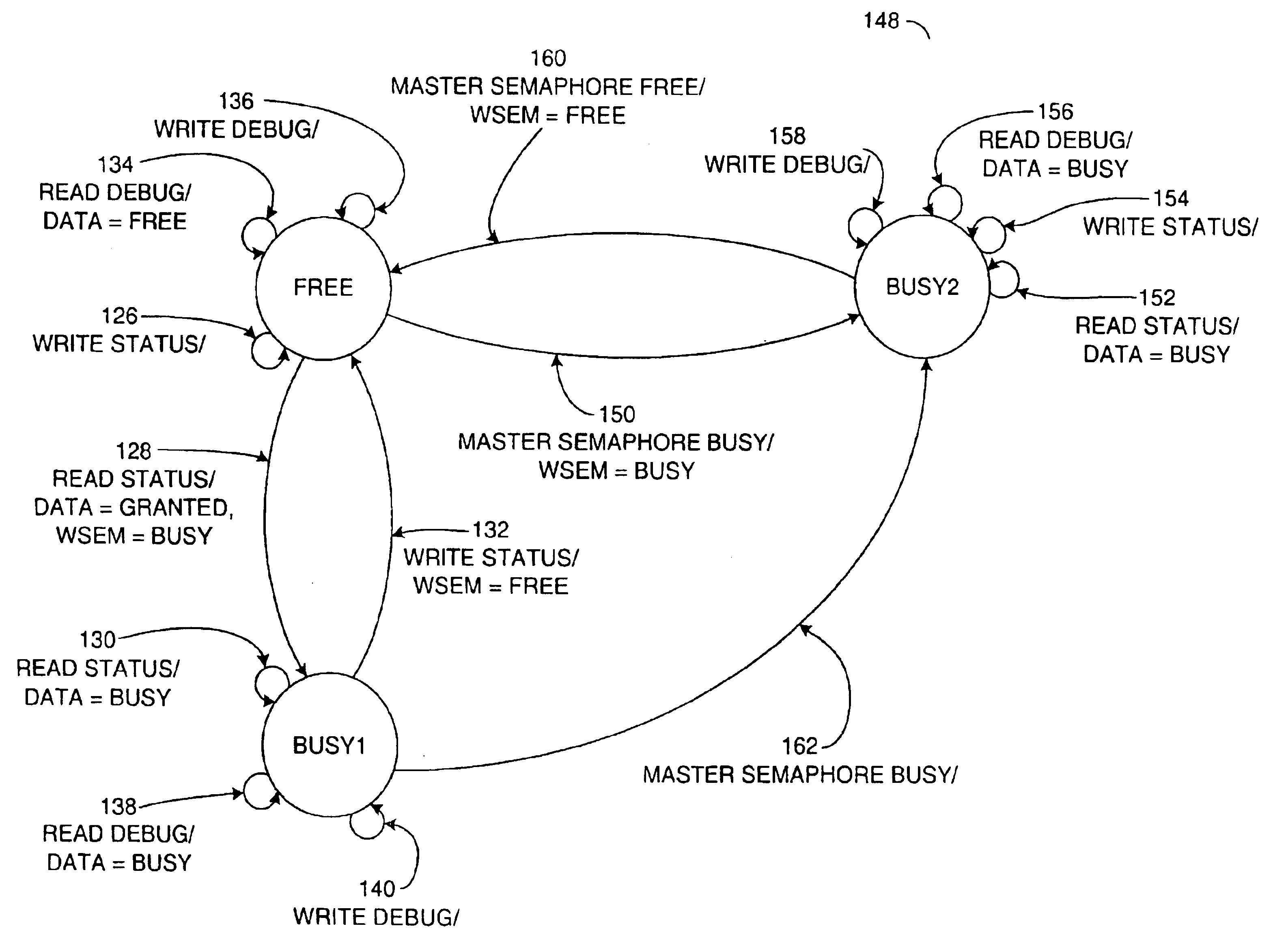

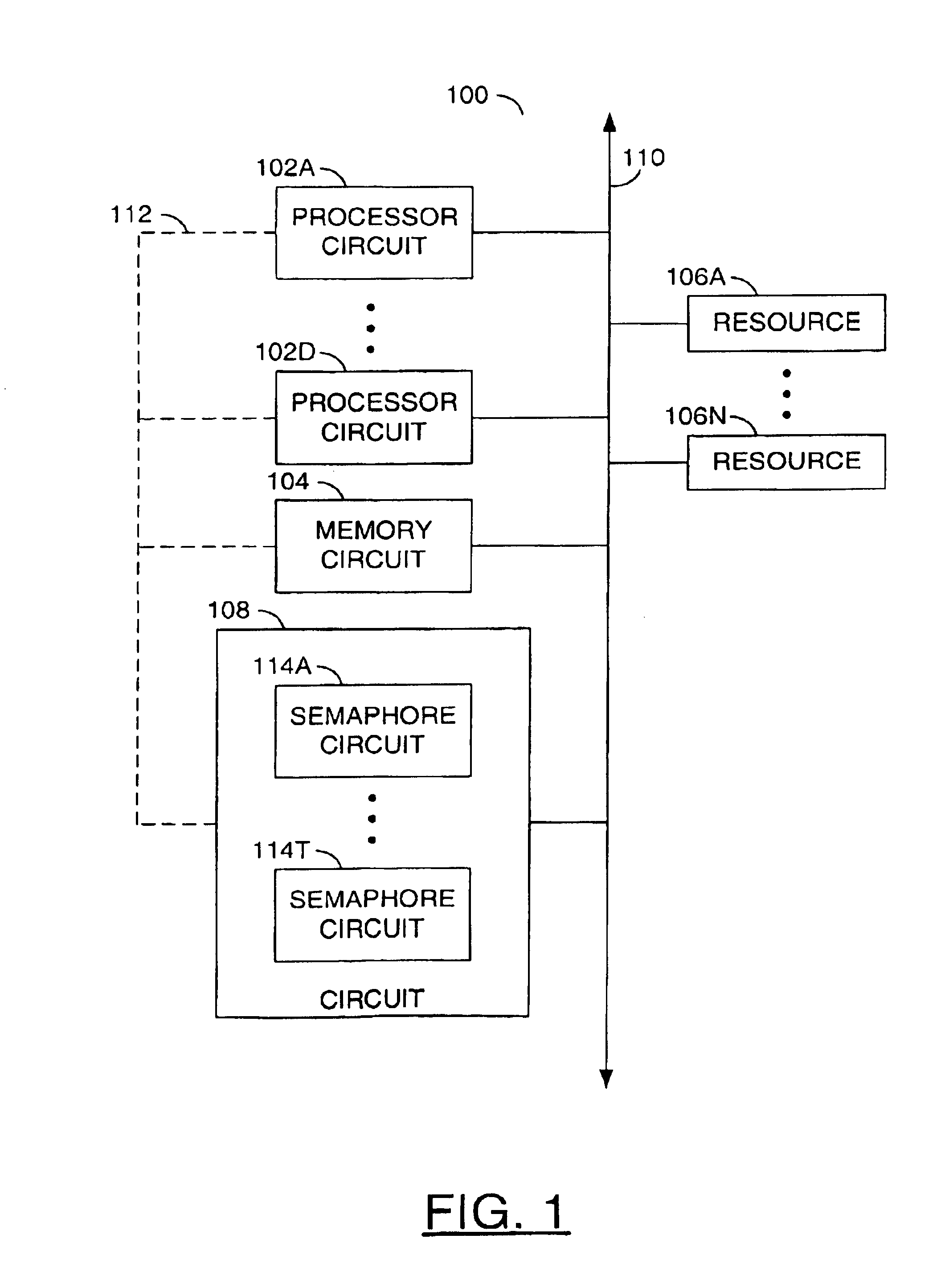

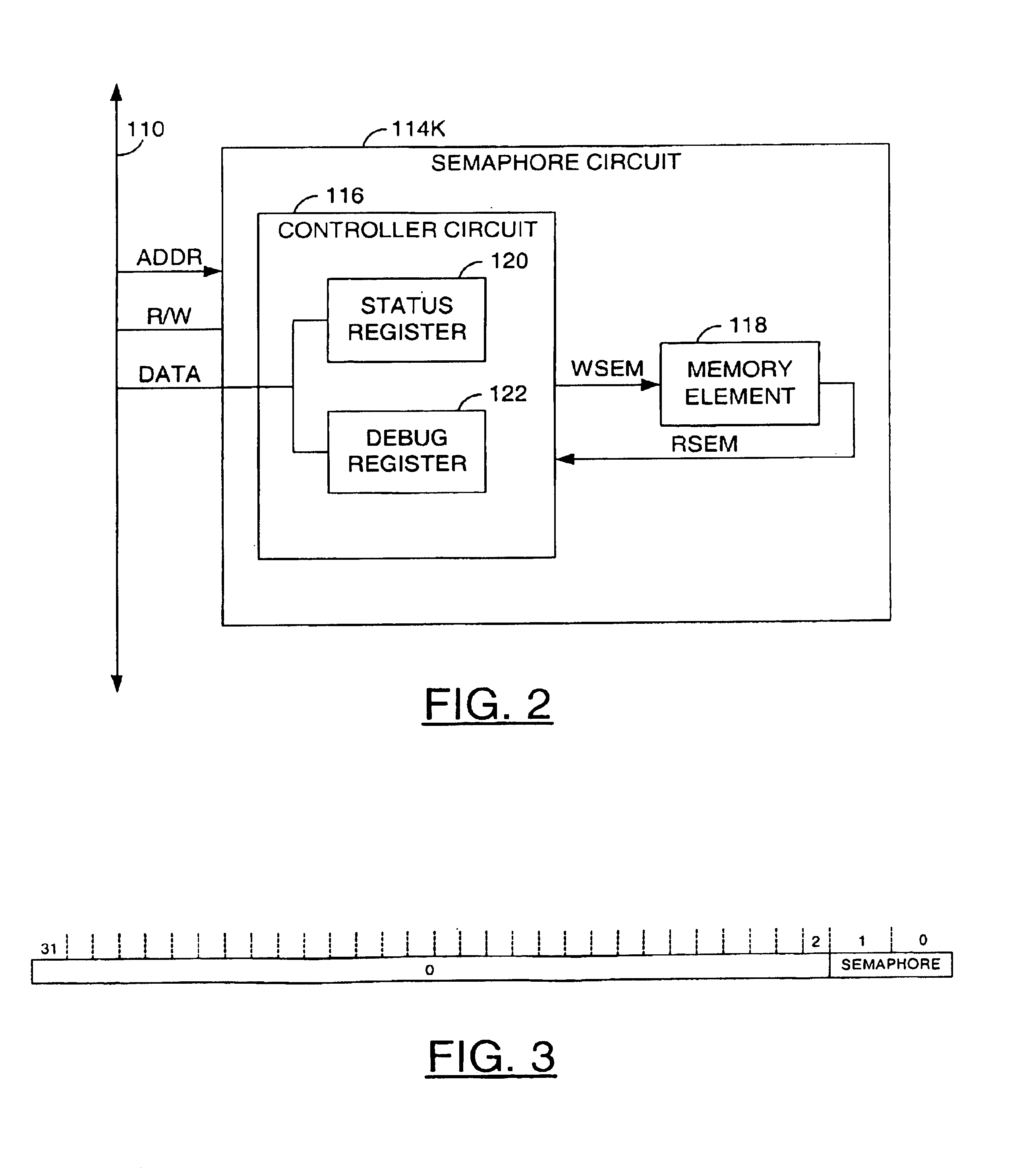

Hardware semaphores for a multi-processor system within a shared memory architecture

Status: Inactive | Publication: US6892258B1 | Tags: Minimize involvement, Robust solution, Memory systems, Shared memory architecture, Multi processor

A circuit generally comprising a memory element and a controller. The memory element may define a semaphore allocatable to a resource. The controller may be configured to (i) present a granted status in response to a processor reading a first address while the semaphore has a free status, (ii) set the semaphore to a busy status in response to presenting the granted status, and (iii) present the busy status in response to the processor reading the first address while the semaphore has the busy status.

Owner:AVAGO TECH INT SALES PTE LTD
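
A software model of the read-to-acquire behavior in items (i) to (iii). The lock only emulates the atomic test-and-set that the hardware controller performs. The status encodings and the `release` method are assumptions, since the abstract does not describe how a semaphore is freed.

```python
import threading

FREE, BUSY = 0, 1
GRANTED = 2  # hypothetical encoding for the "granted" read value


class HardwareSemaphore:
    """Read-to-acquire semaphore: reading the acquire address is an atomic
    test-and-set performed by the controller."""

    def __init__(self):
        self._status = FREE
        self._lock = threading.Lock()  # stands in for hardware atomicity

    def read_acquire_address(self) -> int:
        with self._lock:
            if self._status == FREE:
                self._status = BUSY  # (ii) set busy after granting
                return GRANTED       # (i) free semaphore: grant it
            return BUSY              # (iii) already held: report busy

    def release(self) -> None:
        # Assumed release path, e.g. a write to the semaphore register.
        with self._lock:
            self._status = FREE
```

Because acquisition happens on a single read, a processor needs no separate read-modify-write bus cycle to claim the resource.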

Early return indication for read exclusive requests in shared memory architecture

Status: Inactive | Publication: US7536514B2 | Tags: Little and latency, Memory addressing/allocation/relocation, Shared memory architecture, Communication interface

An early return indication is used to notify a first communications interface, prior to a response being received from any of a plurality of sources coupled to a second communications interface, that the return data can be used by the first communications interface when it is received thereby from a source of the return data if the source has an exclusive copy of the return data. By doing so, the first communications interface can often prepare for forwarding the return data over its associated communication link such that the data can be forwarded with little or no latency once the data is retrieved from its source, and may be able to initiate the return of data over the communication link prior to all responses being received from the other sources. The early return indication may also serve as an early coherency indication in that the first communications interface is no longer required to wait for updating of a coherency directory to complete prior to forwarding the return data over the communication link.

Owner:IBM CORP

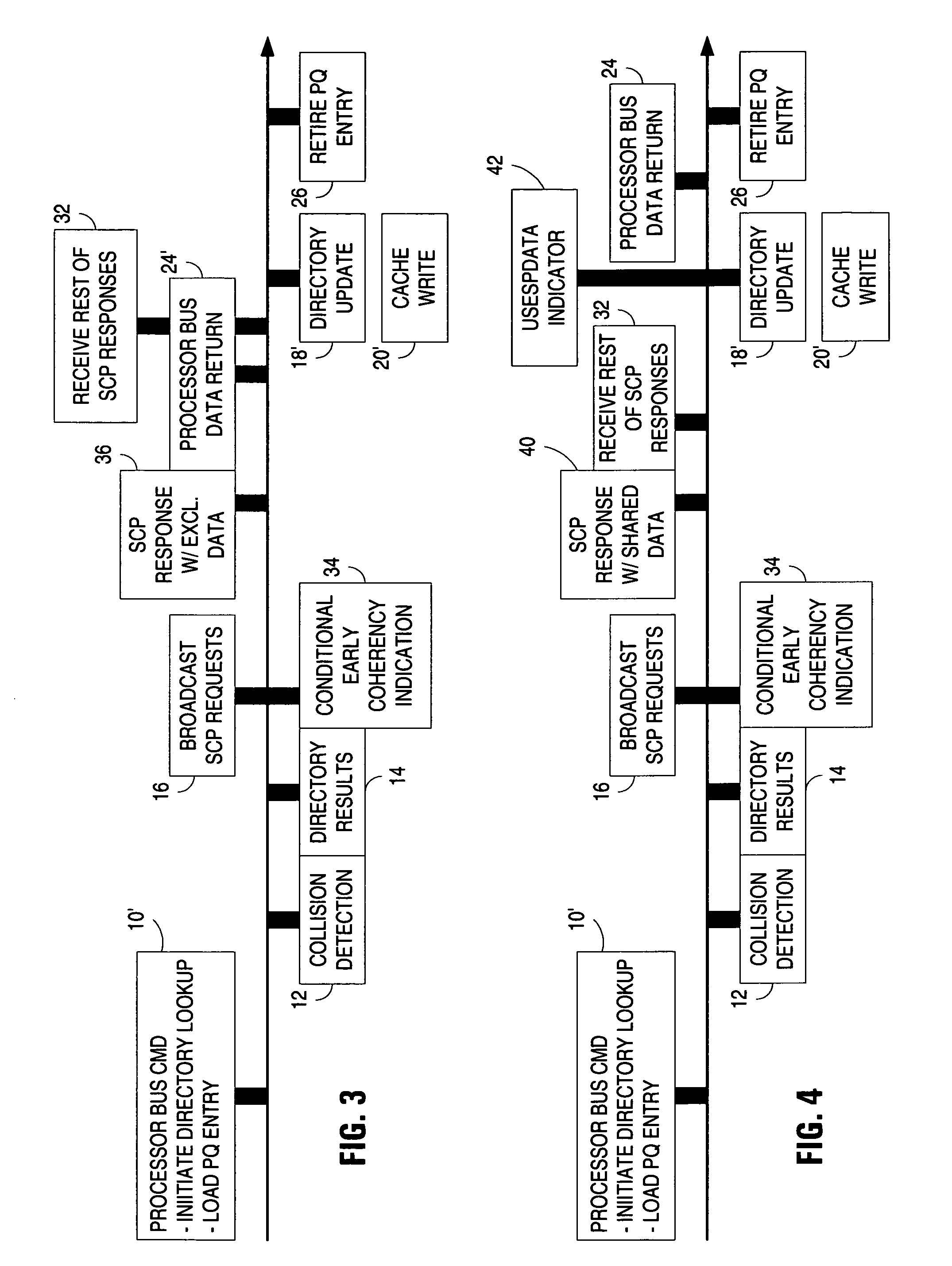

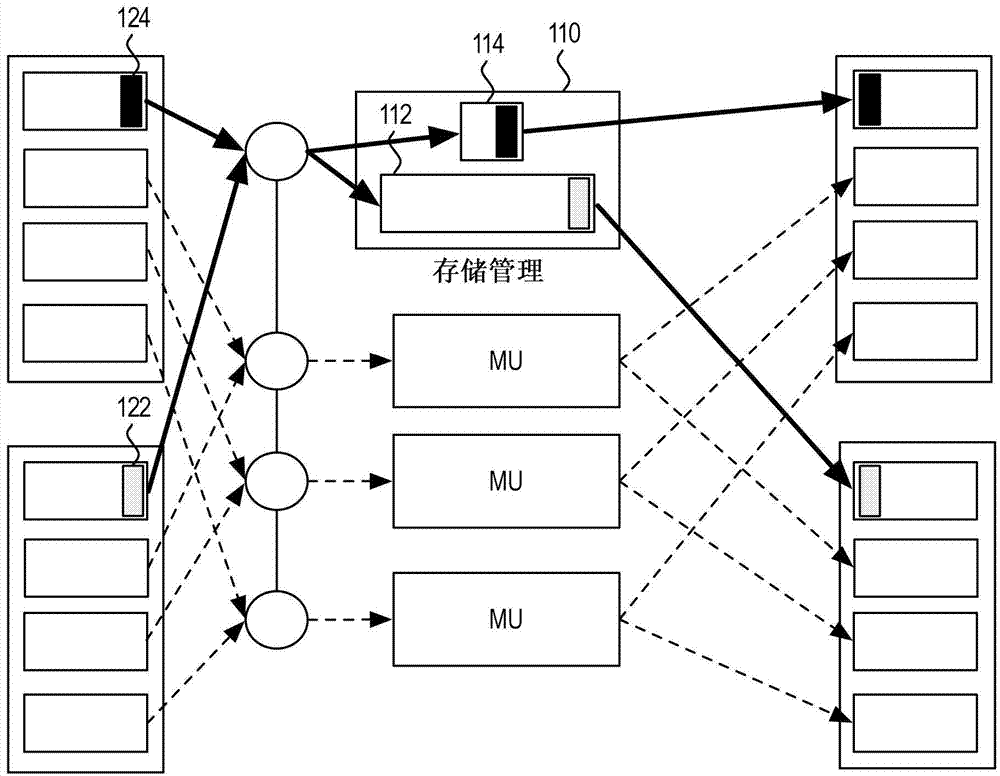

System and method for improving multicast performance in banked shared memory architectures

A system and method for improving multicast performance in banked shared memory architectures. Temporal localities created by multicast packets in a shared memory bank are addressed through caching. In one embodiment, multicast packets are stored in a cache memory (110) that is associated with a bank of shared memory (114). In another embodiment, read requests for multicast packets are stored in a read request cache, wherein additional read requests are accumulated prior to an actual read event.

Owner:AVAGO TECH WIRELESS IP SINGAPORE PTE
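
A sketch of the second embodiment: read requests for the same multicast packet are accumulated and then served by a single read of the shared memory bank. Timing, bank arbitration, and eviction are not modeled, and `MulticastReadCache`, `request`, and `flush` are hypothetical names.

```python
from collections import defaultdict


class MulticastReadCache:
    """Accumulate read requests per packet, then perform one bank read per
    distinct packet and fan the payload out to every requesting port."""

    def __init__(self, bank: dict):
        self.bank = bank                  # packet_id -> payload (one shared memory bank)
        self.pending = defaultdict(list)  # packet_id -> requesting egress ports
        self.bank_reads = 0               # actual read events on the bank

    def request(self, packet_id, port) -> None:
        self.pending[packet_id].append(port)

    def flush(self) -> dict:
        """Perform the accumulated read events; return port -> payloads."""
        delivered = {}
        for packet_id, ports in self.pending.items():
            payload = self.bank[packet_id]
            self.bank_reads += 1
            for port in ports:
                delivered.setdefault(port, []).append(payload)
        self.pending.clear()
        return delivered
```

A packet multicast to three ports then costs one bank read instead of three, which relieves the bank that holds it.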

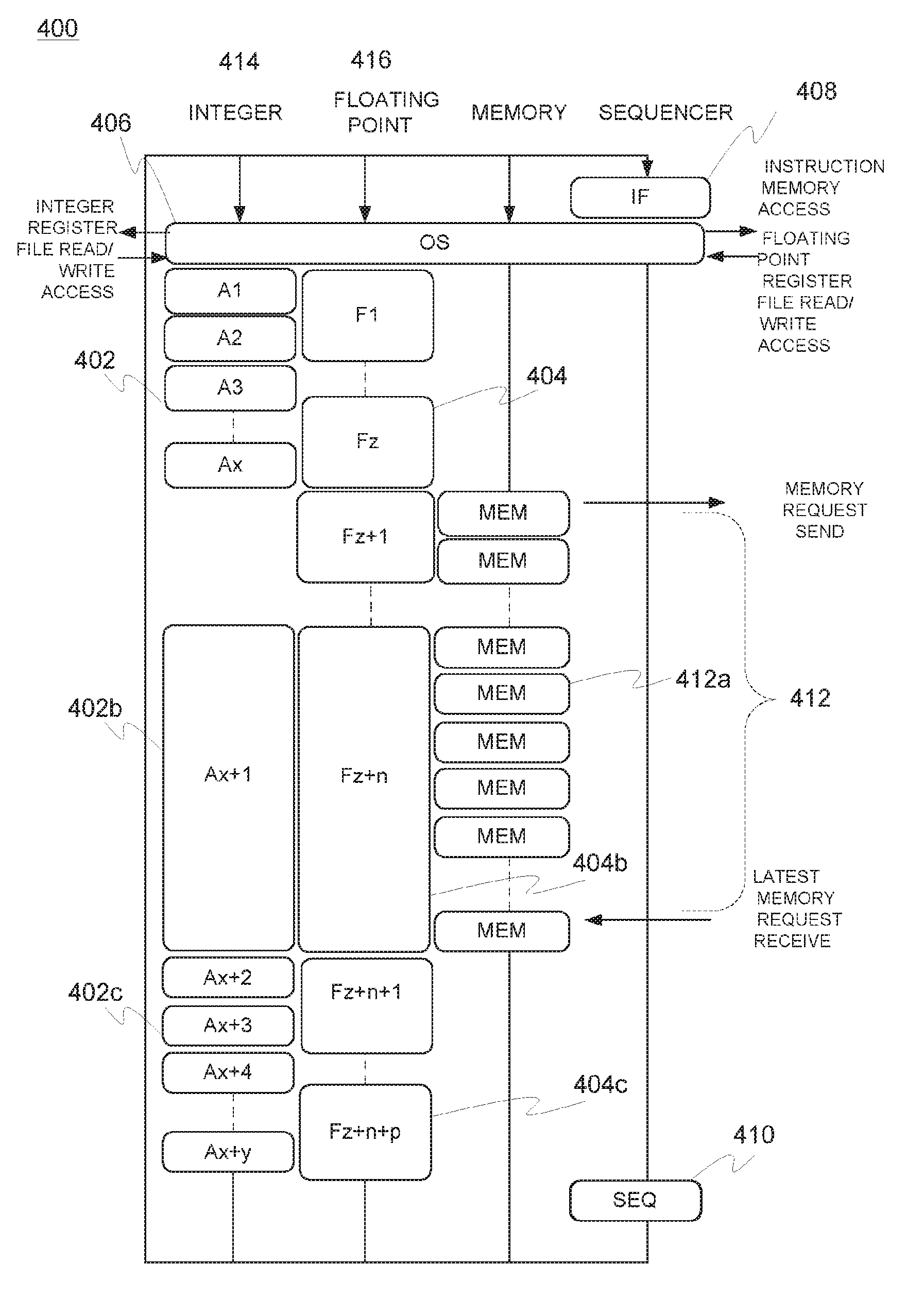

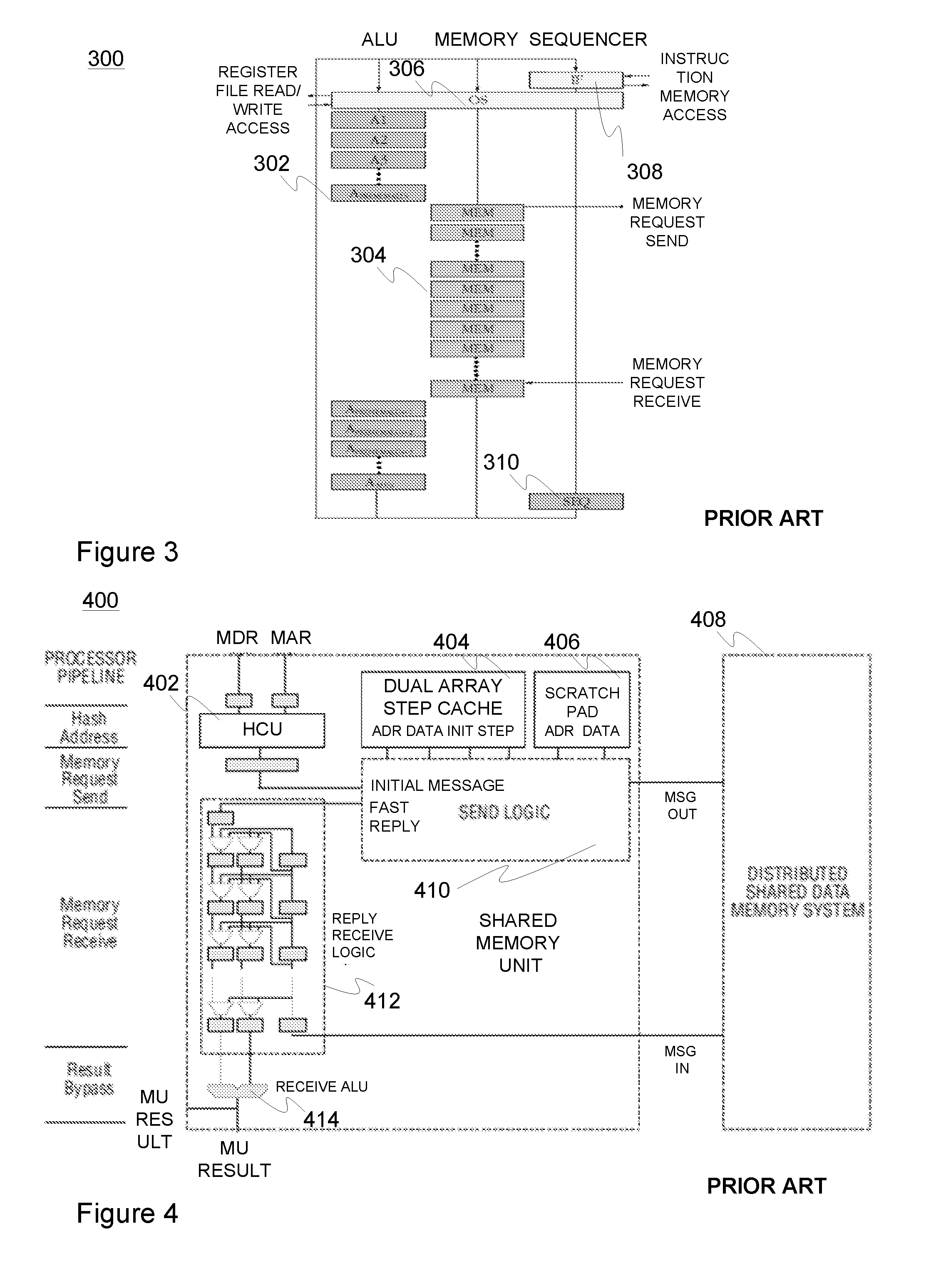

Floating-point supportive pipeline for emulated shared memory architectures

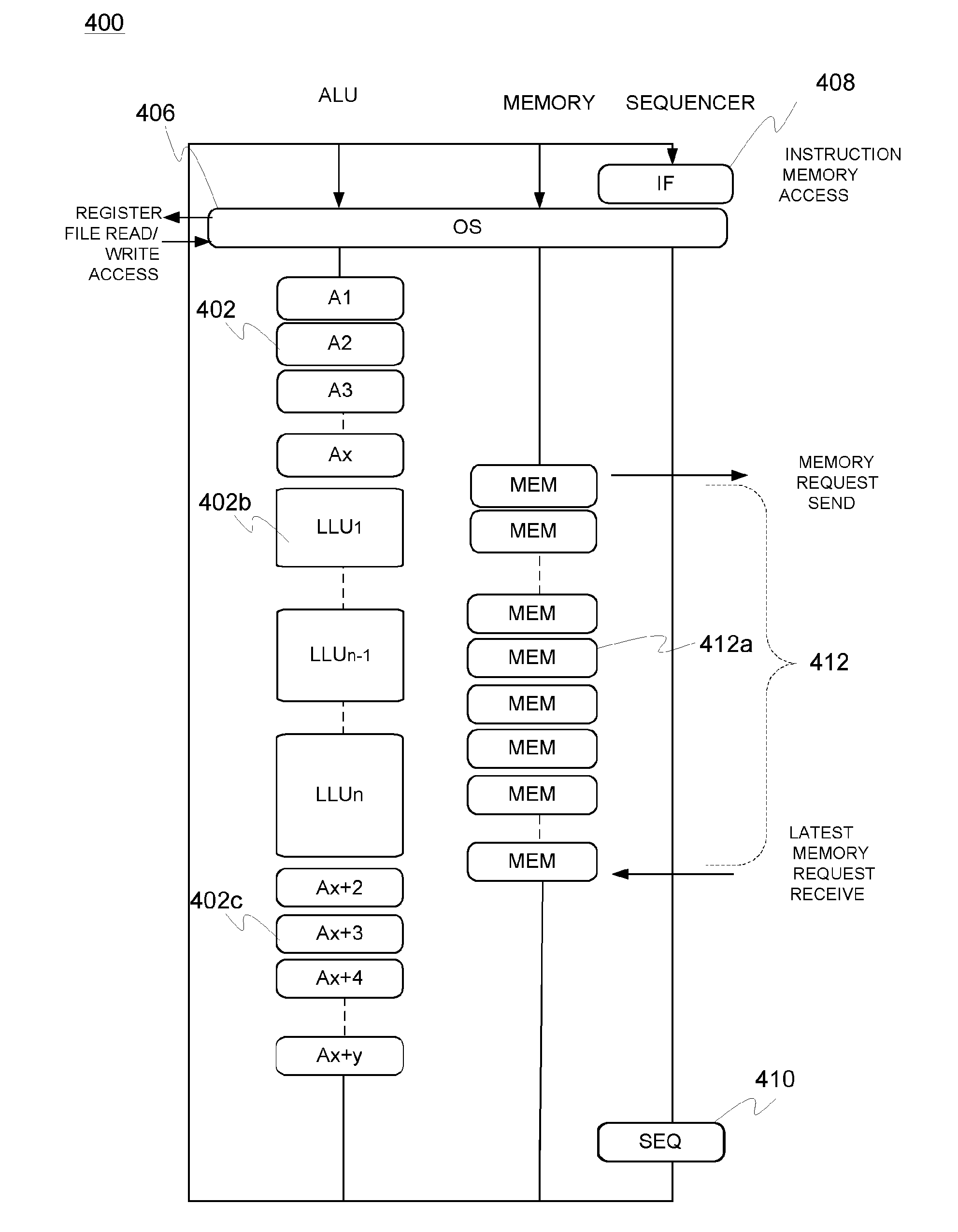

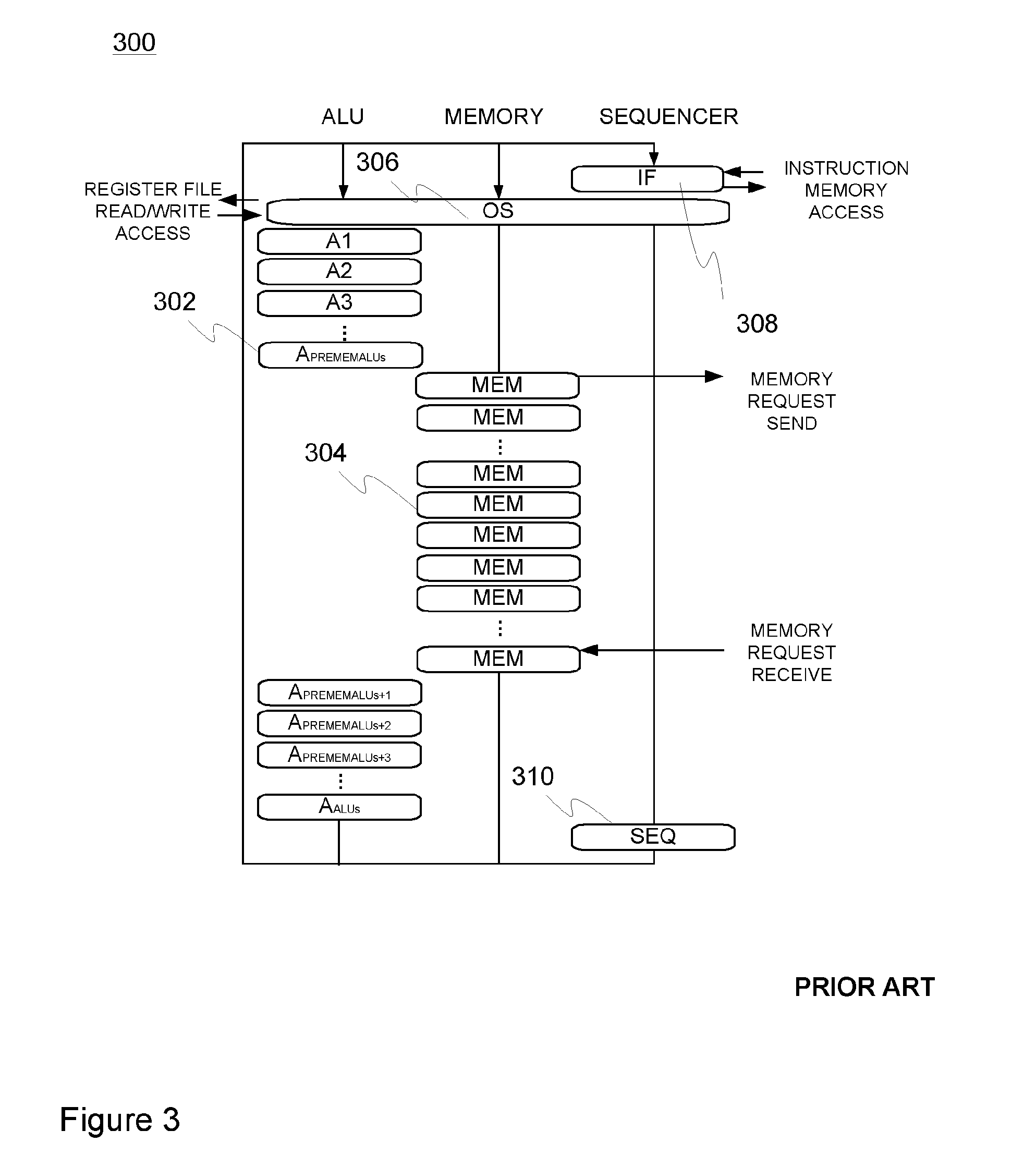

Status: Pending | Publication: US20160283249A1 | Tags: Efficient execution, Improve throughput, General purpose stored program computer, Concurrent instruction execution, Shared memory architecture, Arithmetic logic unit

A processor architecture arrangement for emulated shared memory (ESM) architectures, comprising a number of multi-threaded processors each provided with interleaved inter-thread pipeline (400) and a plurality of functional units (402, 402b, 402c, 404, 404b, 404c) for carrying out arithmetic and logical operations on data, wherein the pipeline (400) comprises at least two operatively parallel pipeline branches (414, 416), first pipeline branch (414) comprising a first sub-group of said plurality of functional units (402, 402b, 402c), such as ALUs (arithmetic logic unit), arranged for carrying out integer operations, and second pipeline branch (416) comprising a second, non-overlapping sub-group of said plurality of functional units (404, 404b, 404c), such as FPUs (floating point unit), arranged for carrying out floating point operations, and further wherein one or more of the functional units (404b) of at least said second sub-group arranged for floating point operations are located operatively in parallel with the memory access segment (412, 412a) of the pipeline (400).

Owner:TEKNOLOGIAN TUTKIMUSKESKUS VTT

Symbol rate hardware accelerator

Status: Inactive | Publication: US8015471B2 | Tags: Stay flexible, Code conversion, Digital computer details, Shared memory architecture, Address generator

A hardware accelerator includes a first buffer, a second buffer, address generator(s), a translation read-only memory (ROM), a cyclic redundancy check (CRC) generator, a convolutional encoder and a controller. The first and second buffers store information bits. The address generator(s) generate(s) an address for accessing the first buffer, the second buffer and a shared memory architecture (SMA). The translation ROM is used in generating a translated address for accessing the first buffer and the second buffer. The controller sets parameters for the CRC generator, the convolutional encoder and the address generator, and performs a predefined sequence of control commands for channel processing, such as reordering, block coding, parity tailing, puncturing, convolutional encoding, and interleaving, on the information bits by manipulating the information bits while moving the information bits among the first buffer, the second buffer, the SMA, the CRC generator, and the convolutional encoder.

Owner:INTERDIGITAL TECH CORP

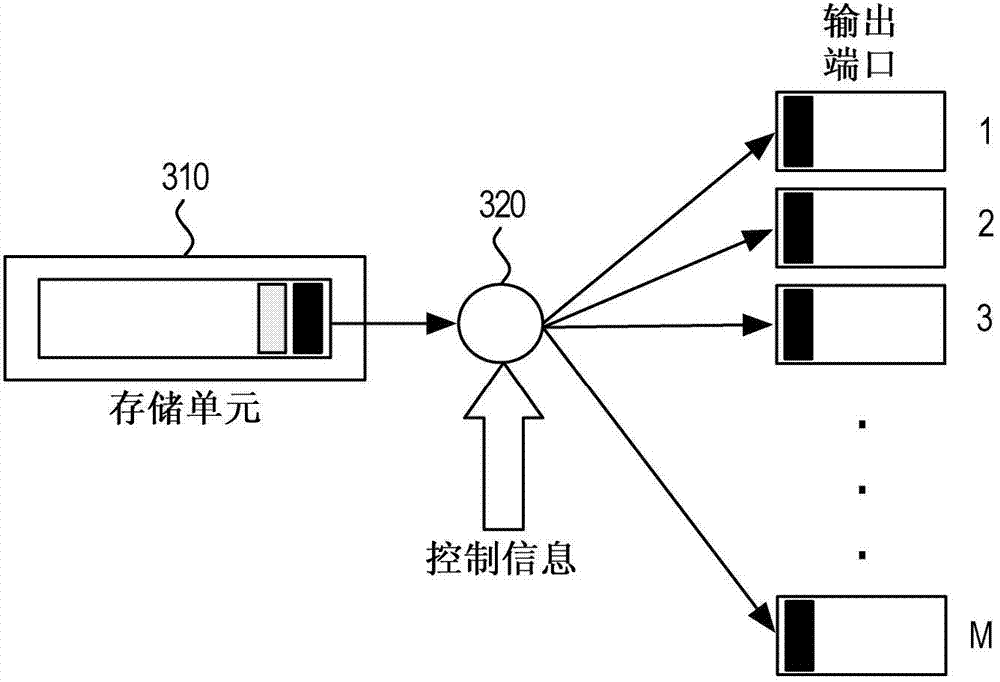

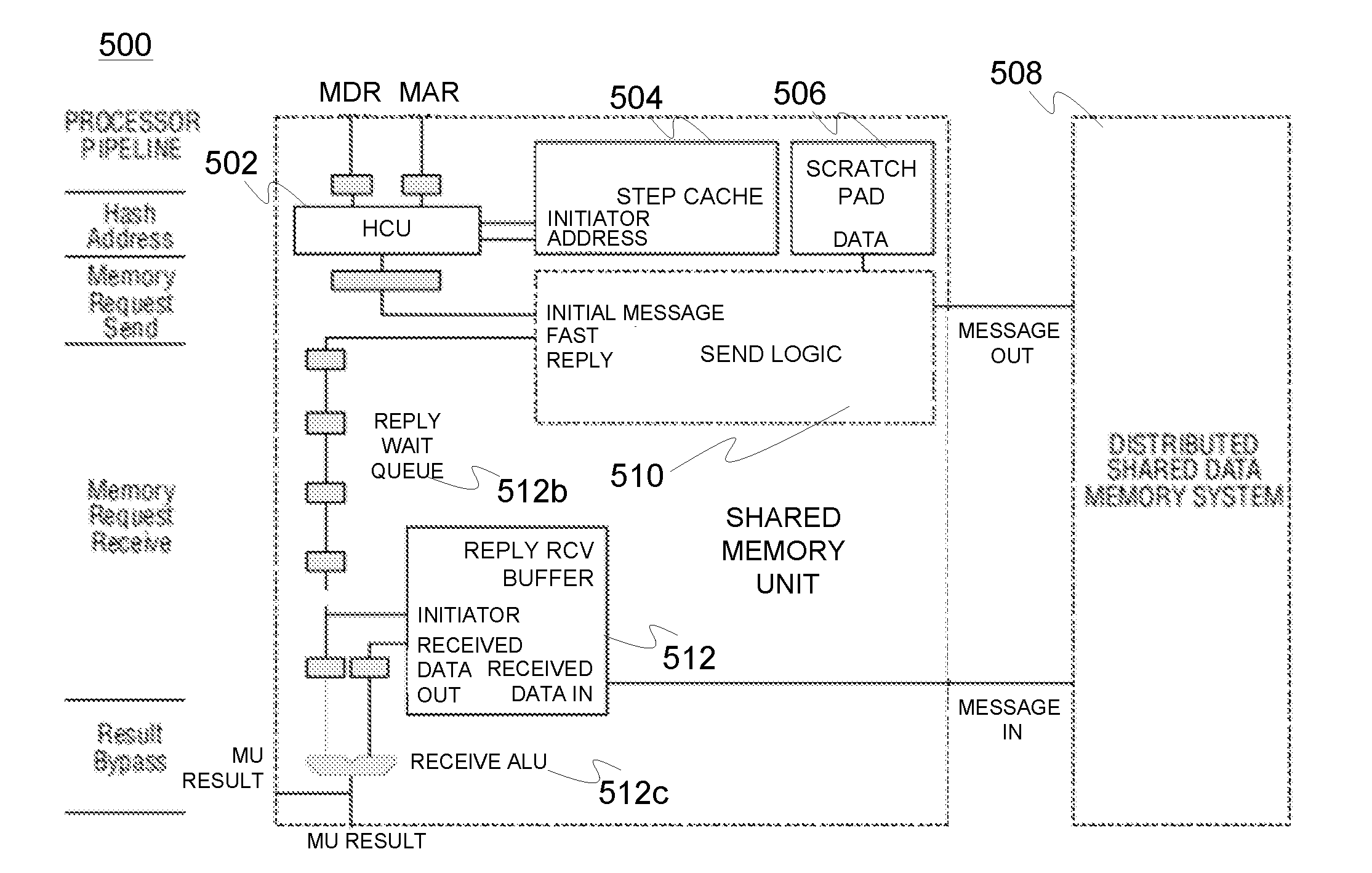

Memory unit for emulated shared memory architectures

Status: Active | Publication: US20160124856A1 | Tags: Expedites memory access, Easy access, Memory architecture accessing/allocation, Program synchronisation, Shared memory architecture, Data field

A memory unit for handling data memory references of a multi-threaded processor with an interleaved inter-thread pipeline in emulated shared memory architectures includes: a step cache defining an associative cache memory array in which data stays valid until the end of the ongoing step of multithreaded execution, the memory array incorporating a plurality of cache lines, each line containing a data field for an address tag and a data field for the thread ID of the first thread referring to the data memory location specified by the address tag; and a scratchpad defining a memory buffer for storing internal data of multioperations, the buffer including a single data field for each processor thread. The memory unit is configured to access the step cache for a cache search and the scratchpad for retrieving and/or storing internal data at different clock cycles and different stages of the processor pipeline during multioperation execution involving a data memory reference by the processor.

Owner:TEKNOLOGIAN TUTKIMUSKESKUS VTT

Early coherency indication for return data in shared memory architecture

Status: Inactive | Publication: US8010682B2 | Tags: Little and latency, Lower latency, Memory architecture accessing/allocation, Multiple digital computer combinations, Communication interface, Shared memory architecture

In a shared memory architecture, early coherency indication is used to notify a communications interface, before the data for a memory request is returned and before a coherency directory is updated in response to the memory request, that the return data can be used by the communications interface when it is received thereby from a source of the return data. By doing so, the communications interface can often begin forwarding the return data over its associated communication link with little or no latency once the data is retrieved from its source. In addition, the communications interface is often no longer required to wait for updating of the coherency directory to complete prior to forwarding the return data over the communication link. As such, the overall latency for handling the memory request is typically reduced.

Owner:IBM CORP

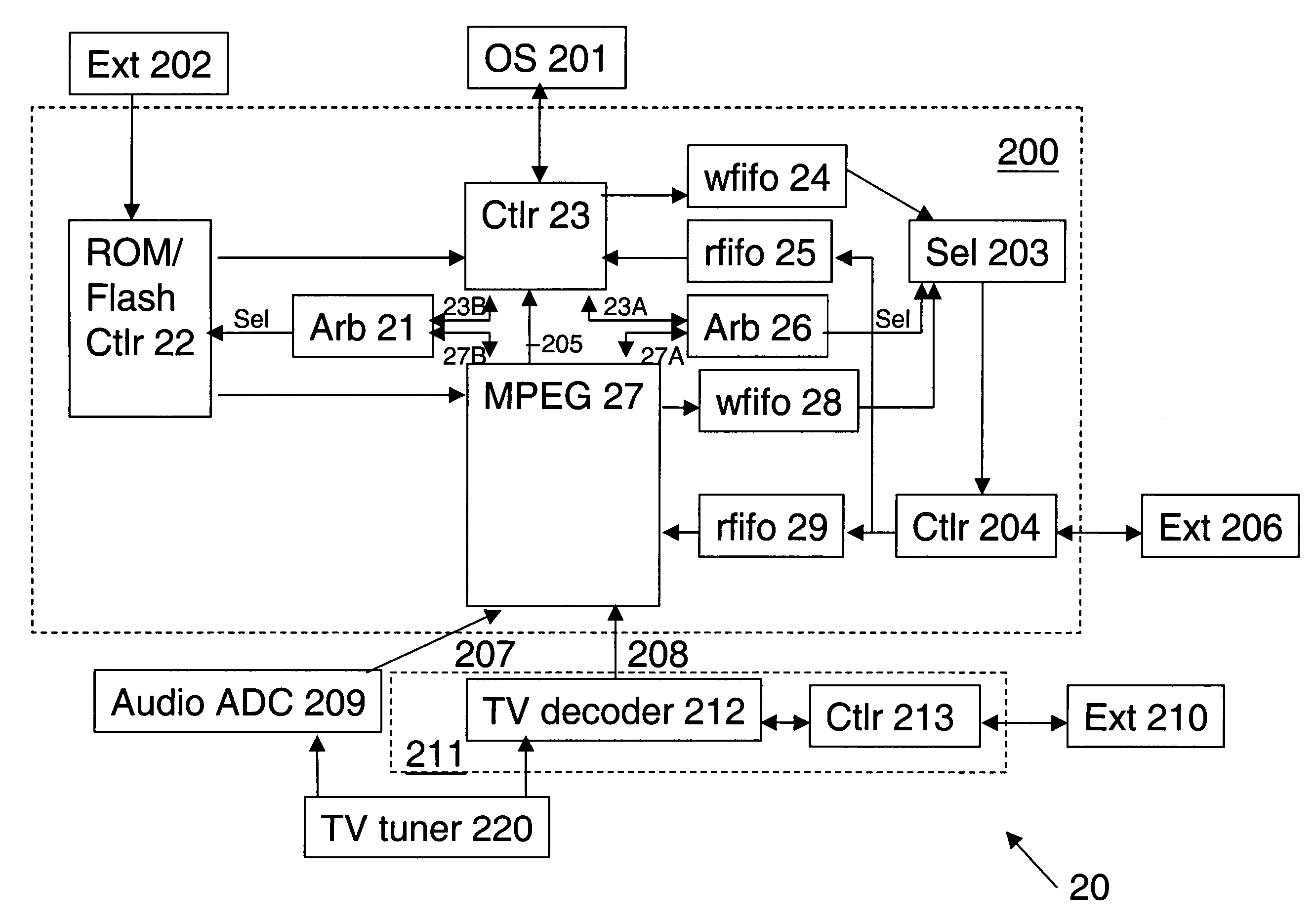

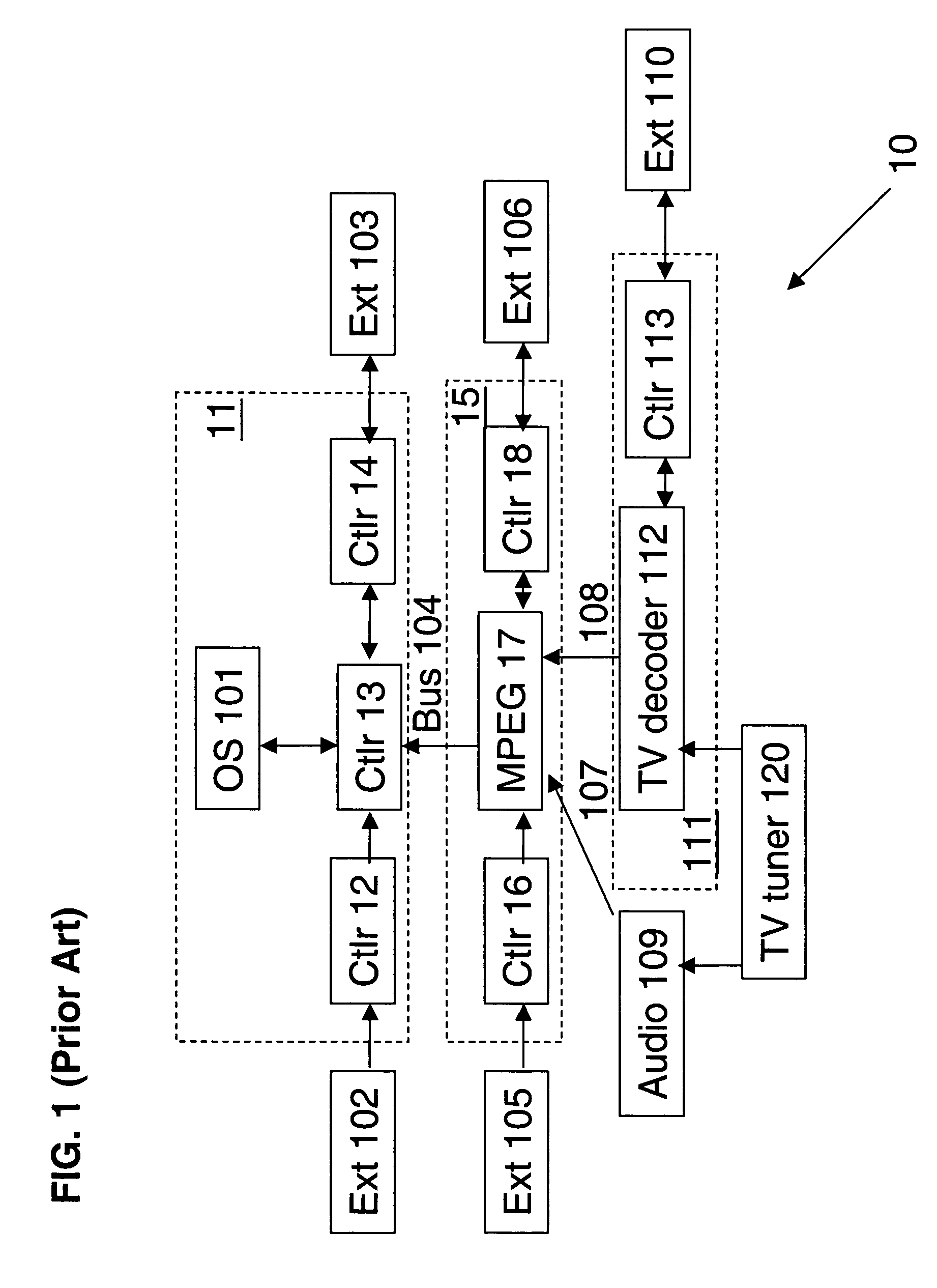

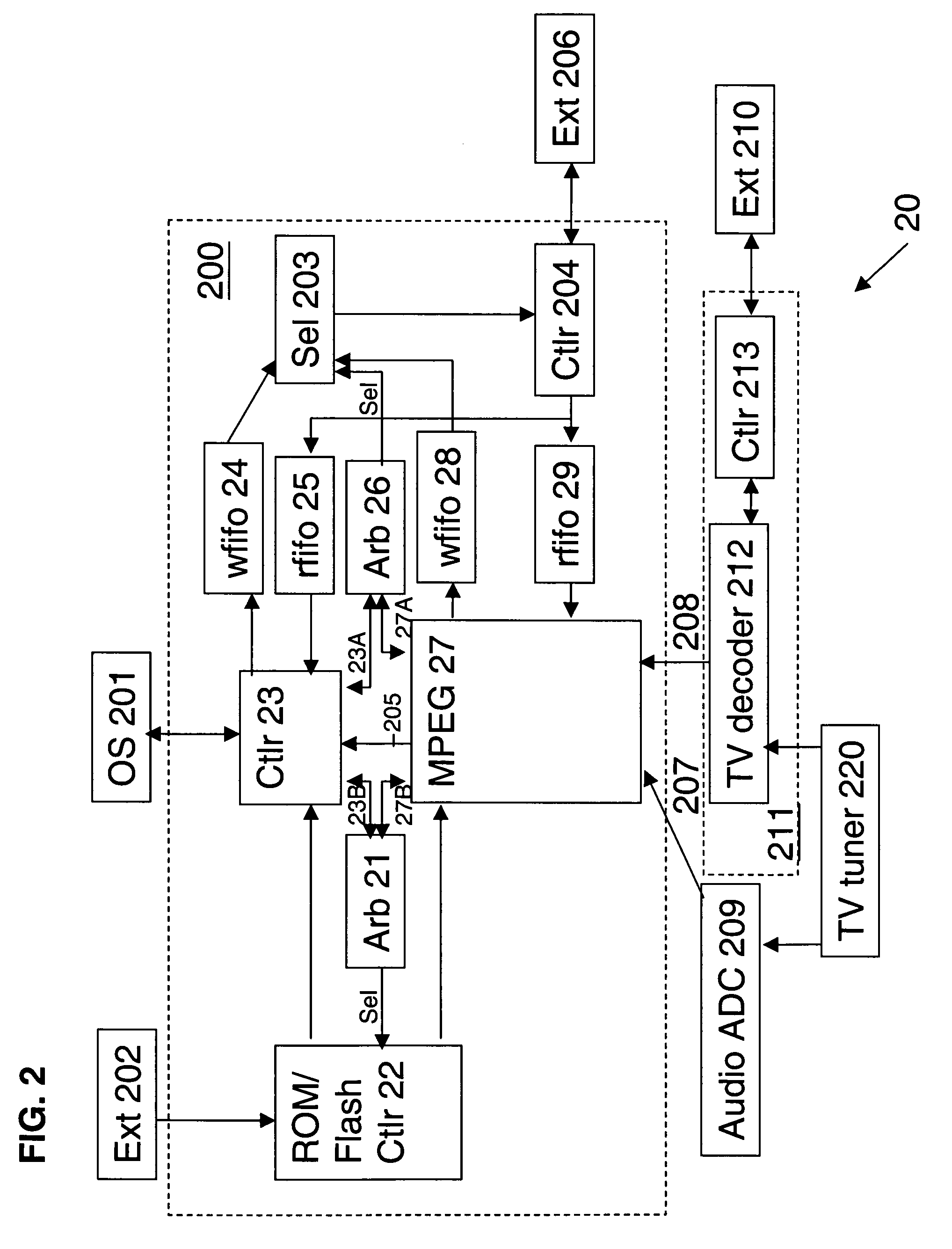

Shared memory architecture and method in an optical storage and recording system

Status: Inactive | Publication: US20050254335A1 | Tags: Eliminate the problem, Television system details, Analogue secrecy/subscription systems, Shared memory architecture, External storage

A system includes an integrated encoder comprising an optical storage controller for coupling to an optical storage medium, and a data encoder for coding input data coupled to the optical storage controller, a first external memory coupled to a first memory controller in the integrated encoder, and a second external memory coupled to a second memory controller in the integrated encoder. In one aspect, the integrated encoder further comprises a first memory arbiter for selectively directing access to the first external memory by the optical storage controller and the data encoder, and a second memory arbiter for selectively directing access to the second external memory by the optical storage controller and the data encoder.

Owner:SUNPLUS TECH CO LTD
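
The abstract says only that each arbiter "selectively directs access" to its external memory; round-robin is one common policy and is assumed here. In the arrangement described, there would be one arbiter instance per external memory, each choosing between the optical storage controller and the data encoder. `RoundRobinArbiter` and the client labels are hypothetical.

```python
class RoundRobinArbiter:
    """Grant one external-memory access per cycle among requesting clients,
    rotating priority so that no client is starved (assumed policy)."""

    def __init__(self, clients):
        self.clients = list(clients)
        self.last = len(self.clients) - 1  # first client wins the first tie

    def grant(self, requesting):
        """Return the client granted this cycle, or None if nobody requests."""
        n = len(self.clients)
        for step in range(1, n + 1):
            idx = (self.last + step) % n
            if self.clients[idx] in requesting:
                self.last = idx
                return self.clients[idx]
        return None
```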

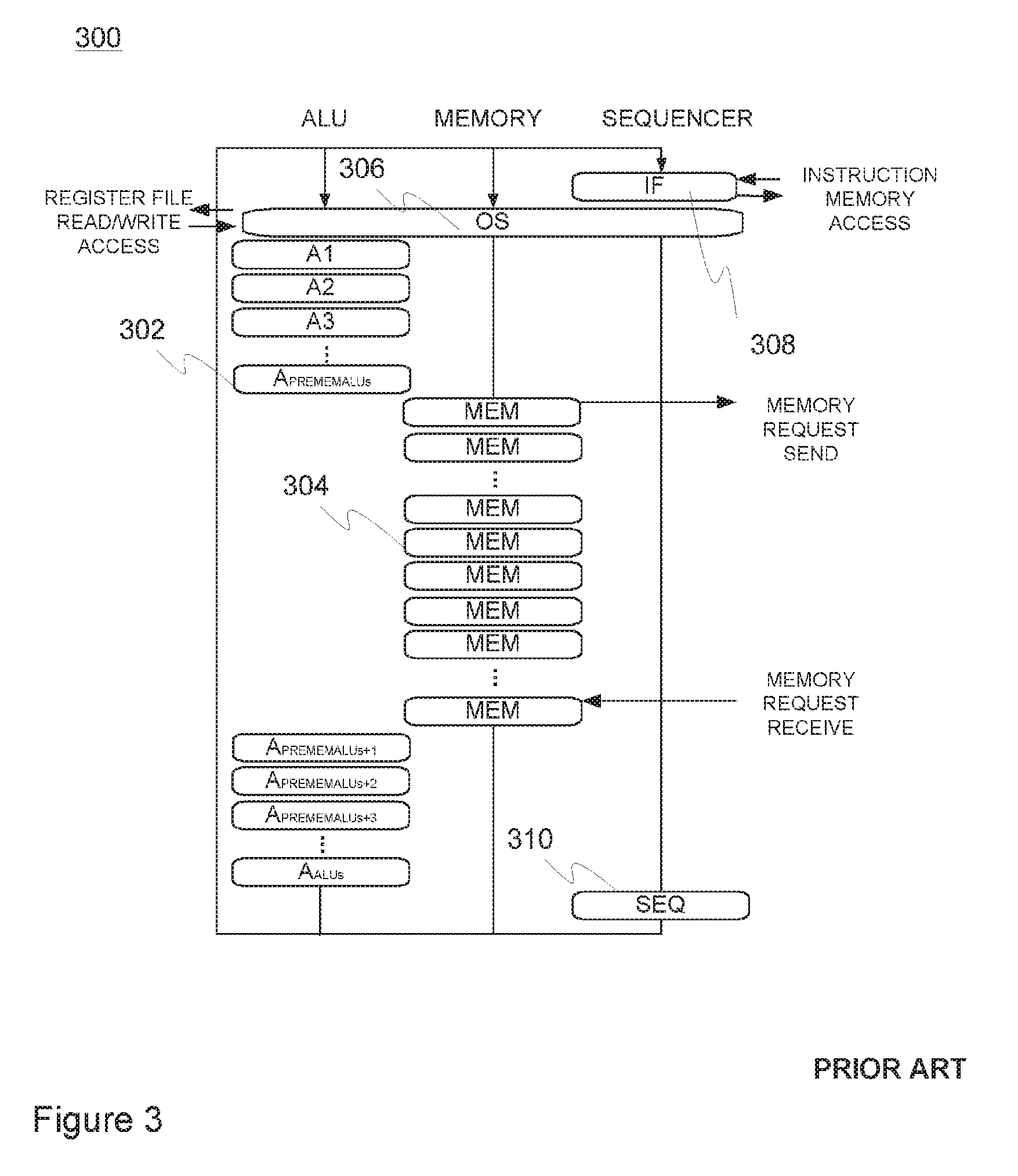

Architecture for long latency operations in emulated shared memory architectures

Status: Active | Publication: US20160314001A1 | Tags: Feasible solution, Lower latency, Concurrent instruction execution, Shared memory architecture, Long latency

A processor architecture arrangement for emulated shared memory (ESM) architectures, comprises a number of, preferably a plurality of, multi-threaded processors each provided with interleaved inter-thread pipeline, wherein the pipeline comprises a plurality of functional units arranged in series for executing arithmetic, logical and optionally further operations on data, wherein one or more functional units of lower latency are positioned prior to the memory access segment in said pipeline and one or more long latency units (LLU) for executing more complex operations associated with longer latency are positioned operatively in parallel with the memory access segment. In some embodiments, the pipeline may contain multiple branches in parallel with the memory access segment, each branch containing at least one long latency unit.

Owner:TEKNOLOGIAN TUTKIMUSKESKUS VTT

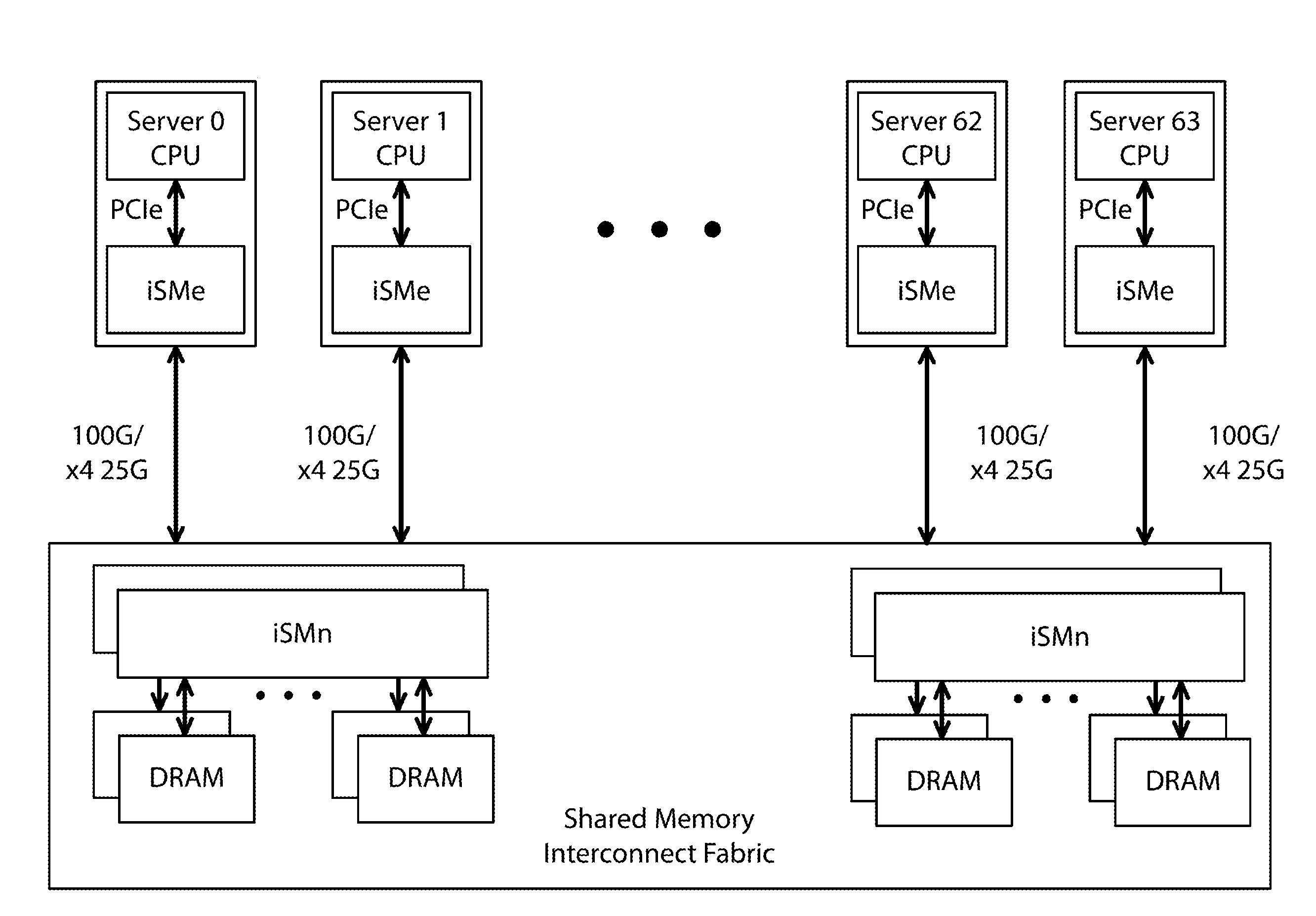

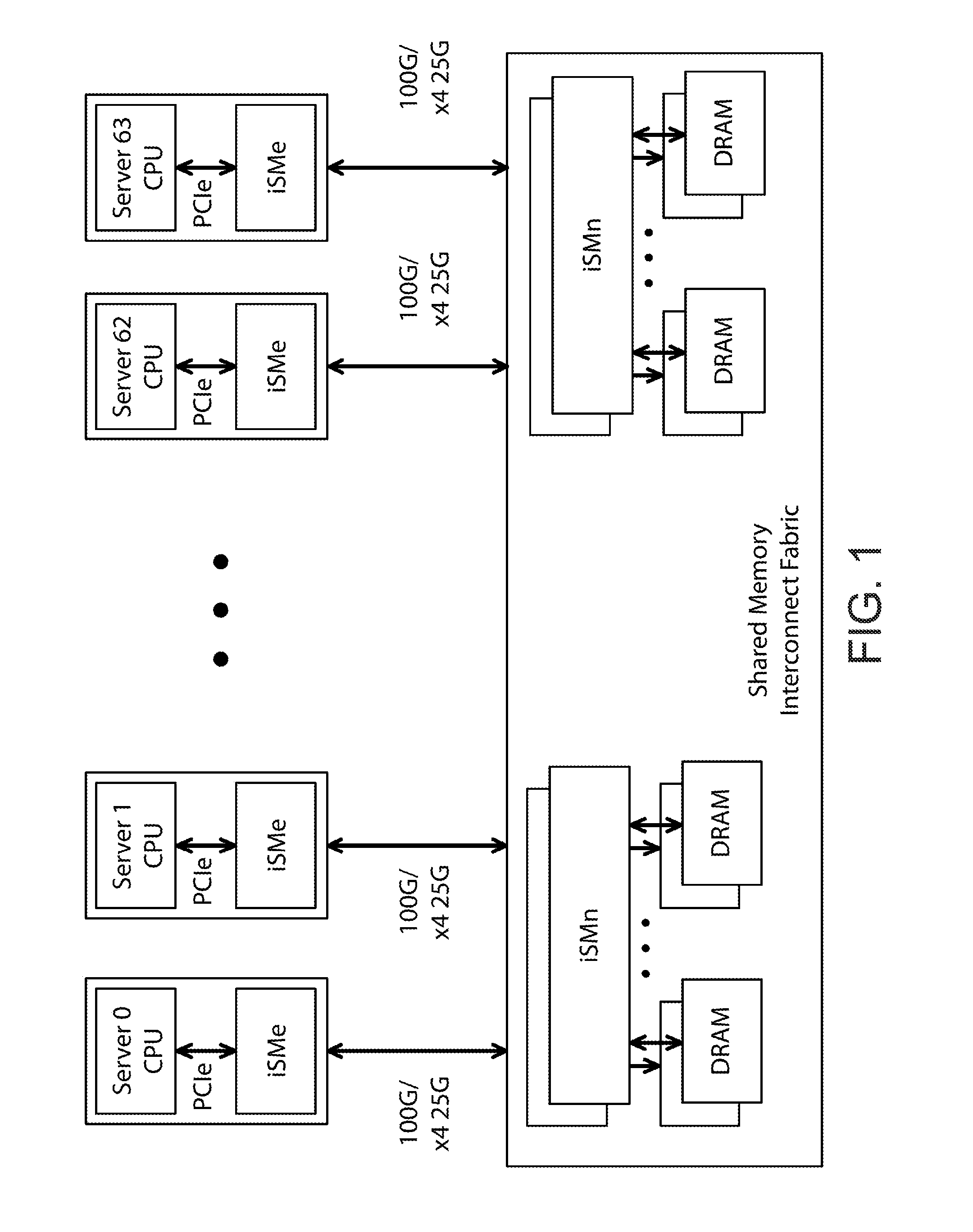

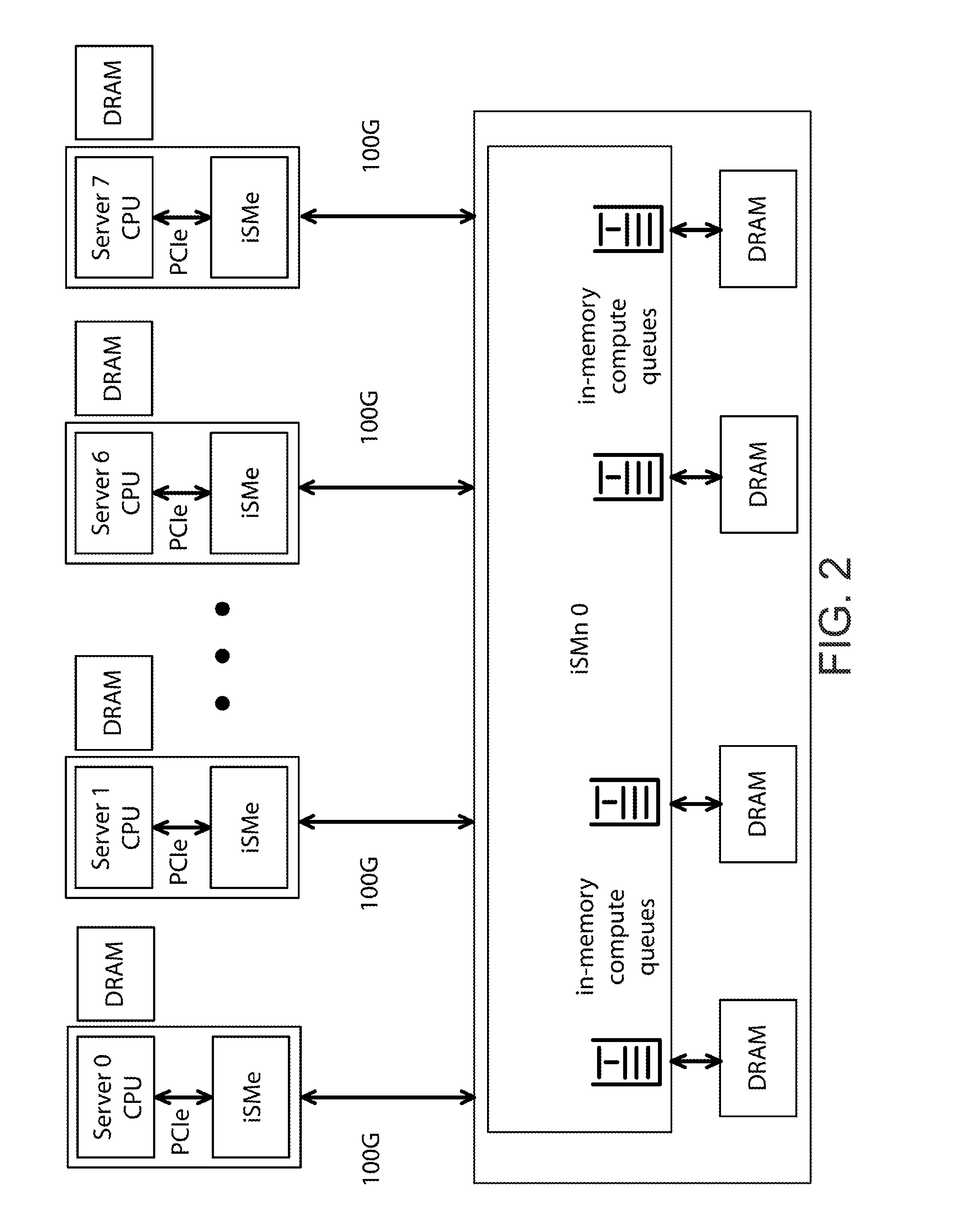

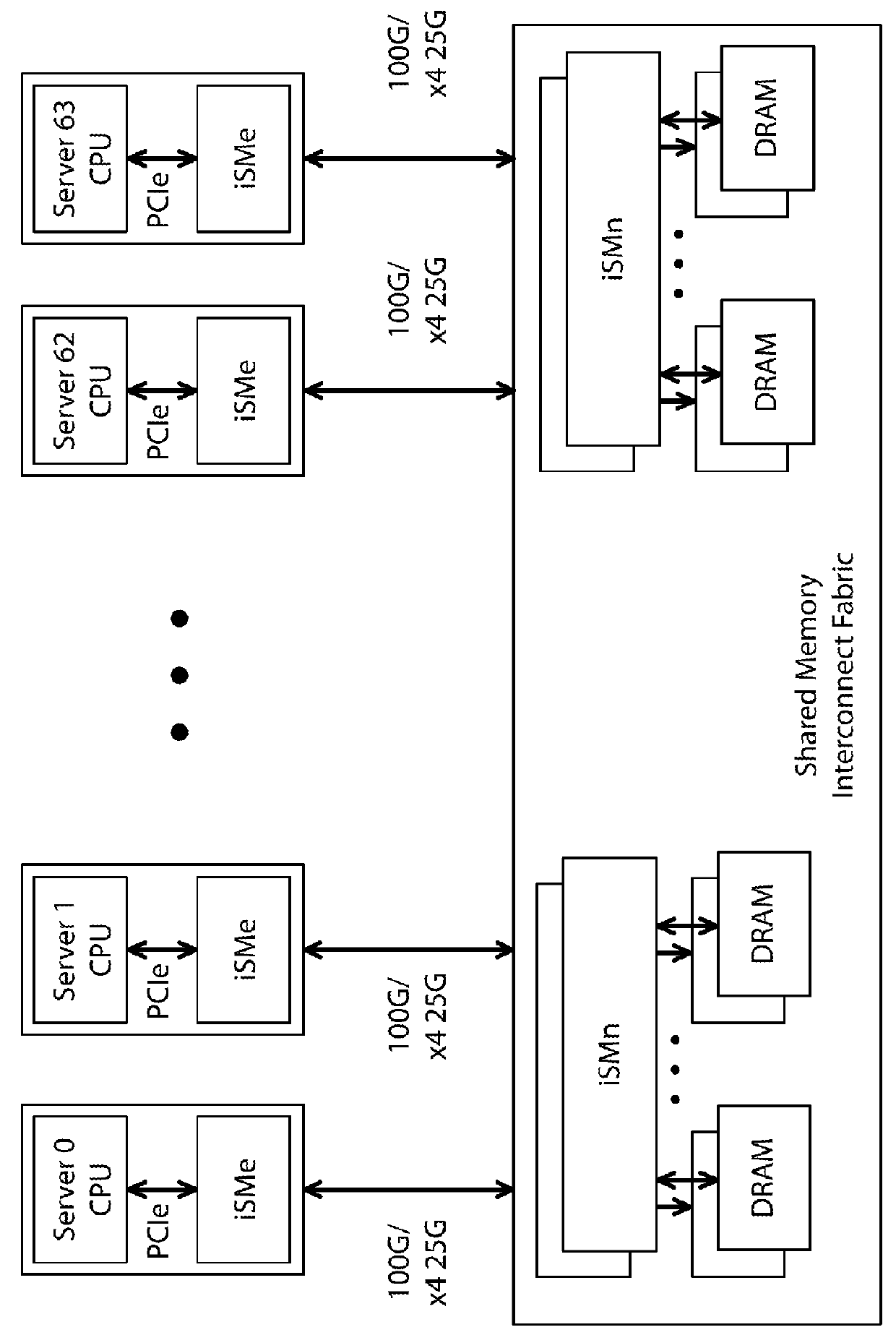

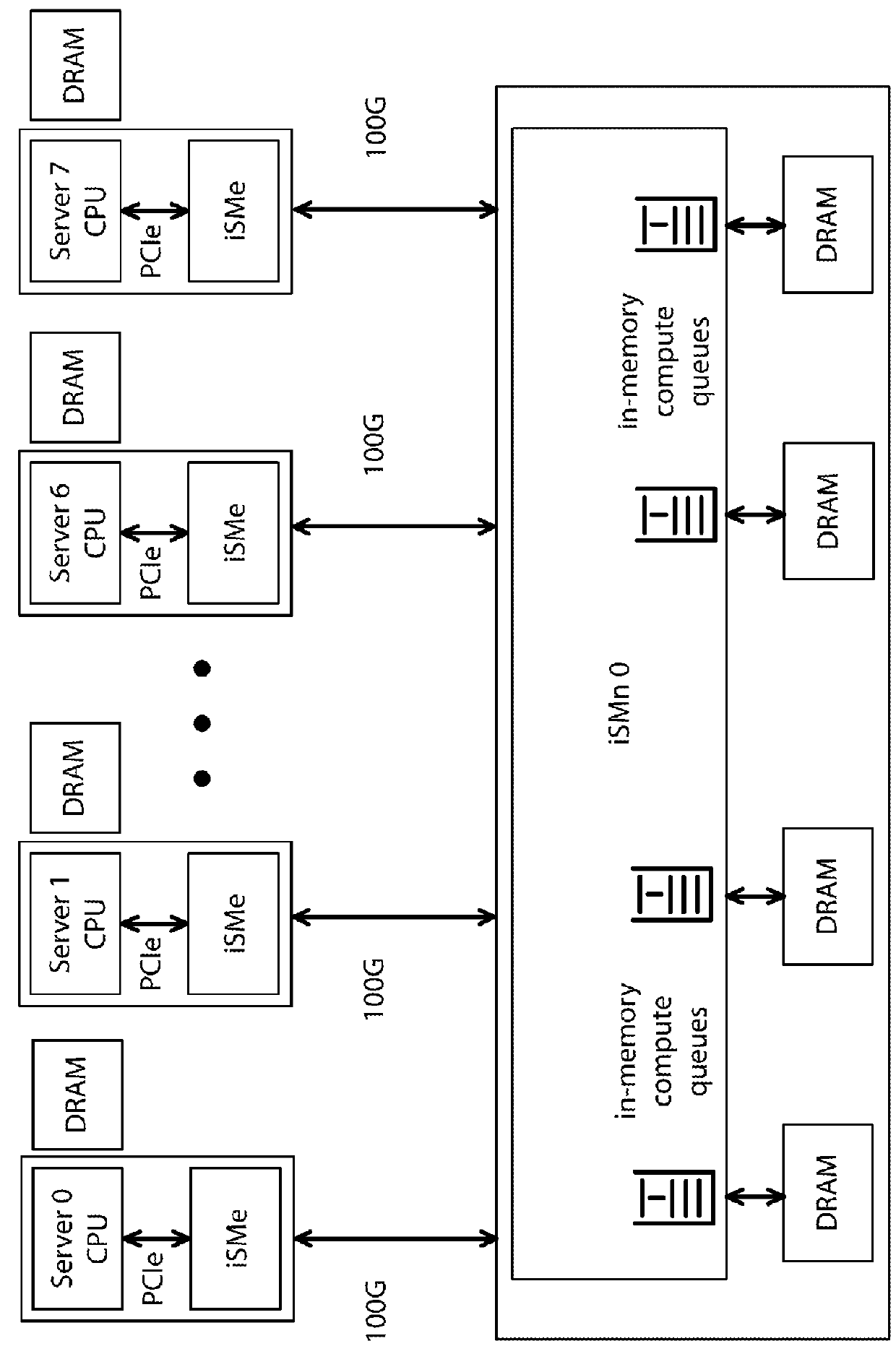

ISOLATED SHARED MEMORY ARCHITECTURE (iSMA)

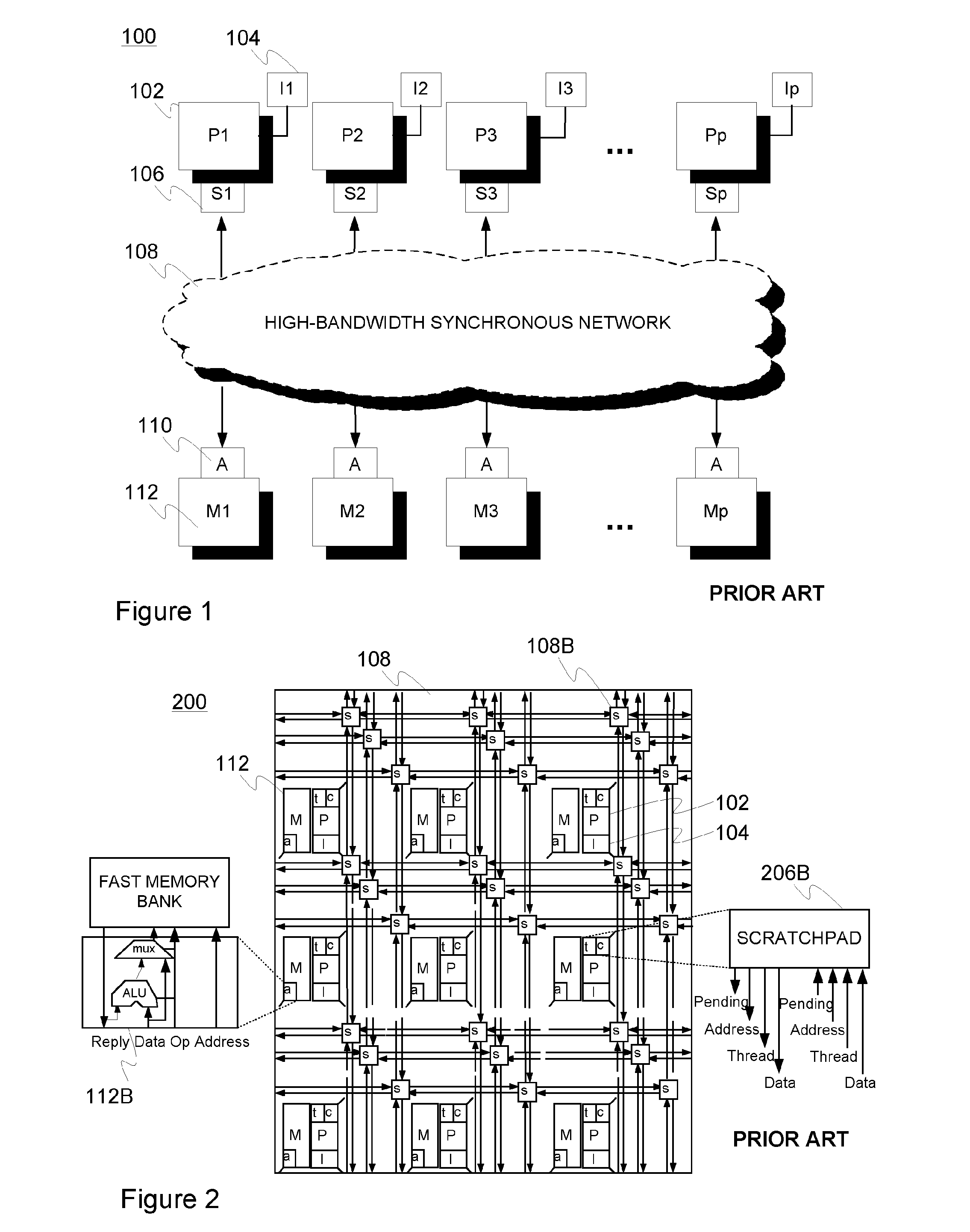

Status: Inactive | Publication: US20160110136A1 | Tags: Improve efficiency, Input/output to record carriers, Energy efficient computing, Shared memory architecture, Massively parallel

Techniques for a massively parallel and memory centric computing system. The system has a plurality of processing units operably coupled to each other through one or more communication channels. Each of the plurality of processing units has an ISMn interface device. Each of the plurality of ISMn interface devices is coupled to an ISMe endpoint connected to each of the processing units. The system has a plurality of DRAM or Flash memories configured in a disaggregated architecture and one or more switch nodes operably coupling the plurality of DRAM or Flash memories in the disaggregated architecture. The system has a plurality of high speed optical cables configured to communicate at a transmission rate of 100 G or greater to facilitate communication from any one of the plurality of processing units to any one of the plurality of DRAM or Flash memories.

Owner:RAMBUS INC

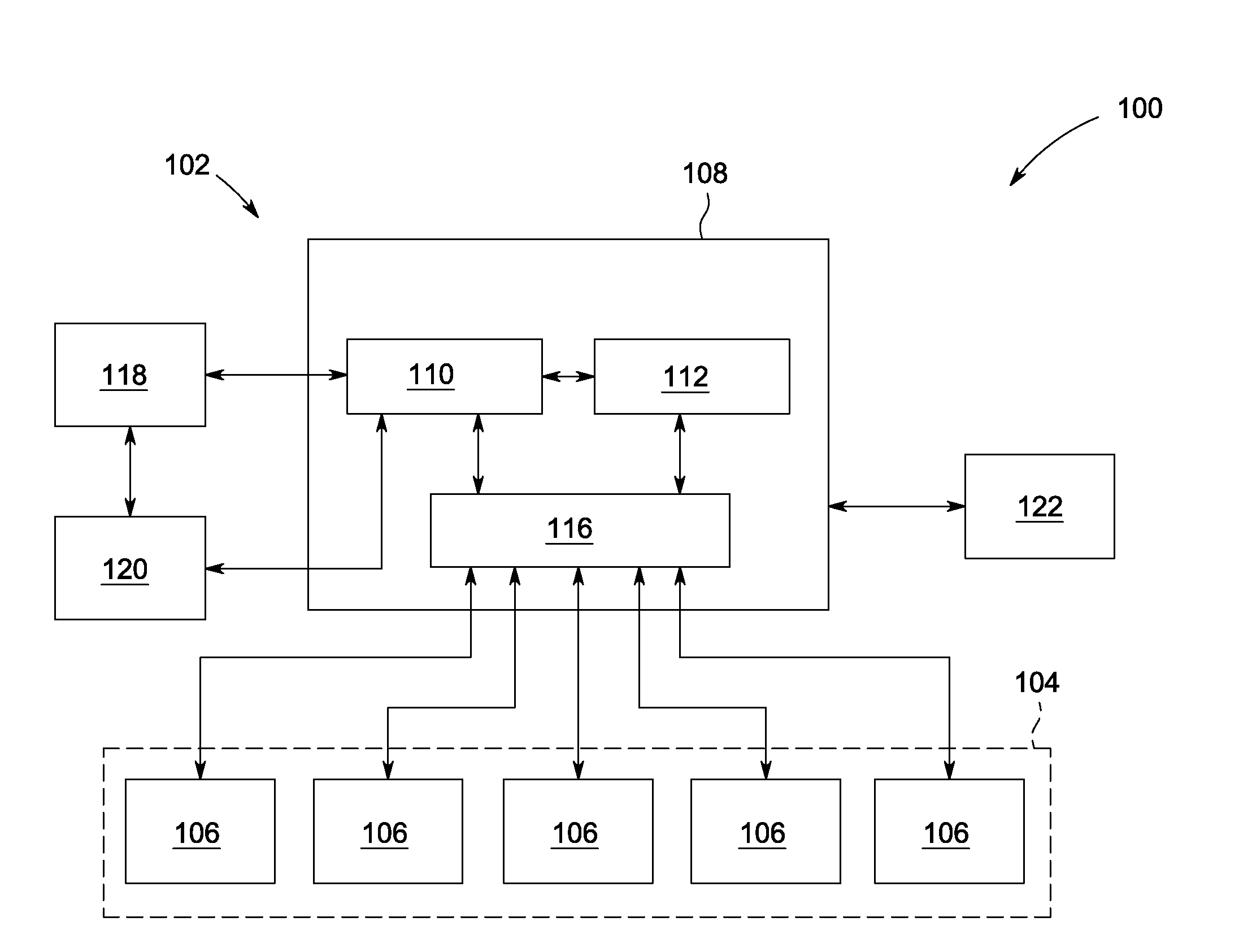

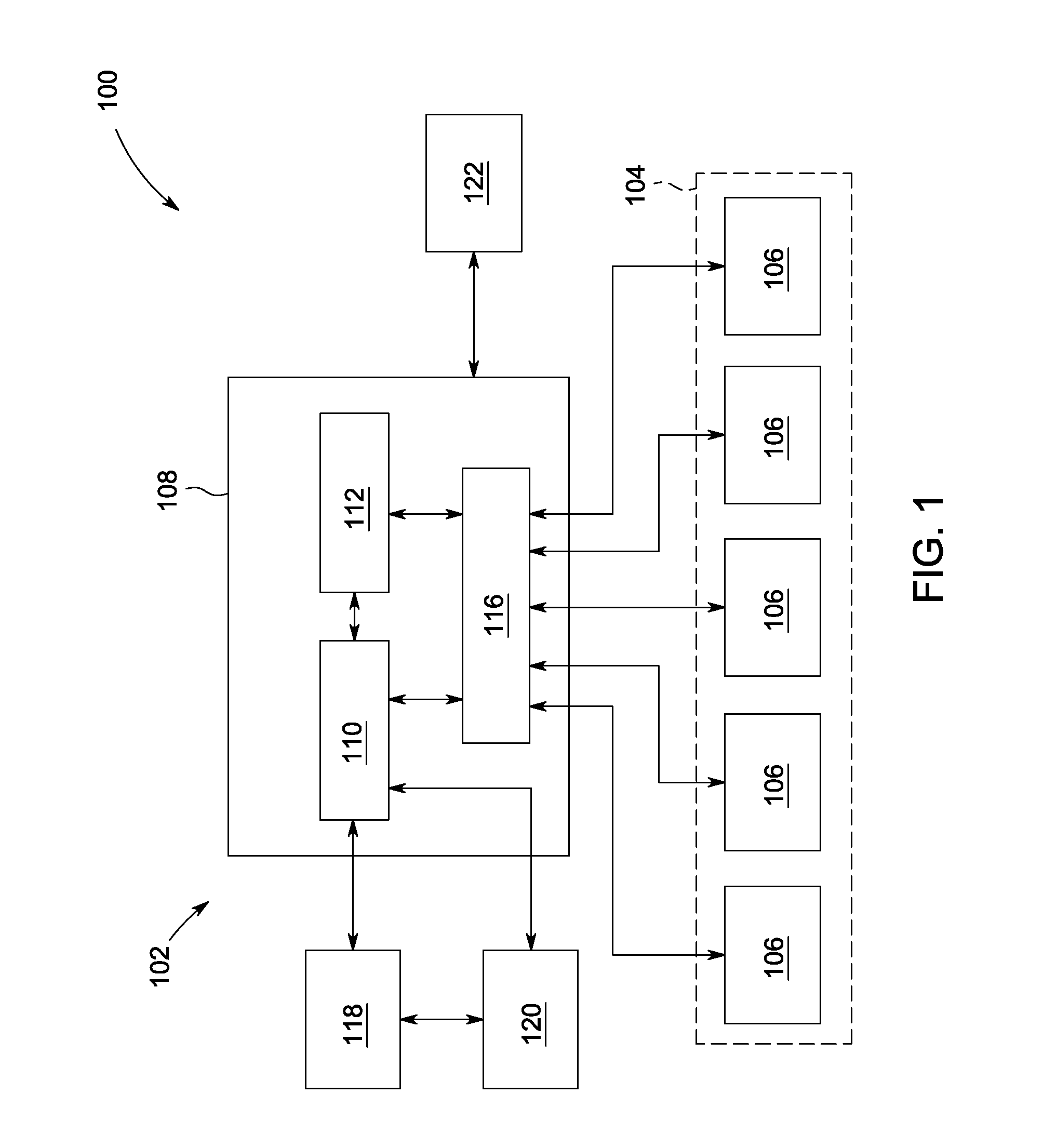

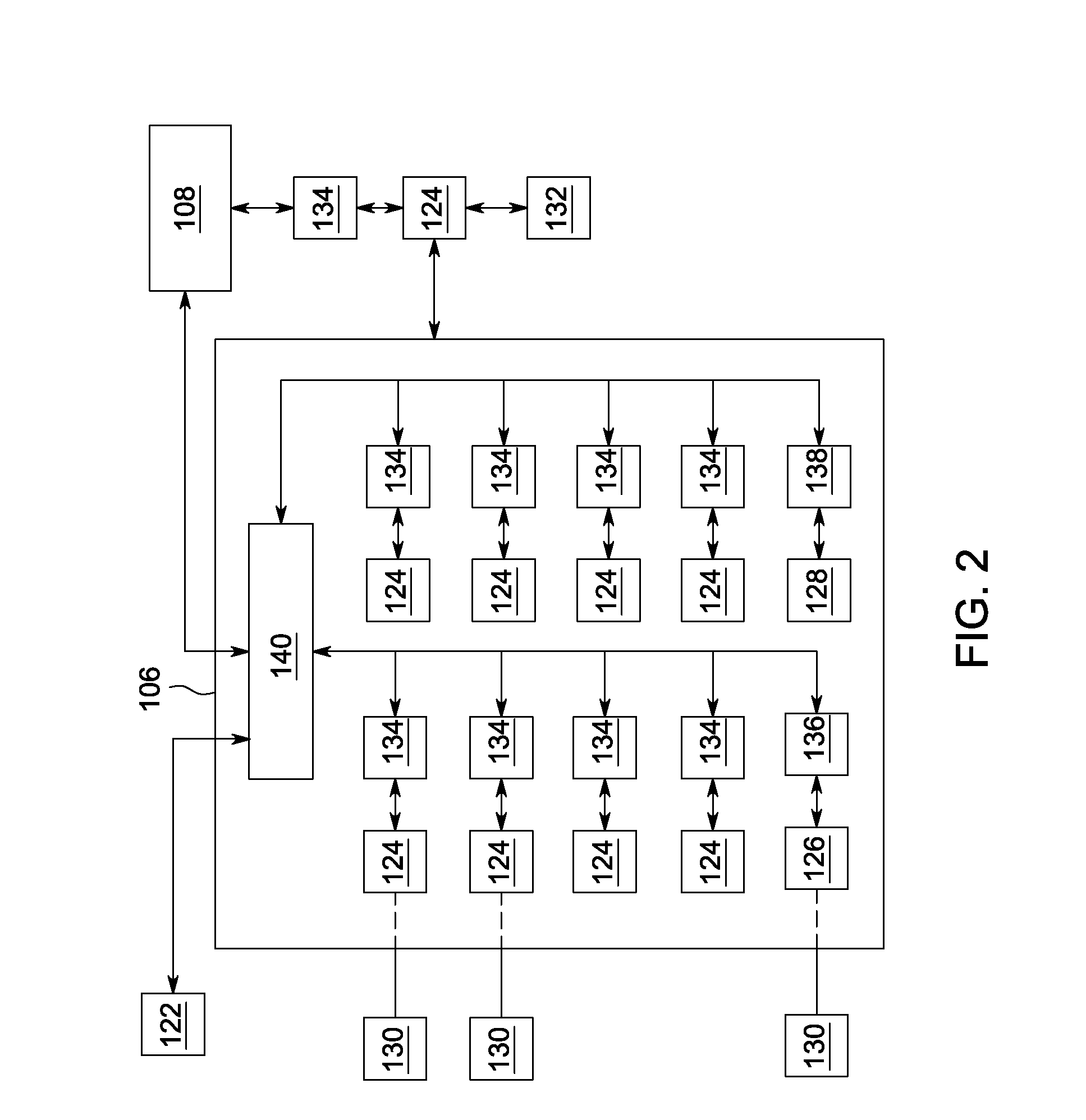

Shared memory architecture for protection of electrical distribution equipment

Status: Active | Publication: US20120120536A1 | Tags: Emergency protective arrangement details, Arrangements responsive to excess current, Shared memory architecture, Power equipment

A power equipment protection system includes a plurality of trip units including at least a first trip unit and a second trip unit, wherein each of the plurality of trip units is configured to monitor operation data. The system also includes a shared memory device coupled to the plurality of trip units via a network, wherein the shared memory device is configured to transmit a synchronization request to at least the second trip unit, receive a response from the second trip unit, including operation data associated with the second trip unit, receive a data request from the first trip unit, and transmit the operation data associated with the second trip unit to the first trip unit via the network.

Owner:ABB (SCHWEIZ) AG

Isolated shared memory architecture (iSMA)

Status: Active | Publication: US9250831B1 | Tags: Improve efficiency, Input/output to record carriers, Memory addressing/allocation/relocation, Shared memory architecture, Parallel computing

Techniques for a massively parallel and memory centric computing system. The system has a plurality of processing units operably coupled to each other through one or more communication channels. Each of the plurality of processing units has an ISMn interface device. Each of the plurality of ISMn interface devices is coupled to an ISMe endpoint connected to each of the processing units. The system has a plurality of DRAM or Flash memories configured in a disaggregated architecture and one or more switch nodes operably coupling the plurality of DRAM or Flash memories in the disaggregated architecture. The system has a plurality of high speed optical cables configured to communicate at a transmission rate of 100 G or greater to facilitate communication from any one of the plurality of processing units to any one of the plurality of DRAM or Flash memories.

Owner:RAMBUS INC

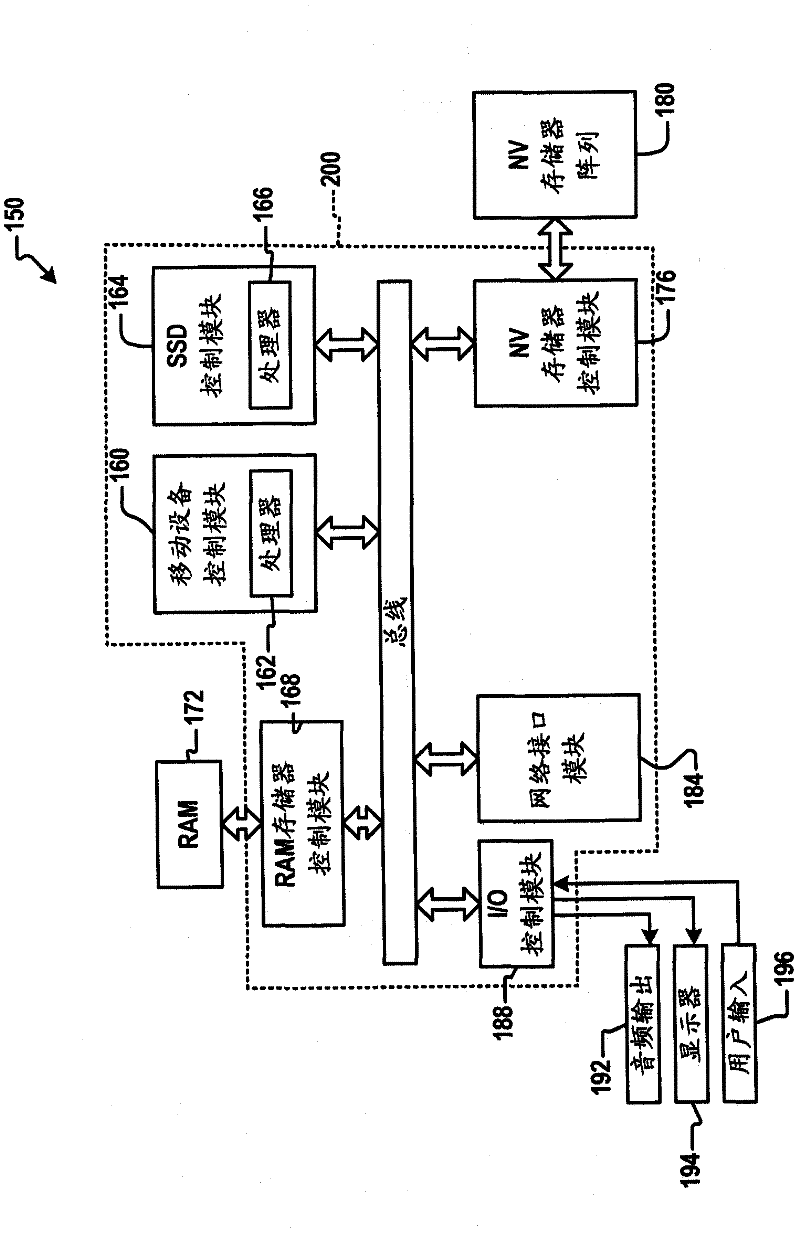

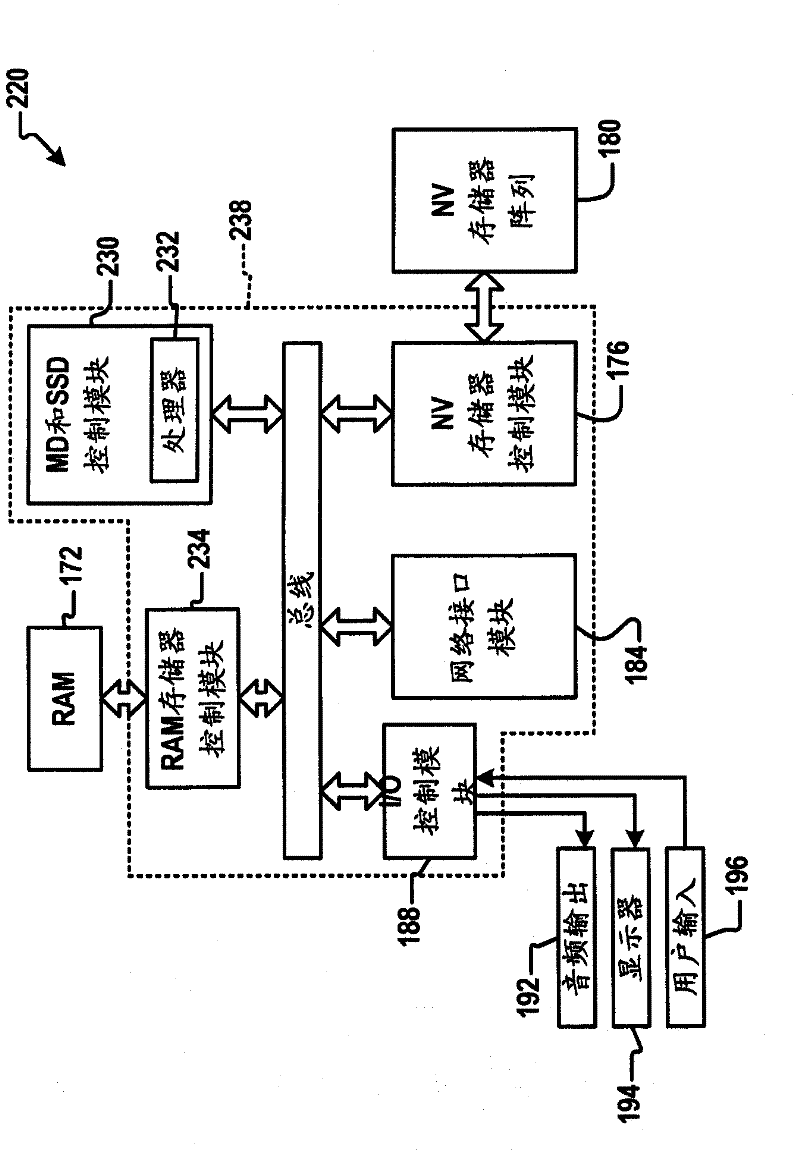

Combined mobile device and solid state disk with a shared memory architecture

Status: Inactive | Publication: CN102203746A | Tags: Memory addressing/allocation/relocation, Architecture with single central processing unit, Shared memory architecture, Static random-access memory

A mobile device includes a system-on-chip (SOC) that includes a mobile device control module, a solid state disk (SSD) control module, and a random access memory (RAM) control module. The mobile device control module executes application programs for the mobile device. The solid-state disk (SSD) control module controls SSD operations. The RAM control module communicates with the mobile device control module and the SSD control module and stores both SSD-related data and mobile device-related data in a single RAM.

Owner:MARVELL WORLD TRADE LTD

Multi-rate shared memory architecture for frame storage and switching

Status: Active | Publication: US7054312B2 | Tags: Efficiently storing data, Data efficient, Multiplex system selection arrangements, Data switching by path configuration, Shared memory architecture, Fibre Channel switch

Shared memory provides buffering and switching for all frames that flow through a Fibre Channel switch. Received frames are written to shared memory by the receiving port and then read from shared memory by the transmitting port. Shared memory allows data to be written to a buffer at one rate and read from it at a different rate, or vice versa.

Owner:AVAGO TECH INT SALES PTE LTD

© 2025 PatSnap. All rights reserved.