47 results for "Reduce access conflicts" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

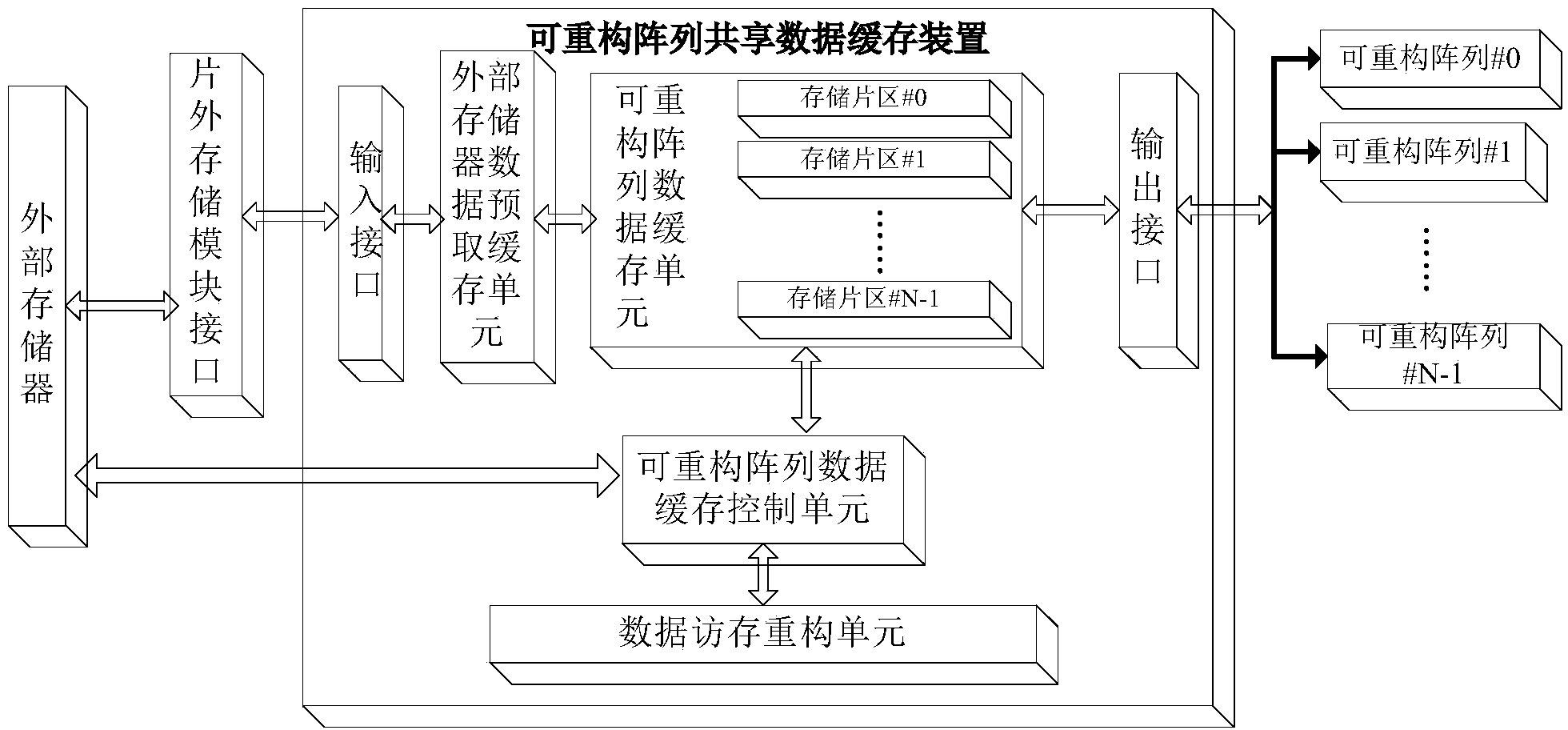

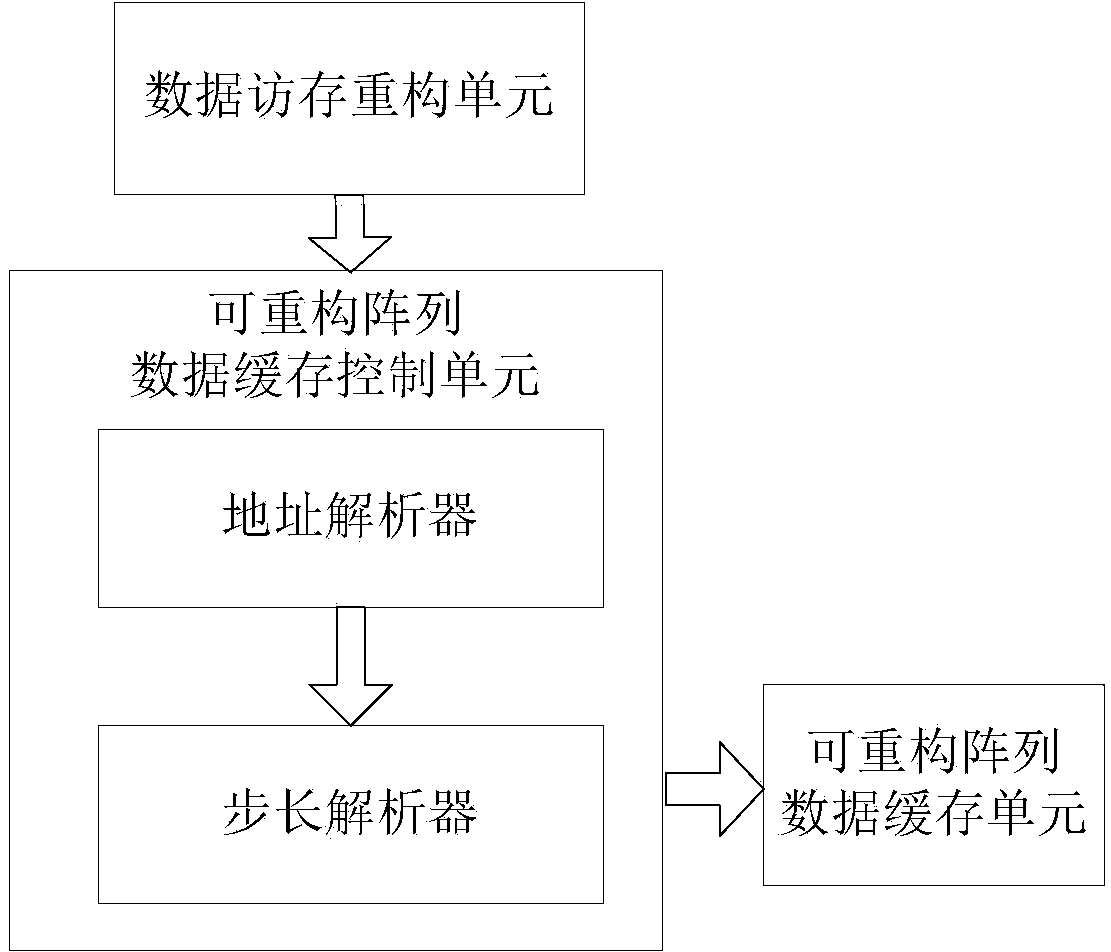

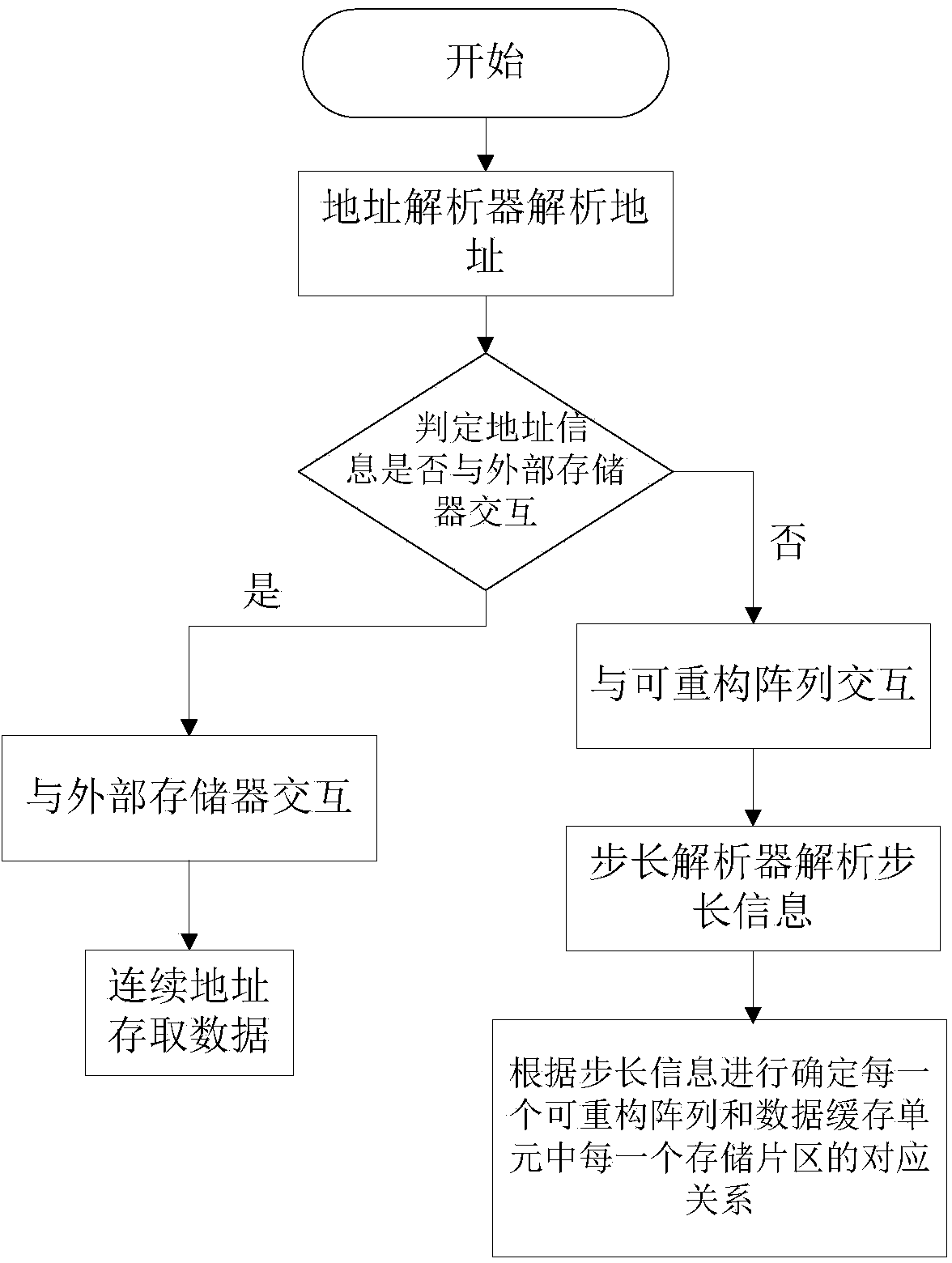

Shared data caching device for a plurality of coarse-grained dynamic reconfigurable arrays and control method

Active | CN103927270A | Change access method; Improve computing power; Memory addressing/allocation/relocation; External storage; Data memory

The invention discloses a shared data caching device for a plurality of coarse-grained dynamic reconfigurable arrays, and a control method for the device. The device comprises a reconfigurable array data caching control unit, a reconfigurable array data caching unit, an external memory data prefetching caching unit and a data memory access reconfiguration unit. The reconfigurable array data caching control unit controls data interaction between the reconfigurable arrays and the reconfigurable array data caching unit, and between the reconfigurable array data caching unit and an external memory; the reconfigurable array data caching unit stores data fetched from the external memory; the external memory data prefetching caching unit prefetches data that will be accessed into the reconfigurable array data caching unit; and the data memory access reconfiguration unit supplies the address information and step-length (stride) information needed by the reconfigurable array data caching unit. The control method achieves data sharing among the coarse-grained dynamic reconfigurable arrays in a reconfigurable system. The device and control method reduce access conflicts, shorten the data processing time of the reconfigurable system, and improve the computing performance of large-scale coarse-grained reconfigurable arrays.

Owner:SOUTHEAST UNIV
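
The abstract names the units but not their internals. As a rough illustration of how address and step-length (stride) information could drive prefetching into a shared cache, here is a minimal Python sketch. The class name, the LRU eviction policy, and the `fetch` callback standing in for external memory are all assumptions, not details of the patented device.

```python
from collections import OrderedDict


class StridePrefetchCache:
    """Toy shared data cache fed by a stride-based prefetcher (hypothetical model)."""

    def __init__(self, capacity, line_size, fetch):
        self.capacity = capacity      # number of cache lines
        self.line_size = line_size    # bytes per line
        self.fetch = fetch            # callable(line_addr) -> data; stands in for external memory
        self.lines = OrderedDict()    # line_addr -> data, oldest use first

    def _line(self, addr):
        return addr - addr % self.line_size

    def _install(self, line_addr):
        if line_addr in self.lines:
            self.lines.move_to_end(line_addr)    # refresh recency
            return
        if len(self.lines) >= self.capacity:
            self.lines.popitem(last=False)       # evict least recently used line
        self.lines[line_addr] = self.fetch(line_addr)

    def prefetch(self, base, stride, count):
        """Load the lines an array will touch, given a base address and a stride."""
        for i in range(count):
            self._install(self._line(base + i * stride))

    def read(self, addr):
        """Return (hit, data) for the line containing addr, filling it on a miss."""
        line_addr = self._line(addr)
        hit = line_addr in self.lines
        self._install(line_addr)
        return hit, self.lines[line_addr]
```

Prefetching the strided lines ahead of time turns later reads into hits in this model. A real design would also arbitrate between several arrays sharing the cache, which the sketch leaves out.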

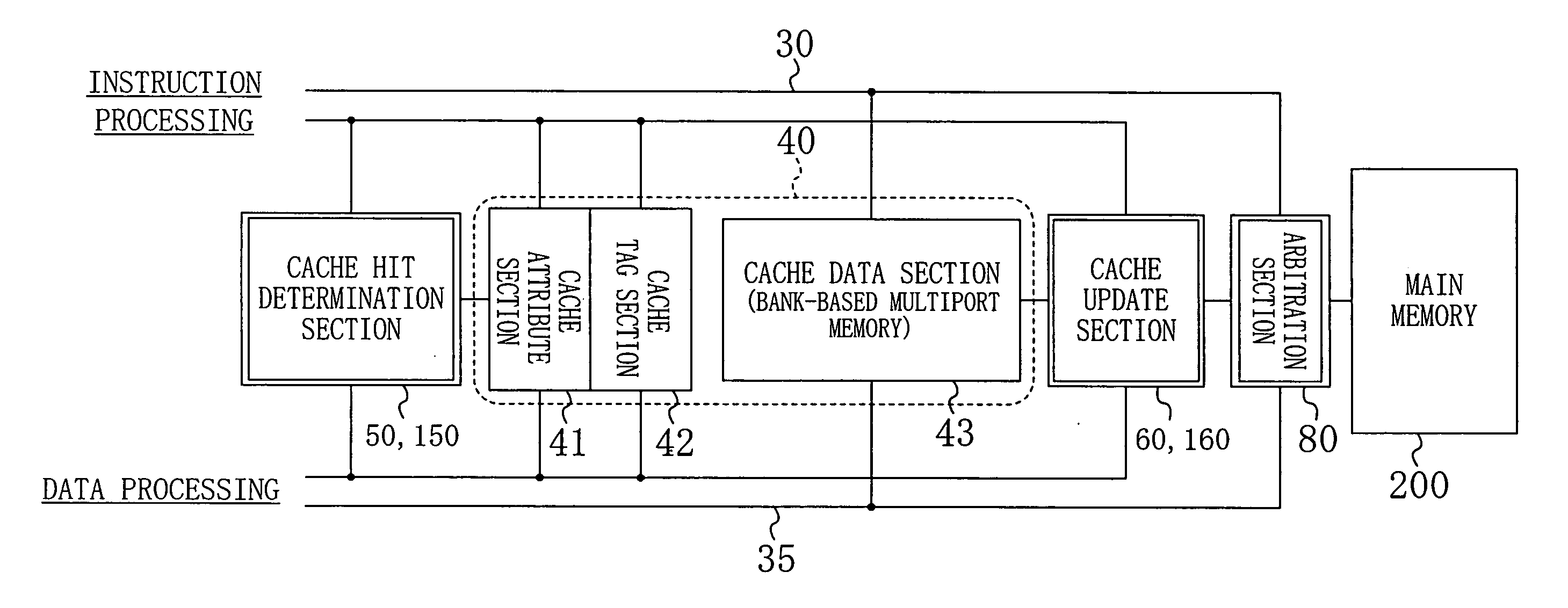

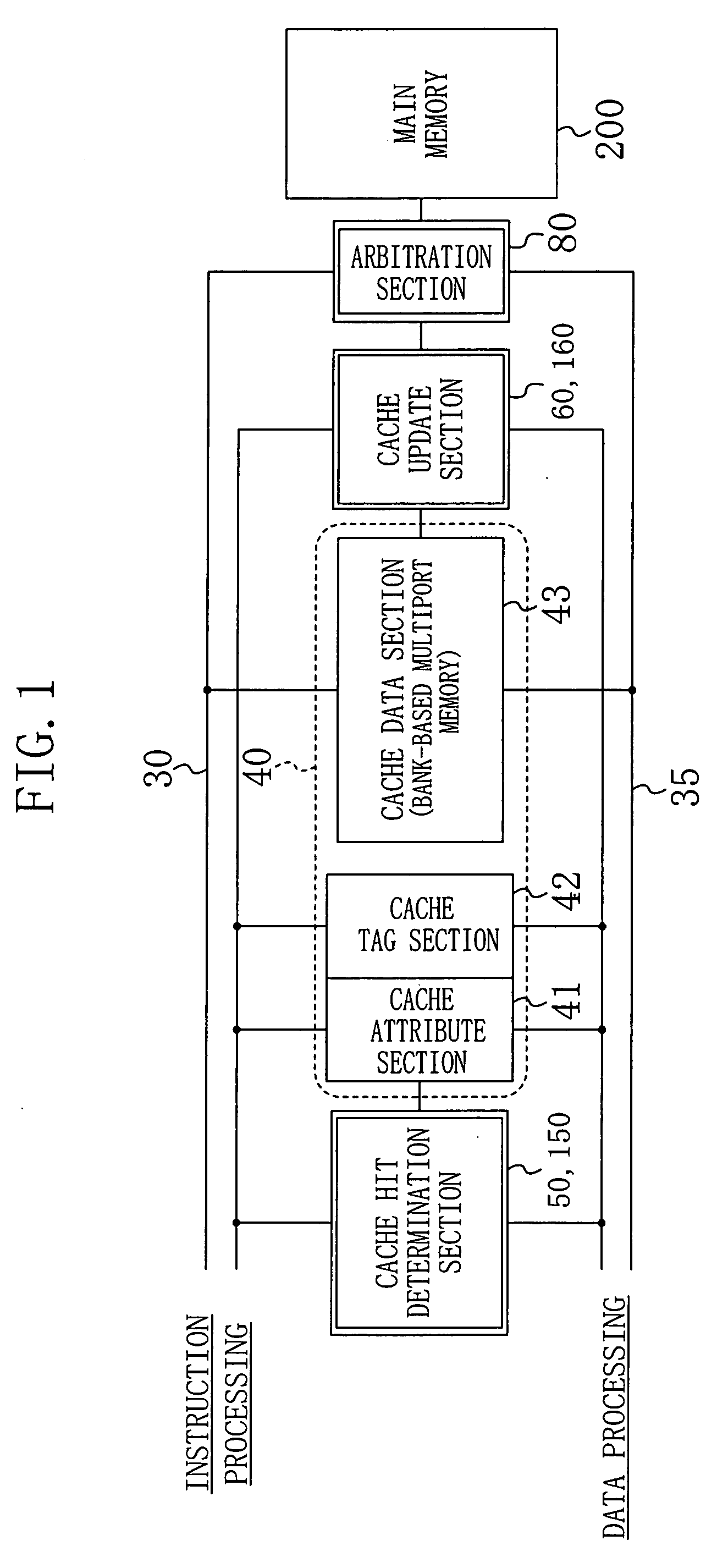

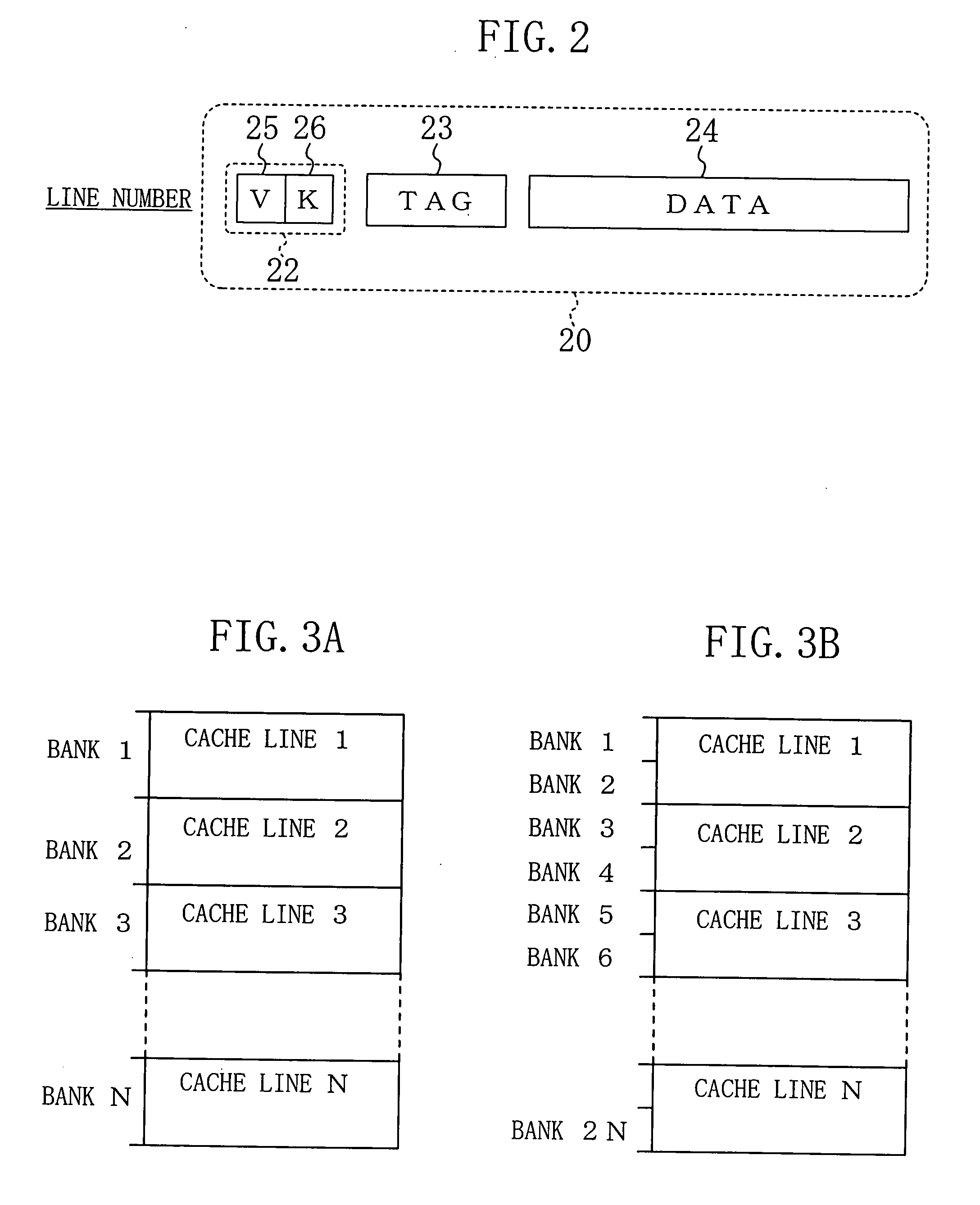

Cache memory system

Inactive | US20080016282A1 | Avoid it happening again; Reduce equipment costs; Memory systems; Parallel computing; Data storing

A cache memory system includes: a plurality of cache lines, each including a data section for storing data from main memory and a line classification section for storing identification information that indicates whether the data stored in the data section is for instruction processing or for data processing; a cache hit determination section for determining whether there is a cache hit by using the identification information stored in each of the cache lines; and a cache update section for updating the cache line that needs to be updated, according to the result of the determination.

Owner:PANASONIC CORP
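
The hit rule can be sketched in a few lines: a lookup hits only when the line is valid, the tag matches, and the stored classification matches the kind of access. This minimal Python model assumes a direct-mapped organization, which the abstract does not specify. The class and field names are hypothetical.

```python
from dataclasses import dataclass

INSTRUCTION, DATA = "I", "D"


@dataclass
class CacheLine:
    valid: bool = False
    tag: int = -1
    kind: str = DATA       # line classification: instruction or data
    data: object = None


class ClassifiedCache:
    """Direct-mapped toy cache whose lines record whether they hold instructions or data."""

    def __init__(self, num_lines, main_memory):
        self.lines = [CacheLine() for _ in range(num_lines)]
        self.memory = main_memory

    def access(self, addr, kind):
        """Return (hit, data); on a miss, refill the line and record its classification."""
        index, tag = addr % len(self.lines), addr // len(self.lines)
        line = self.lines[index]
        # A hit requires a valid line, a matching tag AND a matching classification.
        if line.valid and line.tag == tag and line.kind == kind:
            return True, line.data
        self.lines[index] = CacheLine(True, tag, kind, self.memory[addr])
        return False, self.lines[index].data
```

Because the classification is part of the hit check, an instruction fetch never hits on a line filled by a data access in this model, even at the same address.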

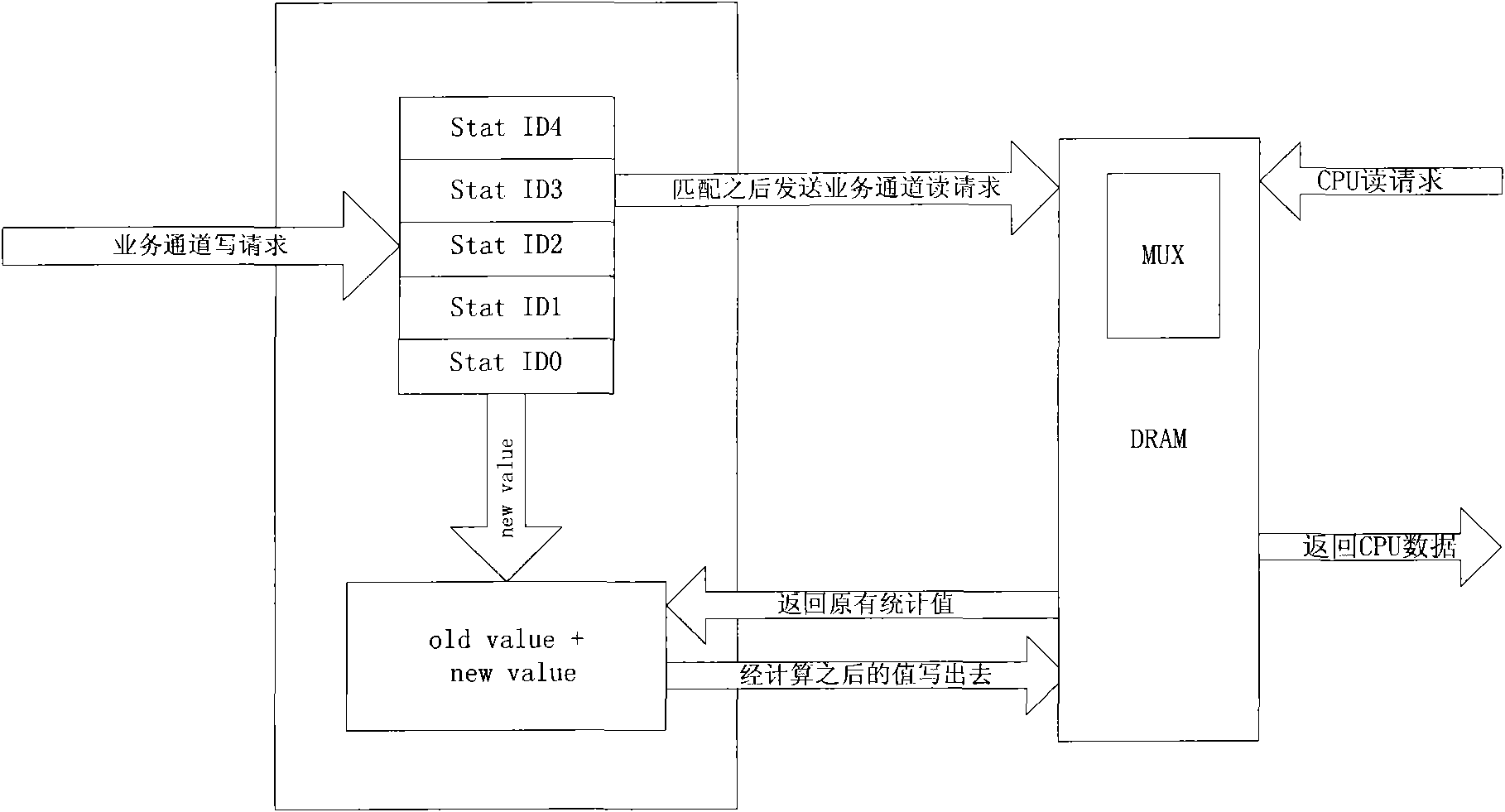

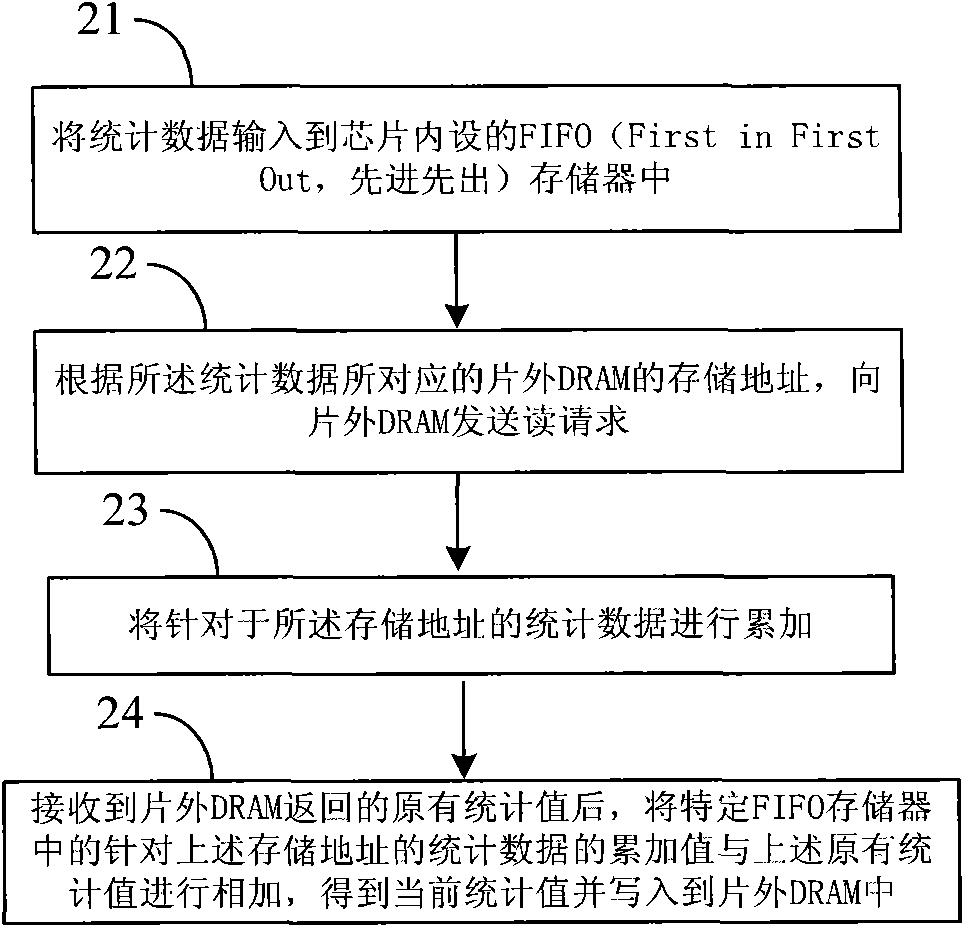

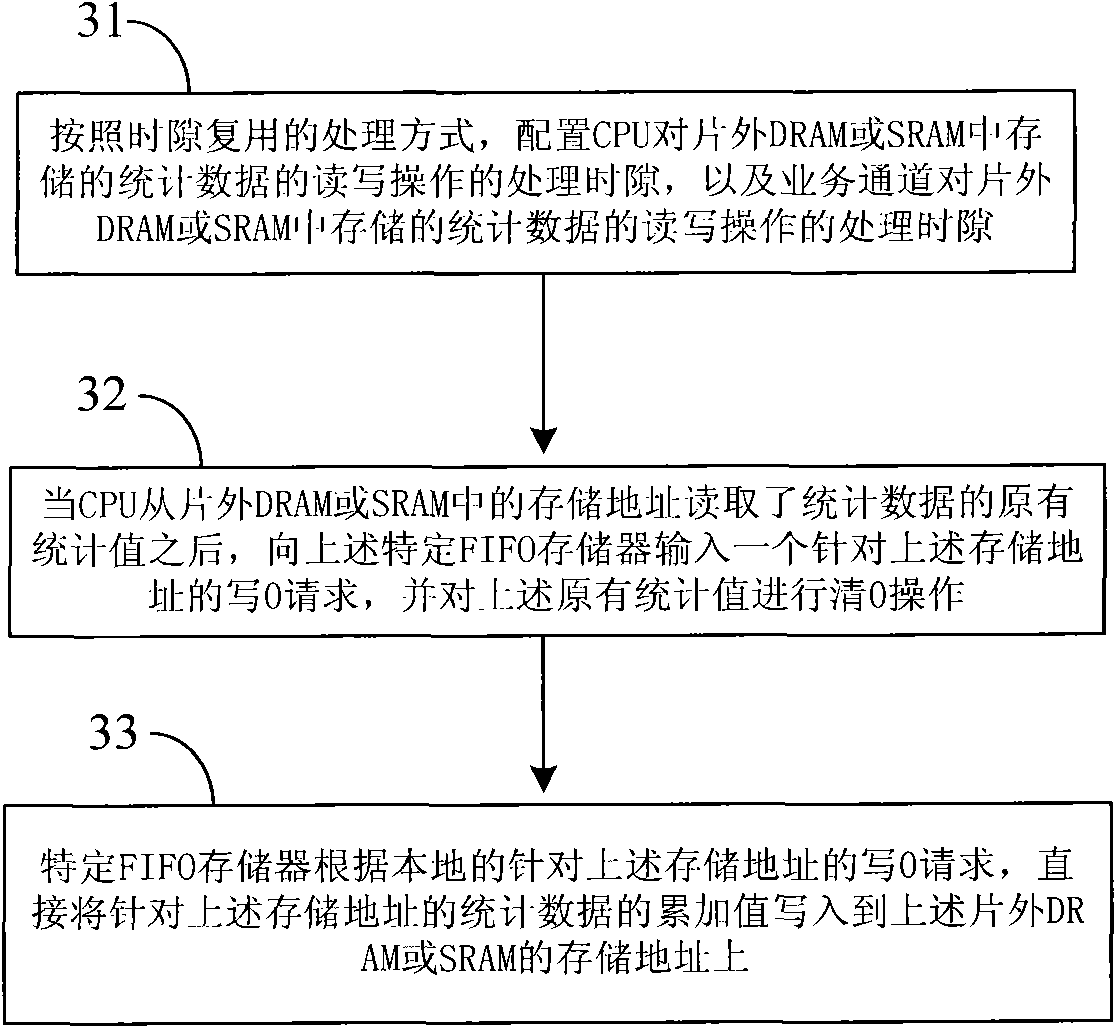

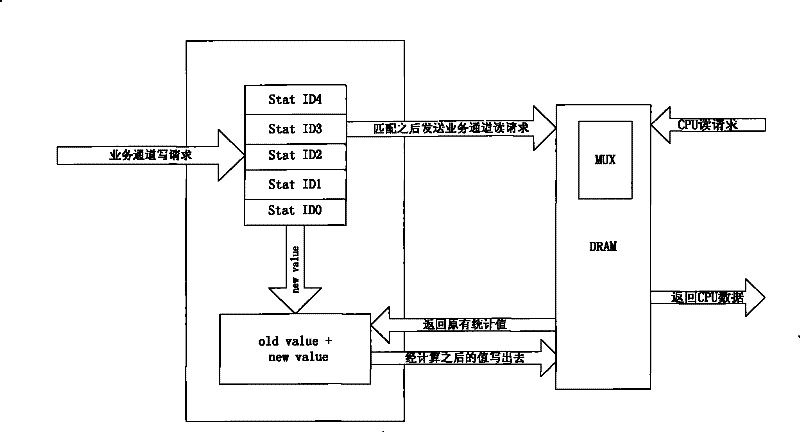

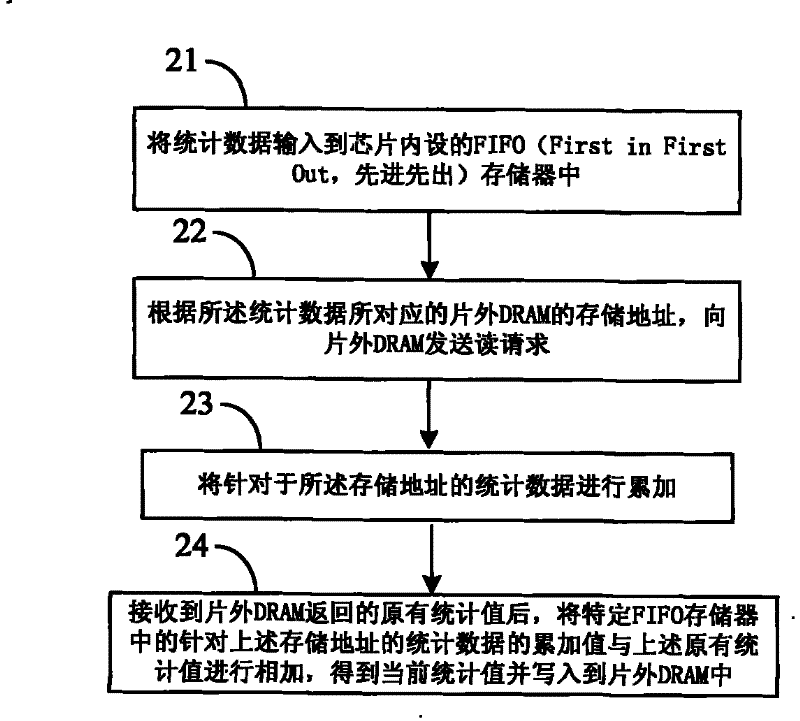

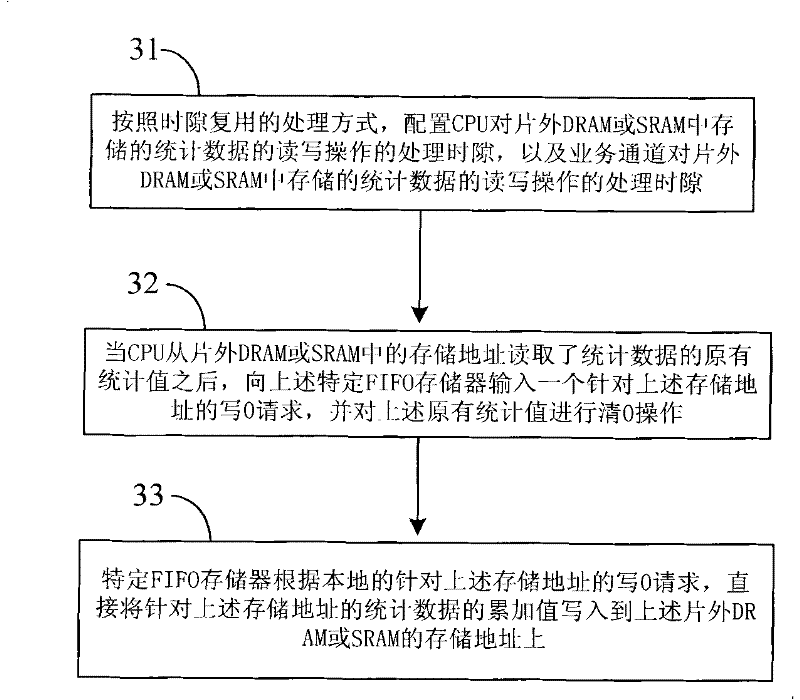

Management method and management device for statistical data of chip

Inactive | CN101848135A | Easy to handle; Reduce read and write operations; Memory addressing/allocation/relocation; Data switching networks; Memory address; Computer science

The embodiment of the invention provides a management method and a management device for statistical data of a chip. The method mainly comprises the following steps: accumulating statistical data corresponding to the same memory address in an off-chip memory device to obtain an accumulated value; obtaining the original statistical value stored at that memory address in the off-chip memory device; adding the accumulated value to the original statistical value to obtain a current statistical value; and writing the current statistical value back to the same memory address in the off-chip memory device. With the invention, multiple statistical data items for the same memory address can be written to the off-chip memory in a single write operation. Read and write operations on the off-chip memory are therefore effectively buffered, bank access conflicts in the off-chip memory are reduced, and the chip's capacity for processing statistical data is greatly improved.

Owner:HUAWEI TECH CO LTD
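
The accumulate-then-write-once idea maps naturally onto a small read-modify-write loop. This Python sketch makes two assumptions: on-chip accumulation happens in a dictionary, and a second dictionary stands in for the off-chip memory. Both stand-ins and the function name are hypothetical.

```python
from collections import defaultdict


def flush_statistics(events, off_chip):
    """Fold many counter updates into one read-modify-write per address.

    events: iterable of (address, increment) pairs produced on chip.
    off_chip: dict standing in for the off-chip memory (address -> value).
    Returns the number of off-chip writes performed.
    """
    pending = defaultdict(int)
    for addr, inc in events:              # accumulate on chip, no off-chip traffic
        pending[addr] += inc
    writes = 0
    for addr, acc in pending.items():
        original = off_chip.get(addr, 0)  # read the original statistic once
        off_chip[addr] = original + acc   # add and write back once
        writes += 1
    return writes
```

In this model, four on-chip updates that touch two addresses cost two off-chip writes instead of four.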

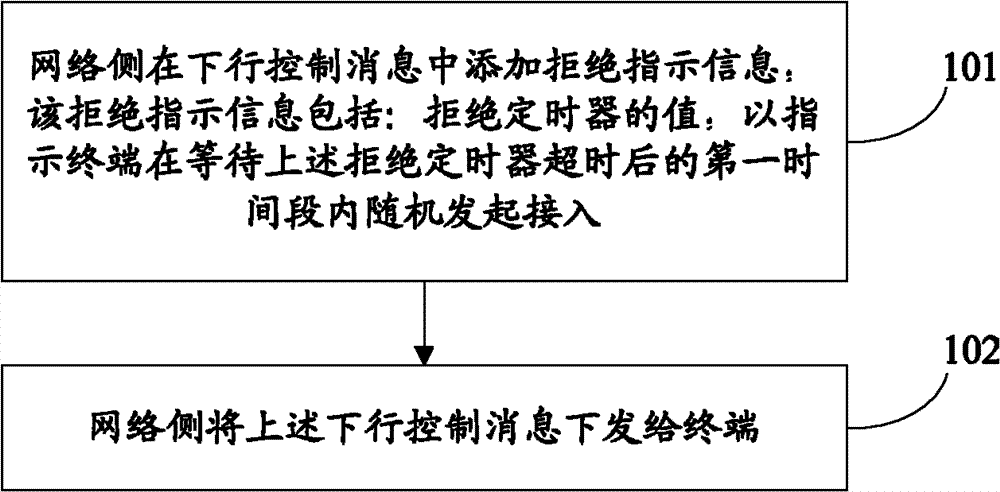

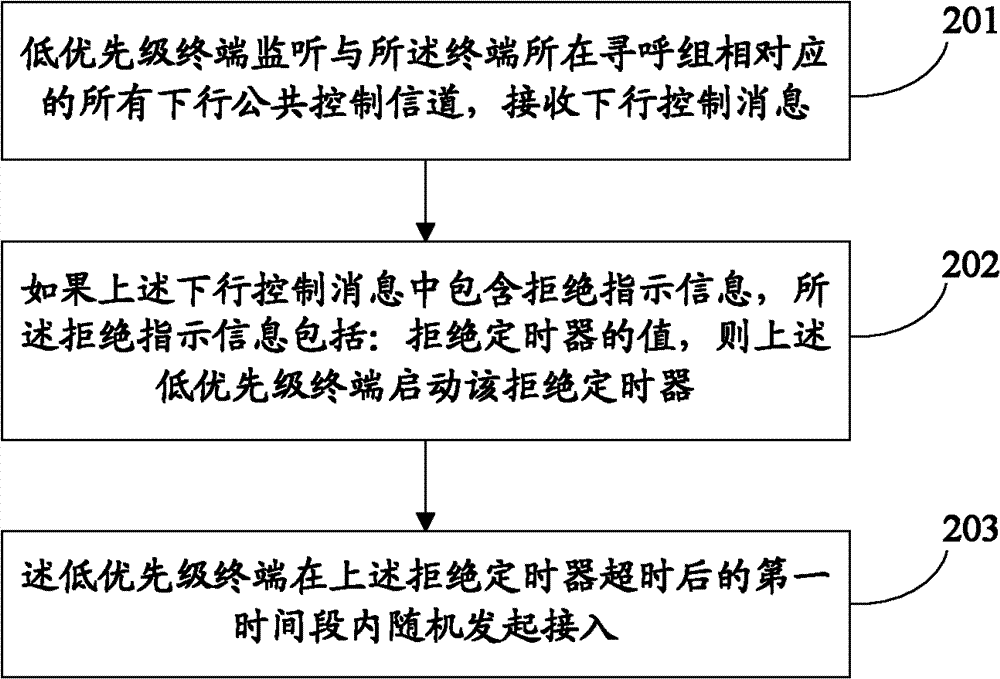

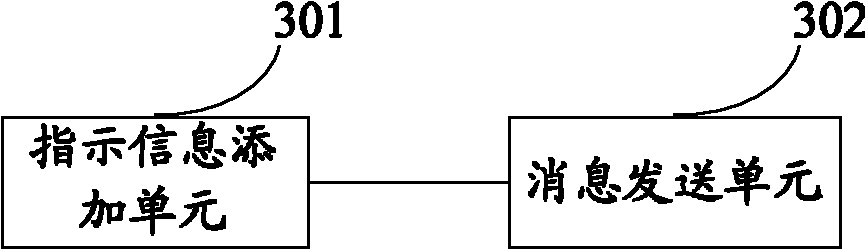

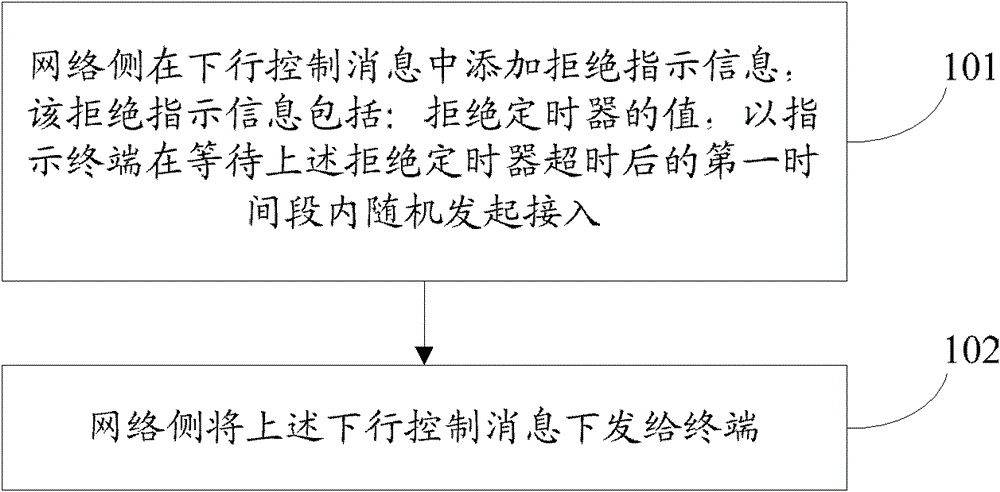

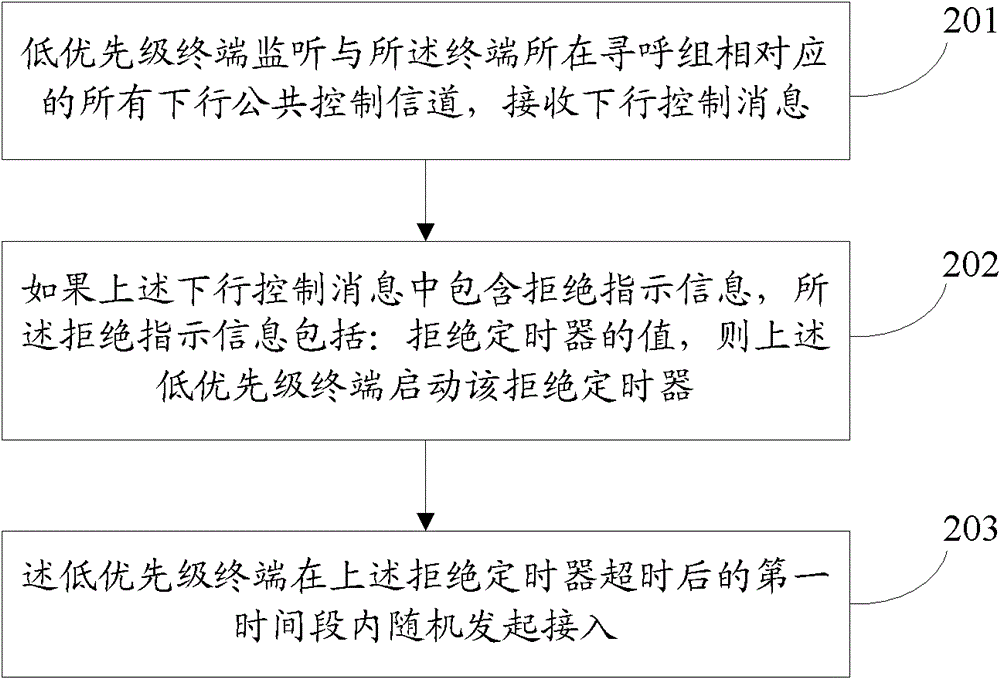

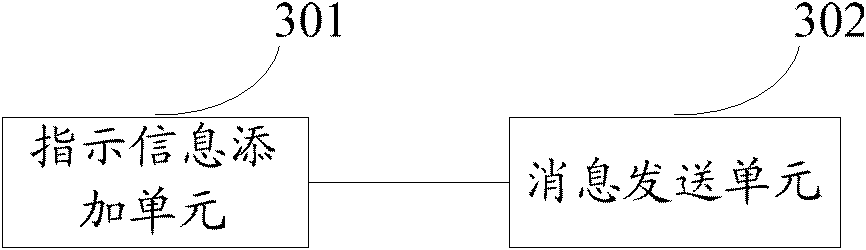

Method and device for controlling terminal access and method and device for terminal access

The invention relates to the technical field of communication and discloses a method and a device for controlling terminal access. In the method, the network side adds a rejection indication to a downlink control message. The rejection indication contains a rejection timer value and instructs the terminal to initiate access at a random time within a first time period after the rejection timer expires. The network side then sends the downlink control message to the terminal. The invention also discloses a method and a device for terminal access. The method and device allow terminal access to be controlled effectively and reduce access conflicts.

Owner:HUAWEI TECH CO LTD
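
The terminal-side behavior can be sketched as a simple scheduling rule: wait out the rejection timer, then pick a random instant inside the following window. This Python sketch assumes the timer value and the window length (the abstract's "first time period") share one time unit. The function name is hypothetical.

```python
import random


def next_access_time(now, reject_timer, window, rng=random):
    """Pick a random access instant in [now + reject_timer, now + reject_timer + window).

    reject_timer: rejection timer value from the downlink control message (assumed unit).
    window: length of the "first time period" after the timer expires (same unit).
    rng: any object with a random() method returning a float in [0, 1).
    """
    start = now + reject_timer            # no access attempt before the timer expires
    return start + rng.random() * window  # spread retries uniformly over the window
```

Randomizing within the window spreads the retries of terminals that were rejected together, which lowers the chance that they collide again.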

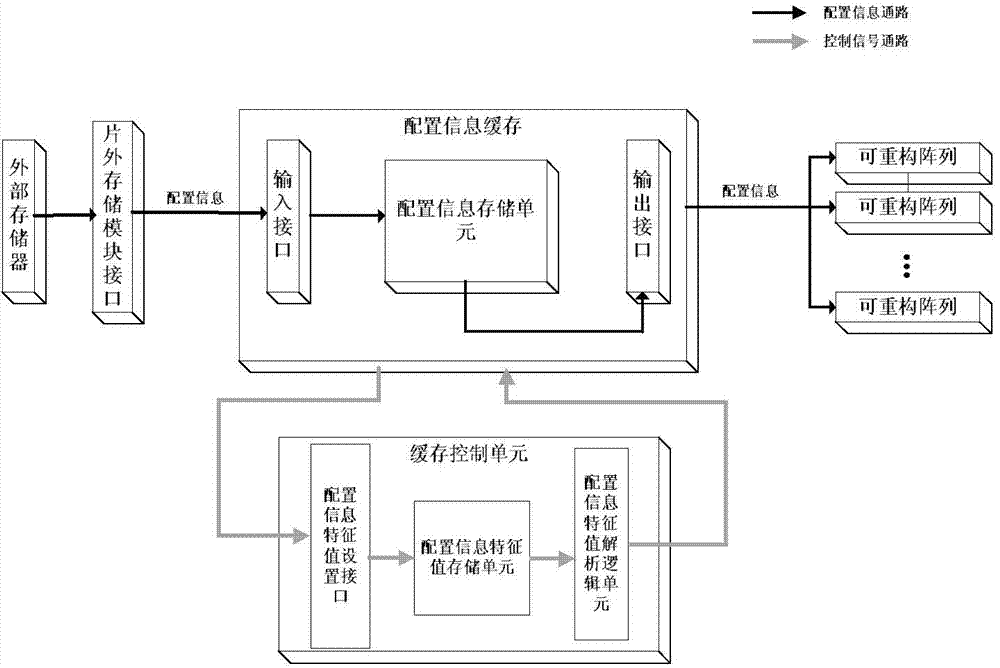

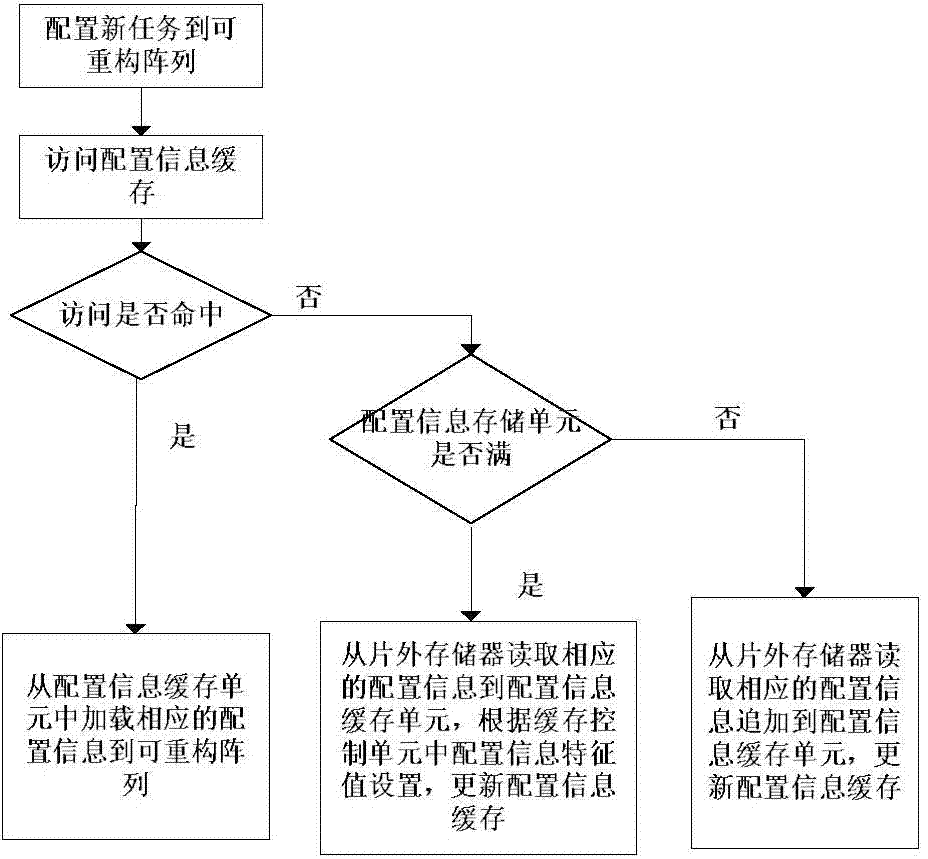

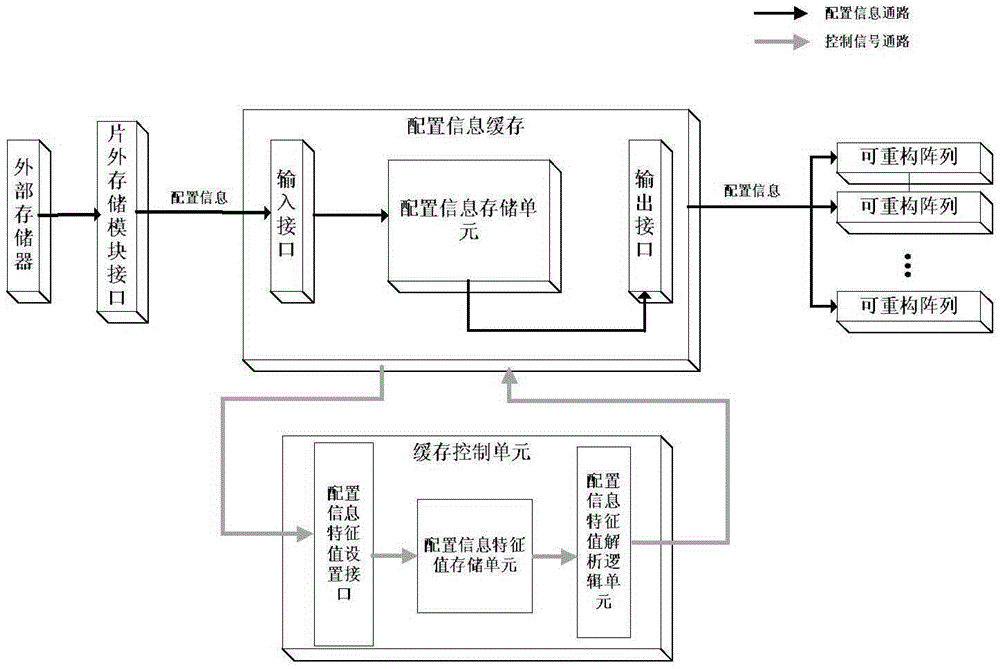

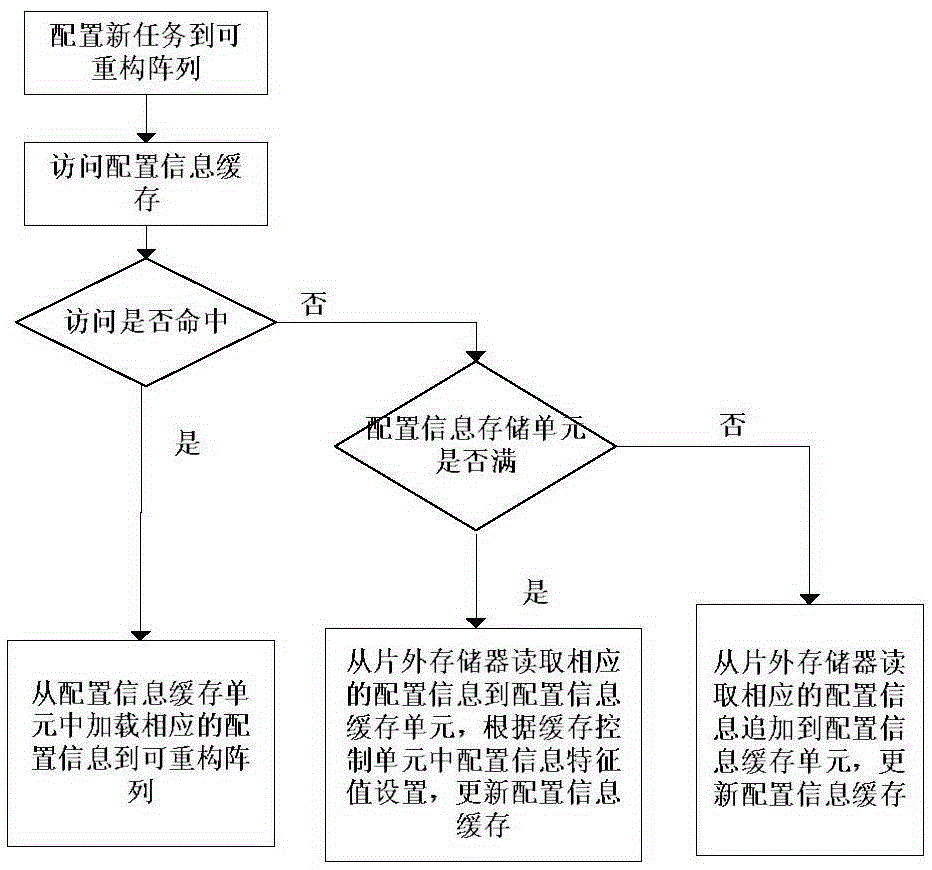

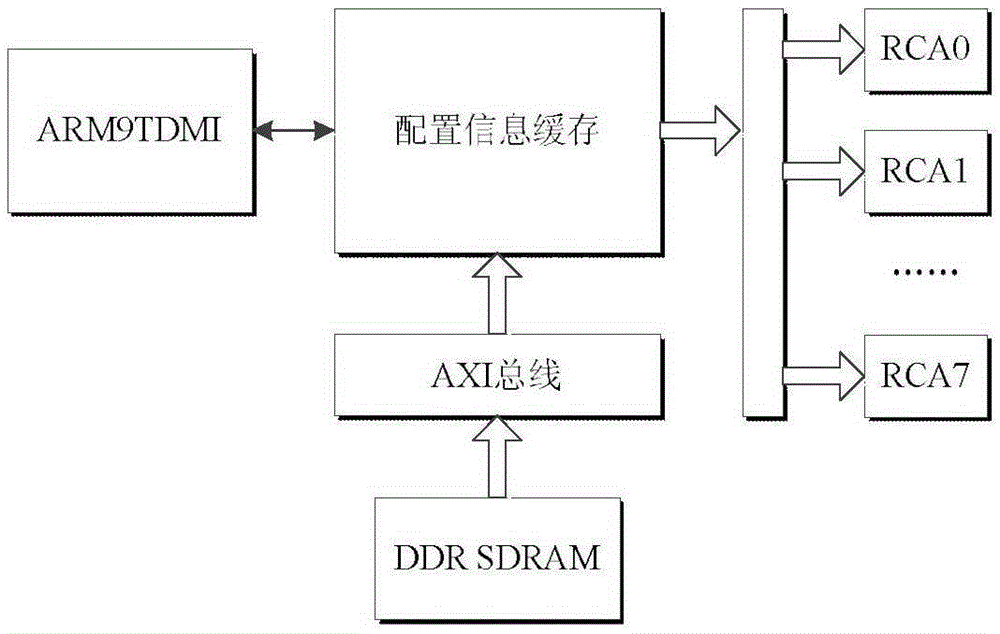

Controller for realizing configuration information cache update in reconfigurable system

Active | CN103488585A | Improve dynamic refactoring efficiency; Increase profit; Memory addressing/allocation/relocation; Program loading/initiating; External storage; Memory interface

The invention discloses a controller for realizing configuration information cache update in a reconfigurable system. The controller comprises a configuration information cache unit, an off-chip memory interface module and a cache control unit. The configuration information cache unit caches configuration information that one or more reconfigurable arrays may use within a period of time; the off-chip memory interface module reads configuration information from an external memory and transfers it to the configuration information cache unit; and the cache control unit controls the reconfiguration process of the reconfigurable arrays. The reconfiguration process includes: mapping subtasks of an algorithm application to a reconfigurable array; setting a priority strategy for the configuration information cache unit; and replacing configuration information in the configuration information cache unit according to an LRU_FRQ replacement strategy. The invention further provides a method for realizing configuration information cache update in the reconfigurable system. Updating the cache with the LRU_FRQ replacement strategy changes the traditional way the configuration information cache is updated and improves the dynamic reconfiguration efficiency of the reconfigurable system.

Owner:SOUTHEAST UNIV
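
The abstract does not define LRU_FRQ beyond its name. One plausible reading combines use frequency with recency: evict the least frequently used configuration and break ties by least recent use. The Python sketch below implements that reading. The policy and class name are assumptions for illustration, not the patented strategy.

```python
class LruFrqCache:
    """Hypothetical LRU/frequency hybrid: evict the least frequently used entry,
    breaking ties by least recent use. Not the patented LRU_FRQ definition."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.clock = 0
        self.entries = {}   # config id -> [use count, last use time]

    def access(self, config_id):
        """Touch a configuration; return the evicted id, or None if nothing was evicted."""
        self.clock += 1
        if config_id in self.entries:
            self.entries[config_id][0] += 1
            self.entries[config_id][1] = self.clock
            return None
        victim = None
        if len(self.entries) >= self.capacity:
            # Lowest (count, last_use) pair: fewest uses first, then oldest use.
            victim = min(self.entries, key=lambda k: tuple(self.entries[k]))
            del self.entries[victim]
        self.entries[config_id] = [1, self.clock]
        return victim
```

Under this policy, frequently reused configurations survive bursts of one-off loads, which plain LRU would not guarantee.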

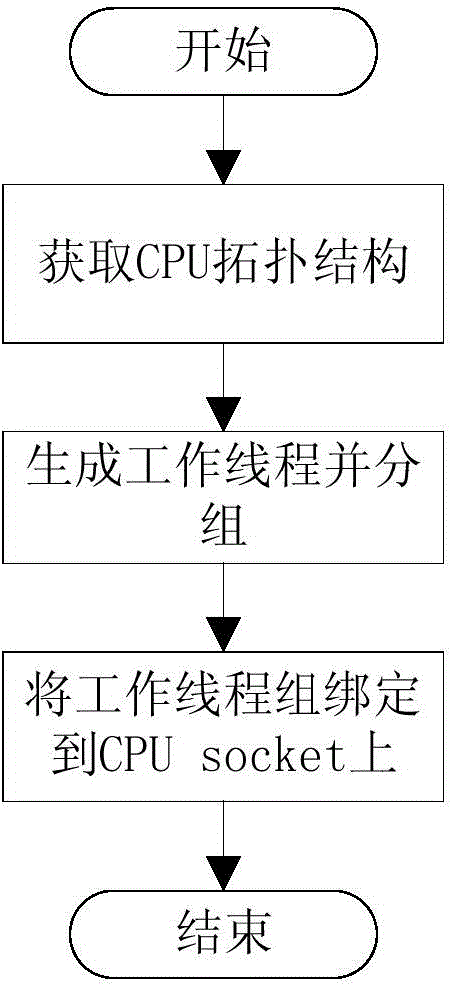

Parallel breadth-first search method based on shared memory system structure

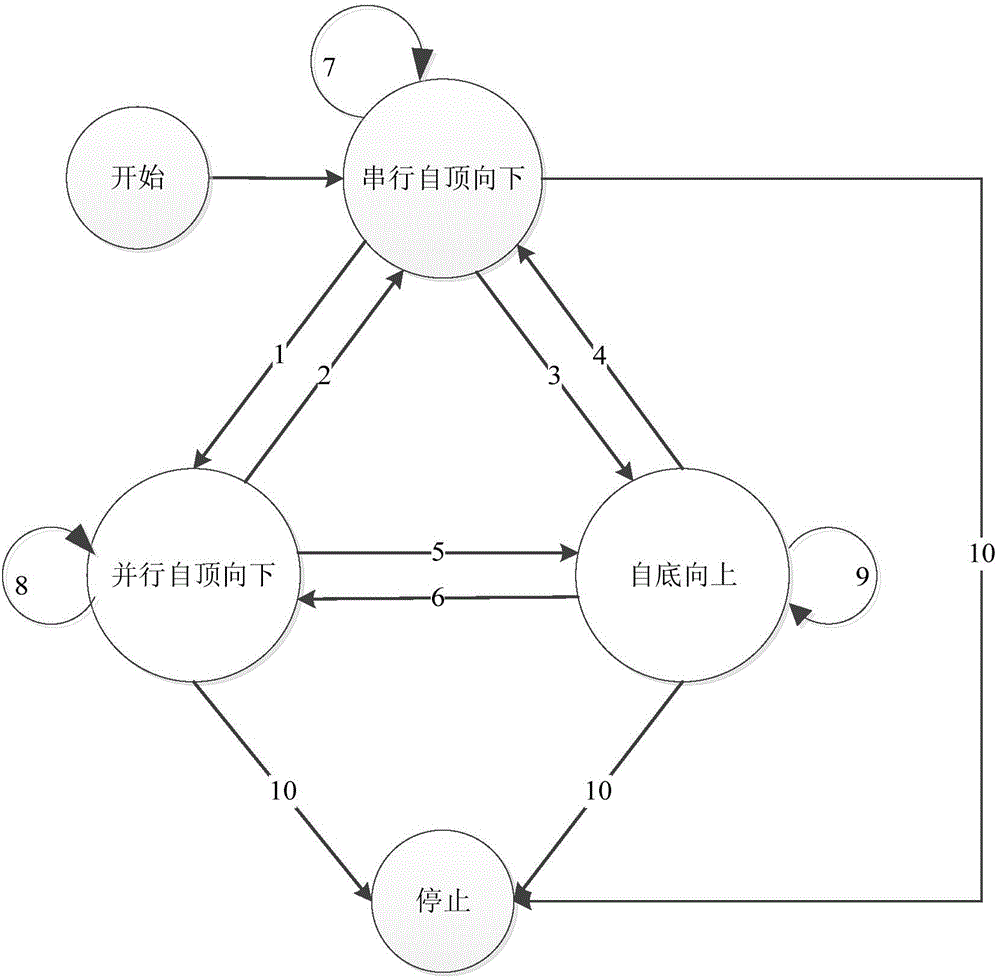

Inactive | CN106033442A | Reduce access conflicts; Improve memory access efficiency; Special data processing applications; CPU socket; Shared memory architecture

The invention discloses a parallel breadth-first search method based on a shared memory architecture. The method comprises a worker thread grouping and binding process, a data interval partitioning process, and a task execution process. Specifically: the CPU topology of the operating environment is obtained, and worker threads are partitioned into groups and bound; the data is partitioned into intervals, each assigned to a worker thread group; and tasks are executed level by level using hierarchical traversal. During execution, the method automatically selects a suitable execution state from a serial top-down state, a parallel top-down state, and a bottom-up state, and task execution ends when the number of activated vertices in a level is zero. Task partitioning reduces communication among CPU sockets and eliminates data consistency maintenance among them, while data binding and automatic state switching improve data reading efficiency, thereby improving program efficiency.

Owner:PEKING UNIV
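
The per-level state selection can be sketched as a sequential Python BFS that chooses among the three states named in the abstract. The switching thresholds `small` and `alpha` are hypothetical. The parallel top-down branch runs sequentially here, and thread grouping and socket binding are omitted entirely.

```python
def bfs_levels(adj, source, small=4, alpha=0.25):
    """Level-synchronous BFS that picks an execution state for each level.

    adj: list of neighbor lists (undirected graph).
    small, alpha: hypothetical switching thresholds (the patent's rules are not given).
    Returns (distance list, list of states used per level).
    """
    n = len(adj)
    dist = [-1] * n
    dist[source] = 0
    frontier = [source]
    states, level = [], 0
    while frontier:                        # stop when a level activates no vertex
        if len(frontier) <= small:
            state = "serial-top-down"
        elif len(frontier) < alpha * n:
            state = "parallel-top-down"    # would be split across worker groups
        else:
            state = "bottom-up"
        states.append(state)
        nxt = []
        if state == "bottom-up":
            # Each unvisited vertex stops at the first parent found in the frontier.
            in_frontier = set(frontier)
            for v in range(n):
                if dist[v] == -1 and any(u in in_frontier for u in adj[v]):
                    dist[v] = level + 1
                    nxt.append(v)
        else:
            # Each frontier vertex pushes to its unvisited neighbors.
            for u in frontier:
                for v in adj[u]:
                    if dist[v] == -1:
                        dist[v] = level + 1
                        nxt.append(v)
        frontier, level = nxt, level + 1
    return dist, states
```

Bottom-up steps pay off when the frontier is large, because each unvisited vertex can stop after finding one parent. Top-down steps are cheaper when the frontier is small.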

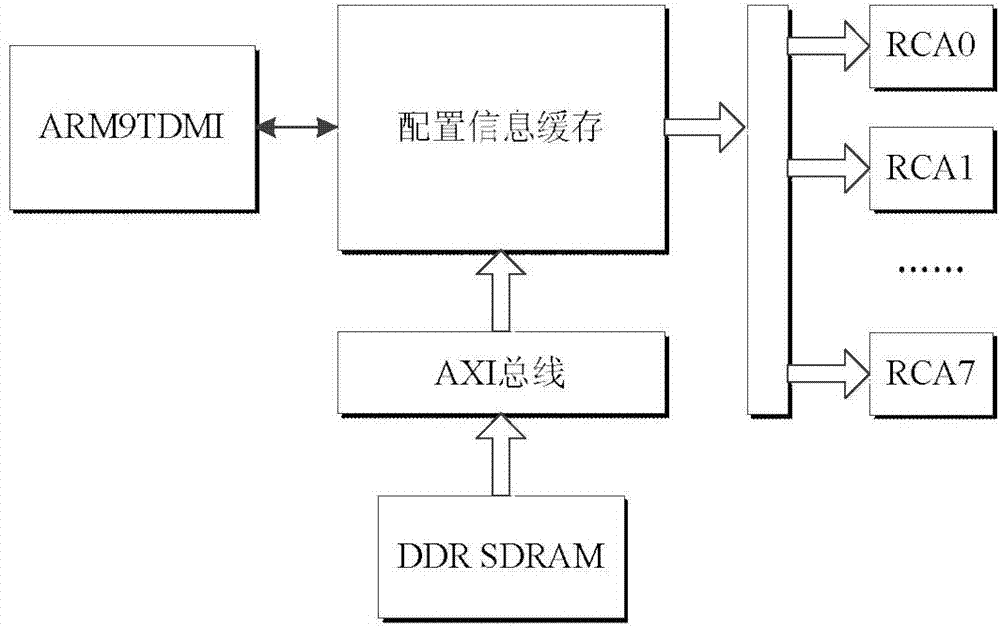

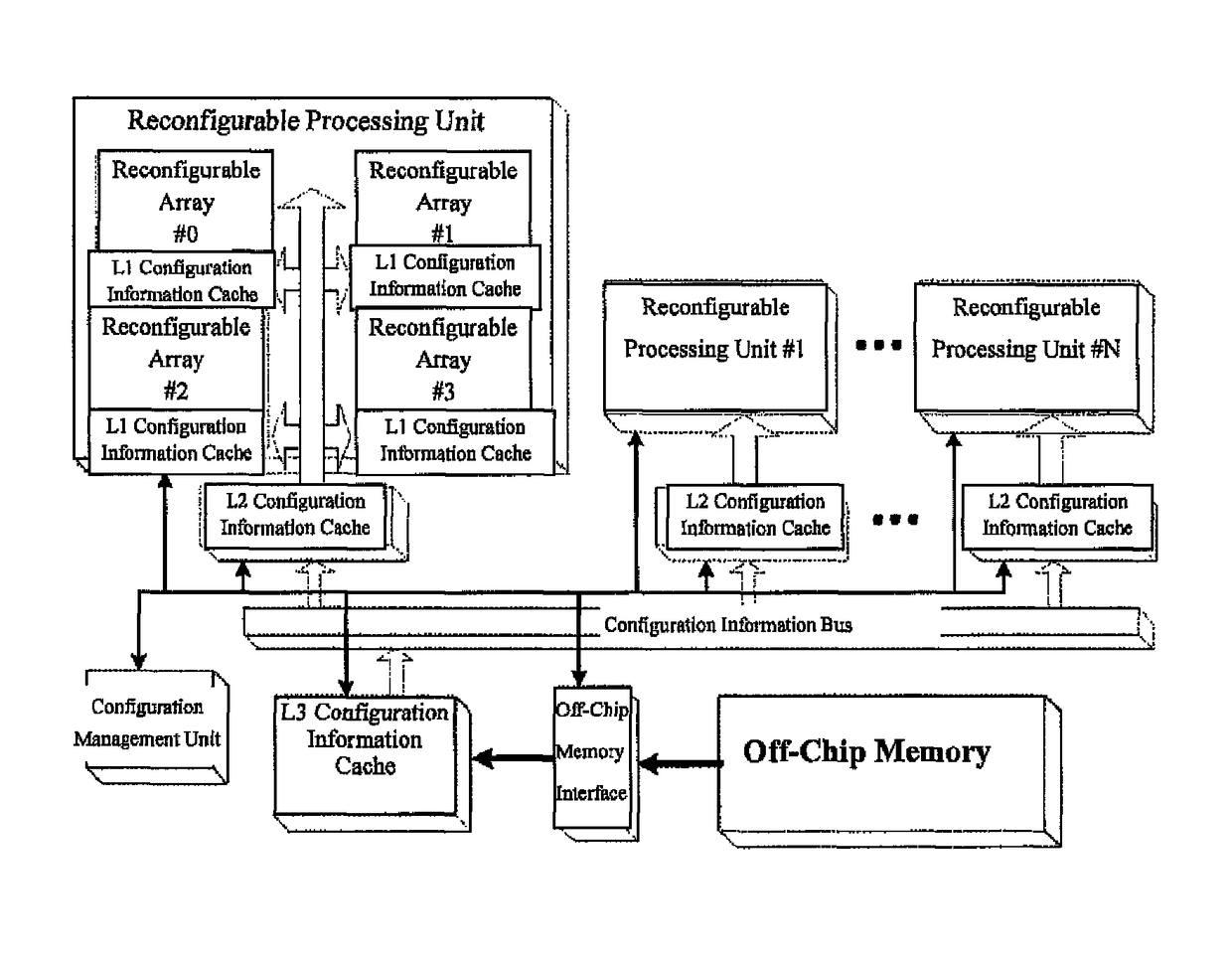

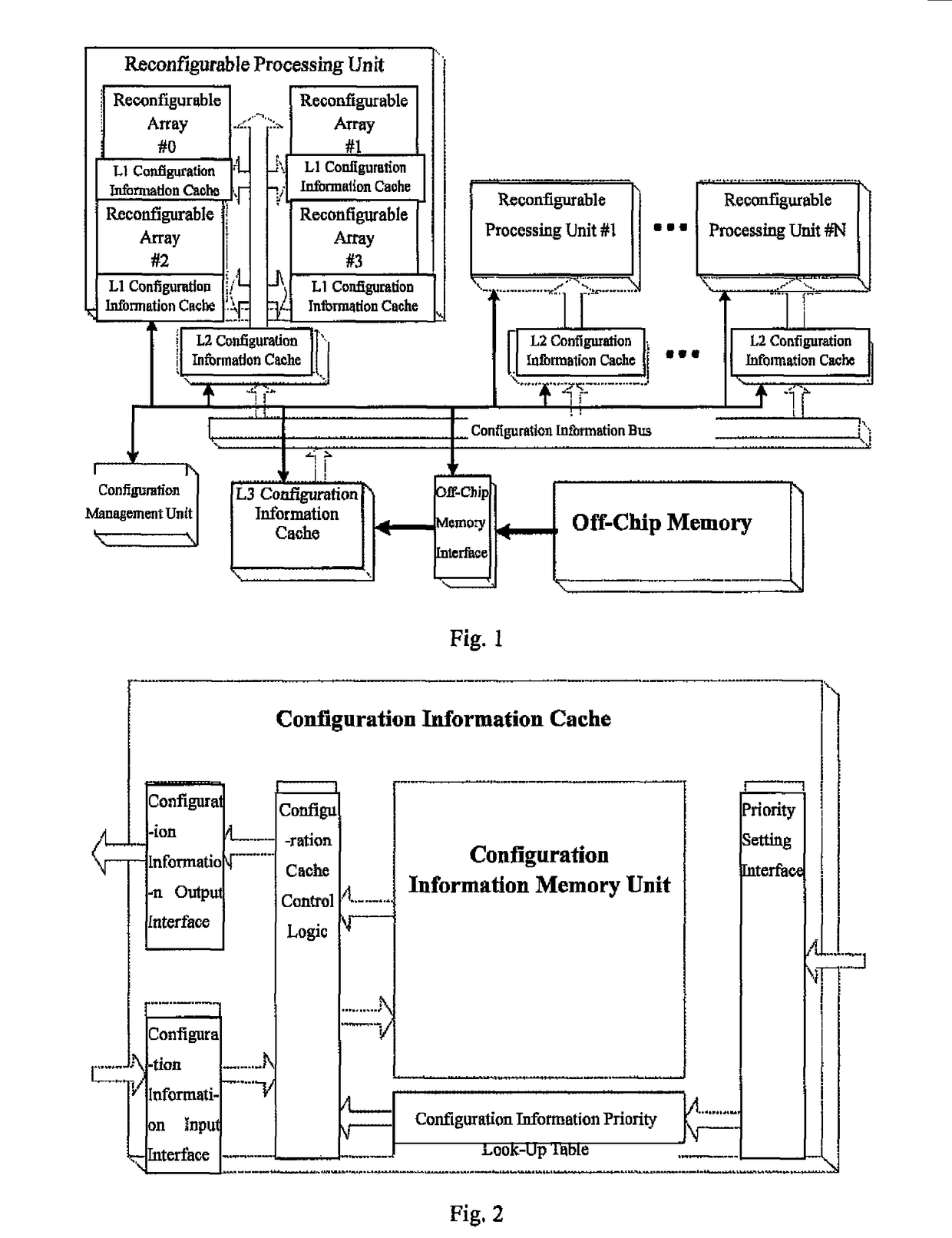

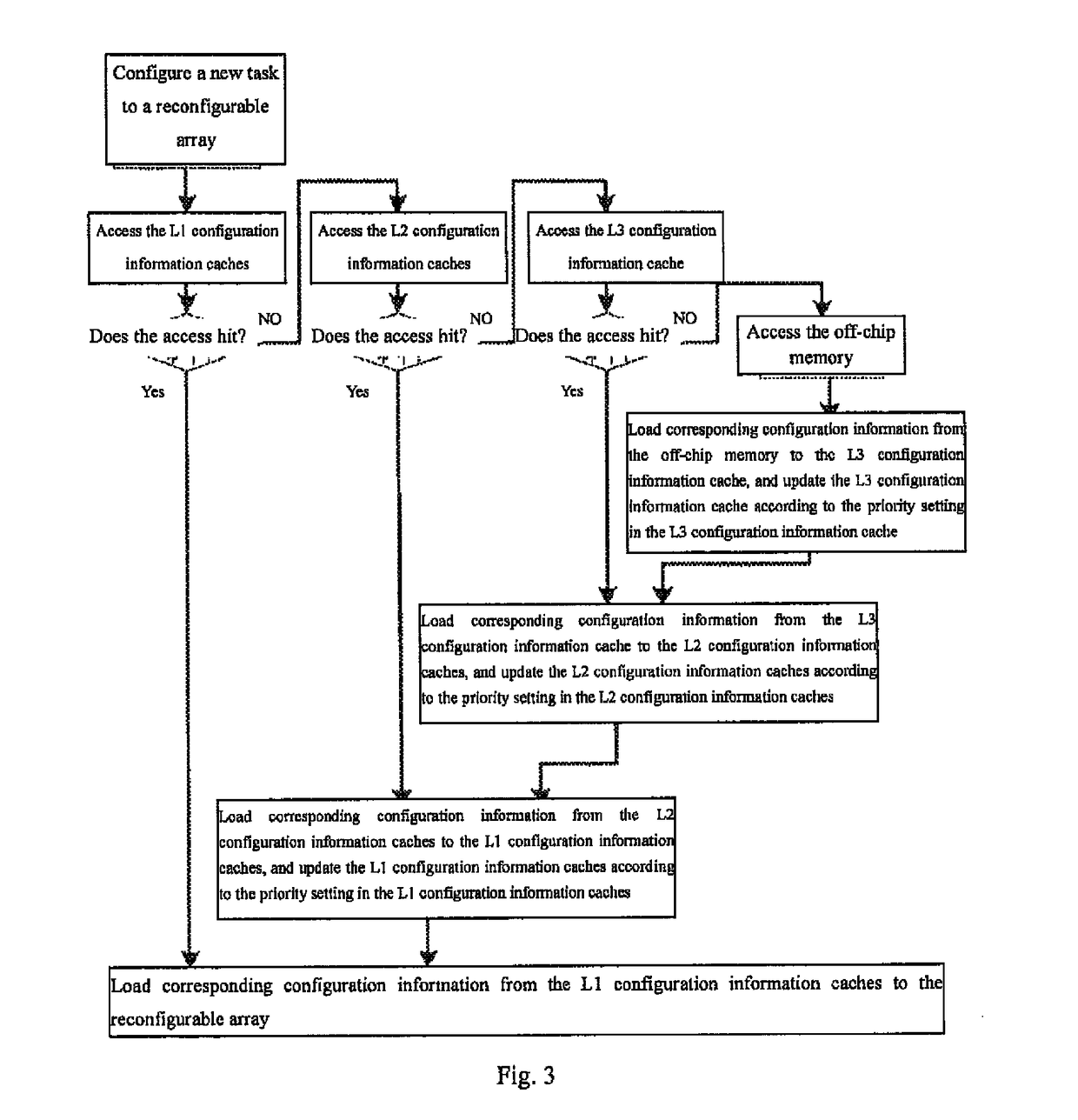

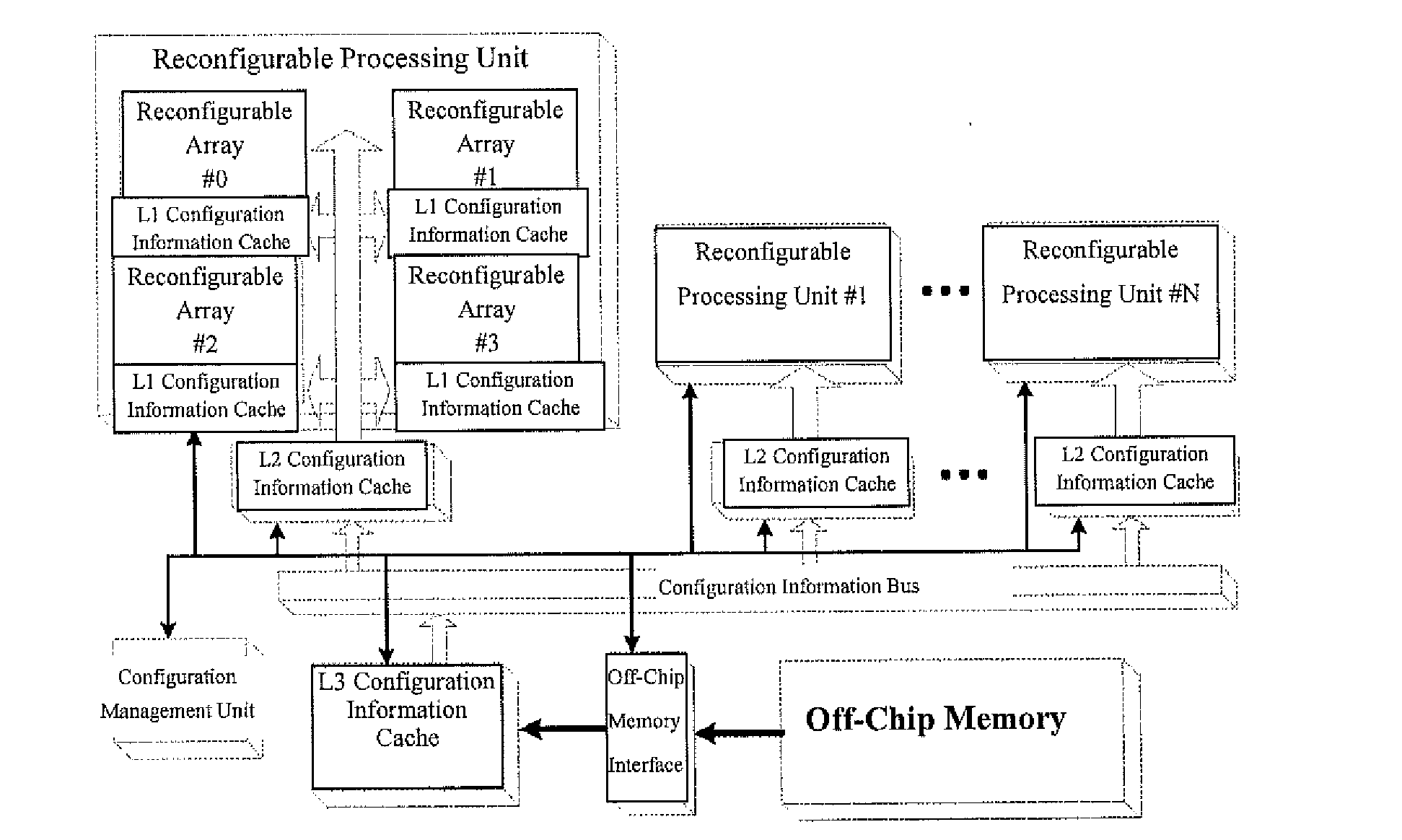

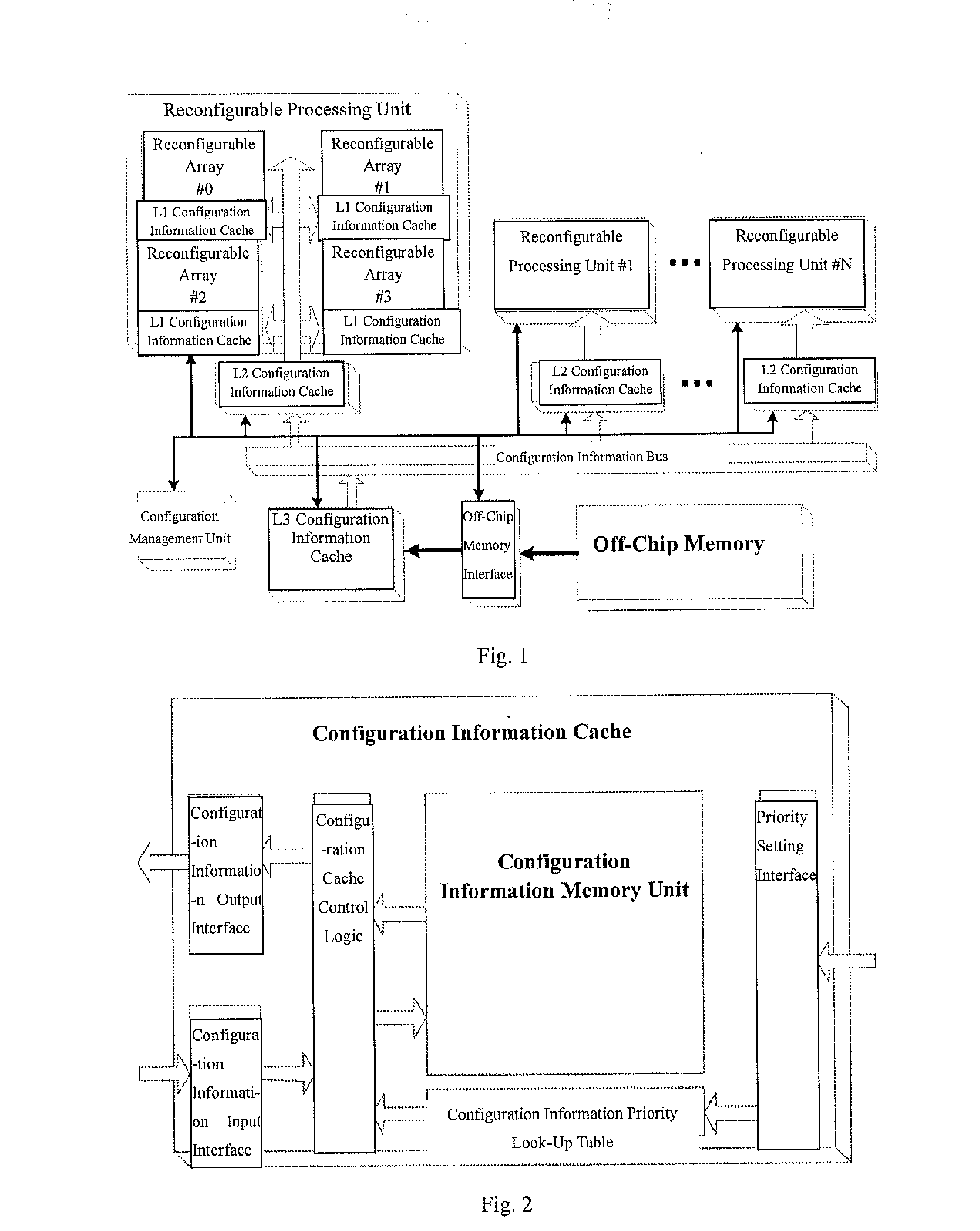

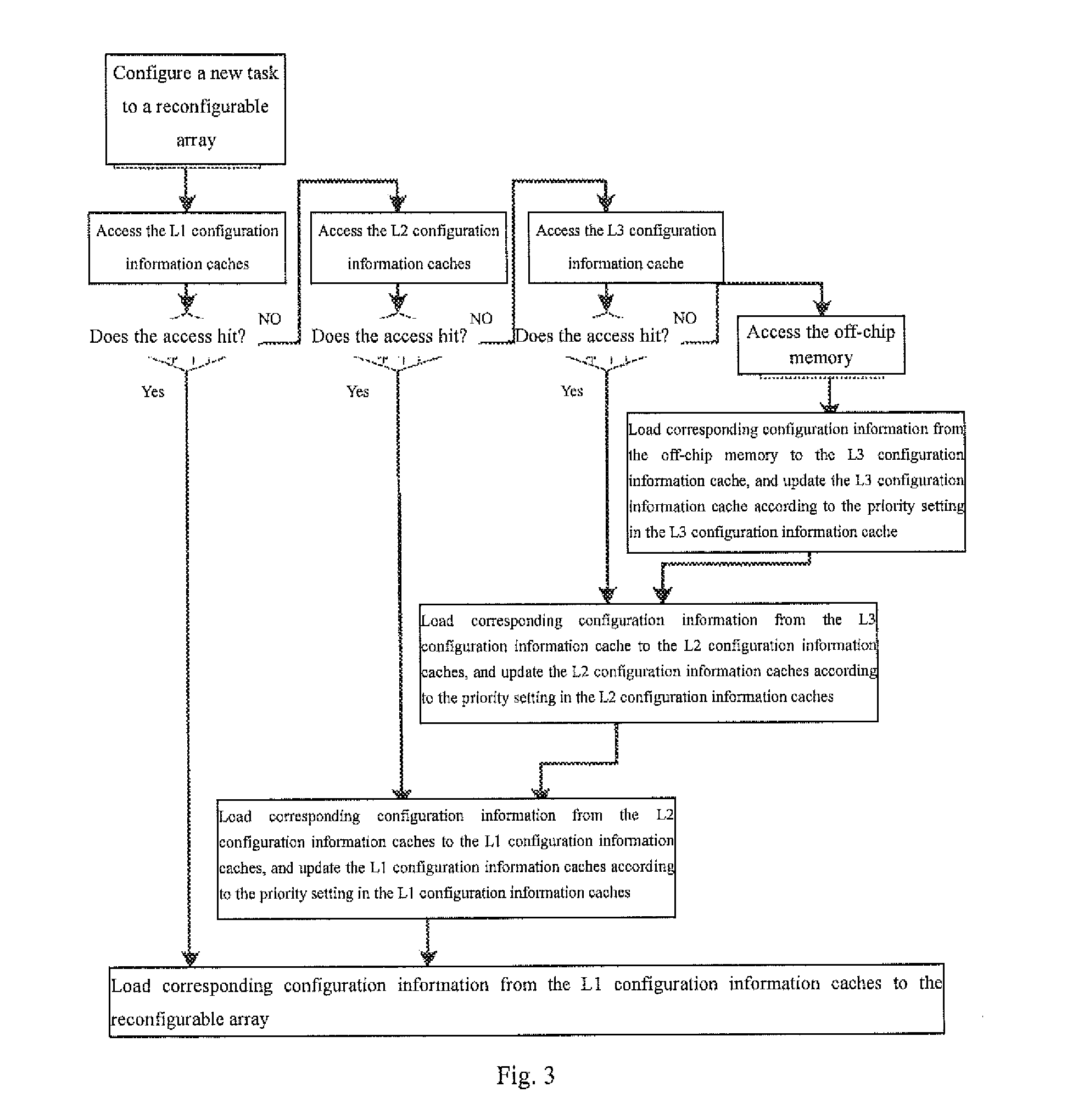

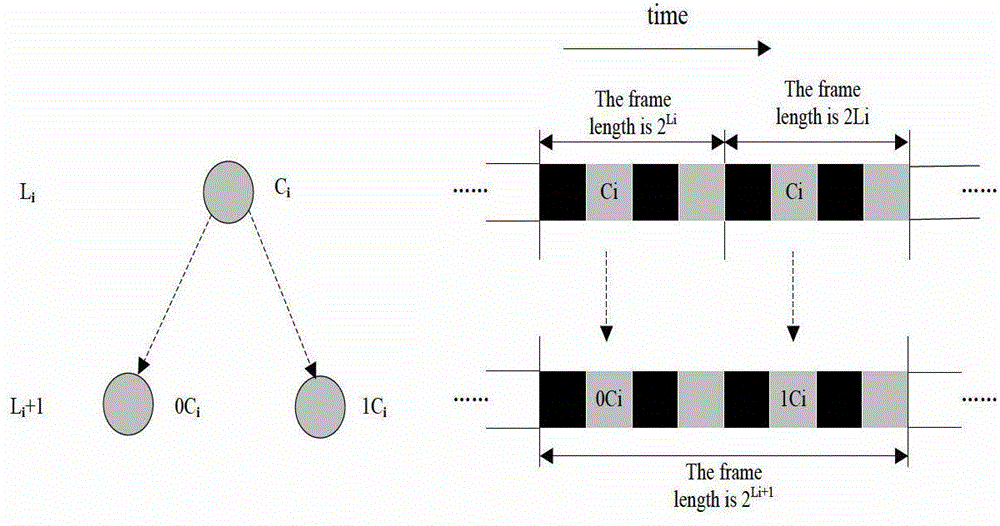

Cache structure and management method for use in implementing reconfigurable system configuration information storage

Active | US9734056B2 | Increase profit; Reduce access conflicts; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Computer architecture; Memory interface

A cache structure for use in implementing reconfigurable system configuration information storage comprises: layered configuration information cache units, for caching configuration information that one or more reconfigurable arrays may use within a period of time; an off-chip memory interface module, for establishing communication; and a configuration management unit, for managing the reconfiguration process of the reconfigurable arrays, including mapping each subtask of an algorithm application to a reconfigurable array, so that the reconfigurable array loads the configuration information corresponding to the mapped subtask and completes its function reconfiguration. This increases the utilization efficiency of the configuration information caches. Also provided is a method for managing the reconfigurable system configuration information caches, which employs a mixed-priority cache update method and changes the way configuration information caches are managed in a conventional reconfigurable system, thus increasing dynamic reconfiguration efficiency in a complex reconfigurable system.

Owner:SOUTHEAST UNIV

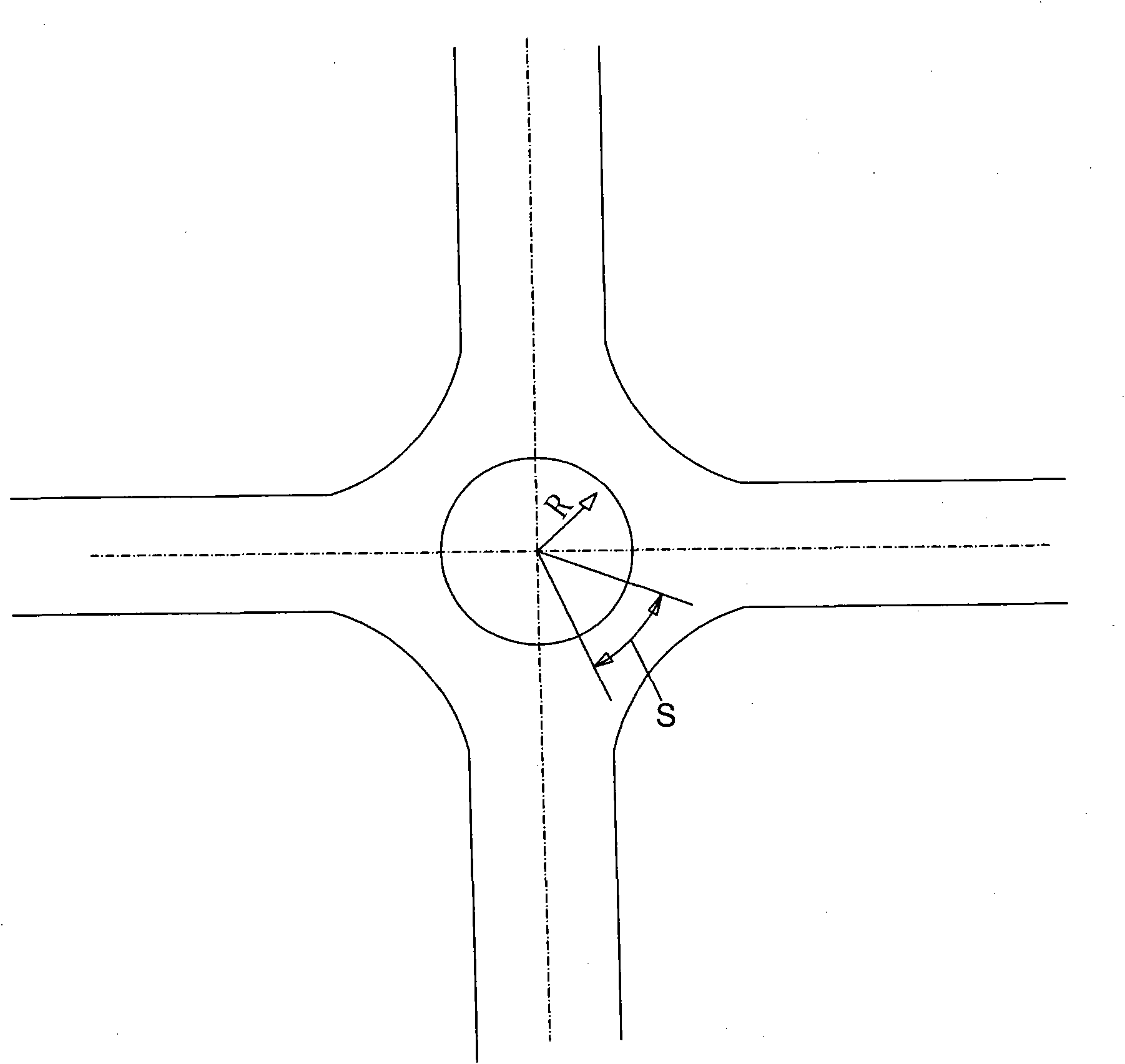

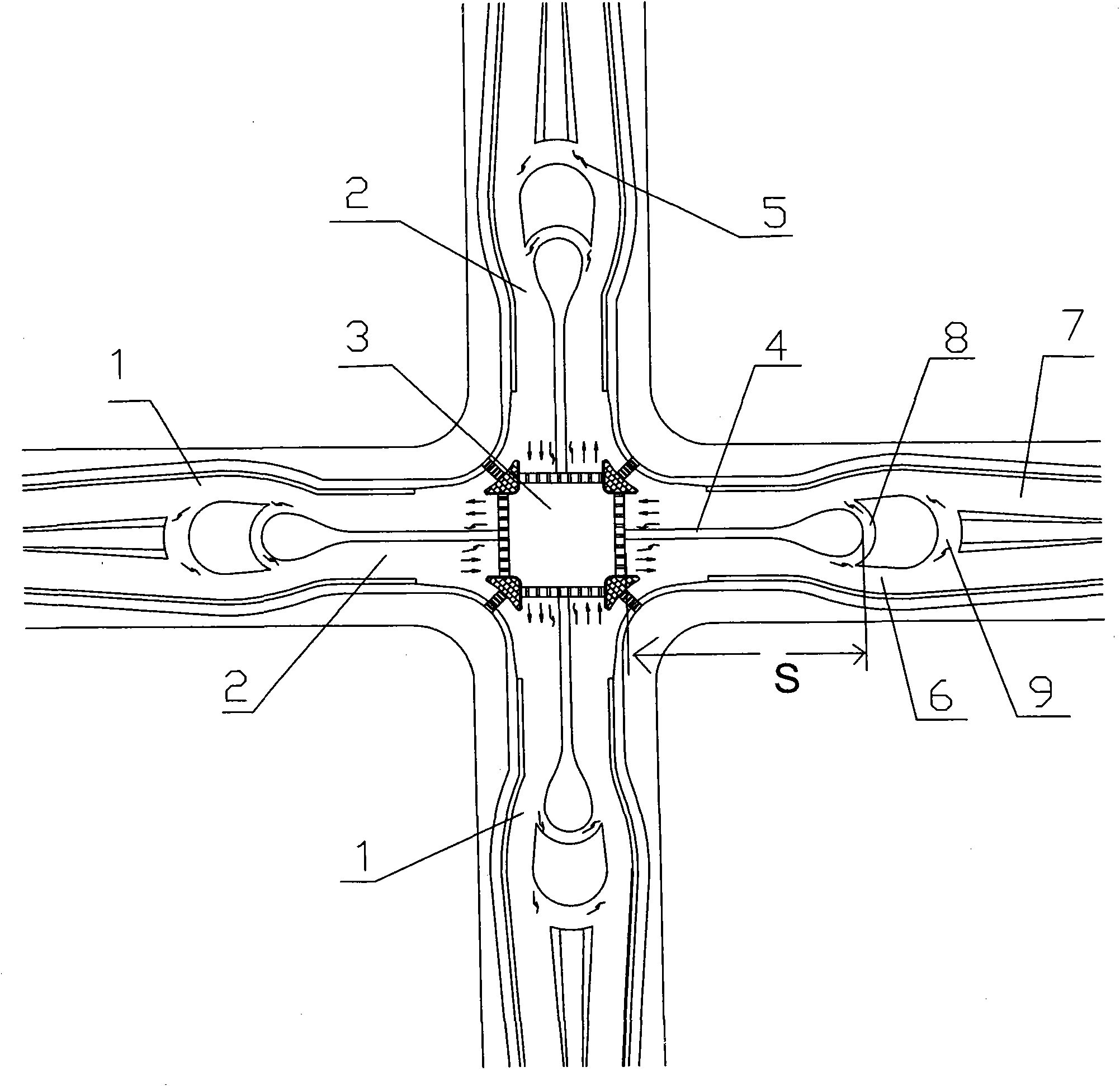

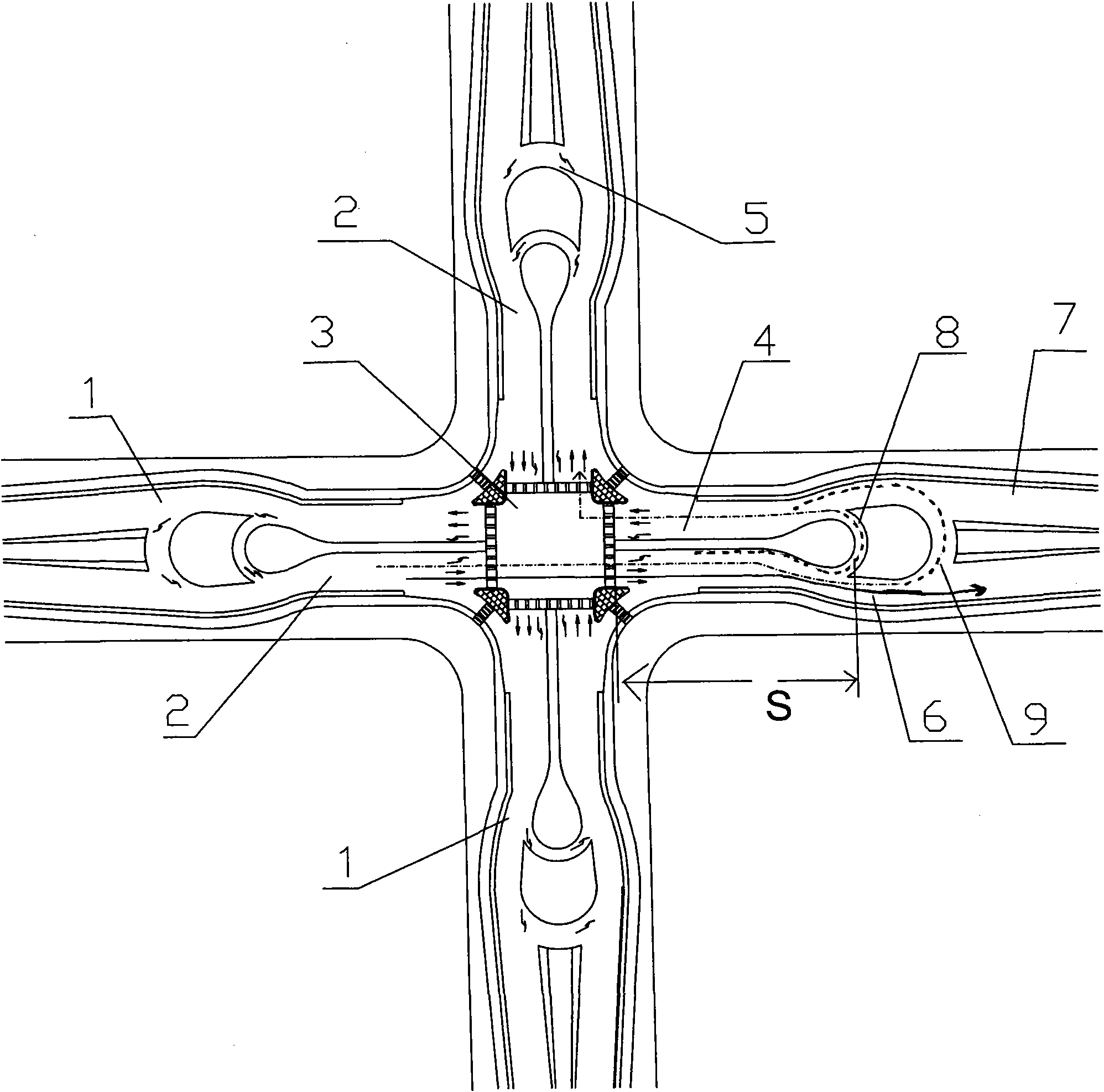

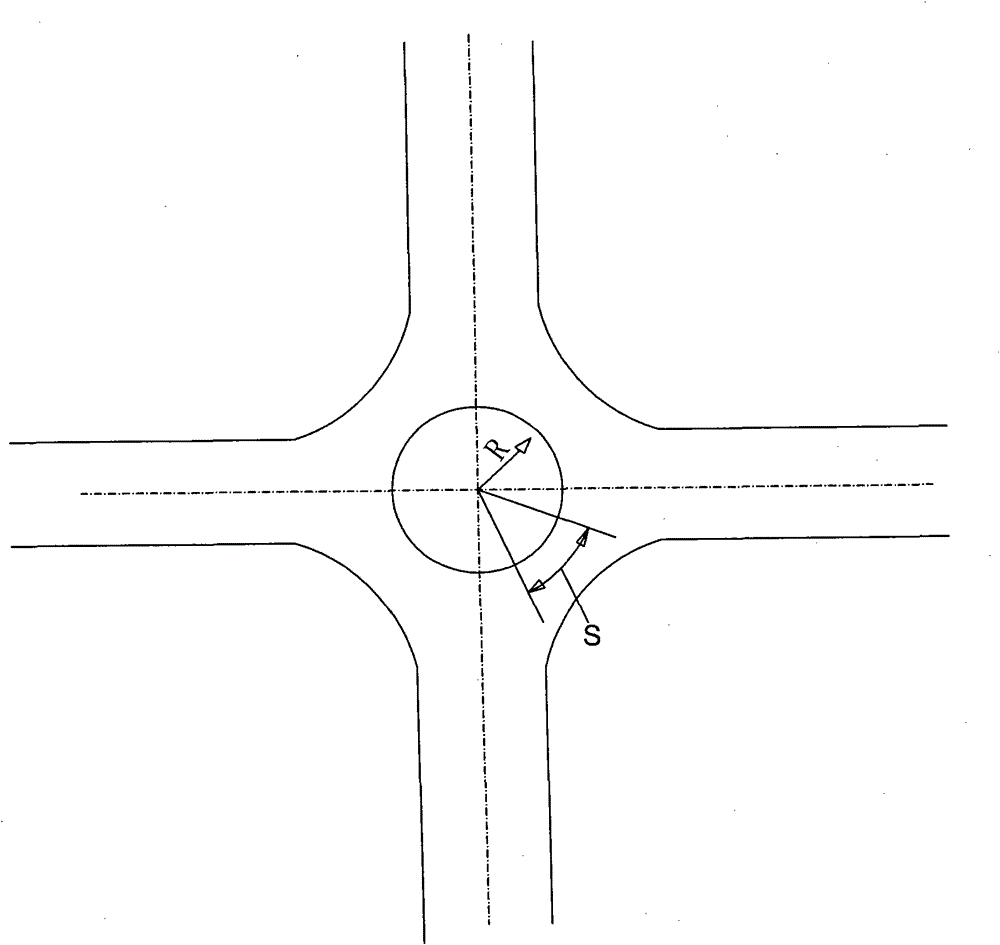

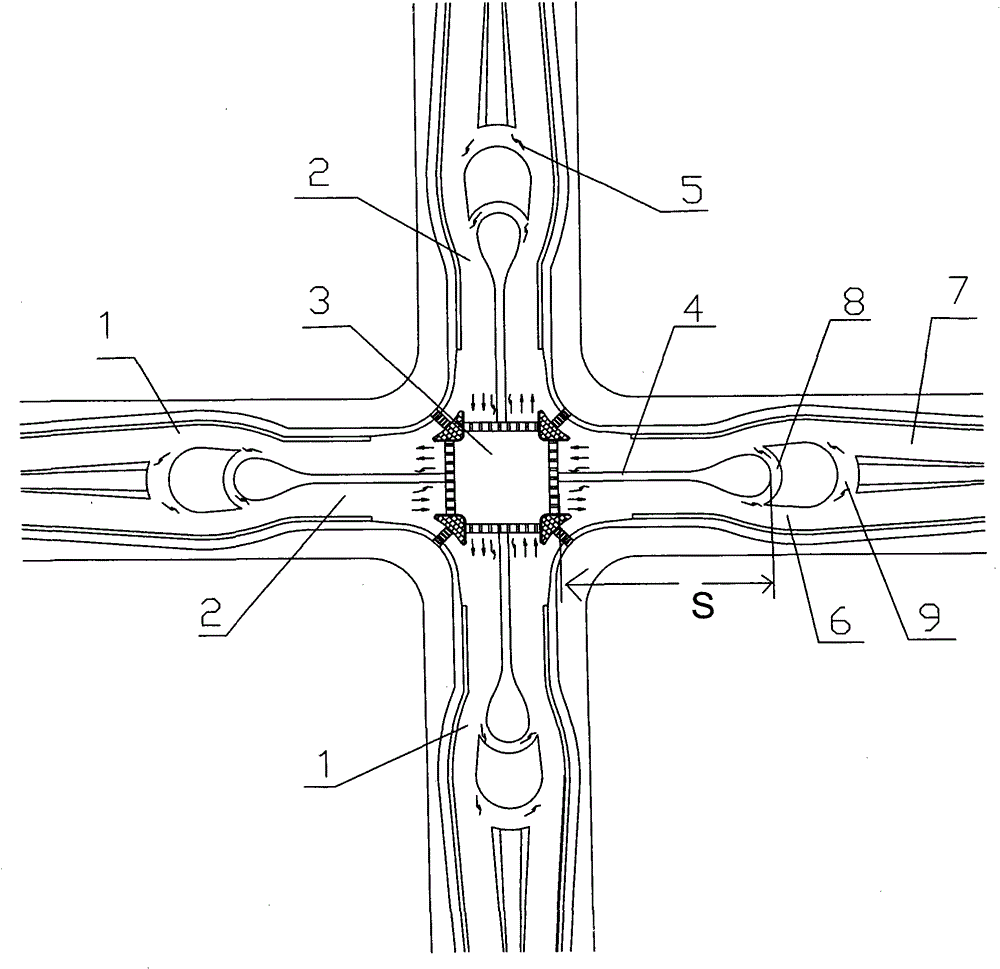

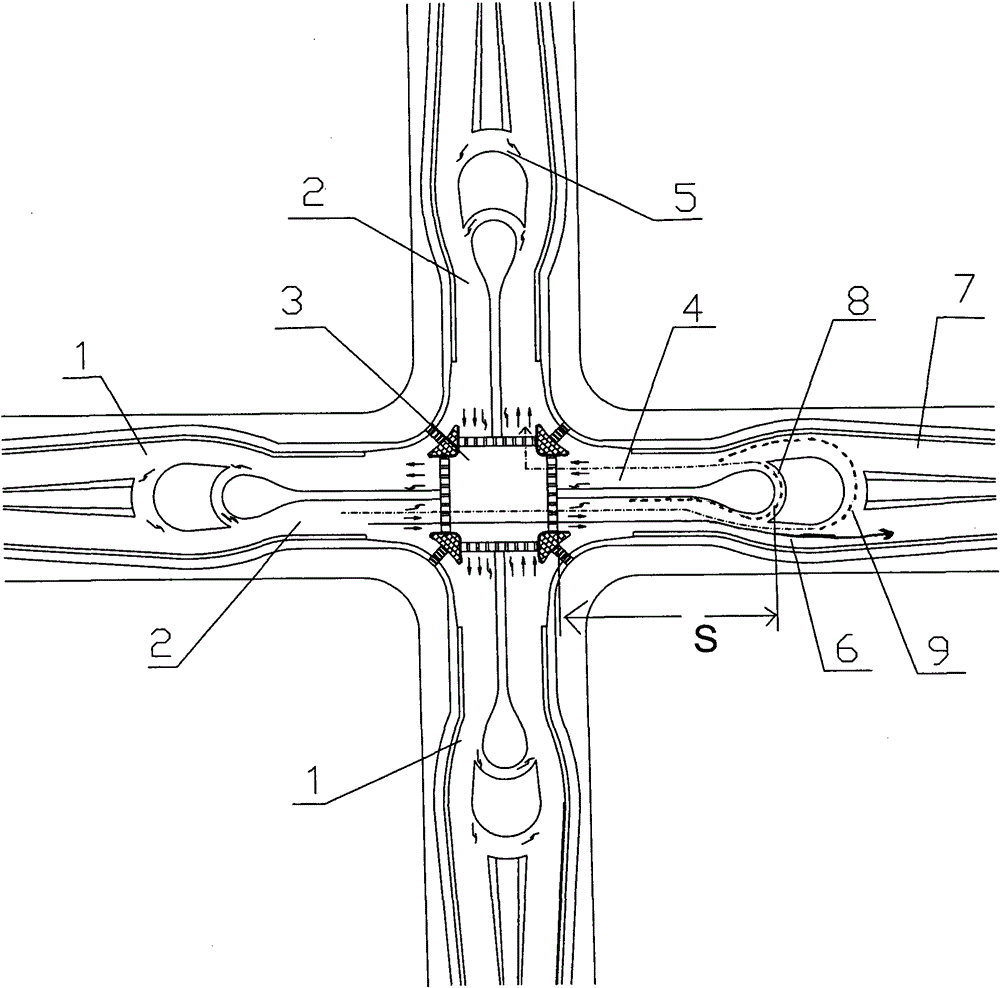

High-efficiency passing method for road intersection

The invention discloses a high-efficiency passing method for a road intersection using a non-circular roundabout. A left-turn passage connecting the two directions of a major trunk road is built in the middle section of the straight-through median that separates its two-way lanes; an approximately quincuncial or oval traffic island is formed at the intersection; the mid-section of the road is fully used to increase the capacity of the intersection; and otherwise wasted road space is put to better use. Without building an overpass at a suburban intersection or T-junction and without removing roadside buildings, left-turning and straight-through traffic on the major trunk road can pass the intersection quickly without stopping. Without disturbing that traffic, left-turning and straight-through traffic on a secondary trunk road can also pass the intersection smoothly, so that traffic flows through the intersection are simple and orderly. Traffic light phase changes are reduced by 50%, greatly improving vehicle passing efficiency and enabling fast passage.

Owner:江西省中业景观工程安装有限公司

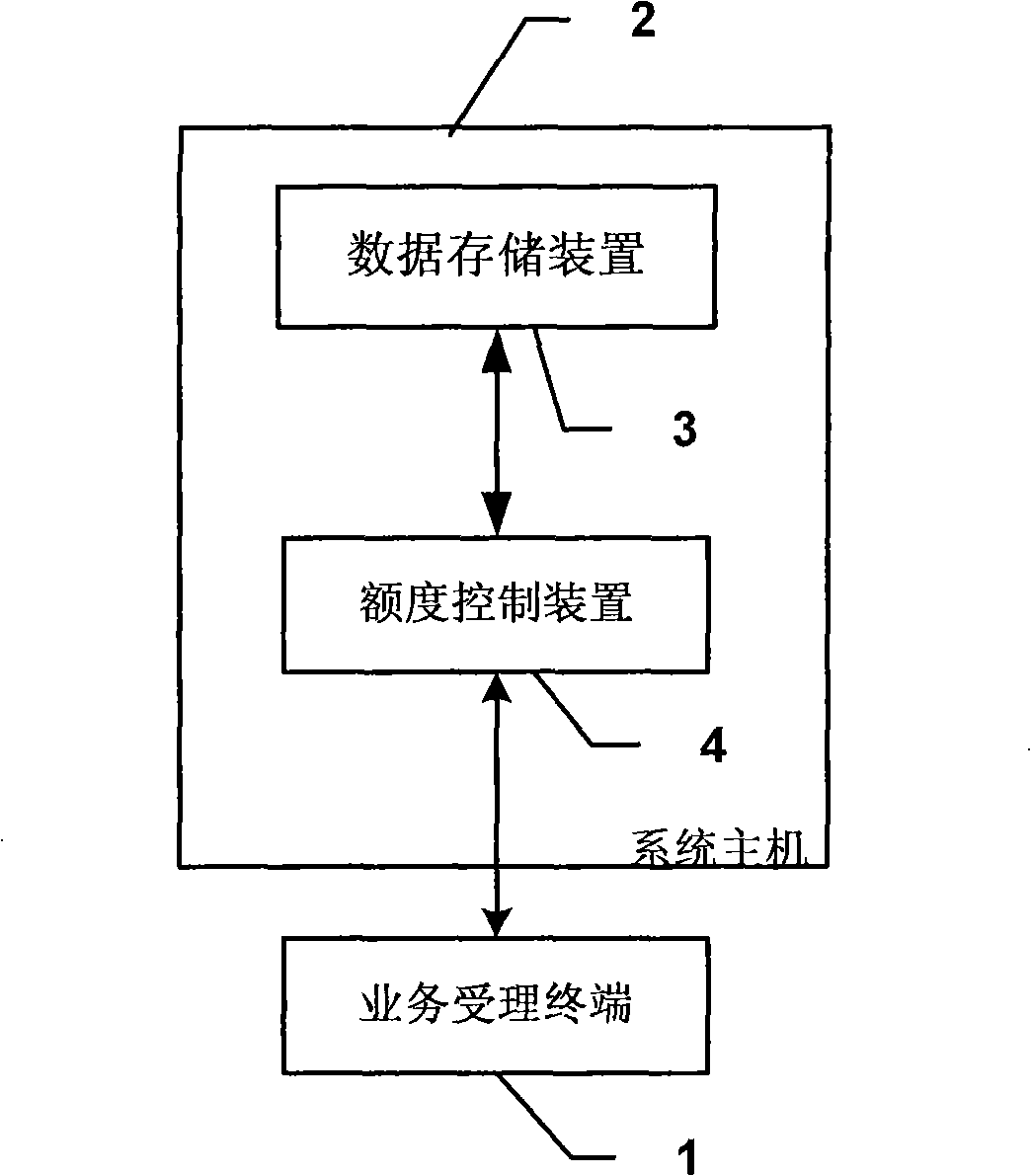

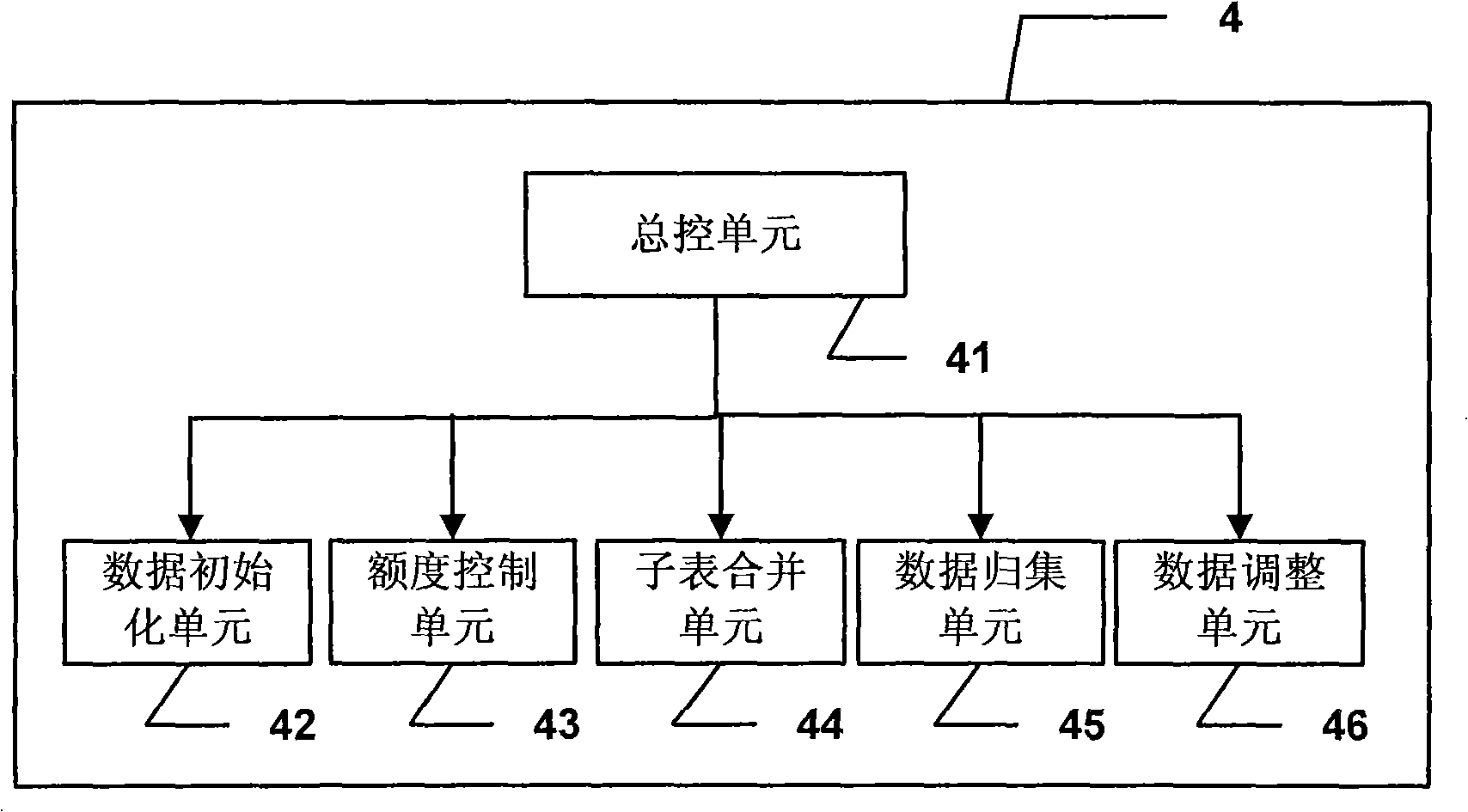

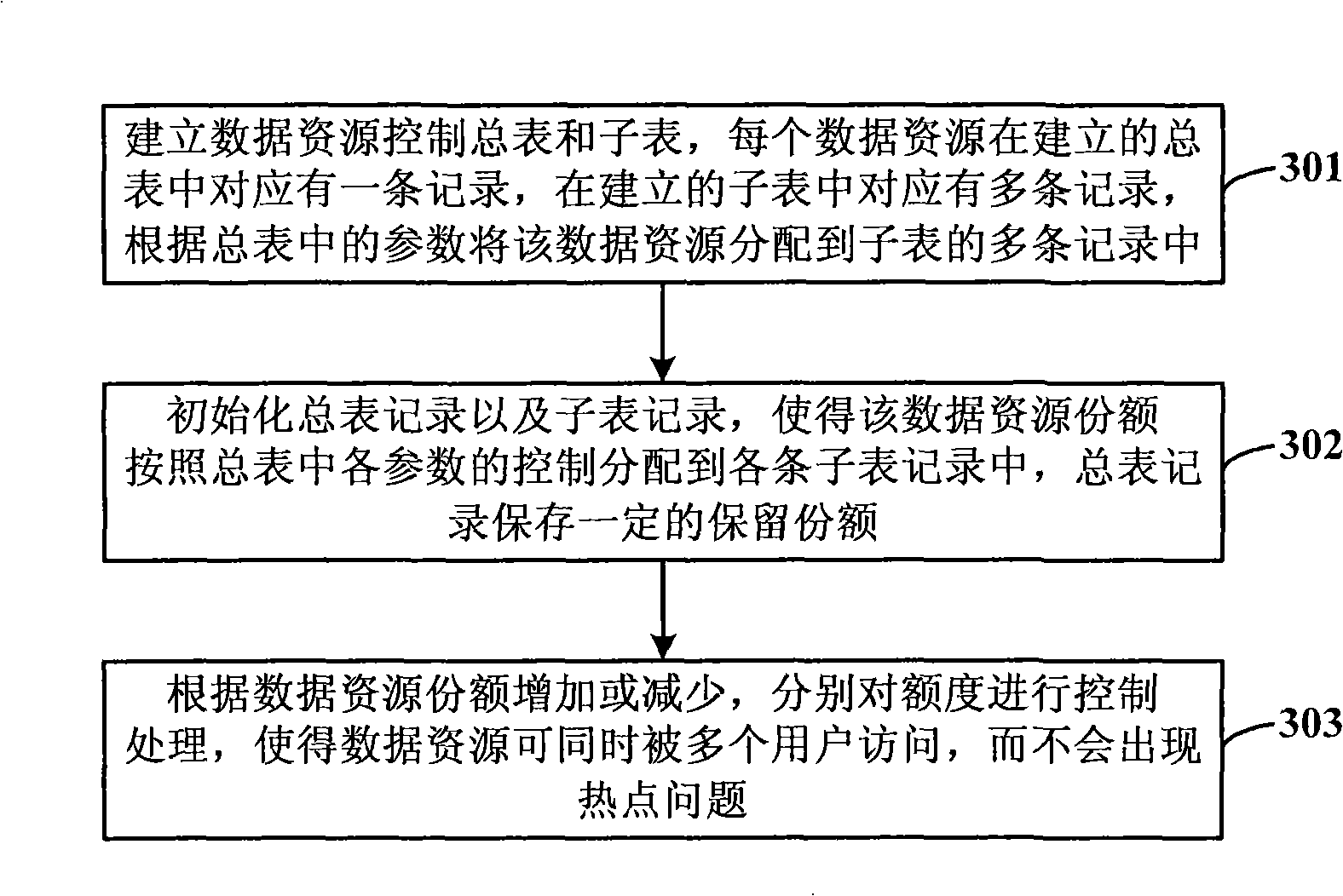

System and method for resolving data resource access hot point problem

Inactive | CN101299259A | Meet the requirements of quota control; Reduce access conflicts; Commerce; Special data processing applications; Data access; Financial transaction

The present invention discloses a system for solving the data resource access hotspot problem. The system comprises a system host computer and a service acceptance terminal. The system host computer performs quota initialization, quota control, quota aggregation or quota adjustment operations according to instructions received from the service acceptance terminal. The service acceptance terminal receives business applications initiated by customers or tellers through Internet banking, telephone banking, the counter, or self-service terminals, and is connected to the system host computer through a network. The invention also discloses a method for solving the data resource access hotspot problem. The invention satisfies data resource quota control requirements when the data resources are accessed by multiple users simultaneously and different points of sale share the same quota. This reduces data access collisions and prevents hotspots, while also maximizing utilization of the quota resources.

Owner:INDUSTRIAL AND COMMERCIAL BANK OF CHINA
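
The abstract does not say how the shared quota is protected against concurrent access. One common way to avoid a single hot record is to split the quota into independently locked shards, sketched here in Python. The sharding scheme, class name, and fallback order are assumptions, not the patented design.

```python
import threading


class ShardedQuota:
    """Split one shared quota into independently locked shards so concurrent
    debits rarely contend on the same lock (assumed technique, for illustration)."""

    def __init__(self, total, shards):
        base, extra = divmod(total, shards)
        self.remaining = [base + (1 if i < extra else 0) for i in range(shards)]
        self.locks = [threading.Lock() for _ in range(shards)]

    def try_debit(self, amount, hint):
        """Debit from the shard chosen by hint, falling back to the other shards in turn."""
        n = len(self.remaining)
        for step in range(n):
            i = (hint + step) % n
            with self.locks[i]:
                if self.remaining[i] >= amount:
                    self.remaining[i] -= amount
                    return True
        return False

    def total_remaining(self):
        """Unlocked snapshot; exact only when no debits are in flight."""
        return sum(self.remaining)
```

A limitation of this simple scheme is that a debit larger than any single shard's balance fails even when the total would cover it. A production design would need a rebalancing step.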

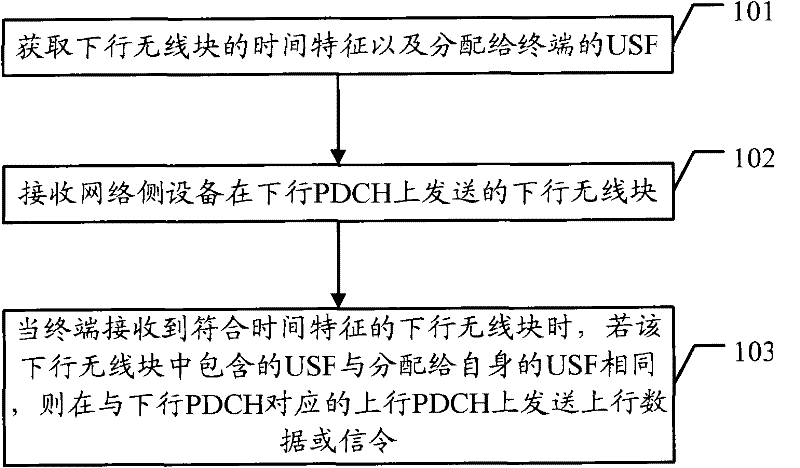

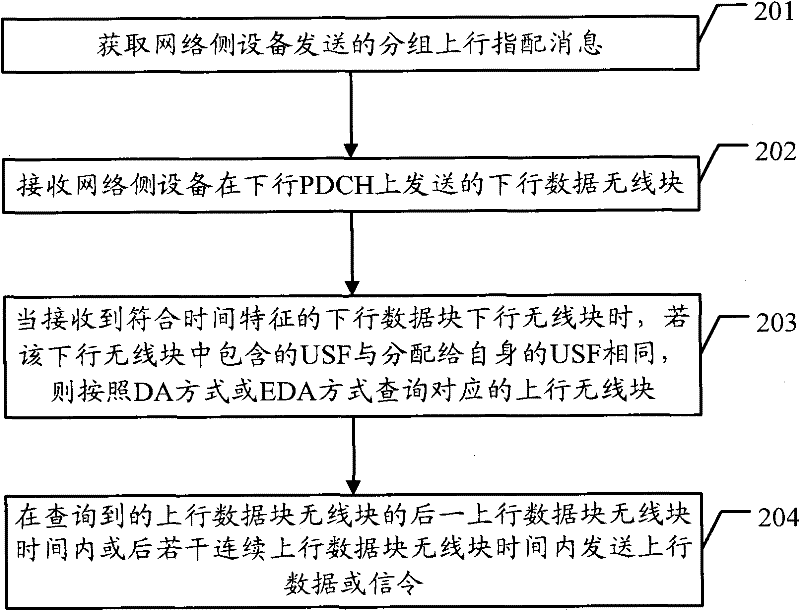

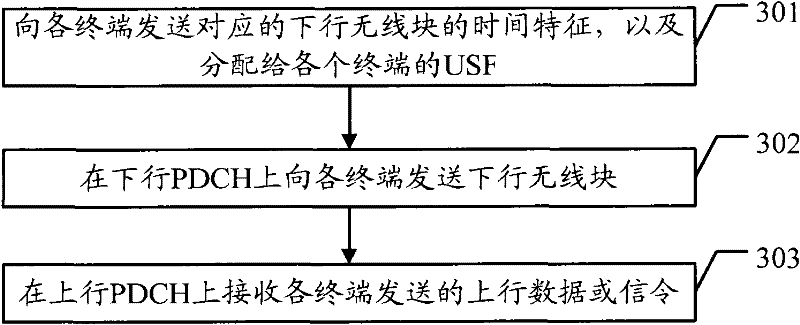

Data scheduling method and system thereof, and correlated equipment

Inactive | CN102457977A | Improve reusability; Increase success rate; Access restriction; Telecommunications; Data scheduling

The embodiment of the invention discloses a data scheduling method, a system thereof, and related equipment, which effectively improve the multiplexing of a packet radio channel and the success rate of packet radio access. The method comprises the following steps. First, a terminal obtains a time characteristic of downlink radio blocks and the uplink state flag (USF) allocated to it; the time characteristic indicates which downlink radio blocks the terminal needs to check for the USF. Next, the terminal receives downlink radio blocks sent by network side equipment on a downlink packet data channel (PDCH). When a downlink radio block matching the time characteristic is received, the terminal determines whether the USF contained in that block is identical to the USF allocated to the terminal. If so, it sends uplink data or signaling on the uplink PDCH corresponding to the downlink PDCH. The embodiment of the invention also provides a data scheduling system and related equipment.

Owner:HUAWEI TECH CO LTD
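
The terminal-side check can be sketched as a filter over received downlink blocks: skip blocks outside the time characteristic, then compare the USF. In this Python sketch the time characteristic is modeled as a hypothetical period-and-offset rule. The abstract does not say how it is encoded.

```python
from dataclasses import dataclass


@dataclass
class DownlinkBlock:
    index: int   # position of the radio block in the downlink sequence
    usf: int     # uplink state flag carried in the block


def uplink_grants(blocks, my_usf, period, offset):
    """Return indices of blocks that grant this terminal an uplink turn.

    Hypothetical time characteristic: only blocks with index % period == offset
    are checked, instead of every block on the downlink PDCH.
    """
    grants = []
    for block in blocks:
        if block.index % period != offset:
            continue                    # not a block this terminal monitors
        if block.usf == my_usf:
            grants.append(block.index)  # send on the paired uplink PDCH
    return grants
```

Restricting the check to a subset of blocks is what lets several terminals share a channel with fewer monitored blocks each, under the assumed encoding.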

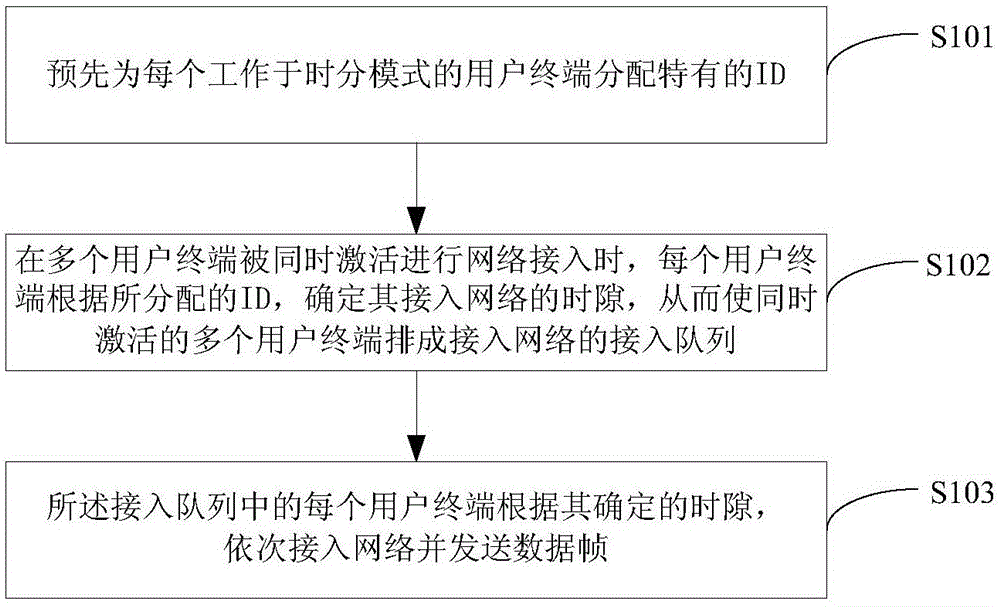

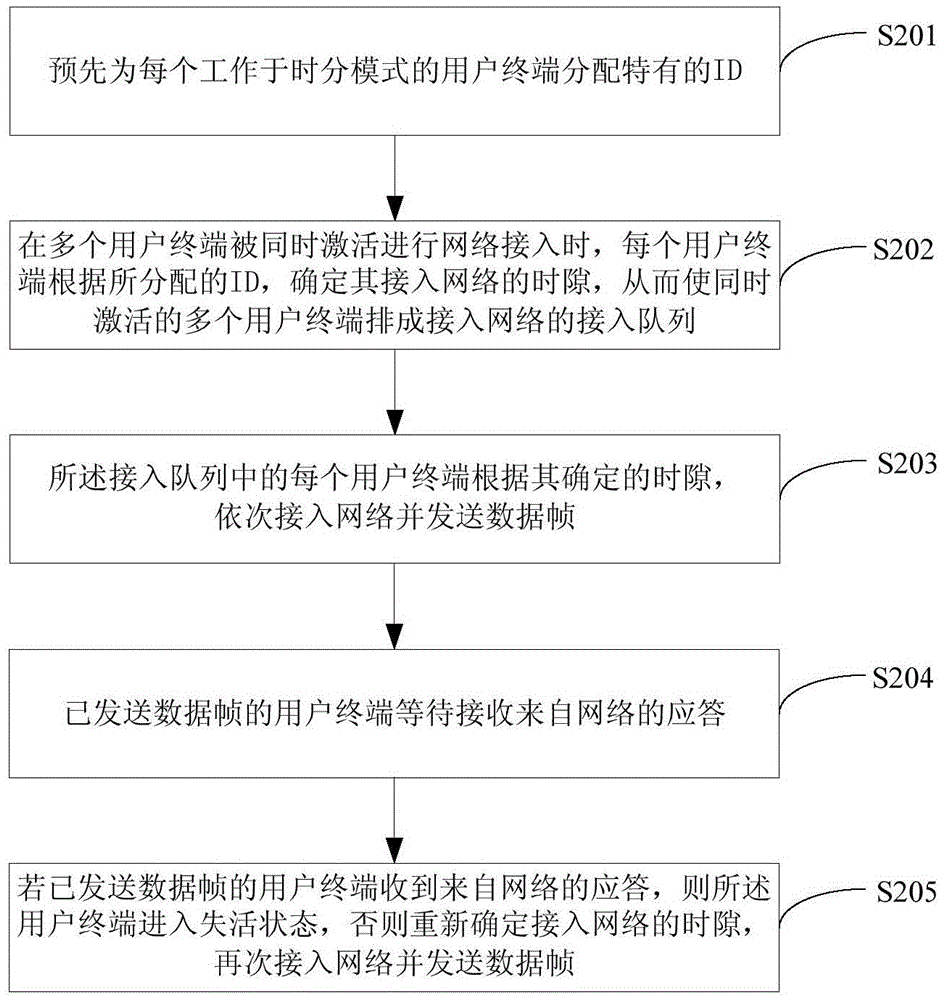

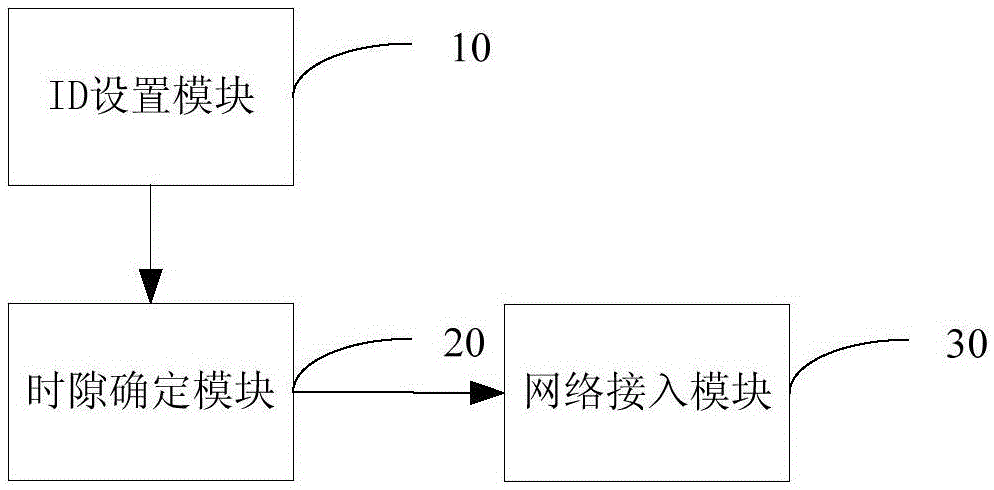

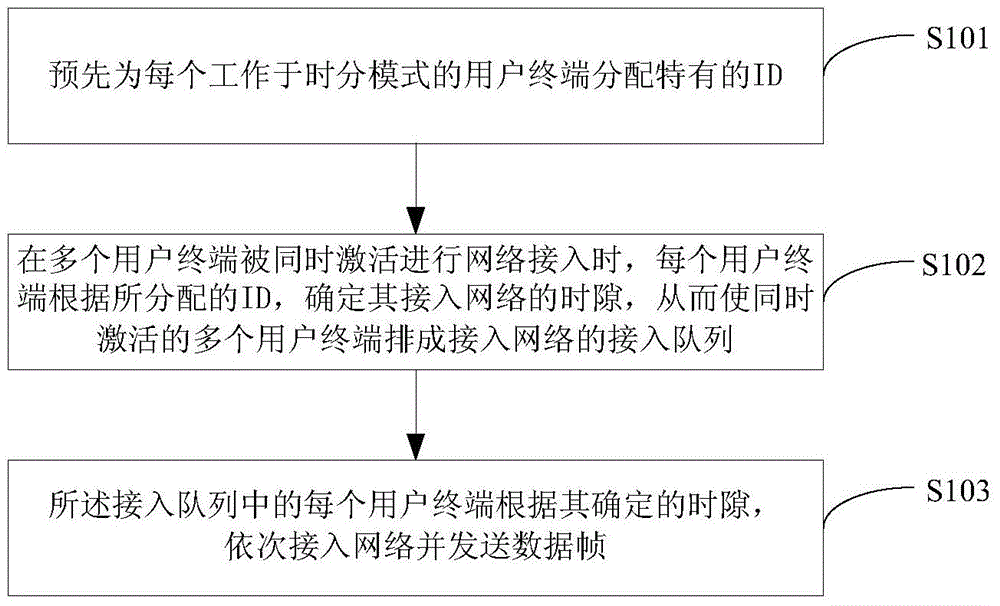

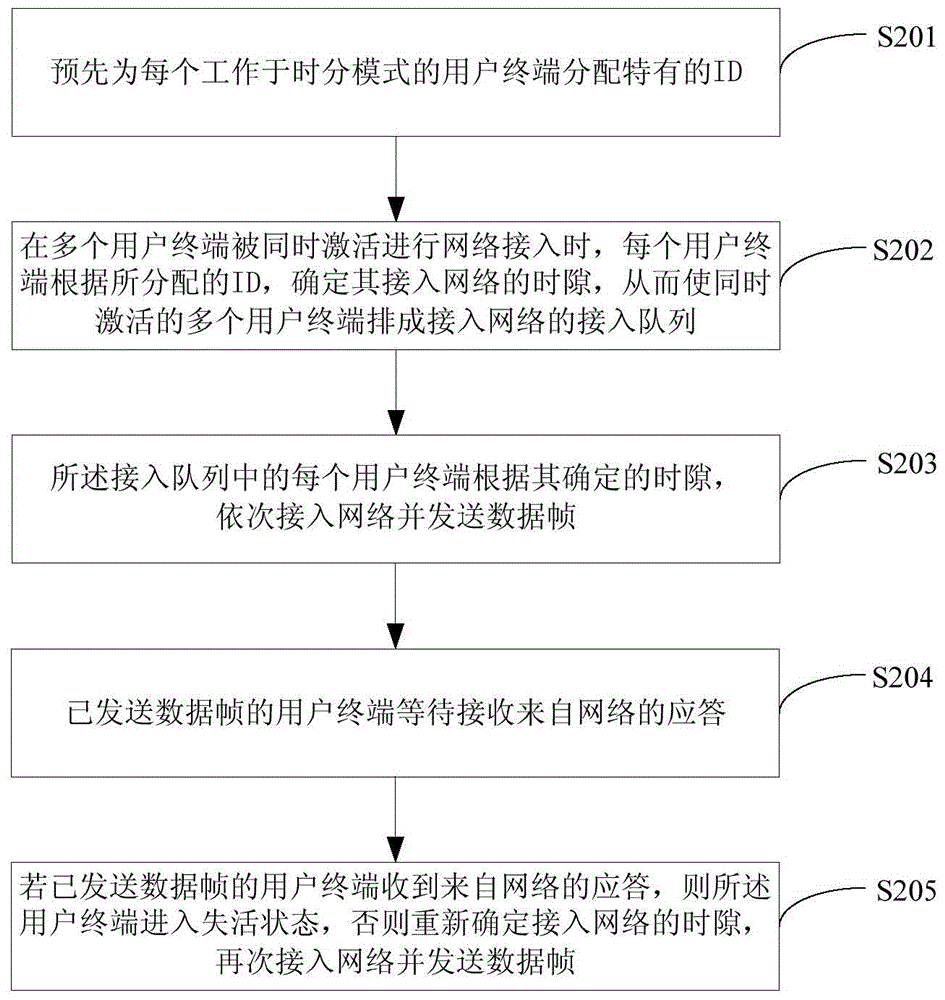

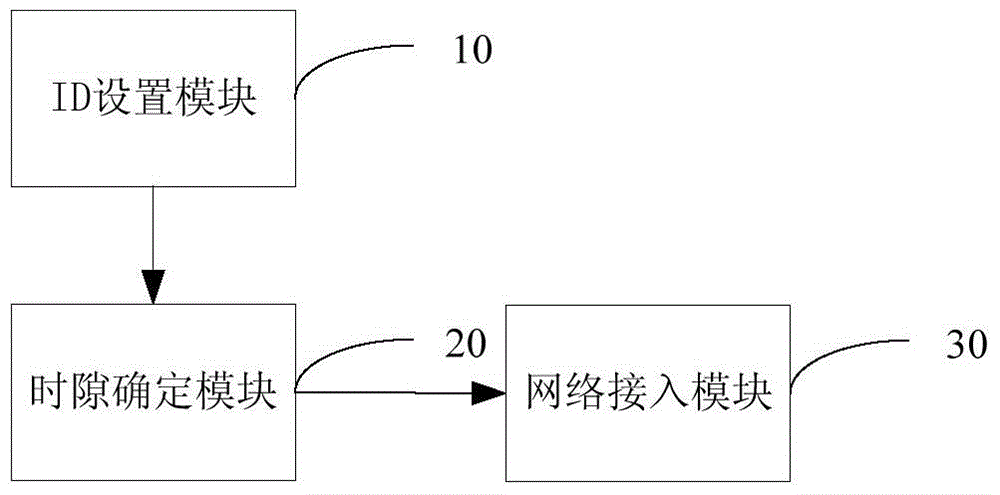

Method and device for preventing access collision of multiple user terminals

Inactive | CN105307282A | Increase usage; Reduce access conflicts; Wireless communication; Computer network; Access time

The invention discloses a method and device for preventing access collision of multiple user terminals. The method comprises the following steps. First, a specific user ID is allocated in advance to each user terminal working in a time division mode. When multiple such user terminals are activated at the same time and access a network, each determines its network access time slot from its allocated user ID, so that the simultaneously activated terminals form an access queue. Each user terminal in the access queue then accesses the network in turn in its determined time slot and sends data frames. The method greatly reduces access collisions and improves channel utilization when multiple user terminals are activated at the same time and need network access.

Owner:北京中博浩勇国际经贸有限公司
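
A minimal Python sketch of ID-based slot assignment follows. It assumes a modulo mapping from user ID to slot; the abstract does not give the actual mapping, and both function names are hypothetical.

```python
def access_slot(user_id, slots_per_frame):
    """Map a pre-assigned user ID to a fixed access slot (assumed modulo rule)."""
    return user_id % slots_per_frame


def access_queue(active_ids, slots_per_frame):
    """Order simultaneously activated terminals by their access slot, then by ID."""
    return sorted(active_ids, key=lambda uid: (access_slot(uid, slots_per_frame), uid))
```

Terminals whose IDs are distinct modulo the slot count never share a slot, so simultaneous activation produces an ordered queue rather than a collision. Under this assumed rule, IDs that wrap around (for example 1 and 9 with 8 slots) would still collide.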

Cache structure and management method for use in implementing reconfigurable system configuration information storage

Active | US20150254180A1 | Increase profit; Reduce access conflicts; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Memory interface; Structure of Management Information

Disclosed is a cache structure for use in implementing reconfigurable system configuration information storage, comprising: layered configuration information cache units, for caching configuration information that one or more reconfigurable arrays may use within a period of time; an off-chip memory interface module, for establishing communication; and a configuration management unit, for managing the reconfiguration process of the reconfigurable arrays, including mapping each subtask of an algorithm application to a reconfigurable array, so that the reconfigurable array loads the configuration information corresponding to the mapped subtask and completes its function reconfiguration. This increases the utilization efficiency of the configuration information caches. Also provided is a method for managing the reconfigurable system configuration information caches, which employs a mixed-priority cache update method and changes the way configuration information caches are managed in a conventional reconfigurable system, thus increasing dynamic reconfiguration efficiency in a complex reconfigurable system.

Owner:SOUTHEAST UNIV

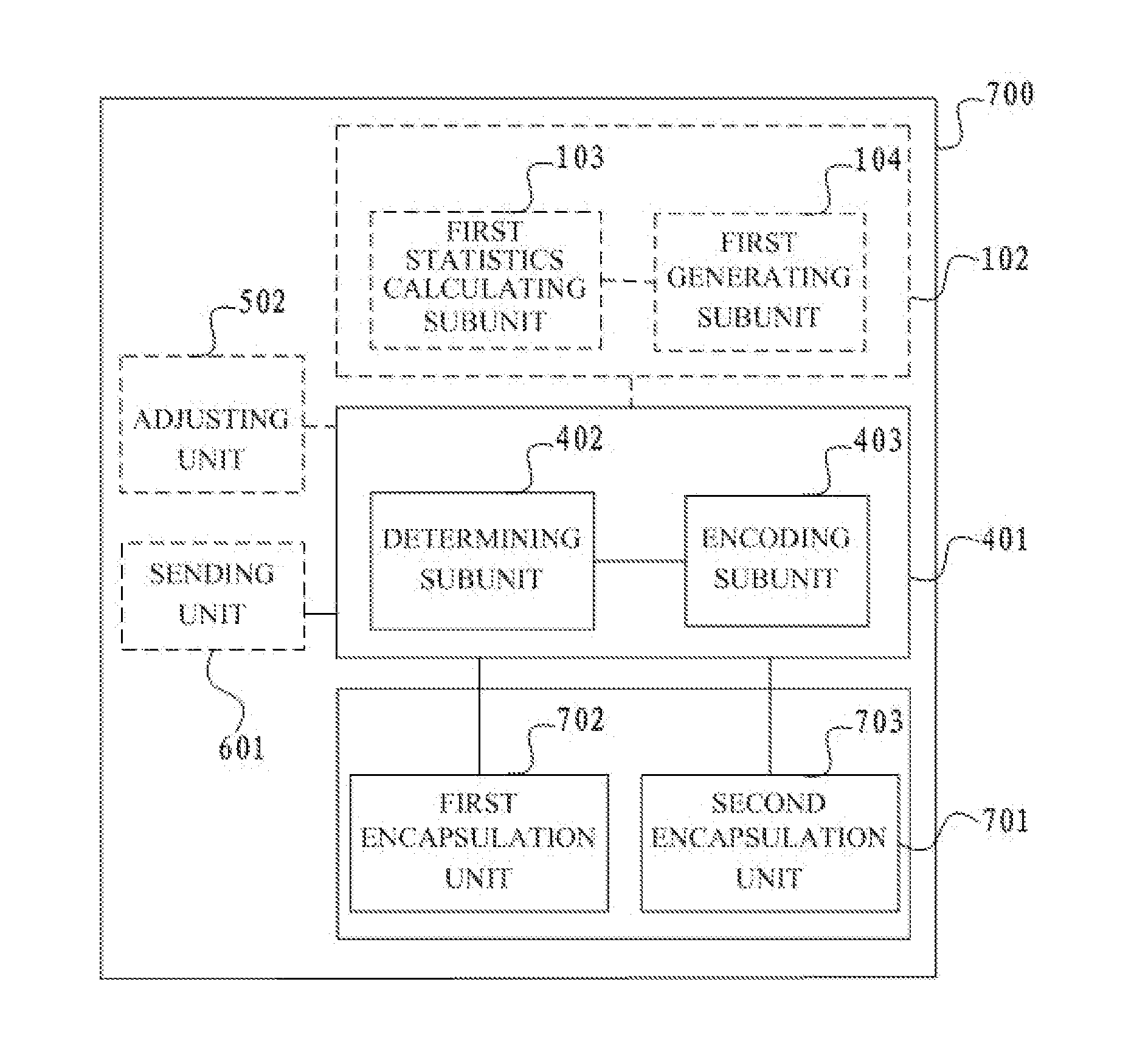

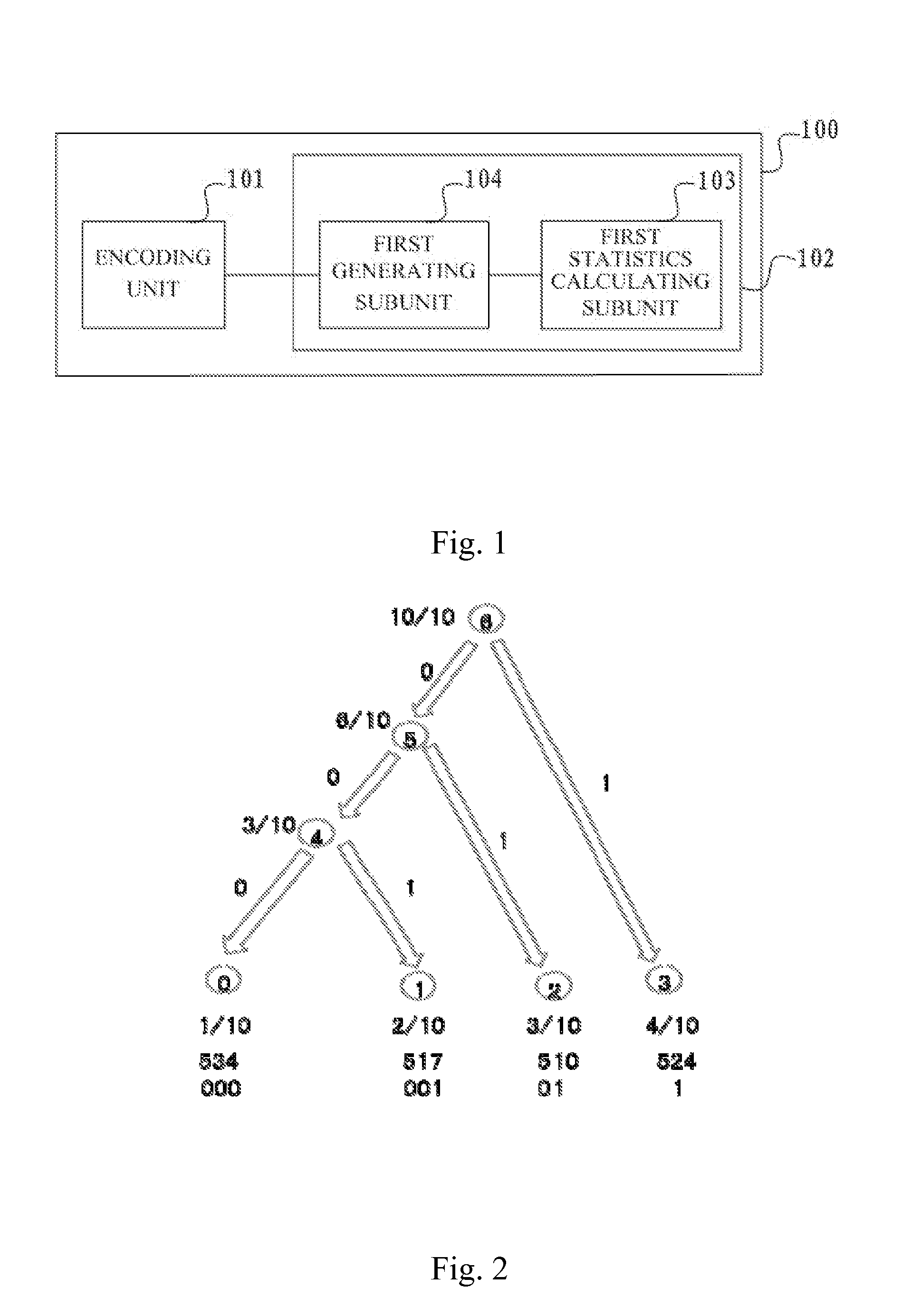

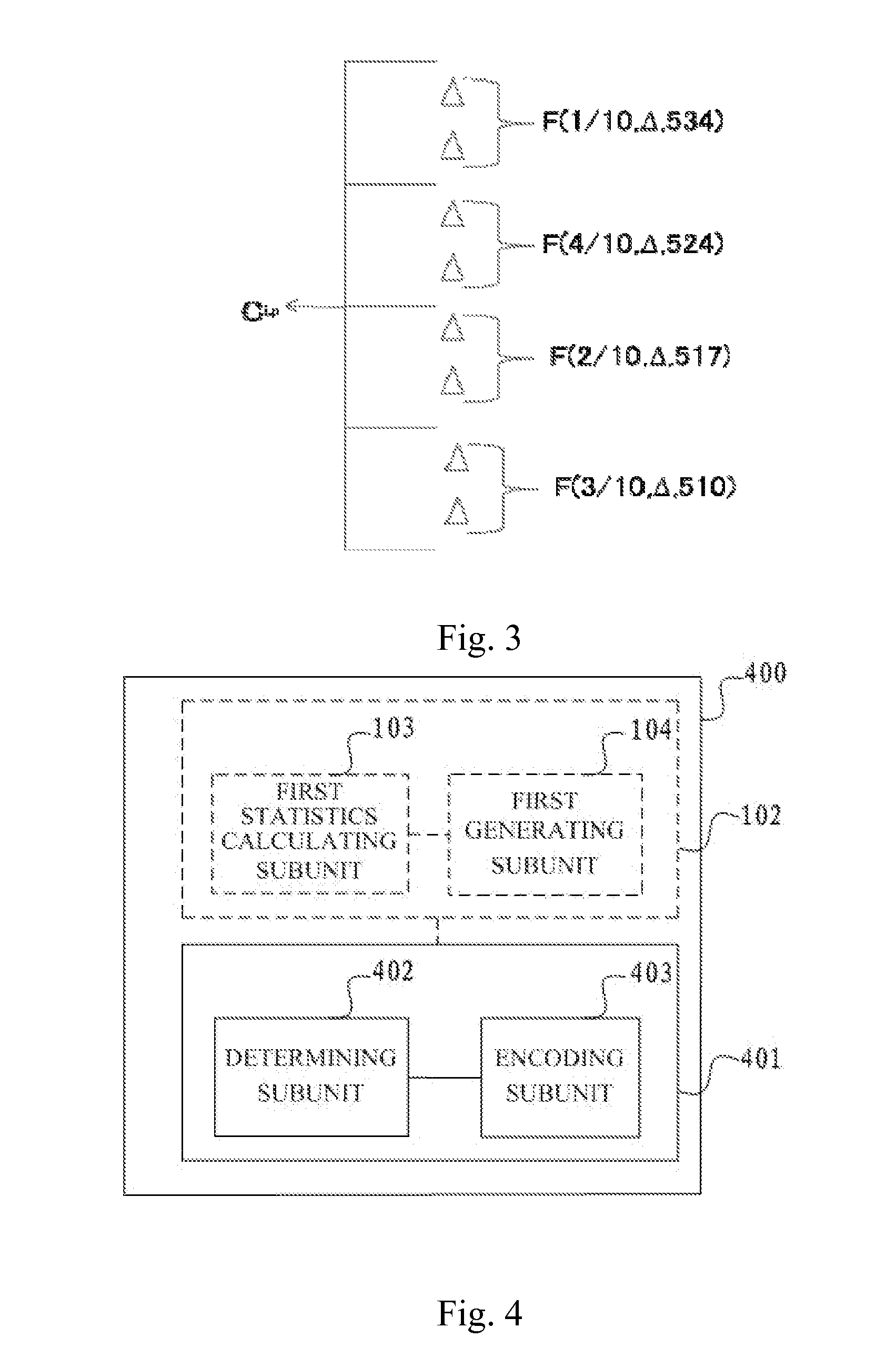

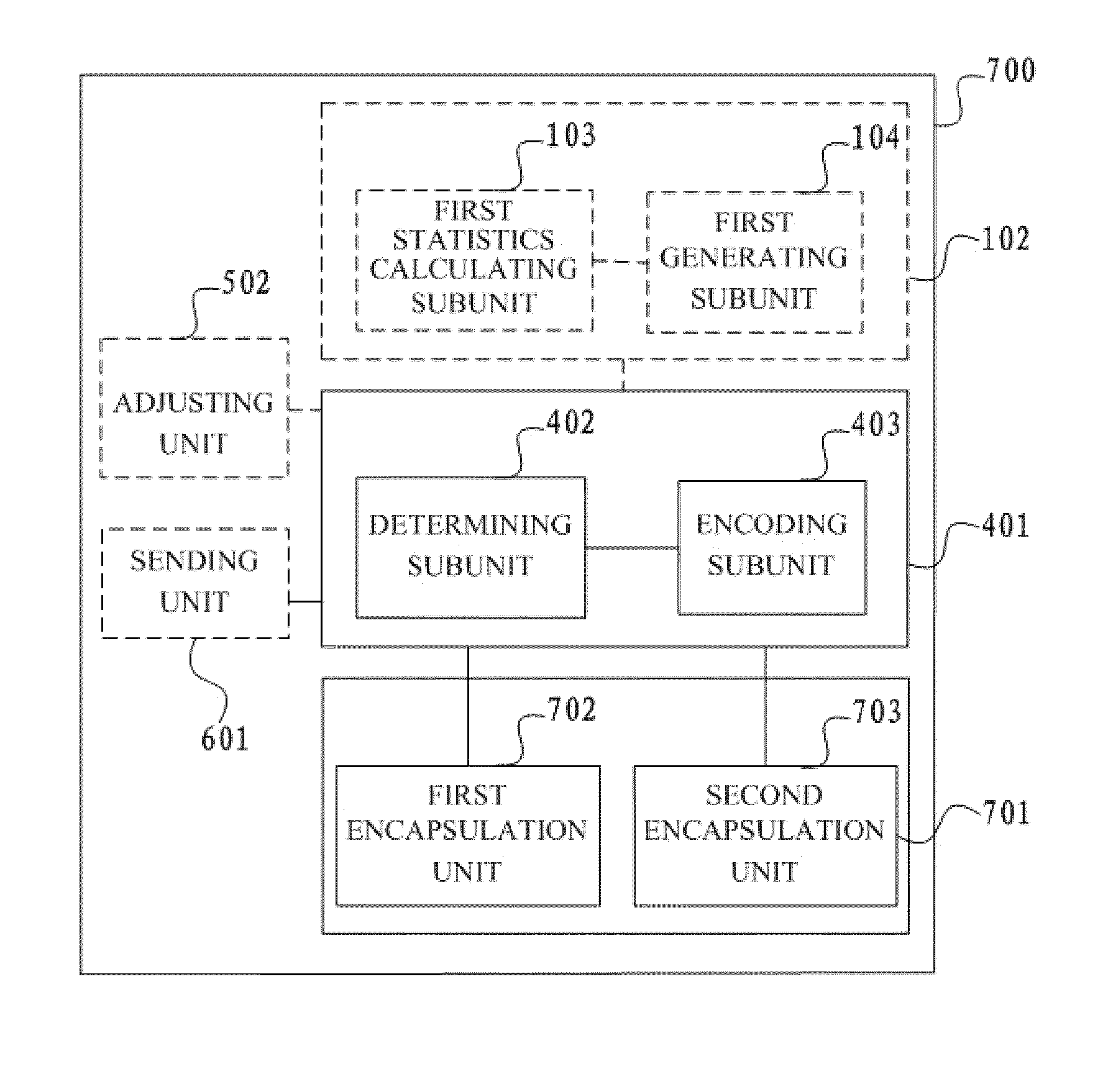

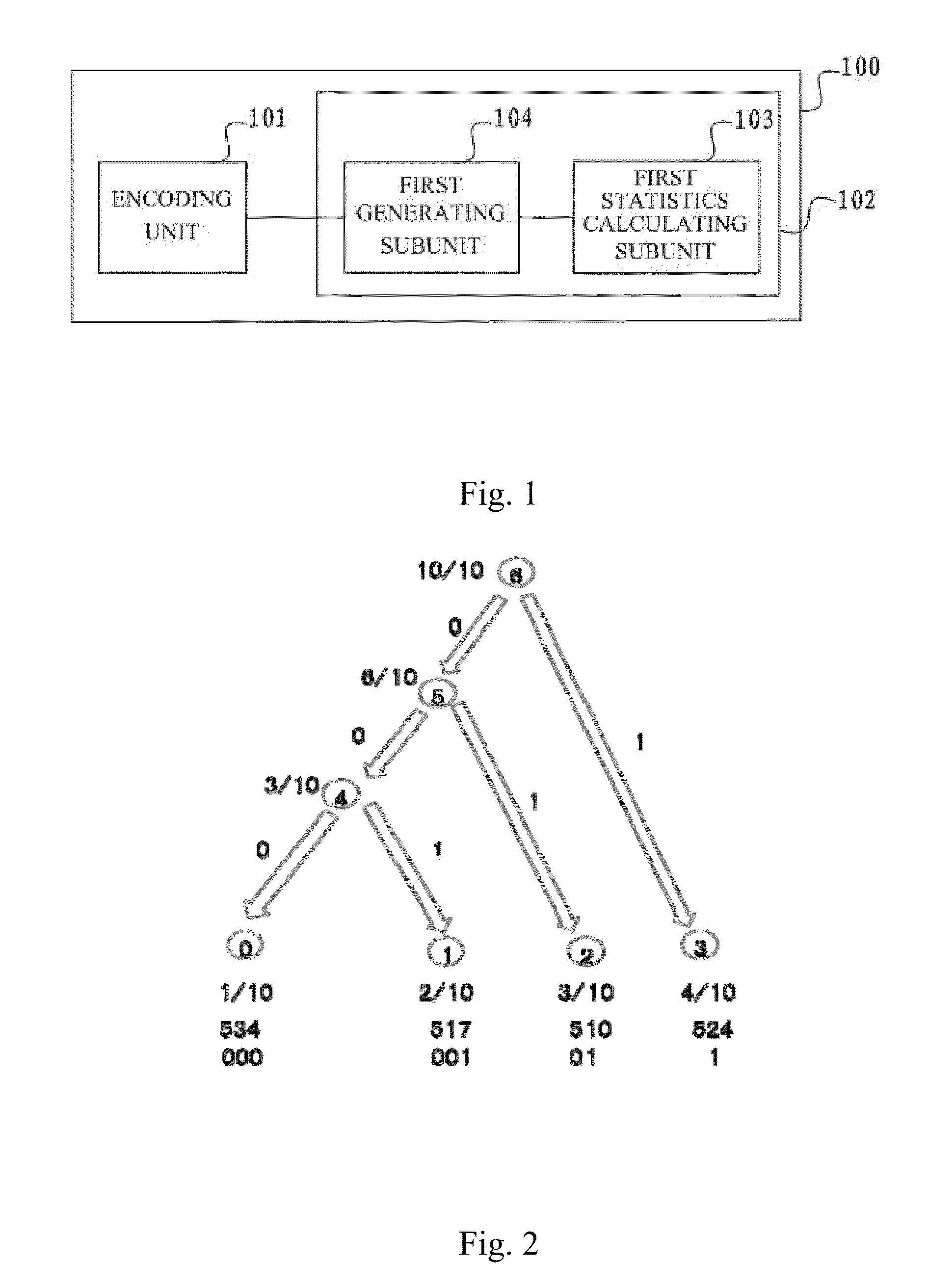

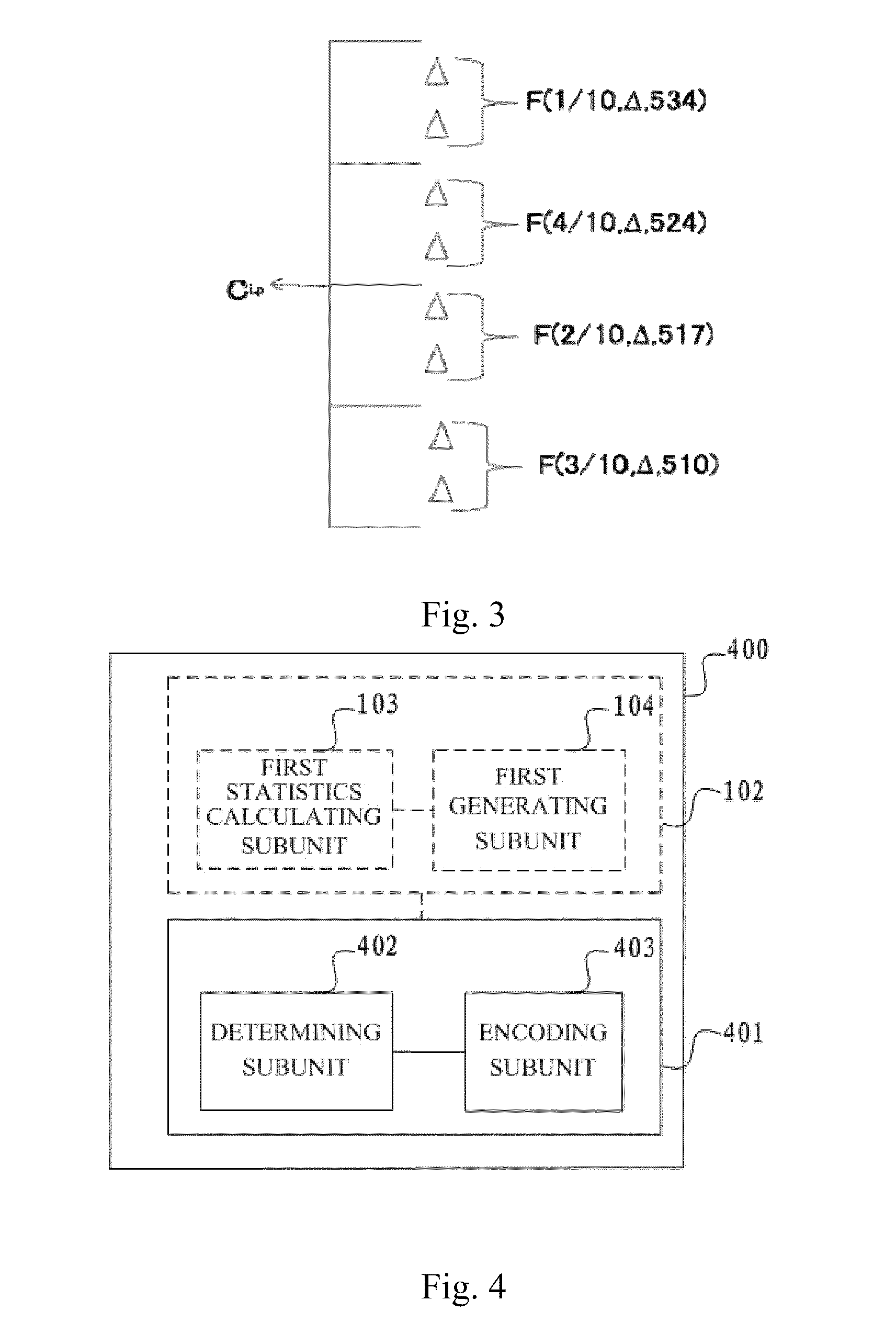

Data processing system and data processing method

Inactive | US20120263255A1 | Reduced channel load; Less channel access collision; Energy efficient ICT; Particular environment based services; Data processing system; Original data

The present invention provides a source node device and a destination node in a data processing system, a data processing system, and a decoding method. The source node device includes an encoding unit. The encoding unit encodes collected original data according to a codebook that contains encoded numerical values and their corresponding sending intervals, producing encoded data with a corresponding encoded numerical value from the codebook. It also determines the sending interval that the codebook associates with the encoded data. Because different encoded data are distinguished by the sending intervals defined in the codebook, the invention improves channel load, channel access collisions, energy efficiency, resource consumption, and lifetime of the data processing system.

Owner:FUJITSU LTD

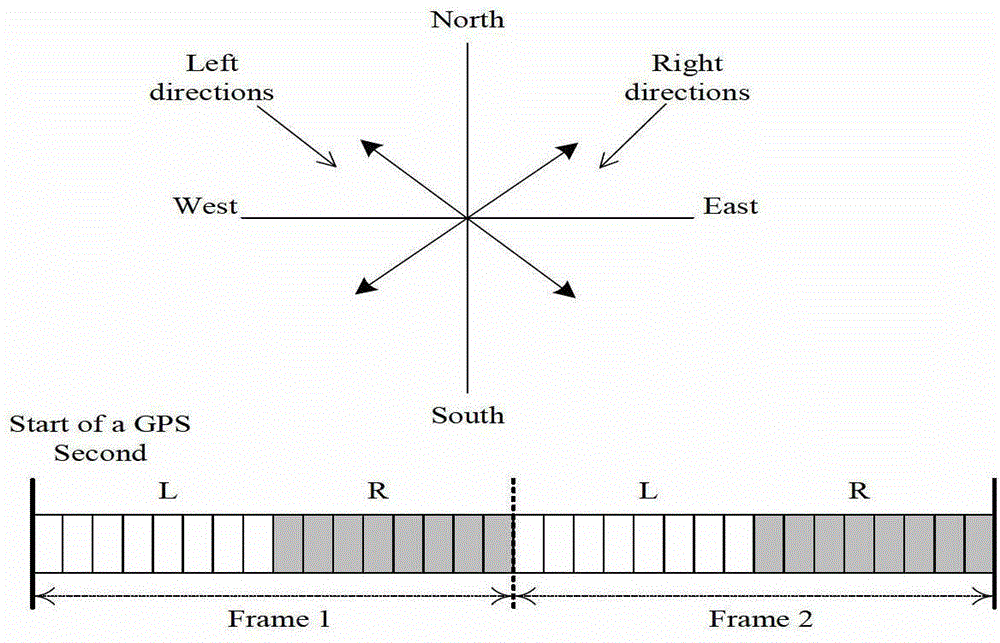

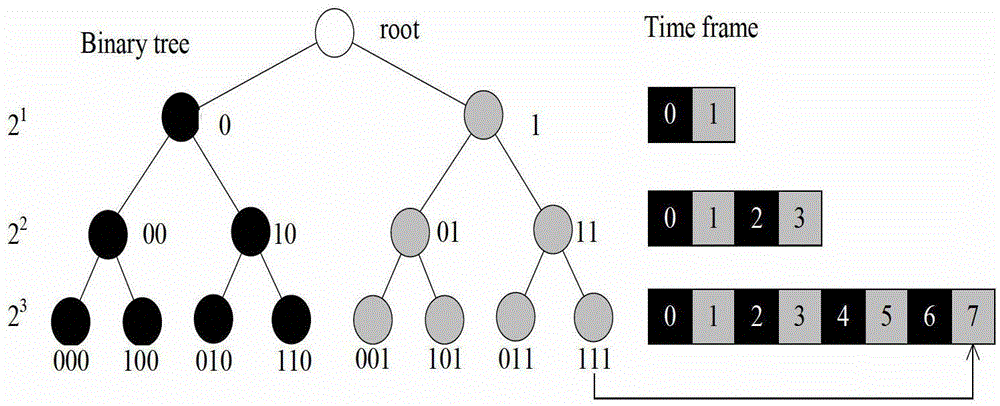

A TDMA-based adaptive time slot allocation method for vehicular ad hoc networks

Inactive | CN103096327B | Reduce access conflicts; Meet the needs of fast access channel; Radio transmission for post communication; Network planning; Extensibility; Time division multiple access

The invention relates to an adaptive time slot allocation method for vehicular ad hoc networks based on time division multiple access (TDMA). The method divides a time frame into a left time slot set and a right time slot set, and divides nodes into a left node set and a right node set according to their direction of movement. Nodes in the left or right node set choose contention time slots from the left or right time slot set, respectively, based on their current geographic location and according to certain rules. The method greatly reduces the proportion of access collisions and merging collisions among nodes. In addition, the frame length is adjusted dynamically according to the node density that the nodes sense, so that nodes can access the channel quickly. Both theoretical analysis and simulation experiments verify the method's low delay. Compared with the prior art, the method has fewer colliding nodes, higher channel utilization, and good scalability.

Owner:HENAN UNIVERSITY OF TECHNOLOGY +1
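
The direction-based split can be sketched in Python as follows. Dividing the frame into left and right halves by heading comes from the abstract. Choosing uniformly among free slots is an assumption standing in for the unspecified location-based rules, and the function name is hypothetical.

```python
import random


def choose_slot(direction, frame_len, occupied, rng=random):
    """Pick a free contention slot from the half of the frame reserved for a direction.

    direction: "left" or "right", derived from the node's heading.
    occupied: set of slot indices already in use, as seen by this node.
    Returns a slot index, or None when that half of the frame is full.
    """
    half = frame_len // 2
    candidates = range(0, half) if direction == "left" else range(half, frame_len)
    free = [s for s in candidates if s not in occupied]
    return rng.choice(free) if free else None
```

Vehicles moving in opposite directions draw from disjoint halves of the frame, so two groups converging on each other cannot end up in the same slot. That is the intuition behind fewer merging collisions.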

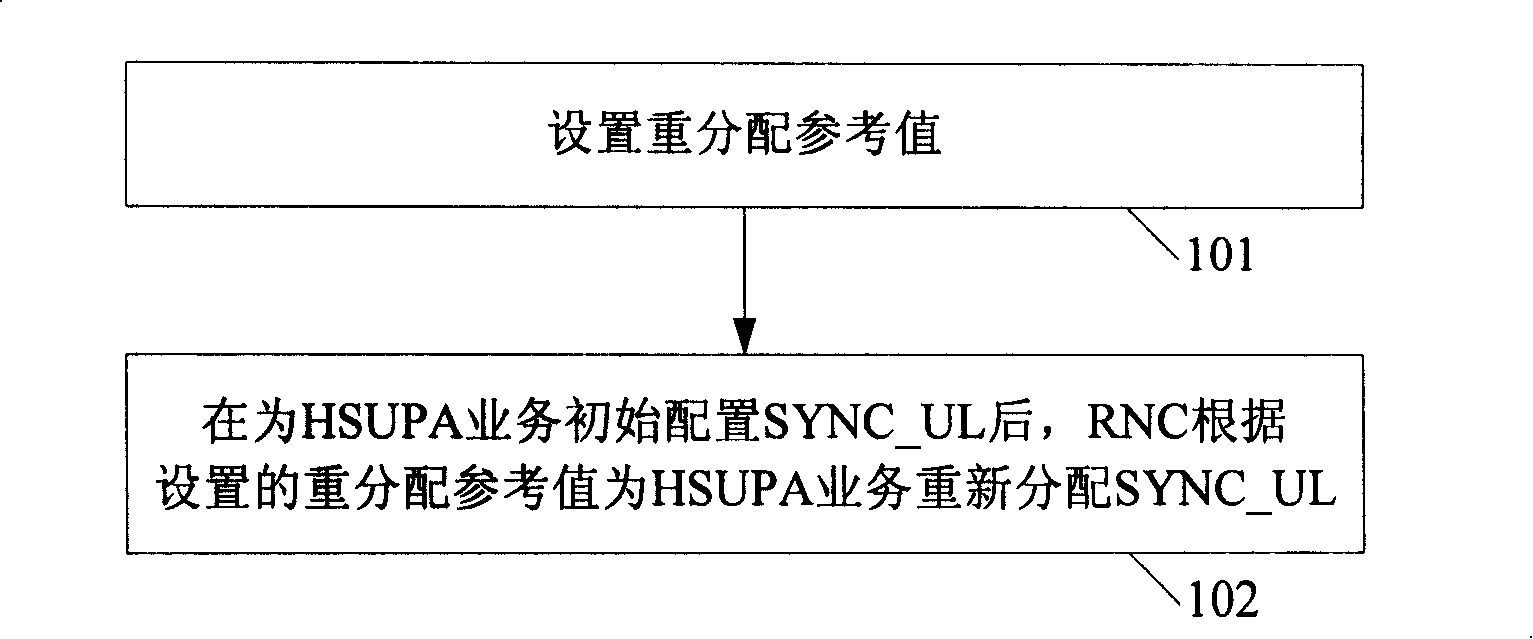

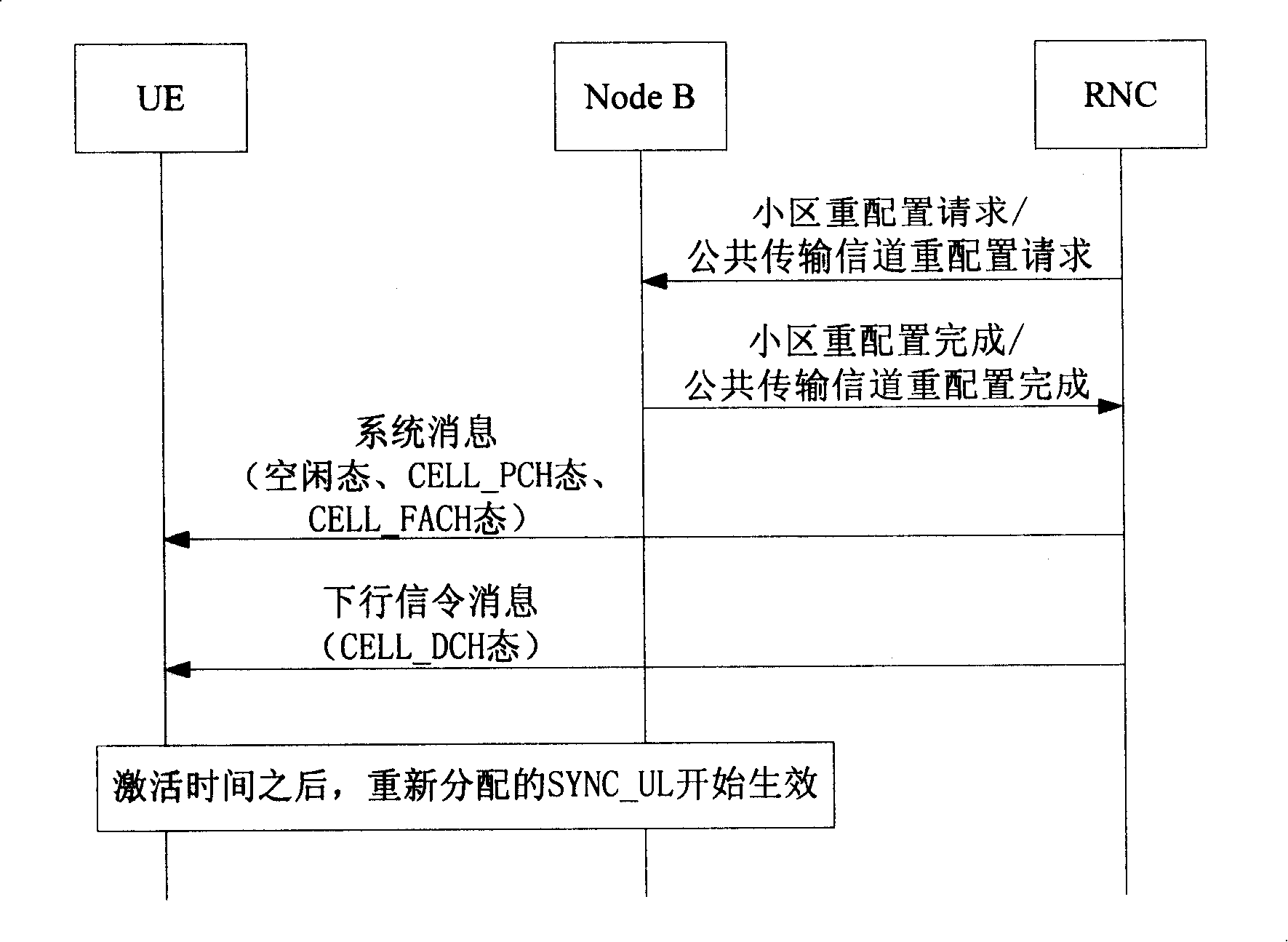

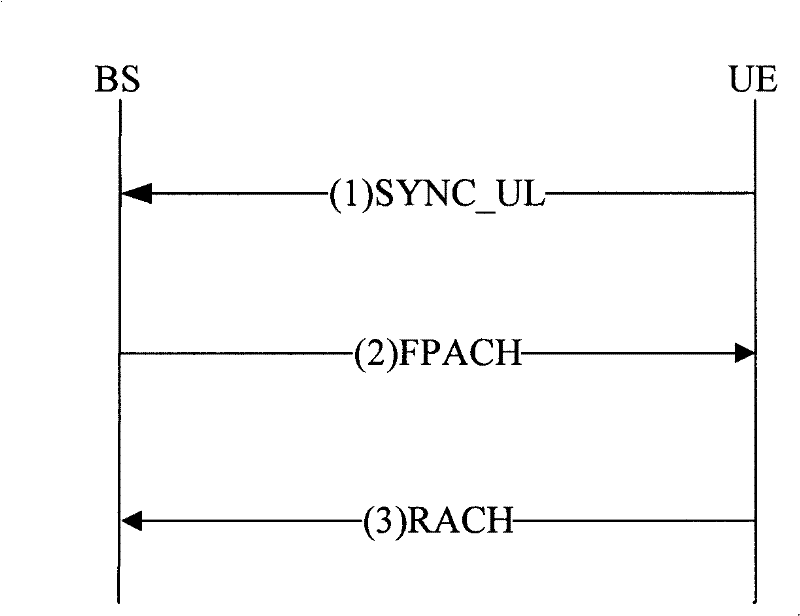

An allocation method for uplink synchronous code

Inactive | CN101242641A | Improve rationality; Improve access capabilities; Radio/inductive link selection arrangements; Radio transmission for post communication; S distribution; Telecommunications

The invention provides an allocation method for uplink synchronization codes. The method includes setting redistribution criteria, initially configuring uplink synchronization codes (SYNC_UL) for the high speed uplink packet access (HSUPA) service, and then having the radio network controller redistribute SYNC_UL codes for the HSUPA service according to the set criteria. The invention makes the allocation of uplink synchronization codes more rational, reduces access collisions caused by unreasonable SYNC_UL allocation, improves the access performance of the system, and improves the user experience.

Owner:TD TECH COMM TECH LTD

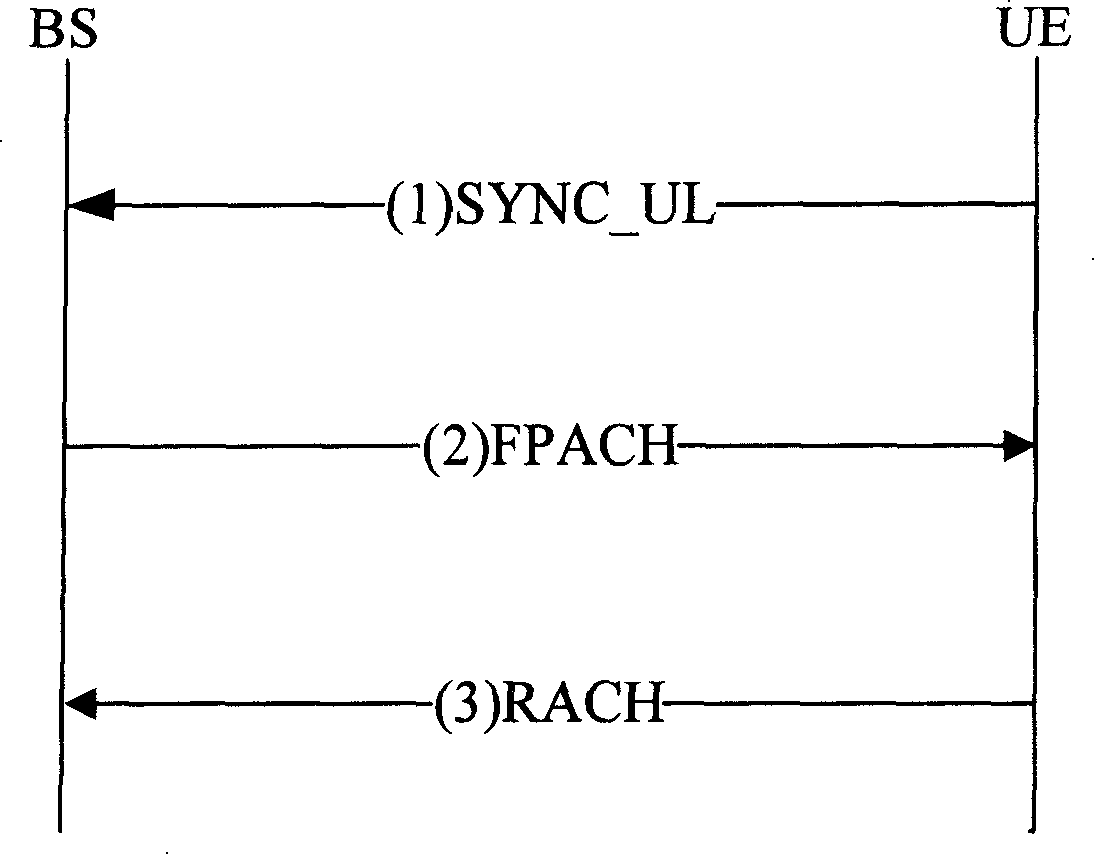

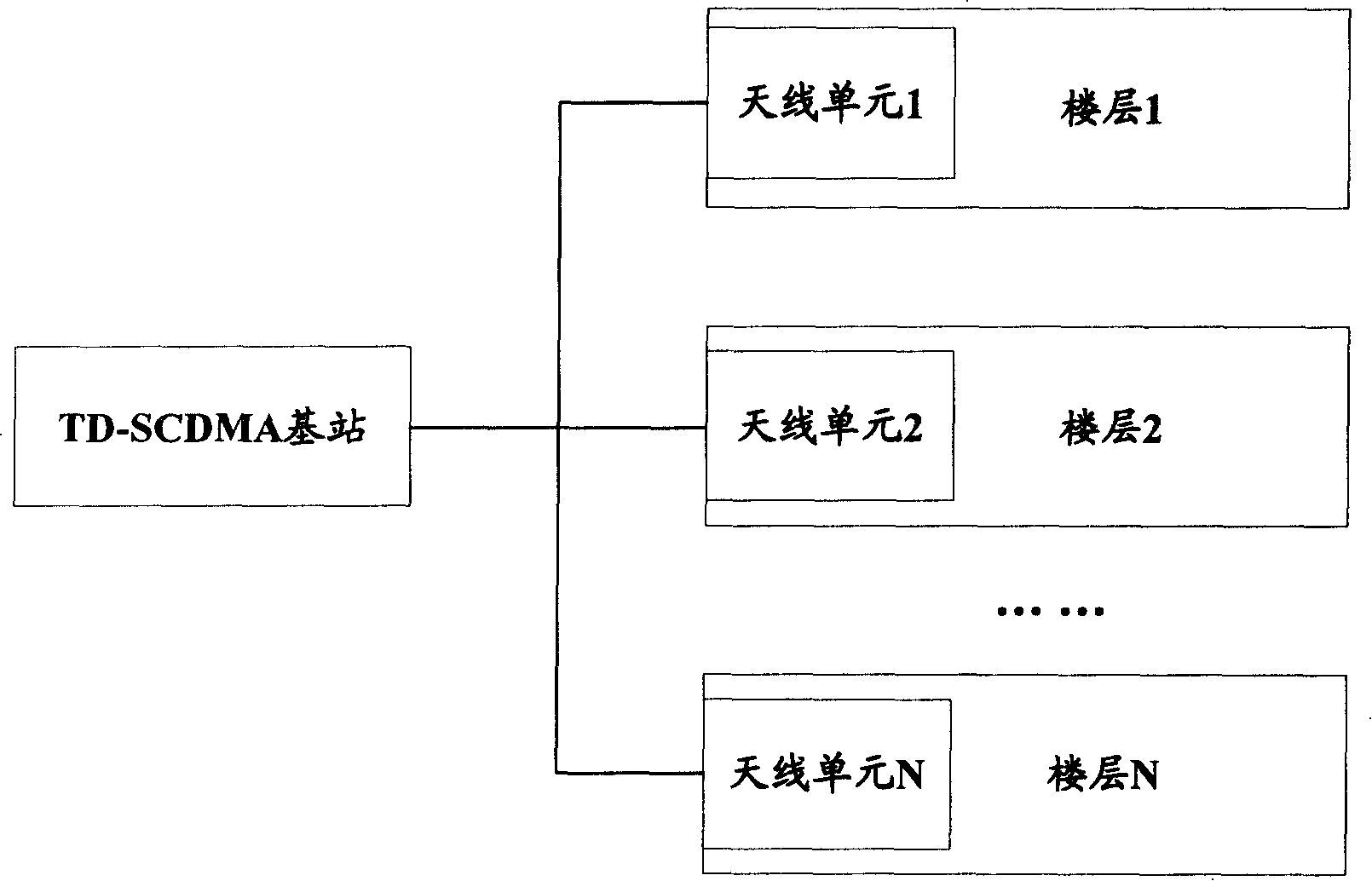

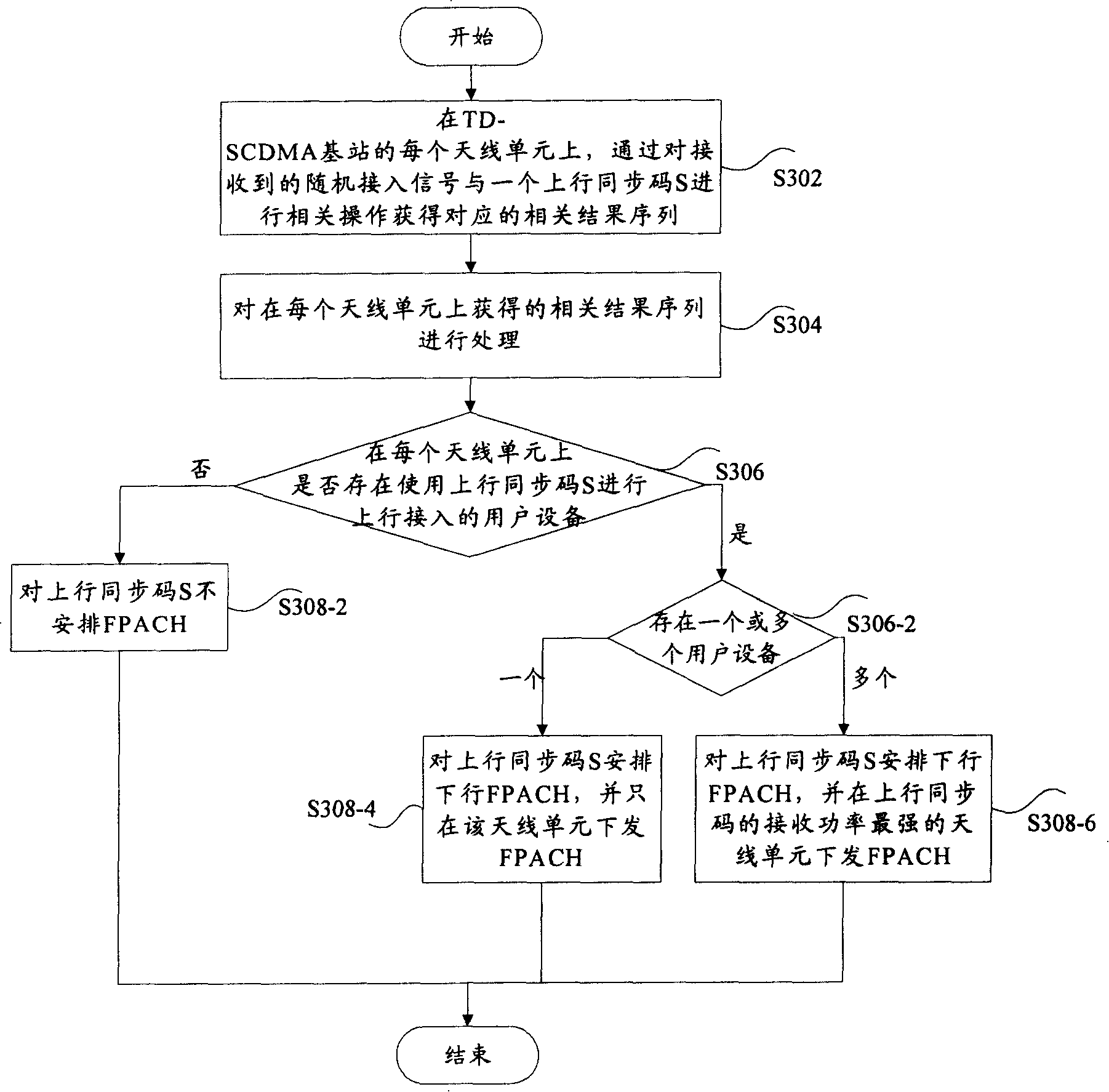

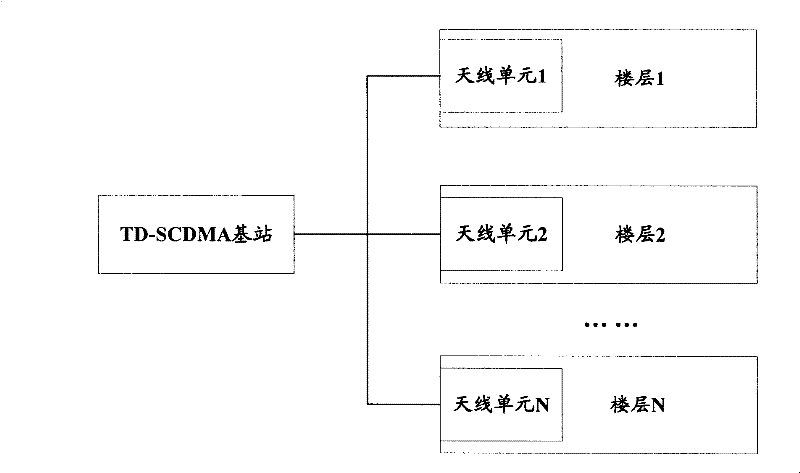

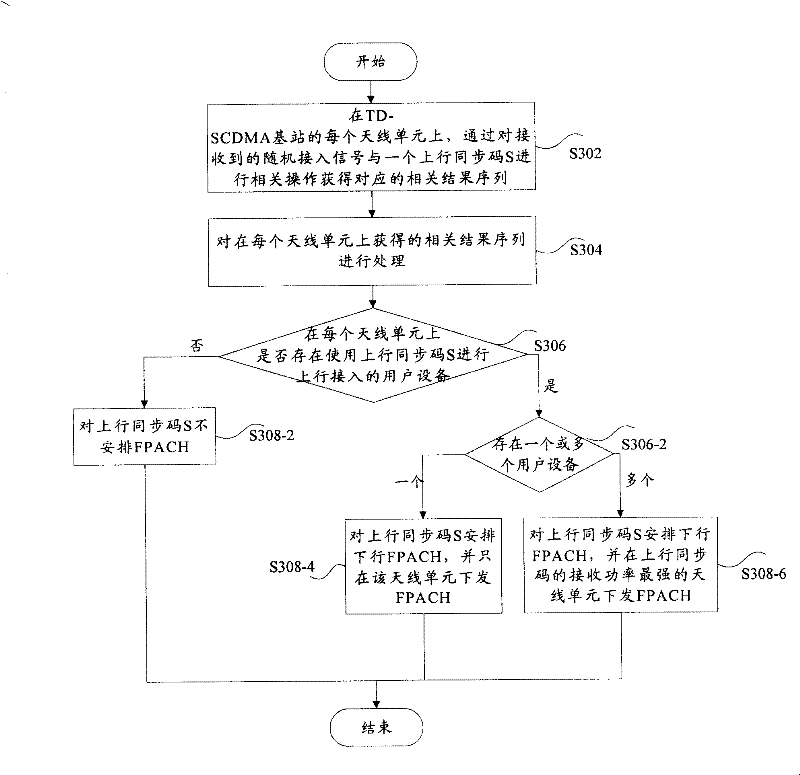

Random access method for Time Division-Synchronous Code Division Multiple Access system

Inactive | CN101174884A | Avoid wasting; Reduce access conflicts; Radio/inductive link selection arrangements; Radio transmission for post communication; Access method; User device

The present invention discloses a random access method for a time division synchronous code division multiple access (TD-SCDMA) system based on distributed antennas. The method comprises the following processing. Each antenna unit of the TD-SCDMA base station correlates the received random access signal with the uplink synchronization code to obtain a correlation result sequence. The correlation result sequences obtained by the antenna units are processed to determine, for each antenna unit, whether a user device is performing uplink access with that uplink synchronization code. Based on the determination result, the base station decides whether to allocate a downlink forward physical access channel for the uplink synchronization code. The technical solution reduces the probability of system access conflicts and increases the success rate of system access.

Owner:ZTE CORP
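
The per-antenna detection step can be illustrated with a toy real-valued correlator: slide the known code over each antenna's samples and compare the peak with a threshold. Actual SYNC_UL codes are complex-valued, and the abstract does not give the detection rule. The signals, threshold, and function names here are therefore assumptions.

```python
def correlate(signal, code):
    """Sliding correlation of a real-valued sample sequence with a known code."""
    n = len(code)
    return [sum(signal[k + i] * code[i] for i in range(n))
            for k in range(len(signal) - n + 1)]


def detect_per_antenna(received, code, threshold):
    """For each antenna unit, report whether the correlation peak reaches the threshold."""
    return [max(correlate(samples, code)) >= threshold for samples in received]
```

A per-antenna decision like this is what lets the base station tell which antenna unit (and so which area) a given access attempt came from. It is also what allows two users sending the same code to different antenna units to be distinguished.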

Controller for updating configuration information cache in reconfigurable systems

Active | CN103488585B | Improve dynamic refactoring efficiency; Increase profit; Program loading/initiating; Memory systems; External storage; Memory interface

The invention discloses a controller for realizing configuration information cache update in a reconfigurable system. The controller comprises a configuration information cache unit, an off-chip memory interface module and a cache control unit. The configuration information cache unit caches configuration information that one or more reconfigurable arrays may use within a period of time; the off-chip memory interface module reads configuration information from an external memory and transfers it to the configuration information cache unit; and the cache control unit controls the reconfiguration process of the reconfigurable arrays. The reconfiguration process includes: mapping subtasks of an algorithm application to a reconfigurable array; setting a priority strategy for the configuration information cache unit; and replacing configuration information in the configuration information cache unit according to an LRU_FRQ replacement strategy. The invention further provides a method for realizing configuration information cache update in the reconfigurable system. Updating the cache with the LRU_FRQ replacement strategy changes the traditional way the configuration information cache is updated and improves the dynamic reconfiguration efficiency of the reconfigurable system.

Owner:SOUTHEAST UNIV

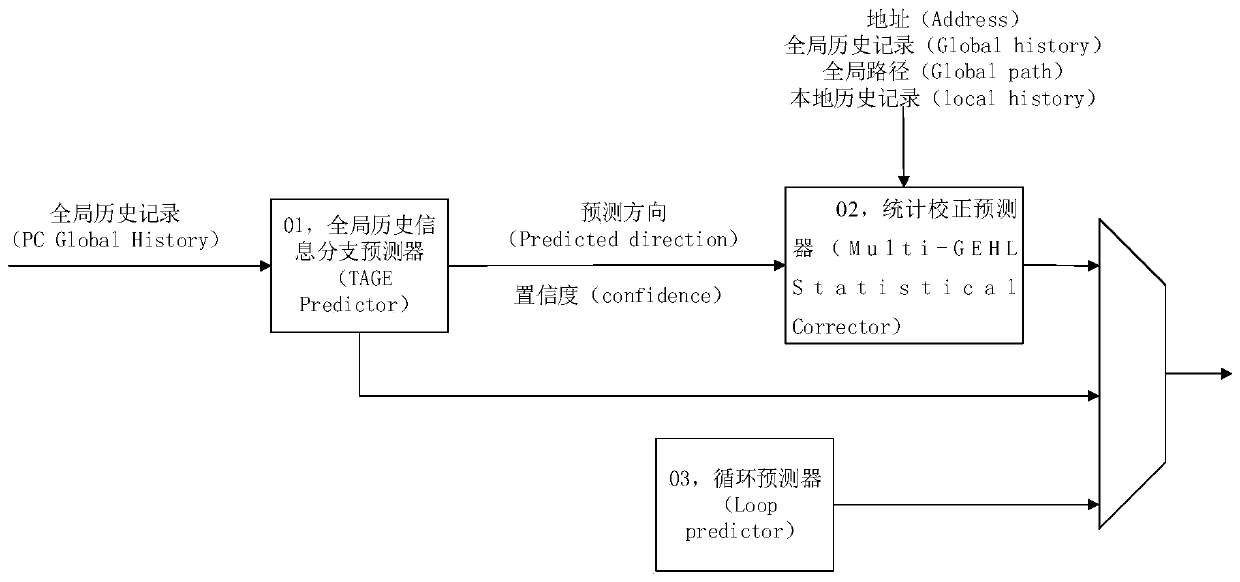

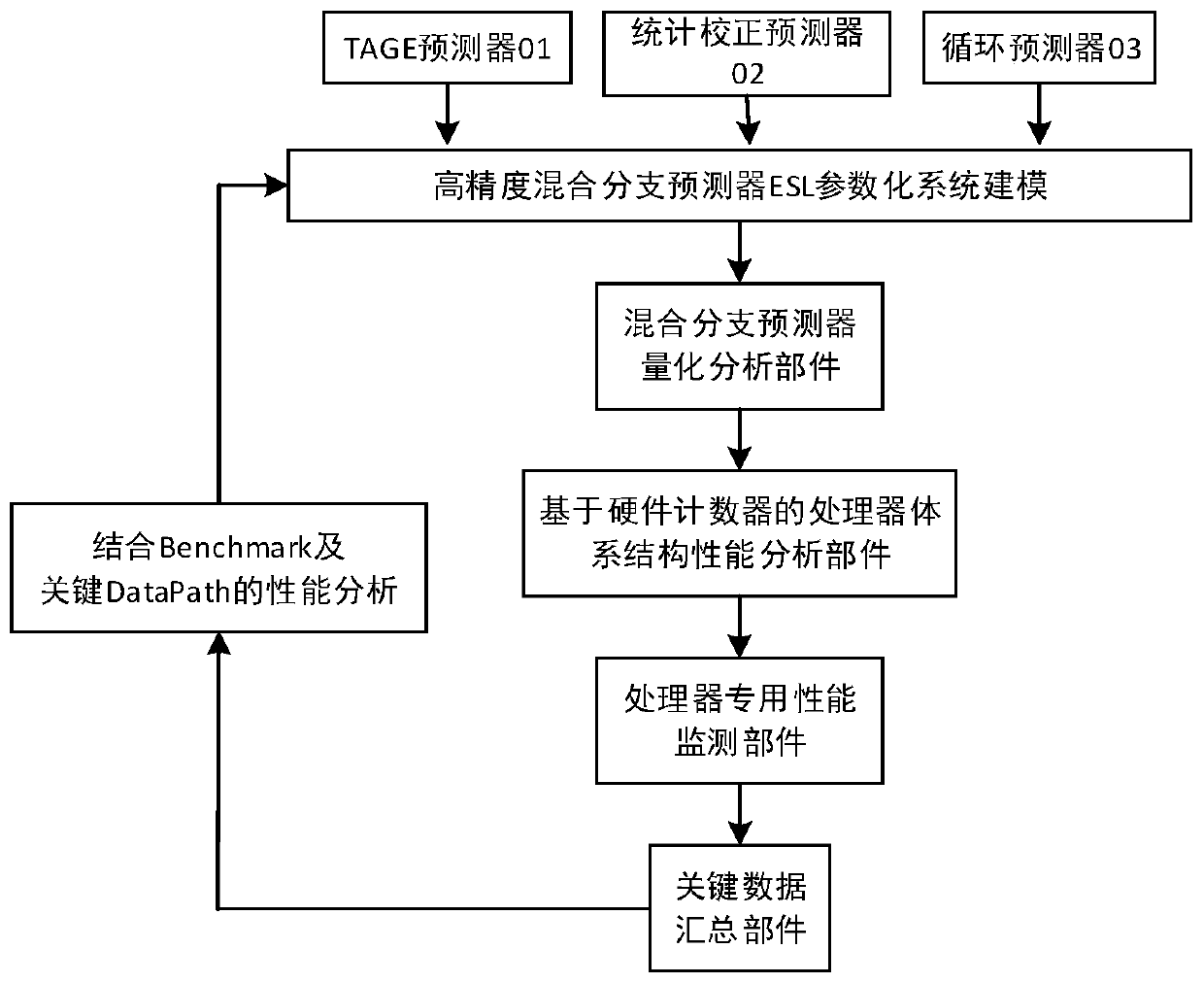

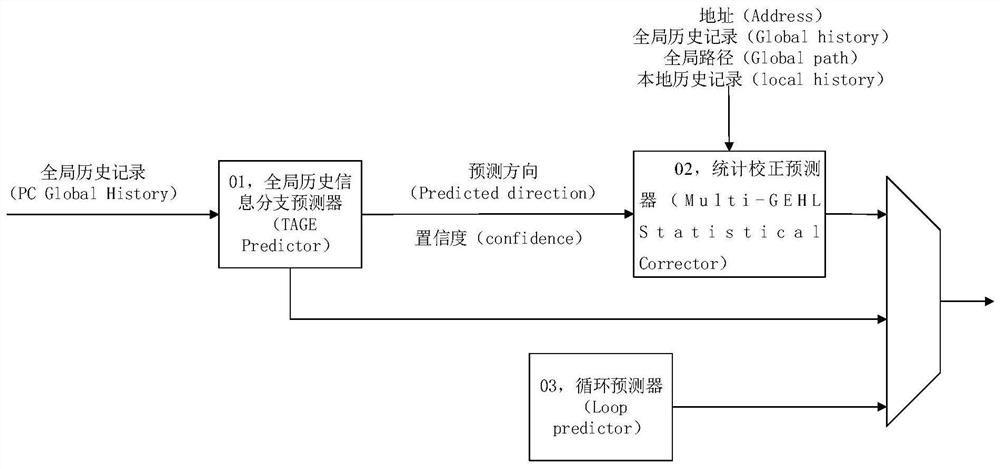

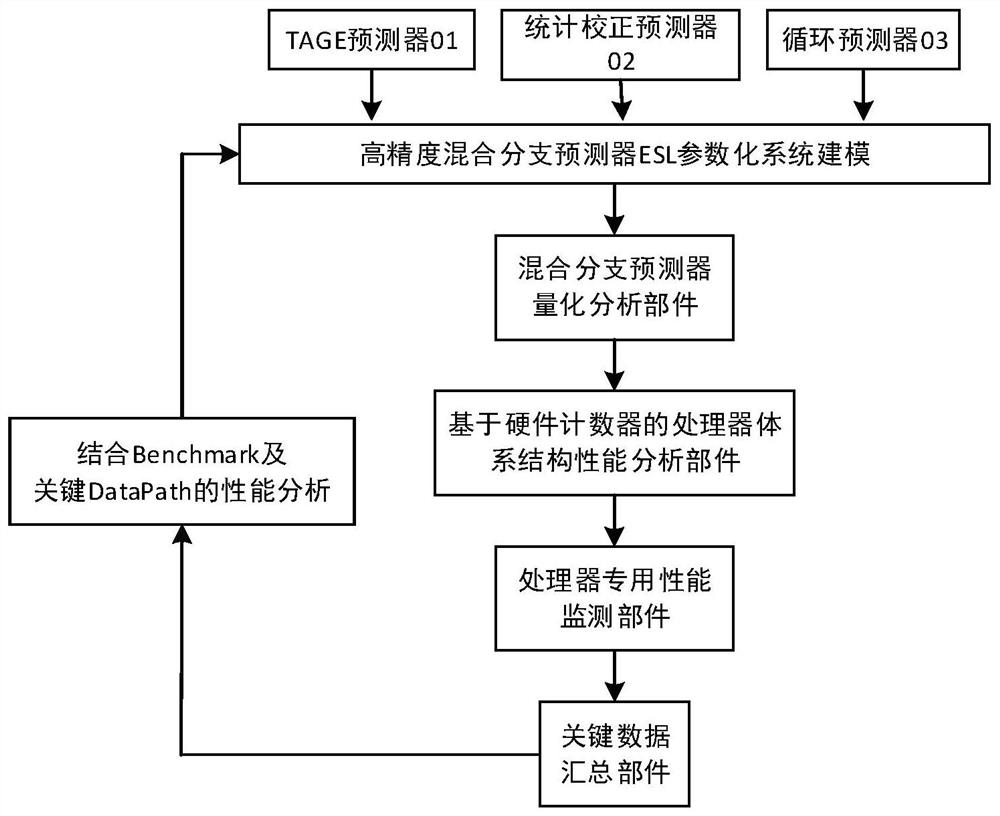

Hybrid branch prediction device and method for out-of-order high-performance cores

Active | CN111078295A | Alleviate rename blocking; Reduce access conflicts; Concurrent instruction execution; Algorithm; Confidence metric

The invention discloses a hybrid branch prediction device and method for out-of-order high-performance cores, and relates to the field of computer branch prediction. The device can be used to evaluate the microarchitecture-level performance of the processor. It reduces renaming blockage in out-of-order high-performance processors caused by branch mispredictions and instruction misses, and it provides a hybrid branch predictor with high accuracy and flexible, parameterized configuration. The hybrid branch predictor consists of a TAGE predictor based on global history information, a statistical correction predictor, and a loop predictor. The TAGE predictor uses parameterized tagged components and a split-read improvement strategy to achieve high-accuracy branch prediction and reduce access conflicts. The statistical correction predictor confirms or reverts the TAGE prediction according to that prediction and its confidence. The loop predictor predicts regular loops with long loop bodies using a replacement strategy and a loop branch conversion technique. The design makes full use of limited hardware storage, greatly reduces access conflicts, and improves overall processor performance along with branch prediction accuracy.

Owner:核芯互联科技(青岛)有限公司
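
Of the three components, the loop predictor is the easiest to illustrate. The Python sketch below is a generic loop-predictor entry, not the patented design. It learns a trip count, becomes confident once the same count repeats, and then predicts the loop exit. It assumes the loop branch is taken on every iteration except the last.

```python
class LoopPredictorEntry:
    """Toy loop predictor entry that learns a loop's trip count and predicts its exit."""

    def __init__(self):
        self.trip_count = None   # learned iterations per loop execution
        self.current = 0         # iterations completed in the current execution
        self.confident = False   # set once the same trip count is seen twice in a row

    def predict(self):
        """Return True (taken) / False (exit), or None to defer to the main predictor."""
        if not self.confident:
            return None
        return self.current + 1 < self.trip_count   # taken unless this is the last iteration

    def update(self, taken):
        """Record the actual outcome of the loop branch."""
        self.current += 1
        if taken:
            return
        # Loop exited: compare this execution's trip count with the learned one.
        self.confident = self.trip_count == self.current
        self.trip_count = self.current
        self.current = 0
```

When the entry is not confident it returns `None`, leaving the prediction to the main predictor. This is a common arrangement in TAGE-based hybrids, where the loop component overrides only for loops it has learned.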

High-efficiency passing method for road intersection

The invention discloses a high-efficiency passing method for a road intersection. A left-turn passage connecting the two directions of a major trunk road is built in the middle section of the straight-through median that separates its two-way lanes; an approximately quincuncial or oval traffic island is formed at the intersection; the mid-section of the road is fully used to increase the capacity of the intersection; and otherwise wasted road space is put to better use. Without building an overpass at a suburban intersection or T-junction and without removing roadside buildings, left-turning and straight-through traffic on the major trunk road can pass the intersection quickly without stopping. Without disturbing that traffic, left-turning and straight-through traffic on a secondary trunk road can also pass the intersection smoothly, so that traffic flows through the intersection are simple and orderly. Traffic light phase changes are reduced by 50%, greatly improving vehicle passing efficiency and enabling fast passage.

Owner:江西省中业景观工程安装有限公司

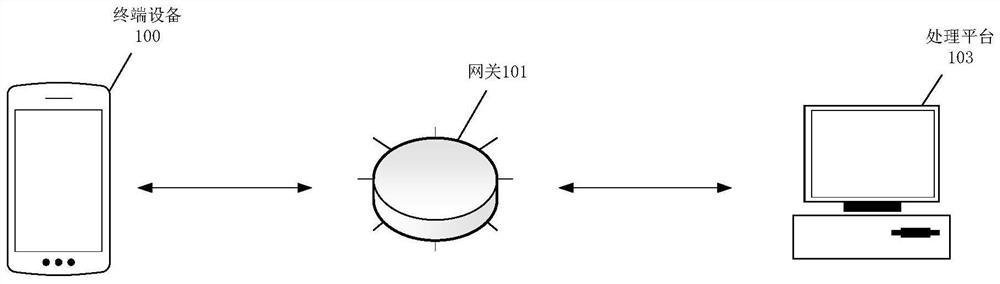

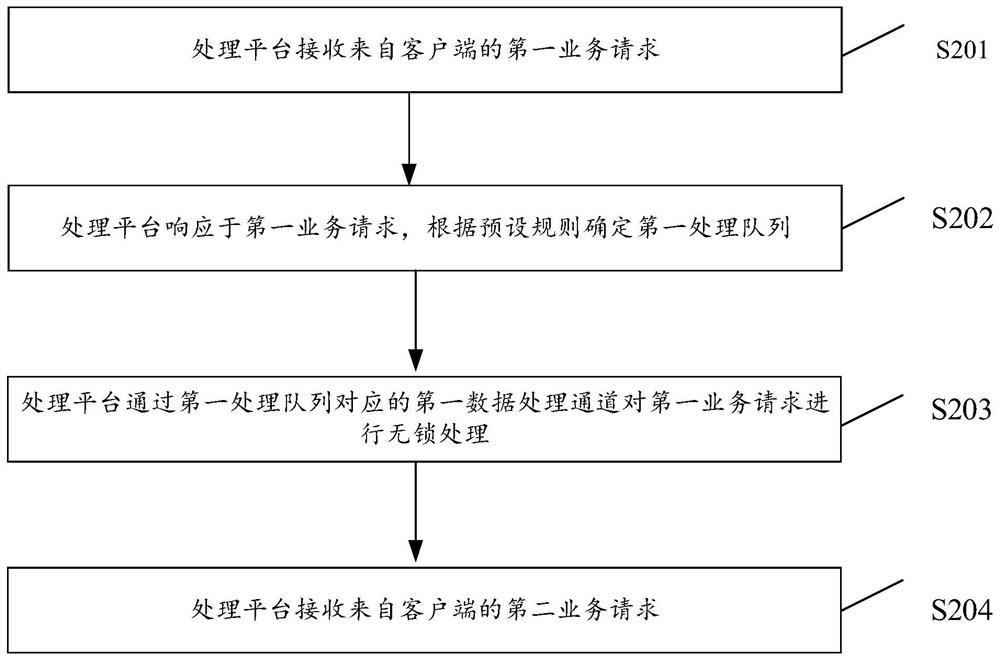

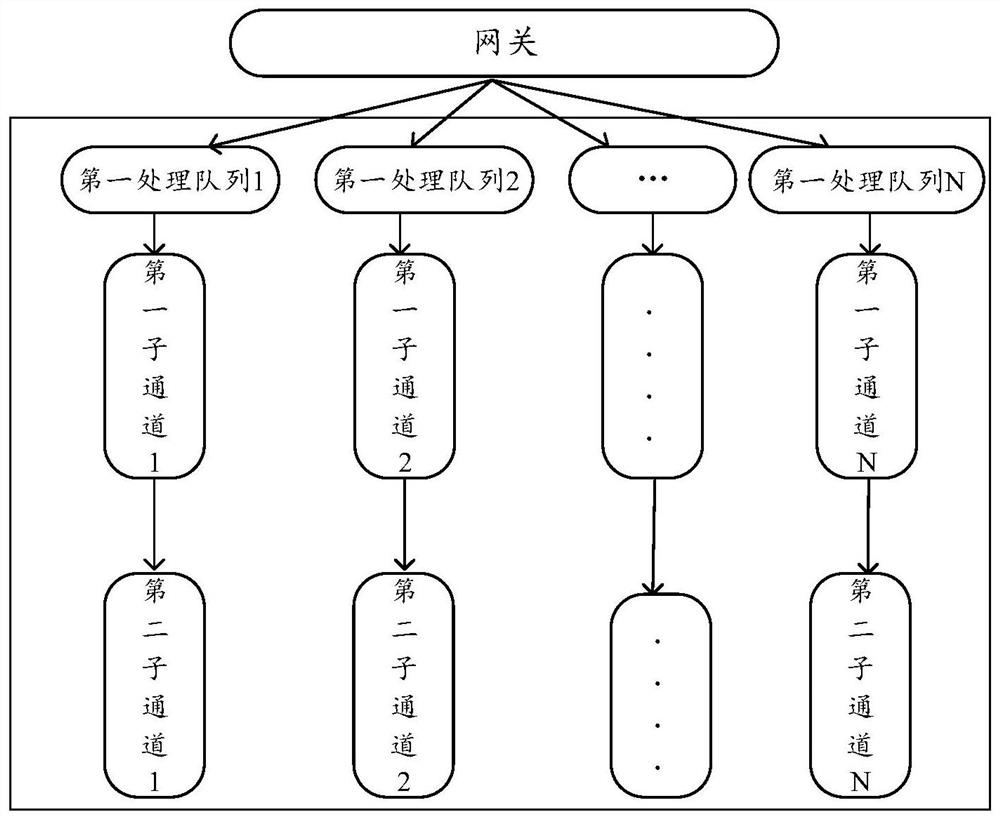

Service data processing method and related equipment

Pending | CN113312184A | Improve data processing capabilities; Improve processing speed; Program synchronisation; Interprogram communication; Distributed services; Industrial engineering

The embodiment of the invention discloses a service data processing method and related equipment. The method comprises two steps. First, a first service request is received from a client and, in response, a first processing queue is determined according to a preset rule. Second, the first service request is processed lock-free through a first data processing channel corresponding to the first processing queue, where the first data processing channel processes distributed service requests in a single-process mode. The embodiment of the invention improves service processing speed.

Owner:PING AN SECURITIES CO LTD
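
The queue-per-channel idea can be sketched in Python with one single-consumer queue per worker thread. A stable hash of a request key picks the queue, so one worker processes all requests for a given key in order, without locks on per-key state. The key, the CRC-32 hash, and the class name are assumptions; the abstract only says that a "preset rule" selects the queue.

```python
import queue
import threading
import zlib


class KeyedDispatcher:
    """Route each request to one of N single-consumer queues by a stable hash of its key."""

    def __init__(self, workers, handler):
        self.queues = [queue.Queue() for _ in range(workers)]
        self.handler = handler   # called as handler(key, payload) on the owning worker
        self.threads = [threading.Thread(target=self._run, args=(q,), daemon=True)
                        for q in self.queues]
        for t in self.threads:
            t.start()

    def _run(self, q):
        while True:
            item = q.get()
            if item is None:     # shutdown sentinel
                break
            self.handler(*item)

    def submit(self, key, payload):
        """Enqueue on the queue owned by this key; same key always maps to the same worker."""
        idx = zlib.crc32(key.encode()) % len(self.queues)
        self.queues[idx].put((key, payload))

    def close(self):
        """Stop all workers after they drain their queues."""
        for q in self.queues:
            q.put(None)
        for t in self.threads:
            t.join()
```

Because each key is owned by exactly one worker, a handler that updates per-key state needs no lock for that state.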

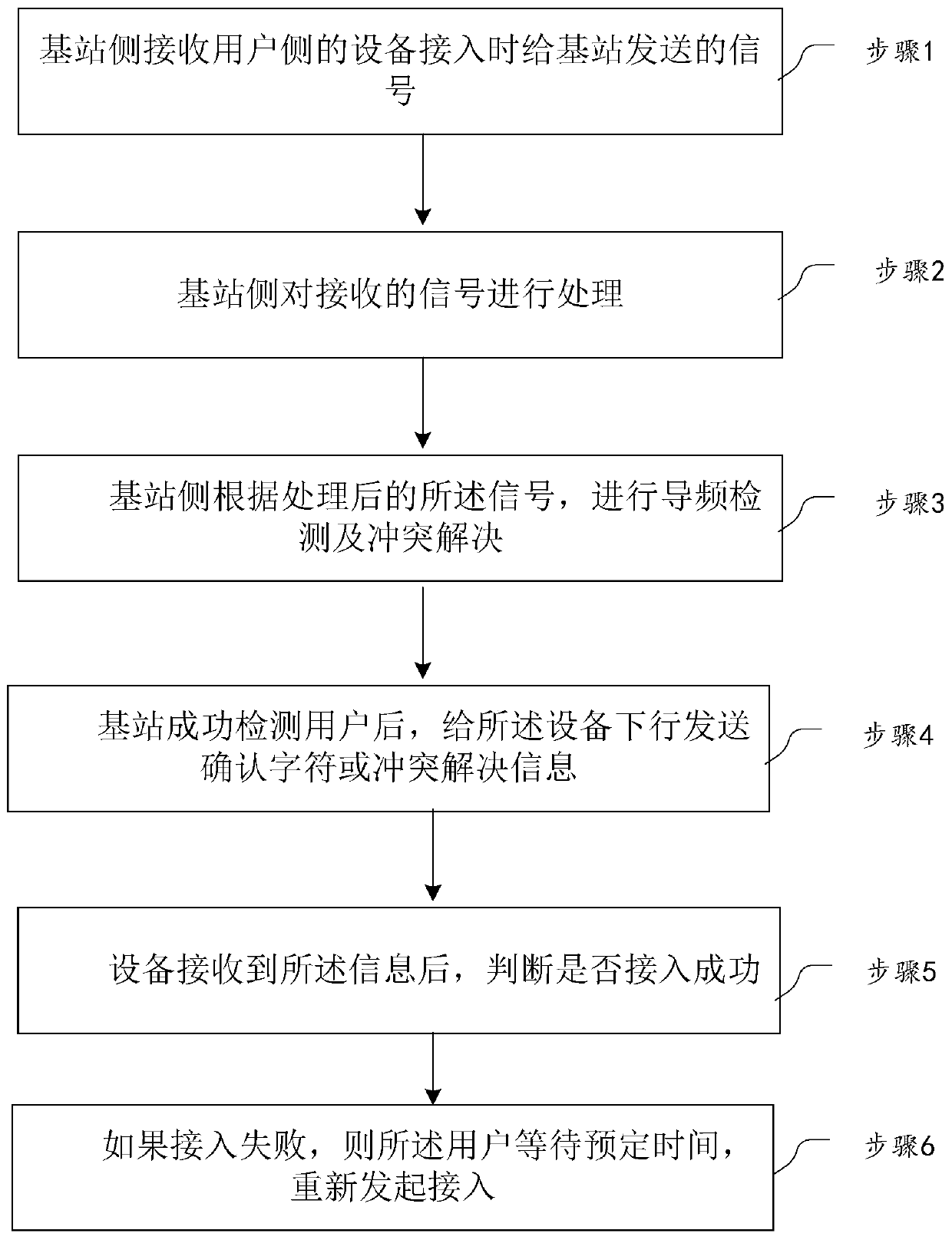

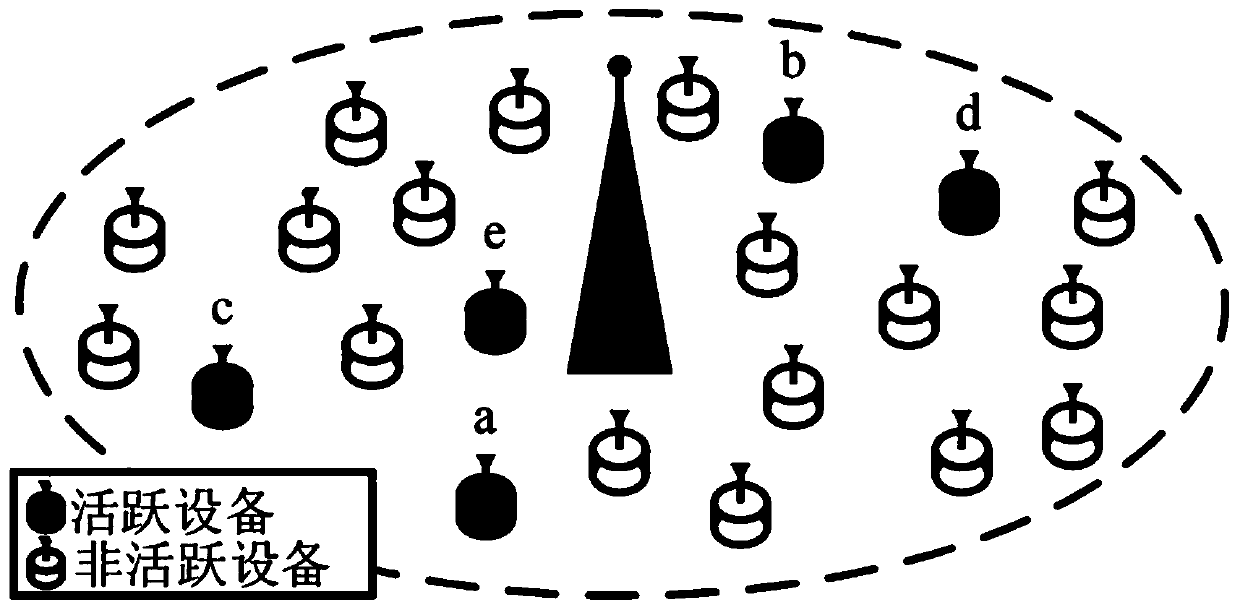

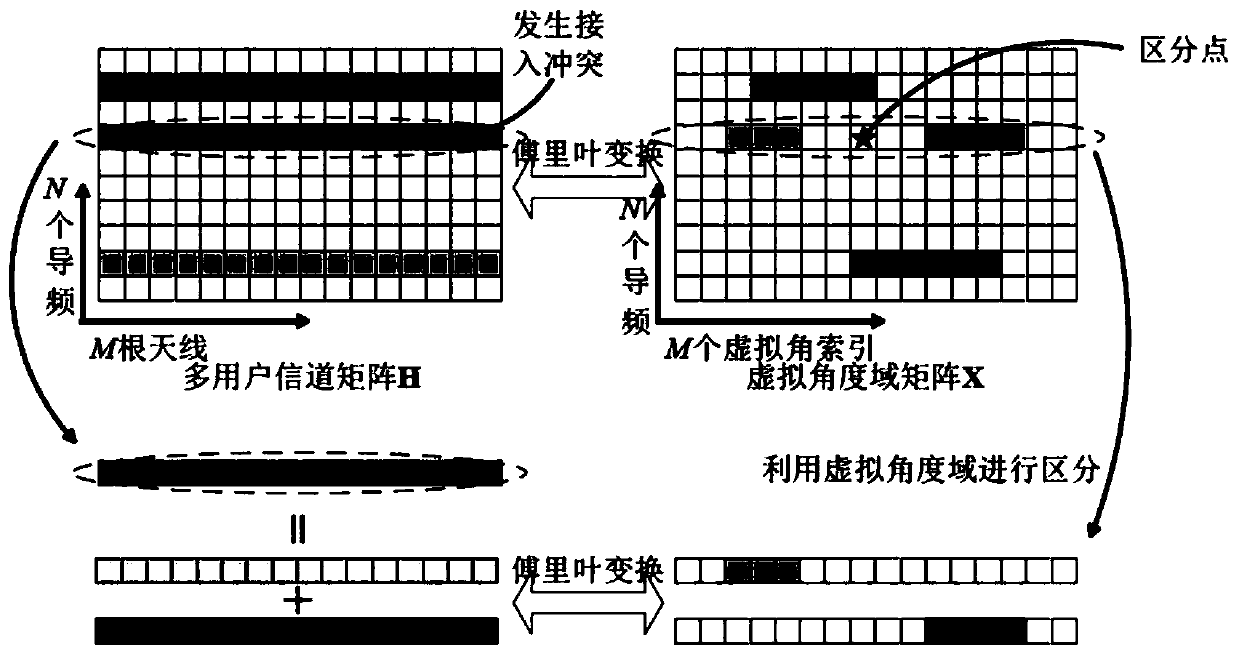

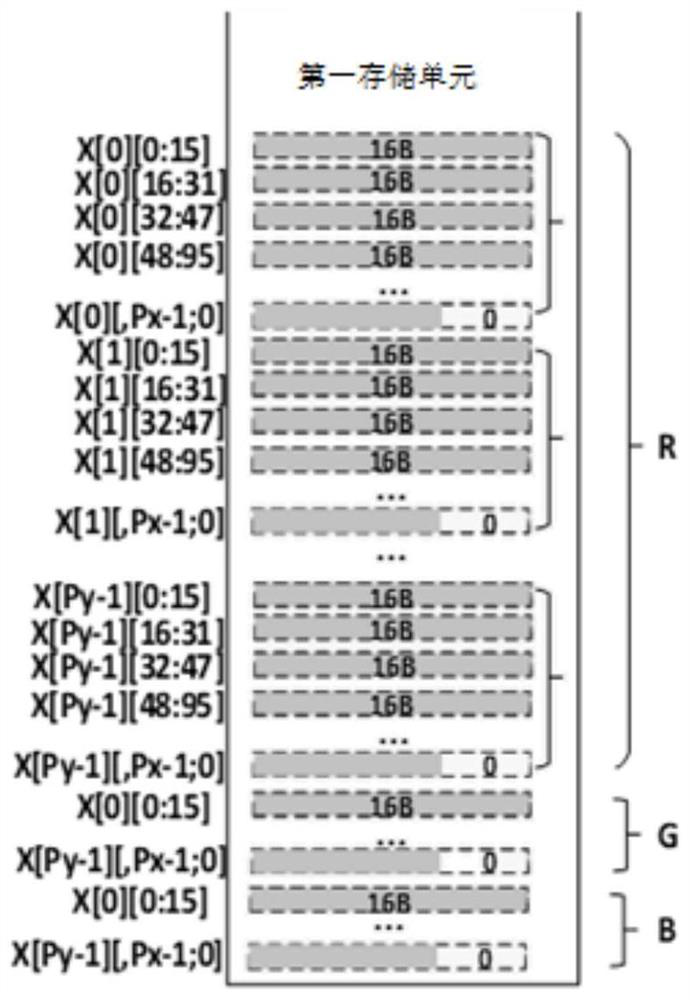

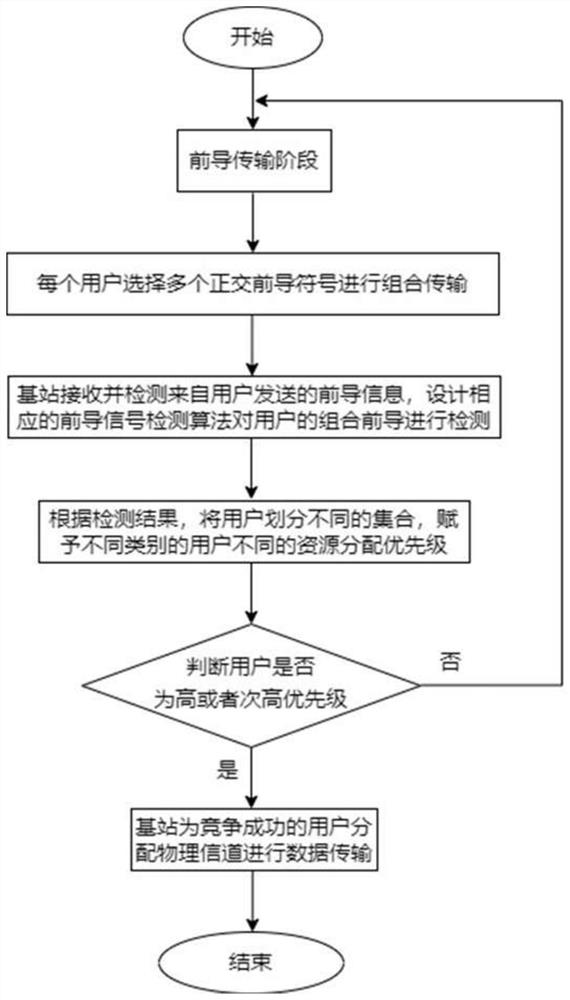

Multi-user random access method and system, and base station

Active | CN111586884A | Increase the number of; Reduce access conflicts; Wireless communication; Algorithm; Engineering

The embodiment of the invention provides a multi-user random access method and system that exploit the cluster sparsity of the channel in the angle domain, and a base station. The method comprises the following steps. (1) The base station side receives the signal $Q$ sent to it by user-side devices when they access the network. (2) The base station side processes the received signal. Specifically, a Fourier transform $Y = QW = AHW + ZW = AX + N$ is applied to $Q$, converting the system model into the multi-antenna virtual angle domain, where the matrix $W$ is the discrete Fourier transform matrix, $X = HW$ is the angle-domain channel information matrix, and $N = ZW$. (3) The base station side performs pilot detection and conflict resolution on the processed signal.

Owner:BEIJING JIAOTONG UNIV
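
The transform in step 2 can be checked numerically. The pure-Python sketch below assumes a unitary DFT matrix $W$ (the abstract does not state the normalization). It also assumes a uniform linear array whose user channels lie exactly on DFT grid angles. Under those assumptions each row of $X = HW$ is one-hot, and $QW$ equals $AX + N$ with $N = ZW$. Real channels spread over a cluster of neighboring bins, which is the cluster sparsity the method exploits. All function names are hypothetical.

```python
import cmath


def dft_matrix(m):
    """Unitary DFT matrix with W[p][q] = exp(-2j*pi*p*q/m) / sqrt(m)."""
    return [[cmath.exp(-2j * cmath.pi * p * q / m) / m ** 0.5 for q in range(m)]
            for p in range(m)]


def matmul(a, b):
    """Plain-list matrix product a @ b."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def matadd(a, b):
    """Element-wise sum of two equally sized matrices."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def steering_row(m, bin_index):
    """Channel row of an m-antenna ULA whose spatial frequency sits on DFT bin bin_index."""
    return [cmath.exp(2j * cmath.pi * bin_index * q / m) for q in range(m)]
```

With $A$ as the pilot matrix, $H$ stacking one channel row per user, and $Z$ as noise, $Q = AH + Z$ and multiplying on the right by $W$ moves every term into the angle domain at once.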

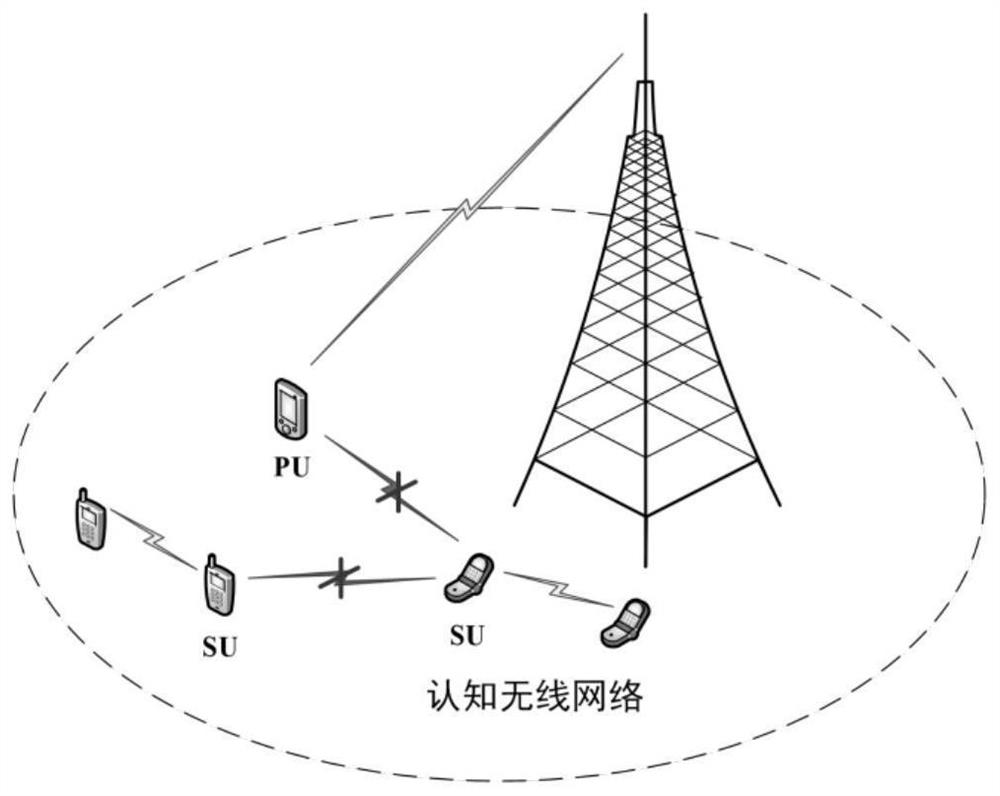

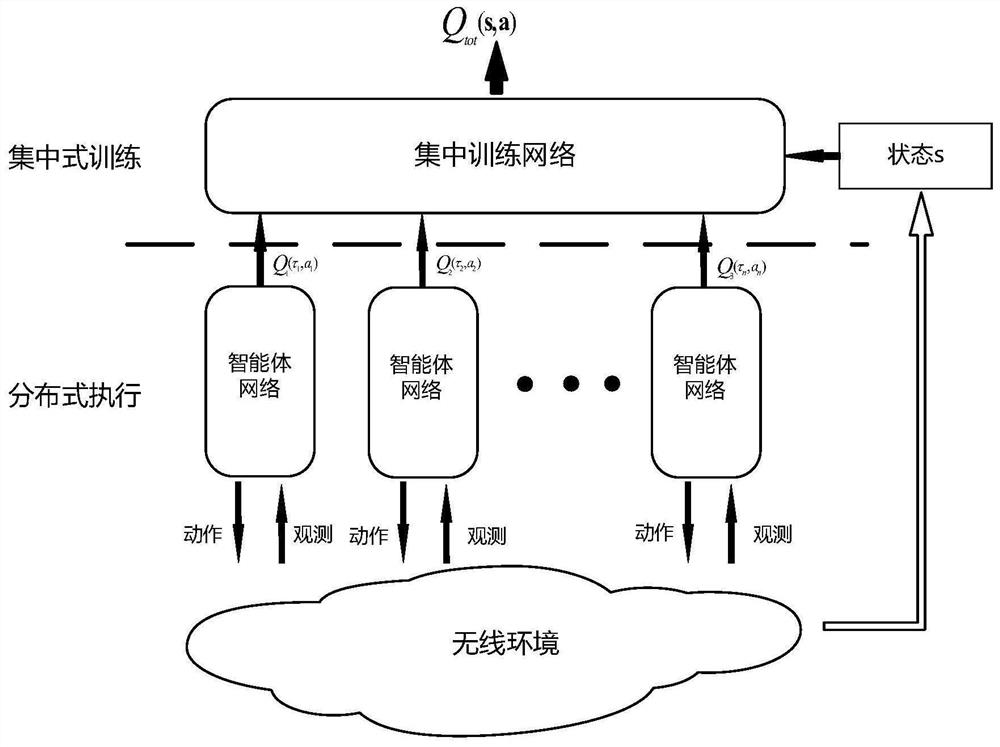

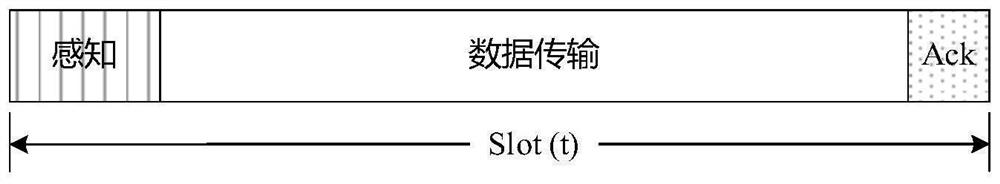

Distributed dynamic spectrum access method based on multi-agent reinforcement learning

Pending | CN113923794A | Improve utilization efficiency; Reduce access conflicts; Character and pattern recognition; Neural learning methods; Cognitive user; Access method

The invention discloses a distributed dynamic spectrum access method based on multi-agent reinforcement learning. The method models the multi-user distributed dynamic spectrum access problem as a multi-agent Markov cooperative game and constructs a multi-agent reinforcement learning framework with centralized training and distributed execution. The framework comprises an offline training module and an online execution module. The online execution module performs spectrum access for the cognitive users using the learned access strategy, and the offline training module dynamically updates the online execution module according to the cognitive users' spectrum access results. The invention provides a multi-user cooperative spectrum access method that adapts to the communication environment and scales with network size. It reduces access conflicts among cognitive users while avoiding interference to authorized users, thereby maximizing the access success rate of the cognitive users and improving spectrum utilization efficiency.

Owner:NAT UNIV OF DEFENSE TECH

Chip statistical data management method and device

Inactive | CN101848135B | Easy to handle; Reduce read and write operations; Memory addressing/allocation/relocation; Data switching networks; Memory address; Computer architecture

The embodiment of the invention provides a method and device for managing the statistical data of a chip. The method mainly comprises the following steps: accumulating the statistical data destined for the same memory address in an off-chip memory device to obtain an accumulated value; reading the original statistical value stored at that memory address; adding the accumulated value to the original statistical value to obtain the current statistical value; and writing the current statistical value back to the same memory address. With the invention, multiple statistical updates to the same memory address are written to the off-chip memory in a single write operation. This reduces the number of reads and writes to the off-chip memory, effectively reduces bank access conflicts in the off-chip memory, and greatly improves the chip's capacity to process statistical data.
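
A minimal sketch of the accumulate-then-write idea, assuming a toy memory model: updates aimed at the same address are merged on chip, and each address then costs one read and one write in off-chip memory instead of one of each per update. `OffChipMemory` and `flush_statistics` are hypothetical names, and bank mapping is not modeled.

```python
from collections import defaultdict


class OffChipMemory:
    """Toy off-chip memory that counts reads and writes (hypothetical)."""

    def __init__(self):
        self.cells = defaultdict(int)
        self.reads = 0
        self.writes = 0

    def read(self, addr):
        self.reads += 1
        return self.cells[addr]

    def write(self, addr, value):
        self.writes += 1
        self.cells[addr] = value


def flush_statistics(updates, memory):
    """Merge updates per address, then do one read-modify-write per address."""
    accumulated = defaultdict(int)
    for addr, delta in updates:              # on-chip accumulation
        accumulated[addr] += delta
    for addr, total in accumulated.items():
        original = memory.read(addr)         # original statistical value
        memory.write(addr, original + total) # current statistical value


mem = OffChipMemory()
mem.cells[0x10] = 100                        # pre-existing counter value
flush_statistics([(0x10, 1), (0x10, 1), (0x20, 5), (0x10, 3)], mem)
print(mem.cells[0x10], mem.cells[0x20], mem.reads, mem.writes)
```

Here four updates touch two addresses, so the off-chip memory sees two reads and two writes rather than four of each.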

Owner:HUAWEI TECH CO LTD

A method and device for preventing multi-user terminal access conflicts

Inactive · CN105307282B · Increase usage · Reduce access conflicts · Wireless communication · Access time · Computer terminal

The invention discloses a method and device for preventing access collisions among multiple user terminals. The method comprises the following steps: a dedicated user ID is allocated in advance to each user terminal operating in time-division mode; when multiple such terminals are activated at the same time and access the network, each terminal determines its network access time slot from its allocated user ID, so that the simultaneously activated terminals form an access queue; and each terminal in the access queue accesses the network in turn in its determined time slot and sends data frames. The method greatly reduces access collisions and improves channel utilization when multiple user terminals are activated at the same time and need network access.
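
The patent does not give the ID-to-slot mapping; the sketch below assumes the simplest one, slot = user ID modulo the number of access slots per frame, with `access_slot` and `access_queue` as hypothetical names. Under that assumption, terminals whose IDs are distinct modulo the slot count never share a slot, so simultaneously activated terminals fall into a deterministic queue.

```python
def access_slot(user_id, slots_per_frame):
    """Hypothetical mapping from a preassigned user ID to an access time slot."""
    if user_id < 0:
        raise ValueError("user_id must be non-negative")
    return user_id % slots_per_frame


def access_queue(active_ids, slots_per_frame):
    """Order simultaneously activated terminals by their computed slots."""
    return sorted(active_ids, key=lambda uid: access_slot(uid, slots_per_frame))


SLOTS = 8
active = [5, 2, 7]
print([(uid, access_slot(uid, SLOTS)) for uid in access_queue(active, SLOTS)])
# prints [(2, 2), (5, 5), (7, 7)]
```

Collision freedom here rests entirely on the ID allocation: two terminals whose IDs are congruent modulo the slot count would still collide.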

Owner:北京中博浩勇国际经贸有限公司

A hybrid branch prediction device and method for out-of-order high-performance cores

Active · CN111078295B · Alleviate rename blocking · Reduce access conflicts · Concurrent instruction execution · Algorithm · Confidence metric

The invention discloses a hybrid branch prediction device and method for out-of-order high-performance cores, and relates to the field of computer branch prediction. The device supports performance evaluation at the microarchitecture level of the processor and alleviates the rename blocking that branch mispredictions and instruction misses cause in out-of-order high-performance processors. The device provides a high-precision, flexible and configurable hybrid branch predictor composed of a TAGE predictor based on global history, a statistical corrector predictor, and a loop predictor. The TAGE predictor uses parameterized tagged components and a split-read improvement strategy to achieve high-precision branch prediction and reduce access conflicts; the statistical corrector confirms or reverts the TAGE prediction according to that prediction and its confidence; and the loop predictor uses a replacement policy and a loop-branch reduction technique to predict regular loops in the program. The invention makes full use of limited hardware storage, greatly reduces access conflicts, and improves both branch prediction accuracy and the overall performance of the processor.
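
TAGE, the statistical corrector, and the loop predictor are each substantial designs; as one small, self-contained piece, the sketch below shows a simplified loop predictor for a single branch. It learns the trip count of a loop-closing branch and predicts the exit only after the same trip count has repeated. Real loop predictors add tags, age counters and a replacement policy, none of which is modeled here, and `LoopPredictor` is a hypothetical class rather than the patent's design.

```python
class LoopPredictor:
    """Simplified single-branch loop predictor (illustrative only).

    Learns the trip count of a loop-closing branch (number of consecutive
    taken outcomes before a not-taken exit) and, once the same trip count
    has repeated CONFIDENCE_THRESHOLD times in a row, predicts the exit.
    """

    CONFIDENCE_THRESHOLD = 2

    def __init__(self):
        self.trip_count = None   # learned number of taken iterations
        self.current = 0         # taken outcomes seen in the current loop run
        self.confidence = 0

    def predict(self):
        """Return (is_confident, predicted_taken)."""
        if self.trip_count is None or self.confidence < self.CONFIDENCE_THRESHOLD:
            return False, True   # not confident: defer to another predictor
        return True, self.current < self.trip_count

    def update(self, taken):
        if taken:
            self.current += 1
            return
        # Loop exit: compare this run's trip count with the learned one.
        if self.current == self.trip_count:
            self.confidence += 1
        else:
            self.trip_count = self.current
            self.confidence = 0
        self.current = 0


pattern = [True, True, True, False]   # loop body runs three times, then exits
lp = LoopPredictor()
for _ in range(3):                    # warm-up runs to build confidence
    for outcome in pattern:
        lp.update(outcome)
hits = 0
for _ in range(2):
    for outcome in pattern:
        confident, pred = lp.predict()
        hits += int(confident and pred == outcome)
        lp.update(outcome)
print(hits)  # prints 8
```

A global-history predictor tends to mispredict the exit of a loop whose trip count exceeds its history length; a trip-count predictor like this one captures that exit directly, which is why hybrids pair the two.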

Owner:核芯互联科技(青岛)有限公司

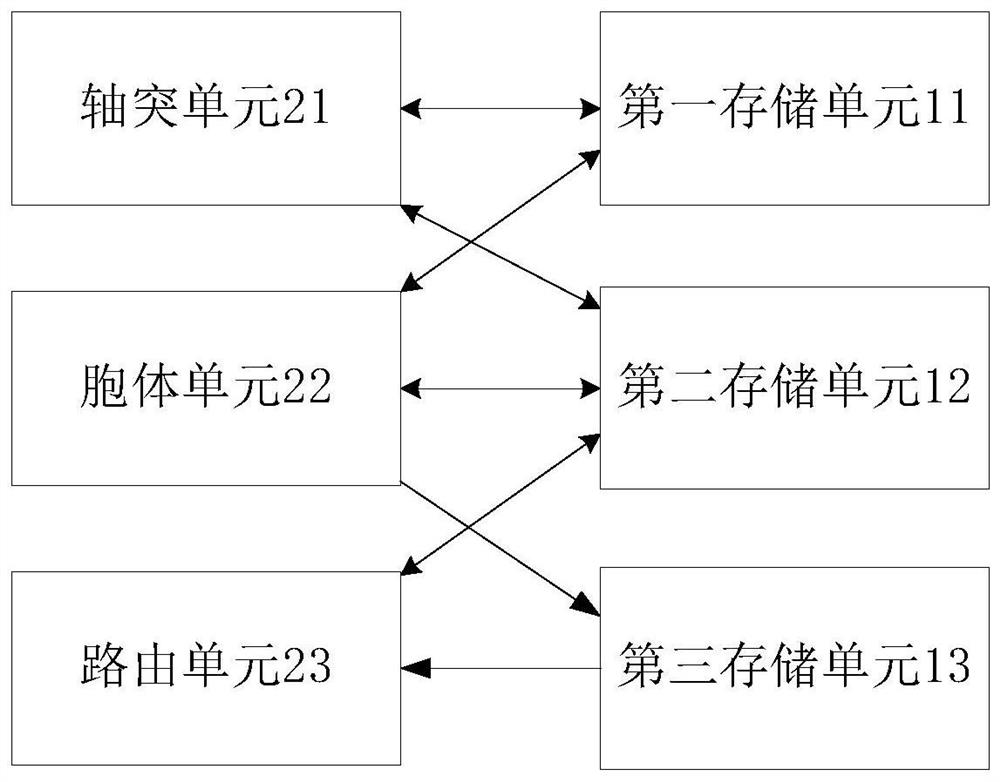

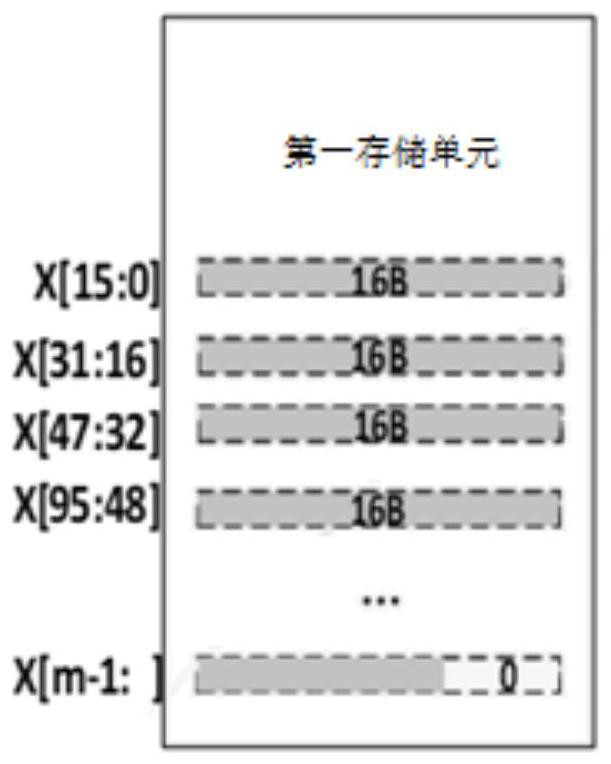

Storage component and artificial intelligence processor

Active · CN112596881A · Improve reading and writing efficiency · Easy to read · Input/output to record carriers · Program initiation/switching · Computer hardware · Engineering

The invention relates to a storage component and an artificial intelligence processor. The storage component is used in the computing cores of the artificial intelligence processor, which comprises a plurality of computing cores, each containing a processing component and a storage component. The storage component comprises a first storage unit, a second storage unit and a third storage unit, and the processing component includes an axon unit, a cell unit, and a routing unit. Because the processing component and the storage component are both placed inside the computing core, the storage component directly serves the read and write accesses of the processing component, and storage outside the core does not need to be read or written. In this distributed storage architecture, the storage units hold different data, so the processing component can access them conveniently, which suits the processing components of a many-core architecture. The design can reduce the size and power consumption of the artificial intelligence processor and improve its processing efficiency.

Owner:TSINGHUA UNIV

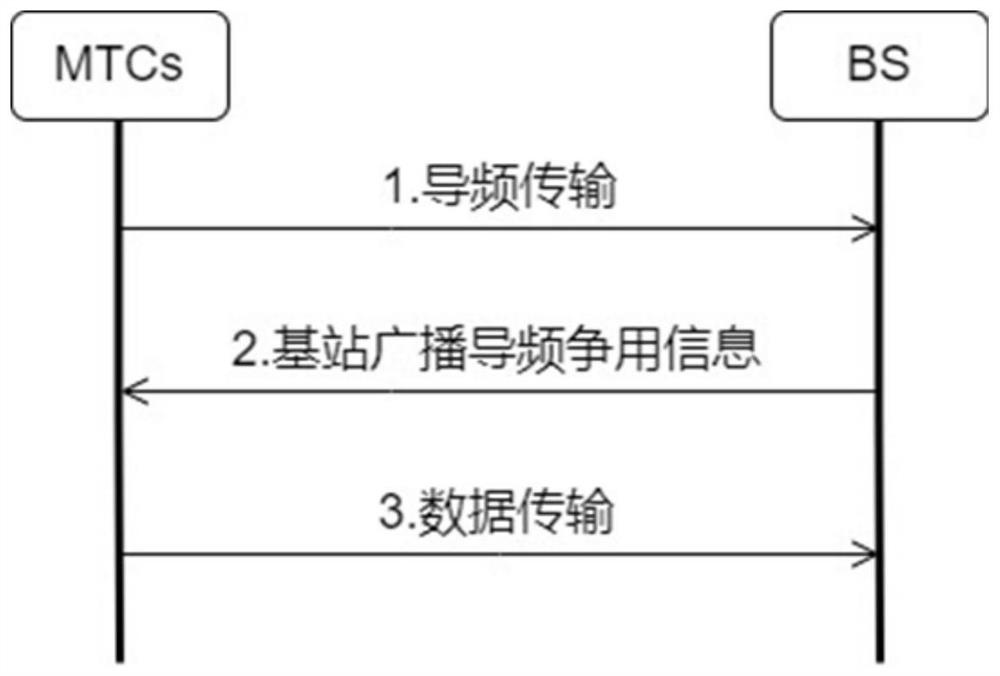

Large-scale terminal random access method based on conflict estimation

Pending · CN114745808A · Reduce signaling overhead · Reduce Random Access Collisions · High level techniques · Machine-to-machine/machine-type communication service · Cellular network · Engineering

The invention relates to a large-scale terminal random access method based on conflict estimation. It addresses multi-user overloaded access under limited preamble resources and suits scenarios in which a large number of machine-type devices transmit sporadic small data packets in bursts. The method comprises the following steps: first, a large-scale random access protocol with low signaling overhead and controllable user conflicts is designed; then, a multi-preamble-combination random access contention scheme is provided; and finally, an efficient channel resource allocation scheme based on conflict estimation is designed. Without increasing the number of orthogonal preambles, the invention reduces random access conflicts among large numbers of terminals, increases the users' access success probability, and improves the multiple access capability of the cellular network.
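
A back-of-the-envelope sketch of why preamble combinations can help: if each of n devices picks one option uniformly from K options, the expected number of devices alone on their option is n(1 - 1/K)^(n-1). Letting devices pick pairs of preambles raises K from N to C(N, 2). This idealized model assumes the base station can identify every transmitted combination, ignoring the ambiguity that arises when different combinations overlap; it is not the patent's conflict-estimation scheme, and `expected_singletons` and `compare` are hypothetical names.

```python
from math import comb


def expected_singletons(devices, choices):
    """Expected number of devices whose choice no other device picked.

    Each device picks one of `choices` options uniformly and independently;
    a device succeeds if it is alone on its option.
    """
    if devices == 0:
        return 0.0
    return devices * (1 - 1 / choices) ** (devices - 1)


def compare(preambles, devices, combo_size=2):
    """Expected successes with single preambles vs. preamble combinations."""
    single = expected_singletons(devices, preambles)
    combo = expected_singletons(devices, comb(preambles, combo_size))
    return single, combo


single, combo = compare(preambles=16, devices=20)
print(round(single, 2), round(combo, 2))
```

With 16 preambles and 20 devices, single-preamble selection leaves most devices colliding, while pair selection over 120 combinations leaves most of them uncontested, under the idealized detection assumption above.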

Owner:NANJING UNIV OF POSTS & TELECOMM

Data processing system and data processing method

Inactive · US8750412B2 · Improve energy efficiency · Reduce load · Energy efficient ICT · Particular environment based services · Data processing system · Original data

The present invention provides a source node device and a destination node in a data processing system, a data processing system, and a decoding method. The source node device includes an encoding unit configured to encode collected original data according to a codebook that associates the original data with an encoded numerical value and a corresponding sending interval, producing encoded data whose encoded value appears in the codebook, and to determine from the codebook the sending interval corresponding to that encoded data. Because the sending intervals of different encoded data are taken from the codebook, different encoded data can be distinguished by their sending intervals, which improves performance in terms of channel load, channel access collisions, energy efficiency, resource consumption, and lifetime of the data processing system.
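
A minimal sketch of codebook-driven encoding, assuming a codebook that maps ranges of the original value to an encoded value and a sending interval. The ranges, codes and intervals in `CODEBOOK` are invented for illustration, and `encode` is a hypothetical name; the patent does not specify them.

```python
from bisect import bisect_right

# Hypothetical codebook entries: (exclusive upper bound of the original-value
# range, encoded value, sending interval in seconds). Ranges are [prev, bound).
CODEBOOK = [
    (10.0, 0, 60.0),
    (20.0, 1, 30.0),
    (float("inf"), 2, 5.0),
]
_BOUNDS = [entry[0] for entry in CODEBOOK]


def encode(original):
    """Map an original reading to (encoded value, sending interval)."""
    idx = bisect_right(_BOUNDS, original)
    if idx == len(CODEBOOK):
        raise ValueError(f"value {original!r} is outside the codebook")
    _, code, interval = CODEBOOK[idx]
    return code, interval


print(encode(5.0), encode(10.0), encode(25.0))
```

Sending only a small code on a code-dependent schedule, rather than the raw reading at a fixed rate, is what lets such a scheme trade off channel load and energy against reporting latency.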

Owner:FUJITSU LTD

Method and device for controlling terminal access, terminal access method and device

Active · CN102781065B · Control access · Reduce access conflicts · Access restriction · Access method · Computer terminal

The present invention relates to the technical field of communications and discloses a terminal access control method and device. The method comprises the following steps: the network side adds reject indication information, which includes a reject timer value, to a downlink control message to instruct the terminal to wait until the reject timer expires before initiating random access during a first time period; and the network side sends the downlink control message to the terminal. The invention also provides a terminal access method and device. The invention can effectively control terminal access and reduce access conflicts.
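
A minimal sketch of the terminal-side rule, assuming the reject timer value is a duration in seconds counted from receipt of the downlink control message. `DownlinkControlMessage`, `earliest_access_time` and `may_access` are hypothetical names, and the "first time period" in the abstract is not modeled.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DownlinkControlMessage:
    """Hypothetical downlink control message carrying reject indication info."""
    reject_timer_s: Optional[float] = None   # None means no rejection


def earliest_access_time(msg, received_at_s):
    """Earliest time at which the terminal may initiate random access."""
    if msg.reject_timer_s is None:
        return received_at_s
    return received_at_s + msg.reject_timer_s


def may_access(msg, received_at_s, now_s):
    """True once the reject timer (if any) has expired."""
    return now_s >= earliest_access_time(msg, received_at_s)


msg = DownlinkControlMessage(reject_timer_s=4.0)
print(may_access(msg, 10.0, 12.0), may_access(msg, 10.0, 14.0))
```

If the network assigns different timer values to different terminals, their access attempts are spread out in time, which is one way such a mechanism can reduce conflicts.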

Owner:HUAWEI TECH CO LTD

Random access method for Time Division-Synchronous Code Division Multiple Access (TD-SCDMA) system

Inactive · CN101174884B · Avoid wasting · Reduce access conflicts · Spatial transmit diversity · Wireless communication · Access method · Random access memory

The present invention discloses a random access method for a time division synchronous code division multiple access (TD-SCDMA) system based on distributed antennas. The method comprises the following processing: each antenna unit of the TD-SCDMA base station correlates the received random access signal with the uplink synchronization code to obtain a correlation result sequence; the correlation result sequence of each antenna unit is processed to determine whether, on that antenna unit, there is user equipment performing uplink access with that uplink synchronization code; and, based on the result, the base station decides whether to allocate a downlink fast physical access channel (FPACH) for the uplink synchronization code. The technical solution achieves at least the following beneficial effects: the probability of system access conflicts is reduced and the success rate of system access is increased.
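
A minimal sketch of per-antenna detection by correlation, assuming real-valued chip sequences and a fixed peak threshold. `SYNC_CODE` is a short toy bipolar code, not an actual TD-SCDMA uplink synchronization code, and the rule in `should_answer` (respond if any antenna unit detects the code) is a hypothetical decision rule, not the one claimed in the patent.

```python
def correlate(received, code):
    """Sliding correlation of a received chip sequence with a known code."""
    n = len(code)
    return [
        sum(received[i + k] * code[k] for k in range(n))
        for i in range(len(received) - n + 1)
    ]


def detect_per_antenna(received_by_antenna, code, threshold):
    """Per antenna unit: does the correlation peak reach the threshold?"""
    return [max(abs(v) for v in correlate(rx, code)) >= threshold
            for rx in received_by_antenna]


def should_answer(received_by_antenna, code, threshold):
    """Hypothetical rule: respond if any antenna unit detects the code."""
    return any(detect_per_antenna(received_by_antenna, code, threshold))


SYNC_CODE = [1, -1, 1, 1, -1, -1, 1, -1]        # toy code (assumed)
antenna_a = [0, 0] + SYNC_CODE + [0, 0]         # code arrives with 2-chip delay
antenna_b = [0.2, -0.1, 0.3, 0.0, -0.2, 0.1,    # weak noise only
             0.0, 0.2, -0.3, 0.1, 0.0, -0.1]
print(detect_per_antenna([antenna_a, antenna_b], SYNC_CODE, threshold=6.0))
# prints [True, False]
```

Keeping a separate decision per antenna unit is what lets a distributed-antenna base station tell which units actually see a given user.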

Owner:ZTE CORP


© 2025 PatSnap. All rights reserved.