89 results about how to "Reduce the number of memory accesses" patented technology

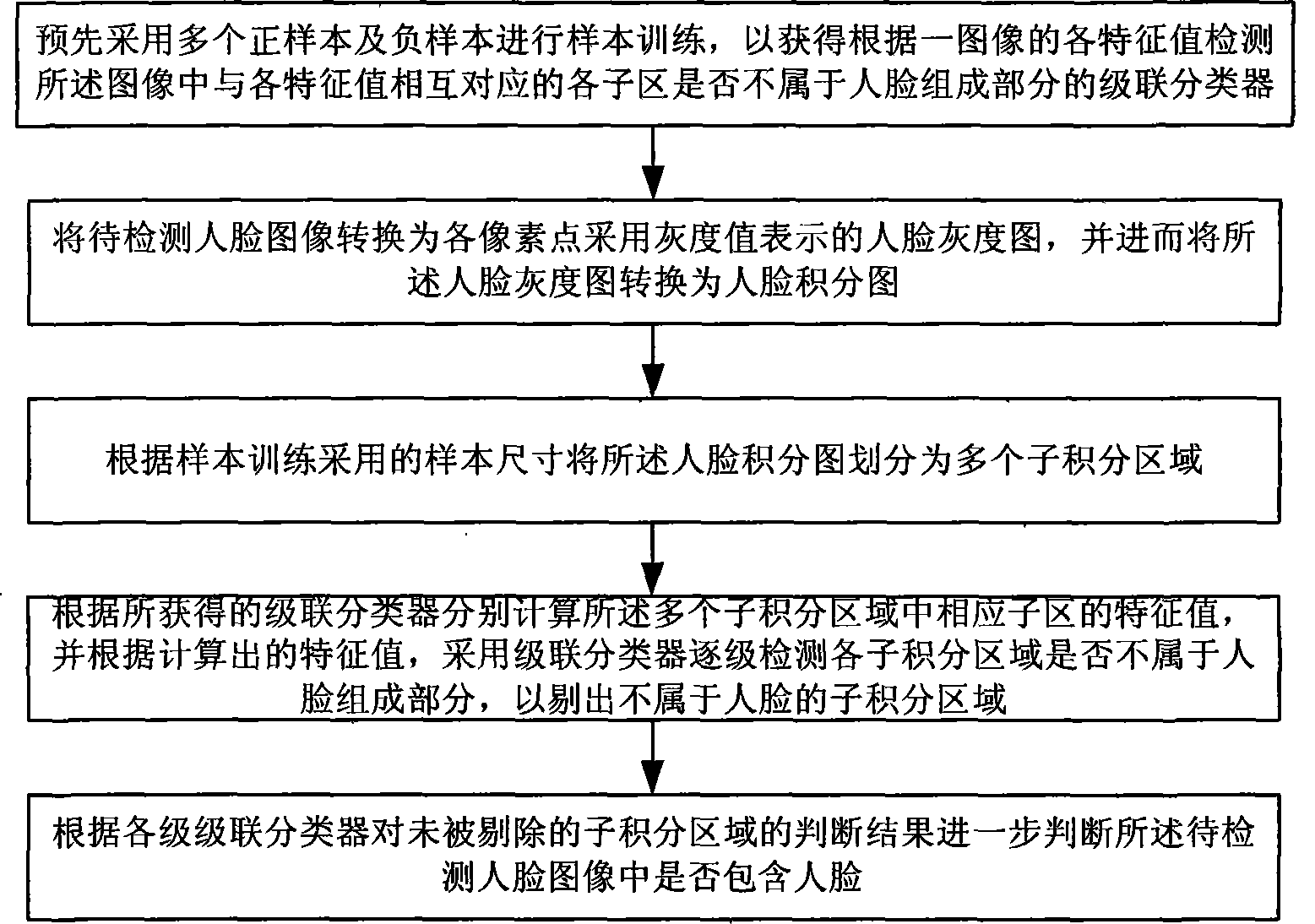

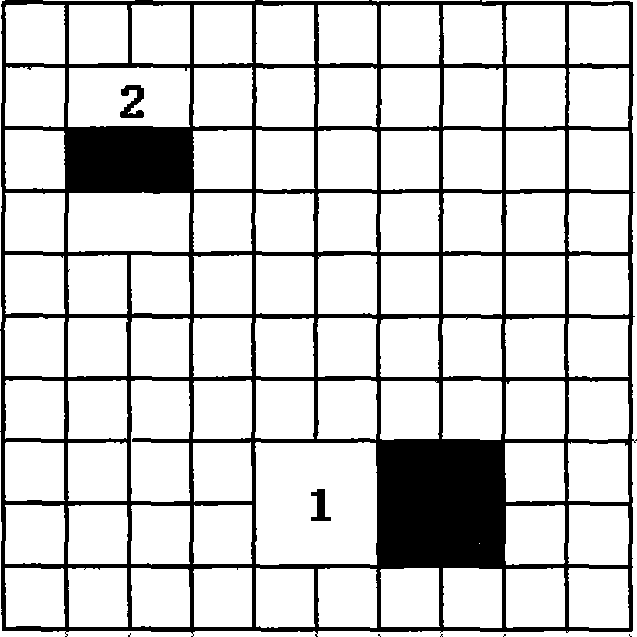

Human face detection method

Inactive · CN101369315A · Quick check · Meet the requirements of small memory space · Person identification · Character and pattern recognition · Face detection · Floating point

A face detection method is provided. First, a cascade classifier is obtained by training on positive and negative samples; given the feature values of an image, the classifier detects whether the sub-region corresponding to each feature value does not belong to a face. The image to be detected is then converted to a grayscale image, which is in turn converted to an integral image. The integral image is divided into a plurality of sub-integral regions, and the feature values in each sub-integral region are computed according to the trained cascade classifier. Based on the computed feature values, the cascade classifier checks stage by stage whether each sub-integral region does not belong to a face, and eliminates the regions that do not. Finally, repeated face detections are merged to determine the position and size of the face from the classification results. Because floating-point and fixed-point operations are used in the detection process, detection speed is effectively increased while memory usage is reduced.
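The integral-image step is what keeps the per-feature memory traffic low: once the table is built, the sum over any rectangle costs four reads regardless of its size. The following is a minimal sketch of that idea, not the patented implementation; the function names and the two-rectangle feature are illustrative assumptions.

```python
from typing import List


def integral_image(gray: List[List[int]]) -> List[List[int]]:
    """Build a (h+1) x (w+1) summed-area table with a zero top row and left column."""
    h, w = len(gray), len(gray[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += gray[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii: List[List[int]], x: int, y: int, w: int, h: int) -> int:
    """Sum of the w x h rectangle whose top-left pixel is (x, y): four table reads."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def two_rect_feature(ii: List[List[int]], x: int, y: int, w: int, h: int) -> int:
    """Hypothetical Haar-like feature: left half minus right half, integers only."""
    half = w // 2
    return rect_sum(ii, x, y, half, h) - rect_sum(ii, x + half, y, half, h)


gray = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
ii = integral_image(gray)
print(rect_sum(ii, 1, 1, 2, 2))          # sum of the bottom-right 2x2 block
print(two_rect_feature(ii, 0, 0, 2, 3))  # first column minus second column
```

Because the table holds only integers, the feature values can be compared against integer thresholds, which fits the abstract's emphasis on small memory and fast arithmetic.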

Owner:SHANGHAI ISVISION INTELLIGENT RECOGNITION TECH

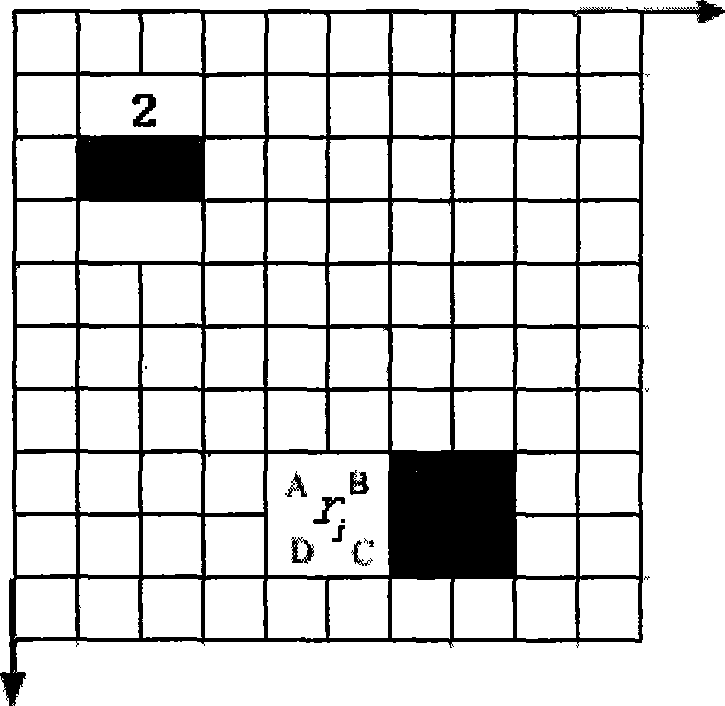

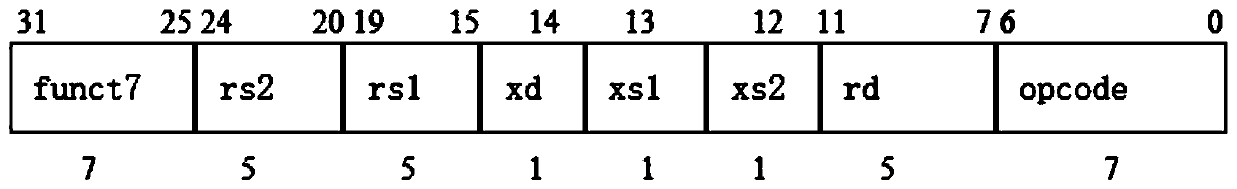

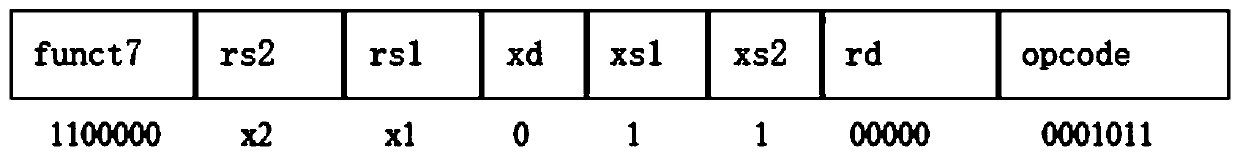

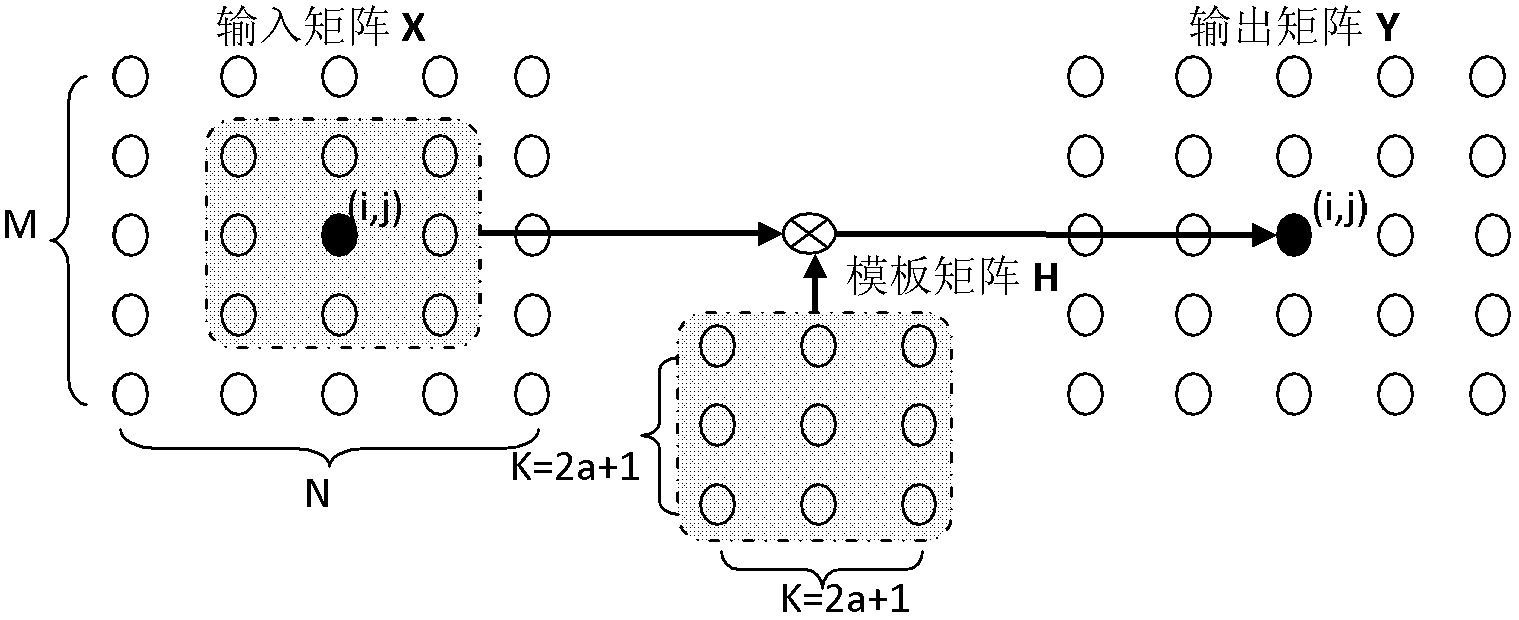

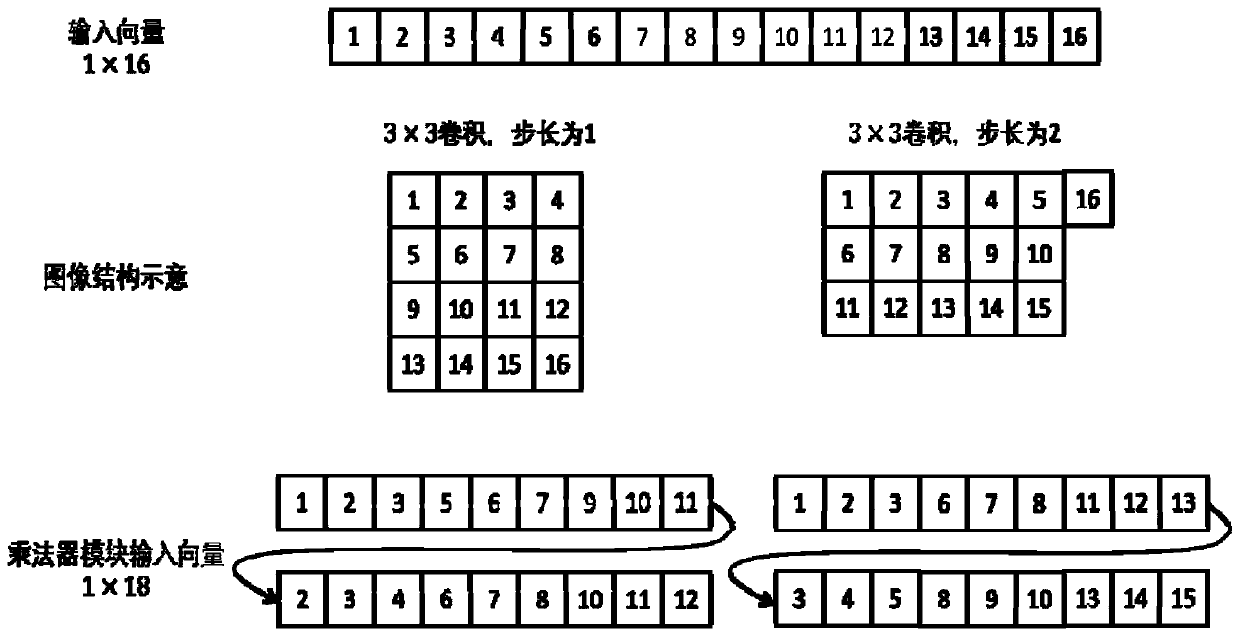

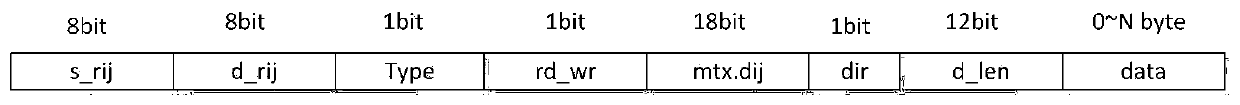

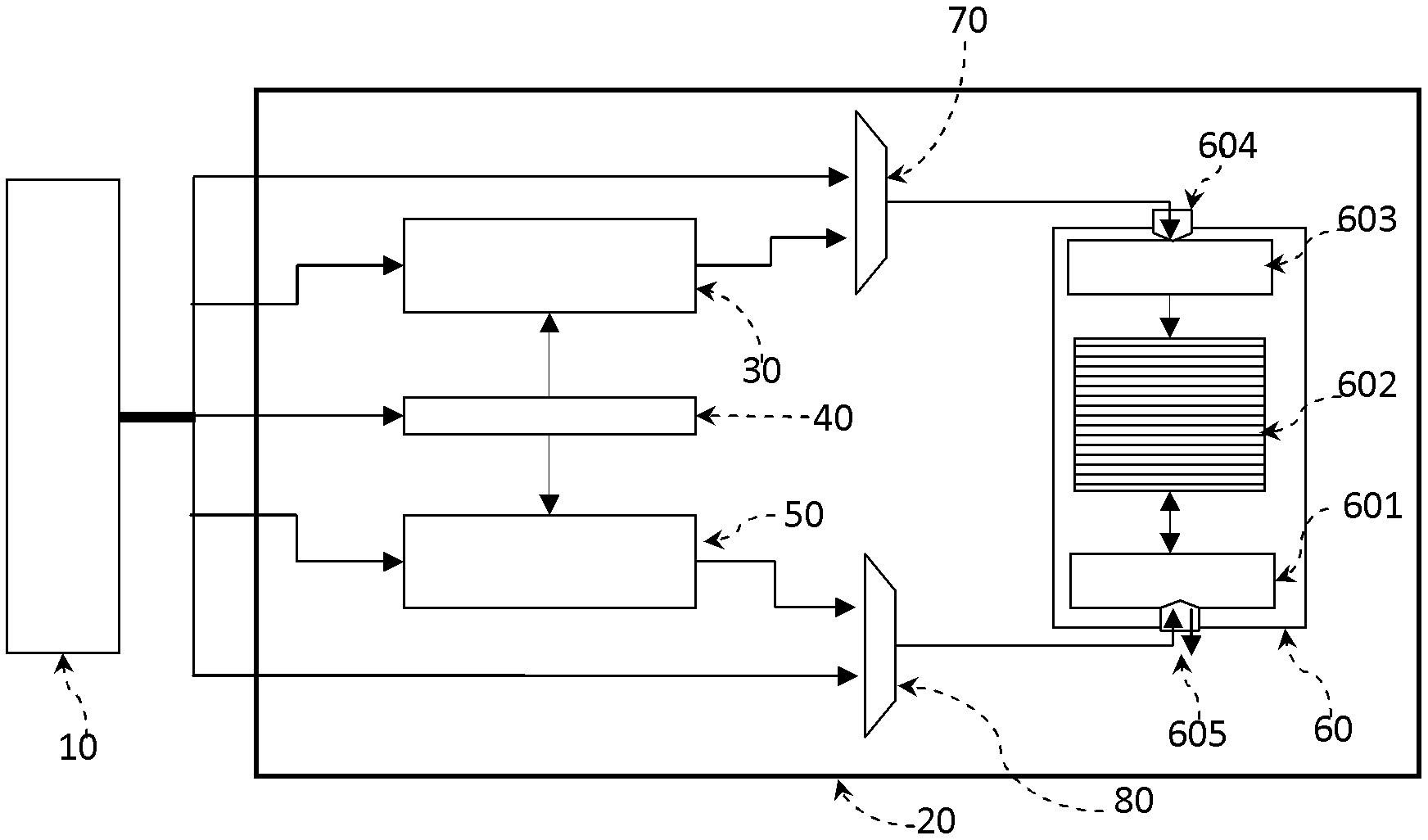

Matrix convolution calculation method, interface, coprocessor and system based on RISC-V architecture

Active · CN109857460A · Calculation speed · Reduce the number of memory accesses · Concurrent instruction execution · Neural architectures · Extensibility · Processor design

The invention discloses a complete mechanism of instructions, interface, and coprocessor for matrix convolution calculation based on the RISC-V instruction set architecture. Traditional matrix convolution calculation is achieved efficiently by combining software and hardware. Exploiting the extensibility of the RISC-V instruction set, a small number of instructions and a dedicated convolution calculation unit (the coprocessor) are designed. The number of memory accesses and the execution cycles of a matrix convolution instruction are reduced, the complexity of application-layer software calculation is reduced, the efficiency of large matrix convolution is improved, the calculation speed of matrix convolution is increased, flexible calling by upper-layer developers is facilitated, and the coding design is simplified. A processor designed with the RISC-V instruction set also has great advantages in power consumption, size, and flexibility compared with ARM, x86, and other architectures, can adapt to different application scenarios, and has wide prospects in the field of artificial intelligence.

Owner:NANJING HUAJIE IMI TECH CO LTD

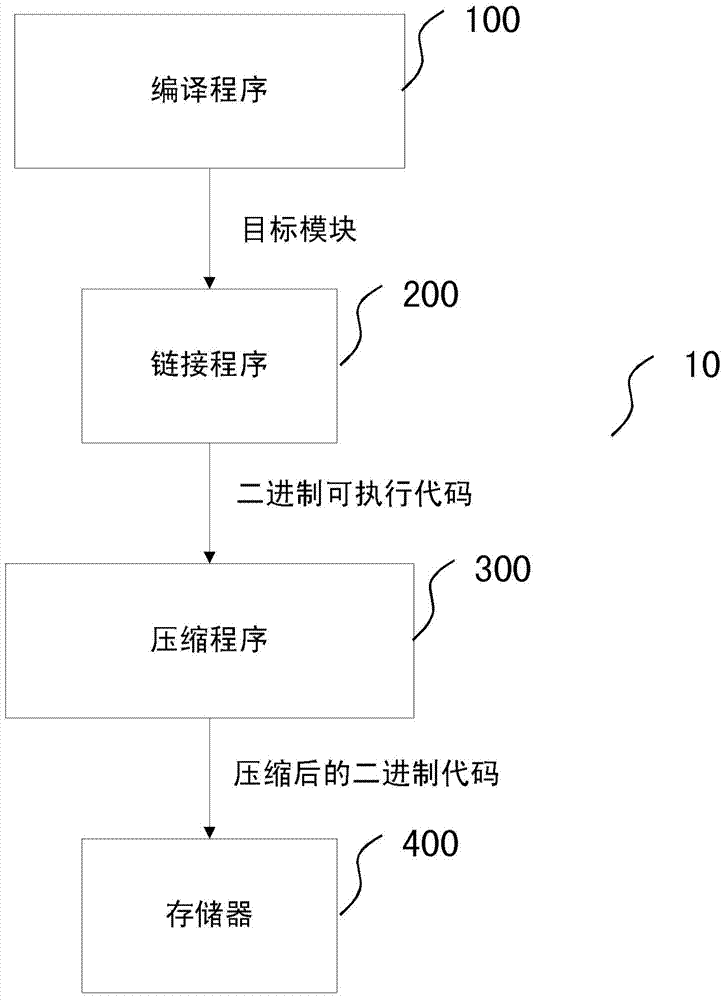

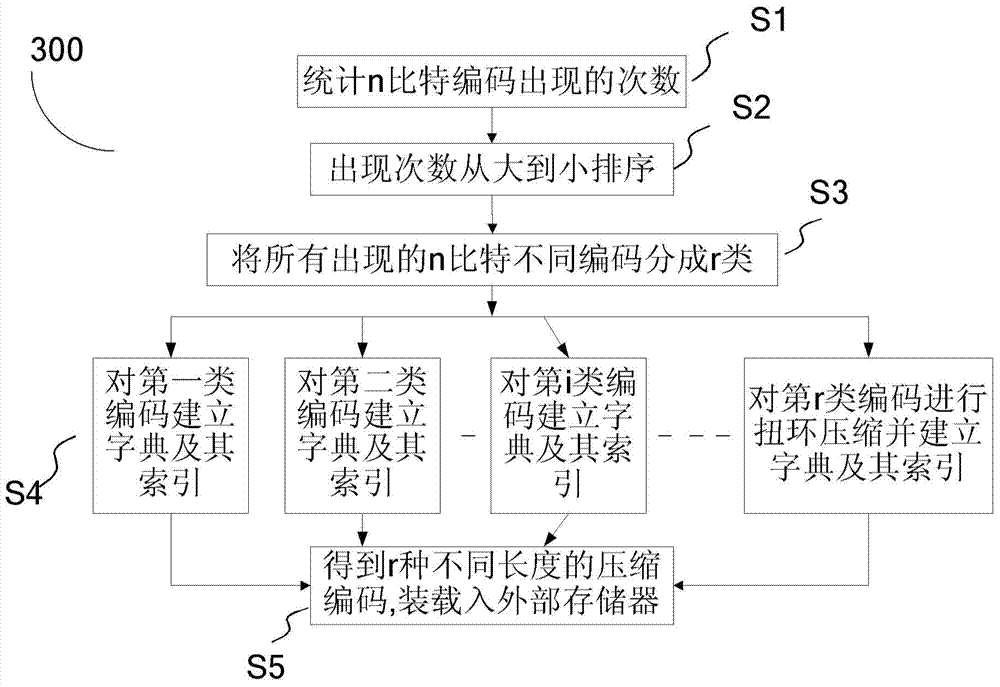

Executable code compression method for embedded systems and code decompression system

Active · CN104331269A · Reduce redundancy · Reduce correlation · Next instruction address formation · Instruction code · Address mapping

The invention provides an executable code compression method for an embedded system, comprising the following steps. Step S1: count the number of occurrences of each distinct code in a binary code set. Step S2: sort the distinct codes by frequency of occurrence to form a sorted code frequency table. Step S3: divide the distinct codes into r classes according to the information in the code frequency table. Step S4: compress the first (r-1) classes of codes using dictionaries with different index lengths, and apply torsion circle displacement dictionary compression to the r-th class. Step S5: store the r constructed dictionaries and their index sets in external memory. The invention further provides a decompression system for executable code of the embedded system: a central processing unit obtains the required compressed codes from the r dictionaries and their index sets through the address mapping logic of the decompression logic, and the instruction codes of the binary code set are recovered by a decompression unit in the decompression logic.
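Steps S1 to S4 can be illustrated with a small frequency-ranked dictionary scheme. This is only a sketch under stated assumptions: the class sizes are taken to be powers of two given by a hypothetical `index_bits` parameter, and the last class is stored in a plain dictionary rather than with the special compression the abstract names for the r-th class.

```python
from collections import Counter
from typing import Dict, List, Sequence, Tuple


def build_class_dictionaries(codes: Sequence[int],
                             index_bits: Tuple[int, ...] = (2, 4)) -> List[List[int]]:
    """S1-S3: count codes, rank by frequency, split into len(index_bits) + 1 classes."""
    ranked = [code for code, _ in Counter(codes).most_common()]
    dictionaries, start = [], 0
    for bits in index_bits:                  # class k holds up to 2**bits entries
        dictionaries.append(ranked[start:start + (1 << bits)])
        start += 1 << bits
    dictionaries.append(ranked[start:])      # last class: everything that is left
    return dictionaries


def encode(codes: Sequence[int], dictionaries: List[List[int]]) -> List[Tuple[int, int]]:
    """S4 (simplified): replace each code by (class number, index within class)."""
    lookup: Dict[int, Tuple[int, int]] = {
        code: (k, i) for k, d in enumerate(dictionaries) for i, code in enumerate(d)
    }
    return [lookup[code] for code in codes]


def decode(encoded: Sequence[Tuple[int, int]], dictionaries: List[List[int]]) -> List[int]:
    """Decompression: one dictionary read per instruction."""
    return [dictionaries[k][i] for k, i in encoded]


program = [0xE1A00000] * 5 + [0xE3A01001] * 3 + [0xEAFFFFFE]
dicts = build_class_dictionaries(program, index_bits=(1,))
assert decode(encode(program, dicts), dicts) == program
```

The most frequent codes land in the class with the shortest index, so they cost the fewest bits in the compressed image.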

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI

Patricia tree rapid lookup method

Active · CN101241499A · Improve effective use · Improve search efficiency · Special data processing applications · High bandwidth · Direct memory access

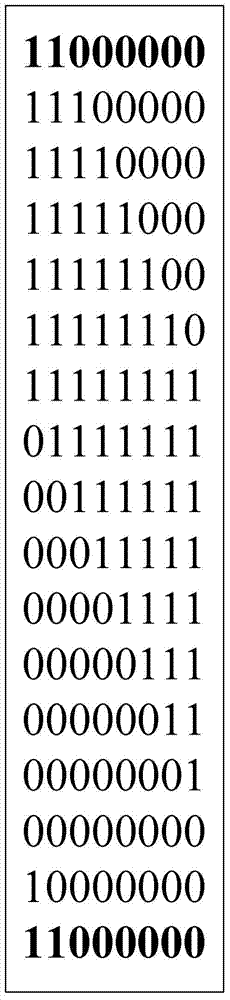

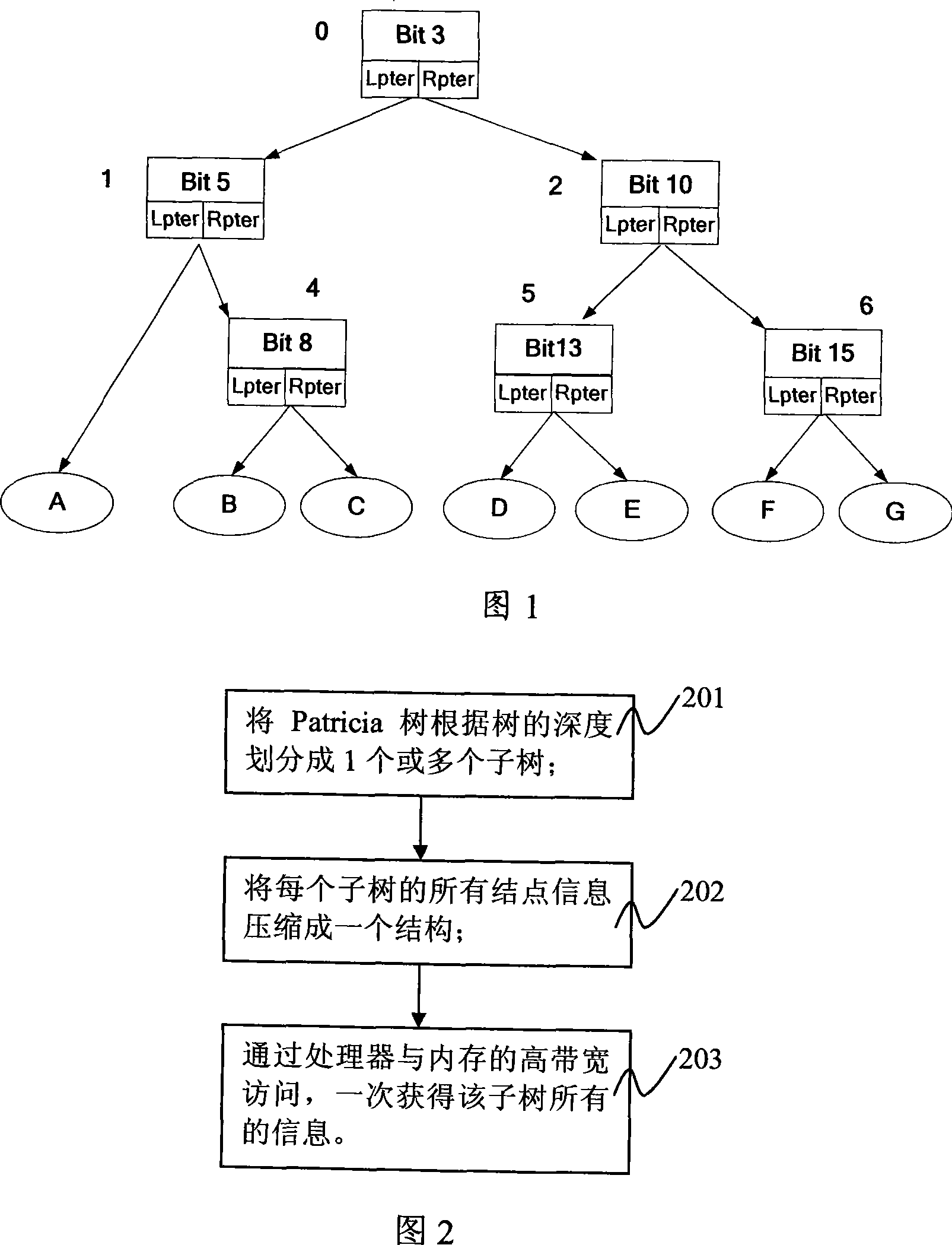

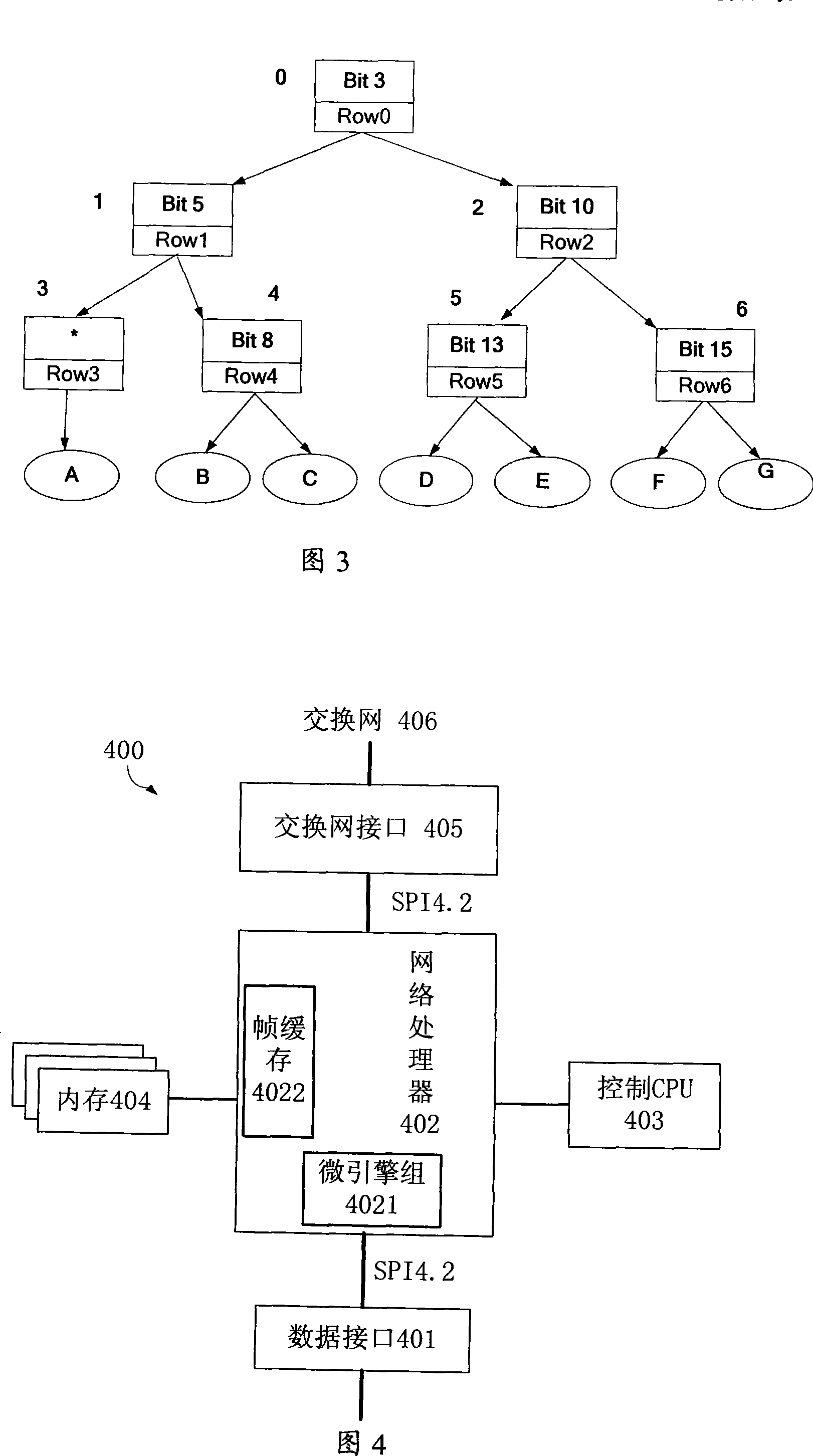

The present invention provides a fast lookup method for Patricia trees, comprising the following steps: (a) dividing the Patricia tree into one or more subtrees according to tree depth; (b) compressing all node information of each subtree into a compressed tree structure; (c) using the processor's high-bandwidth memory access to obtain all the information of a subtree in a single memory read. The method reduces the number of memory accesses needed for a Patricia tree lookup and improves lookup efficiency.

Owner:ZTE CORP

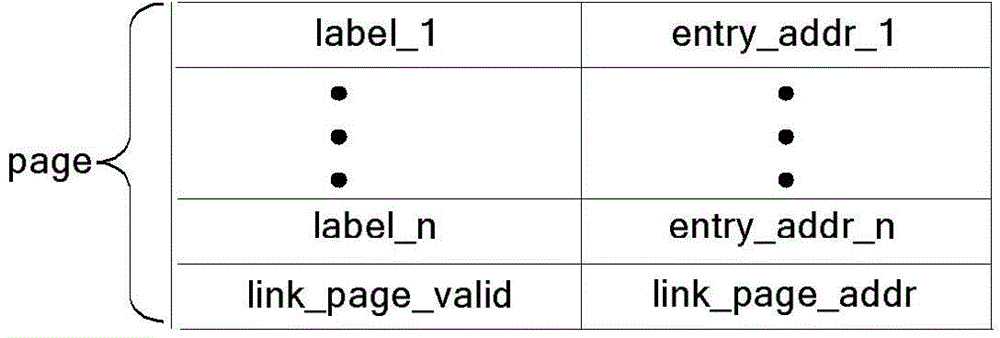

Table establishing and lookup method applied to network processor

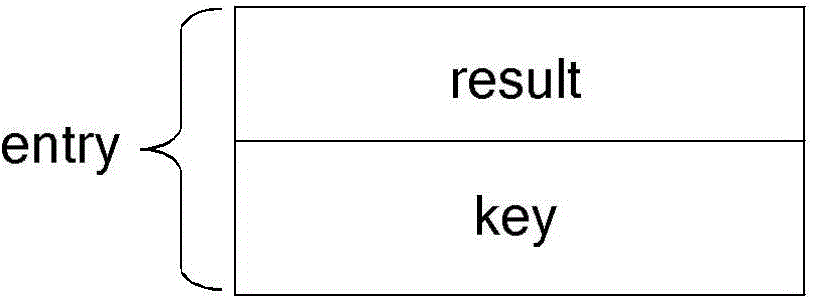

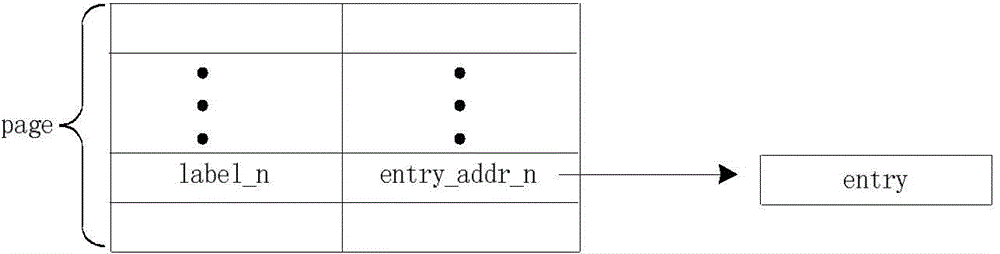

Active · CN104158744A · Simple structure · Solve fill rate · Data switching networks · Special data processing applications · Resource utilization · Lookup table

The invention provides a table establishing and lookup method applied to a network processor. Different types of hash tables are established; the tables are independent of one another, and each is searched by an independent two-stage lookup. The method comprises the following steps: establishing different types of hash tables according to the entry size of the tables to be built; allocating a separate memory space to each table; assigning the size and base address of each table; during lookup, obtaining the search key, the type of the lookup table, and the base address of the table from information extracted from the packet; applying two hash transformations to the key at the same time, where the first converts the key into an offset address that determines the key's index value in an index table, and the second converts the key into a tag used to distinguish conflicting entries; and reading the content from a result table according to the index value to perform matching and obtain the lookup result. The method effectively reduces the number of memory accesses and further improves the lookup speed of the network processor and the utilization of memory resources.
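The two simultaneous hashes can be sketched as follows. This is an illustration under assumptions, not the patented table layout: the class name, bucket sizes, and the choice of `zlib.crc32` and `zlib.adler32` as the two hash functions are all hypothetical. The point it shows is that the result table is read only when the short tag already matches.

```python
import zlib
from typing import List, Optional, Tuple


class TwoStageTable:
    """Index table of (tag, result_index) entries plus a separate result table."""

    def __init__(self, num_buckets: int = 8, slots_per_bucket: int = 4) -> None:
        self.num_buckets = num_buckets
        self.slots_per_bucket = slots_per_bucket
        self.index_table: List[List[Tuple[int, int]]] = [[] for _ in range(num_buckets)]
        self.result_table: List[Tuple[bytes, str]] = []

    def _offset(self, key: bytes) -> int:
        # First hash: key -> offset of the bucket in the index table.
        return zlib.crc32(key) % self.num_buckets

    def _tag(self, key: bytes) -> int:
        # Second hash: key -> short tag that tells colliding keys apart.
        return zlib.adler32(key) & 0xFF

    def insert(self, key: bytes, value: str) -> None:
        bucket = self.index_table[self._offset(key)]
        if len(bucket) >= self.slots_per_bucket:
            raise OverflowError("bucket full")
        self.result_table.append((key, value))
        bucket.append((self._tag(key), len(self.result_table) - 1))

    def lookup(self, key: bytes) -> Optional[str]:
        tag = self._tag(key)
        for entry_tag, result_index in self.index_table[self._offset(key)]:
            if entry_tag == tag:  # touch the result table only on a tag match
                stored_key, value = self.result_table[result_index]
                if stored_key == key:
                    return value
        return None


table = TwoStageTable()
table.insert(b"10.0.0.1", "port1")
table.insert(b"10.0.0.2", "port2")
print(table.lookup(b"10.0.0.2"))
```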

Owner:NO 32 RES INST OF CHINA ELECTRONICS TECH GRP

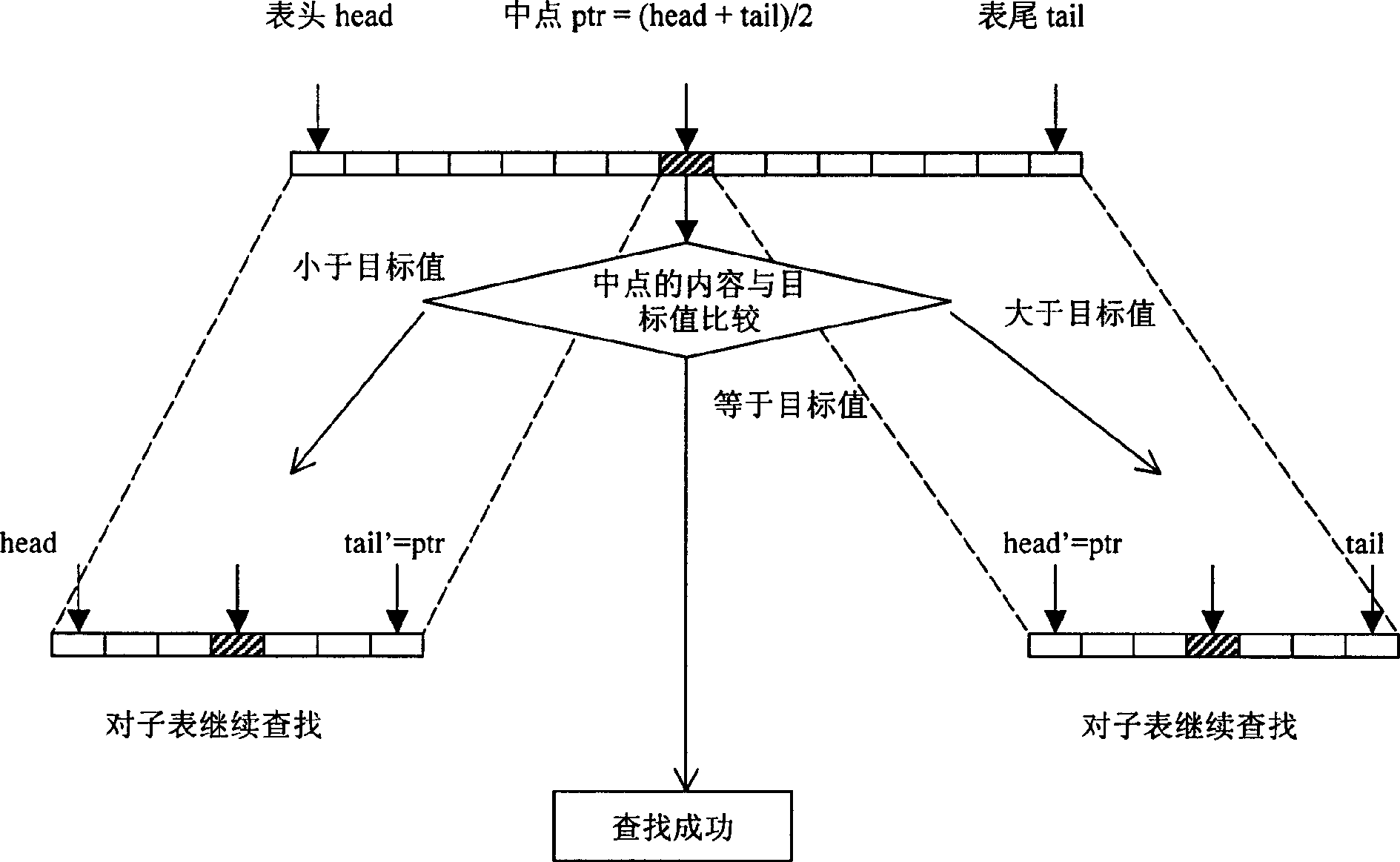

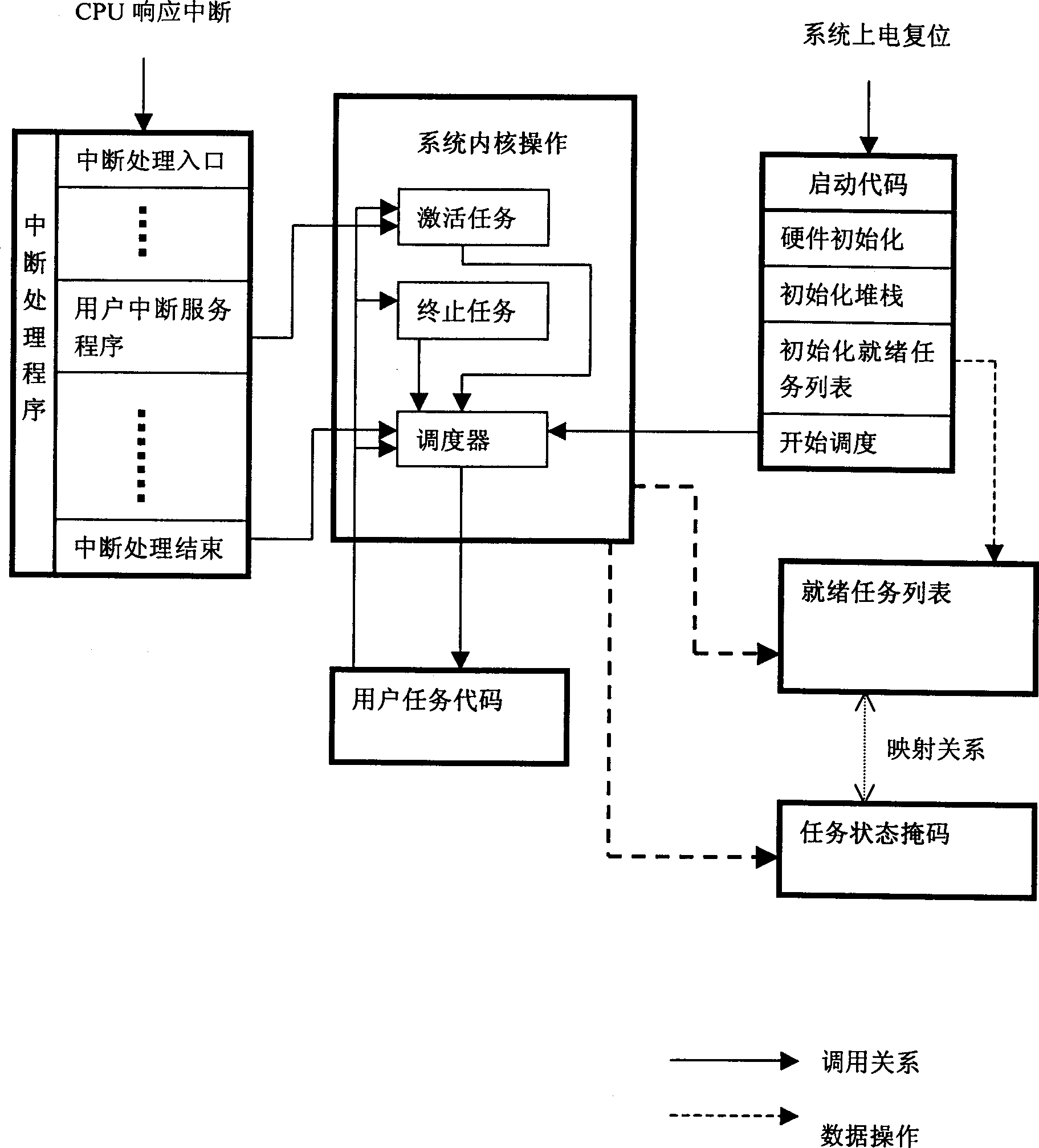

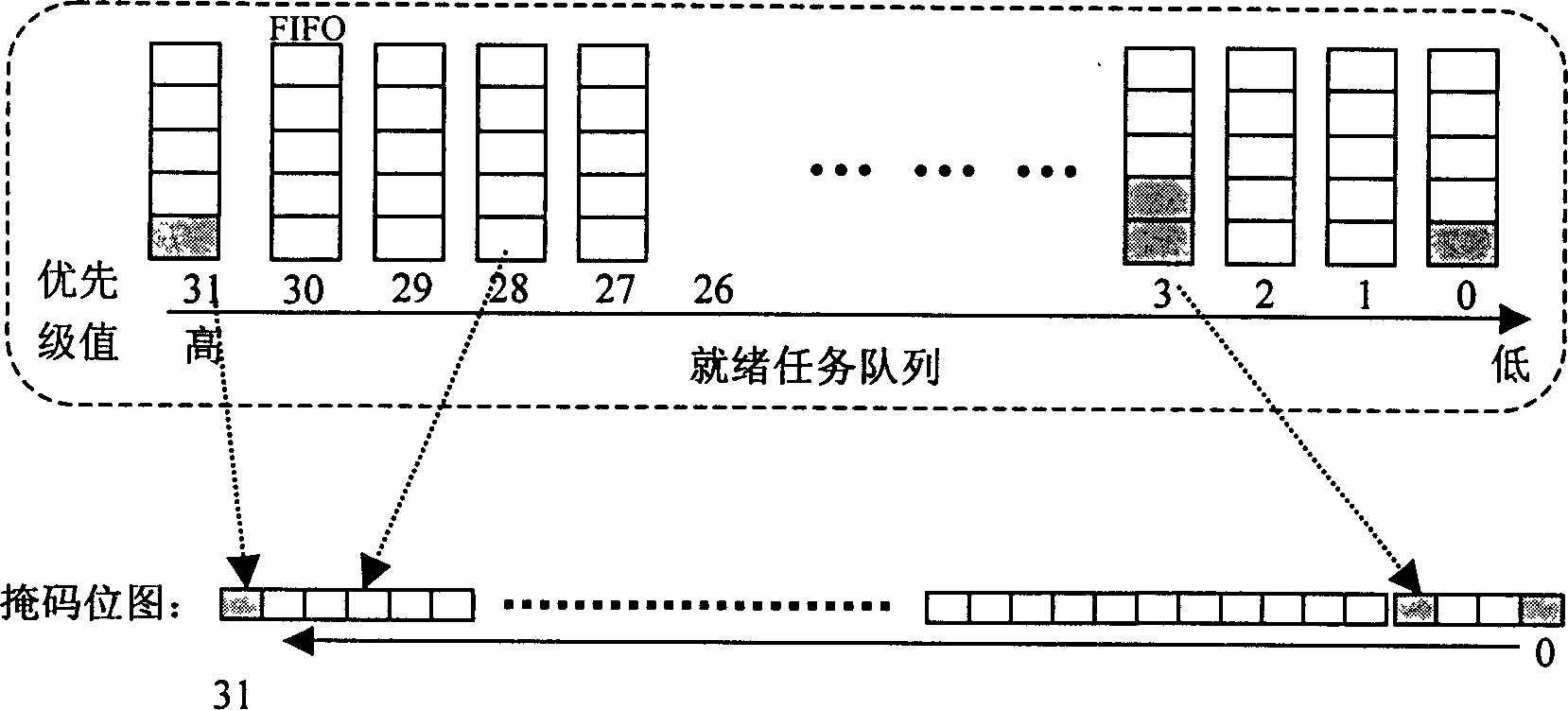

Binary search task scheduling method for embedded real-time operating systems

Inactive · CN1529233A · Reduce storage space requirements · Reduce the number of memory accesses · Program control using stored programs · Program loading/initiating · Operational system · Time delays

In this method, a state mask indicates the ready state of each user task in a task table sorted by priority from high to low. A binary search is then implemented with shift-and-compare operations to quickly locate the highest-priority ready task, on which basis the dispatcher performs task scheduling and switching. The method uses a first-level mask to indicate the priority distribution of tasks in the ready state. Binary search improves the efficiency of locating the highest-priority task and reduces the number of memory accesses. The invention has the advantages of stable scheduling latency, high efficiency, and low memory usage. The method is implemented on the MPC555 hardware platform as part of the Tsinghua open system for electric vehicles (Tsinghua OSEK).
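A minimal sketch of the shift-and-compare binary search over a ready mask follows. It assumes bit 0 stands for the highest-priority task and that the mask width is a power of two; both conventions are illustrative choices, not details taken from the patent.

```python
def highest_priority_ready(mask: int, width: int = 32) -> int:
    """Return the index of the lowest set bit (bit 0 = highest priority), or -1.

    The loop runs log2(width) times regardless of which bit is set, which is
    why the lookup latency is constant. `width` must be a power of two.
    """
    mask &= (1 << width) - 1
    if mask == 0:
        return -1                      # no task is ready
    index = 0
    half = width // 2
    while half:
        low = mask & ((1 << half) - 1)
        if low == 0:                   # nothing ready in the lower half
            mask >>= half              # continue in the upper half
            index += half
        else:
            mask = low                 # continue in the lower half
        half //= 2
    return index


print(highest_priority_ready(0b0110_0000))  # tasks 5 and 6 are ready
```

Only the single mask word is read from memory per scheduling decision; the task table itself is not scanned.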

Owner:TSINGHUA UNIV

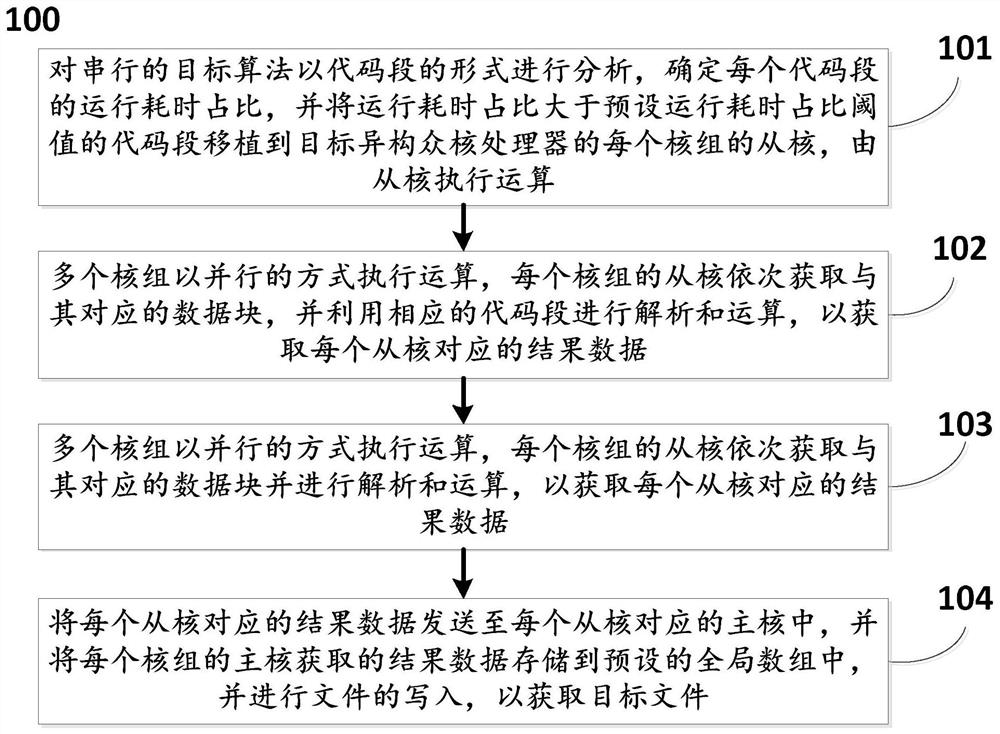

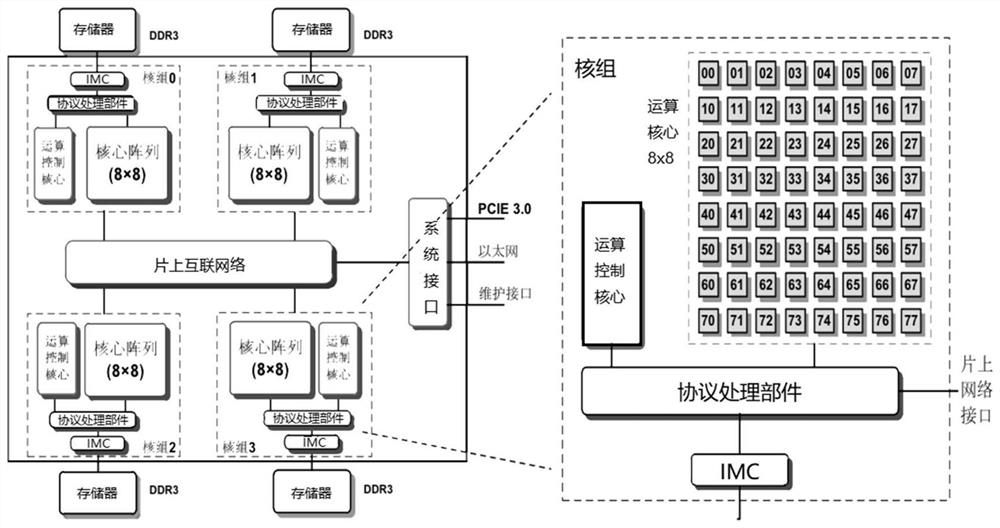

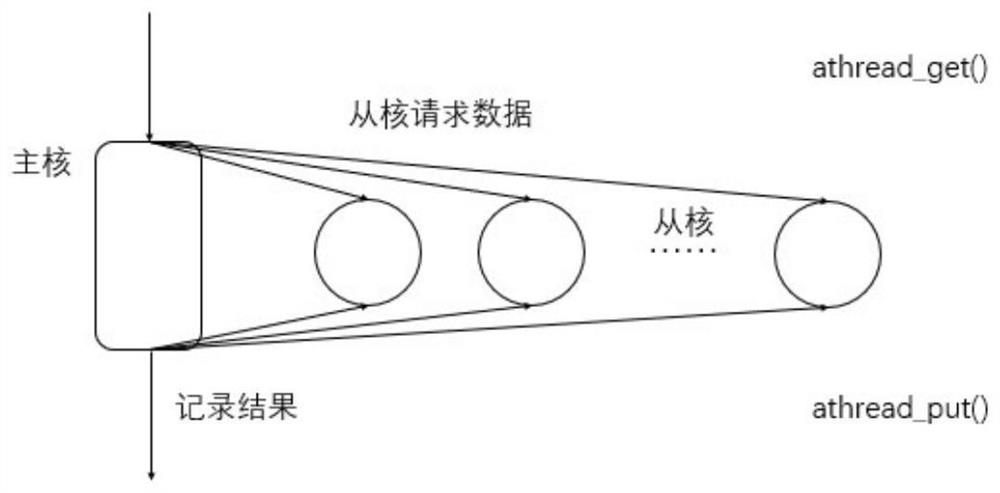

Algorithm parallel processing method and system based on heterogeneous many-core processor

Active · CN112306678A · Avoid read and write errors · Reduce the number of memory accesses · Resource allocation · Digital computer details · Computational science · Memory address

The invention relates to an algorithm parallel processing method and system based on a heterogeneous many-core processor. The method comprises: taking a time-consuming code segment of a serial program as the parallel computing object; dividing the work according to its characteristics into tasks for the master core and for the slave core array; and handing the time-consuming computation to the slave core array for execution. Each slave core actively fetches its task and the data needed for calculation from main memory and returns the result to the master core, and the master core updates main memory data in an asynchronous serial mode to avoid read-write errors caused by data dependence. To address the time cost of master-slave core communication, individual data items are packed into a structure, and the main memory addresses of the master core's data are aligned to 256 B boundaries so that each data copy has a granularity of no less than 256 B; this makes maximum use of the bandwidth of a single core group and optimizes data transfer performance. A double-buffer mechanism hides communication time, improving parallel efficiency.

Owner:OCEAN UNIV OF CHINA +1

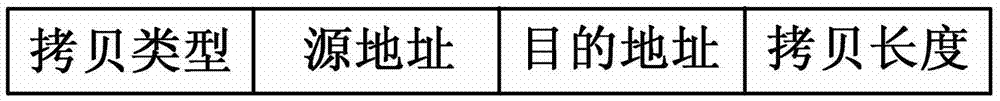

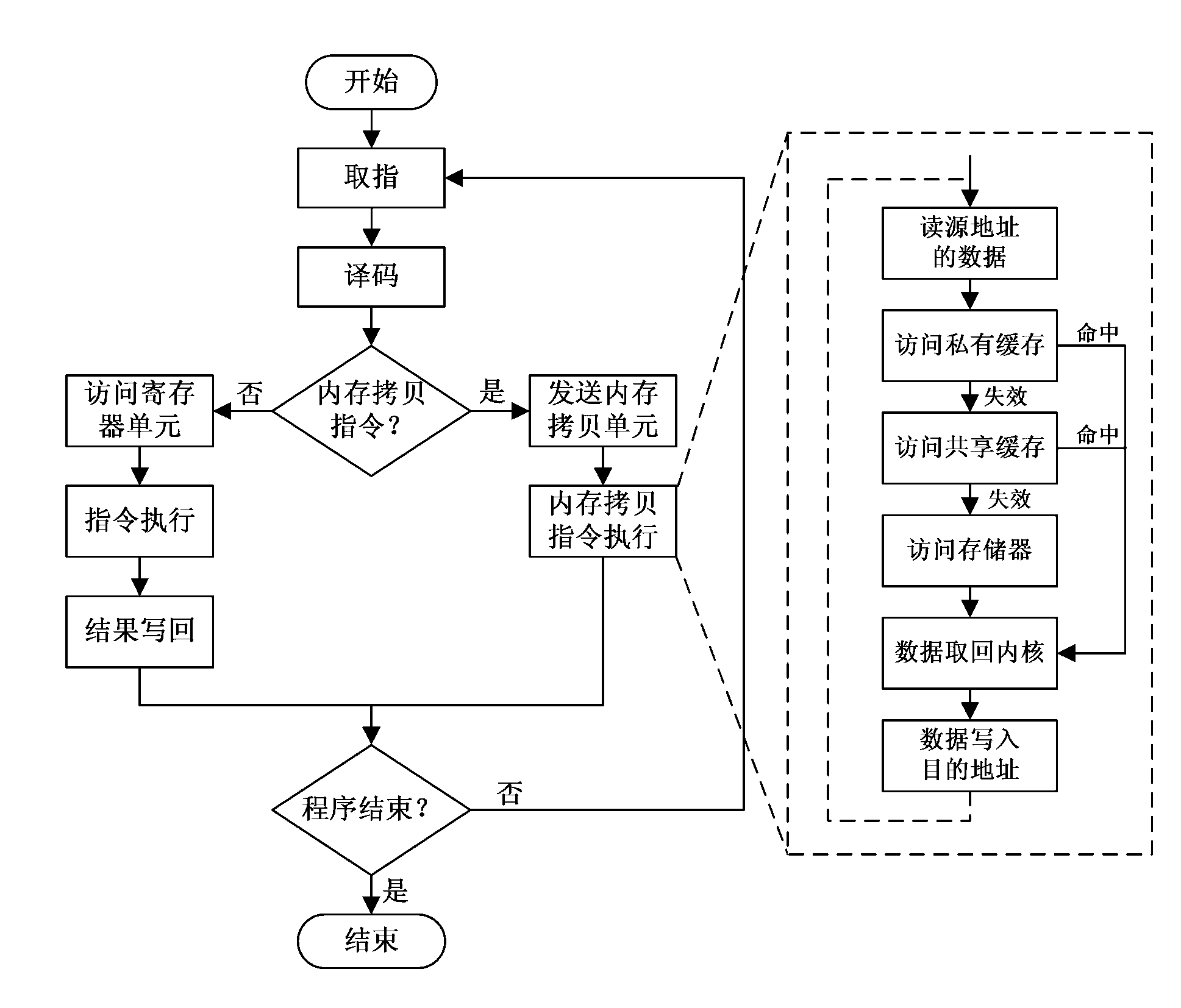

Method and device for accelerating memory copy of microprocessor

Active · CN102968395A · Improve efficiency · Improve concurrency · Energy efficient ICT · Concurrent instruction execution · Computer architecture · Access frequency

The invention discloses a method and a device for accelerating memory copy in a microprocessor. The method comprises the following steps: first, a memory copy unit is added to the microprocessor; second, memory copy instructions identified by the decoding logic are sent to the memory copy unit, which uses a correlation detection component to detect the correlation between a new memory copy request and other memory copy requests and caches the new request and its correlations in a request queue; third, the current memory copy request is executed in units of pages, and correlated memory copy requests are executed cooperatively. The device comprises the memory copy unit dedicated to executing memory copy requests and the correlation detection component for detecting the correlation between a new memory copy request and the other requests in the queue. The method and device offer high memory copy performance, simple hardware implementation, low cost, good scalability, strong compatibility, good concurrency, a low memory access frequency, and low power consumption.

Owner:NAT UNIV OF DEFENSE TECH

Method for multi-mode string matching according to word length

Active · CN102609450A · Reduce the number of memory accesses · Increased average jump distance · Special data processing applications · Theoretical computer science · Matching methods

The invention discloses a method for multi-pattern string matching by machine word length, comprising a precompilation process and a search process. A shift table, a hash table, and a prefix table are constructed during precompilation. The method is characterized in that the text is read by word length: one integer is loaded from the text at a time, so one machine word is read and processed per step, which overcomes the small jump distance caused by a short shortest pattern. The hash values of the three character blocks contained in the integer are obtained by shifting the integer, so byte-by-byte loads and OR operations are not needed; this speeds up hash calculation, effectively reduces the number of memory accesses, and improves memory access efficiency. The method thus achieves higher efficiency in multi-pattern string matching.
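The "three character blocks in one integer" idea can be shown directly. The sketch below assumes a 32-bit little-endian word and 2-byte blocks, with the raw block value standing in for the hash; the patent's actual hash function and table layout are not given in the abstract.

```python
import struct
from typing import List


def block_hashes(text: bytes, pos: int) -> List[int]:
    """Load one 32-bit word at `pos` and derive its three overlapping 2-byte blocks.

    A single load replaces the six byte loads (and three OR operations) that a
    byte-by-byte construction of the same three blocks would need.
    """
    (word,) = struct.unpack_from("<I", text, pos)
    return [(word >> shift) & 0xFFFF for shift in (0, 8, 16)]


def block_hashes_bytewise(text: bytes, pos: int) -> List[int]:
    """Reference version that builds each block from two separate byte loads."""
    return [text[pos + i] | (text[pos + i + 1] << 8) for i in range(3)]


sample = b"abcdefgh"
assert block_hashes(sample, 0) == block_hashes_bytewise(sample, 0)
assert block_hashes(sample, 3) == block_hashes_bytewise(sample, 3)
```

Each of the three values could then index a shift table, in the style of Wu-Manber matching, to decide how far the search window may jump.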

Owner:顾乃杰

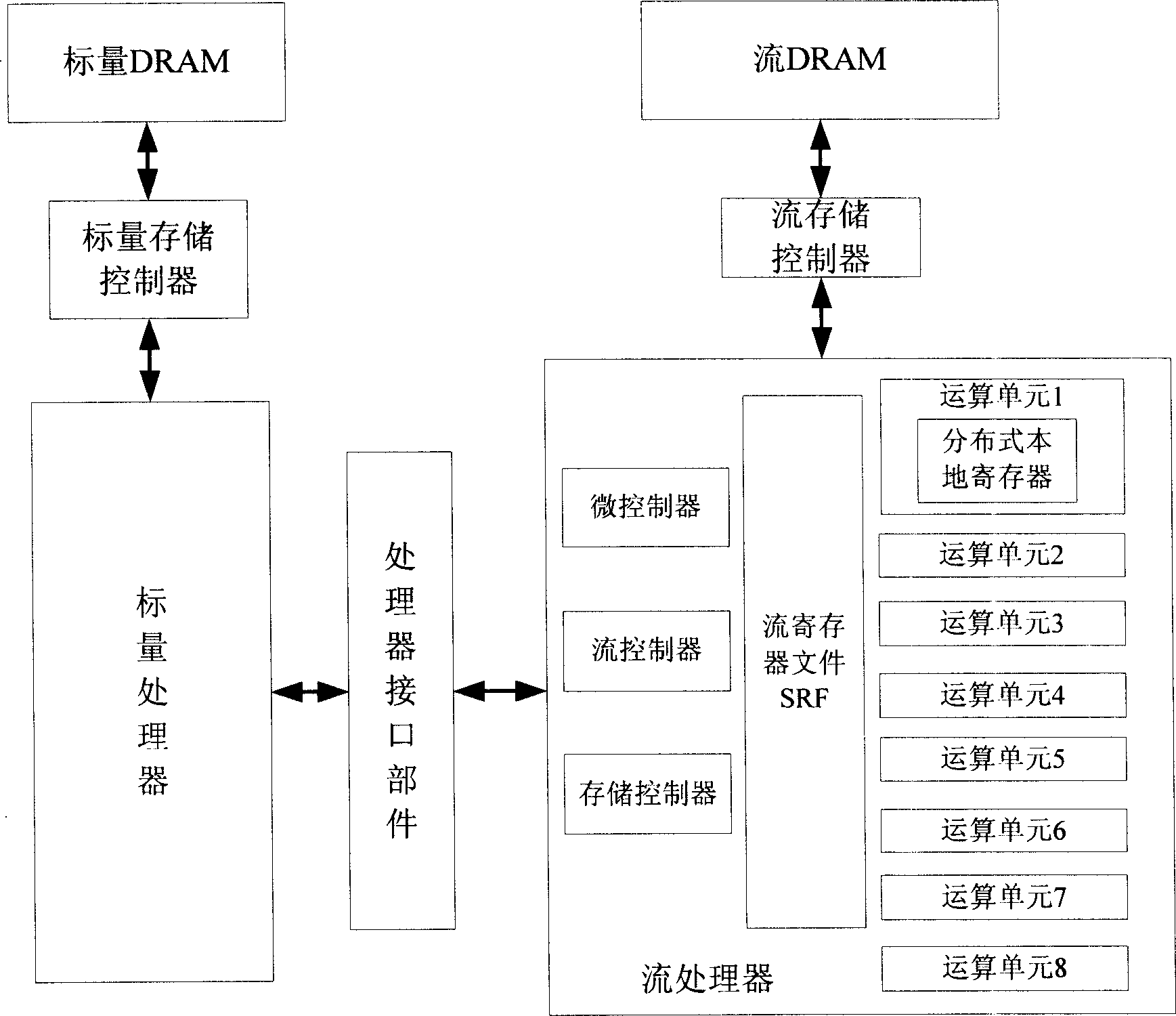

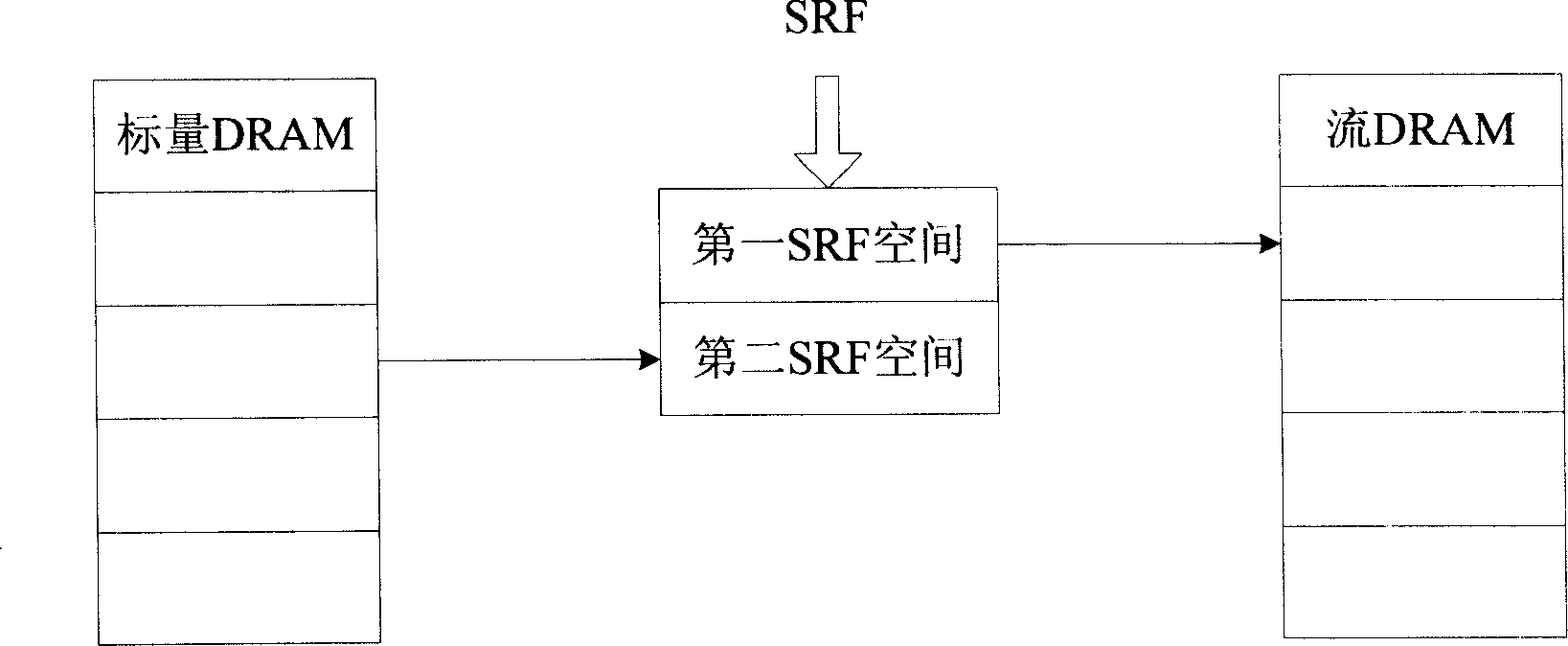

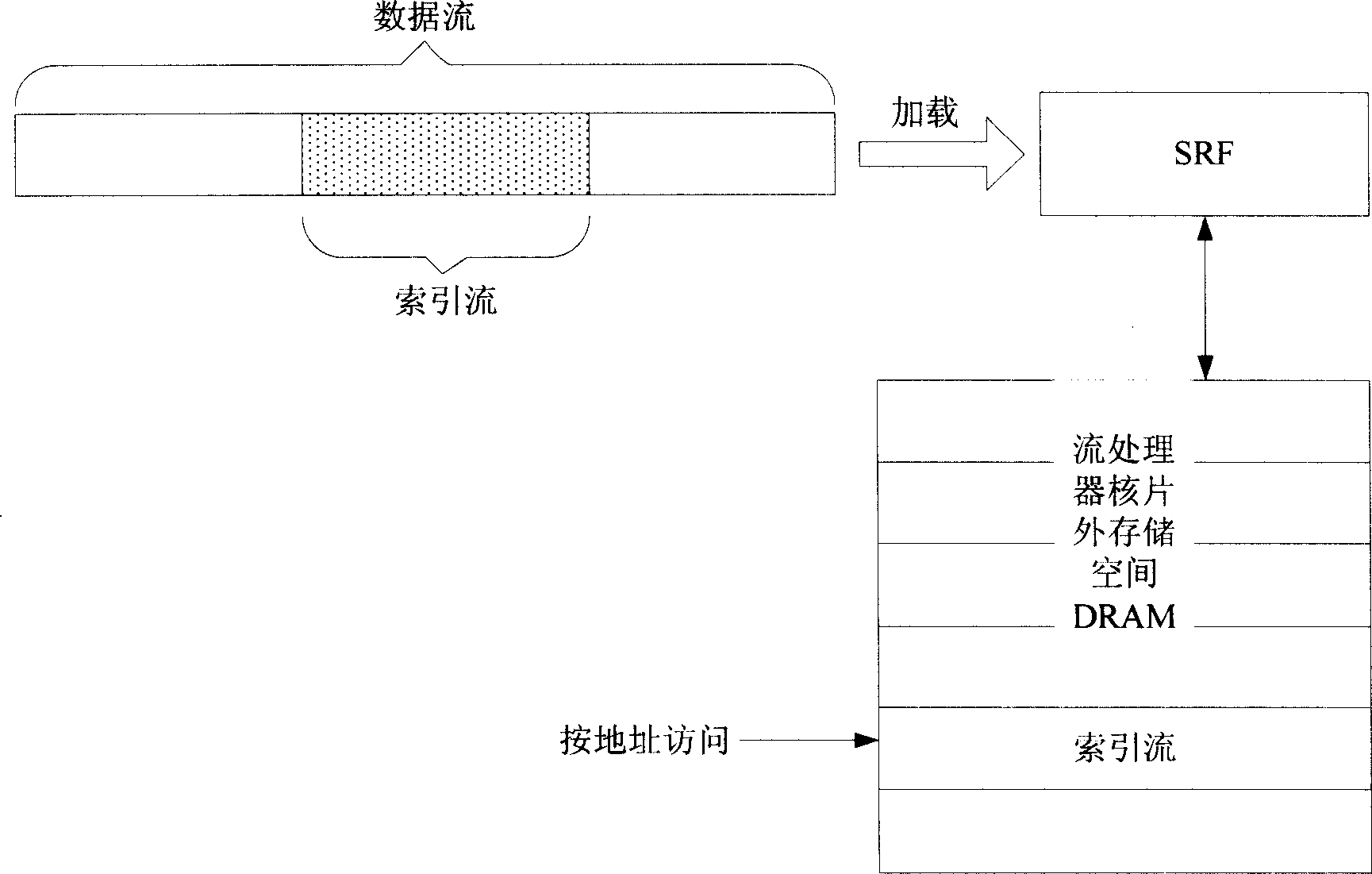

Method for decreasing data access delay in stream processor

Inactive · CN1885283A · Lower latency · Avoid diversion · General purpose stored program computer · Multiple digital computer combinations · Memory hierarchy · Scalar processor

The invention relates to a method for reducing data access latency in a stream processor. The invention improves the first level of the stream processor's memory hierarchy by combining the scalar DRAM and the stream DRAM into an off-chip shared memory used by both the scalar processor and the stream processor; it uses a new method to transfer data streams between the off-chip shared DRAM and the stream register file (SRF); and it uses a synchronization mechanism to handle RAW (read-after-write) dependencies. When accessing off-chip data, the scalar processor and the stream processor send requests directly to the bus to obtain bus priority and send the address to be accessed to the DRAM controller; the DRAM controller accesses the off-chip DRAM and returns the data to the scalar processor or the stream processor. The invention avoids SRF overflow caused by overly long streams and avoids moving data several times within the memory space, thereby reducing data access latency.

Owner:NAT UNIV OF DEFENSE TECH

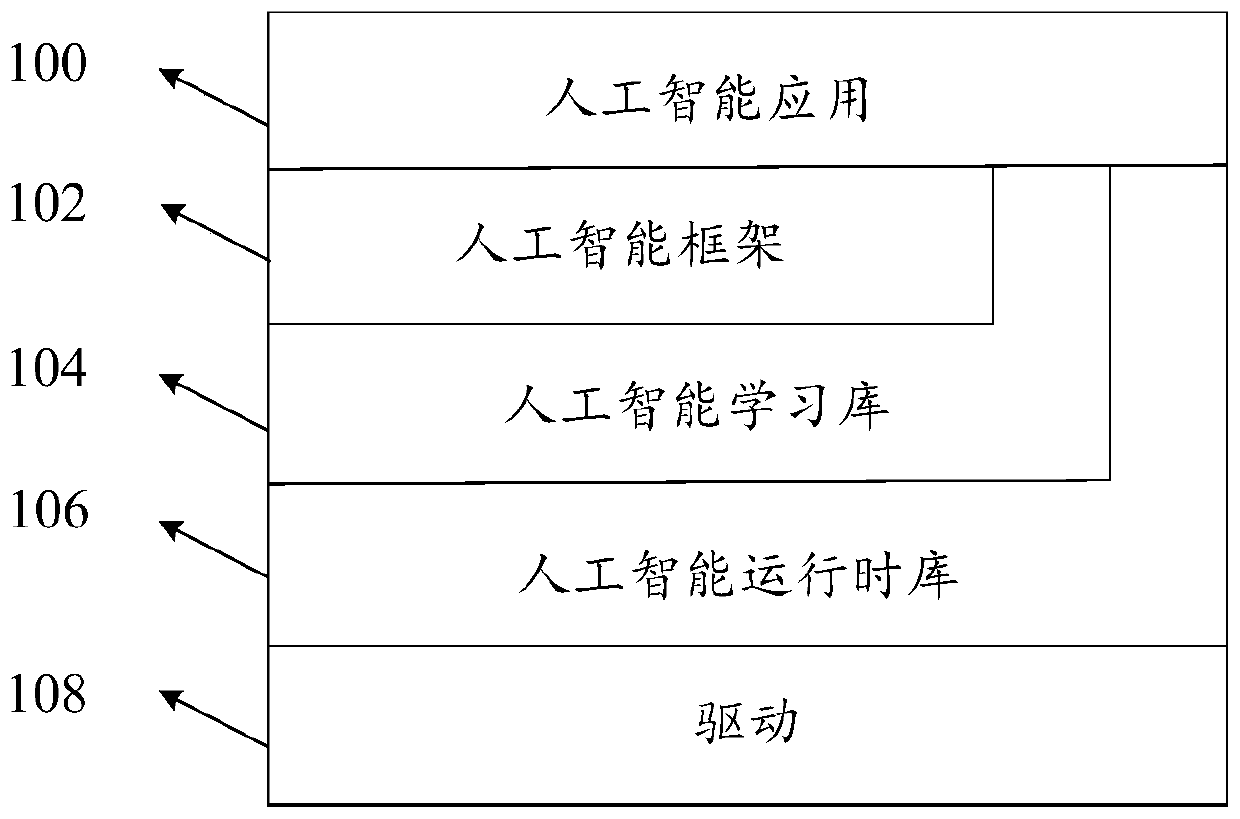

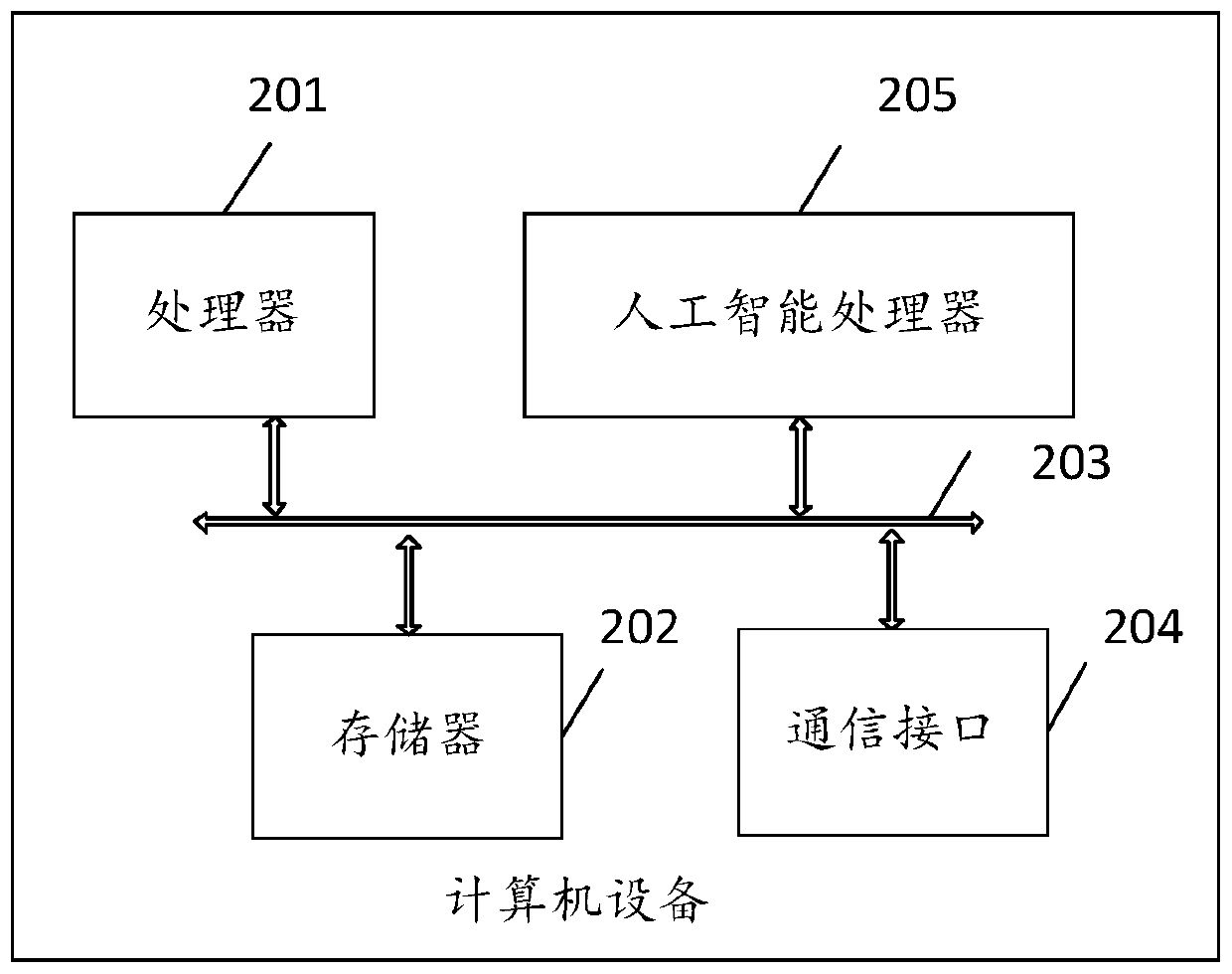

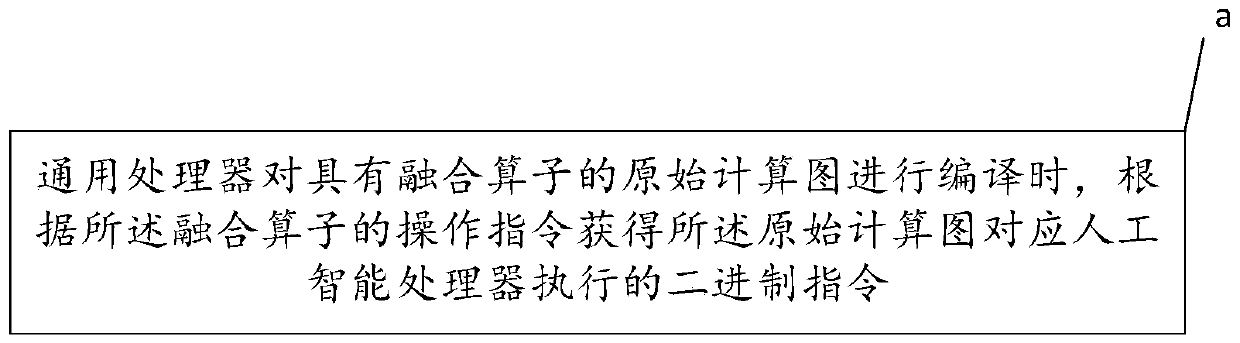

Calculation graph execution method, computer equipment and storage medium

Pending · CN111160551A · Reduce the number of starts · Reduce the number of memory accesses · Neural architectures · Physical realisation · Theoretical computer science · Fusion operator

The embodiment of the invention discloses a calculation graph execution method, computer equipment and a storage medium, and the calculation graph execution method comprises obtaining a binary instruction executed by an artificial intelligence processor corresponding to a calculation graph according to an operation instruction of a fusion operator when a universal processor compiles the calculation graph with the fusion operator.

Owner:SHANGHAI CAMBRICON INFORMATION TECH CO LTD

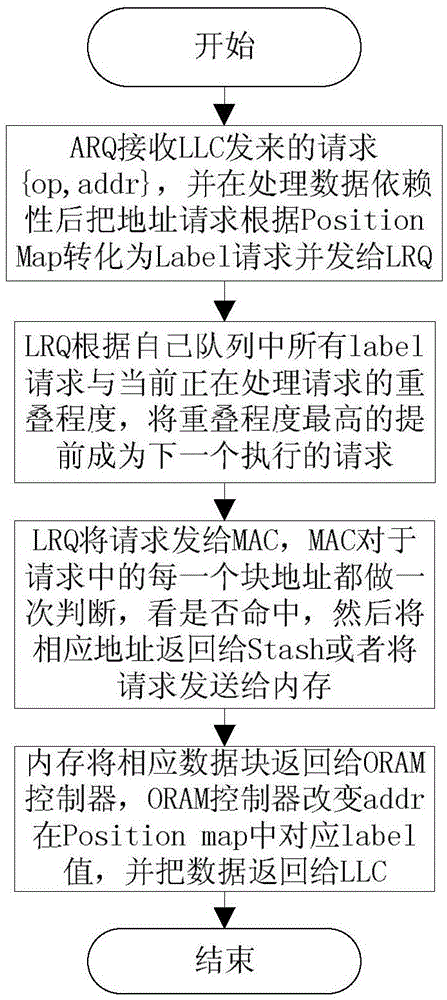

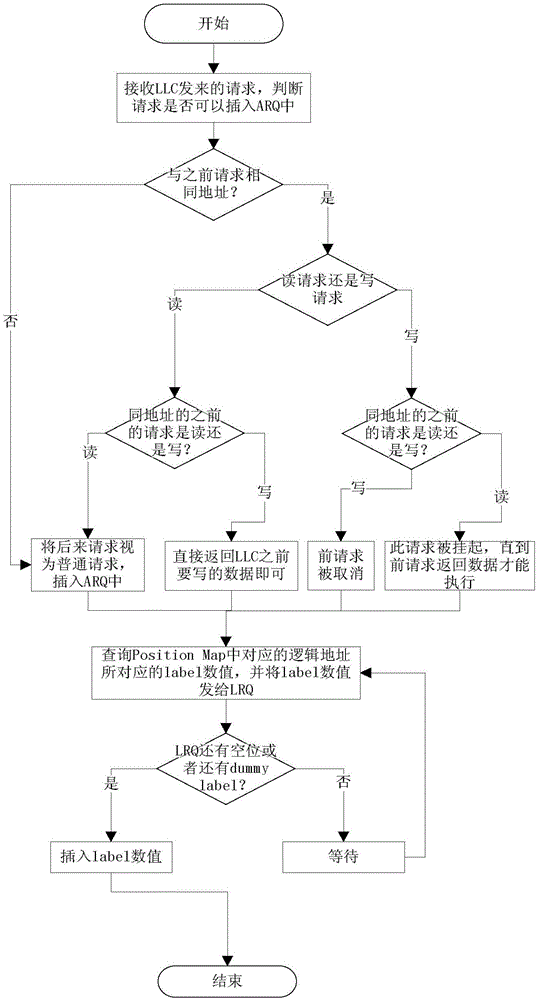

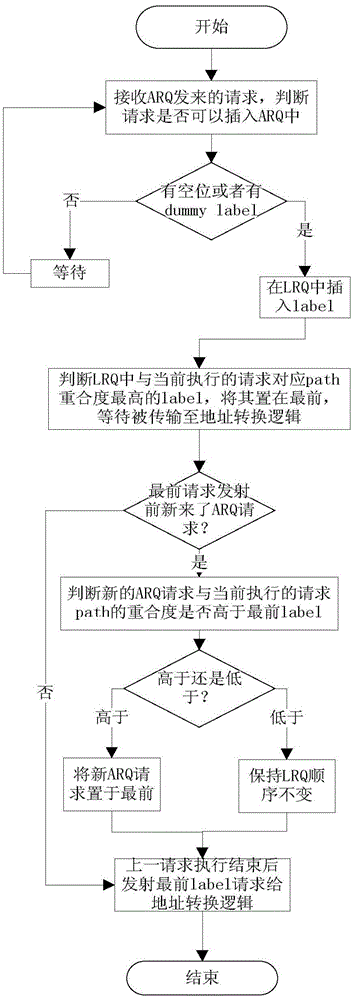

Fork type access method for Path ORAM

Inactive · CN105589814A · Reduce in quantity · Fast execution · Energy efficient computing · Memory systems · Hardware structure · Access method

The invention discloses a fork type access method for a Path ORAM. The method comprises a stage of processing a last level cache (LLC) request by an address request queue (ARQ), a stage of processing an ARQ request by a label request queue (LRQ), a stage of processing an LRQ request by address logic, a stage of processing an address logic request by an MAC, and a stage of processing an MAC request by a memory, wherein in the stage of processing the address logic request by the MAC, the ORAM tree is accessed in a fork pattern. By optimizing the hardware structure that hides the memory access pattern and by removing accesses to the overlapping part of two adjacent paths, the method reduces the number and cost of ORAM memory accesses, increases the execution speed of the ORAM system, and reduces power consumption, so that overall system performance is greatly improved.
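The saving from skipping the overlap of two adjacent paths can be sketched with plain index arithmetic. The heap-style bucket numbering (root = 1) and the function names are assumptions made for illustration; the queue stages described in the abstract are not modeled.

```python
from typing import List, Tuple


def path_buckets(leaf: int, depth: int) -> List[int]:
    """Bucket ids from the root down to `leaf` in a binary tree of the given depth."""
    node = (1 << depth) + leaf         # heap numbering: leaves start at 2**depth
    path = []
    while node >= 1:
        path.append(node)
        node >>= 1
    return path[::-1]


def fork_access(prev_leaf: int, next_leaf: int, depth: int) -> Tuple[List[int], List[int]]:
    """Return (buckets to write back, buckets to read) for two consecutive accesses.

    Buckets shared by both paths stay on chip, so they are neither written back
    after the first access nor read again for the second.
    """
    prev = path_buckets(prev_leaf, depth)
    nxt = path_buckets(next_leaf, depth)
    shared = 0
    while shared < len(prev) and prev[shared] == nxt[shared]:
        shared += 1
    return prev[shared:], nxt[shared:]


print(fork_access(2, 3, depth=3))  # sibling leaves share all but the last bucket
print(fork_access(0, 7, depth=3))  # opposite subtrees share only the root
```

The two paths diverge like the tines of a fork, which is presumably where the method's name comes from.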

Owner:PEKING UNIV

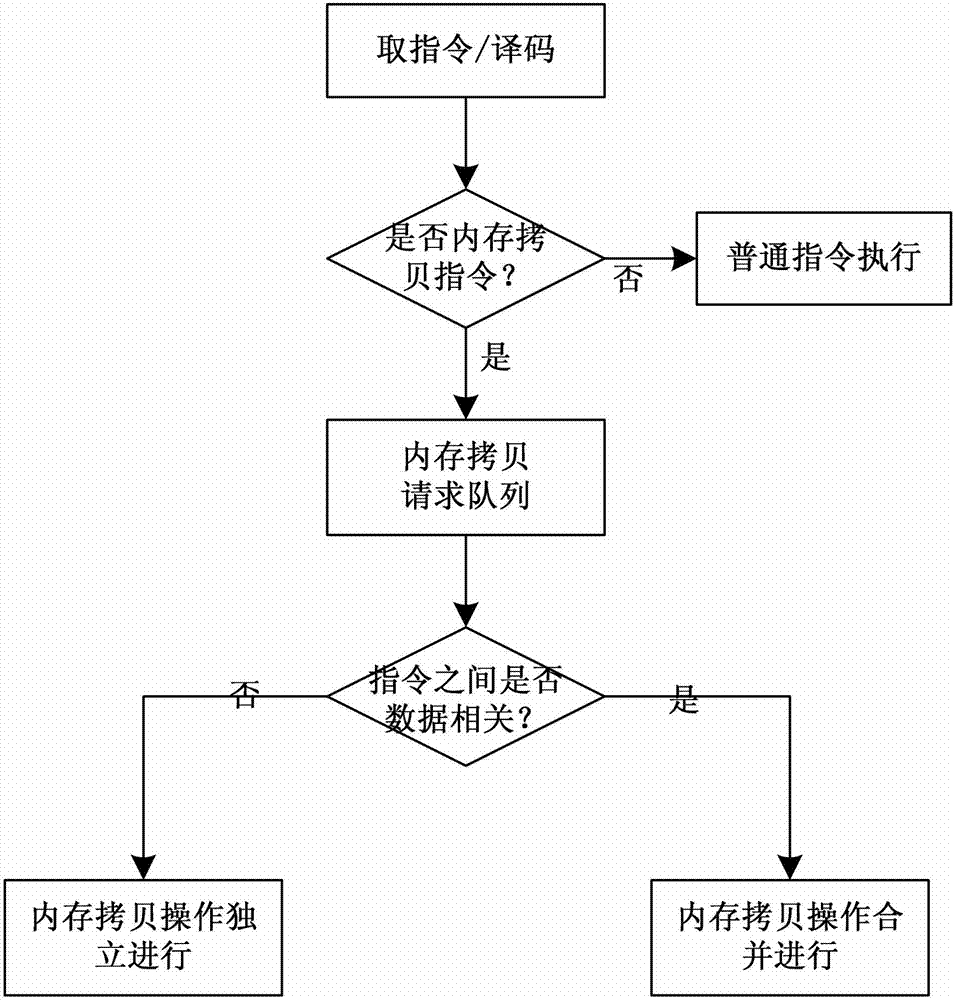

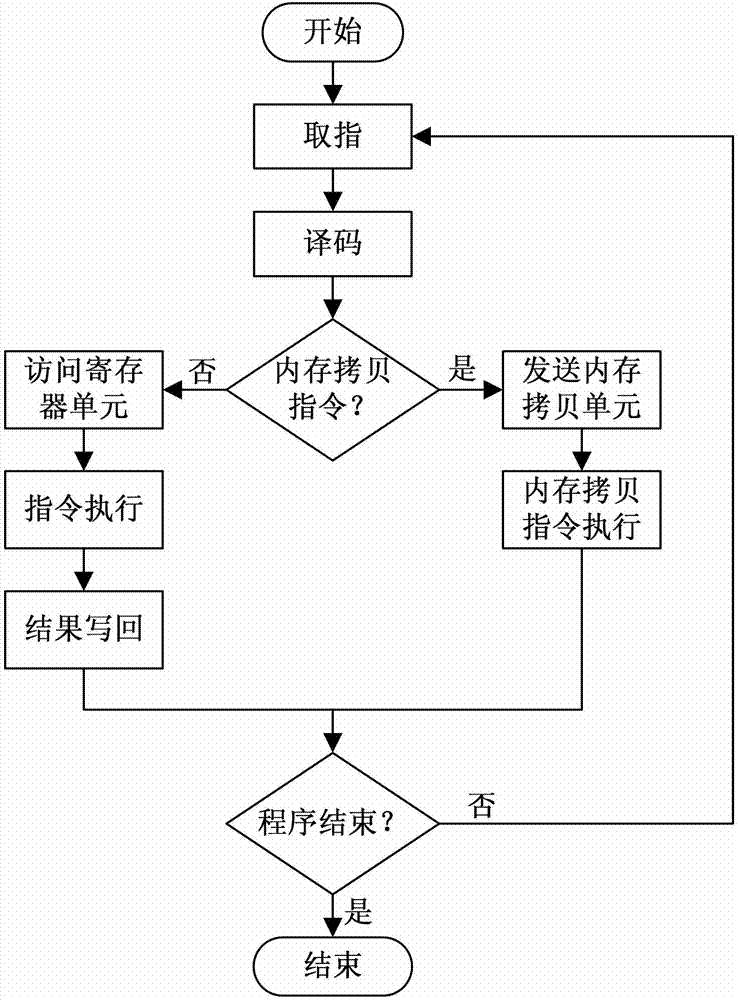

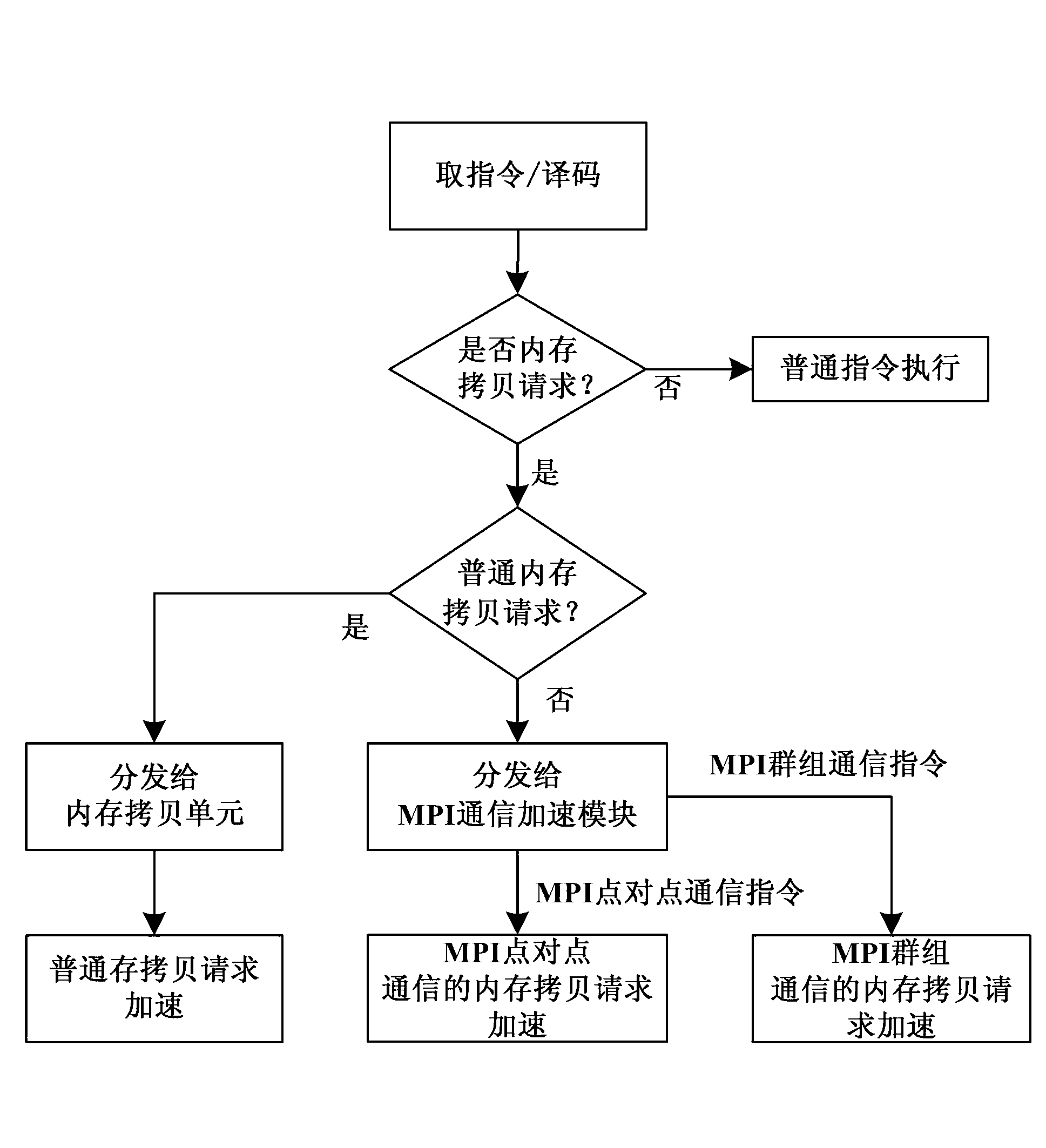

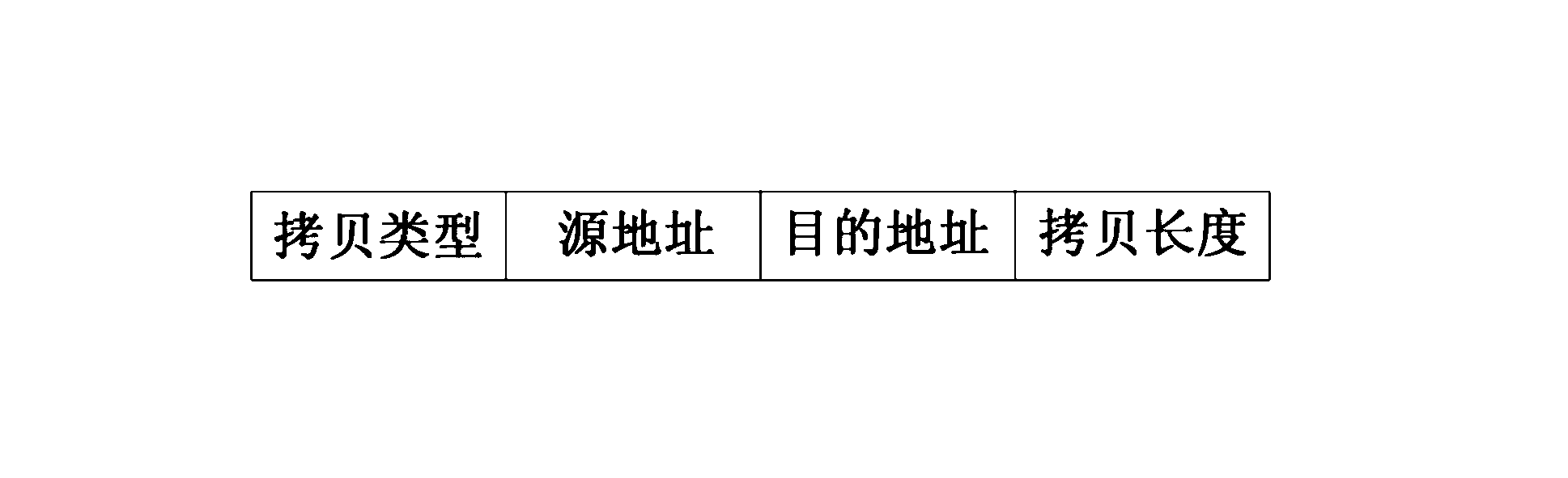

Internal memory copying accelerating method and device facing multi-core microprocessor

Active · CN103019655A · Reduce the number of memory accesses · Improve efficiency · Digital computer details · Concurrent instruction execution · Internal memory · Computer compatibility

The invention discloses a memory copy acceleration method and device for a multi-core microprocessor. The method comprises the following steps: a memory copy instruction is added to the microprocessor instruction set and an MPI (Message Passing Interface) communication acceleration module is added to the microprocessor; the type of each decoded memory copy request is identified; general memory copy requests are issued to a memory copy unit; MPI group communication requests or MPI point-to-point communication requests are issued to the MPI communication acceleration module; and the MPI communication acceleration module merges and executes associated memory copy requests to improve memory copy performance and execution efficiency. The device comprises a decoding unit, the memory copy unit, an association detection component, and the MPI communication acceleration module, which executes the memory copy requests that constitute MPI group communication or MPI point-to-point communication. The method and device offer efficient memory copy, good multi-core optimization, low hardware design complexity, good compatibility, low power consumption, and simple hardware implementation.

Owner:NAT UNIV OF DEFENSE TECH

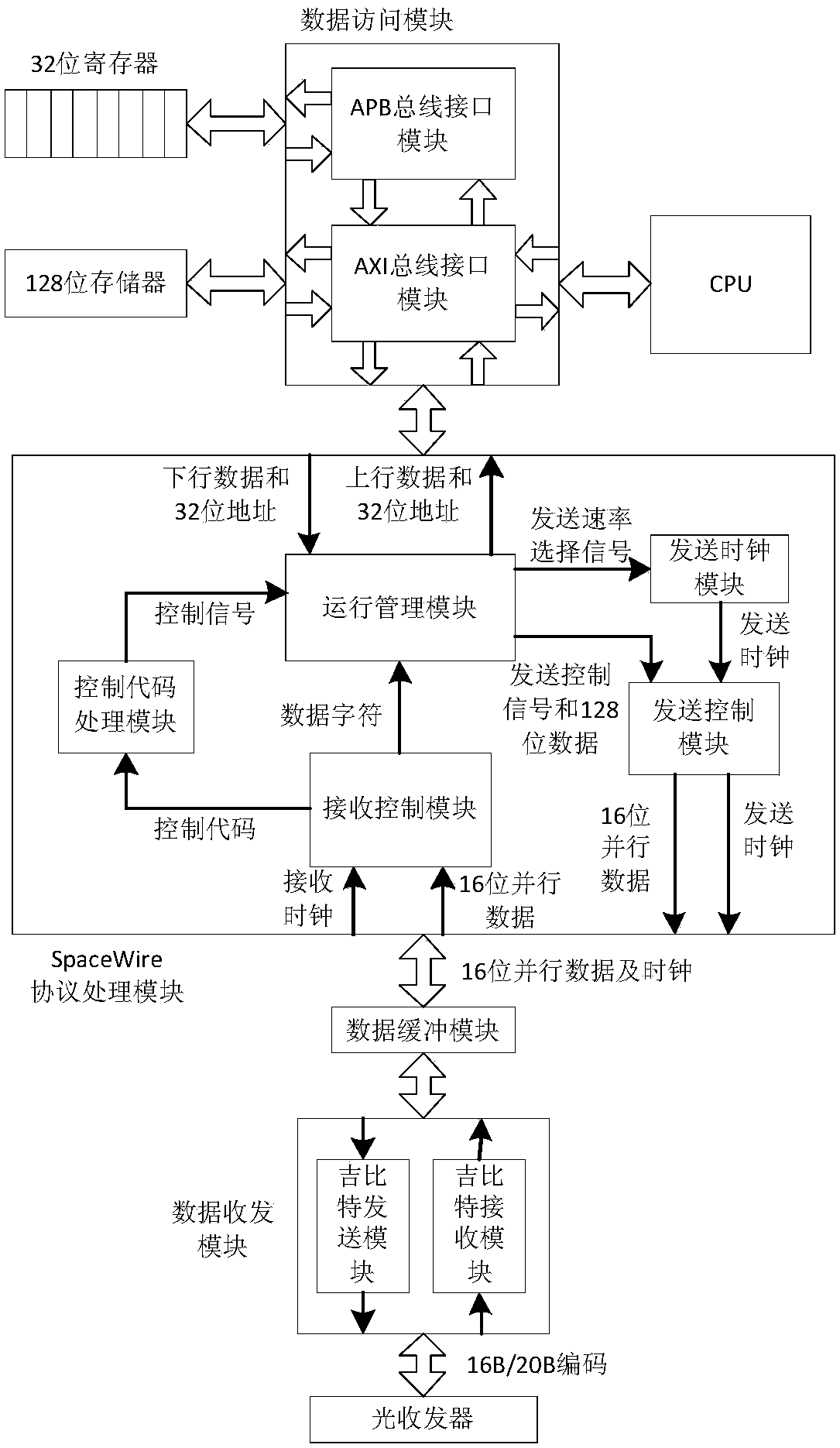

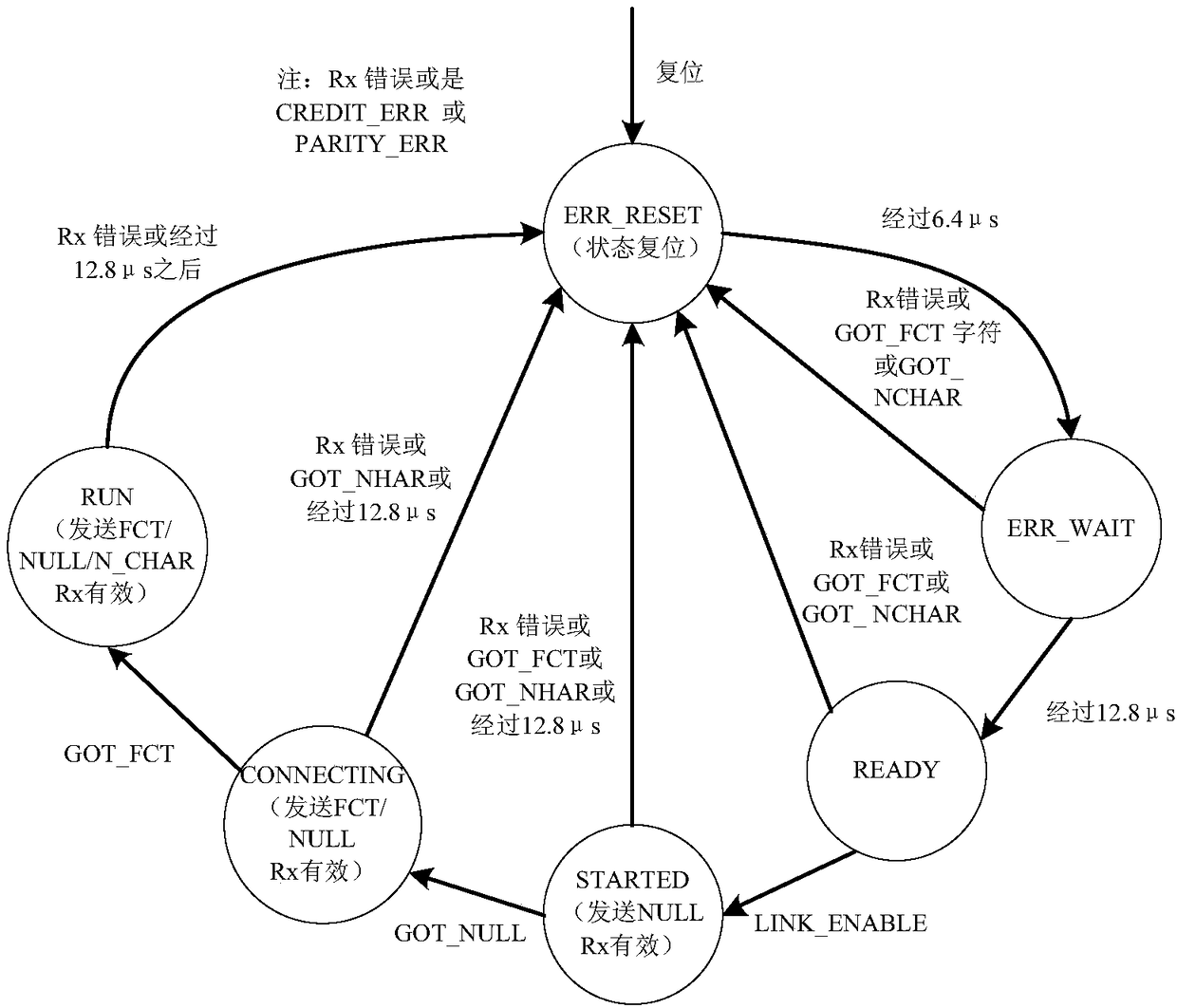

Gigabit-level SpaceWire bus system

Active · CN108462620A · Increase physical bandwidth cap · Improve data processing capabilities · Bidirectional transmission · Bus networks · Transceiver · Bus interface

The invention relates to a gigabit-level SpaceWire bus system. The system comprises a data sending and receiving module, a data buffer module, a SpaceWire protocol processing module and a data processing module; the data sending and receiving module is used for performing format conversion of 16B / 20B coding and parallel data of an optical transceiver; the data buffer module is used for synchronization and data caching of asynchronous clock domains; the SpaceWire protocol processing module is used for receiving and sending bus data, identifying a control code and a data character, updating a protocol state, sending uplink data and addresses and receiving downlink data and addresses; and the data processing module is used for providing a bus interface for the SpaceWire protocol processing module, an external CPU, an external storage and an external register, and providing conversion of bus protocols on AXI and APB chips. According to the gigabit-level SpaceWire bus system disclosed by the invention, the access frequency and time can be reduced; the bus utilization rate can be increased; and high-speed data transmission requirements of spacecrafts can be satisfied.

Owner:BEIJING INST OF CONTROL ENG

Blocking-rendering based generation of anti-aliasing line segment in GPU

Active · CN102096935A · Generate fast · Increase generation speed · Drawing from basic elements · Image memory management · Anti-aliasing · Computer science

The invention discloses a technique for generating anti-aliased line segments in a GPU based on a block rendering algorithm, carried out after the line segment has been assigned to blocks. The technique comprises the following steps: determining the left and right (or top and bottom) intersection points of the line segment within a block according to the block where the segment is located and the direction in which the segment is generated; writing the intersection data to memory; after reading the segment back from memory, generating coordinates for a segment of width 1; expanding the coordinates according to the line width; clipping against the block boundary; and so on. This implementation works together with the block rendering algorithm to generate anti-aliased line segments quickly.

Owner:CHANGSHA JINGJIA MICROELECTRONICS

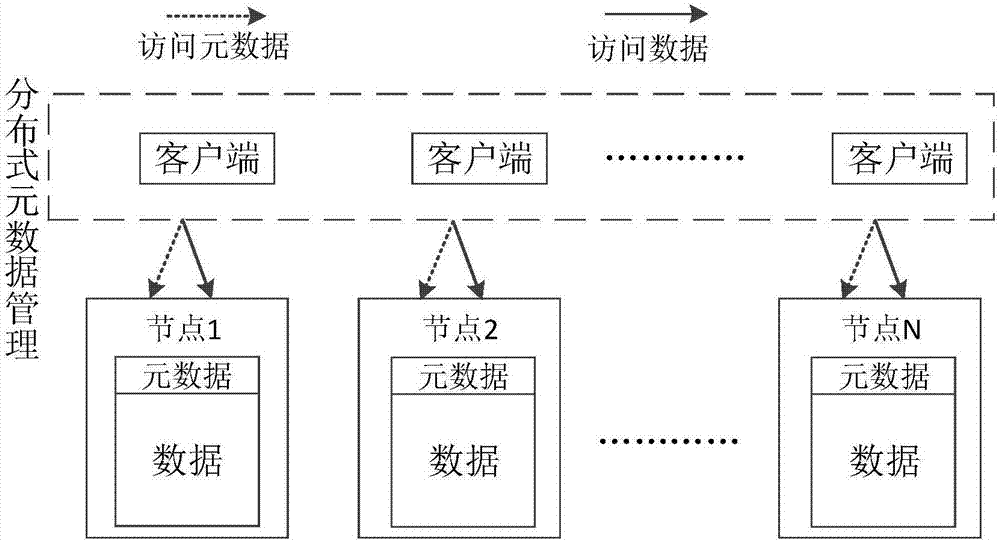

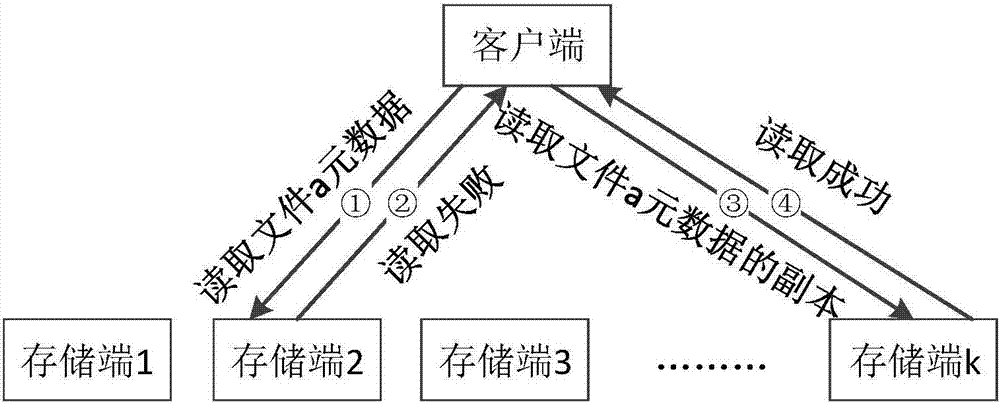

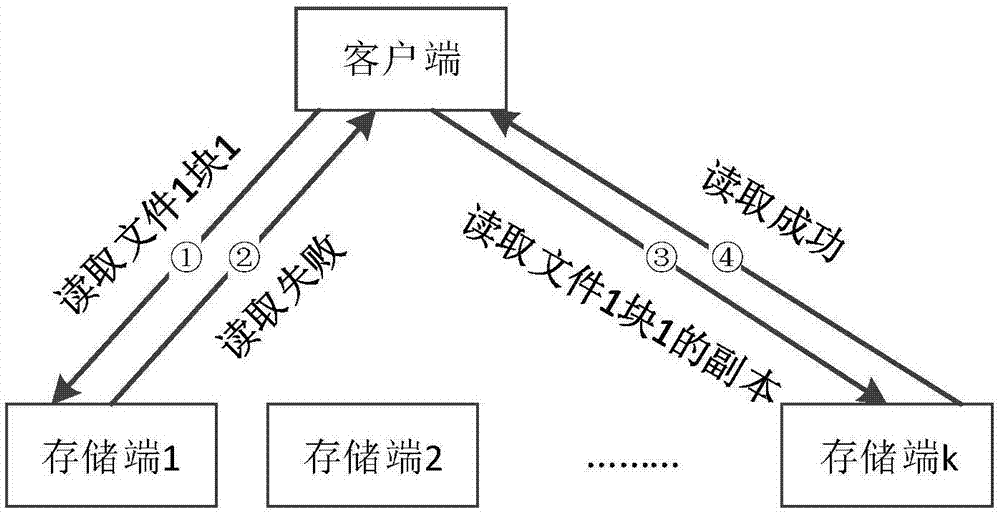

Distributed-data management method

Inactive · CN107291876A · Reduce the number of memory accesses · Eliminate resource and performance bottlenecks · Special data processing applications · Hash table · Tree structure

The invention discloses a distributed-data management method and belongs to the field of distributed storage. The technical scheme includes that scattering metadata management functions to multiple nodes, creating mapping relations, selecting an implementation structure that a common multi-fork tree and a hash table are combined in metadata tree structure design, adding full path fields to the data structure of metadata, and writing new data prior to logs in data writing; after file metadata is acquired, acquiring the storage location of file data in a mapping mode; meanwhile, supporting file metadata copies and file data copies, adopting the different mapping relations to ensure that the copies are distributed to the different nodes when the file metadata copies or the file data copies are used, and enabling a copy storage terminal to take over a main storage end in operation rapidly when the file metadata or the file data is written and the main storage end fails. By the arrangement, performance and reliability of distributed data management can be effectively improved.

Owner:HUAZHONG UNIV OF SCI & TECH

Parallel filtering method and corresponding device

Active · CN103227622A · Fast filtering · Reduce the number of memory accesses · Digital technique network · Service efficiency · Power consumption

The invention discloses a parallel filtering method and a corresponding device. The device comprises a multi-granularity memory, a data caching device, a coefficient buffering and broadcasting device, a vector operation device, and a command queue device. The multi-granularity memory stores the data to be filtered, the filter coefficients, and the filtering results; the data caching device caches, reads, and updates the fetched data to be filtered; the coefficient buffering and broadcasting device caches and broadcasts the fetched filter coefficients; the command queue device stores and outputs parallel filtering operation commands; and the vector operation device performs vector operations on the data to be filtered and the output coefficient data and writes the results to the multi-granularity memory. The invention further discloses the parallel filtering method. The method and device offer high filtering speed, fewer memory accesses, more efficient use of data, lower power consumption, and a wide application scope.

Owner:BEIJING SMART LOGIC TECH CO LTD

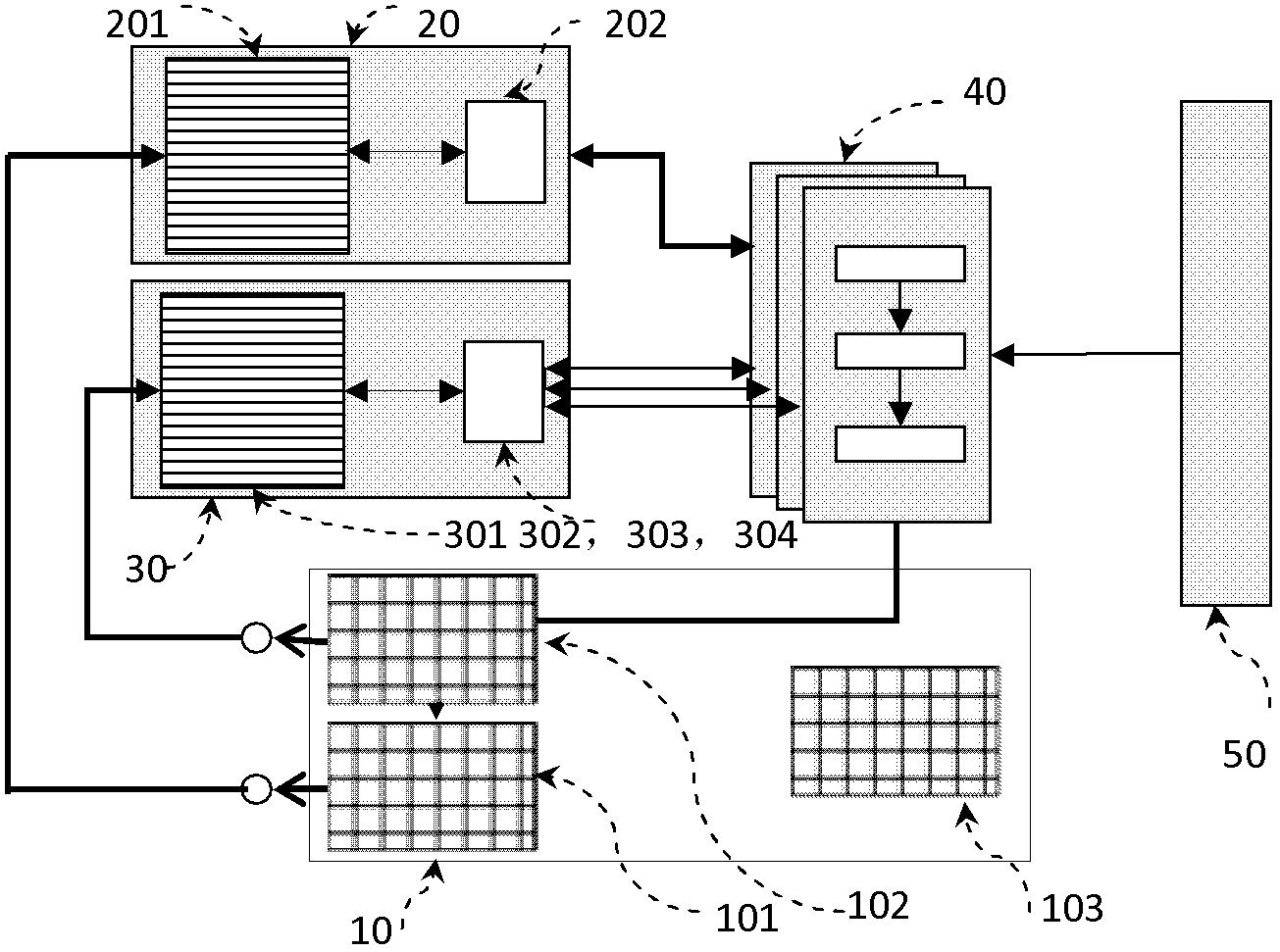

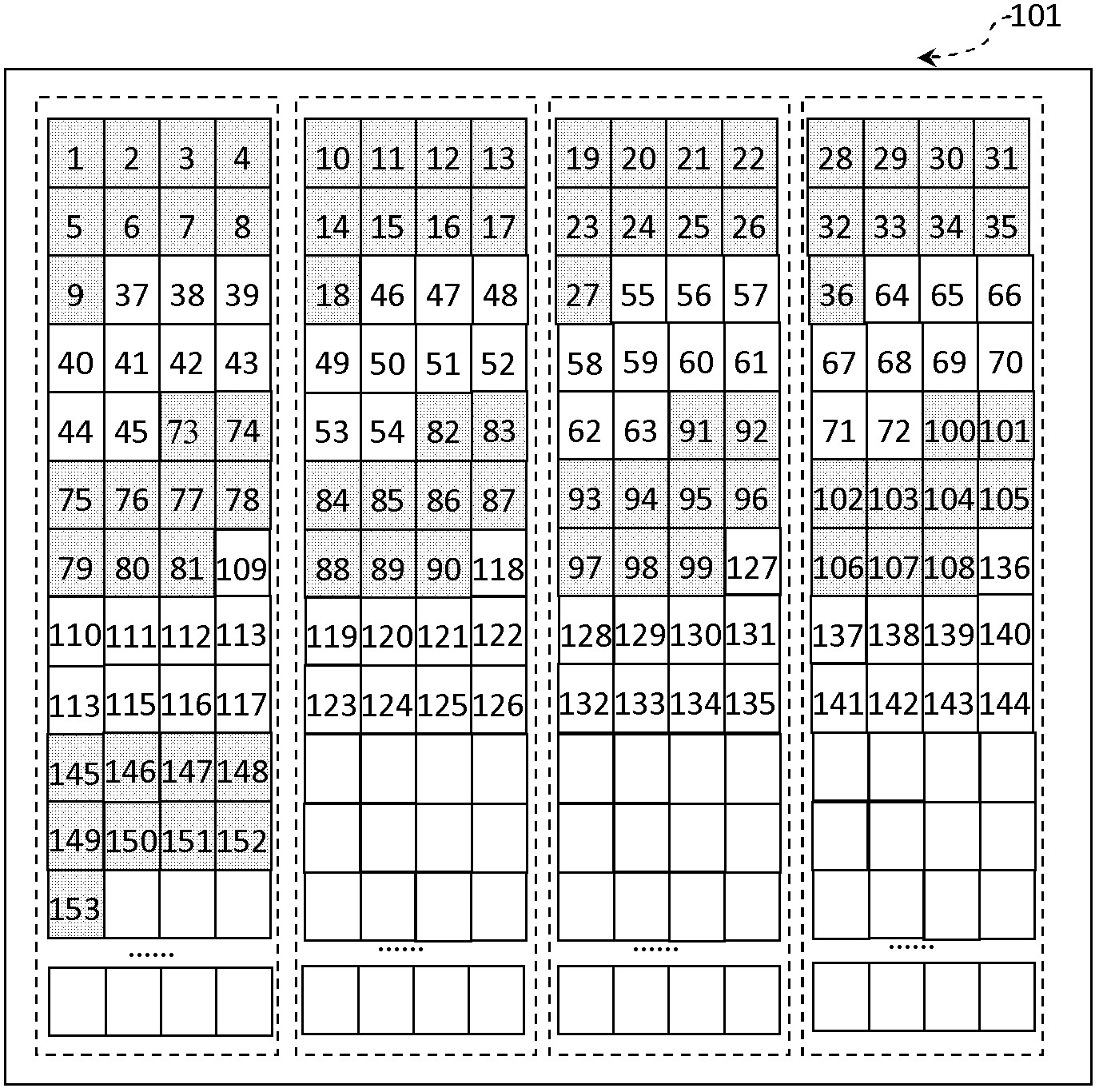

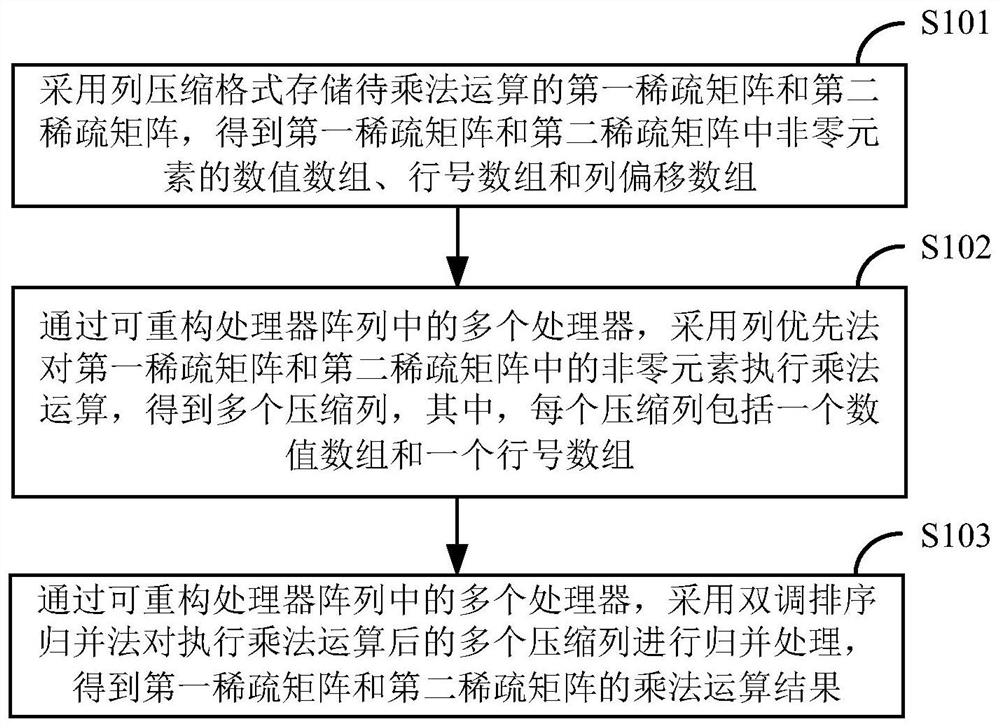

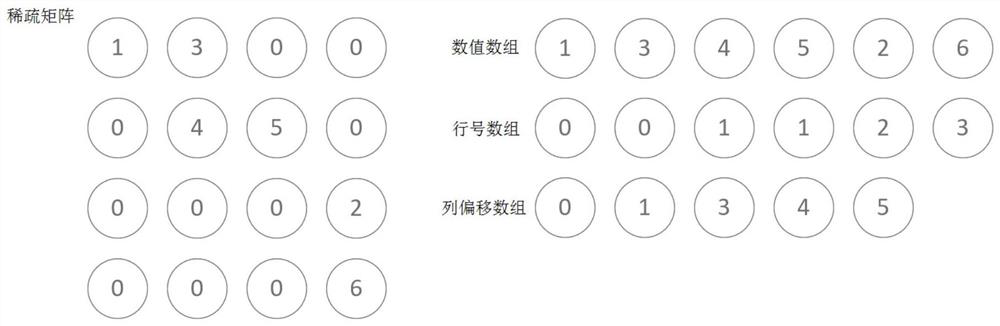

Method and device for realizing sparse matrix multiplication on reconfigurable processor array

Active · CN112507284A · Reduce configuration times · Reduce the number of memory accesses · Digital data processing details · Energy efficient computing · Computational science · Engineering

The invention discloses a method and device for realizing sparse matrix multiplication on a reconfigurable processor array. The method comprises: storing the first and second sparse matrices to be multiplied in compressed column format, obtaining for each matrix a value array, a row index array, and a column offset array of its non-zero elements; multiplying the non-zero elements of the two matrices in column-priority order on a plurality of processors in the reconfigurable processor array to obtain a plurality of compressed columns, each comprising a value array and a row index array; and merging the compressed columns produced by the multiplication with a bitonic sort-and-merge method on a plurality of processors in the array to obtain the product of the first and second sparse matrices. The invention allows sparse matrix multiplication to be realized efficiently on a reconfigurable processing array.
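A sequential sketch of column-priority multiplication in compressed column storage is shown below. It is an illustration only: the partial columns are merged with a dictionary and an ordinary sort instead of the bitonic sort-and-merge the abstract describes, there is no parallelism, and the tuple layout `(values, row_idx, col_ptr)` is an assumed representation.

```python
from typing import Dict, List, Tuple

Csc = Tuple[List[float], List[int], List[int]]  # (values, row_idx, col_ptr)


def csc_matmul(a: Csc, b: Csc) -> Csc:
    """Compute C = A @ B with all three matrices in compressed column form."""
    a_val, a_row, a_ptr = a
    b_val, b_row, b_ptr = b
    c_val: List[float] = []
    c_row: List[int] = []
    c_ptr = [0]
    for j in range(len(b_ptr) - 1):              # one output column per column of B
        acc: Dict[int, float] = {}
        for p in range(b_ptr[j], b_ptr[j + 1]):  # non-zeros B[k, j]
            k, b_kj = b_row[p], b_val[p]
            for q in range(a_ptr[k], a_ptr[k + 1]):  # scale column k of A
                acc[a_row[q]] = acc.get(a_row[q], 0) + a_val[q] * b_kj
        for r in sorted(acc):                    # merge step (plain sort here)
            c_row.append(r)
            c_val.append(acc[r])
        c_ptr.append(len(c_val))
    return c_val, c_row, c_ptr


# A = [[1, 0], [0, 2]] and B = [[0, 3], [4, 0]] in compressed column form.
A = ([1, 2], [0, 1], [0, 1, 2])
B = ([4, 3], [1, 0], [0, 1, 2])
print(csc_matmul(A, B))
```

Only the stored non-zeros of each matrix are read, which is the source of the reduced memory traffic compared with a dense product.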

Owner:TSINGHUA UNIV

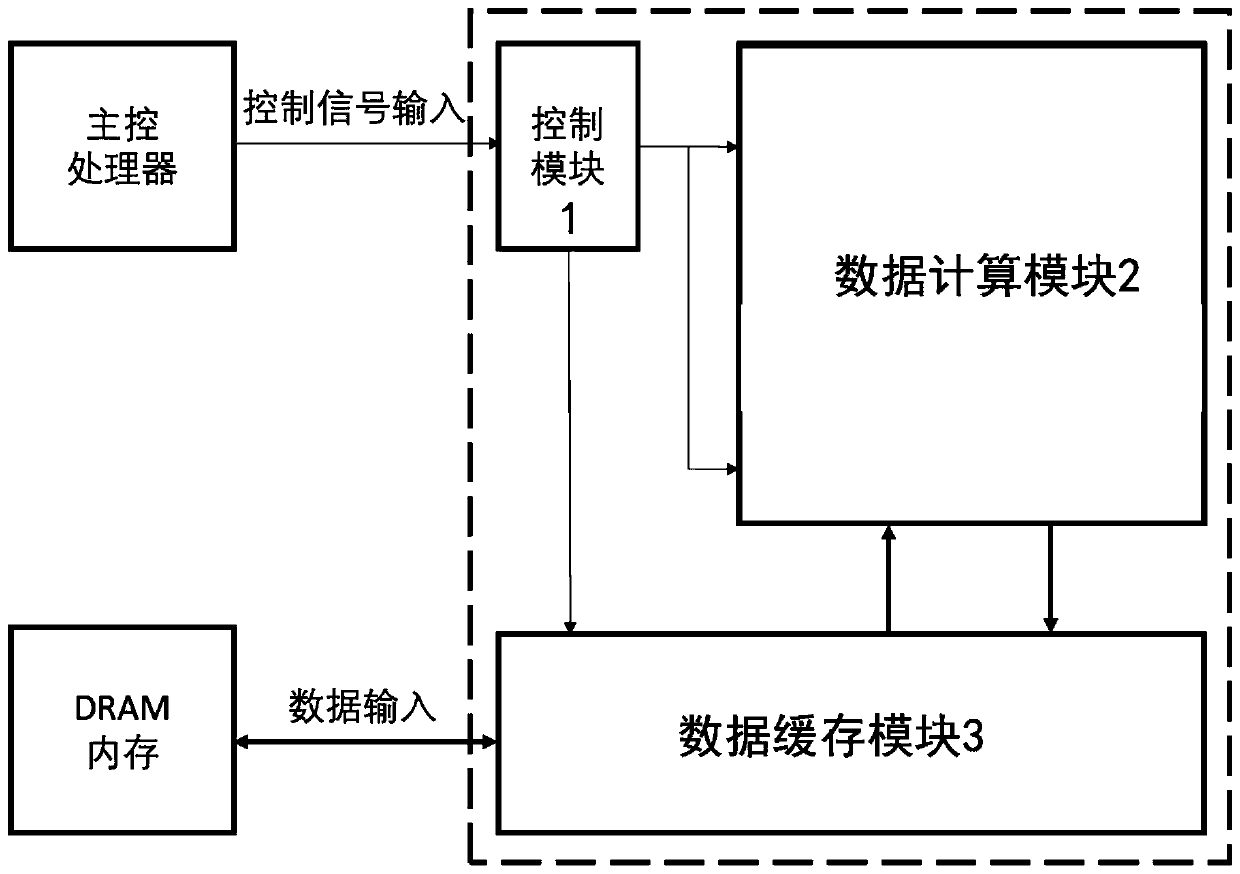

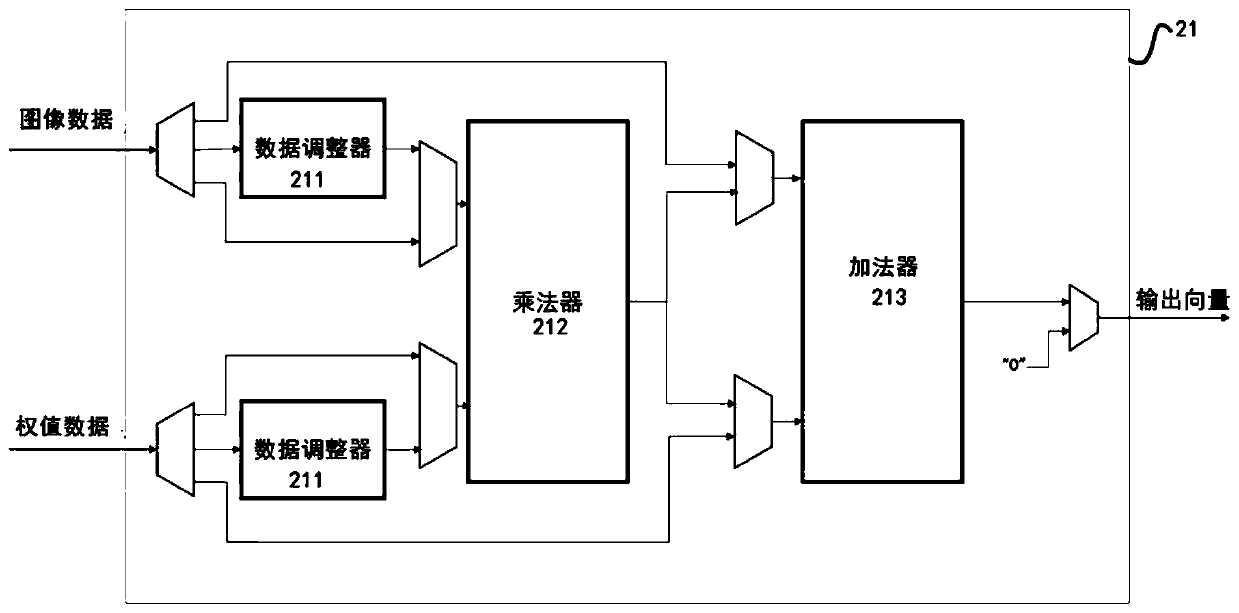

Deep learning accelerator suitable for stacked hourglass network

Active · CN109993293A · Achieve Computational Acceleration Performance · Calculation speed · Neural architectures · Physical realisation · Time delays · Data acquisition

The invention discloses a deep learning accelerator suitable for a stacked hourglass network. A parallel computing-layer unit increases computing parallelism, and a data caching module raises the utilization of data loaded into the accelerator while speeding up computation. A data adjuster in the accelerator adaptively changes the data arrangement order according to the operation of each computing layer, which improves the integrity of the acquired data, improves data acquisition efficiency, and reduces the latency of the memory access process. The accelerator therefore increases the calculation speed of the algorithm while effectively lowering the required memory bandwidth, by reducing the number of memory accesses and improving memory access efficiency, and so achieves its overall computational acceleration.

Owner:SUN YAT SEN UNIV

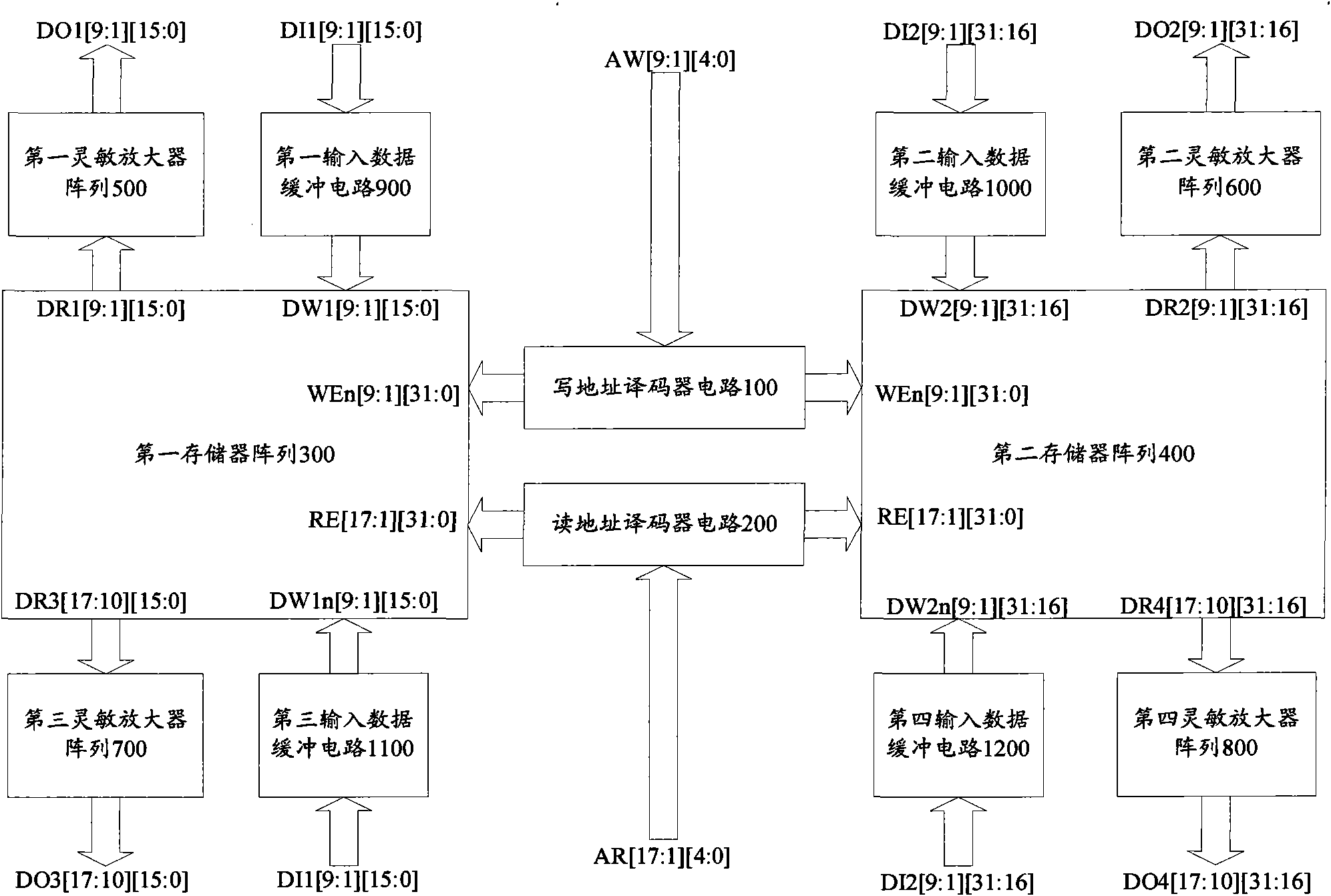

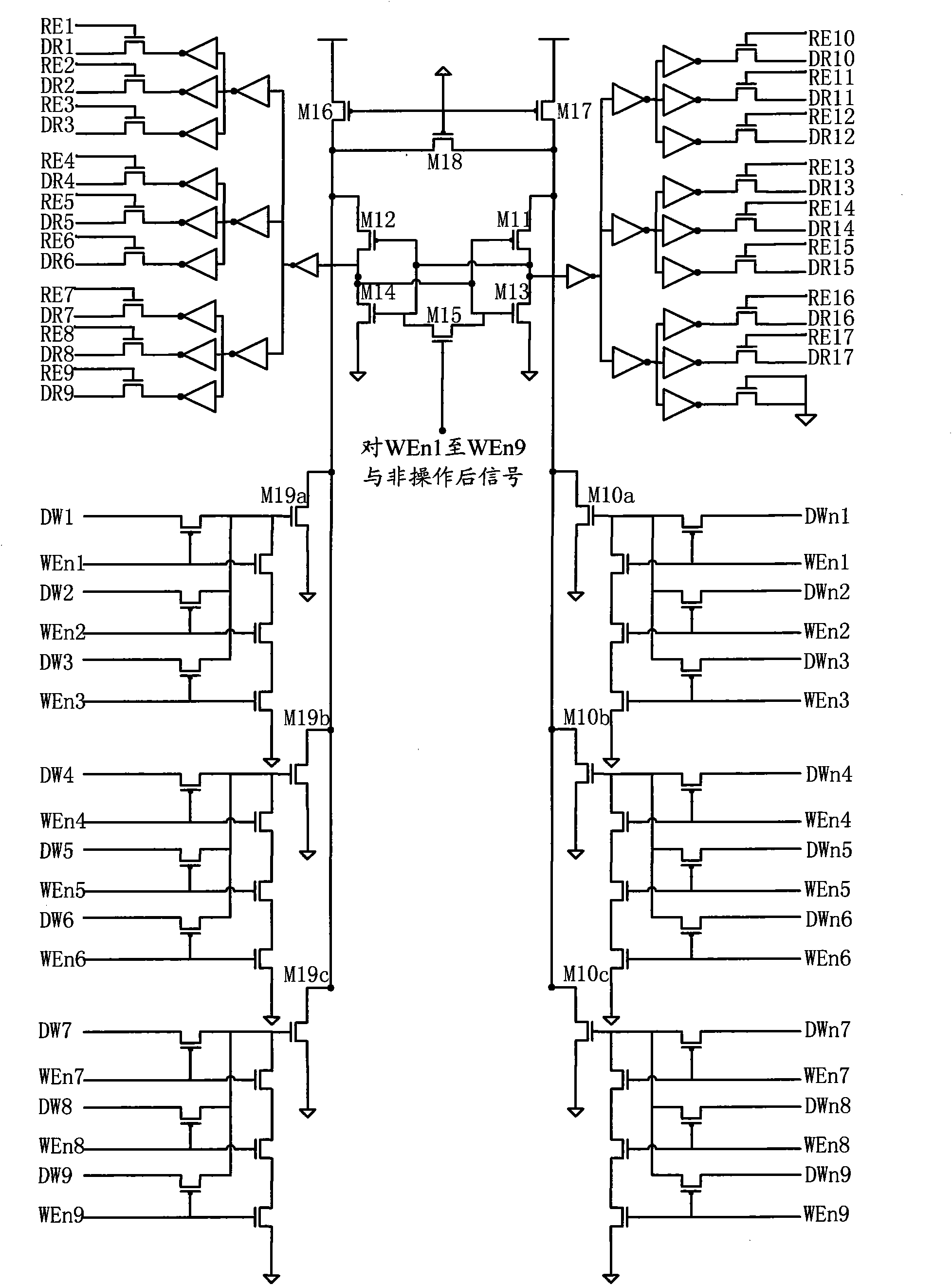

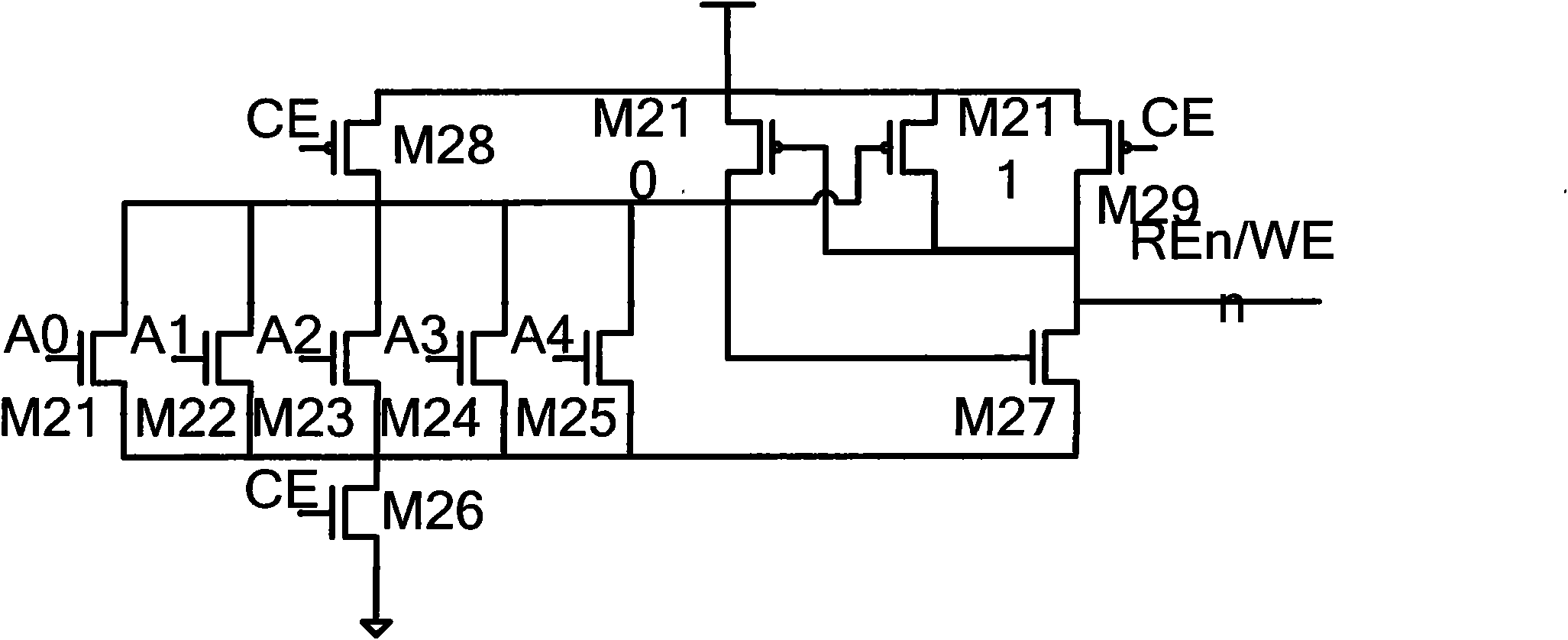

Multiport register file circuit

Inactive · CN101916586A · Reduce area · Reduce the number of memory accesses · Digital storage · Hemt circuits · Sense amplifier

The invention provides a multiport register file circuit comprising a write address decoder circuit, a read address decoder circuit, a first memory array, a second memory array, a first input data buffer circuit, a third input data buffer circuit, a first sense amplifier array, a third sense amplifier array, a second input data buffer circuit, a fourth input data buffer circuit, a second sense amplifier array and a fourth sense amplifier array, wherein the first memory array and the second memory array are respectively connected with the write address decoder circuit and the read address decoder circuit, the first input data buffer circuit and the third input data buffer circuit are mutually reversed and are connected with the first memory array, the first sense amplifier array and the third sense amplifier array are connected with the first memory array, the second input data buffer circuit and the fourth input data buffer circuit are mutually reversed and are connected with the second memory array, and the second sense amplifier array and the fourth sense amplifier array are connected with the second memory array. The multiport register file circuit can supply 17 read data ports and 9 write data ports at the same time, and each port has 32-bit data signals, thereby the multiport register file circuit is capable of being applied into a digital signal processor of a very long instruction word structure.

Owner:TSINGHUA UNIV

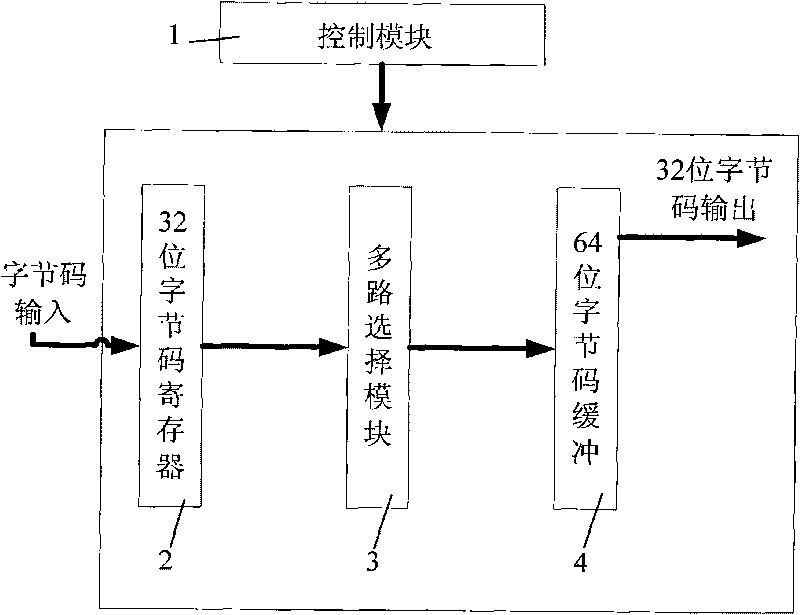

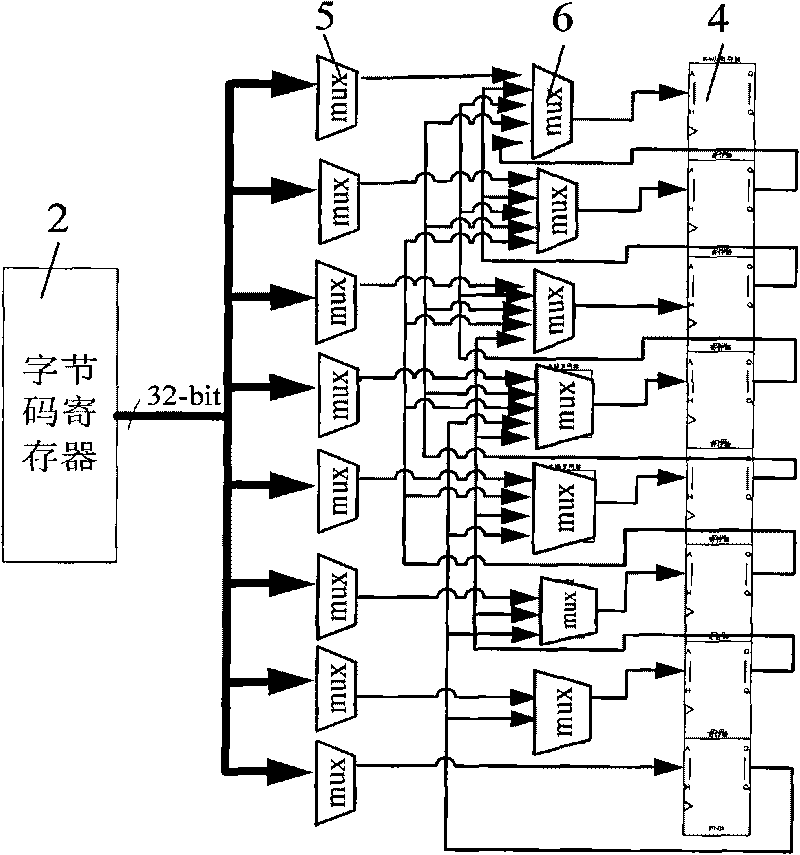

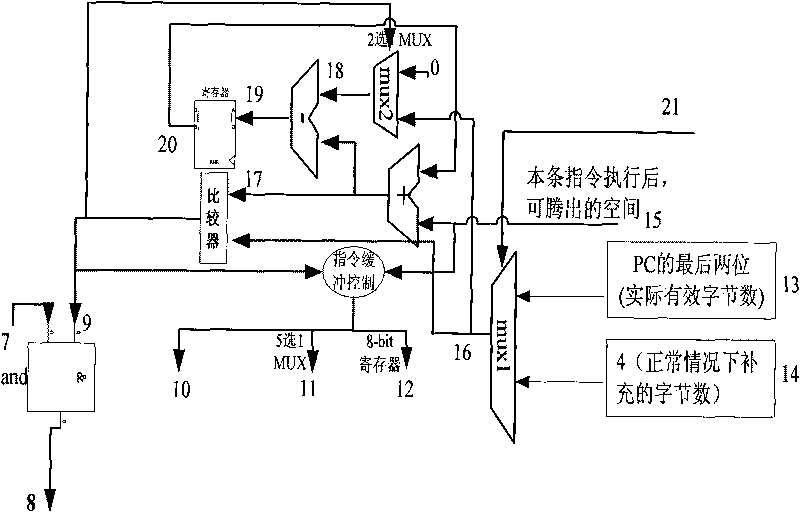

Byte code buffer device for improving instruction fetch bandwidth of Java processor and using method thereof

Inactive · CN101699391A · Avoid the need · Reduce the number of memory accesses · Architecture with single central processing unit · Machine execution arrangements · Instruction memory · Processor register

The invention relates to a byte code buffer device for improving the instruction fetch bandwidth of a Java processor and a method of using it. A byte code register, a multi-path selection module, and a byte code buffer are connected in sequence; the input of the byte code register is connected to an instruction memory, and the output of the byte code buffer is connected to the decoding stage of the Java processor; the input of a control module is connected to the decoding stage of the Java processor, and its output is connected to the byte code register, the multi-path selection module, and the byte code buffer respectively. The byte code register has 32 bits, the byte code buffer has 64 bits, and the high 4 bytes of the byte code buffer are connected to the decoding stage of the Java processor. When the available space of the byte code buffer is not less than 4 bytes, the device reads 4 bytes from the register and transfers them through the multi-path selection module to the correct position in the buffer, so that the byte code to be executed always lies entirely in the high bytes, thereby reducing the number of memory accesses and improving the instruction fetch bandwidth.

Owner:JIANGNAN UNIV

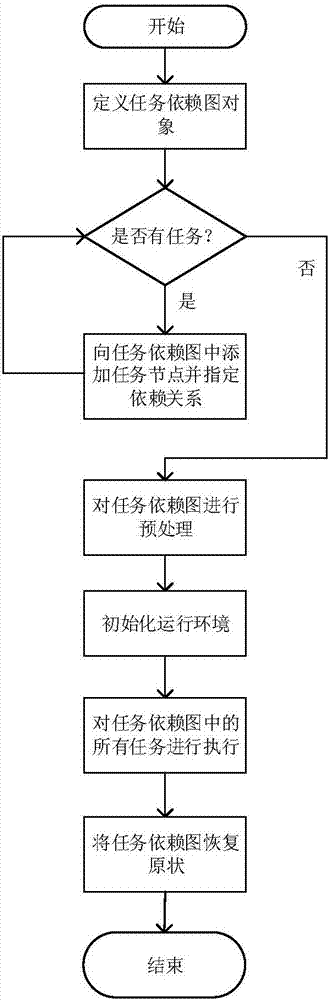

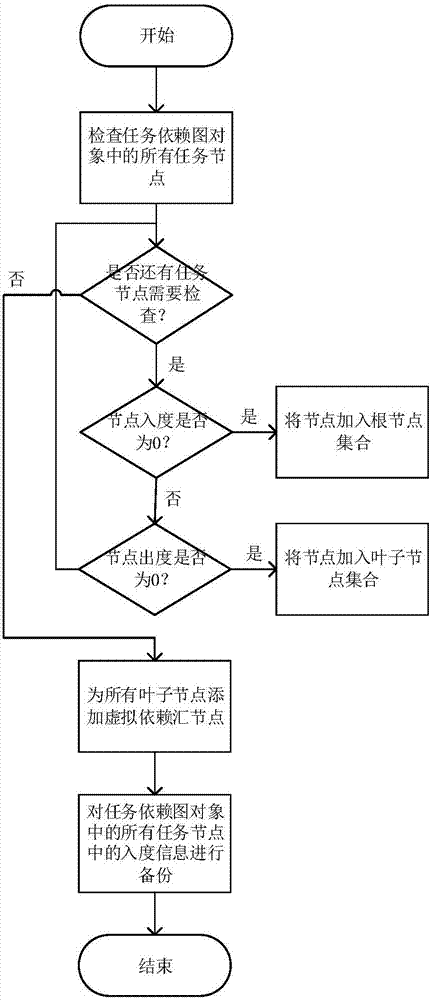

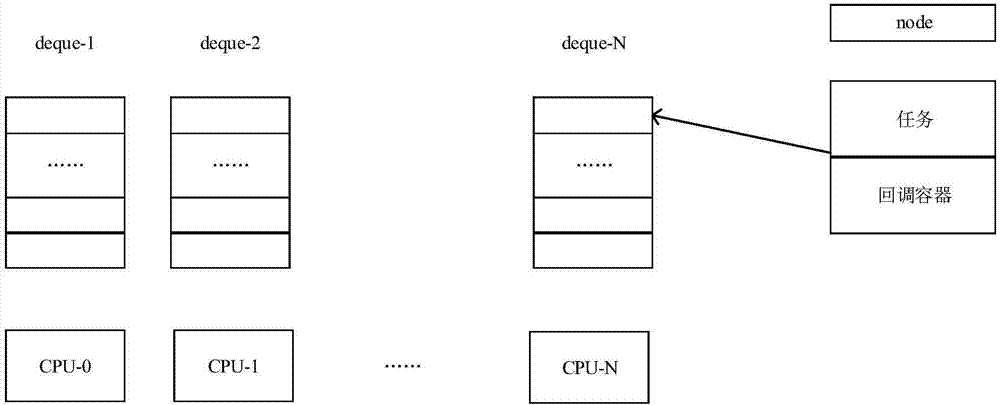

Task scheduling method and system based on task stealing

Active · CN107220111A · To achieve load balancing · Lower latency · Program initiation/switching · Resource allocation · Thread pool · Task dependency

The invention discloses a task scheduling method and system based on task stealing. The method comprises the steps that a task dependence graph is constructed, and a dependence point serves as a callback function to be registered into a callback container of a depended point; a lock-free double-end queue is allocated to threads in a thread pool respectively and is emptied, and root nodes are put at the bottoms of the lock-free double-end queues of the threads in a polling mode; if the lock-free double-end queues of the threads are not empty, the nodes are taken out of the bottoms of the lock-free double-end queues and are executed; if the lock-free double-end queues of the threads are empty, the nodes are stolen from the tops of the lock-free double-end queues of other threads, the stolen nodes are pressed into the bottoms of the lock-free double-end queues of the threads and are taken out for execution; after execution of all node tasks is completed, the in-degrees of the nodes in the task dependence graph are recovered to original values, and blockage to a main thread is ended. The task scheduling method and the system are oriented to large task-level parallel application programs, and the performance of traditional task-level parallel application programs can be effectively improved.

Owner:HUAZHONG UNIV OF SCI & TECH

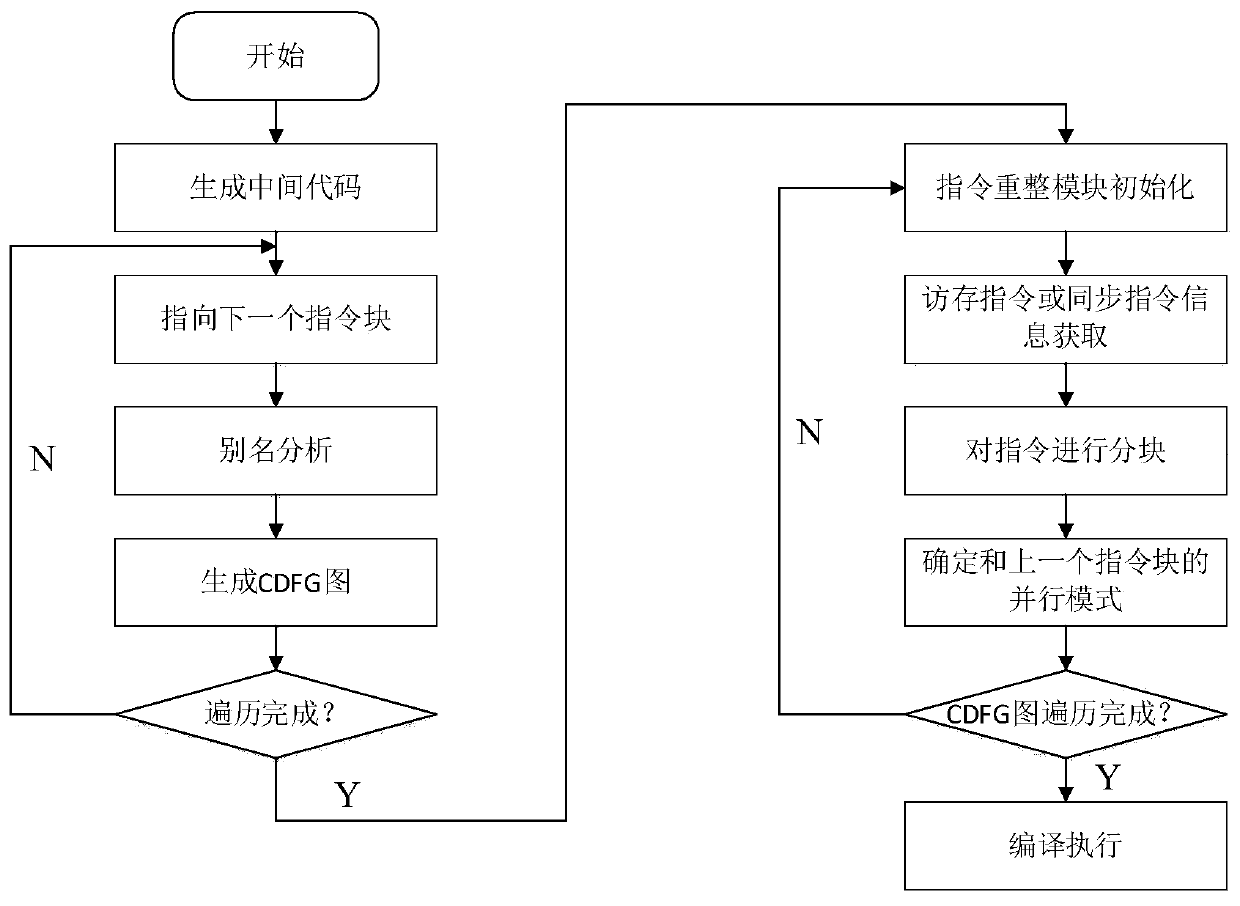

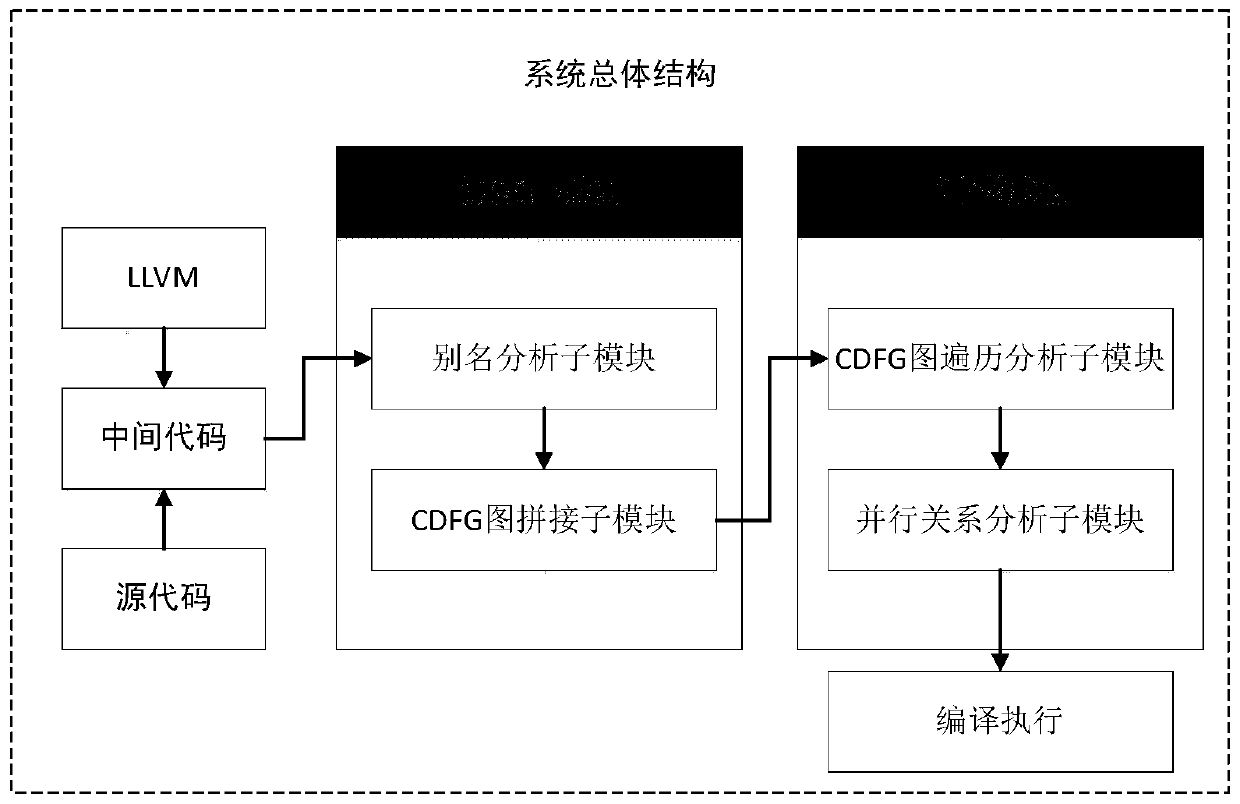

FPGA graph processing acceleration method and system based on OpenCL

Active · CN110852930A · Reduce the number of memory accesses · Reduce memory access latency · Concurrent instruction execution · Processor architectures/configuration · Code generation · Computer architecture

The invention discloses an FPGA graph processing acceleration method and system based on OpenCL, and belongs to the field of big data processing. The method comprises the following steps: generating a complete control data flow graph (CDFG) from the intermediate representation (IR) obtained by disassembly; repartitioning the complete CDFG according to the Load and Store instructions to obtain new CDFG instruction blocks, and determining the parallel mode between the blocks; analyzing the Load and Store instructions in all the new CDFG instruction blocks to determine how the BRAM on the FPGA chip is partitioned; and reorganizing the on-chip memory of the FPGA according to the BRAM partitioning, translating all new CDFG instruction blocks into the corresponding hardware description language according to the parallel mode between the blocks, compiling them into a binary file that can run on the FPGA, and programming the binary file onto the FPGA for execution. By pipelining and by reordering the instructions within each instruction block, the number of memory accesses and the memory access latency are reduced; by partitioning on-chip storage, write conflicts between different pipelines on the same memory block are reduced, which improves system efficiency.

Owner:HUAZHONG UNIV OF SCI & TECH

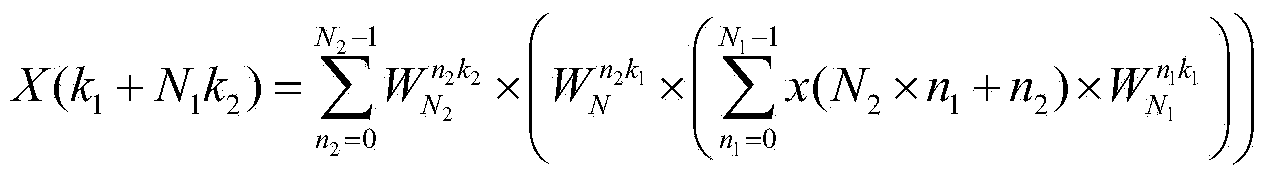

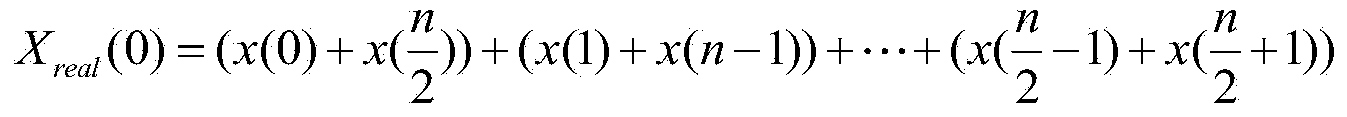

FFTW3 optimization method based on loongson 3B processor

Active | CN103902506A | Improve performance | Reduce the number of memory accesses | Complex mathematical operations | Parallel computing | Discrete Fourier transform

The invention discloses an FFTW3 optimization method based on the Loongson 3B processor. For complex discrete Fourier transforms with the calculation scale being a sum, the method optimizes the computation using vector instructions together with the Cooley-Tukey algorithm; for real discrete Fourier transforms, it optimizes the computation using vector instructions together with separate processing of the real and imaginary parts. The method effectively improves the running performance of FFTW3 on the Loongson 3B processor, so that FFTW3 can be used efficiently on that processor.

Owner:INST OF ADVANCED TECH UNIV OF SCI & TECH OF CHINA
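
For readers unfamiliar with the Cooley-Tukey algorithm named in the abstract above, the following is a textbook recursive radix-2 sketch in plain Python. It is not FFTW3 code and contains none of the Loongson 3B vector-instruction optimizations; the power-of-two length restriction and the function names `fft` and `naive_dft` are assumptions of this illustration.

```python
import cmath


def fft(x):
    """Recursive radix-2 Cooley-Tukey FFT (length must be a power of two)."""
    n = len(x)
    if n <= 1:
        return list(x)
    if n % 2:
        raise ValueError("length must be a power of two")
    # Split into even- and odd-indexed halves and transform each.
    even = fft(x[0::2])
    odd = fft(x[1::2])
    out = [0j] * n
    for k in range(n // 2):
        # Twiddle factor combines the two half-size transforms.
        t = cmath.exp(-2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + t
        out[k + n // 2] = even[k] - t
    return out


def naive_dft(x):
    """Direct O(n^2) DFT, used only to check the FFT result."""
    n = len(x)
    return [
        sum(x[j] * cmath.exp(-2j * cmath.pi * j * k / n) for j in range(n))
        for k in range(n)
    ]
```

The recursion halves the problem at each level, which is where the reduction from O(n²) to O(n log n) operations comes from; production libraries replace the recursion with generated, vectorized kernels.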

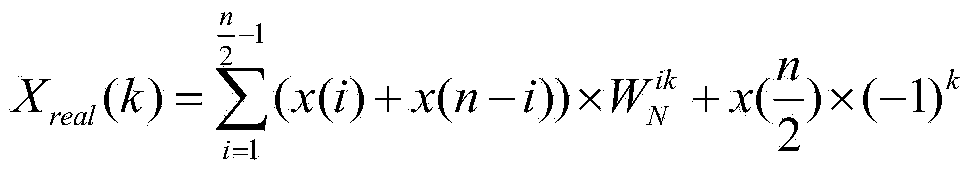

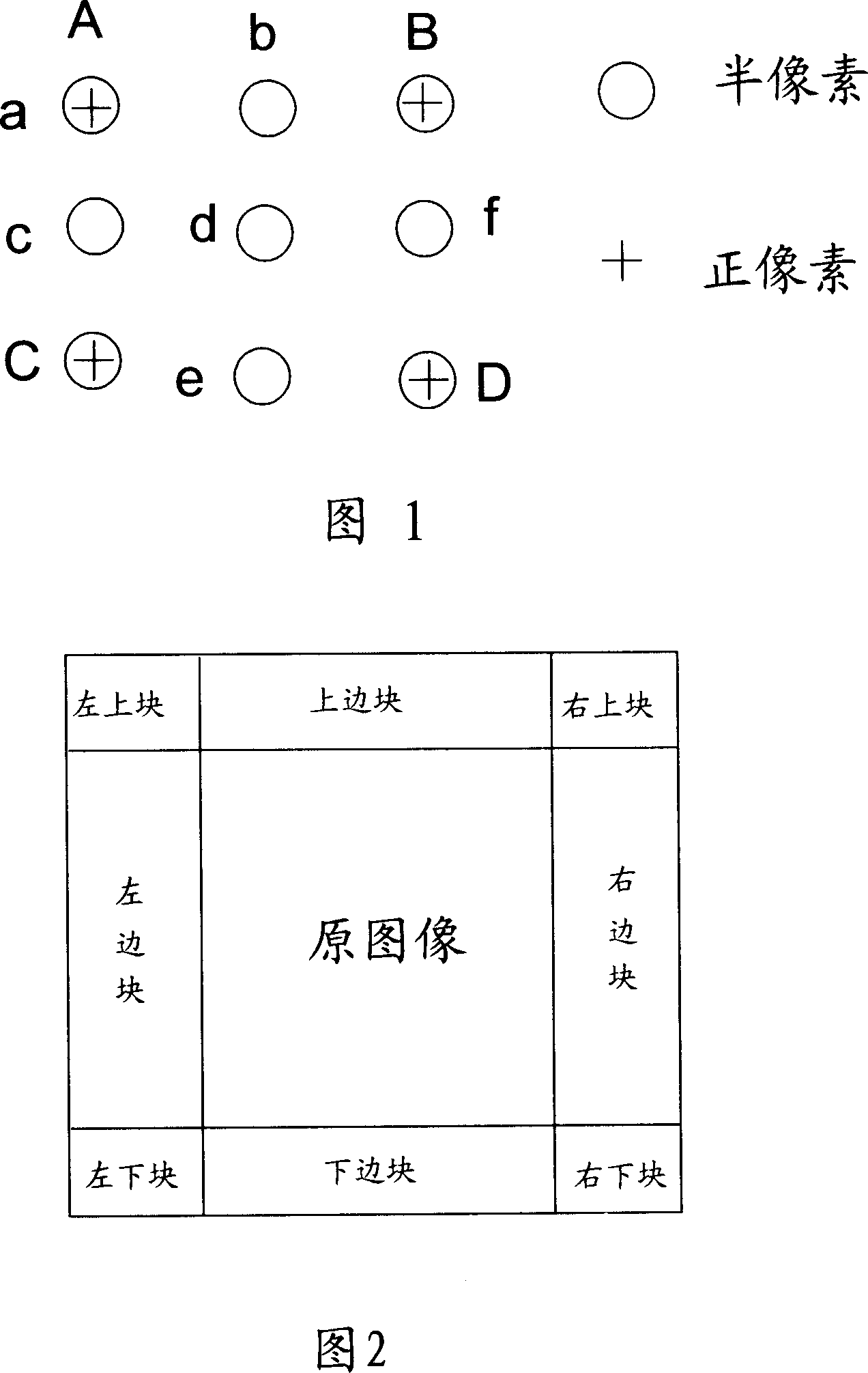

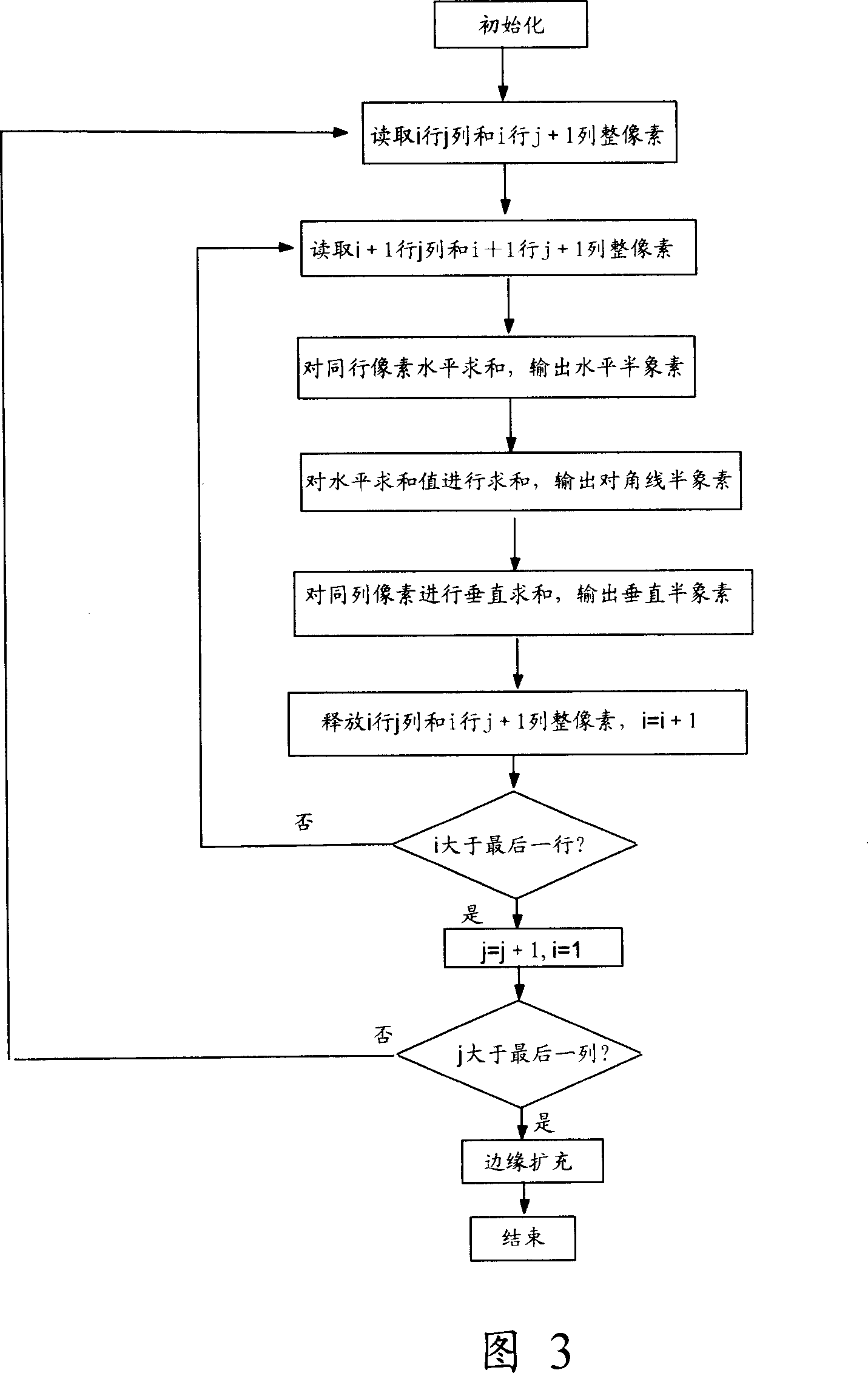

Semi-pixel element image interpolation method

Inactive | CN101001384A | Simplified edge augmentation method | Reduce computation | Television systems | Digital video signal modification | Diagonal | Computer vision

This invention relates to a half-pixel image interpolation method comprising: reading four full pixels from two adjacent rows and two adjacent columns of a full-pixel image, and computing the half pixels from the intermediate sums of the pixels in the same row and in the same column among those four full-pixel points. The method uses the relationship among the diagonal, horizontal and vertical half pixels to traverse the full-pixel image only once and output the horizontal, vertical and diagonal half-pixel images, which reduces the number of memory accesses and in turn the encoder's computational load. The invention also provides a simplified method for forming edge-block images.

Owner:YULONG COMPUTER TELECOMM SCI (SHENZHEN) CO LTD
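
A minimal sketch of the single-pass idea in the abstract above: each 2×2 block of full pixels is read once, and its row and column sums are reused for all three half-pixel outputs. The bilinear averaging with round-to-nearest used here is an assumption (the abstract does not give the exact formula), and the function name `half_pixel_planes` is hypothetical.

```python
def half_pixel_planes(img):
    """Return (horizontal, vertical, diagonal) half-pixel planes.

    img is a list of rows of integer pixel values. Each output plane
    has one sample per 2x2 block of neighbouring full pixels.
    """
    rows, cols = len(img), len(img[0])
    horiz = [[0] * (cols - 1) for _ in range(rows - 1)]
    vert = [[0] * (cols - 1) for _ in range(rows - 1)]
    diag = [[0] * (cols - 1) for _ in range(rows - 1)]
    for y in range(rows - 1):
        for x in range(cols - 1):
            # Read the four full pixels of the block once.
            a, b = img[y][x], img[y][x + 1]
            c, d = img[y + 1][x], img[y + 1][x + 1]
            top = a + b      # intermediate sum along the upper row
            bottom = c + d   # intermediate sum along the lower row
            left = a + c     # intermediate sum along the left column
            horiz[y][x] = (top + 1) >> 1          # between a and b
            vert[y][x] = (left + 1) >> 1          # between a and c
            diag[y][x] = (top + bottom + 2) >> 2  # centre of the block
    return horiz, vert, diag
```

The diagonal sample reuses `top` and `bottom` rather than re-reading the pixels, which is the kind of sharing between the three outputs that a one-pass traversal makes possible.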

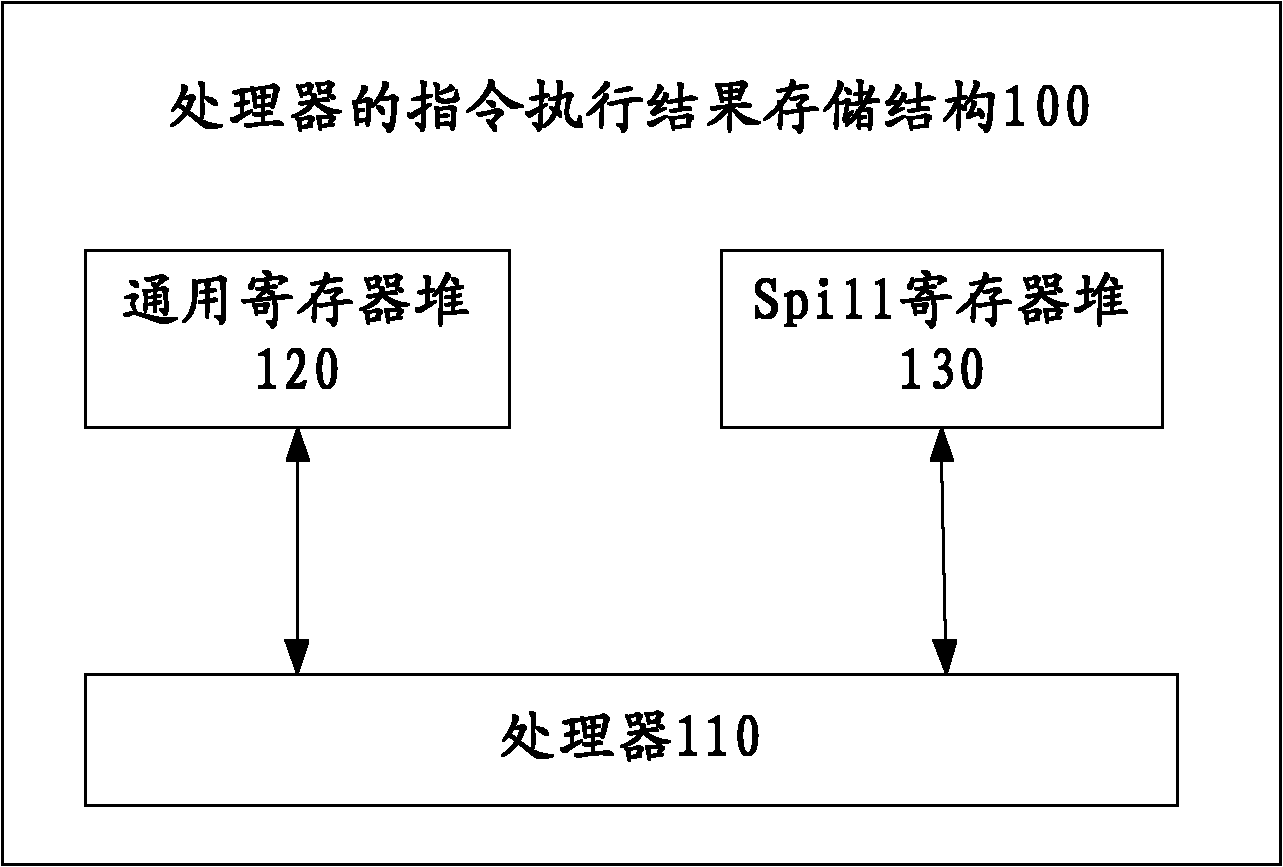

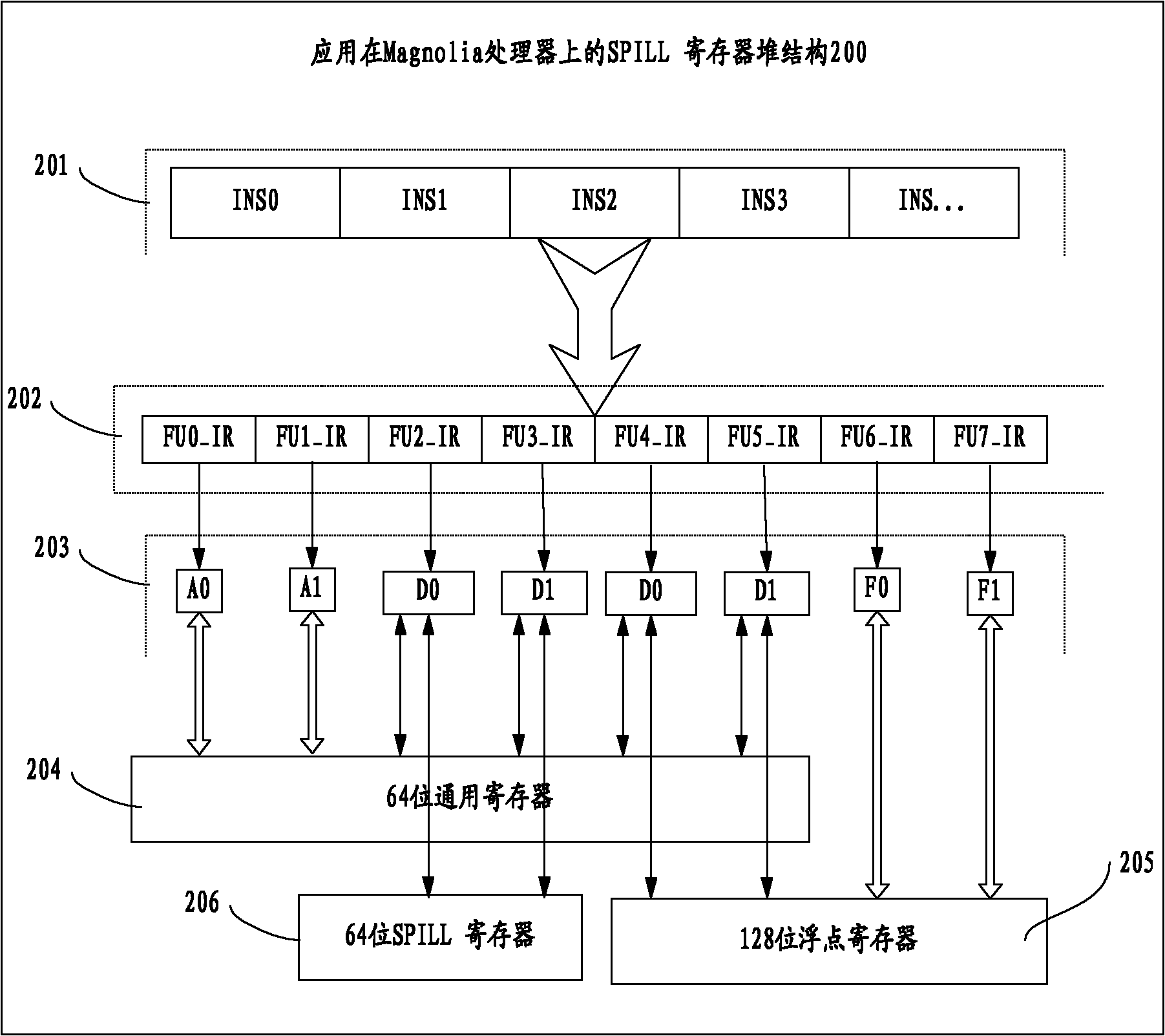

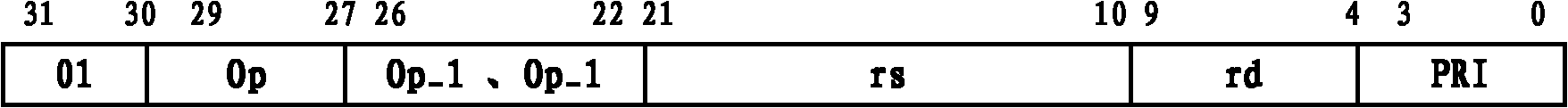

Command executing result storage structure for processor

Inactive | CN102063287A | Reduce the number of memory accesses | Reduce processing time | Memory systems | Machine execution arrangements | General purpose | Processor register

The invention provides a command executing result storage structure for a processor, which comprises the processor, a general purpose register file and a SPILL register file. The general purpose register file and the SPILL register file are each connected with the processor. When the volume of the processor's command executing result data is larger than the storage space of the general purpose register file, one part of the command executing results is saved in the general purpose register file and the other part is saved in the SPILL register file. With this storage structure, when a register spill occurs, the number of memory accesses and the energy consumption can be effectively reduced, the spilled data can be quickly saved in the SPILL register file, and the executing efficiency of the processor can be improved.

Owner:TSINGHUA UNIV

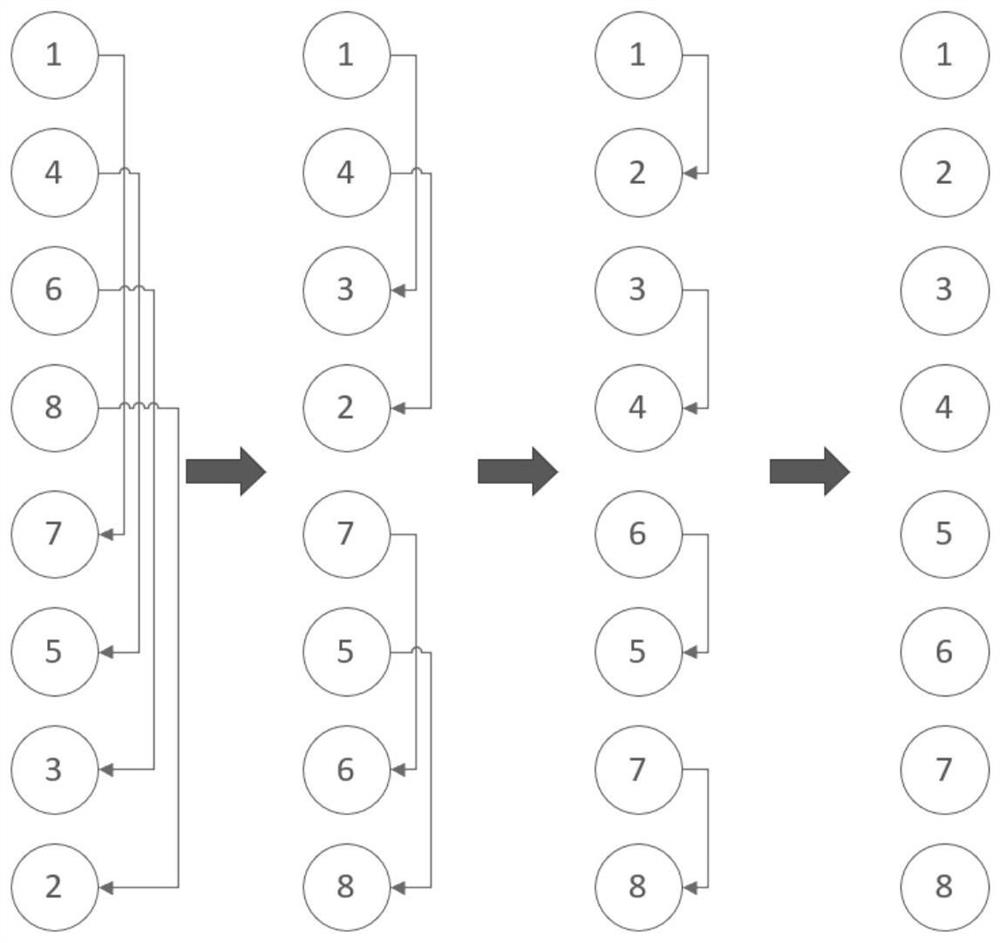

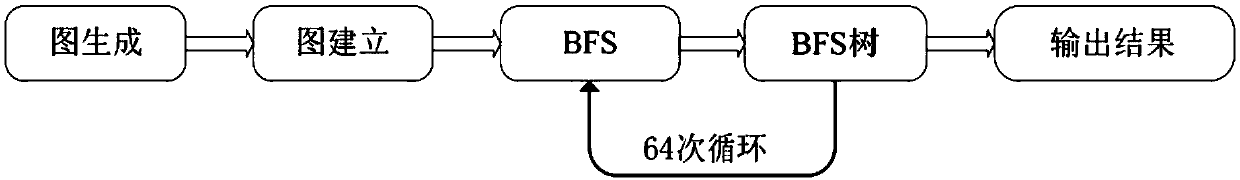

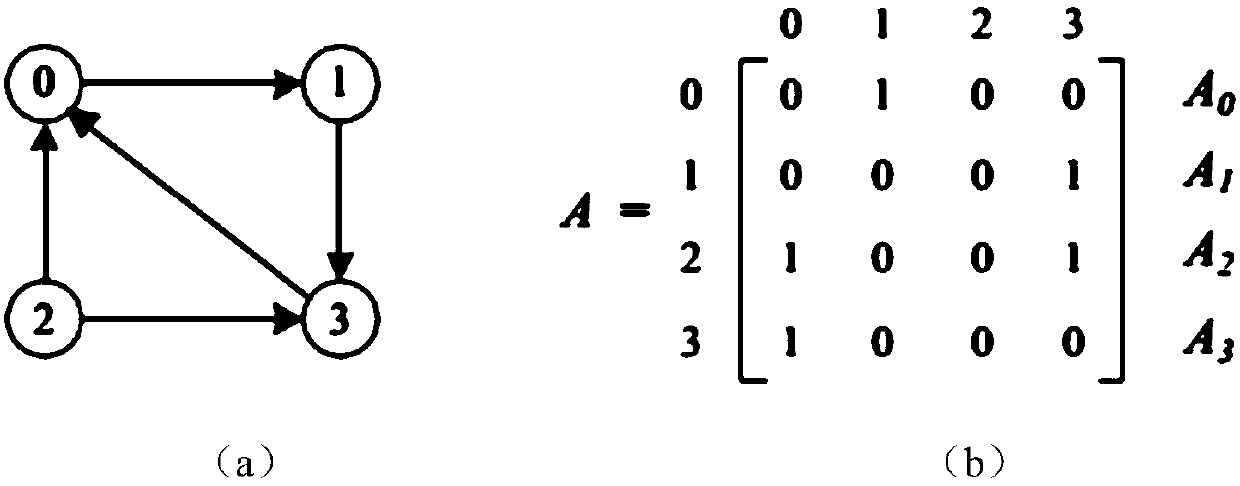

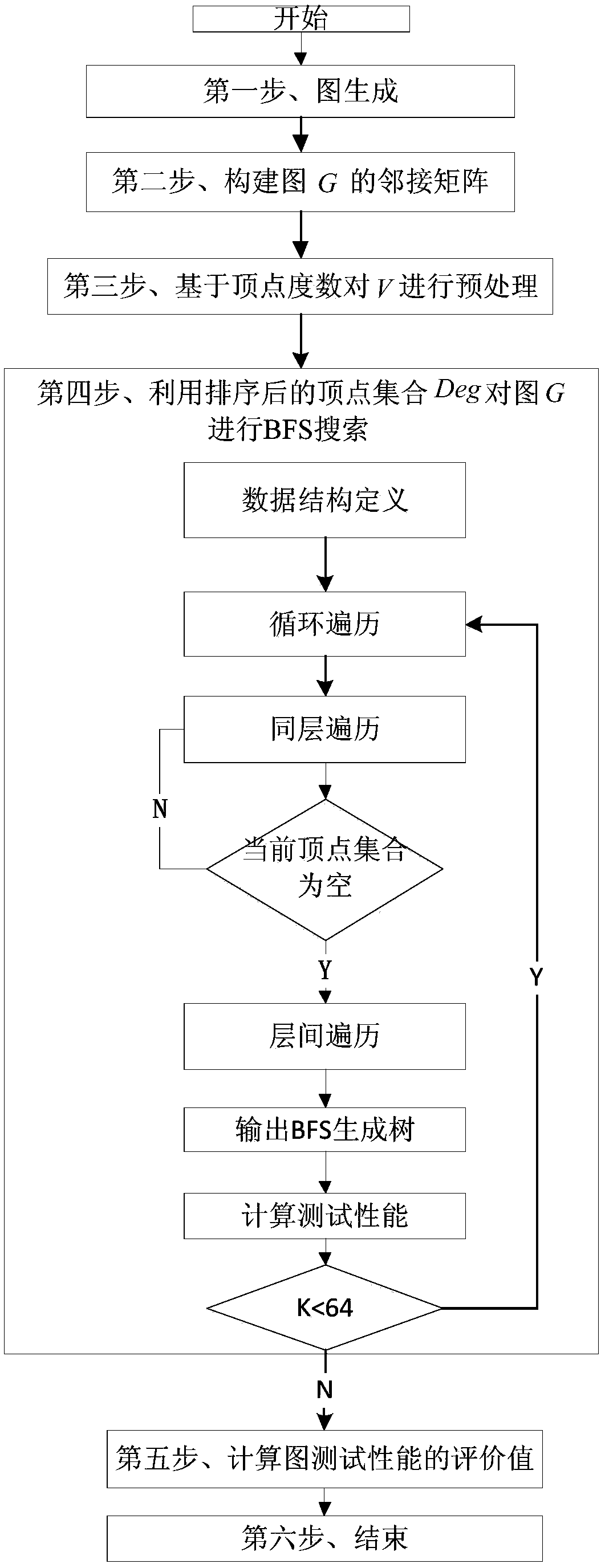

Super computer big data processing capability testing method based on vertex reordering

Active | CN109656798A | Avoid invalid memory access | Improve hit rate | Hardware monitoring | Other databases indexing | Supercomputer | Graph traversal

The invention discloses a supercomputer big data processing capability test method based on vertex reordering, which aims to speed up testing of a supercomputer's big data processing capability. The technical scheme comprises the following steps: generating a graph and constructing its adjacency matrix; sorting the vertices in the graph by vertex degree; and carrying out a BFS search on the graph using the sorted vertex set. The search exploits the fact that, within the same traversal layer, vertices with high degree are also more likely to be associated with an edge: when traversing and searching the child nodes of the nodes in the current layer's vertex set, the vertices with high degree are traversed and checked first, so that invalid memory accesses during traversal are reduced as far as possible. With this method, the hit rate of edge relations between nodes is improved, the number of invalid memory accesses is reduced, unnecessary memory accesses are avoided as far as possible, graph traversal is accelerated, and testing of the supercomputer's big data processing capability is sped up.

Owner:NAT UNIV OF DEFENSE TECH
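
One plausible reading of the degree-ordered traversal described above is sketched below: neighbour lists and each frontier are visited in descending degree order during a level-by-level BFS. The abstract does not specify the data layout or the exact search variant, so the adjacency-dictionary input and the function name `bfs_degree_ordered` are assumptions of this illustration.

```python
def bfs_degree_ordered(adj, source):
    """Level-synchronous BFS that checks high-degree vertices first.

    adj maps each integer vertex id to a list of neighbour ids.
    Returns (level, order): BFS depth per vertex and the visit order.
    """
    degree = {v: len(neighbours) for v, neighbours in adj.items()}

    def by_degree(v):
        # Highest degree first; vertex id breaks ties deterministically.
        return (-degree[v], v)

    # Reorder every neighbour list once, before the traversal starts.
    ordered = {v: sorted(ns, key=by_degree) for v, ns in adj.items()}

    level = {source: 0}
    order = [source]
    frontier = [source]
    while frontier:
        next_frontier = []
        for v in sorted(frontier, key=by_degree):
            for u in ordered[v]:
                if u not in level:  # first time this vertex is reached
                    level[u] = level[v] + 1
                    order.append(u)
                    next_frontier.append(u)
        frontier = next_frontier
    return level, order
```

The sketch only shows the ordering; measuring any reduction in invalid memory accesses would require the large generated graphs and supercomputer setting that the abstract refers to.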

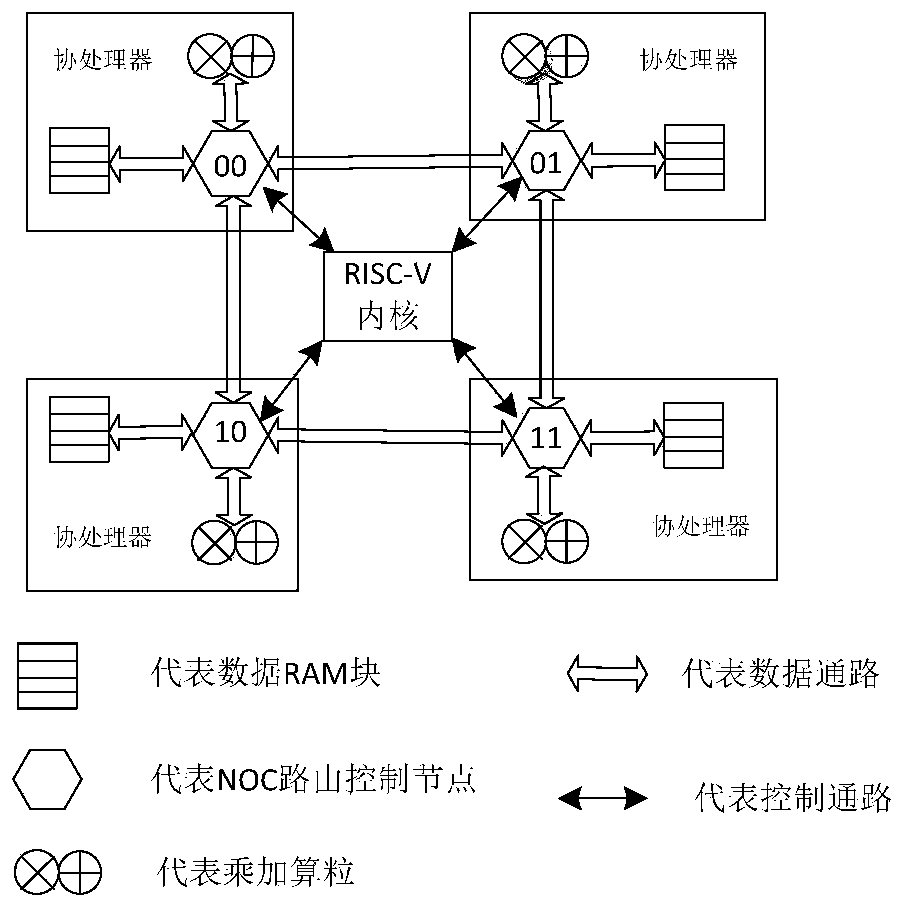

Near data stream computing acceleration array based on RISC-V

Pending | CN111159094A | Reduce the burden on | Realize near-data streaming computing | Multiple digital computer combinations | Electric digital data processing | Computational science | Coprocessor

The invention provides a near data stream computing acceleration array based on RISC-V. The near data stream computing acceleration array comprises a RISC-V core and an acceleration array which is arranged around the RISC-V core and is composed of a plurality of coprocessors. Each coprocessor comprises an NOC routing control node, a RAM block and a multiply-add particle. The RAM block caches the data to be calculated, the multiply-add particle performs multiply-accumulate calculation, and the NOC routing control node on the one hand interconnects with the other adjacent coprocessors and on the other hand connects to the data RAM block and the multiply-add particle. According to the method, the data to be calculated is stored dispersed across a plurality of RAM blocks, and the multiply-add calculation operators are placed as close as possible to the RAM blocks. Adjacent coprocessors are interconnected through an on-chip network structure, and a producer-consumer relationship is realized during calculation. A calculation process can therefore be split and mapped, and then carried out as a data stream flowing through the coprocessor acceleration array.

Owner:TIANJIN CHIP SEA INNOVATION TECH CO LTD +1

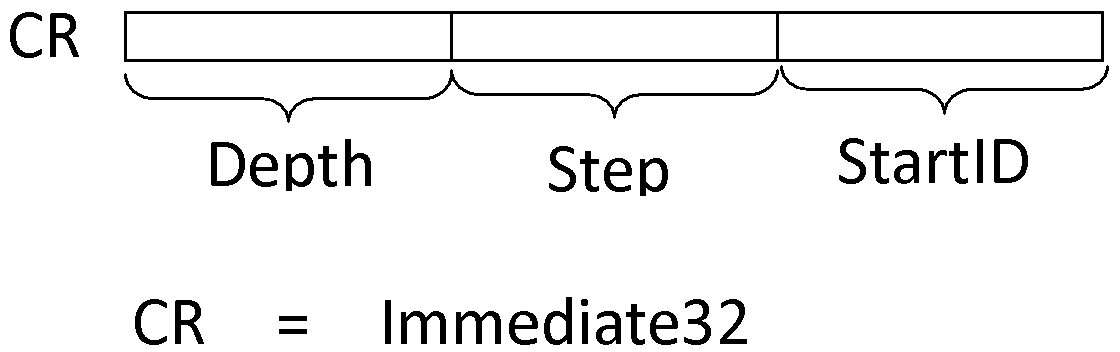

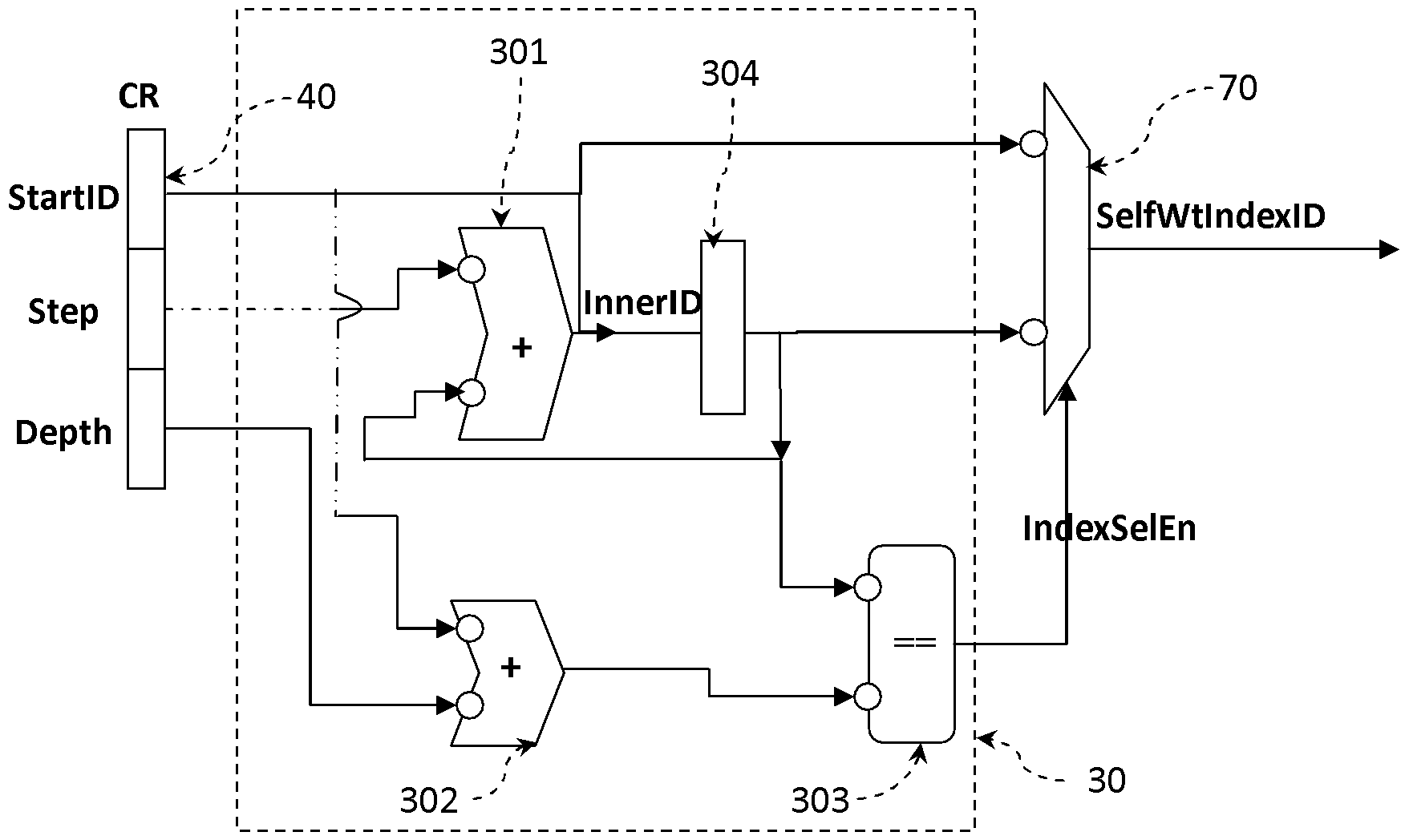

Self-indexing register file pile device

Active | CN103235762A | Avoid programming complexity | Improve convenience | Energy efficient ICT | Memory addressing/allocation/relocation | Index register | Register file

The invention discloses a self-indexing register file pile device. The self-indexing register file pile device comprises a register storage body and its peripheral logic, wherein the register storage body is configured to have a self-indexing region and a common region; the size of the self-indexing region and its starting register number can be configured flexibly, while the common region is indexed by literal register number. When a read or write start signal is issued, the register file pile device automatically calculates the index number currently required, and the read and write operations stay within the self-indexing region; when a read or write operation reaches the boundary of the self-indexing region, the next operation automatically wraps around to the starting position of the self-indexing region. The self-indexing register file pile device is convenient to program and can reduce the power consumption of a processor.

Owner:BEIJING SMART LOGIC TECH CO LTD
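
The wrap-around indexing described above can be modelled in a few lines. This is a behavioural sketch only, not the hardware design: the class name `SelfIndexingRegisterFile`, the method names, and the choice of a single index pointer shared by reads and writes are all assumptions, since the abstract does not say how the index is kept.

```python
class SelfIndexingRegisterFile:
    """Toy model of a register file with a self-indexing region."""

    def __init__(self, num_regs, region_start, region_size):
        if region_size <= 0 or region_start < 0:
            raise ValueError("invalid self-indexing region")
        if region_start + region_size > num_regs:
            raise ValueError("region does not fit in the register file")
        self.regs = [0] * num_regs
        self.start = region_start  # configurable starting register number
        self.size = region_size    # configurable region size
        self._offset = 0           # position inside the region

    def _next_index(self):
        # Compute the current index, then advance with wrap-around.
        index = self.start + self._offset
        self._offset = (self._offset + 1) % self.size
        return index

    def write_auto(self, value):
        """Write to the self-indexing region without naming a register."""
        self.regs[self._next_index()] = value

    def read_auto(self):
        """Read from the self-indexing region without naming a register."""
        return self.regs[self._next_index()]

    def write(self, number, value):
        """Common-region style access by literal register number."""
        self.regs[number] = value

    def read(self, number):
        return self.regs[number]
```

For example, with a region of three registers starting at register 2, the fourth `write_auto` call lands back on register 2, which is the boundary behaviour the abstract describes.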

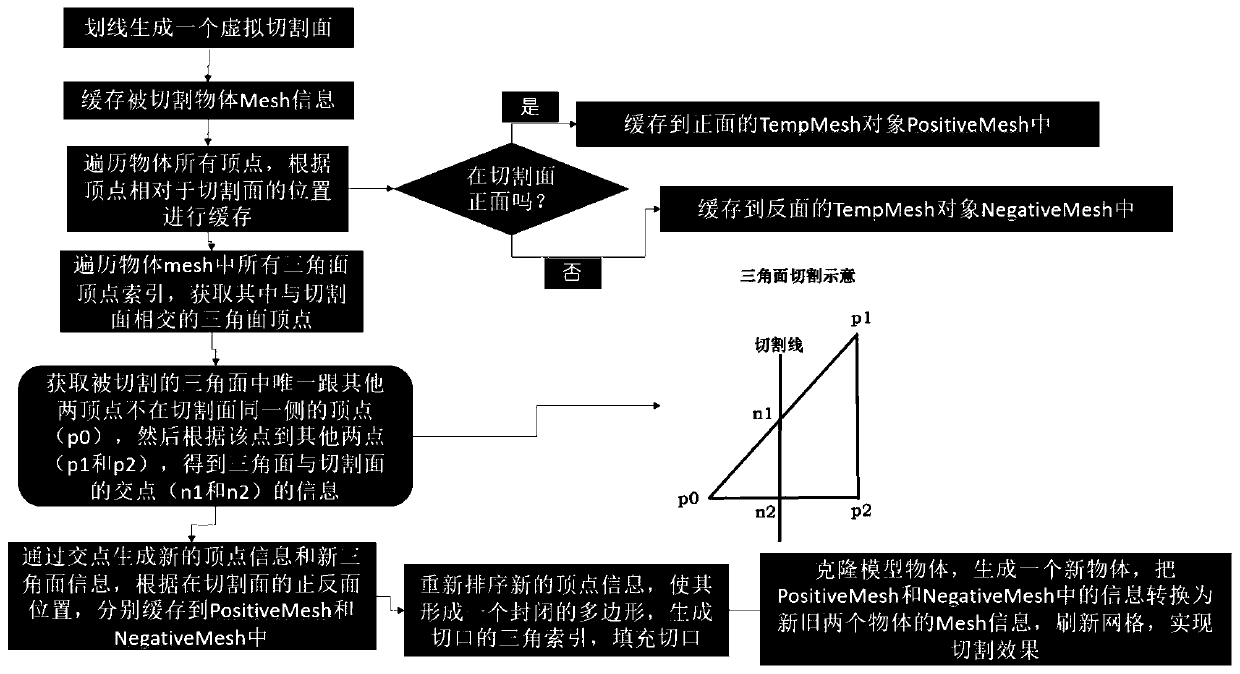

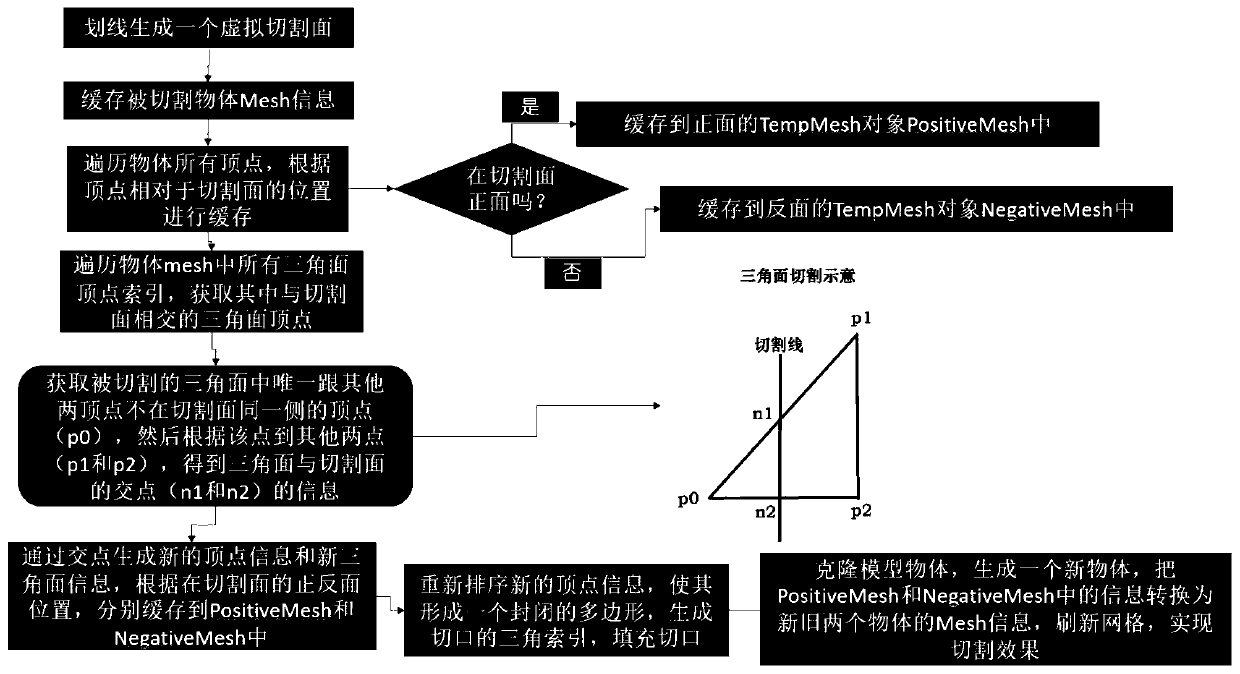

Cutting method based on Unity 3D model

Active | CN111145356A | Improve experience | Contribute to a solid perception | Image analysis | Input/output processes for data processing | Reduced model | Triacontagon

A cutting method based on a Unity 3D model comprises the following steps: S1, obtaining a cutting surface of the model, wherein the model comprises vertexes and triangular surfaces formed by every three vertexes; S2, obtaining a triangular surface intersecting with the cutting surface in the model; S3, emitting rays to the other two vertexes from the vertex on one side of the intersecting triangular surface, obtaining an intersection point with the cutting surface, constructing first-class new triangular surfaces located on the two sides based on the intersection point, and generating new vertex information and first-class new triangular surface information through all the intersection points; S4, reordering the new vertex information to form a closed polygon on the profile, and generating a second type of new triangular surface filling profile; and S5, cloning the original model to generate a new model, the vertex information on the two sides of the cutting surface covering the original model and the new model respectively to generate independent sub-models on the two sides of the cutting surface, and moving the sub-models to realize a cutting separation effect. Flexible and free cutting and separating effects can be achieved, the cutting experience is improved, the model cutting operation is simplified, and the cutting process performance is improved.

Owner:GUANGDONG VTRON TECH CO LTD
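
Step S3 above (splitting one triangle that straddles the cutting surface) can be sketched as follows. This is plain Python rather than Unity code, it assumes the cutting surface is a plane given by a point and a normal, it ignores triangles with a vertex exactly on the plane, and the function names `signed_distance` and `cut_triangle` are hypothetical.

```python
def signed_distance(p, plane_point, normal):
    """Signed distance of point p from the plane (scaled by |normal|)."""
    return sum((pi - qi) * ni for pi, qi, ni in zip(p, plane_point, normal))


def cut_triangle(tri, plane_point, normal):
    """Split a triangle crossed by a plane.

    tri is a tuple of three (x, y, z) vertices. Returns None if the
    triangle does not strictly straddle the plane; otherwise returns
    (points, lone_side, other_side), where points are the two new
    intersection vertices and the other two items are lists of new
    triangles on each side of the plane.
    """
    d = [signed_distance(v, plane_point, normal) for v in tri]
    pos = [i for i in range(3) if d[i] > 0]
    neg = [i for i in range(3) if d[i] < 0]
    if not pos or not neg or len(pos) + len(neg) < 3:
        return None
    # The vertex that is alone on its side of the plane.
    lone = pos[0] if len(pos) == 1 else neg[0]
    # Keep the original cyclic order so the winding is preserved.
    others = [(lone + 1) % 3, (lone + 2) % 3]
    points = []
    for j in others:
        # Parameter along the edge lone -> j where the plane is hit.
        t = d[lone] / (d[lone] - d[j])
        points.append(
            tuple(a + t * (b - a) for a, b in zip(tri[lone], tri[j]))
        )
    a = tri[lone]
    b, c = tri[others[0]], tri[others[1]]
    p, q = points
    lone_side = [(a, p, q)]              # one triangle near the lone vertex
    other_side = [(p, b, c), (p, c, q)]  # quad split into two triangles
    return points, lone_side, other_side
```

Collecting the `points` from every intersected triangle yields the new vertices that step S4 reorders into a closed polygon; that capping step and the model cloning in S5 are not shown here.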


© 2025 PatSnap. All rights reserved.