234 results about How to "To achieve load balancing" patented technology

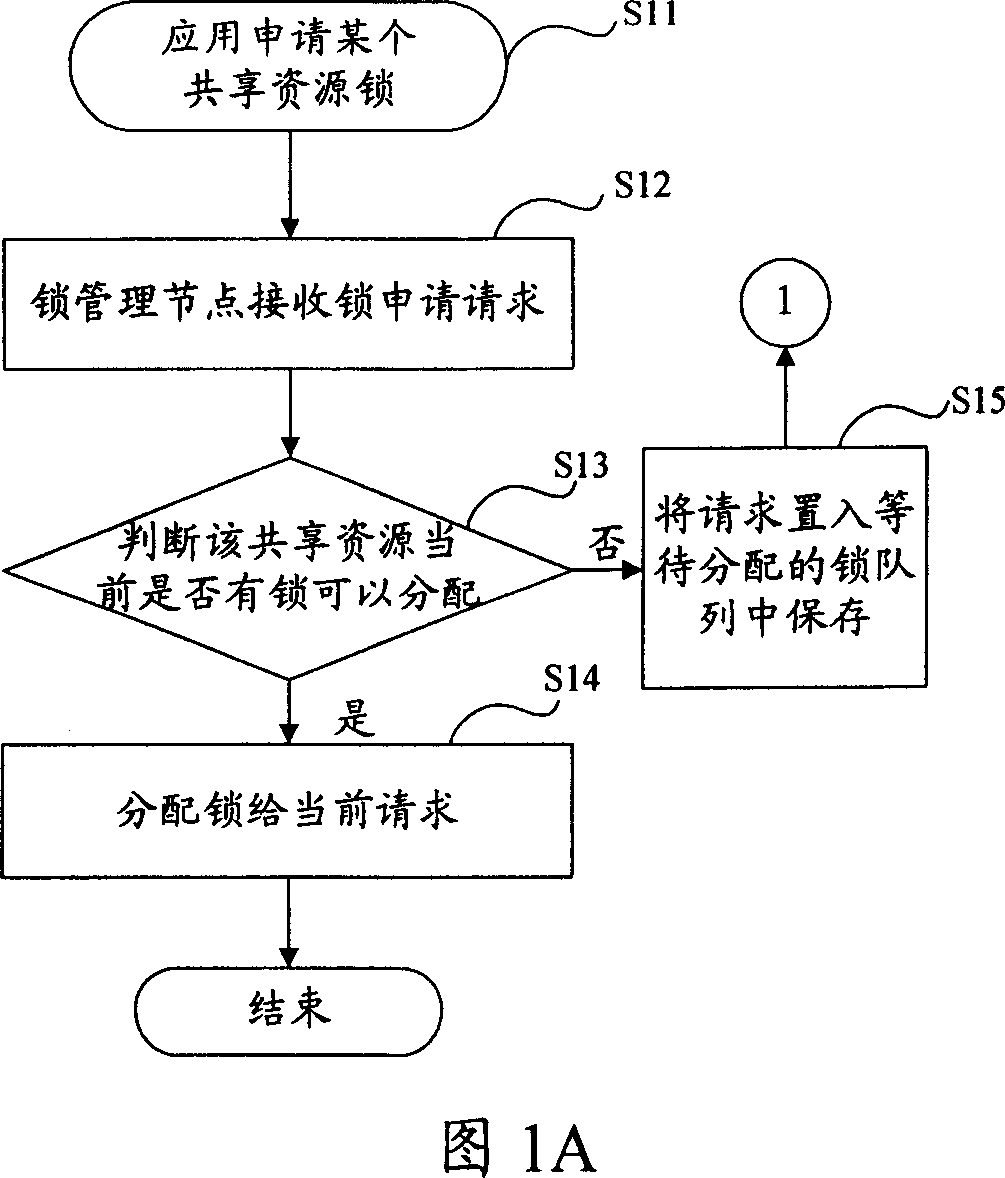

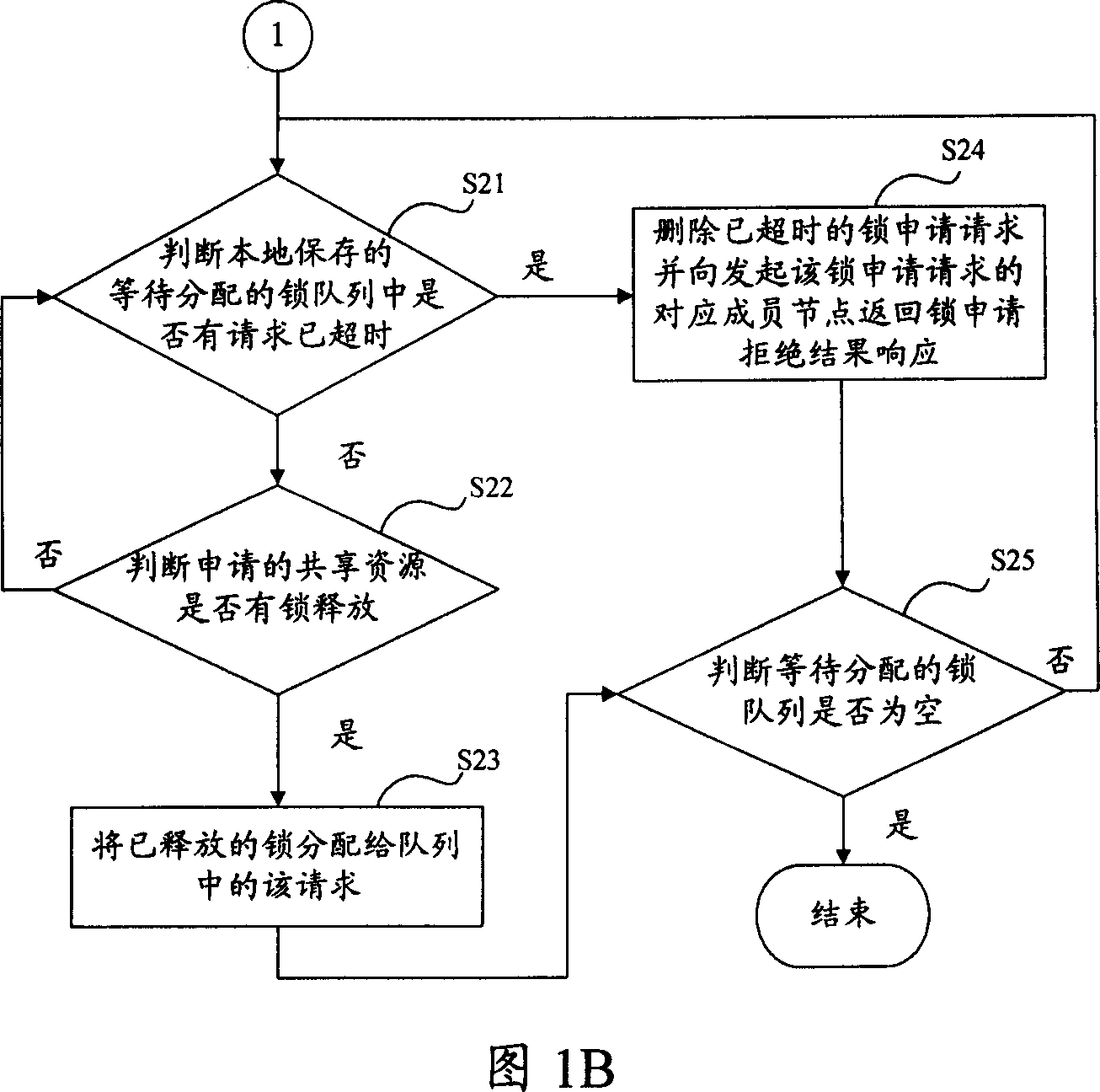

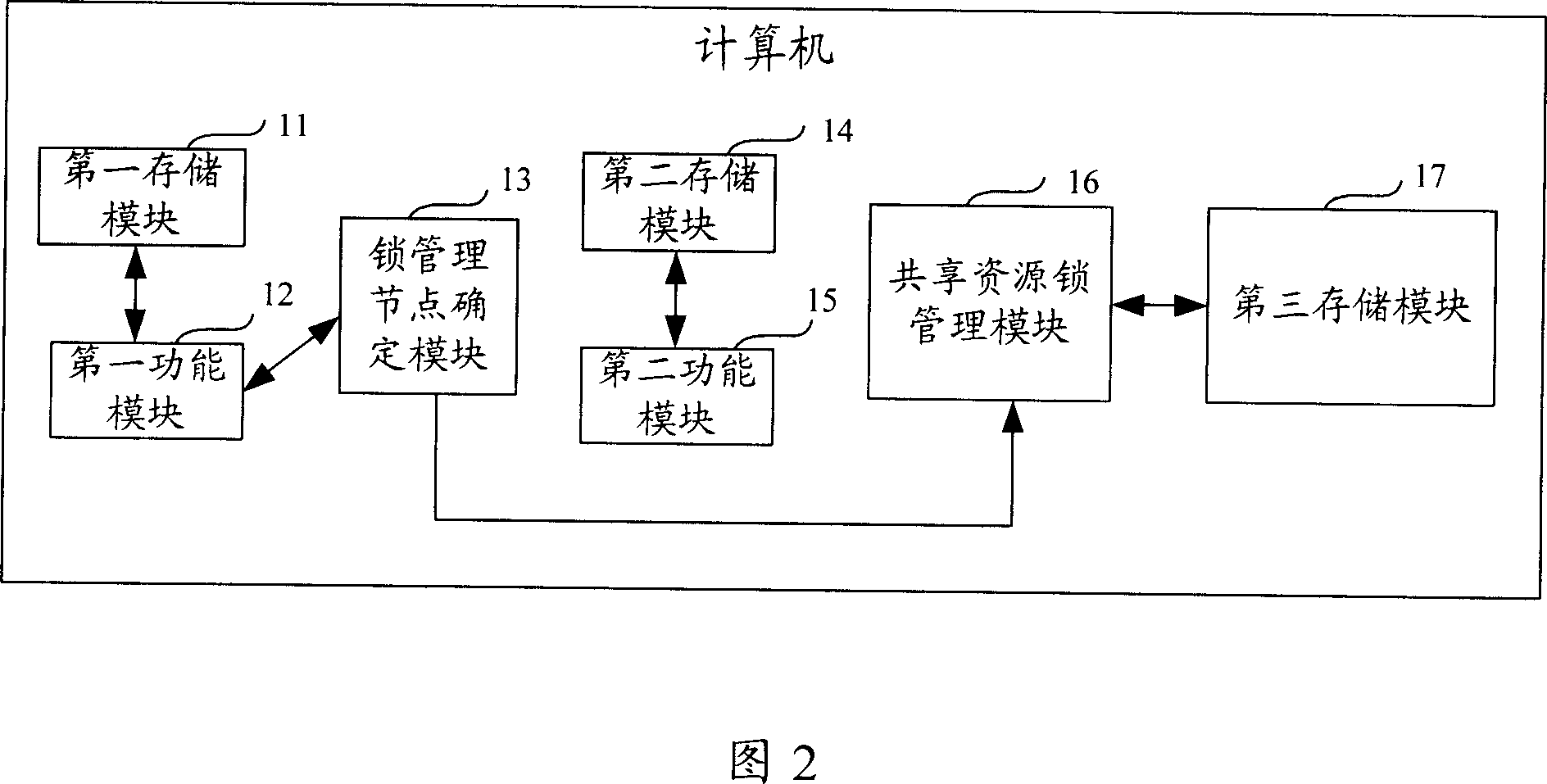

Method for distributing shared resource lock in computer cluster system and cluster system

Active; CN1945539A; Avoid performance bottlenecks; To achieve load balancing; Multiprogramming arrangements; Extensibility; Computer cluster

The invention discloses a method for distributing shared resource locks in a computer cluster system. More than one member node of the system is designated as a lock management node for the shared resources in the system, and each shared resource corresponds to one lock management node. When an application needs to request or release the lock of a shared resource, it sends the request to the lock management node corresponding to that shared resource, and that node completes the granting or release of the lock. The invention also discloses the corresponding structure of the computer cluster system. The invention can be used to provide a highly available lock service in the cluster, is highly scalable, and achieves load balancing.

Owner:HUAWEI TECH CO LTD +1
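
The lock distribution idea in the CN1945539A abstract above can be illustrated with a minimal sketch. The abstract only states that each shared resource corresponds to one lock management node; the hash-based mapping, the FIFO waiter queue, and all class and method names below are assumptions made for illustration, not the patented design.

```python
import hashlib


class LockManagerNode:
    """One lock management node (hypothetical); tracks holders and waiters."""

    def __init__(self, name):
        self.name = name
        self.holders = {}  # resource id -> applicant currently holding the lock
        self.waiters = {}  # resource id -> FIFO list of waiting applicants

    def acquire(self, resource, applicant):
        # Grant the lock if it is free, otherwise queue the applicant.
        if resource not in self.holders:
            self.holders[resource] = applicant
            return True
        self.waiters.setdefault(resource, []).append(applicant)
        return False

    def release(self, resource, applicant):
        # Only the current holder may release; the lock passes to the next waiter.
        if self.holders.get(resource) != applicant:
            raise ValueError("applicant does not hold this lock")
        queue = self.waiters.get(resource, [])
        if queue:
            self.holders[resource] = queue.pop(0)
        else:
            del self.holders[resource]
        return self.holders.get(resource)  # new holder, or None if free


class Cluster:
    """Routes every lock request to the manager node that owns the resource."""

    def __init__(self, node_names):
        self.nodes = [LockManagerNode(n) for n in node_names]

    def manager_for(self, resource):
        # Assumption: a stable hash spreads resources across manager nodes.
        digest = hashlib.sha256(resource.encode()).digest()
        return self.nodes[int.from_bytes(digest[:8], "big") % len(self.nodes)]

    def acquire(self, resource, applicant):
        return self.manager_for(resource).acquire(resource, applicant)

    def release(self, resource, applicant):
        return self.manager_for(resource).release(resource, applicant)
```

Because the mapping depends only on the resource identifier, every requester reaches the same manager node for a given resource, while different resources are spread over several nodes rather than a single lock server.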

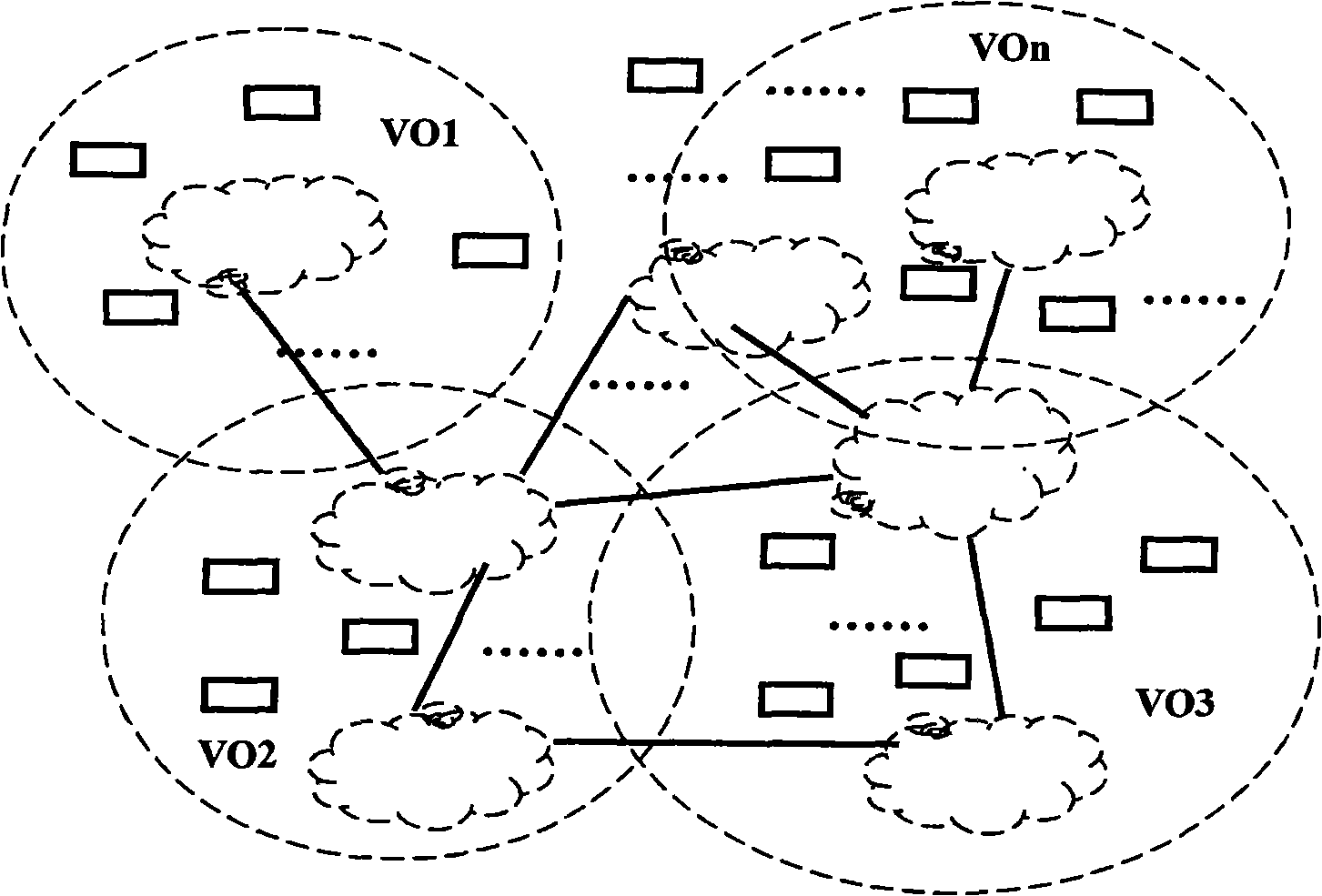

Grid calculation environment task cross-domain control method

Disclosed is a cross-domain job control method for a grid computing environment. The method uses a trust mechanism to control jobs in the grid: it evaluates the trustworthiness of the available grid resources, analyzes the job to be processed, and uses a mobile agent to transfer the job to an appropriate resource for execution, according to information provided by a control system in the grid. The proposal overcomes the poor reliability, unguaranteed response time, overly long running time on resources and imperfect fault management of other job control proposals. It adapts resource and job control to the grid, reduces grid traffic and improves network utilization to form a parallel job solution, thereby improving the utilization efficiency of grid resources and the execution efficiency of grid computing, speeding up task execution, and improving the accuracy of results so as to enhance the processing efficiency of distributed systems.

Owner:JIANGSU YITONG HIGH TECH

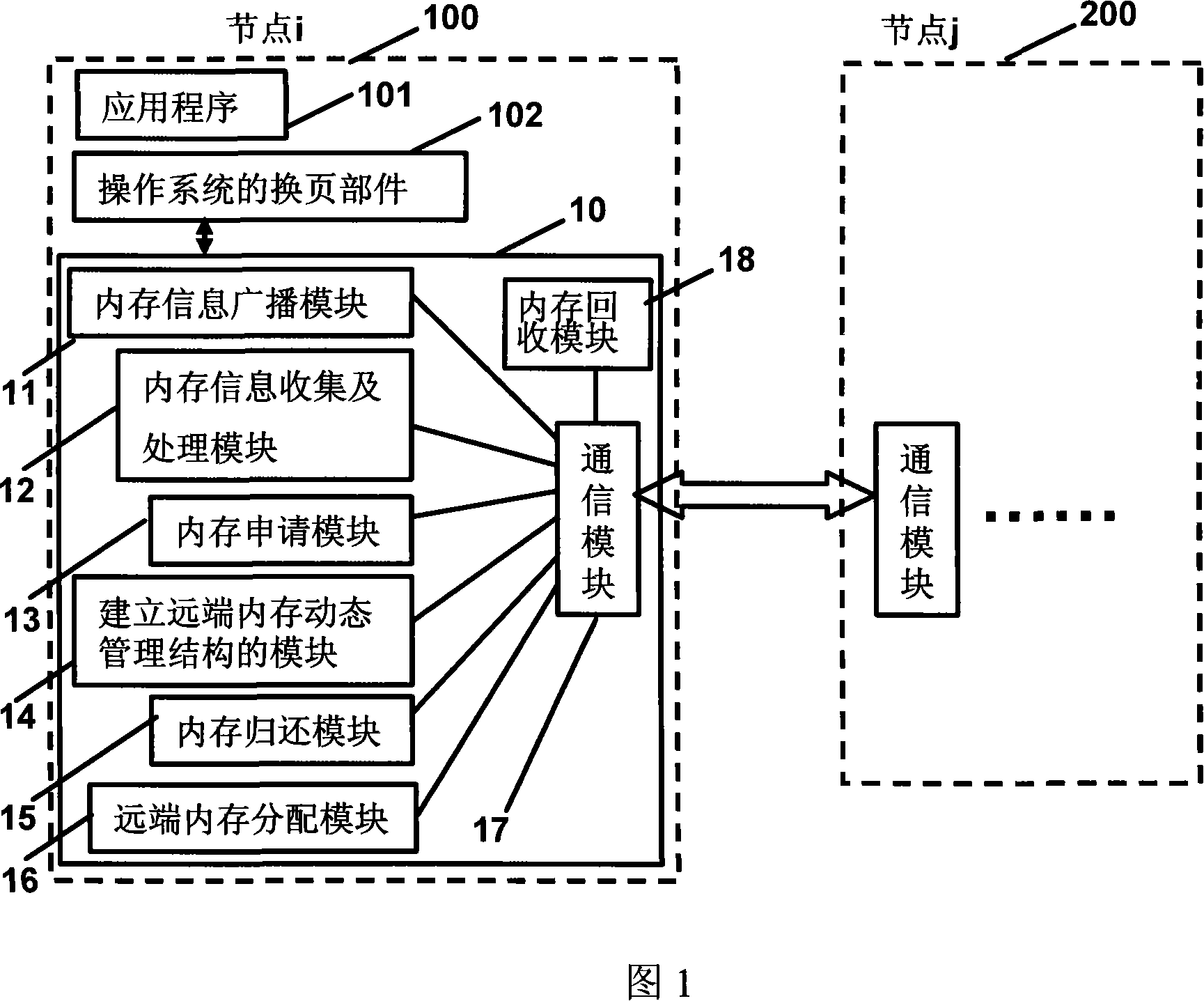

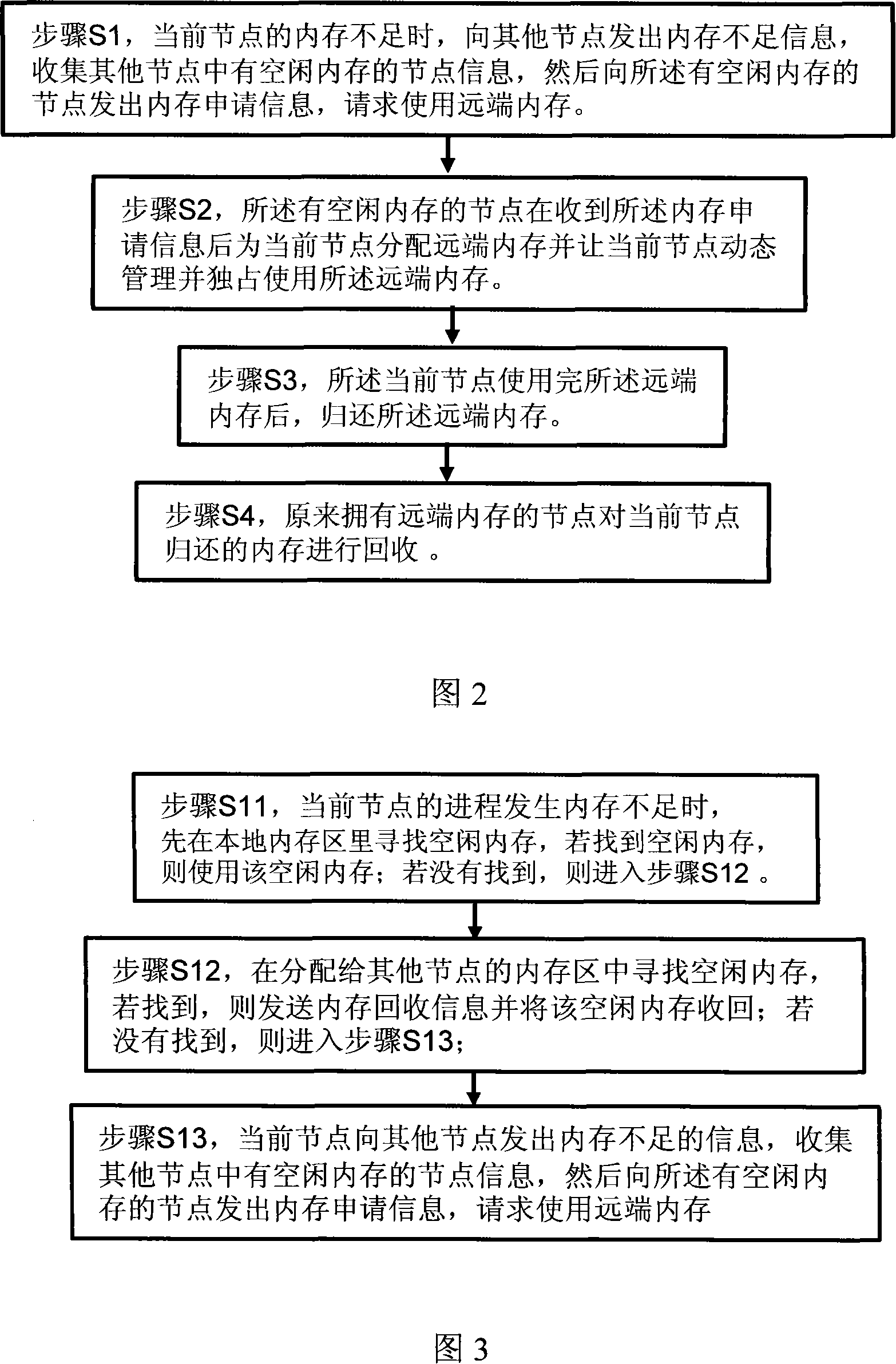

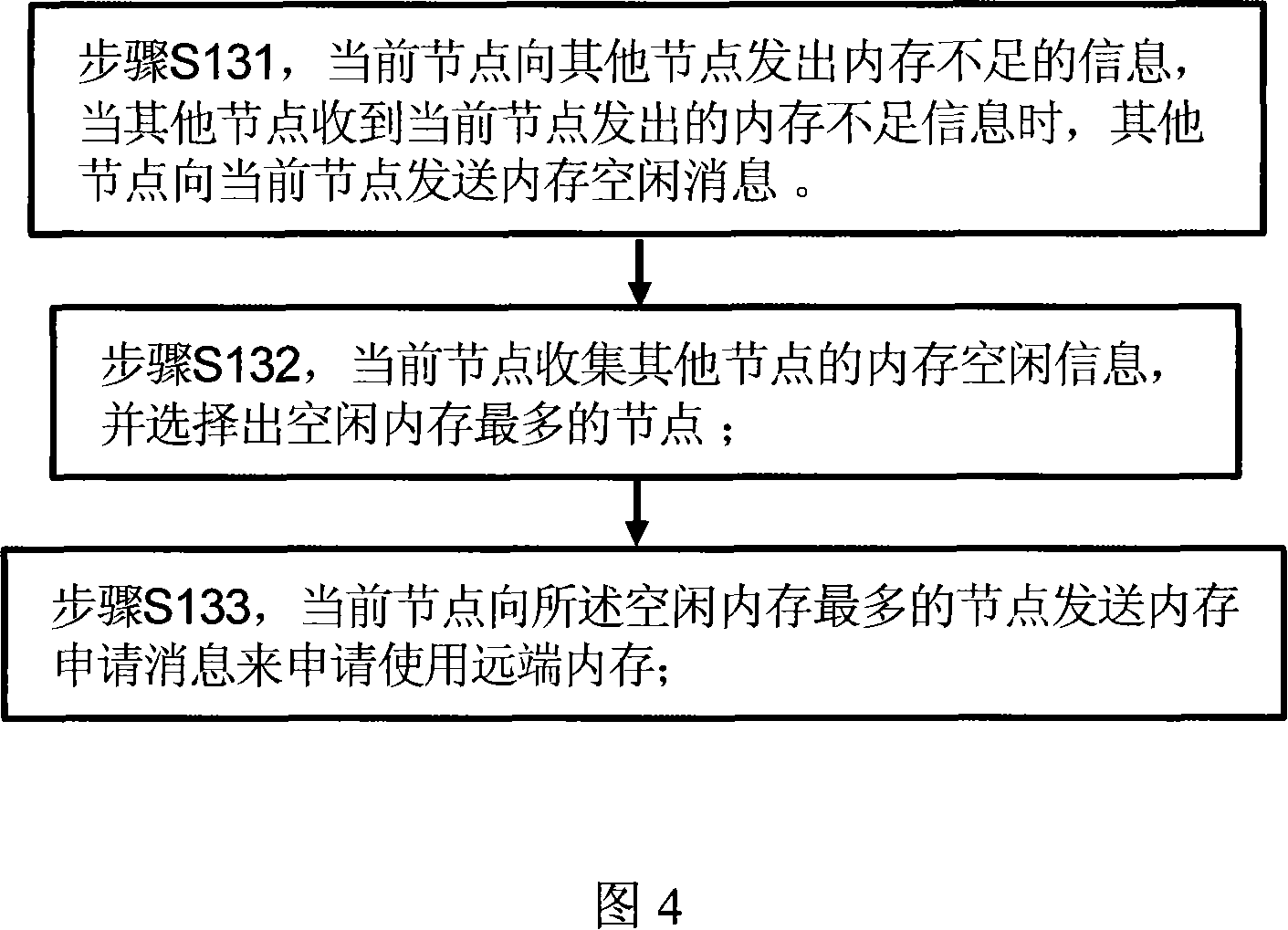

Memory sharing system, device and method

Active; CN101158927A; Increase profit; Improve reliability; Memory addressing/allocation/relocation; Operational system; Out of memory

The invention discloses a memory sharing system, device and method for a multi-core NUMA system. The system comprises a plurality of nodes. The operating system of each node includes a memory sharing device, which comprises a memory information collection and processing module, a memory application module, and a module for establishing a dynamic management structure for remote memory, connected with a communication module. The method comprises the following steps: step S1, when the memory of the current node is insufficient, the node sends memory shortage information to the other nodes, collects information about which nodes have free memory, and then sends memory request information to the nodes with free memory, requesting the use of remote memory; step S2, after receiving the memory request information, a node with free memory allocates remote memory to the current node, which then dynamically manages and exclusively uses that remote memory. The invention borrows free remote memory to balance the load of the entire system.

Owner:HUAWEI TECH CO LTD

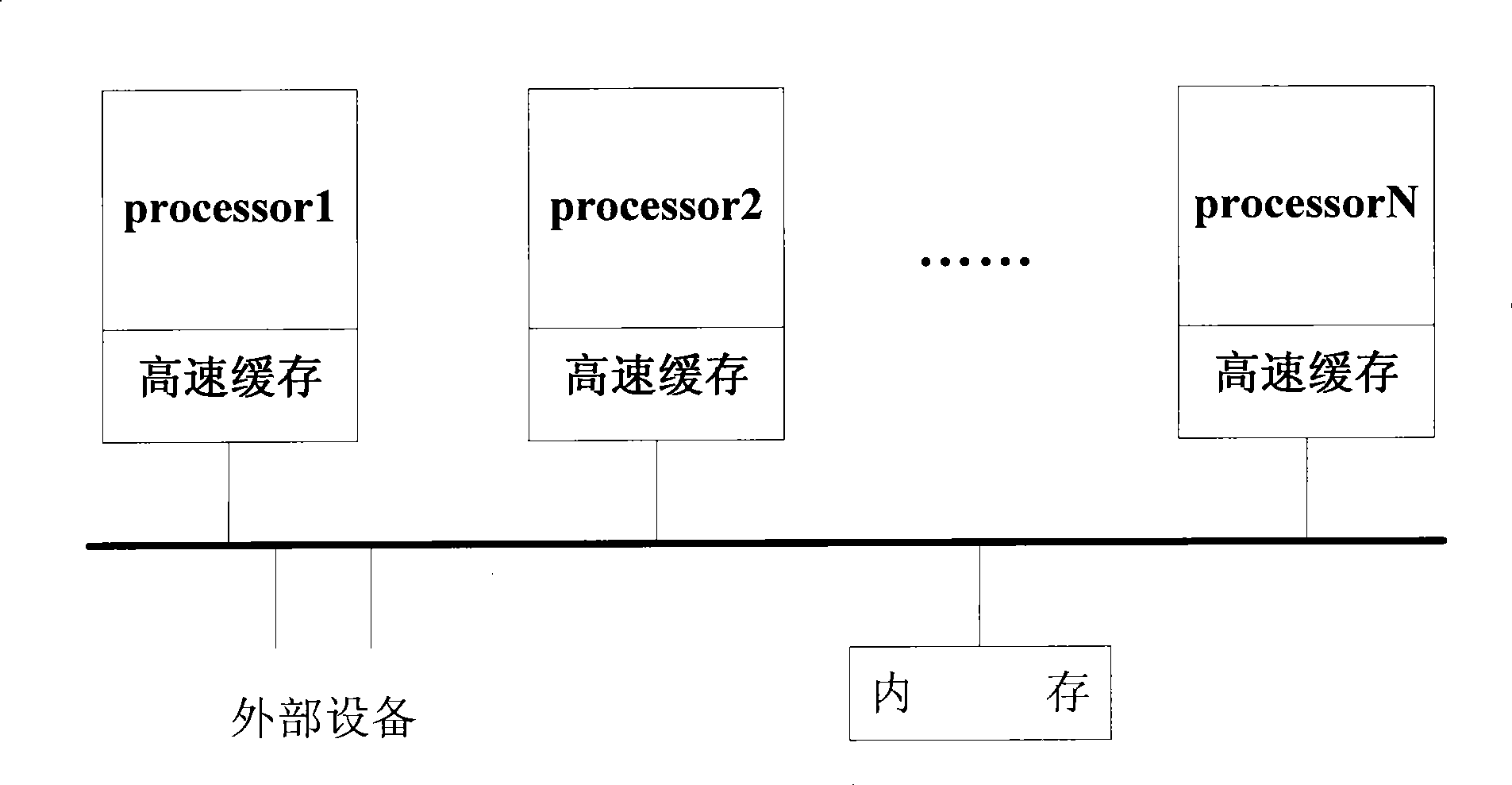

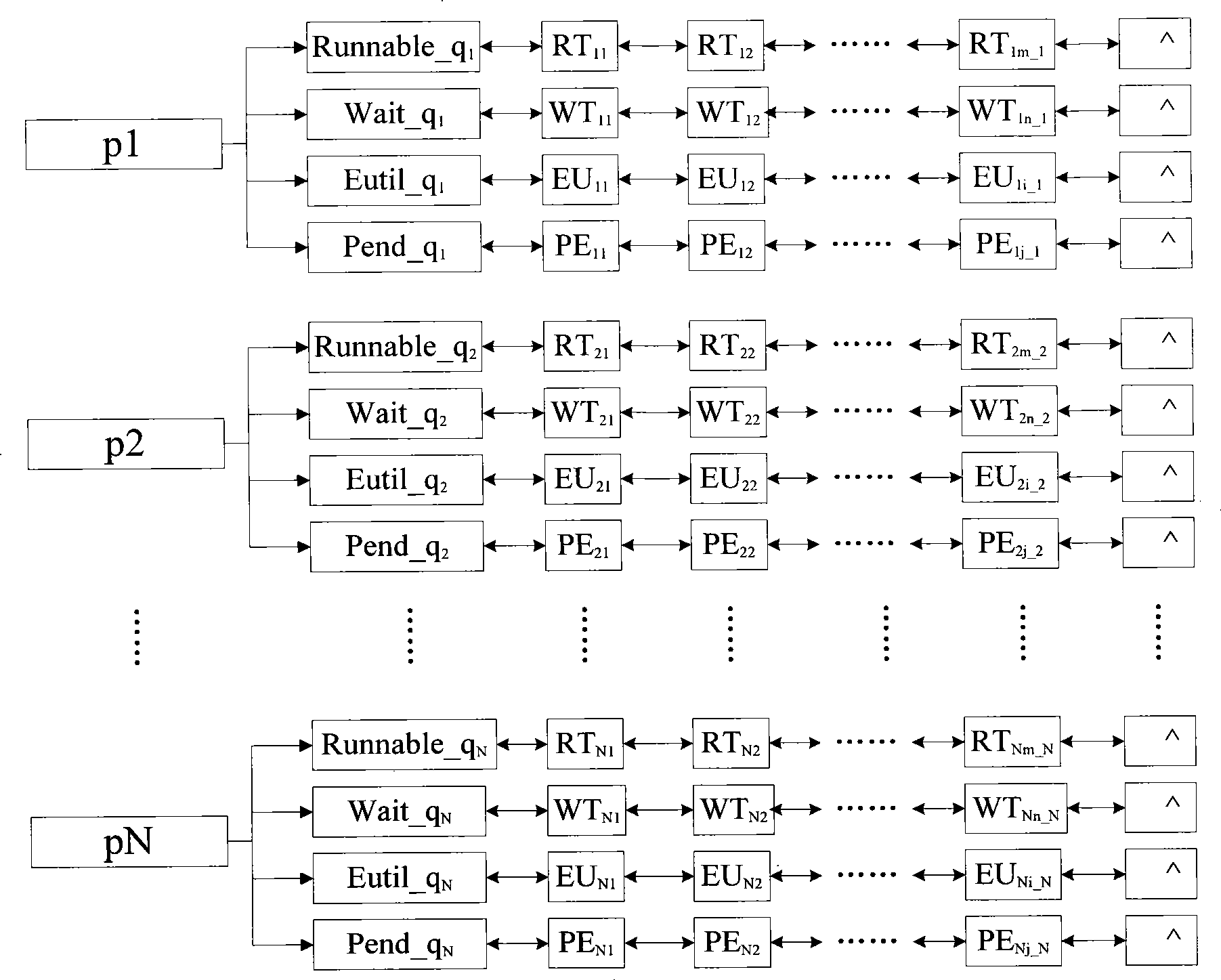

AEDF task scheduling method based on SMP

Inactive; CN101446910A; Increase profit; To achieve load balancing; Resource allocation; Current load; Dynamic load balancing

The invention provides an AEDF task scheduling method based on SMP and designs a self-adaptive dynamic load balancing method that runs on the SMP. The method tracks the current load of all processors in the SMP in real time and dynamically allocates tasks to the less loaded processors. During task scheduling, it measures the running time of suspended tasks and dynamically adjusts the time slot, period and blocking time of their next run. By comprehensively analyzing the current processor load, the task completion status and the running condition of the system, the method allocates processor resources reasonably, which improves the utilization of all processors in the SMP, shortens the execution time needed to complete all tasks, and improves the operating efficiency and effectiveness of the SMP. Meanwhile, by dynamically scheduling the execution order of tasks, the real-time requirements of urgent tasks are met with priority.

Owner:HARBIN ENG UNIV

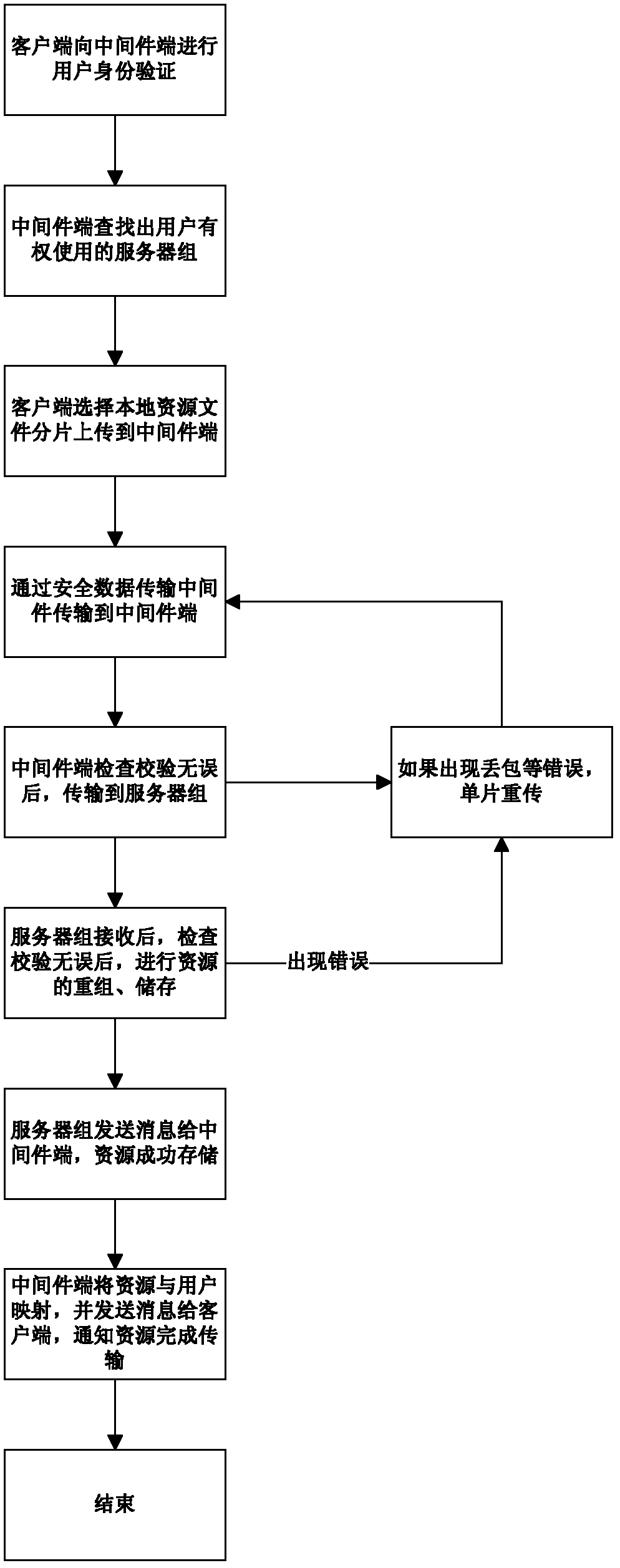

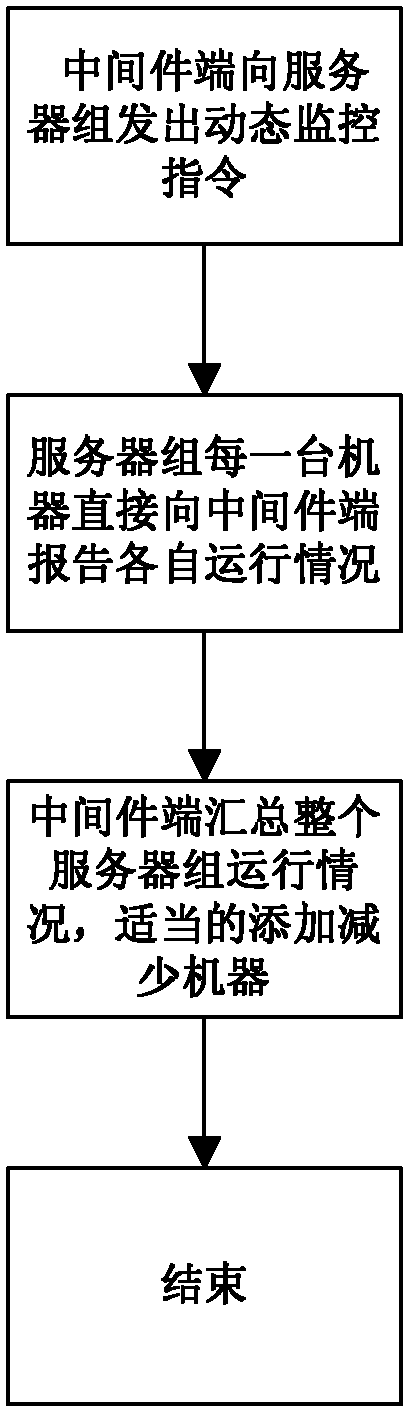

Cloud storage method for cloud computing server

Inactive; CN102255974A; Fix security issues; Guaranteed correctness; Transmission; Computer network technology; Client-side

The invention discloses a cloud storage method for a cloud computing server, belonging to the technical field of computer networks. The method comprises the following steps: (1) the servers of a cloud computing server group and a client each register on a middleware server; (2) the middleware server searches a local database and looks up the operable files and the usable cloud computing server group for the current user; (3) the user selects a file to upload, fragments the file and transmits the file fragments to the middleware server; (4) the middleware server verifies the uploaded file, requires the user to retransmit if the file is wrong, and transmits the data to the cloud computing server group usable by the user if the file is correct; (5) the cloud computing server group rearranges and stores the received data and sends a success message to the middleware server; and (6) the middleware server establishes a mapping between the name of the uploaded file and the user. The invention ensures the correctness and integrity of the data transmission process and the privacy of the data, and ensures highly reliable operation of the server group.

Owner:无锡中科方德软件有限公司

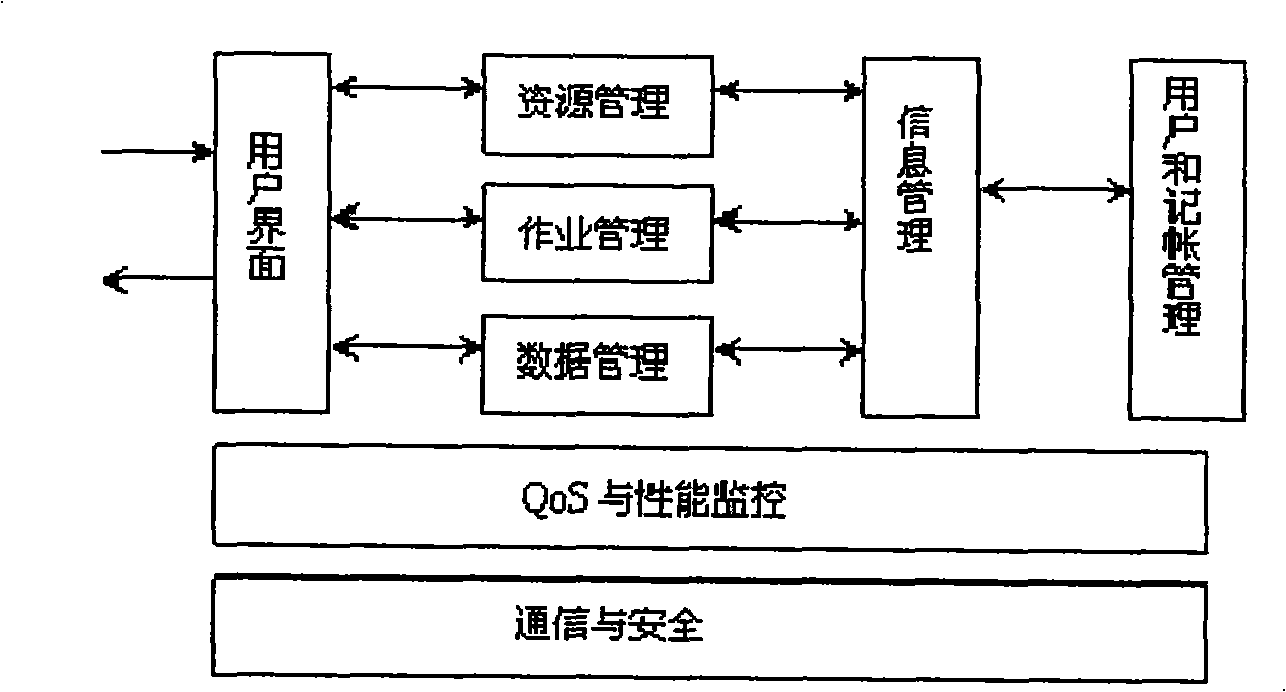

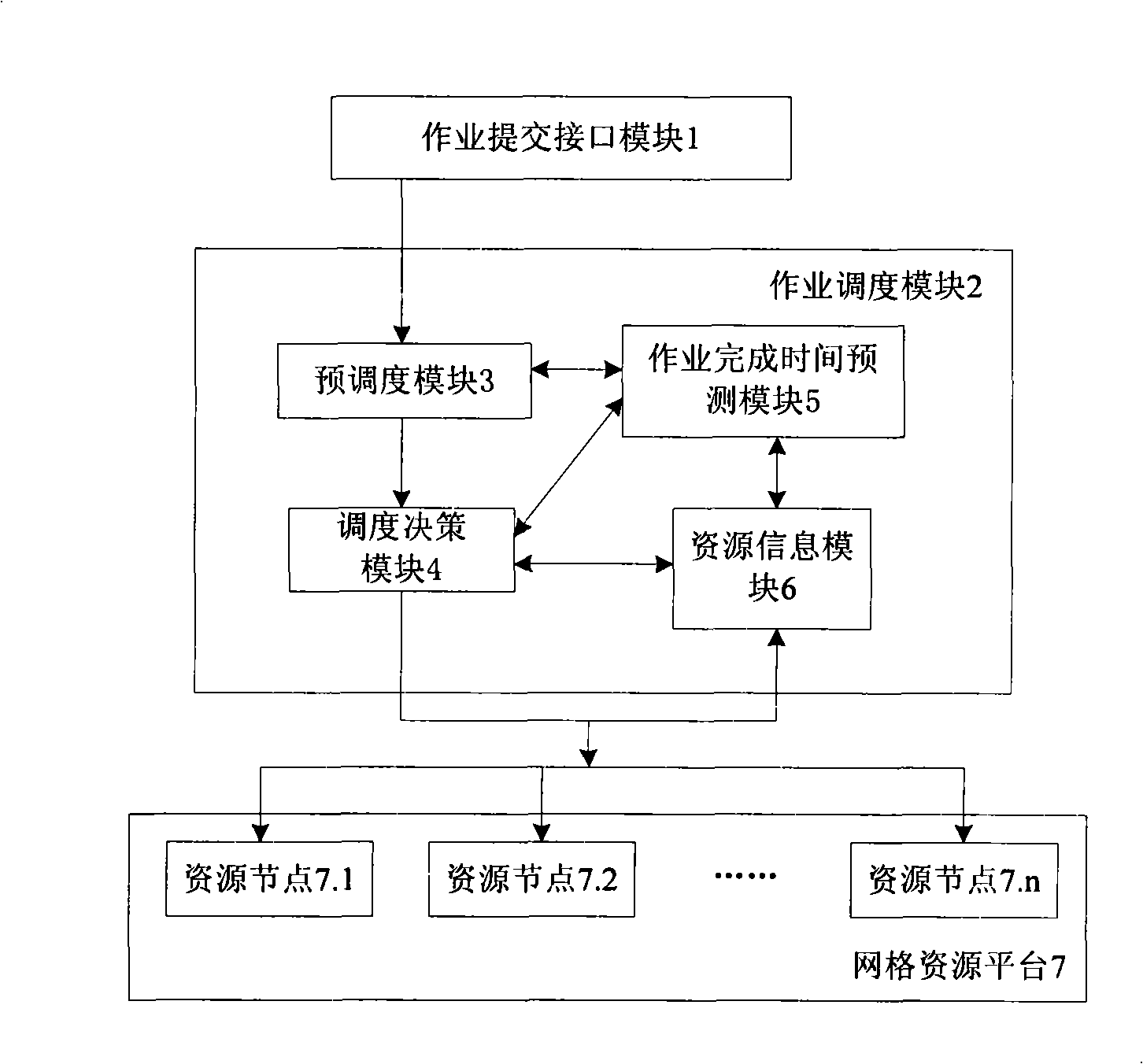

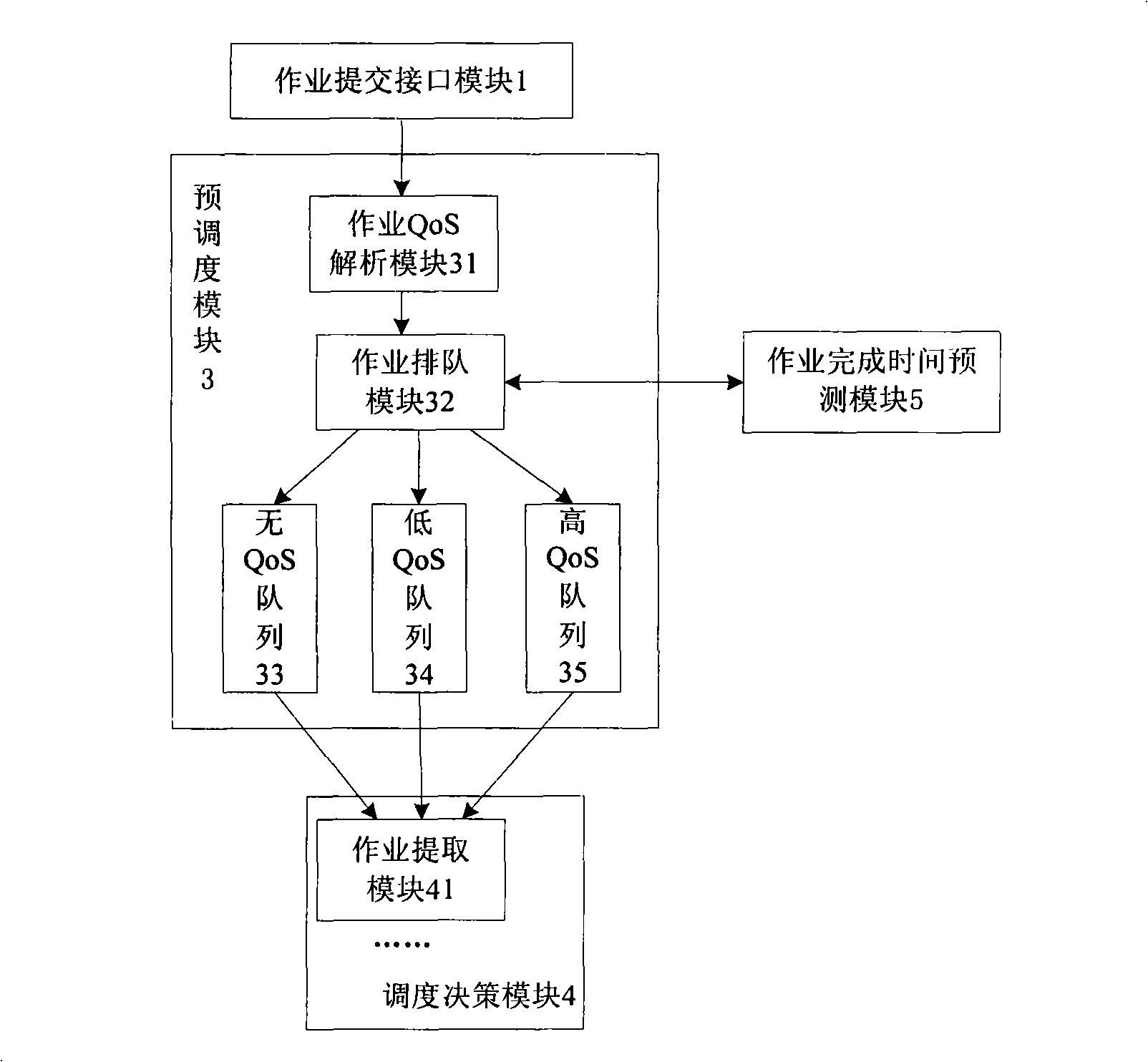

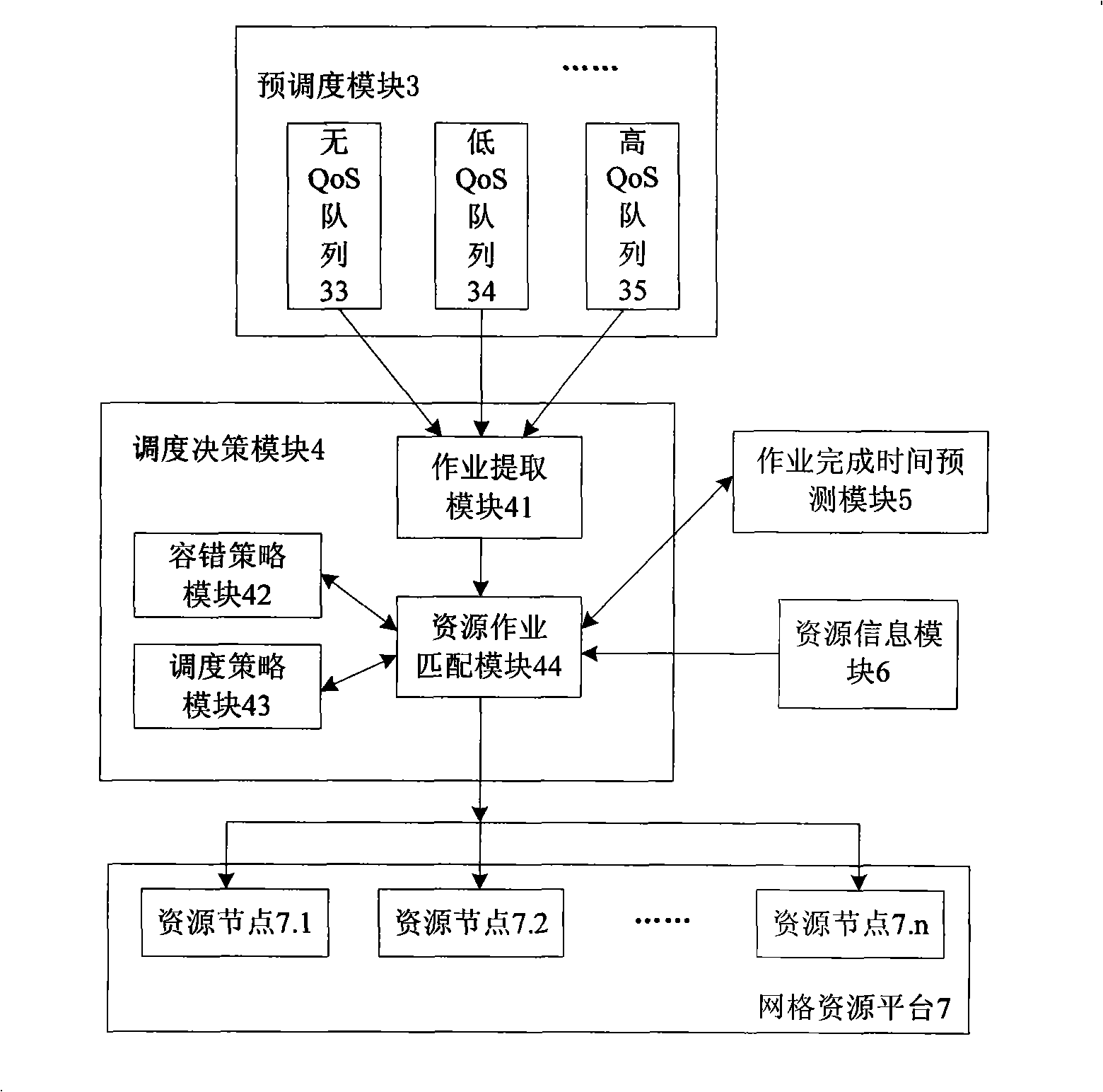

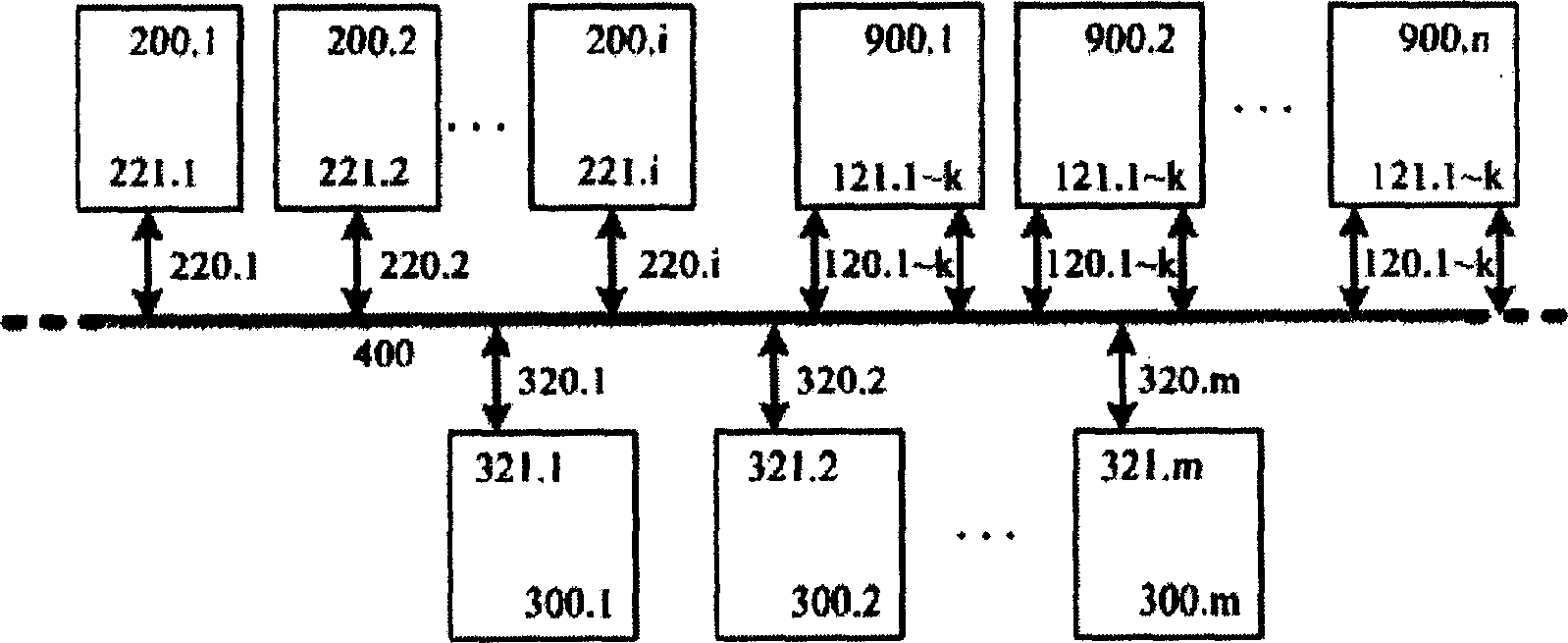

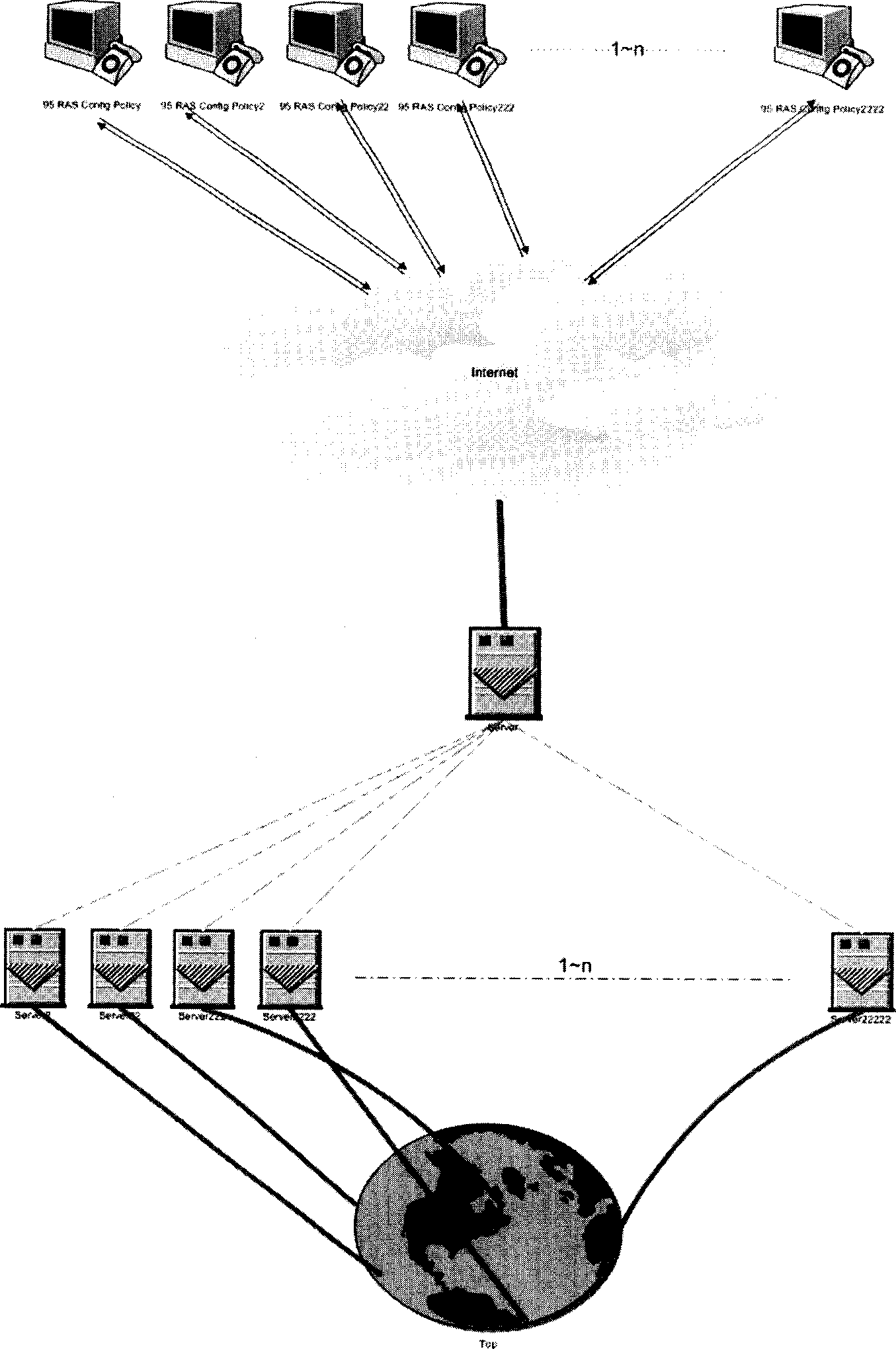

Job scheduling system suitable for grid environment and based on reliable expense

Inactive; CN101309208A; Increase profit; Improve accuracy; Error prevention; Data switching networks; Extensibility; Fault tolerance

The invention relates to a job scheduling system that is suitable for a grid environment and based on reliability cost. As shown in Figure 1, the whole system includes three layers: the first layer is a job submission interface module 1; the second layer is a job scheduling module 2; and the bottom layer is the grid resource platform 7. In terms of operating principle, the core of the invention is the job scheduling module in the second layer, which includes a pre-scheduling module 3, a scheduling strategy module 4, a job finish time prediction module 5 and a resource information module 6. The job scheduling system proposes a job running time prediction model and a resource availability prediction model; the running time prediction model is based on a mathematical model and the resource availability prediction model is based on a Markov model, and both have high accuracy and generality. According to different job quality-of-service requirements and resource characteristics, the system adopts a replication fault-tolerance strategy, a primary-copy asynchronous fault-tolerance strategy or a retry fault-tolerance strategy, which gives it high flexibility and effectiveness. The system supports both computation-intensive and data-intensive jobs, which gives it good generality. Compared with scheduling systems in the prior art, the job scheduling system supports more concurrent users, improves the resource utilization rate, and offers good generality, good extensibility and high system throughput.

Owner:HUAZHONG UNIV OF SCI & TECH

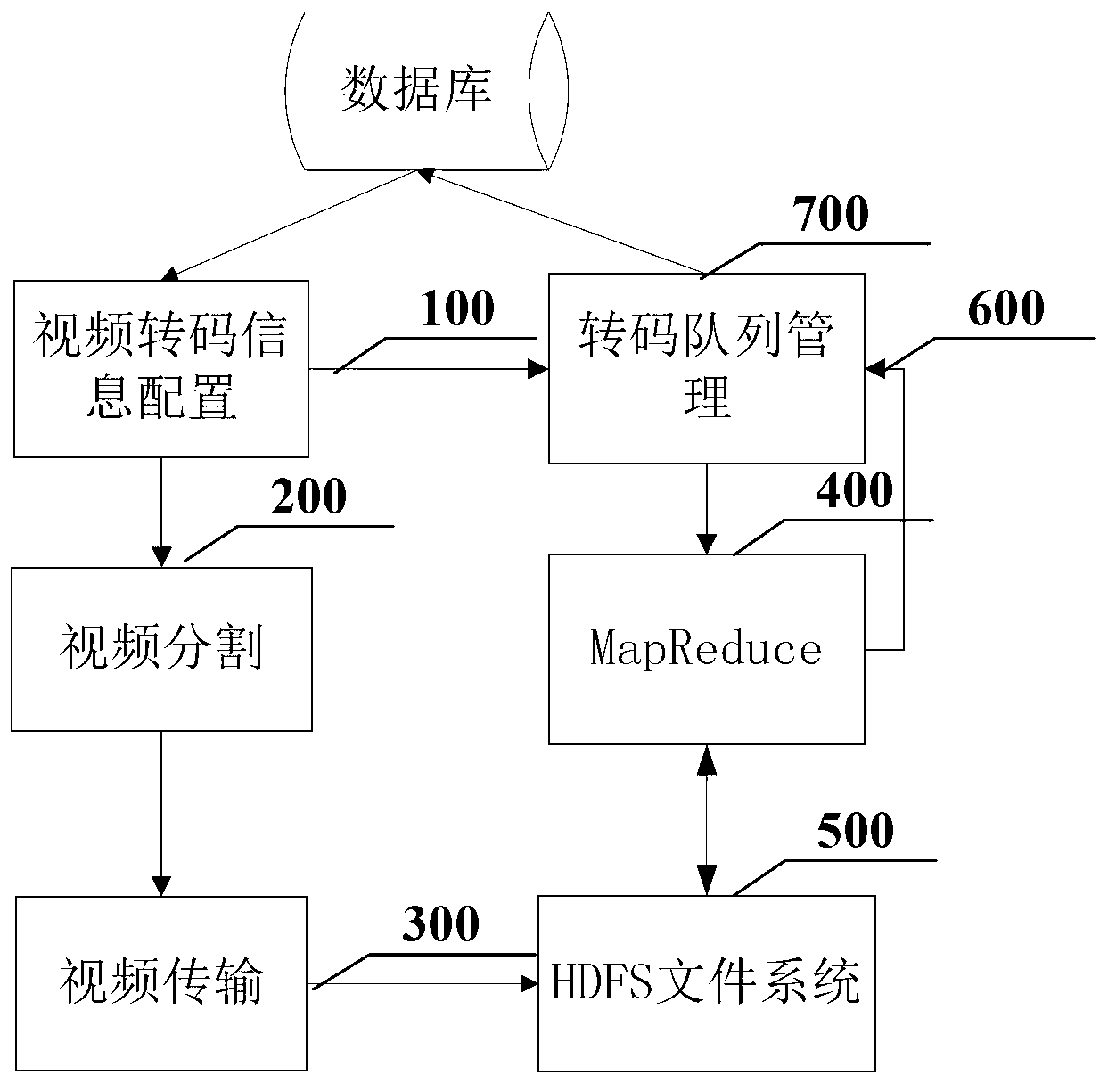

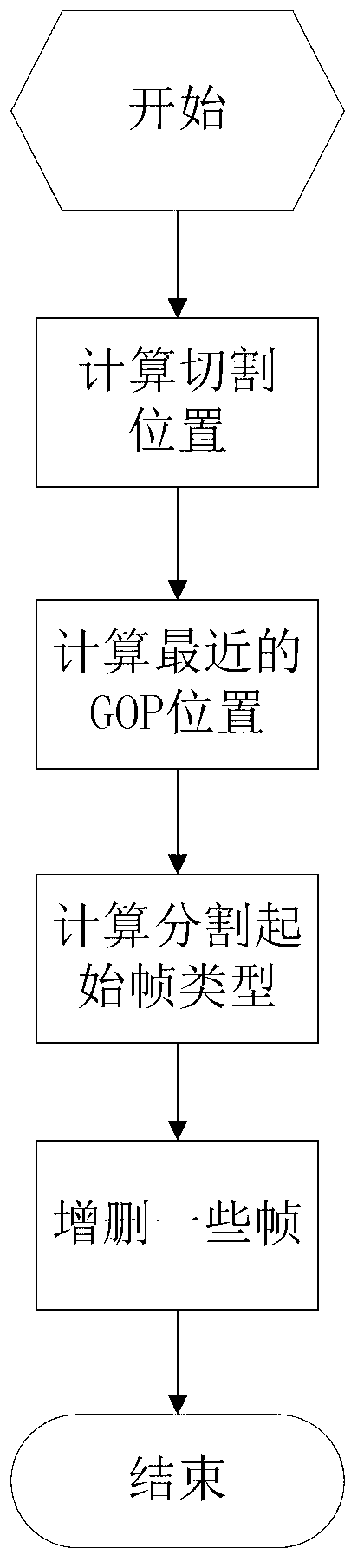

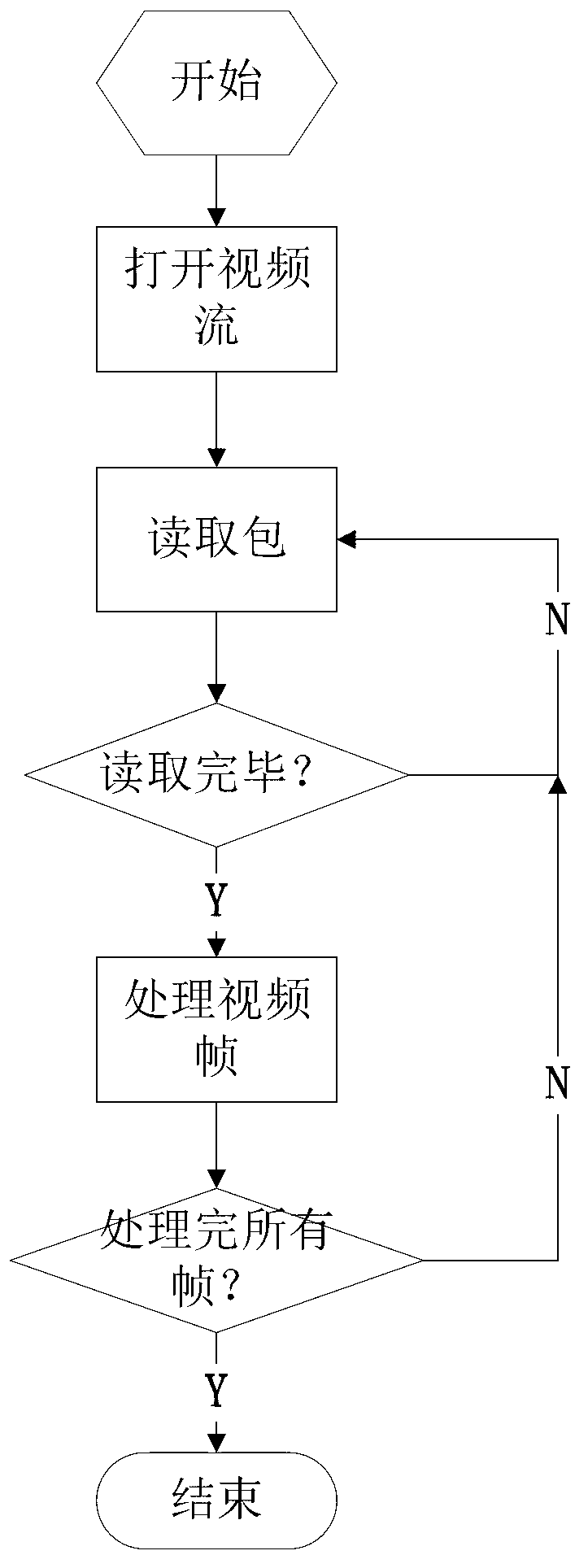

Hadoop-platform-based method for improving video transcoding efficiency

Inactive; CN103297807A; Reduce time consumption; Reduce complexity; Television systems; Selective content distribution; Distributed File System; Computer graphics (images)

The invention provides a hadoop-platform-based method for improving video transcoding efficiency and relates to hadoop-platform-based technologies for improving video adaptive technology efficiency. The hadoop-platform-based method for improving the video transcoding efficiency comprises subjecting a video file to storage segmentation processing through a hadoop platform; subjecting video clips generated by segmentation to FFmpeg (Fast forward moving pictures experts group) transcoding; and consigning transcoding results to an HDFS (Hadoop Distributed File System) for video clip combination, and accordingly transcoding tasks of a large amount of video data can be completed efficiently. According to the efficient video transcoding of the hadoop-platform-based video adaptive technology, the video transcoding speed is fast, the network bandwidth traffic consumption is low, the load balancing processing is appropriate and the transcoding time consumption is reduced significantly.

Owner:HARBIN INST OF TECH SHENZHEN GRADUATE SCHOOL

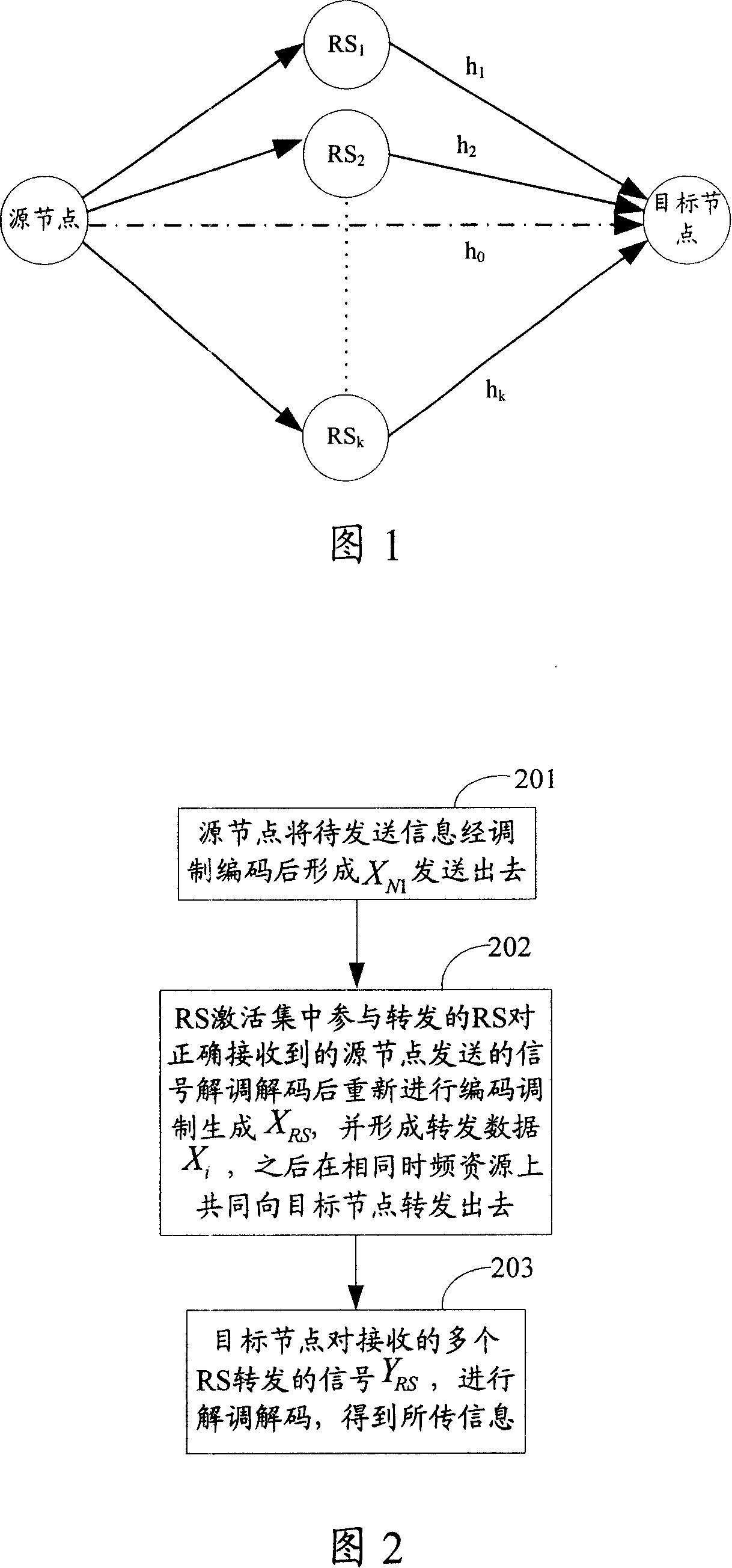

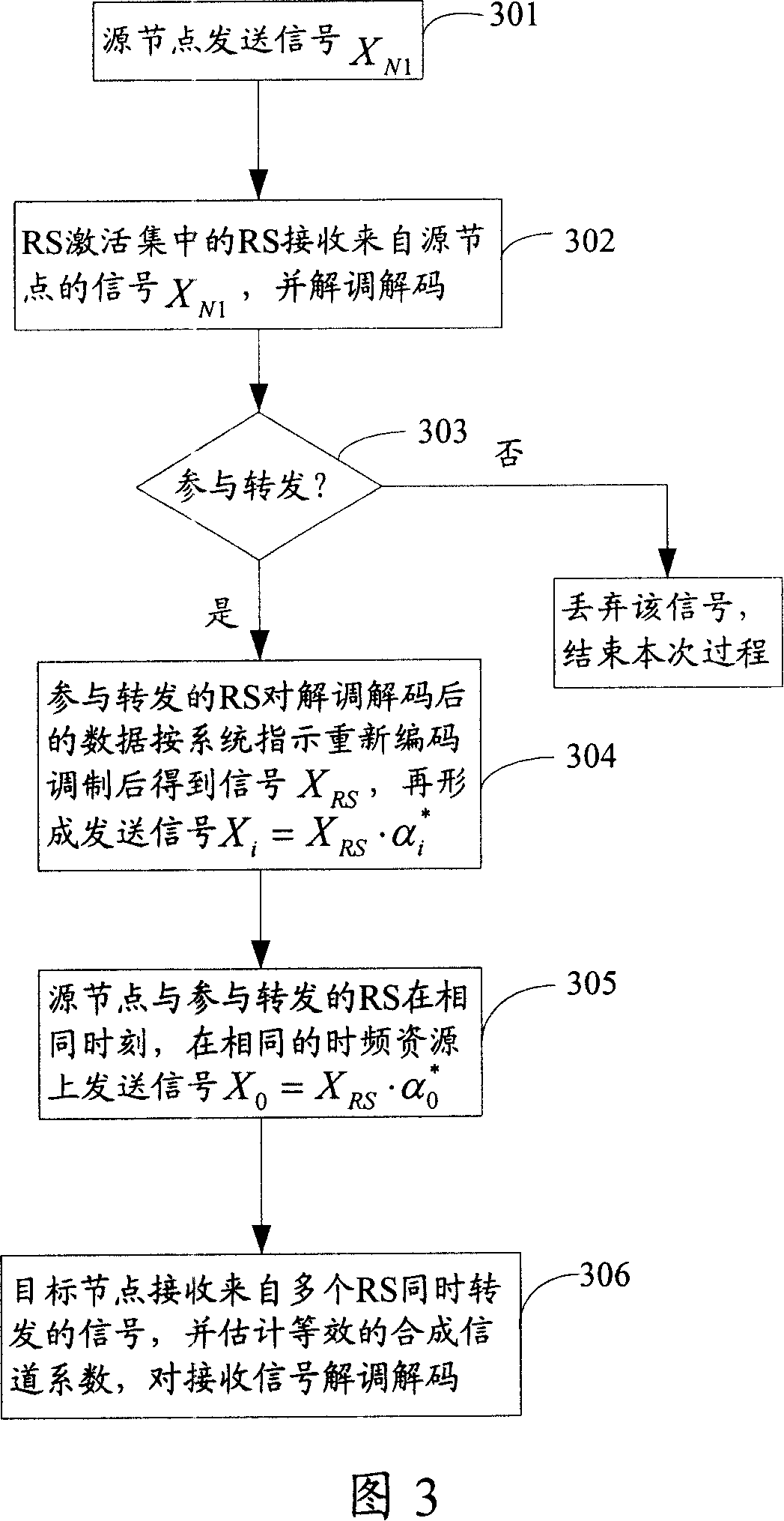

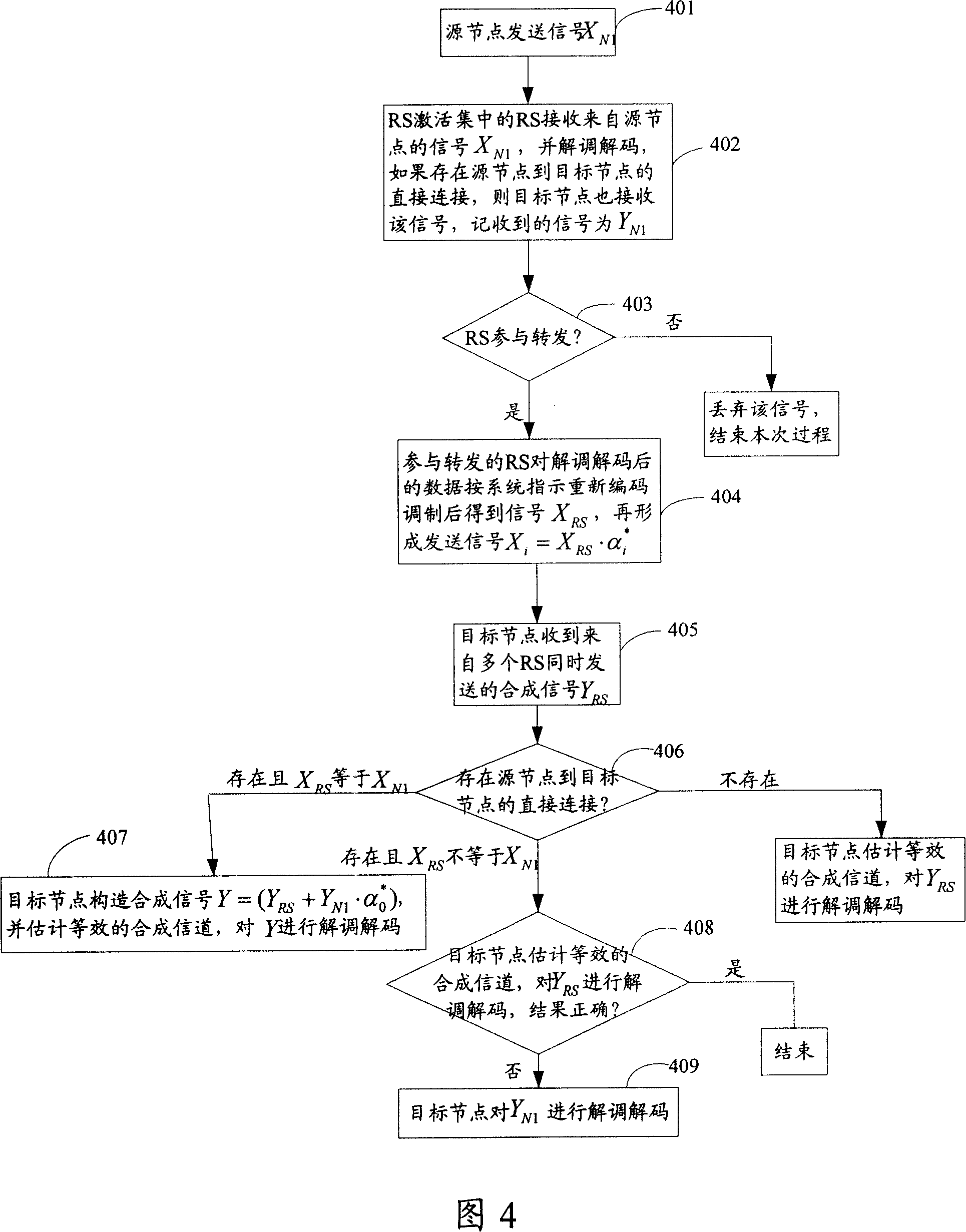

Transmission method and transmission system of wireless relay system

Active; CN101141172A; Improve reliability; To achieve load balancing; Power management; Site diversity; Quality of service; Transport system

The present invention discloses a transmission method in a wireless relay system, which jointly transmits data between a source node and a destination node through a plurality of relay stations. These relay stations transmit same data on the same time frequency resources and transmit different data on varied time frequency resources. The destination node demodulates and decodes received superposed signals of the same data transmitted by the RS on the same time frequency resource. In addition, the destination node demodulates and decodes different data transmitted by the RS on the varied time frequency resource and matches demodulated and decoded data on demand and finally acquires the transmitted data. Moreover, the present invention discloses a wireless relay transmission system. The transmission method and the transmission system provided by the present invention can improve link transmission reliability, realize load balance amongst a plurality of relay stations, avoid overload of single relay station and enhance service quality for the destination node.

Owner:HUAWEI TECH CO LTD

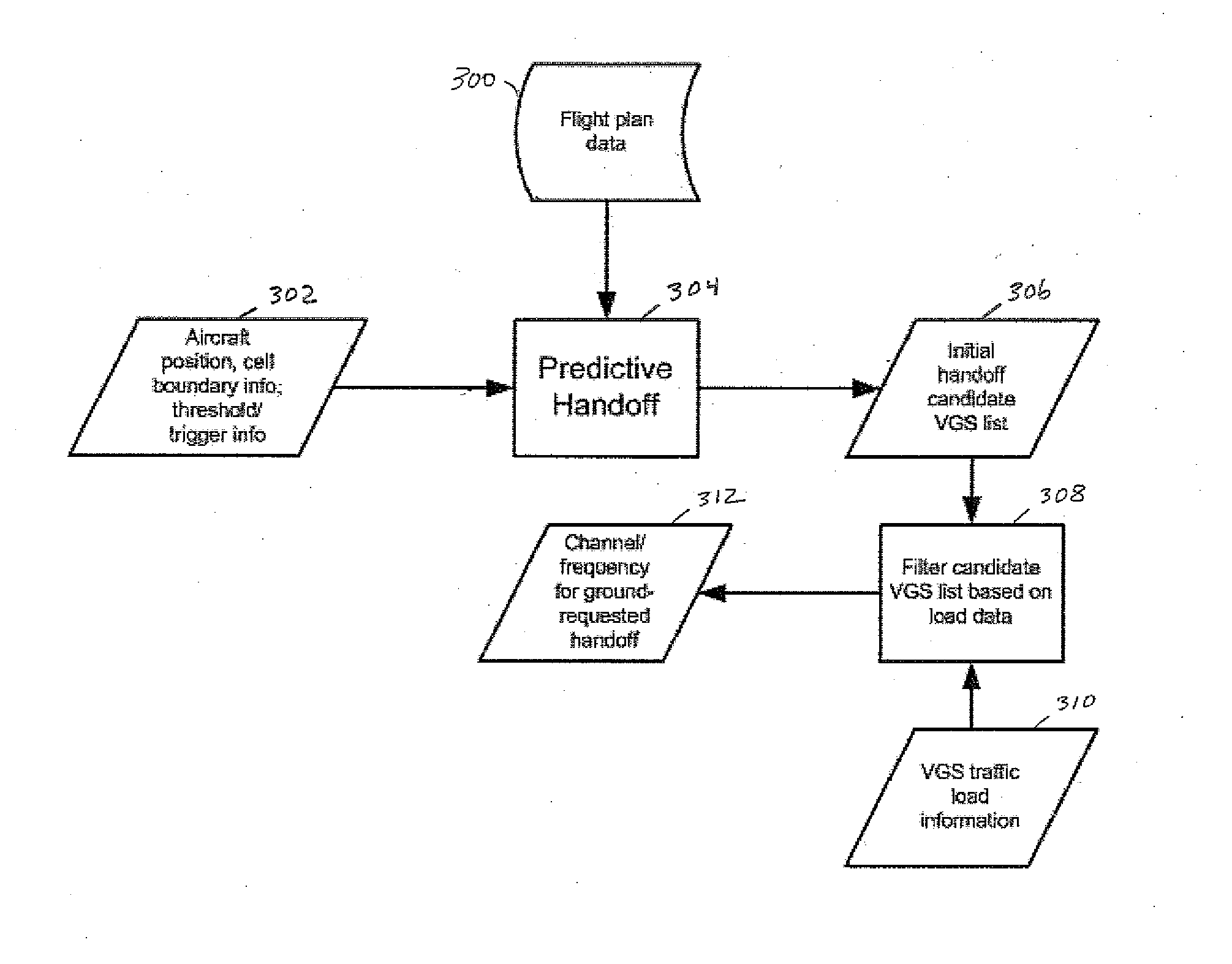

System and Method for Load Balancing and Handoff Management Based on Flight Plan and Channel Occupancy

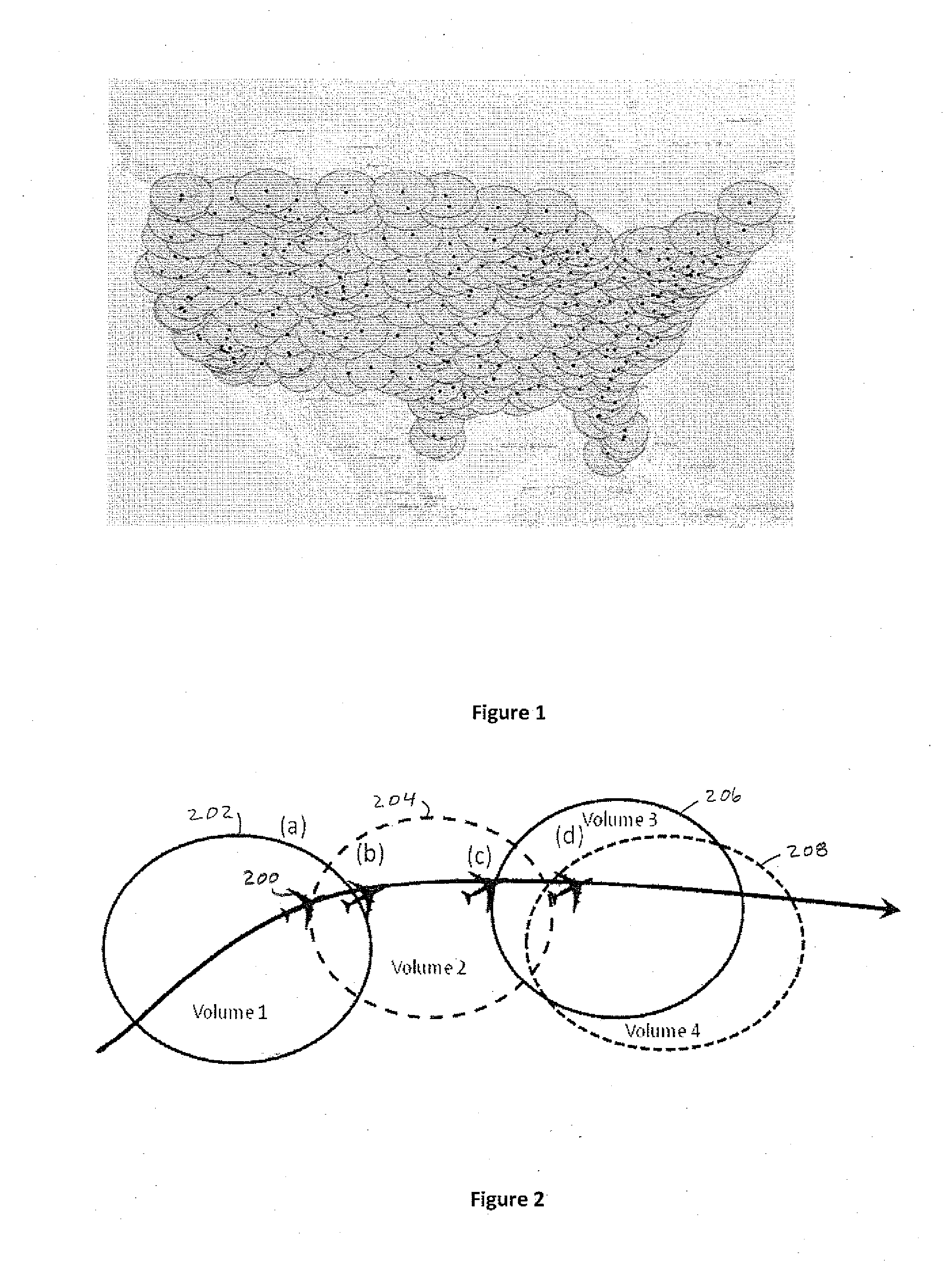

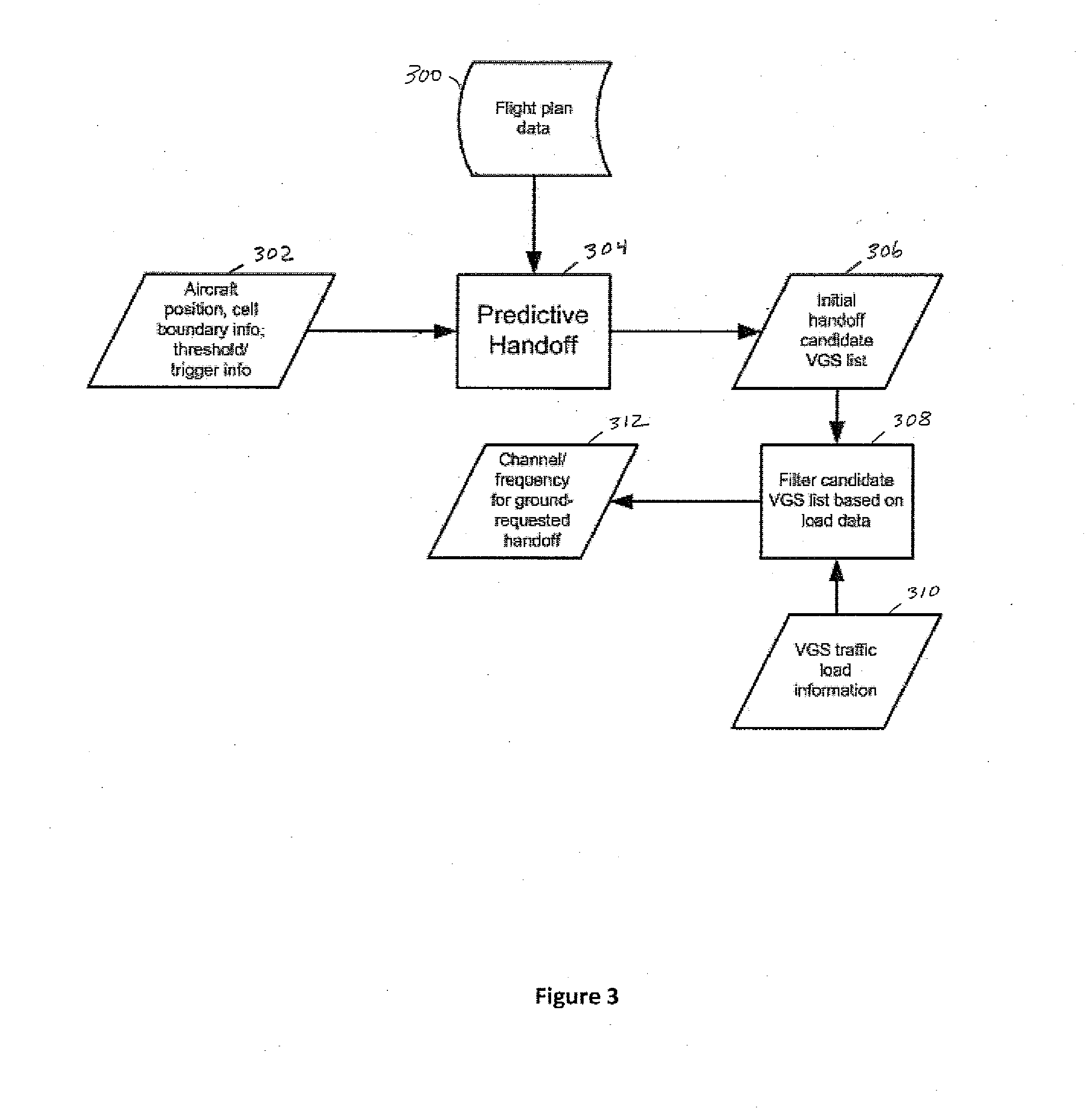

Inactive; US20120021740A1; Optimal predictive handoff choice; To achieve load balancing; Radio transmission; Wireless communication services; Channel occupancy; Airplane

A predictive system and method for aircraft load balancing and handoff management leverages the aircraft flight plan as well as channel occupancy and loading information. Several novel techniques are applied to the load balancing and handoff management problem: Use of aircraft position and flight plan information to geographically and temporally predict the appropriate ground stations that the aircraft should connect to for handoff, and monitoring the load of ground stations and using the ground-requested, aircraft initiated handoff procedure to influence the aircraft to connect to lightly loaded ground stations.

Owner:TELCORDIA TECHNOLOGIES INC

Method and apparatus for load balancing internet traffic

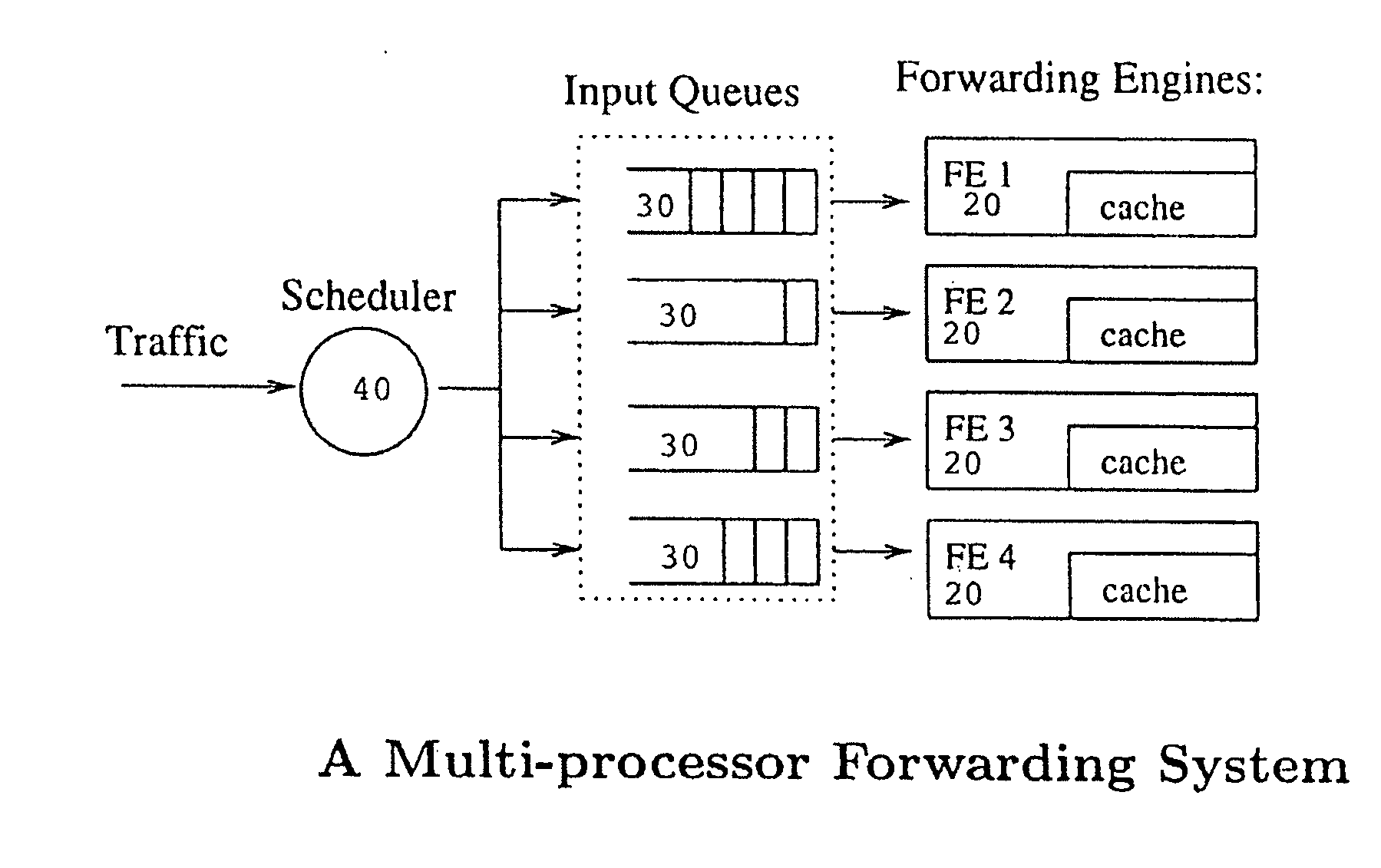

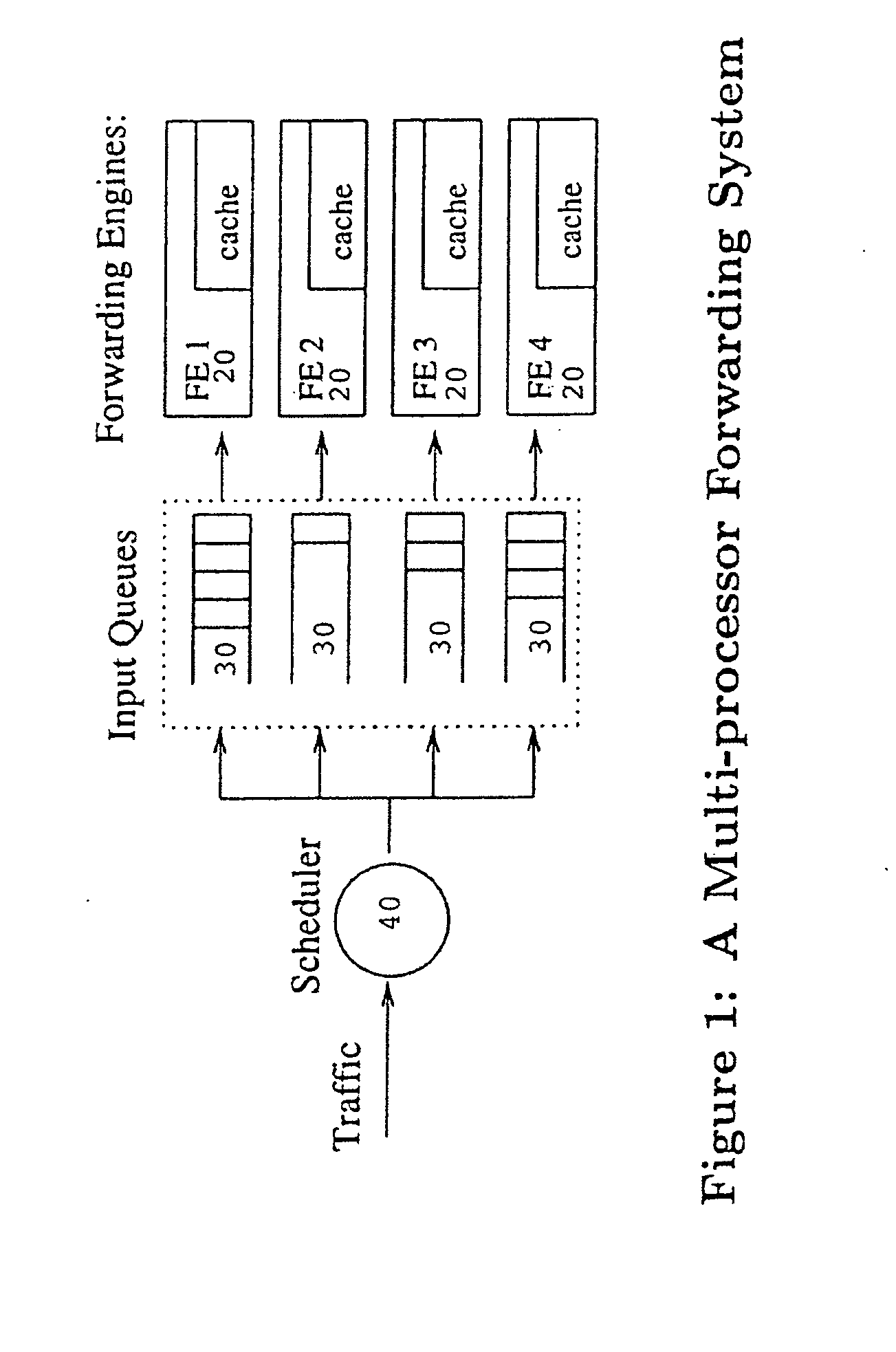

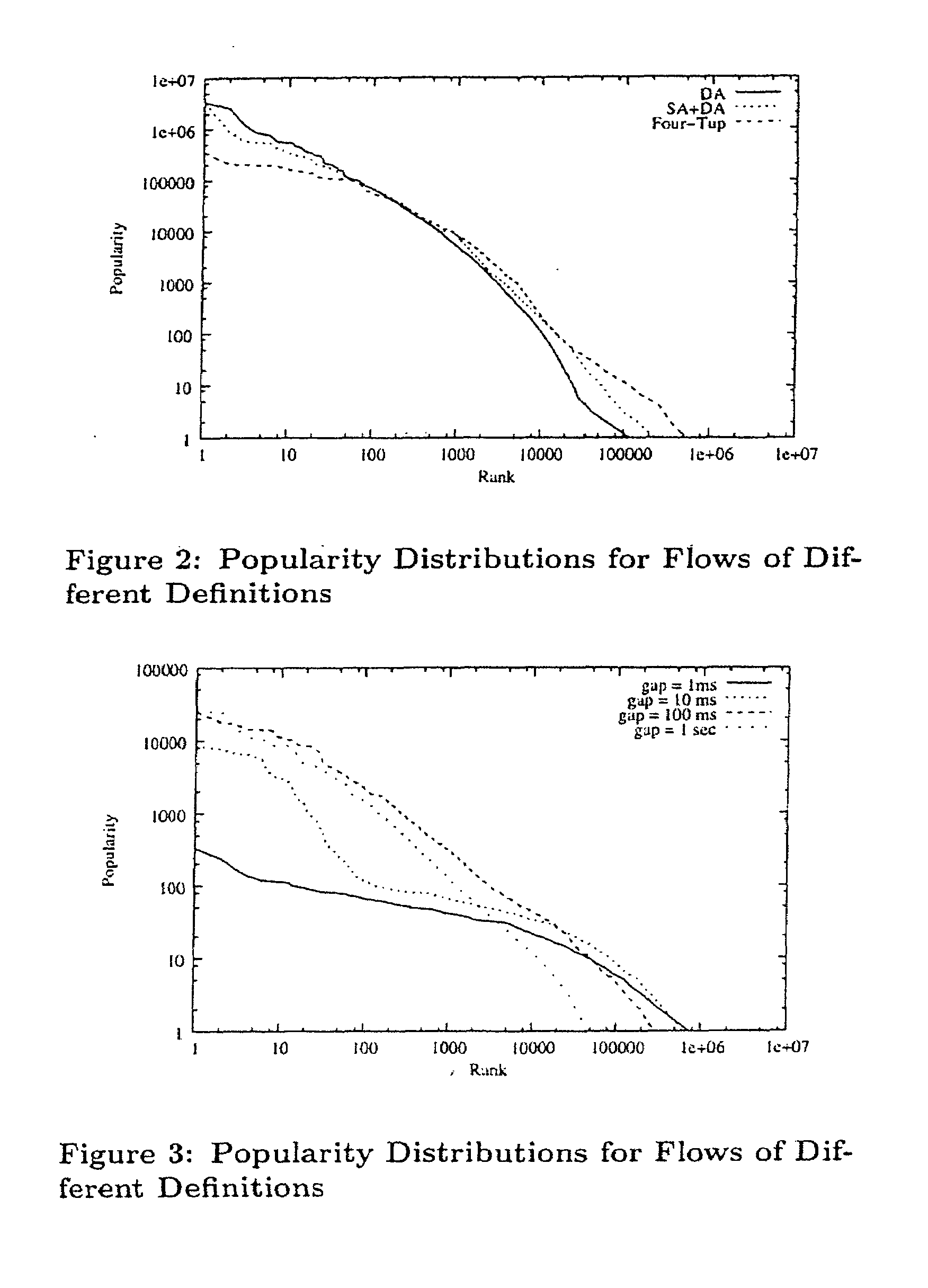

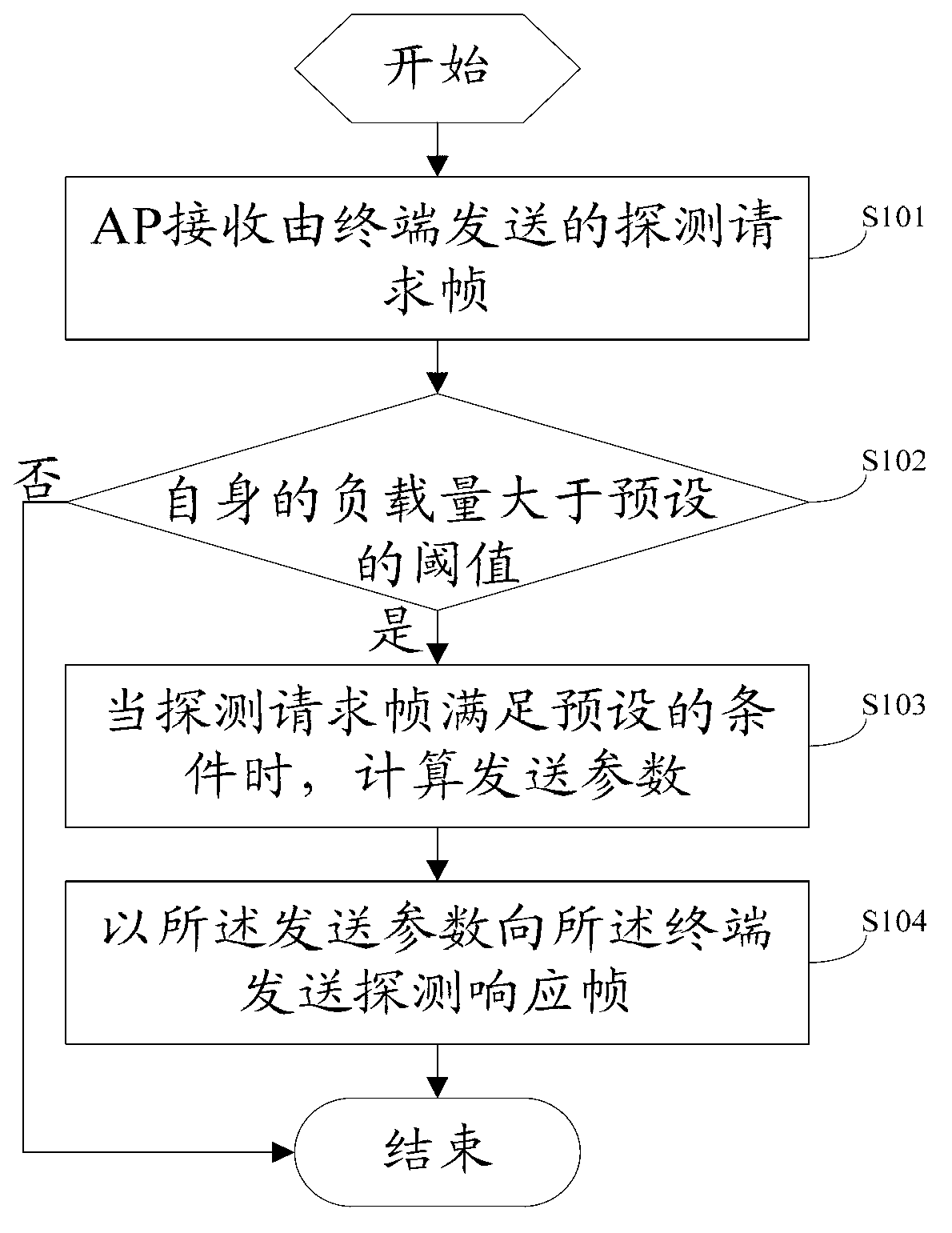

Inactive; US20080101233A1; To achieve load balancing; Error prevention; Transmission systems; Traffic capacity; Internet traffic

A load balancer is provided wherein packets are transmitted to a burst distributor and a hash splitter. The burst distributor consults a flow table to make a determination as to which forwarding engine will receive the packet, and if the flow table is full, returns an invalid forwarding engine. A selector sends the packet to the forwarding engine returned by the burst distributor, unless the burst distributor returns an invalid forwarding engine, in which case the selector sends the packet to the forwarding engine selected by the hash splitter. The system is scalable by adding additional burst distributors and using a hash splitter to determine which burst distributor receives a packet.

Owner:THE GOVERNORS OF THE UNIV OF ALBERTA
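
The selector logic described in the US20080101233A1 abstract above can be sketched as follows. The abstract states only that the burst distributor consults a flow table and returns an invalid forwarding engine when the table is full; the round-robin choice for new flows, the CRC32 hash, and all names below are illustrative assumptions.

```python
import zlib

INVALID = None  # stands in for the "invalid forwarding engine" result


class BurstDistributor:
    """Flow-table-based distributor with a bounded table (hypothetical)."""

    def __init__(self, num_engines, table_capacity):
        self.num_engines = num_engines
        self.capacity = table_capacity
        self.flow_table = {}  # flow id -> forwarding engine index
        self.next_engine = 0  # assumption: new flows are placed round-robin

    def lookup(self, flow_id):
        if flow_id in self.flow_table:
            return self.flow_table[flow_id]
        if len(self.flow_table) >= self.capacity:
            return INVALID  # table full: defer to the hash splitter
        engine = self.next_engine
        self.next_engine = (self.next_engine + 1) % self.num_engines
        self.flow_table[flow_id] = engine
        return engine


def hash_split(flow_id, num_engines):
    # Stateless fallback: the same flow always maps to the same engine.
    return zlib.crc32(flow_id.encode()) % num_engines


def select_engine(flow_id, distributor):
    """Selector: prefer the burst distributor, fall back to the hash splitter."""
    engine = distributor.lookup(flow_id)
    if engine is INVALID:
        return hash_split(flow_id, distributor.num_engines)
    return engine
```

The fallback keeps packets of an untracked flow on one engine even when the flow table has no room for a new entry. Entry expiry, which a real flow table would need, is not modelled here.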

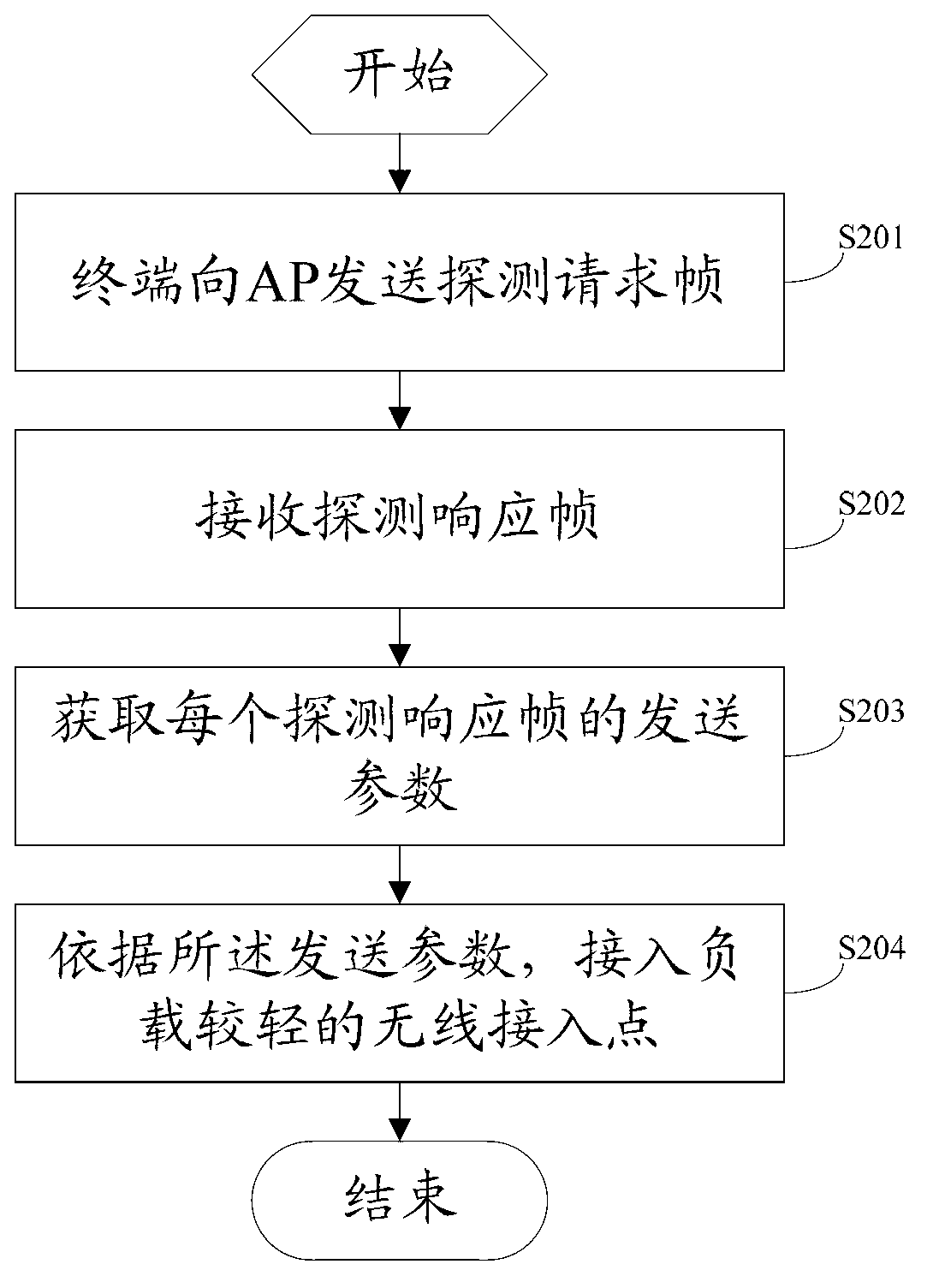

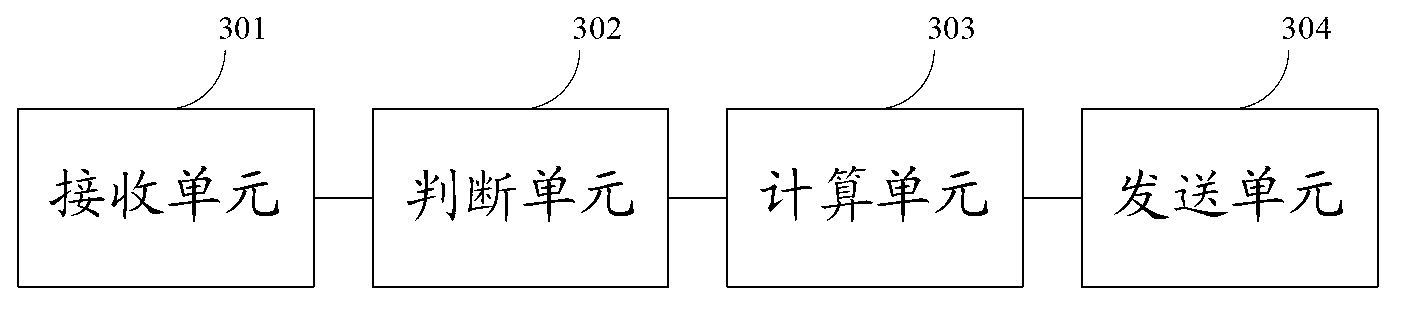

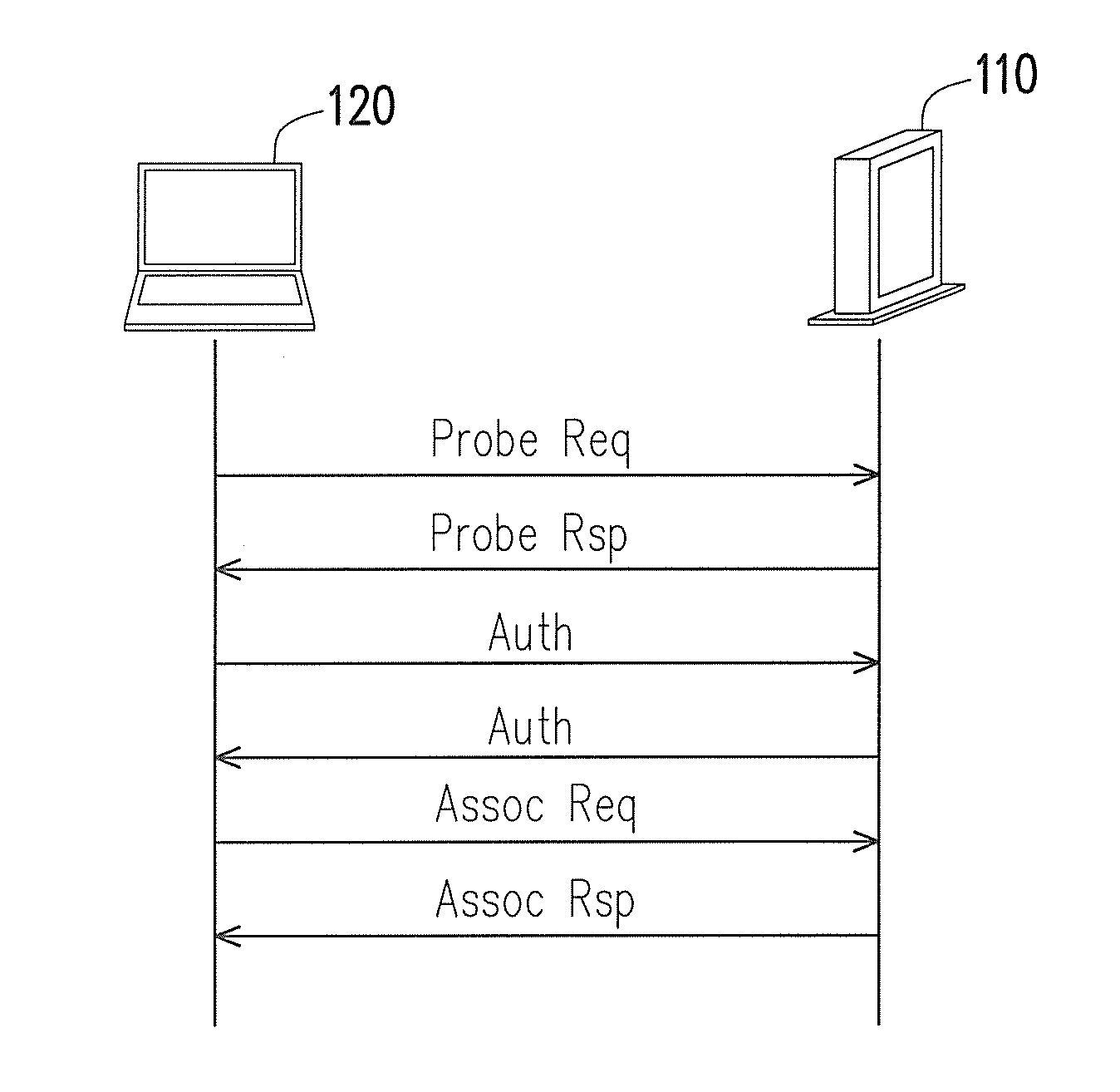

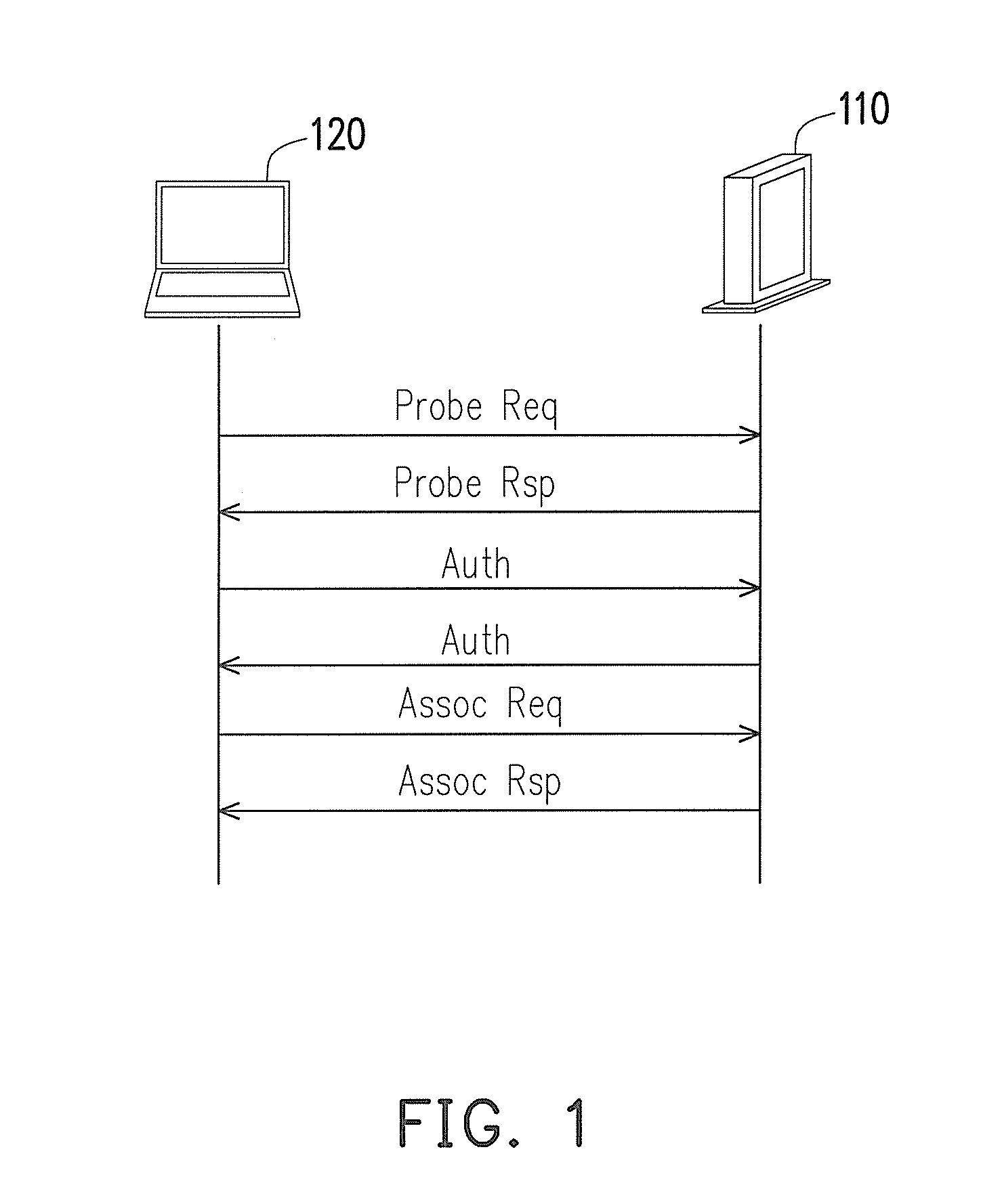

Wireless access point accessing method and wireless access point and terminal

Active; CN102802232A; To achieve load balancing; Avoid being offline for long periods of time; Access restriction; Connection management; Telecommunications; Heavy load

The invention provides a wireless access point access method, comprising: receiving a probe request frame sent by a terminal and judging whether the access point's own capacity is larger than a preset threshold; when its capacity is larger than the preset threshold and the probe request frame meets preset conditions, calculating sending parameters and sending a probe response frame to the terminal according to those parameters, so that the terminal can access a lightly loaded wireless access point according to the sending parameters. With this method, the access point actively informs the terminal of its current capacity, and the terminal can access a lightly loaded access point (AP) according to the sending parameters of different APs. This avoids the terminal repeatedly sending probe request frames to heavily loaded APs and being rejected repeatedly, balances load among APs, and prevents users from being unable to access the Internet for a long time.

Owner:HUAWEI TECH CO LTD

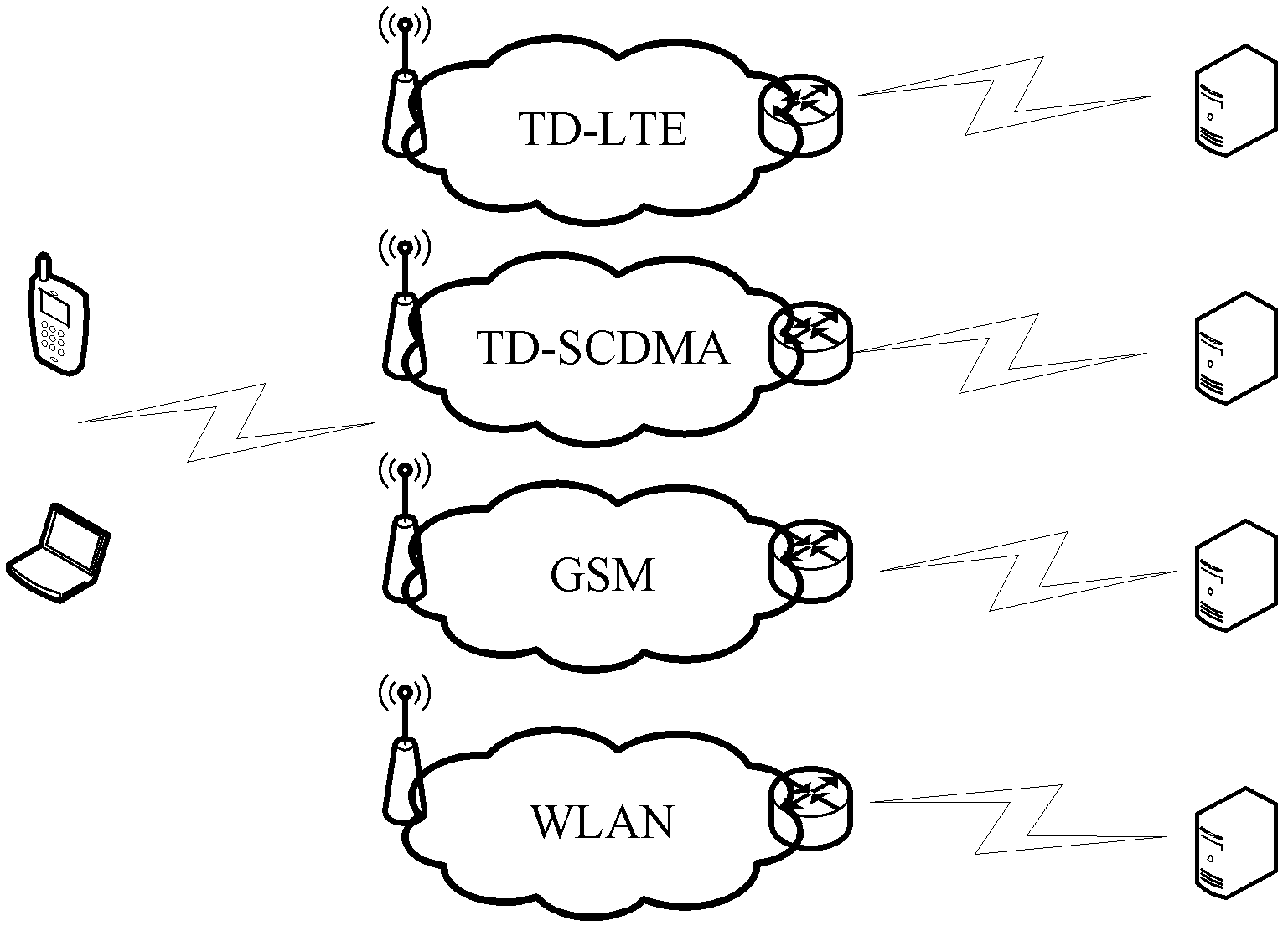

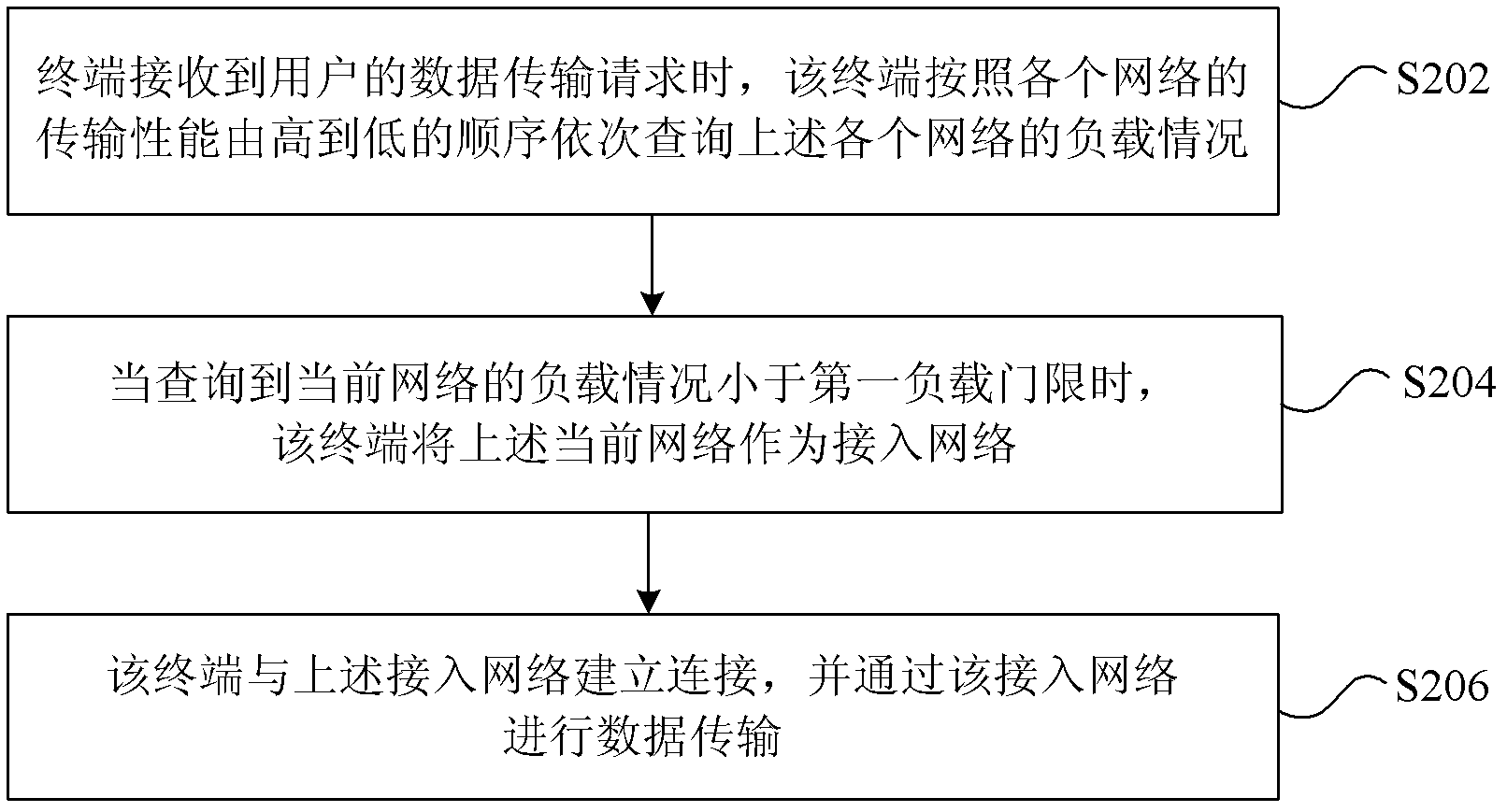

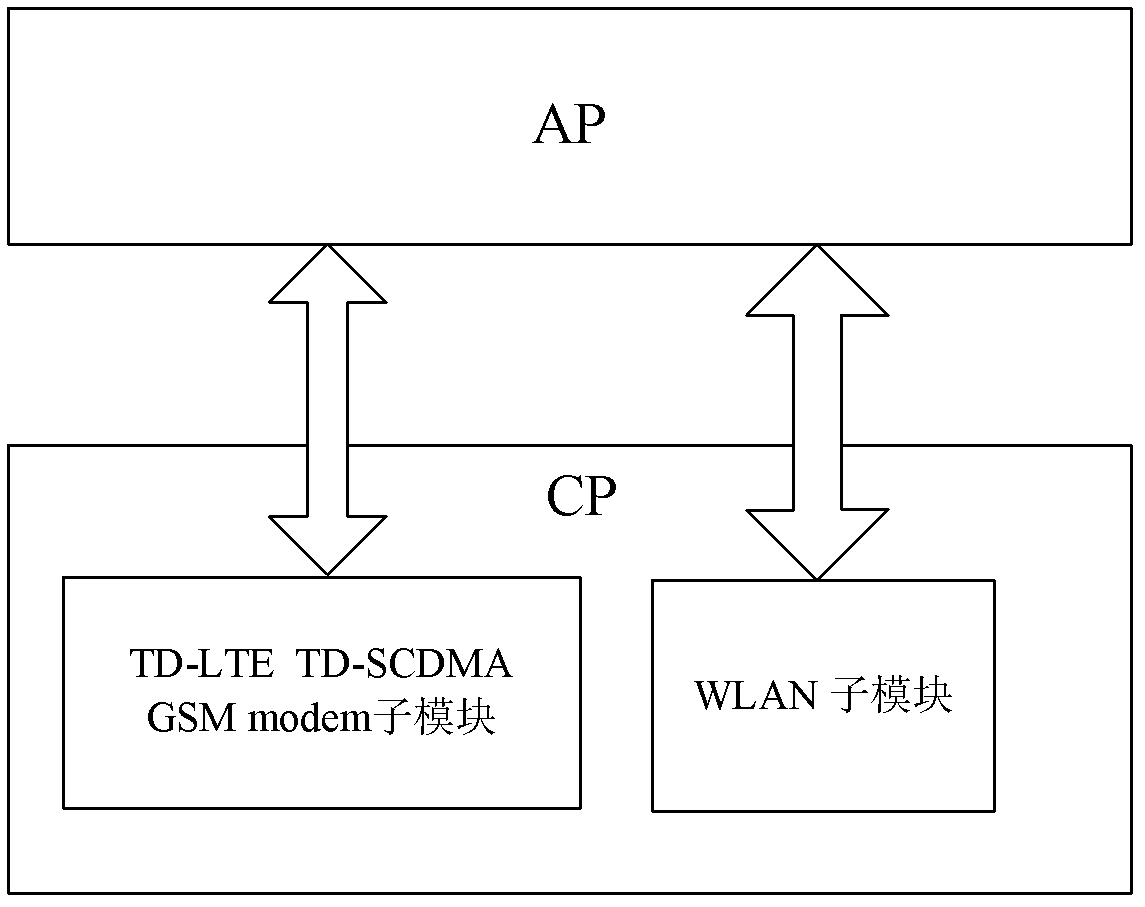

Multi-network-based data transmission method and device

Inactive; CN102573010A; Solve the problem that the network with better transmission performance cannot be used; Quality improvement; Access restriction; Access network; Heterogeneous network

The invention discloses a multi-network-based data transmission method and a multi-network-based data transmission device. The multi-network-based data transmission method comprises the following steps: when a terminal receives a data transmission request of a user, querying the load conditions of all networks in sequence according to the decreasing sequence of the transmission performances of all the networks by the terminal; when the load condition of a current network is queried to be smaller than a first load threshold, regarding the current network as an access network by the terminal; and establishing a connection between the access network and the terminal and carrying out data transmission through the access network. According to the invention, the problem that multi-mode terminals in relevant technologies cannot use a network with a superior transmission performance is solved, and the terminal can select an appropriate network according to the load conditions of the networks, so that the network access quality of the terminal is increased and the load balance among heterogeneous networks is realized.

Owner:SHANGHAI ZTE SOFTWARE CO LTD
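
The network selection step in the CN102573010A abstract above (query networks in decreasing order of transmission performance and take the first one whose load is below the first load threshold) can be sketched as below. The `Network` fields, the numeric performance score, and the function name are hypothetical; the abstract does not say how performance or load is measured.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Network:
    name: str
    performance: float  # assumption: higher means better transmission performance
    load: float         # assumption: current load as a fraction between 0 and 1


def choose_access_network(networks: List[Network],
                          first_load_threshold: float) -> Optional[Network]:
    """Return the best-performing network whose load is below the threshold."""
    for net in sorted(networks, key=lambda n: n.performance, reverse=True):
        if net.load < first_load_threshold:
            return net
    return None  # every network is at or above the threshold


candidates = [
    Network("2G", performance=1.0, load=0.10),
    Network("LTE", performance=3.0, load=0.90),
    Network("3G", performance=2.0, load=0.40),
]
chosen = choose_access_network(candidates, first_load_threshold=0.8)
```

In this example the best-performing network is skipped because it is loaded beyond the threshold, and the next-best one is selected instead.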

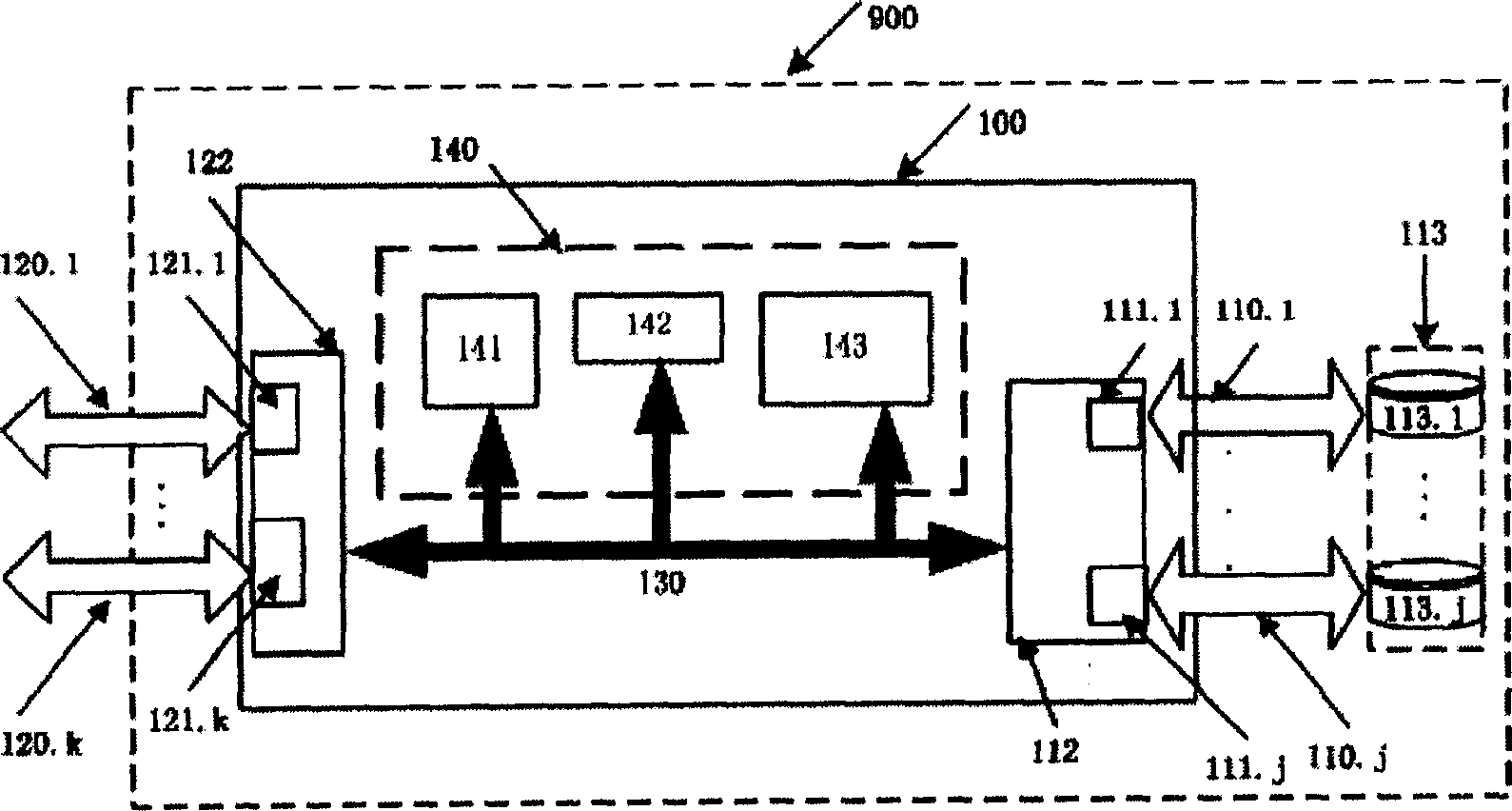

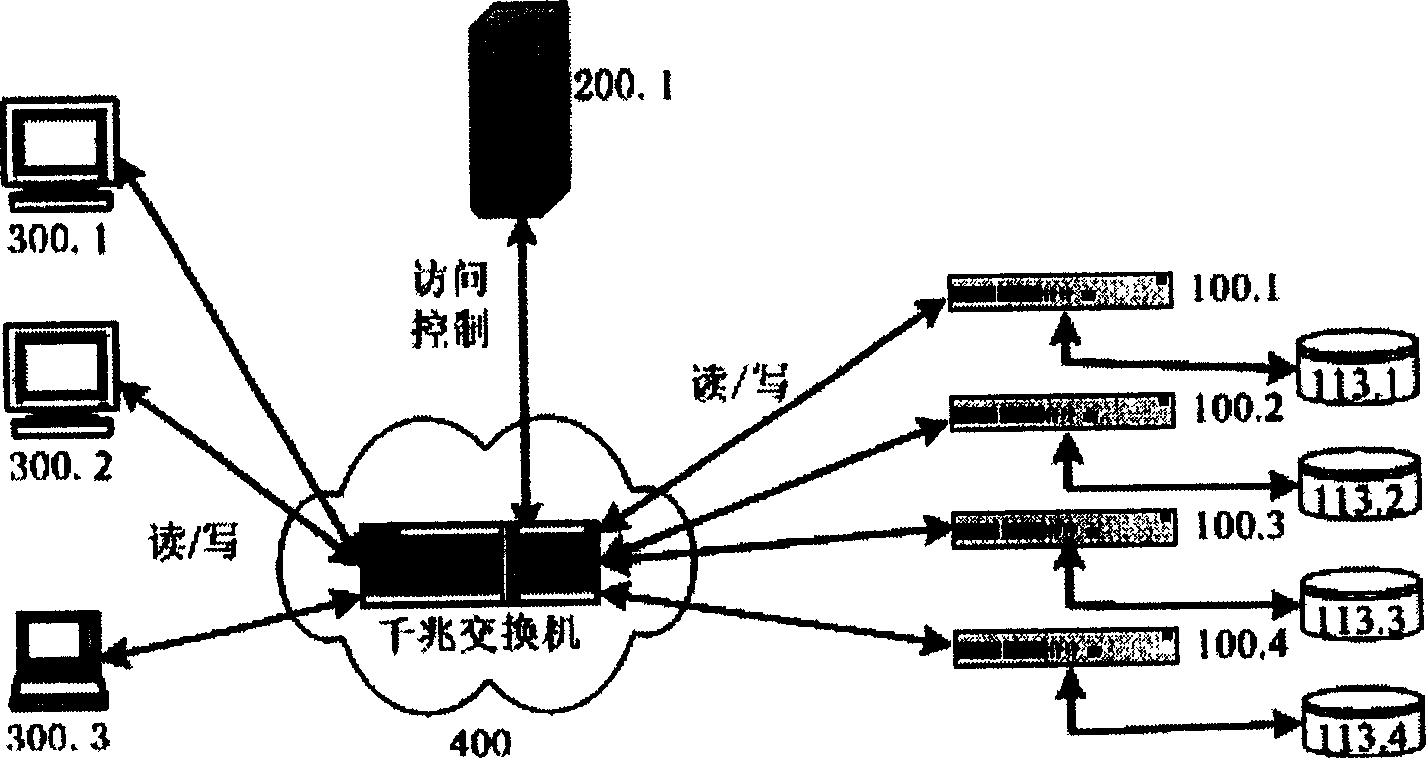

Expandable storage system and control method based on objects

Active; CN1728665A; Easy to expand; Improve scalability; Data switching by path configuration; Special data processing applications; Object based; Adaptive management

To overcome the shortcomings of the system structure and user service mode of current mass storage systems, the invention combines the file and block modes and puts forward a new object interface. The system includes I metadata servers, N object storage nodes and M clients connected to one another, and realizes three-party interaction among the clients, the object storage nodes and the servers. The system is highly expandable, with storage capacity and aggregate data transmission bandwidth growing together. Changing the traditional data management and control mode, each object storage node (OSN) performs the tedious low-level data management functions of the file system, while the metadata server (MS) manages the metadata. Based on object storage, the system realizes an adaptive management function.

Owner:HUAZHONG UNIV OF SCI & TECH

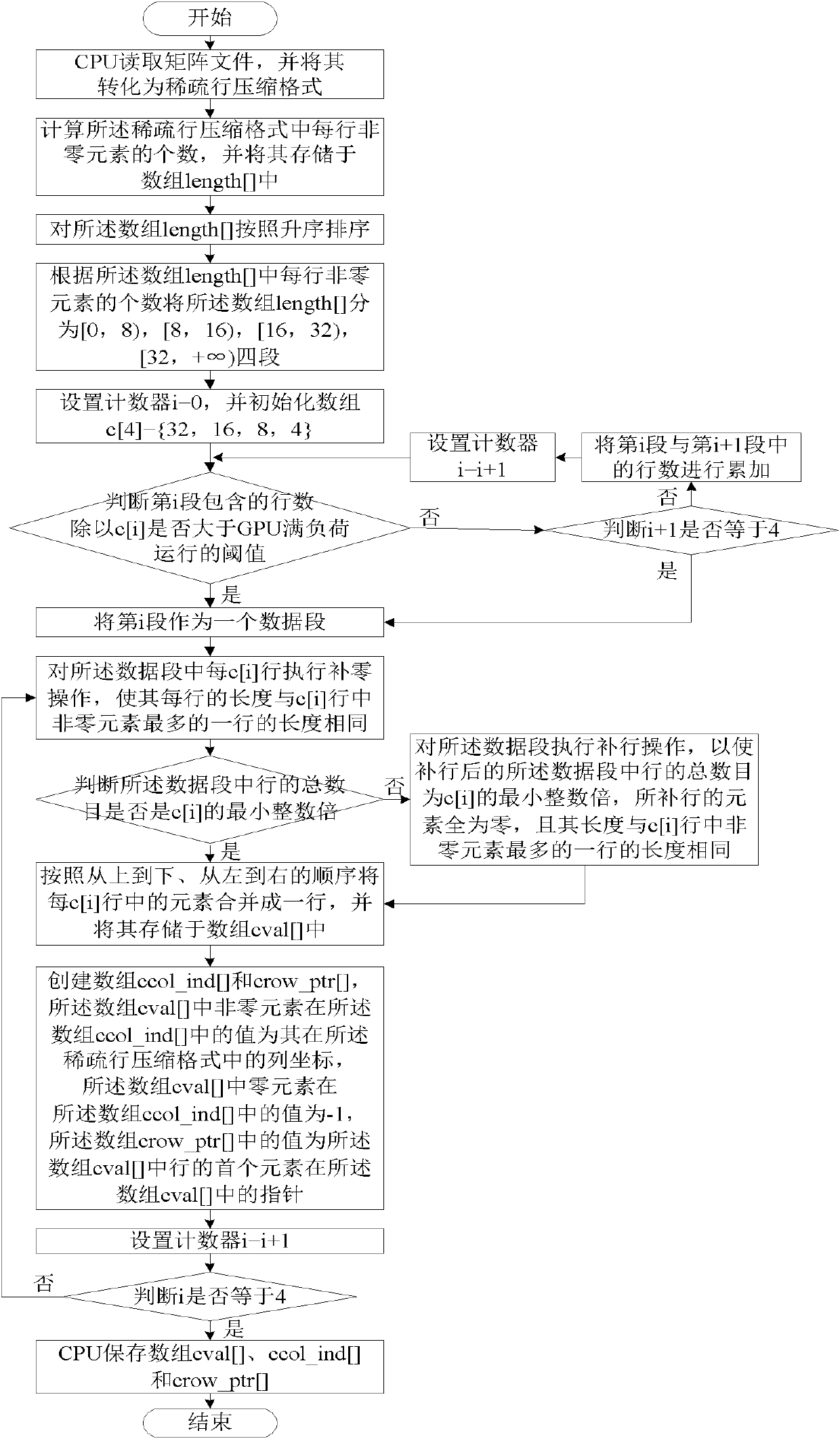

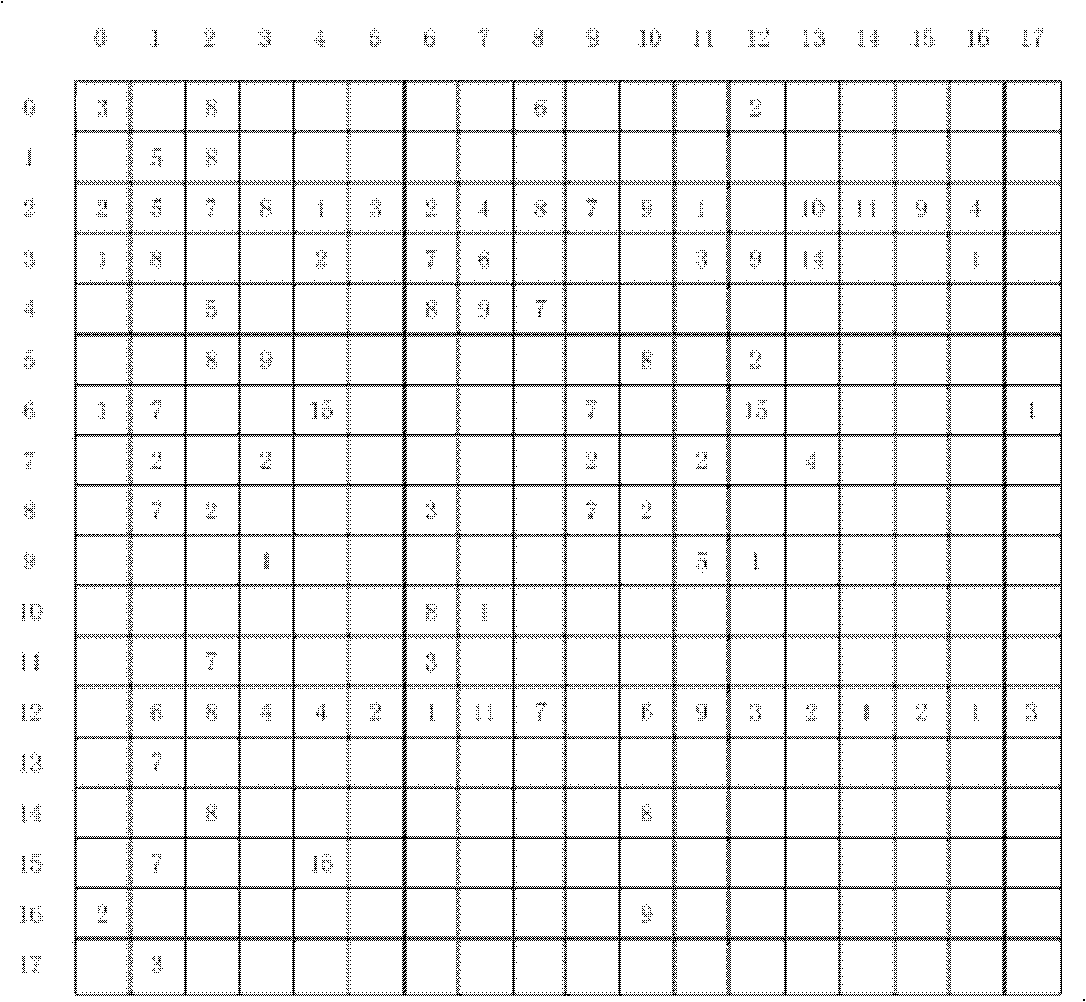

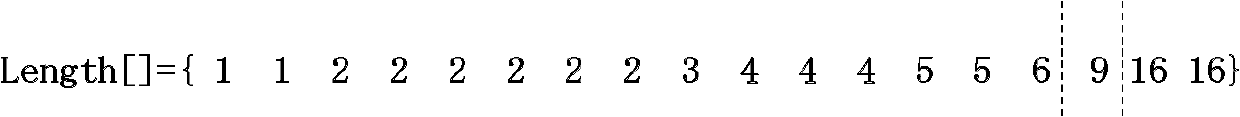

Sparse matrix data storage method based on graphics processing unit (GPU)

Inactive; CN102436438A; Reduce reduction steps; Avoid thread idling; Complex mathematical operations; Display memory; Engineering

The invention discloses a sparse matrix data storage method based on a graphics processing unit (GPU). The method comprises the following steps: 1) sorting the row length array length[] in ascending order; 2) dividing the array length[] into four segments, [0, 8), [8, 16), [16, 32) and [32, +infinity), according to the number of non-zero elements in each row, and combining every 32, 16, 8 and 4 rows, respectively, in the four segments; 3) zero-padding the rows in each data segment and performing a row filling operation on each data segment, where the elements of the filled rows are all zero; 4) generating the three one-dimensional arrays cval[], ccol_ind[] and crow_ptr[] of the SC-CSR format. In this method, segmenting reduces the variation in row length within each segment, which reduces the load imbalance between warps and thread blocks. Adjacent rows are interleaved and combined to avoid wasting warp computing resources when a row has fewer than 32 non-zero elements, to improve the efficiency of coalesced access to CUDA video memory, and to reduce the reduction steps in the compute kernel, thereby markedly improving the performance of sparse matrix-vector multiplication.

Owner:HUAZHONG UNIV OF SCI & TECH

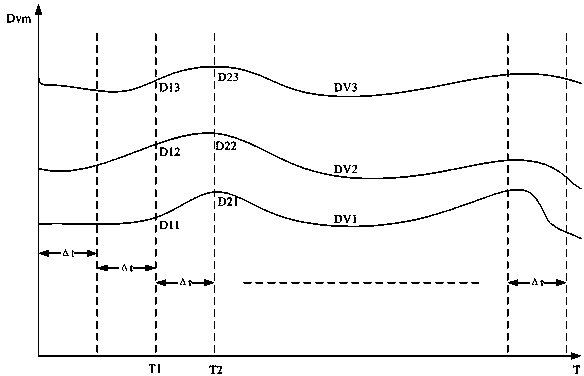

Virtual machine cluster resource allocation and scheduling method

Active; CN103473139A; Reduce resource usage; To achieve load balancing; Resource allocation; Software simulation/interpretation/emulation; Virtual machine; Resource use

The invention discloses a virtual machine cluster resource allocation and scheduling method. The method comprises the following steps: S1, seeking a load alert time point of a virtual machine from a historical load database, wherein the load alert time point is a moment when a virtual machine resource utilization Dvm of more than one virtual machine reaches an alert value ALR; S2, presetting a first time length mu, starting a physical machine at a moment mu before the load alert time point, wherein the added physical resource SN of the newly started physical machine is required to be greater than N*Mvm*A2, and migrating the virtual machine to the newly started physical machine. By using the virtual machine cluster resource allocation and scheduling method, the load alert time point is determined according to historical data, and the physical machine is started in advance to perform shunt migration on the virtual machine to guarantee that the resource utilization rate of each virtual machine is reduced in advance; the physical machine is started in advance and the virtual machine is smoothly migrated, so that the purpose of load balance is achieved.

Owner:SICHUAN ZHONGDIAN AOSTAR INFORMATION TECHNOLOGIES CO LTD
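
Steps S1 and S2 of the CN103473139A abstract above can be illustrated with a small sketch. It reads "more than one virtual machine" literally (at least two VMs at or above the alert value ALR), and the shape of the historical load data is an assumption. The capacity condition on the newly started physical machine (SN greater than N*Mvm*A2) is not modelled, because the abstract does not define those symbols.

```python
def find_alert_times(history, alr, min_vms=2):
    """Return sorted time points at which at least `min_vms` VMs reach `alr`.

    `history` is a hypothetical stand-in for the historical load database:
    a dict mapping a time point to the list of per-VM utilization values.
    """
    return sorted(
        t for t, utilizations in history.items()
        if sum(u >= alr for u in utilizations) >= min_vms
    )


def prestart_time(alert_time, mu):
    """Start the extra physical machine a preset time length `mu` earlier."""
    return alert_time - mu


history = {
    10: [0.50, 0.60],
    20: [0.90, 0.95],  # two VMs at or above the alert value
    30: [0.90, 0.20],  # only one VM at or above the alert value
}
alerts = find_alert_times(history, alr=0.85)
starts = [prestart_time(t, mu=5) for t in alerts]
```

Starting the machine ahead of the predicted alert point leaves time to migrate virtual machines before utilization actually reaches the alert value.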

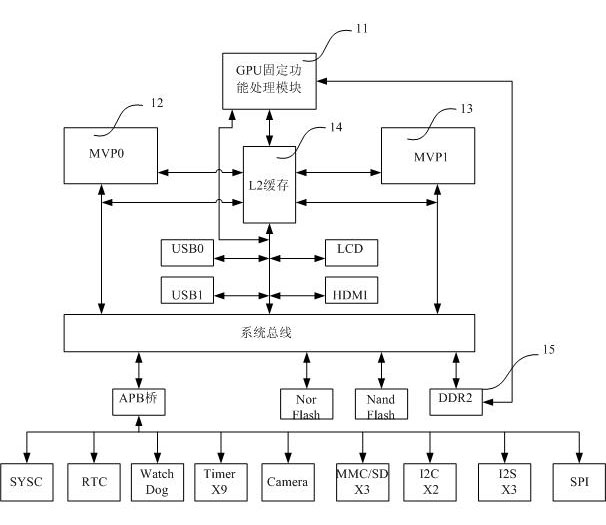

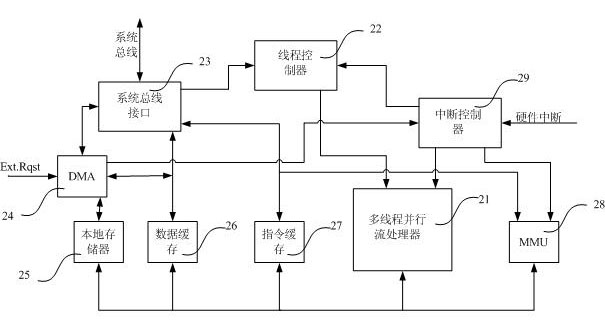

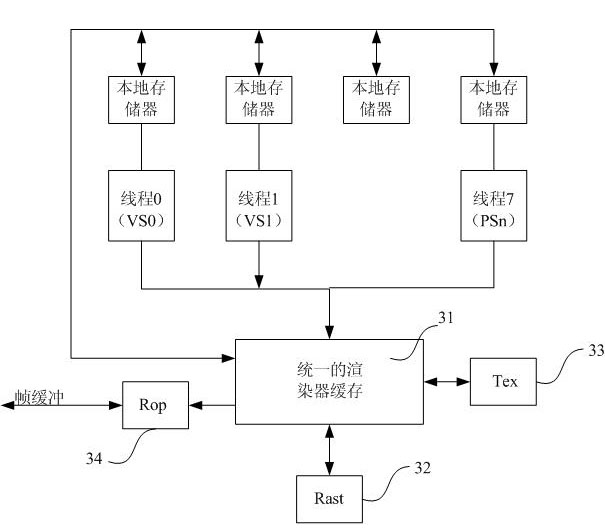

Multithreading processor realizing functions of central processing unit and graphics processor and method

Active; CN102147722A; The usable area is small; To achieve load balancing; Resource allocation; Concurrent instruction execution; Fixed-function; Graphics

Owner:SHENZHEN ZHONGWEIDIAN TECH

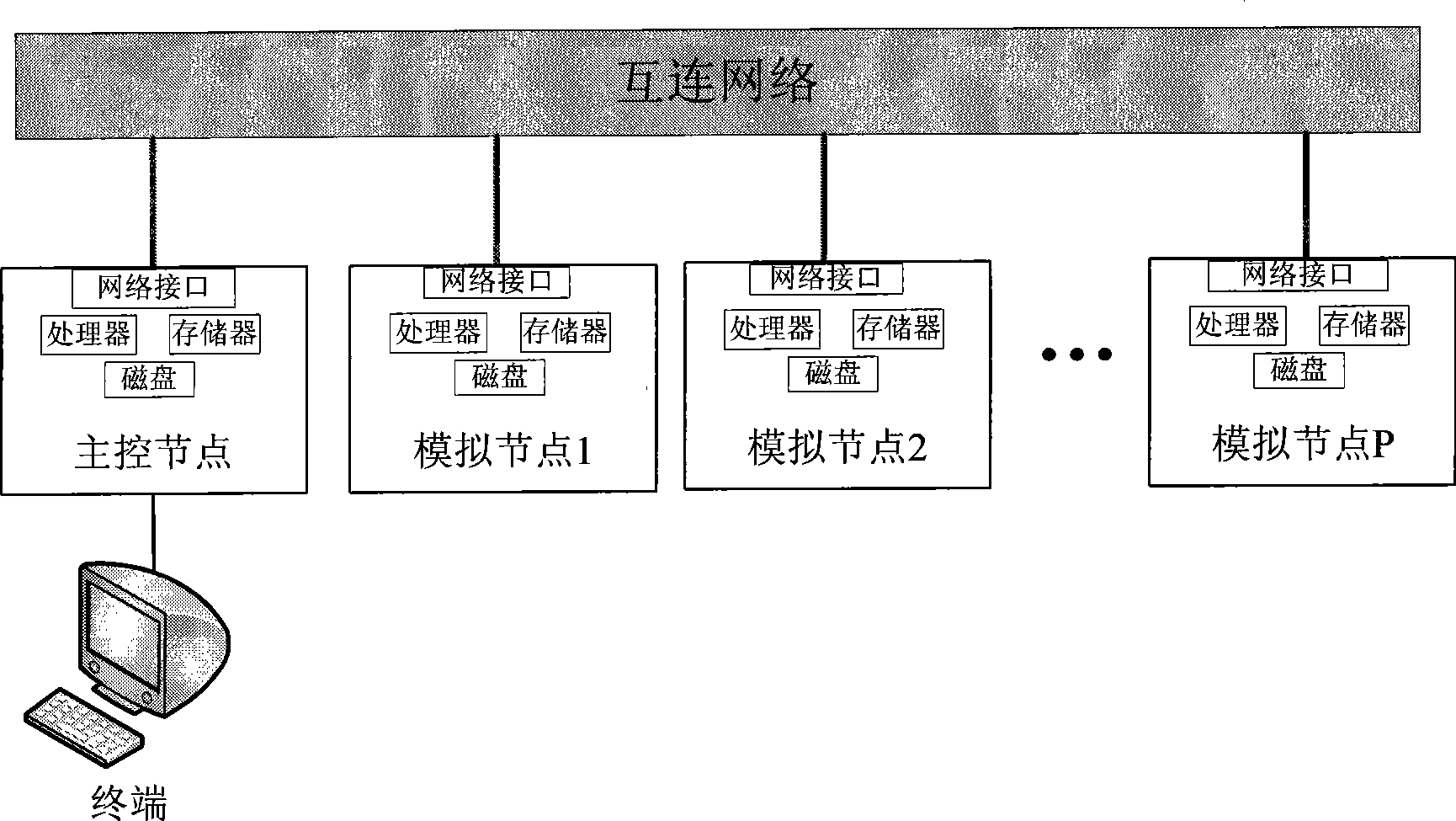

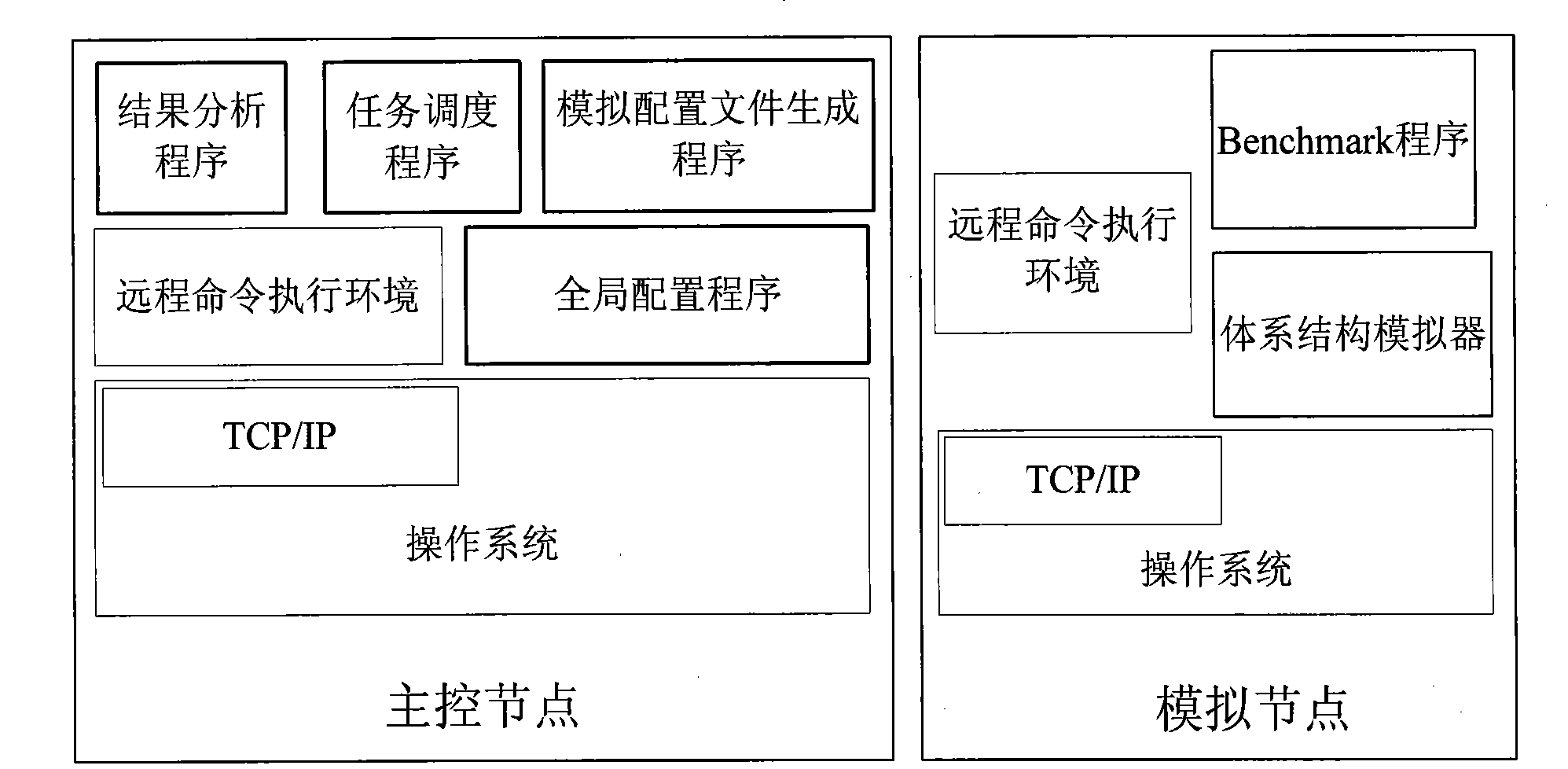

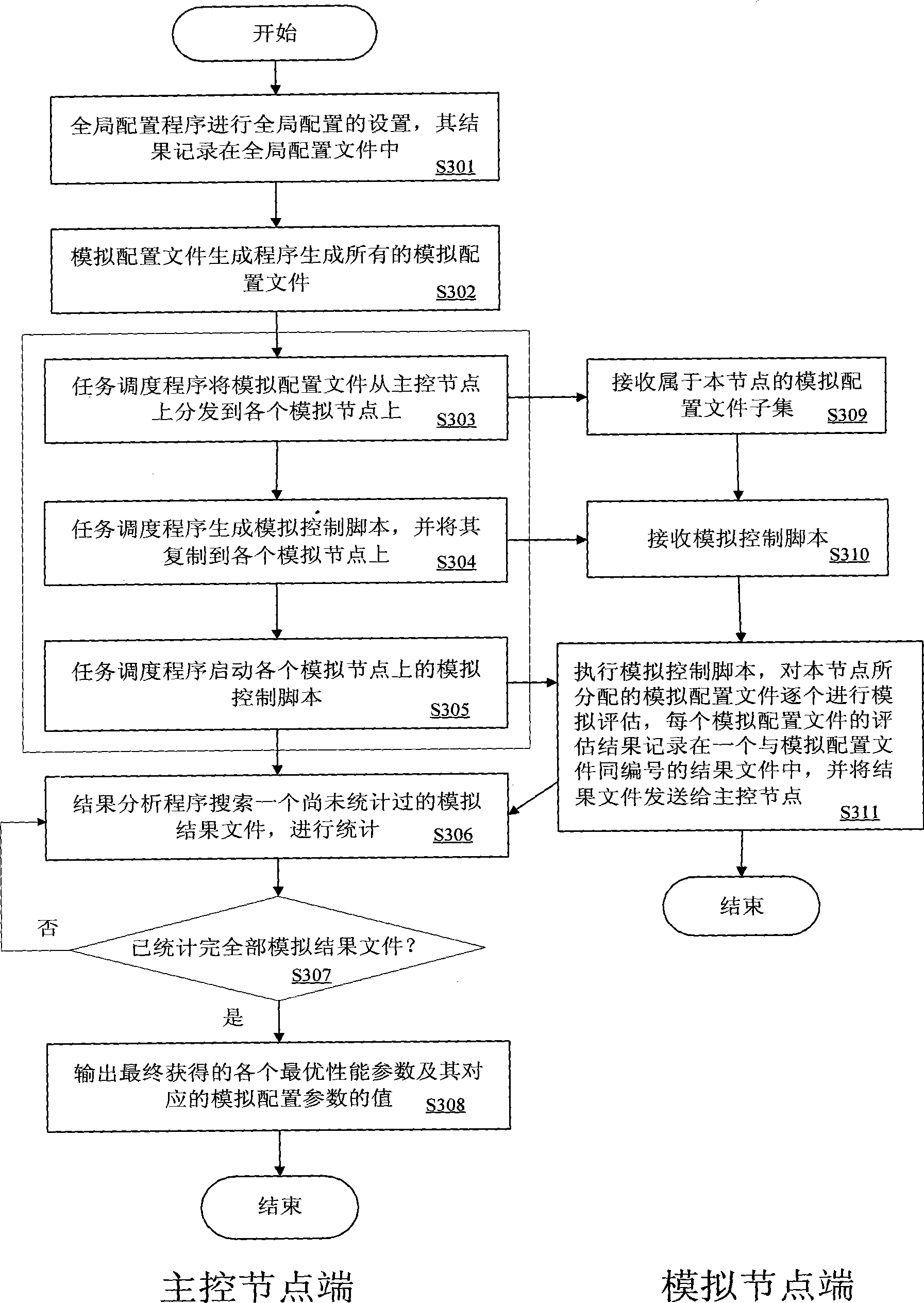

Computer architecture scheme parallel simulation optimization method based on cluster system

Inactive; CN101464922A; Realize automatic generation; Realize automatic scheduling; Digital computer details; Software simulation/interpretation/emulation; Cluster systems; Computerized system

The invention discloses a parallel method for simulating and optimizing the computer architecture scheme based on a cluster system, and aims to provide a parallel method for simulating and optimizing the design scheme of the computer architecture. The technical scheme is that a parallel computer system which consists of a main control node and simulation nodes and is provided with a remote command execution environment is firstly built, and a global configuration program, a simulated configuration file generating program, a task dispatching program and a result analyzing program are arranged on the main control node, wherein, the global configuration program is used for arranging global configuration; the simulated configuration file generating program is used for generating all simulated configuration files; the task dispatching program distributes simulation evaluation tasks to each node, controls each simulation node and performs simulation evaluation; and the result analyzing program searches simulation result files sent from the simulation nodes for statistics, screens out optimal configuration parameter values, and outputs a report. By adopting the invention, the time for evaluation and optimization can be reduced, and the selection accuracy is improved.

Owner:NAT UNIV OF DEFENSE TECH

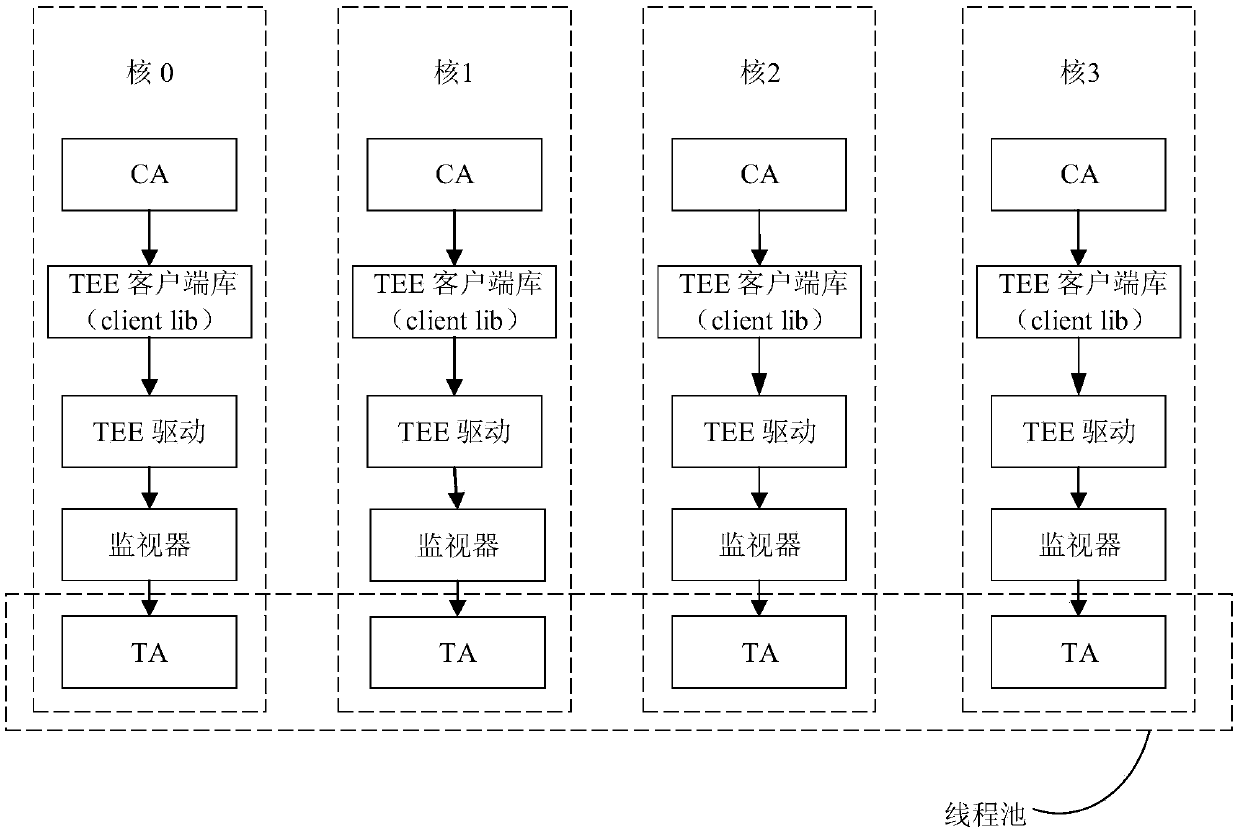

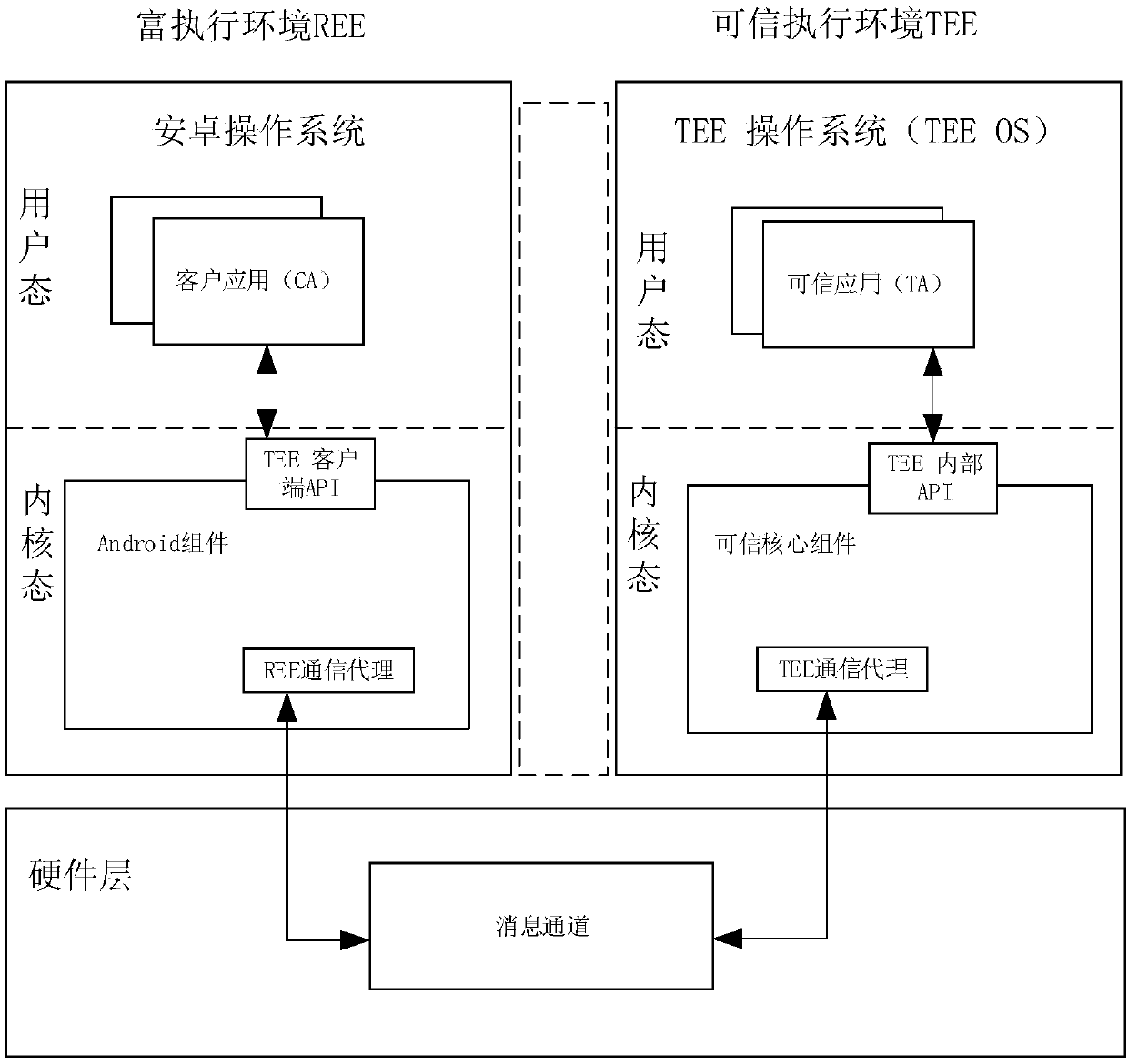

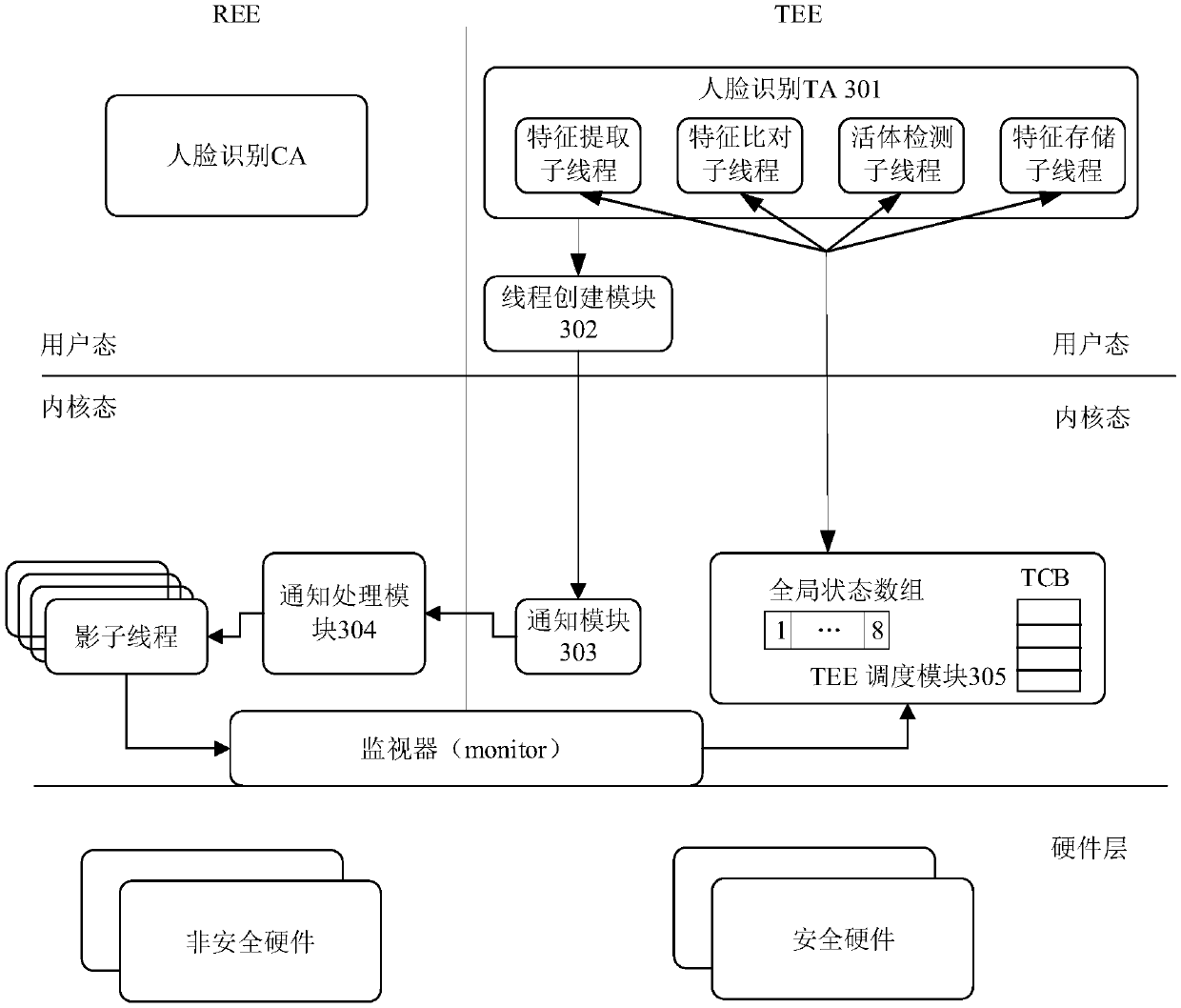

Method, device and system for realizing multi-core parallel at TEE side

Active; CN109960582A; Implement the call; Improve performance; Resource allocation; Internal/peripheral component protection; Parallel computing; Computerized system

The invention provides a method and a device for realizing multi-core parallel at a TEE side, a computer system and the like. The method comprises the following steps: a TEE creates a plurality of sub-threads, wherein the sub-threads are used for realizing sub-functions of a TA deployed at a TEE side; for each sub-thread, the TEE triggers a rich execution environment (REE) to generate a shadow thread corresponding to the sub-thread, and the operation of the shadow thread promotes a core running the shadow thread to enter the TEE; and the TEE schedules the created sub-thread to the core where the corresponding shadow thread is located for execution. By utilizing the method, a plurality of service logics in the service with high performance requirements can be operated in parallel in the TEE, and the TEE triggers the REE to generate the thread and automatically enters the TEE side, so that active kernel adding at the TEE side is realized, and the parallel flexibility of the TEE side is improved.

Owner:HUAWEI TECH CO LTD

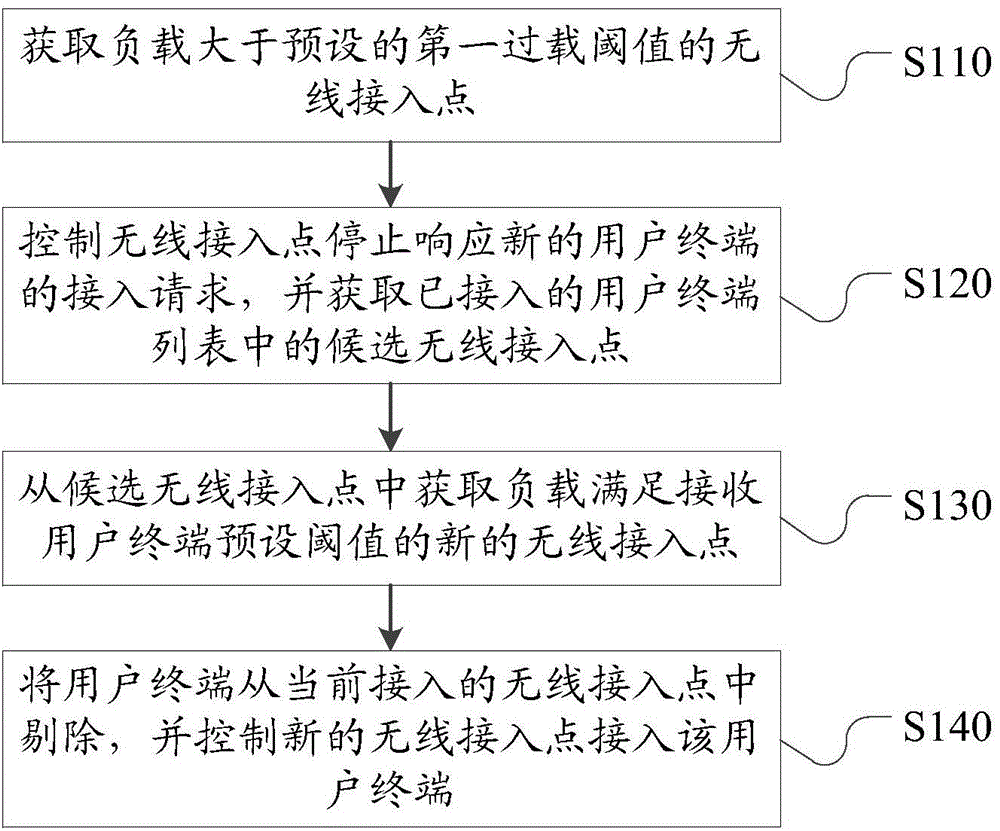

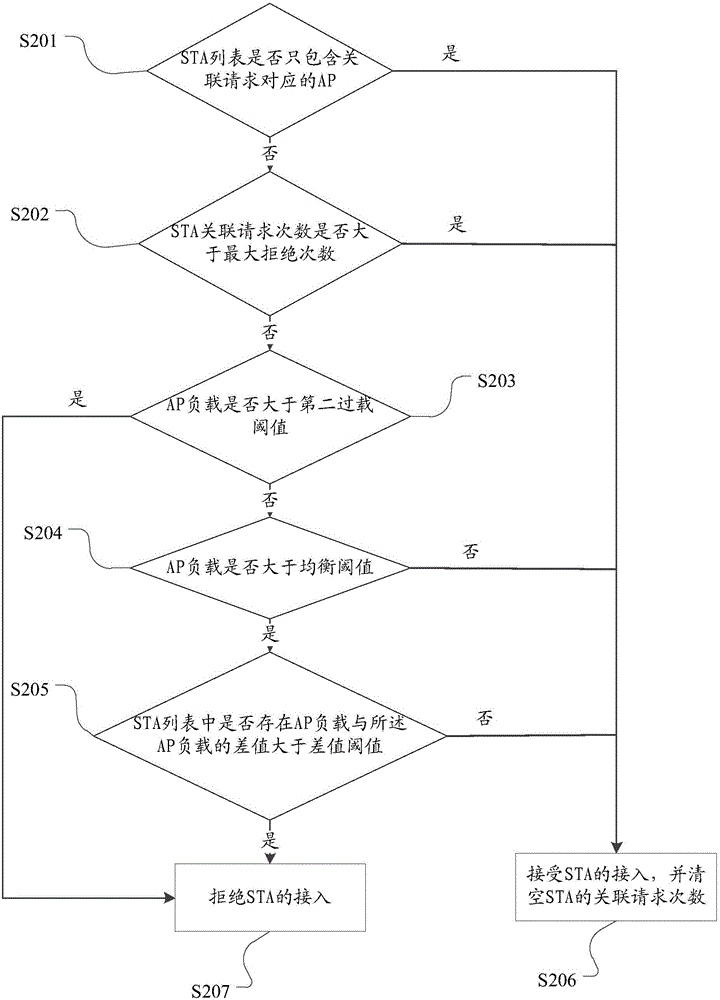

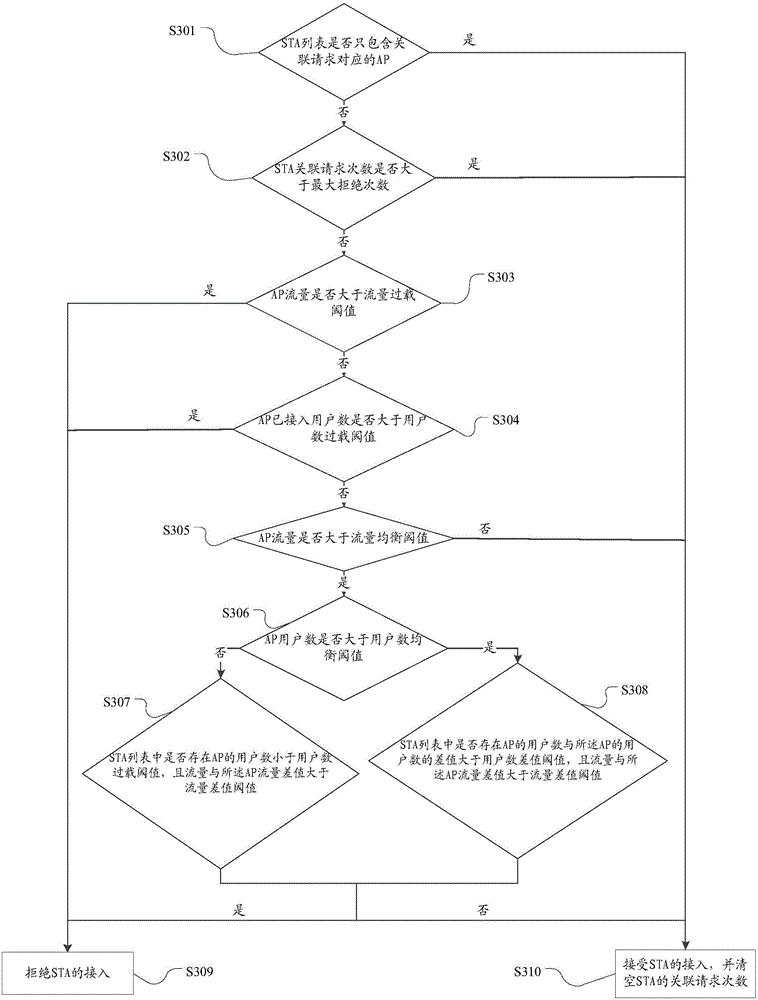

Load balancing method of concentrated-type wireless local area network and access controller

Active; CN104023357A; Load adjustment in time; To achieve load balancing; Network traffic/resource management; Access restriction; Structure of Management Information; Computer terminal

The invention provides a load balancing method of a concentrated-type wireless local area network and an access controller. The method includes the steps of obtaining a wireless access point with a load larger than a preset first overload threshold value, controlling the wireless access point to stop responding to an access request of a new user terminal, obtaining a candidate wireless access point in an accessed user terminal list which records information of correlated candidate wireless access points of user terminals, obtaining a new wireless access point with a load meeting a preset threshold value of the new user terminal from the candidate wireless access point, removing the user terminal from the wireless access point accessed currently and controlling the new wireless access point to have access to the user terminal. When a network topological structure is changed, loads of APs are adjusted in time, and loads in the whole network are balanced.

Owner:COMBA TELECOM SYST CHINA LTD

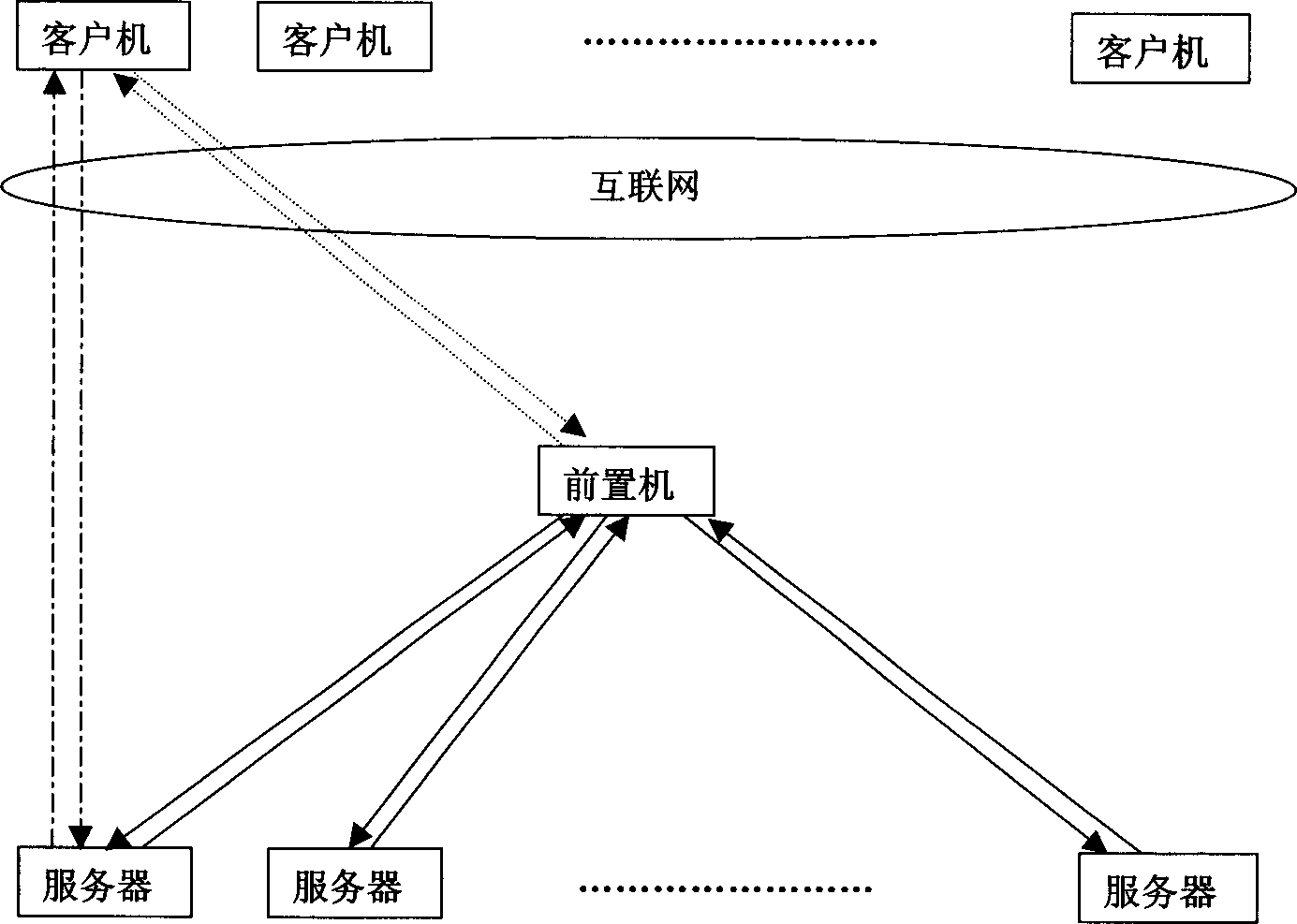

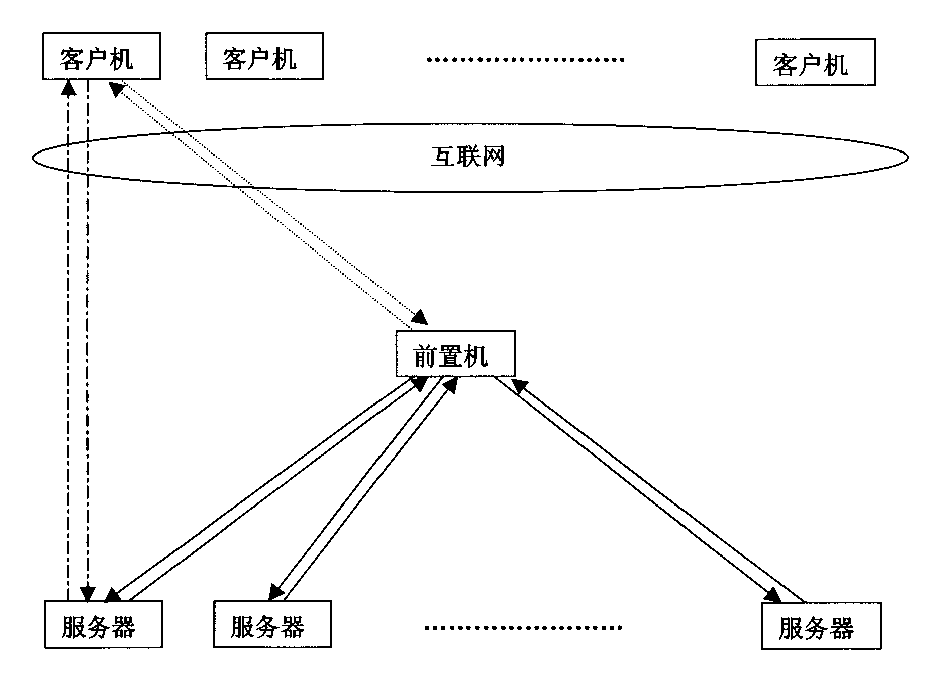

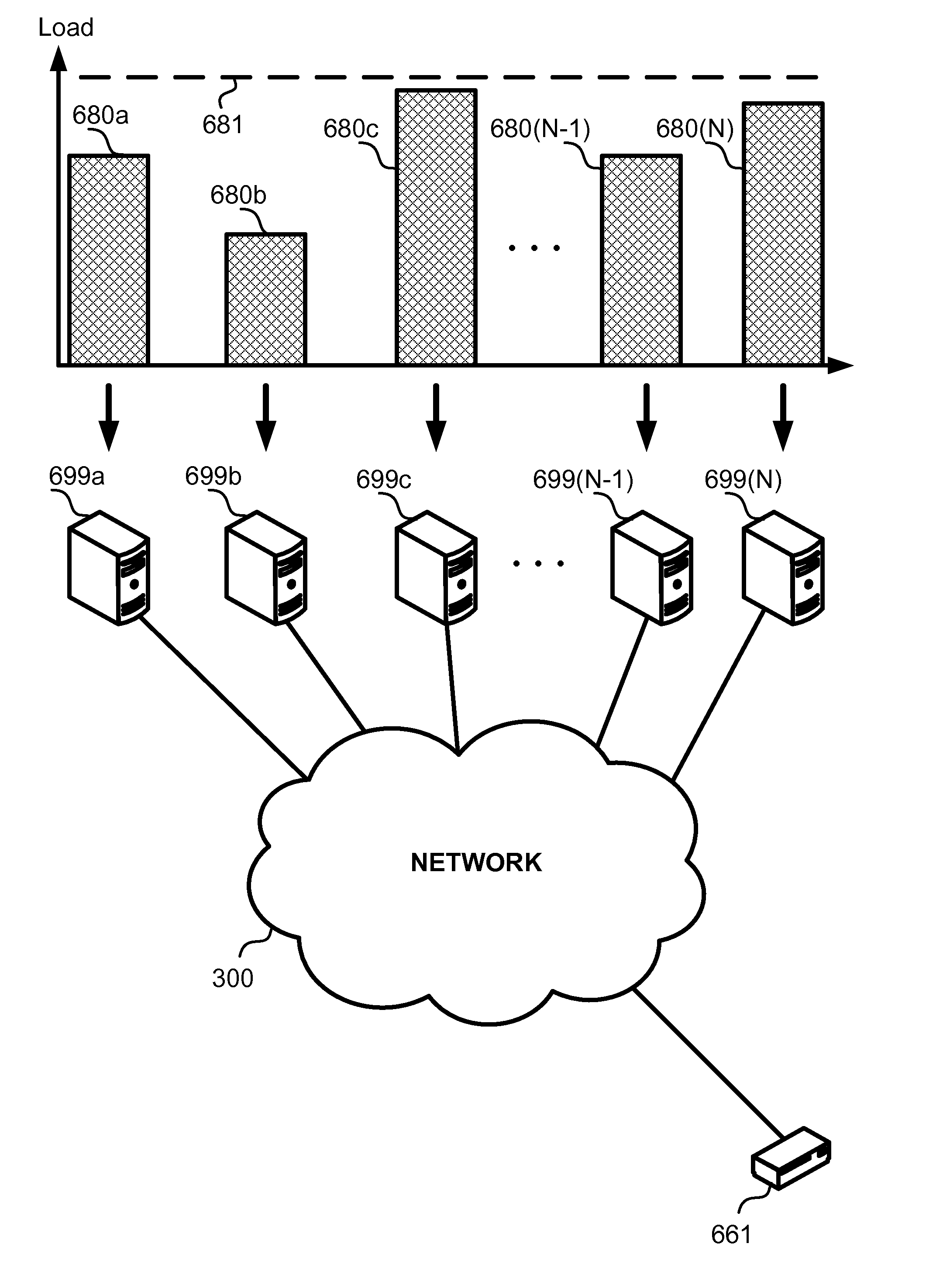

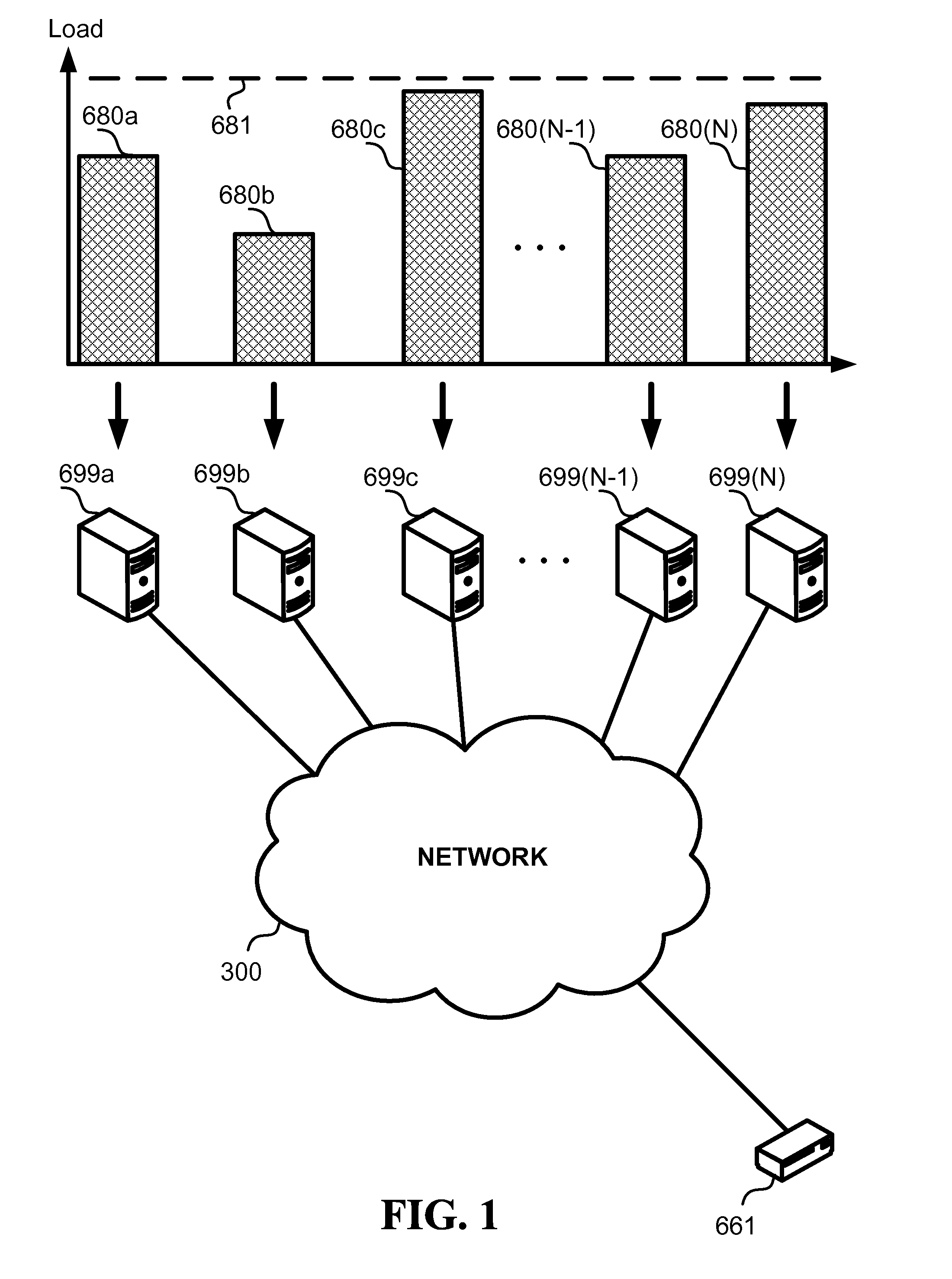

Interactive load balancing method and system for multiple client terminals

Inactive; CN1367439A; To achieve load balancing; Make full use of network bandwidth; Program control; Memory systems; Client-side; Local area network

The invention discloses an interactive load balancing method and system for multiple client terminals. It is implemented with several client terminals, a process front-end and several logic operation servers and the connections between them. The system load is distributed over several logic operation servers, and the number of servers can be increased or reduced according to the load. The state information of every logic operation server is stored in the process front-end, and a client can directly create a connection with the corresponding logic operation server according to the indication of the process front-end. The process front-end can be connected with every logic operation server by means of a local area network or the Internet. The invention achieves load balancing, and because its logic operation servers can be deployed separately, it can make full use of network bandwidth.

Owner:SNAIL GAMES

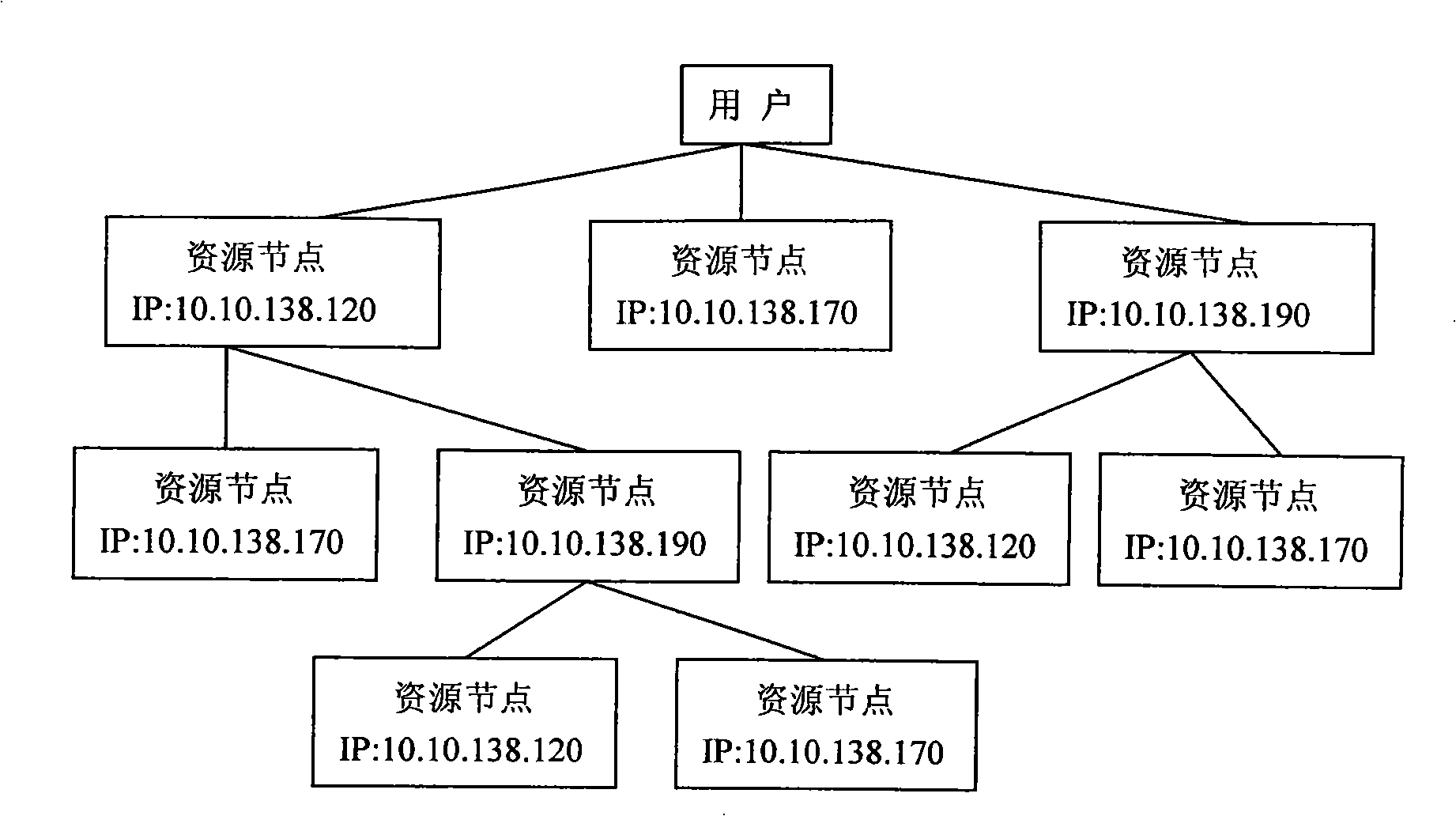

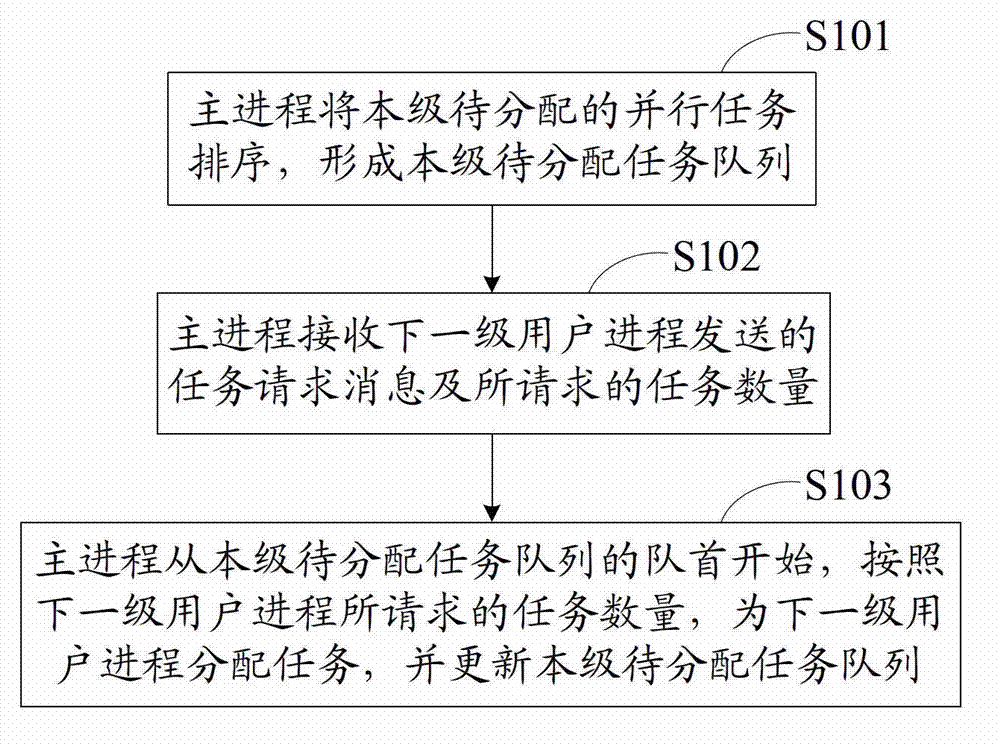

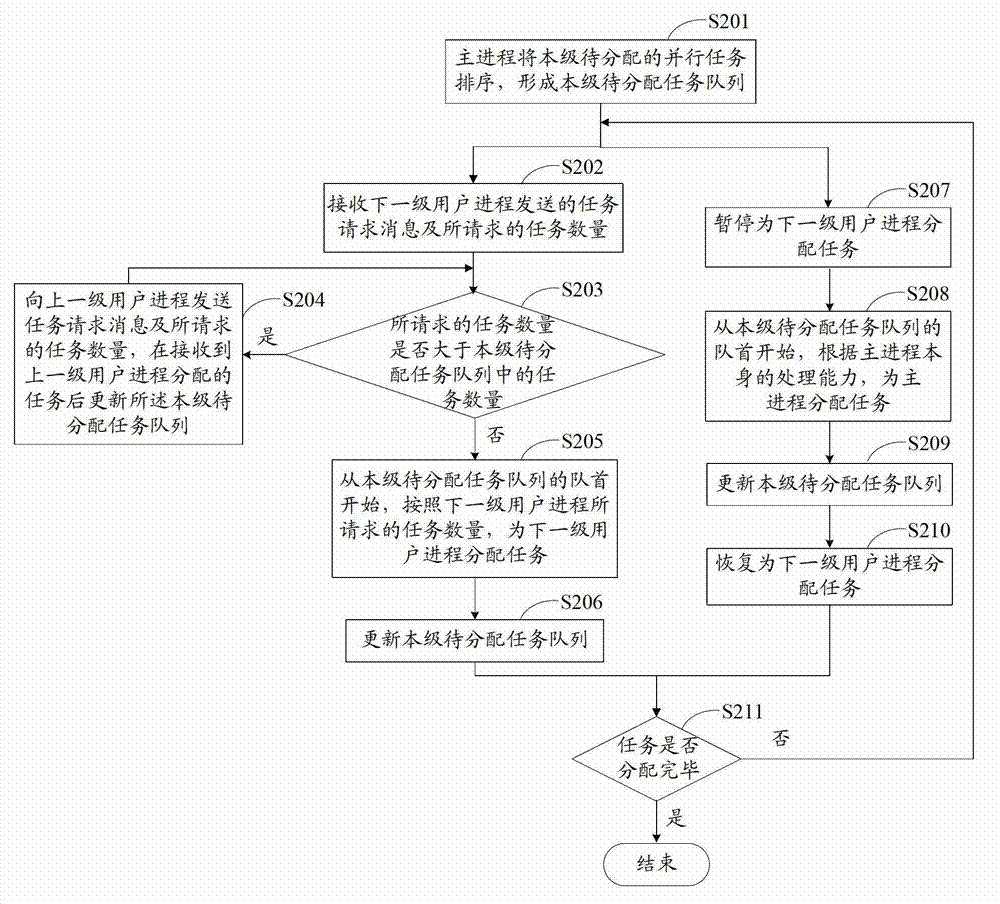

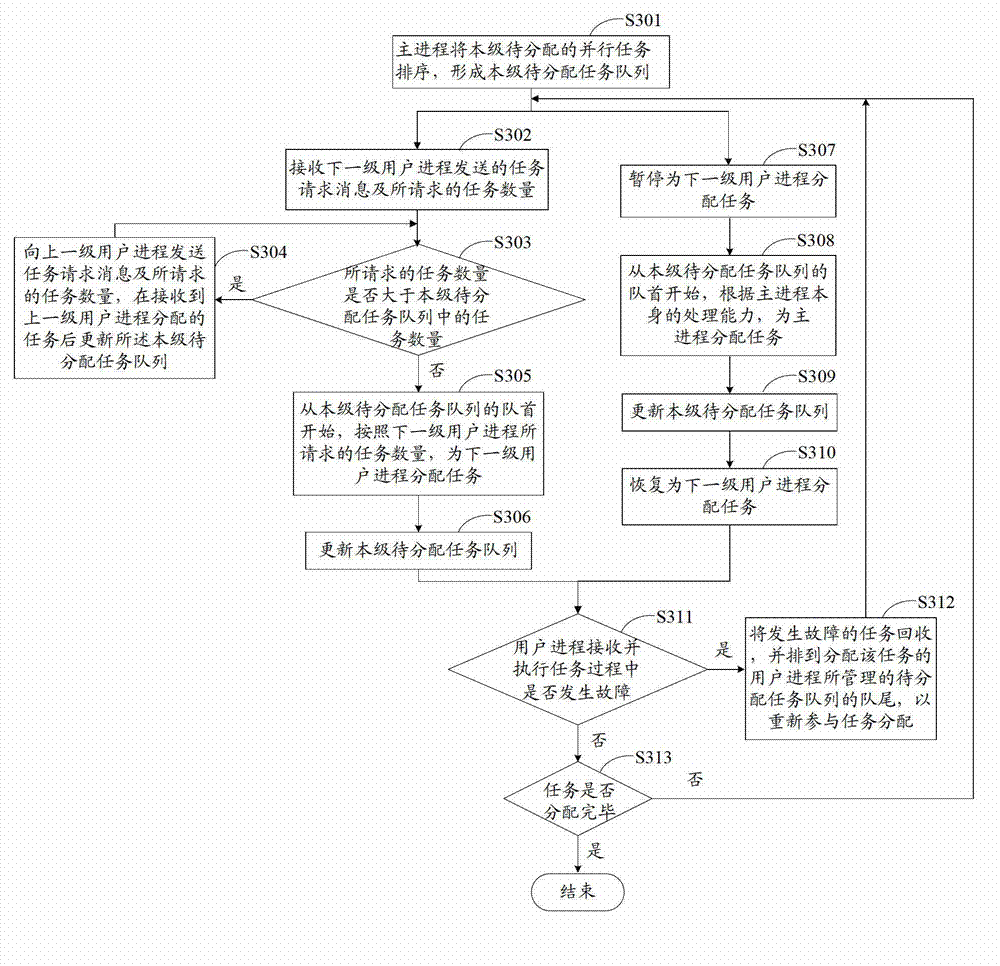

Parallel task dynamical allocation method

Active; CN102929707A; Cutting costs; To achieve load balancing; Resource allocation; Computational resource; Distributed computing

The invention discloses a parallel task dynamical allocation method which is suitable for a parallel system including a multilevel user process. The multilevel user process comprises at least two levels of main processes and common processes. The method includes that the main processes arrange parallel tasks to be allocated to form task queues to be allocated at the local level, task request information and the number of requested tasks sent by a next level of user processes are received, beginning from heads of the task queues to be allocated at the local level, the tasks are allocated to a next level of user processes according to the number of the requested tasks of the next level of user processes, and the task queues to be allocated at the local level are updated. The parallel task dynamical allocation method has the advantages that the efficiency of dynamical task allocation can be improved, and the load balance among mass computing resources is achieved.

Owner:JIANGNAN INST OF COMPUTING TECH
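
The allocation step in the CN102929707A abstract above (a main process serves task requests from the head of its local queue, by requested count) can be sketched as follows. The class name, the two-level example, and the choice to hand out fewer tasks when the queue runs short are assumptions; the abstract does not describe the messaging between levels.

```python
from collections import deque


class MainProcess:
    """Holds the local to-be-allocated task queue for one level (hypothetical)."""

    def __init__(self, tasks):
        self.queue = deque(tasks)

    def handle_request(self, requested):
        # Allocate from the head of the queue, up to the requested number,
        # which also updates the local queue.
        count = min(requested, len(self.queue))
        return [self.queue.popleft() for _ in range(count)]


# Two-level example: a mid-level main process pulls a batch from the top
# level, then serves a common process from its own local queue.
top = MainProcess(range(10))
mid = MainProcess(top.handle_request(4))
worker_batch = mid.handle_request(3)
```

Because lower levels pull work only when they ask for it, faster processes naturally request and receive more tasks.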

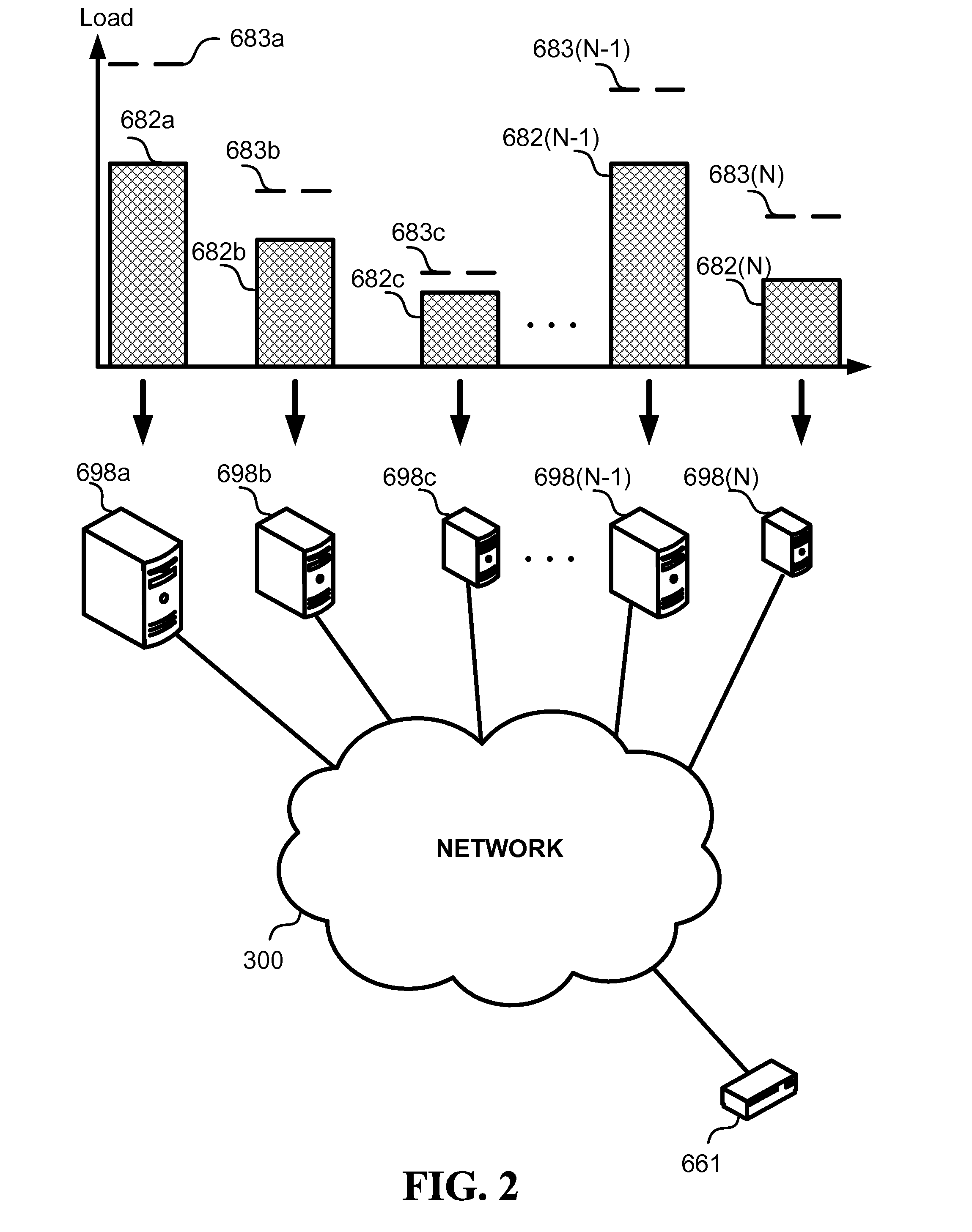

Balancing a distributed system by replacing overloaded servers

Active; US20100095004A1; Increase their current fragment delivery throughput; To achieve load balancing; Other decoding techniques; Code conversion; Erasure code; Distributed computing

Load-balancing a distributed system by replacing overloaded servers, including the steps of retrieving, by an assembling device using a fragment pull protocol, erasure-coded fragments associated with segments, from a set of fractional-storage servers. Occasionally, while retrieving the fragments, identifying at least one server from the set that is loaded to a degree requiring replacement, and replacing, using the fragment pull protocol, the identified server with a substitute server that is not loaded to the degree requiring replacement. Wherein the substitute server and the remaining servers of the set are capable of delivering enough erasure-coded fragments in the course of reconstructing the segments.

Owner:XENOGENIC DEV LLC

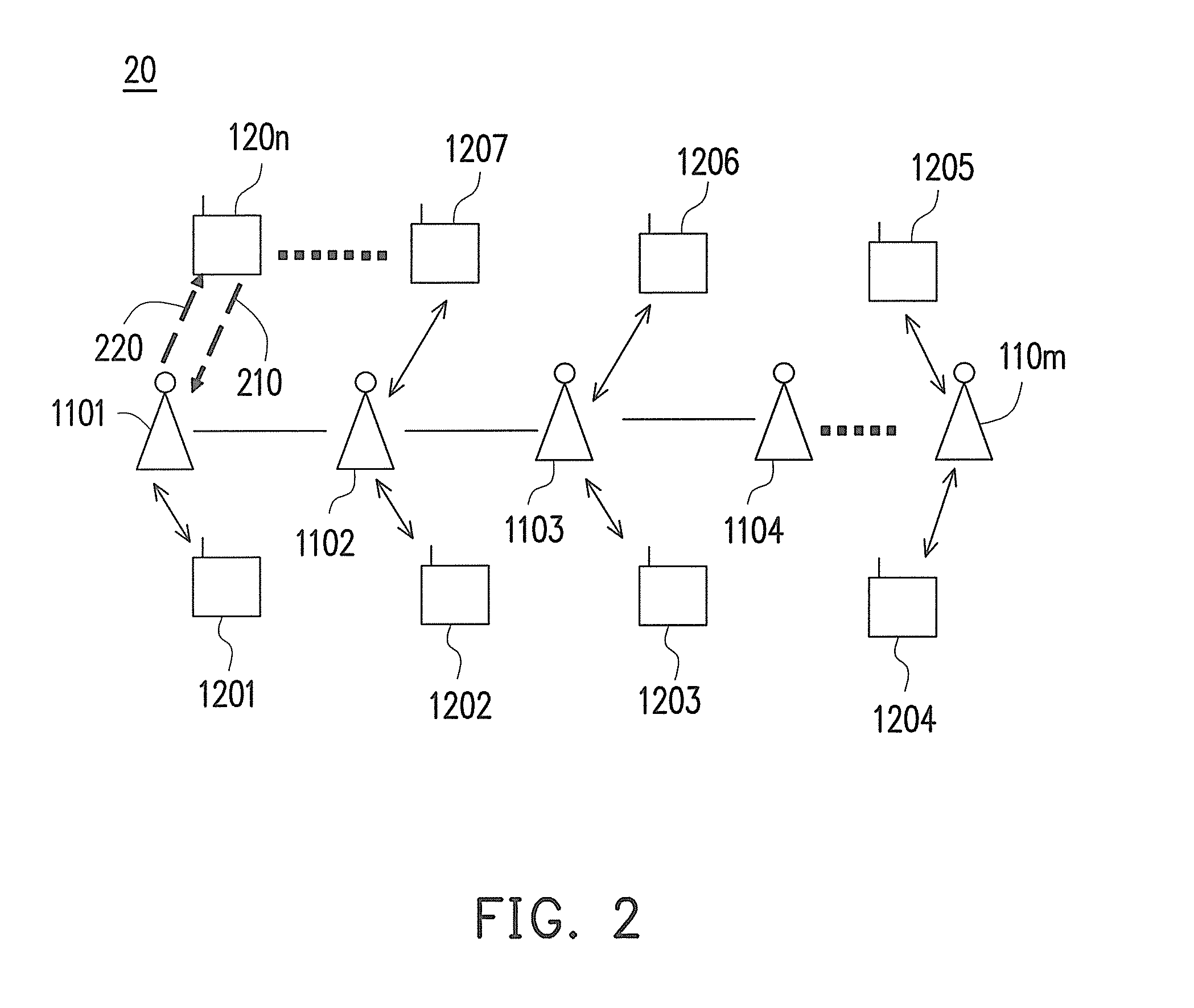

Wireless network system and wireless access point device and wireless terminal device thereof

Active; US20120020339A1; Unnecessary transmission; Reduce bandwidth waste; Error prevention; Frequency-division multiplex details; Low load; Terminal equipment

A wireless network system and a wireless access point (AP) device and a wireless terminal device thereof are provided. The wireless network system includes at least a wireless AP device and at least a wireless terminal device. Each wireless AP device broadcasts a beacon including a load state content of the wireless AP device. Each wireless terminal device receives beacons of all wireless AP devices, and ranks load states of all wireless AP devices in a load list according to at least CPU utilization rates in the load state contents of all wireless AP devices respectively. When a wireless terminal device intends to establish a connection with one of the wireless AP devices, the wireless terminal device searches through the load list to select a wireless AP device being in a low load state, and transmits a connection request message to the selected wireless AP device.

Owner:GEMTEK TECH CO LTD
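
The terminal-side ranking in the US20120020339A1 abstract above can be sketched as below. The abstract says the load list is ranked by at least the CPU utilization rate and that a low-load AP is selected; using CPU utilization alone, picking the single lowest entry, and the beacon dictionary layout are all assumptions for illustration.

```python
def build_load_list(beacons):
    """Rank APs from lowest to highest CPU utilization.

    `beacons` is a hypothetical mapping: AP name -> load state content,
    where the load state content is a dict with a "cpu_utilization" value.
    """
    return sorted(beacons.items(), key=lambda item: item[1]["cpu_utilization"])


def pick_ap(beacons):
    """Return the name of the least-loaded AP, or None if no beacon was heard."""
    load_list = build_load_list(beacons)
    return load_list[0][0] if load_list else None


beacons = {
    "ap-lobby": {"cpu_utilization": 0.70},
    "ap-hall": {"cpu_utilization": 0.20},
    "ap-lab": {"cpu_utilization": 0.45},
}
target = pick_ap(beacons)  # the terminal would send its connection request here
```

Since the load information arrives in beacons, the terminal can choose an AP before sending any connection request.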

Resource load balancing method and device in distributed system

Inactive; CN106534284A; To achieve load balancing; Improve accuracy; Transmission; Occupancy rate; Computer network technology

The invention provides a resource load balancing method and device in a distributed system, belonging to the technical field of computer networking technology, wherein the distributed system comprises a preset number of servers. The resource load balancing method in a distributed system comprises the following steps: obtaining a task to be processed which is processed by a preset algorithm; confirming the amount of the algorithm resources required while the preset algorithm processes the task; confirming the occupancy rate of the current resources corresponding to each server according to the amount of resources used by each server and the amount of resources of the algorithm; and confirming the target server to process the task according to the occupancy rate of the current resources corresponding to each server, and assigning the task to the target server. The invention provides a resource load balancing method and device in a distributed system, and by means of the resource load balancing method and device in a distributed system, the accuracy of load balancing is enhanced.

Owner:SPACE STAR TECH CO LTD
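
The server selection in the CN106534284A abstract above can be sketched under one stated assumption: the abstract does not give the occupancy formula, so the sketch takes the occupancy rate to be (resources already used + resources the algorithm needs) divided by the server's capacity, and picks the server with the lowest resulting rate. Function names and the data layout are hypothetical.

```python
def occupancy_after(used, required, capacity):
    # Assumed formula; the abstract only says the rate is derived from the
    # resources used by the server and the resources the algorithm requires.
    return (used + required) / capacity


def pick_server(servers, required):
    """Pick the server with the lowest projected occupancy that still fits.

    `servers` maps a server name to a (used, capacity) tuple.
    """
    rates = {
        name: occupancy_after(used, required, capacity)
        for name, (used, capacity) in servers.items()
    }
    feasible = {name: rate for name, rate in rates.items() if rate <= 1.0}
    if not feasible:
        return None  # no server can host the task
    return min(feasible, key=feasible.get)


servers = {"s1": (6, 10), "s2": (10, 20), "s3": (9, 10)}
target = pick_server(servers, required=2)
```

Including the algorithm's own resource demand in the rate means the decision reflects the load a server would carry after taking the task, not just its load beforehand.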

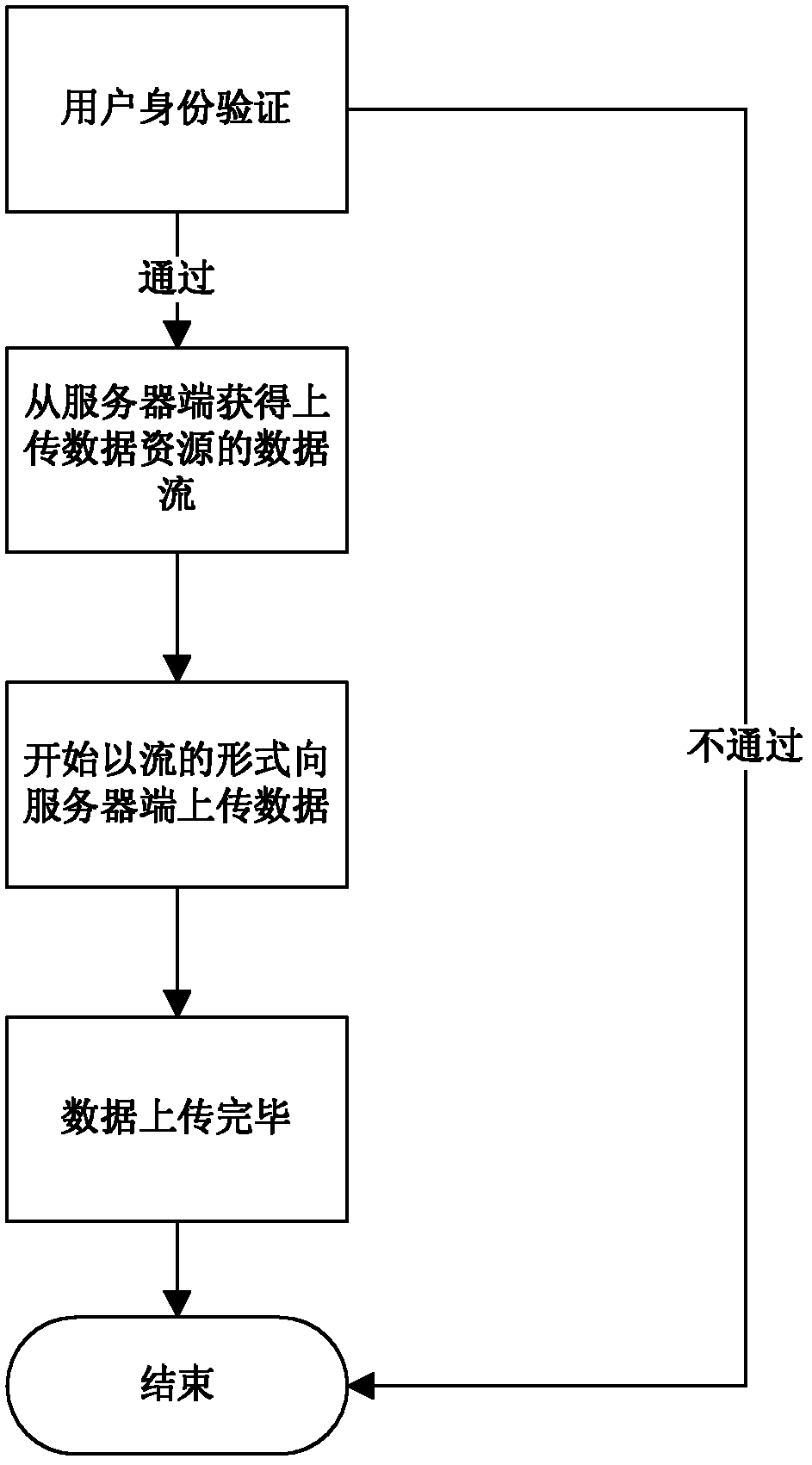

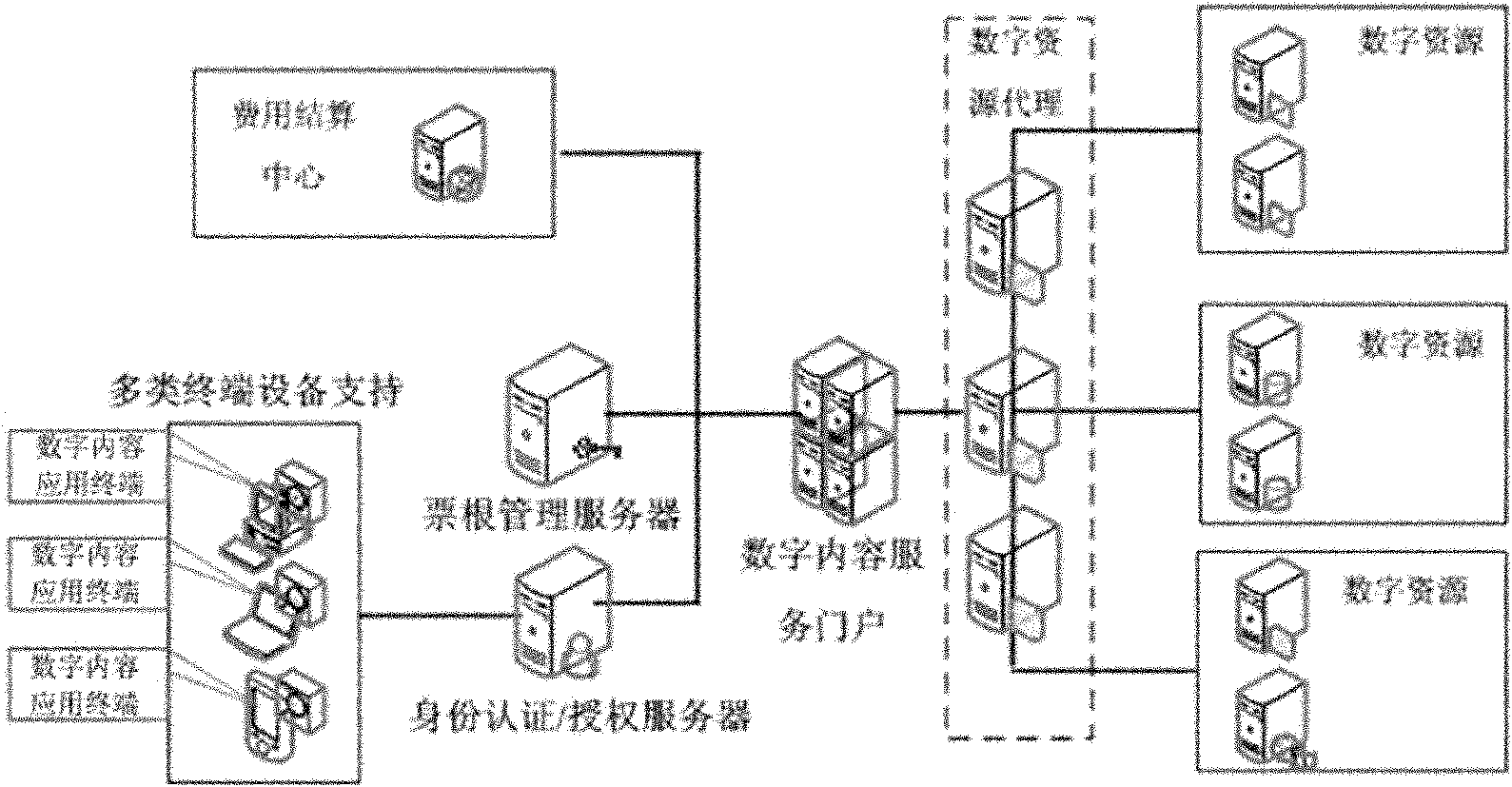

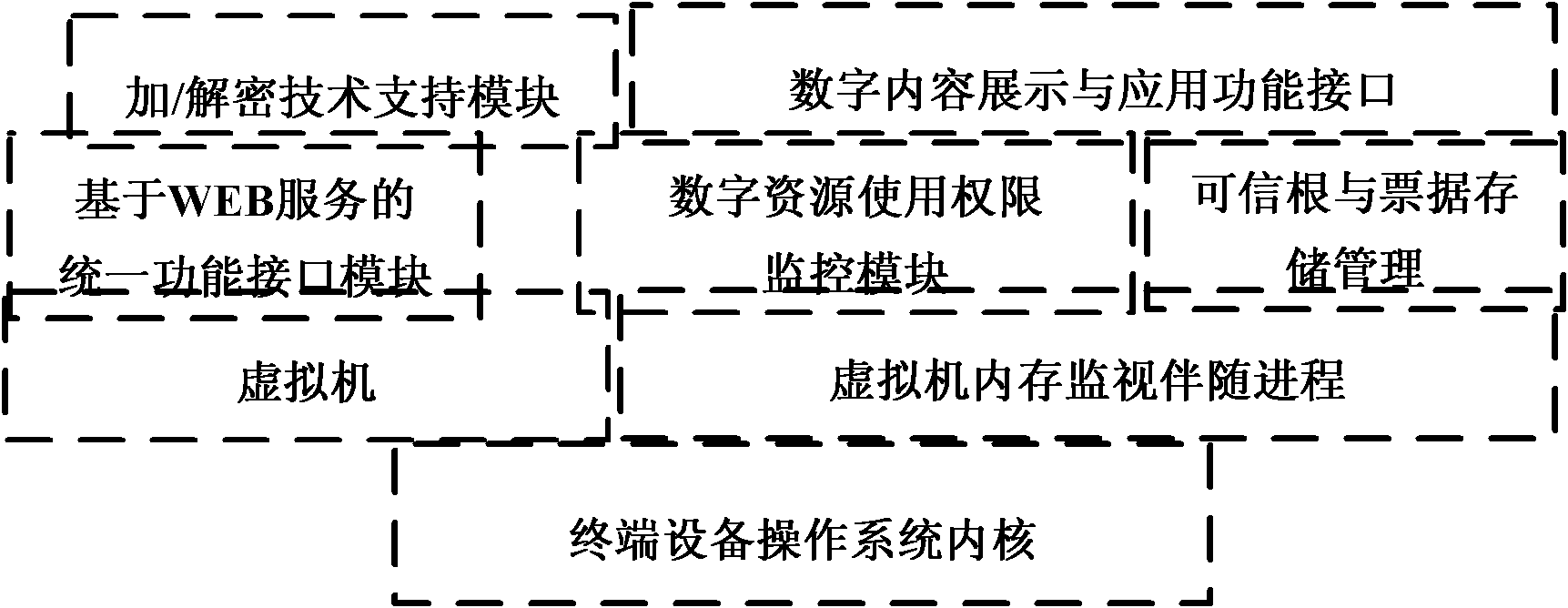

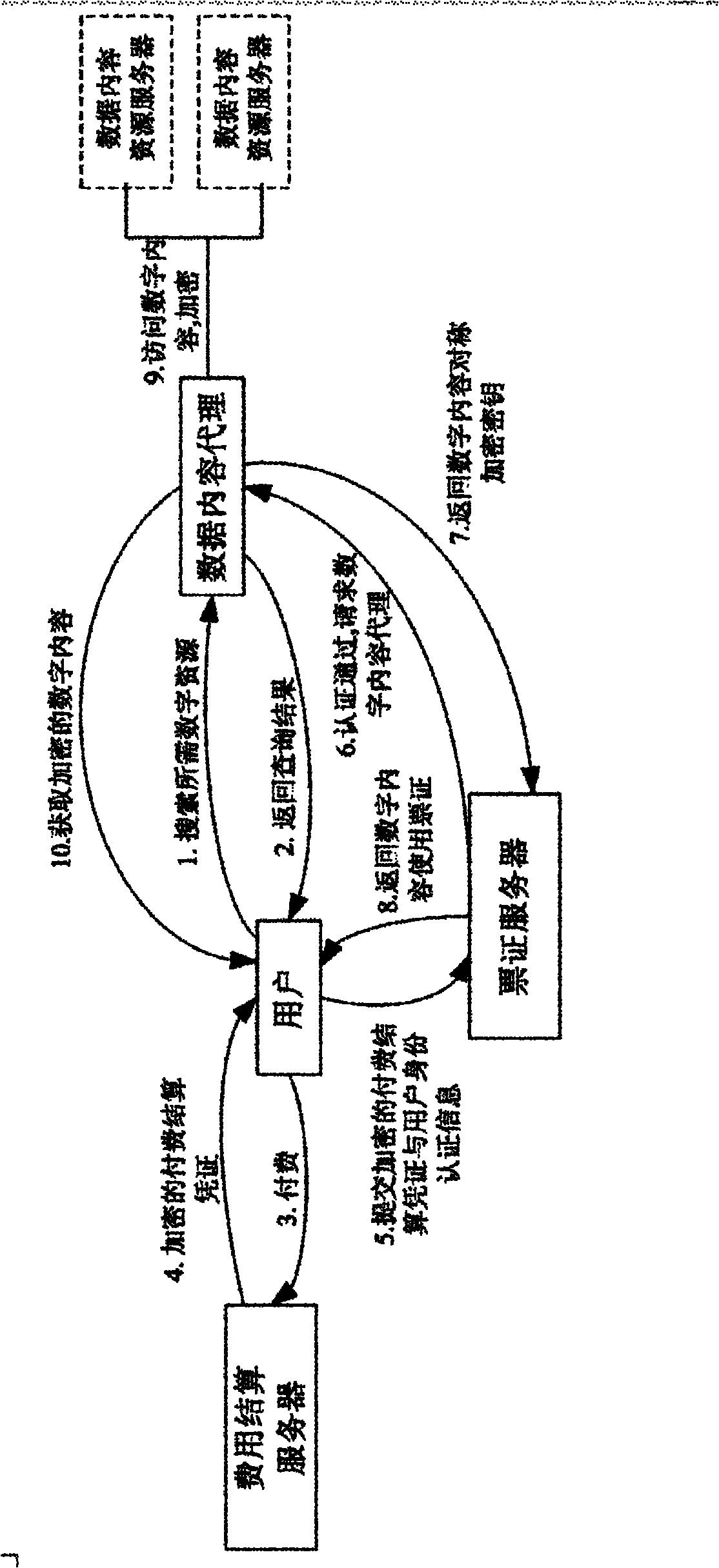

High reliable digital content service method applicable to multiclass terminal equipment

Active; CN101977183A; No need to care about the usage environment; Achieve protection; User identity/authority verification; Digital content; Web service

The invention provides a highly reliable digital content service method applicable to multiple classes of terminal equipment. Based on trusted computing technology, a unique parameter of the user terminal equipment is acquired to generate a user terminal equipment identifier; the identifier is then combined with user identity information to generate a root of trust, and the operating environment of the application terminal components is security-verified on the basis of that root. Through the digital content application terminal components, cross-platform access management and control of digital content is realized based on a web server, ensuring that the digital content is used legally and that the rights and interests of the owner of the digital content source are not infringed.

Owner:JIANGSU BOZHI SOFTWARE TECH CO LTD

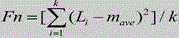

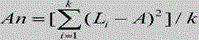

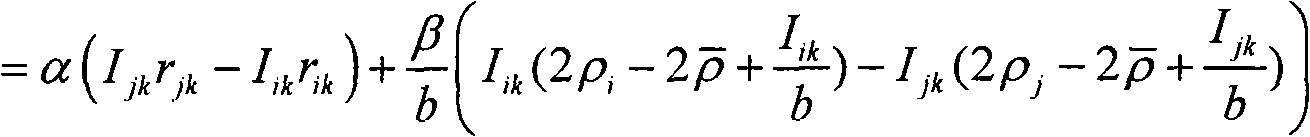

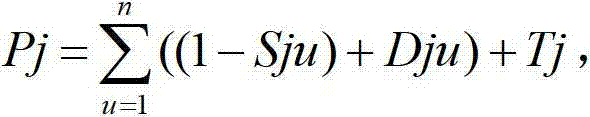

Load balancing and throughput optimization method in mobile communication system

Inactive | CN101784078A | Reduce overhead | Improve throughput | Network traffic/resource management | Computer architecture | Mobile communication systems

The invention relates to a load balancing and throughput optimization method in a mobile communication system, comprising the following steps. Step one: each base station exchanges load information with its adjacent base stations, the cell load information is sorted, and the cell with the maximum load is identified and marked as cell i. Step two: if the load of cell i exceeds the threshold sigma, which the operator sets according to its own requirements, go to step three; otherwise go to step five. Step three: in cell i, for all users and adjacent cells, define the utility-function gain obtained when user k is handed over from cell i to cell j, based on the following formula. Step four: if the load of cell i still exceeds the threshold sigma, return to step three; otherwise end the process. Because the method considers load balancing and system throughput together, it reduces the number of handovers, reaches load balance quickly and thereby lowers the system's signalling overhead, and also optimizes system throughput.
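The loop in steps one to four can be sketched as below. The abstract omits the utility formula, so the gain is passed in as a caller-supplied function; the rule of handing over the user and target cell with the largest gain on each pass, the data layout, and all names are assumptions made for illustration.

```python
from typing import Callable, Dict, List, Tuple


def balance_most_loaded_cell(
    loads: Dict[str, float],
    neighbors: Dict[str, List[str]],
    users: Dict[str, Dict[str, float]],
    gain: Callable[[str, str, str, Dict[str, float]], float],
    threshold: float,
) -> List[Tuple[str, str, str]]:
    """Hand users over from the most loaded cell until its load is within threshold.

    loads: cell -> current load; users: cell -> {user: load contributed by that user}.
    gain(user, source, target, loads) is a hypothetical stand-in for the utility gain.
    Returns the list of (user, source, target) handovers performed.
    """
    handovers = []
    cell_i = max(loads, key=loads.get)  # step one: the most loaded cell
    while loads[cell_i] > threshold:  # steps two and four: threshold check
        # Step three: evaluate the gain for every user and every adjacent cell.
        candidates = [
            (gain(k, cell_i, j, loads), k, j)
            for k in users.get(cell_i, {})
            for j in neighbors.get(cell_i, [])
        ]
        if not candidates:
            break  # nobody left to move, or no adjacent cell
        best_gain, k, j = max(candidates, key=lambda c: c[0])
        if best_gain <= 0:
            break  # no handover improves the utility
        demand = users[cell_i].pop(k)
        users.setdefault(j, {})[k] = demand
        loads[cell_i] -= demand
        loads[j] += demand
        handovers.append((k, cell_i, j))
    return handovers
```

Each pass moves exactly one user, so the loop ends after at most as many passes as cell i has users.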

Owner:SOUTHEAST UNIV +1

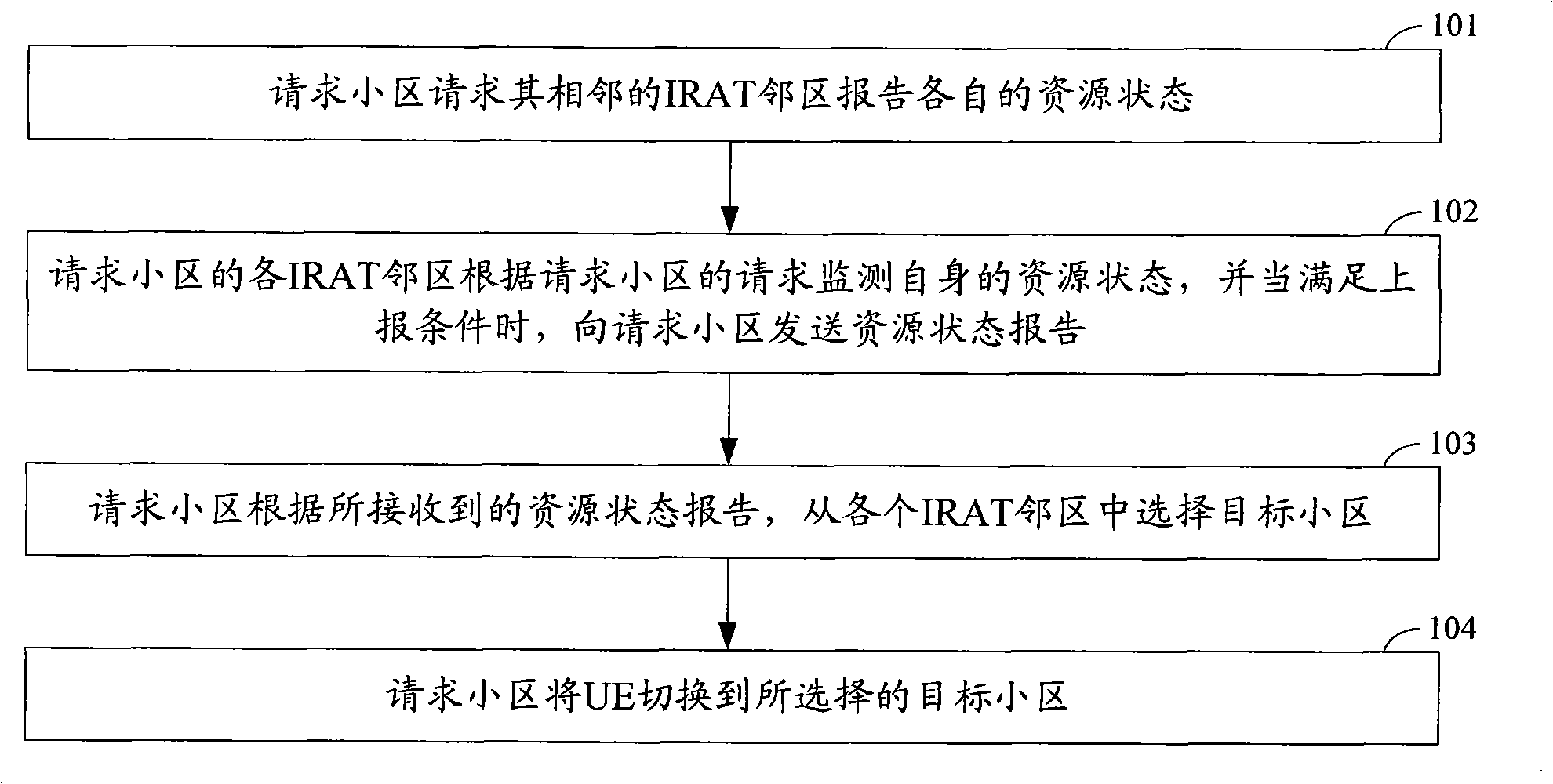

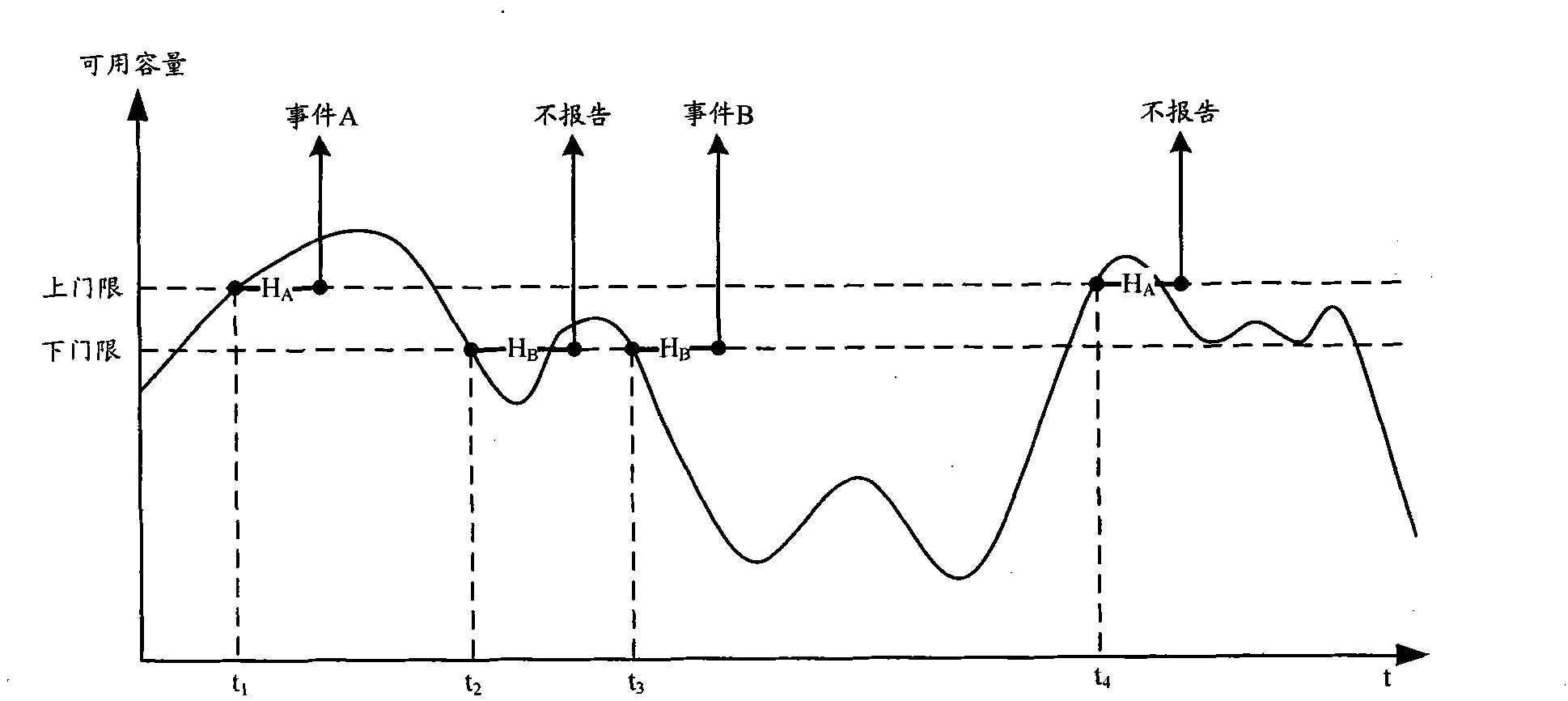

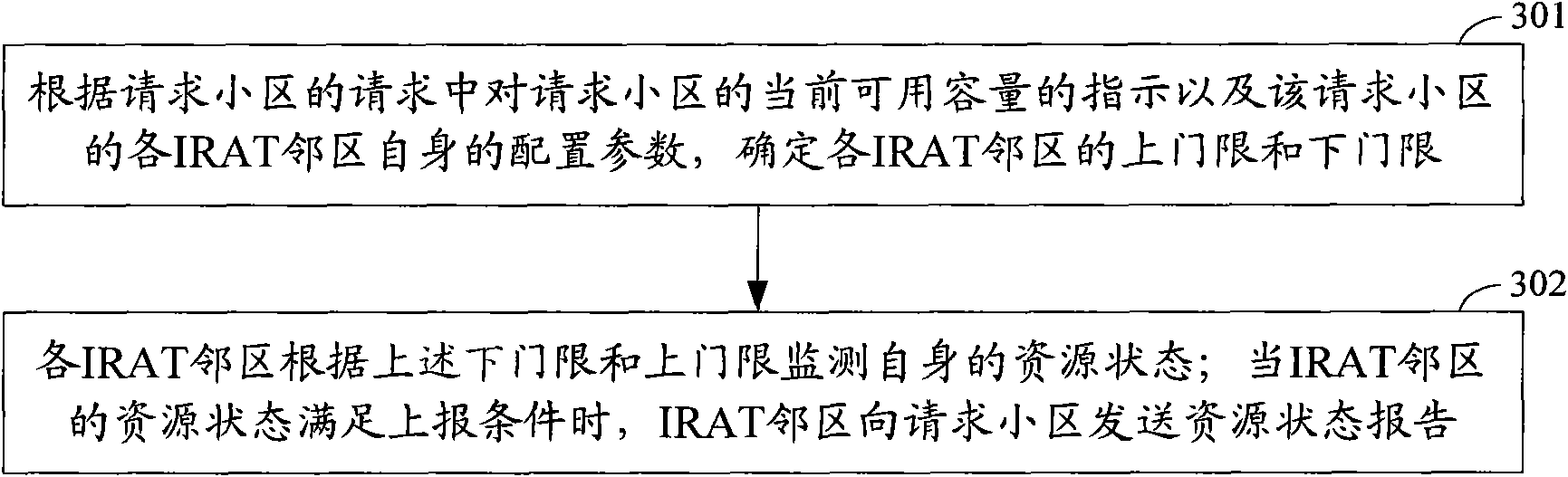

Method for reporting load and method for reducing load for cell

Inactive | CN102118797A | Adjust load conditions | To achieve load balancing | Error prevention | Network traffic/resource management | Radio access technology | Distributed computing

The invention relates to a method for reporting load and a method for reducing load for a cell. The load reporting method comprises the following steps: an upper threshold and a lower threshold are determined for each IRAT (Inter-Radio Access Technology) adjacent cell according to the indication of the requesting cell's currently available capacity, carried in the requesting cell's request, and the parameters configured for the IRAT adjacent cells; each IRAT adjacent cell monitors its own resource state against the upper and lower thresholds; and when its resource state meets the reporting conditions, the IRAT adjacent cell sends a resource state report to the requesting cell. The methods allow the load of the different systems in each cell to be adjusted more effectively and the load across cells to be better balanced.
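The monitoring step can be illustrated with a small threshold monitor. The abstract does not say how the thresholds are derived or what the reporting conditions are, so this sketch takes the two thresholds as given and assumes a report is sent whenever the load moves between the low, normal and high bands; the class and field names are hypothetical.

```python
from typing import Dict, Optional, Union


class NeighborCellMonitor:
    """Tracks one adjacent cell's load against a lower and an upper threshold."""

    def __init__(self, lower: float, upper: float) -> None:
        if not lower < upper:
            raise ValueError("lower threshold must be below upper threshold")
        self.lower = lower
        self.upper = upper
        self.zone = "normal"  # assumed starting band

    def _zone_for(self, load: float) -> str:
        if load > self.upper:
            return "high"
        if load < self.lower:
            return "low"
        return "normal"

    def observe(self, load: float) -> Optional[Dict[str, Union[str, float]]]:
        """Return a report when the load changes band, otherwise None."""
        zone = self._zone_for(load)
        if zone == self.zone:
            return None  # assumed: no report while the band is unchanged
        self.zone = zone
        return {"zone": zone, "load": load}
```

Reporting only on band changes keeps signalling low, which is one plausible reason for using two thresholds rather than periodic reports.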

Owner:NEW POSTCOM EQUIP

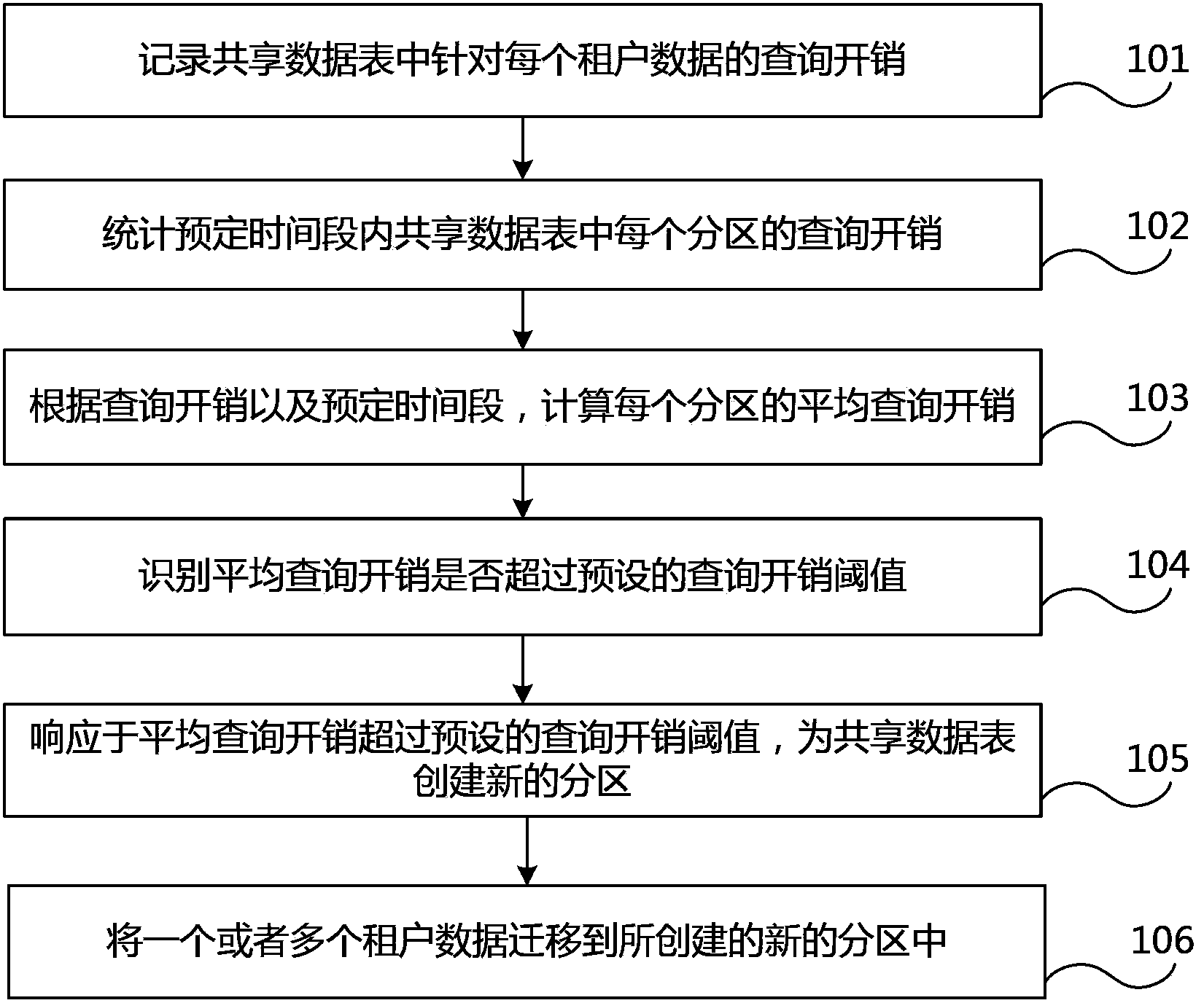

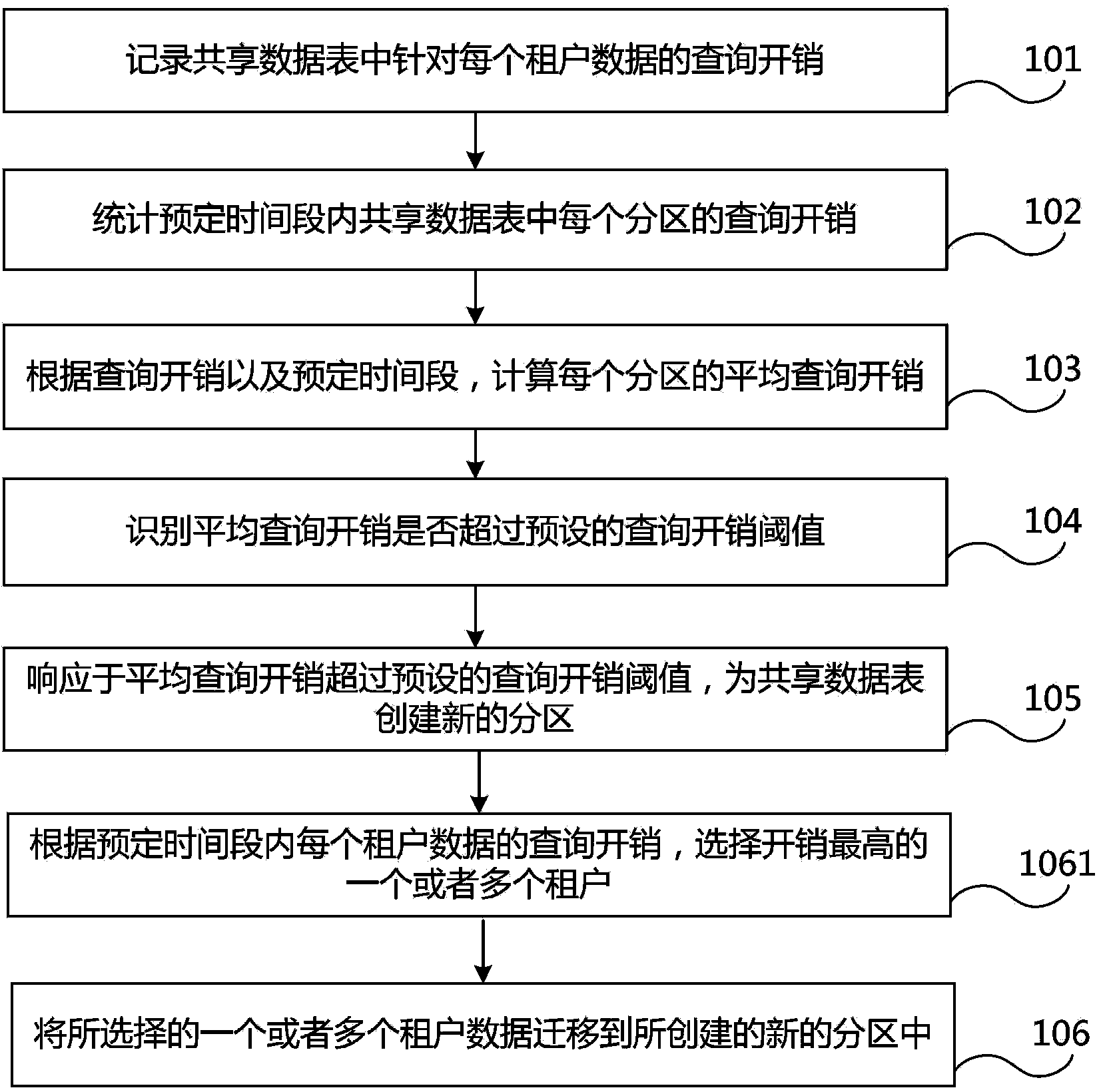

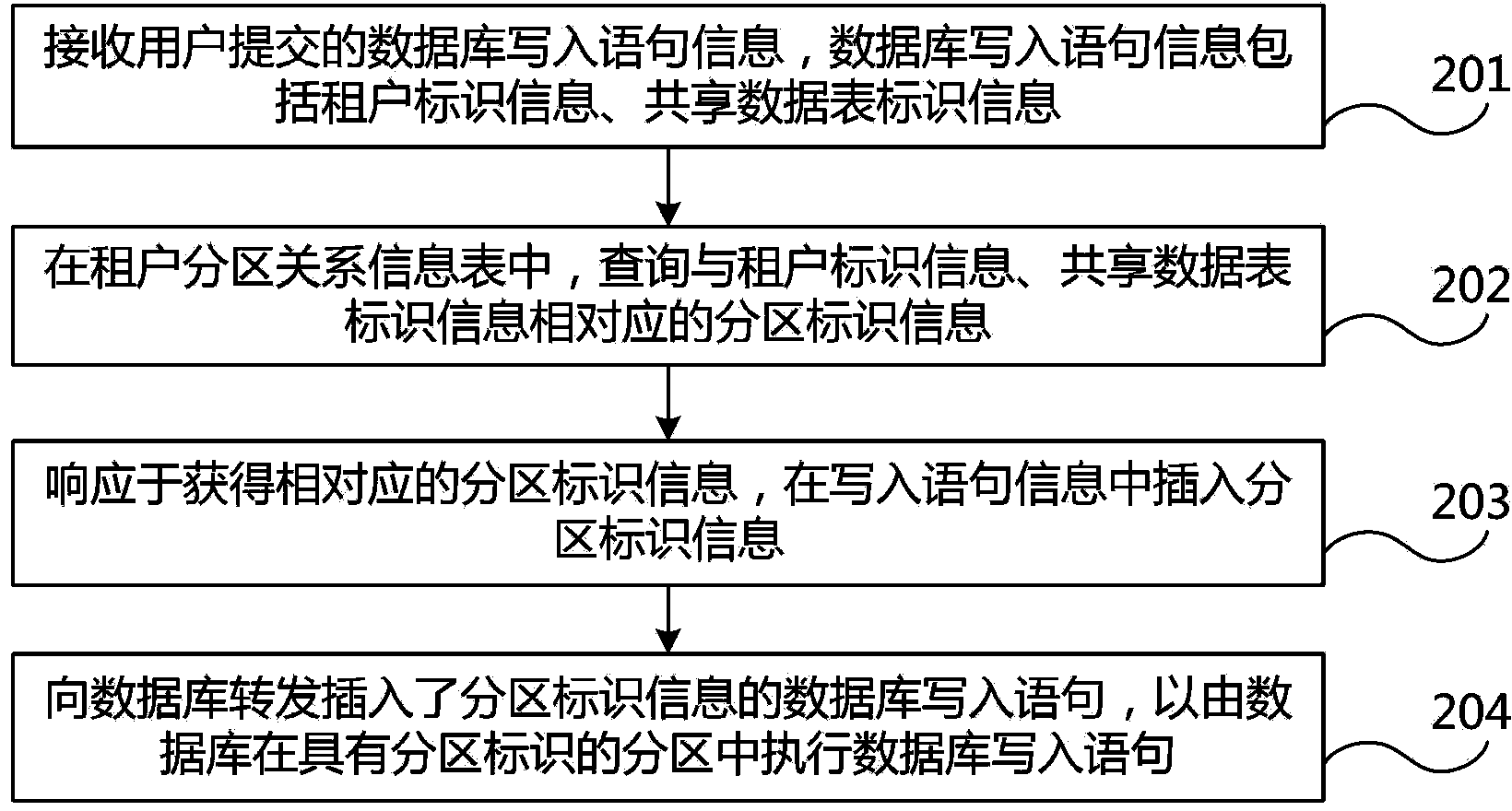

Partitioned management method for multi-tenant shared data table, server and system

Active | CN104216893A | To achieve load balancing | Improve performance | Special data processing applications | Web data retrieval using information identifiers | Data migration | Data mining

Owner:CHINA TELECOM CORP LTD

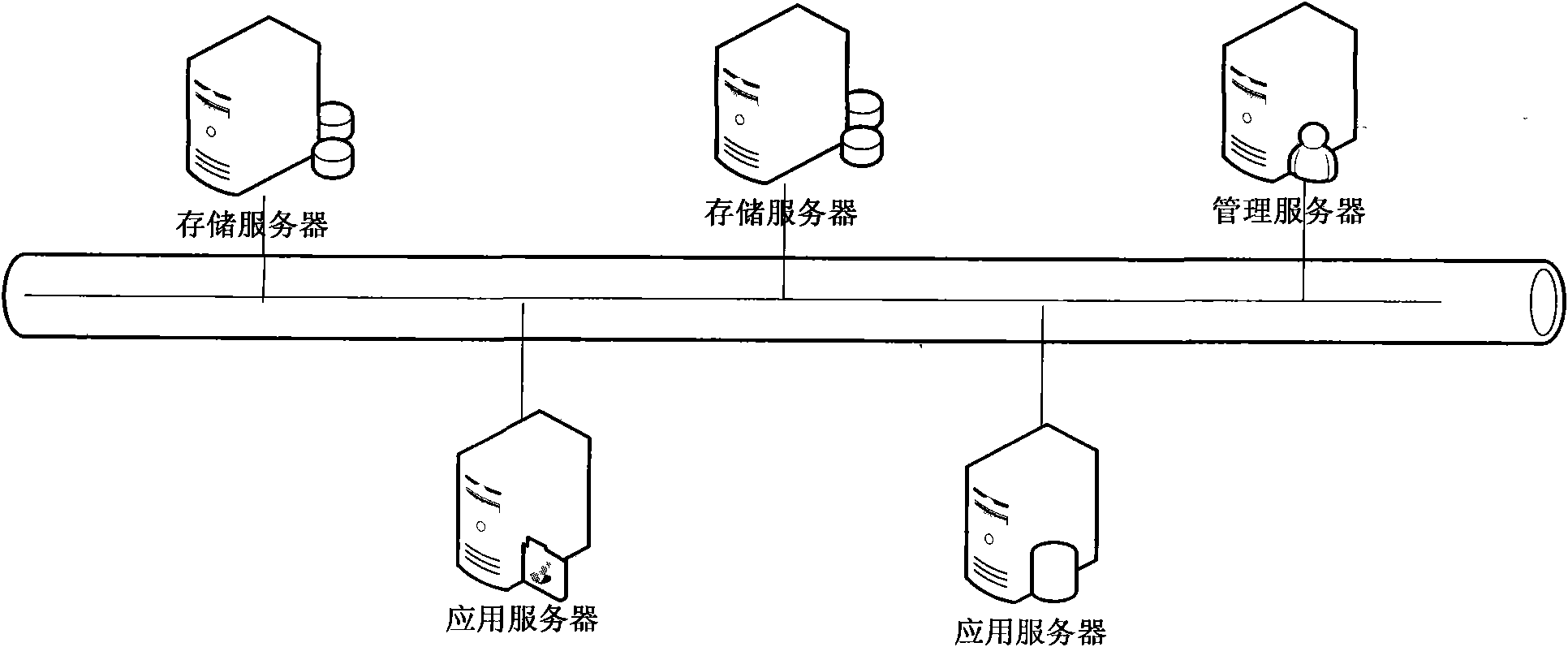

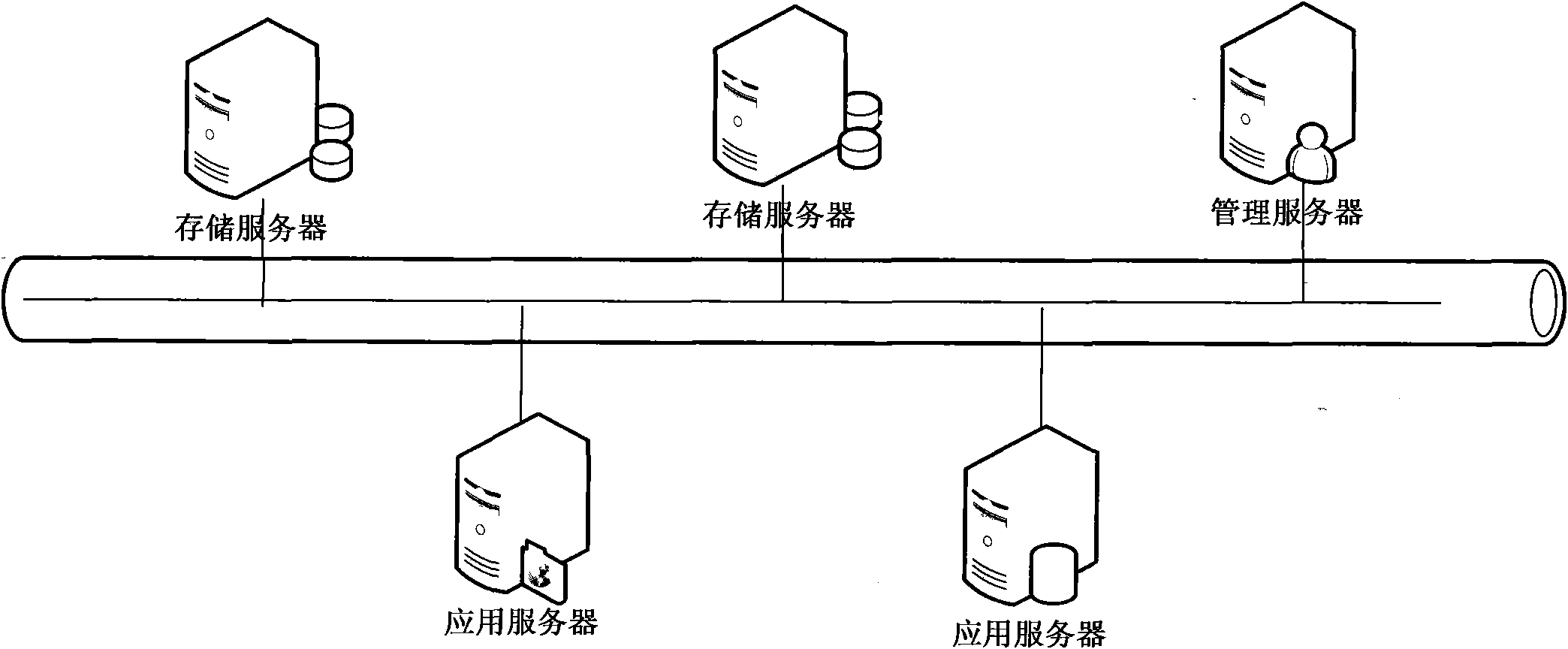

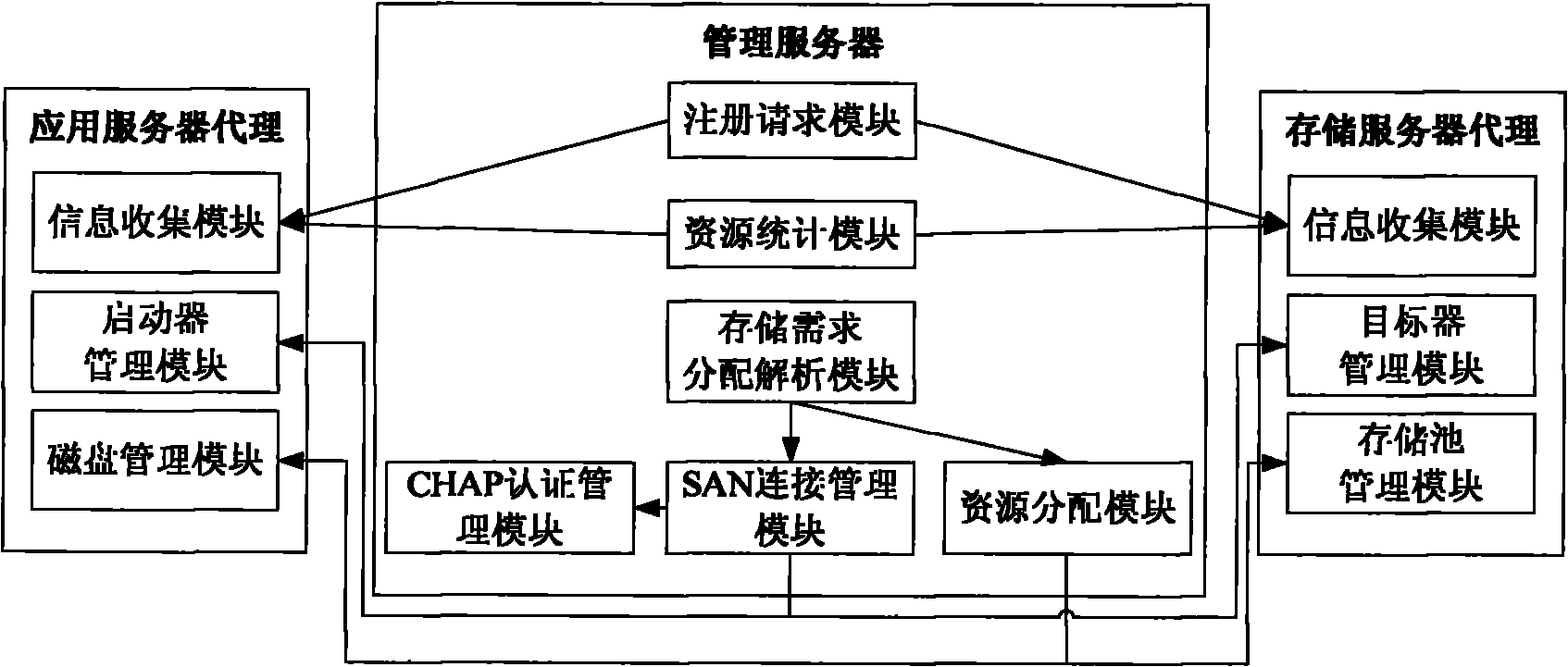

SAN stored resource unified management and distribution method

The invention provides a method for unified management and allocation of SAN storage resources. The system comprises a server agent, a storage server agent and a management server. An application server agent is deployed on each application server and comprises a host information collection module, an initiator management module and a disk management module. These modules collect host information and register it with the resource management server, connect to targets with CHAP authentication, and automatically format logical volumes into a specified file system on demand. Storage resource usage information is collected to give a global view of resource usage in the SAN environment. The allocation of storage server resources to application servers is carried out on a single management server, which also completes the division of logical partitions and the file system formatting on the application servers, establishes the initiator-to-target connections between application servers and storage servers, and configures CHAP authentication. A page displays the resource usage of each application server and the resource utilization of each storage server, so as to prevent idle resources and balance the load among the storage servers.

Owner:LANGCHAO ELECTRONIC INFORMATION IND CO LTD

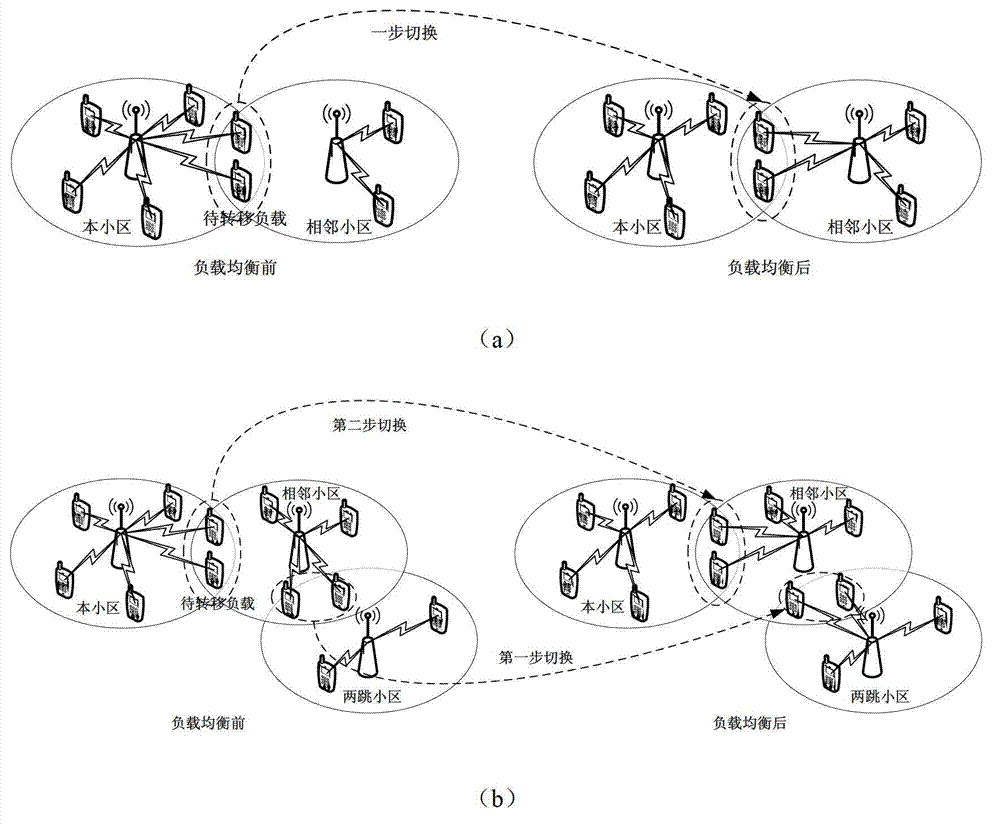

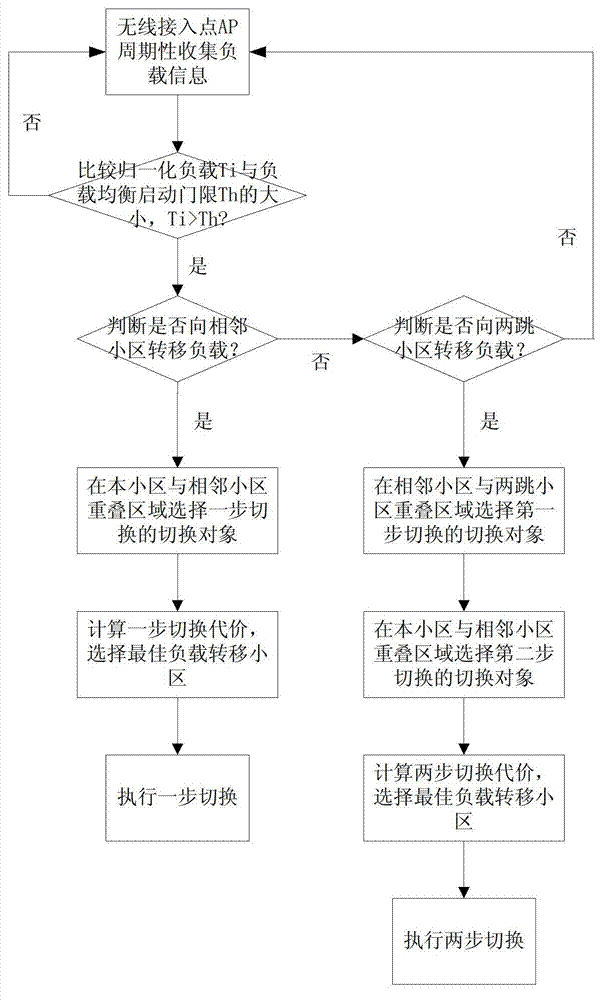

Load balancing method among nonadjacent heterogeneous cells in ubiquitous network

Inactive | CN103096382A | To achieve load balancing | Expand the scope of load balancing | Network traffic/resource management | Transfer cell | Current cell

The invention discloses a load balancing method among nonadjacent heterogeneous cells in a ubiquitous network. It mainly addresses the problem in the prior art that load balancing fails when the adjacent cell is itself too heavily loaded to receive the load the current cell needs to transfer. In the method, wireless access points (APs) periodically collect load information for the current cell, the adjacent cells and the two-hop cells, and load balancing is triggered when the normalized load of the current cell exceeds a load balancing start threshold. The load information of the adjacent and two-hop cells determines whether load is transferred to an adjacent cell or to a two-hop cell. A switching target is then chosen and, according to the switching cost of that target, the load transfer cell with the minimum switching cost is selected. Finally the switching target is instructed to switch into the corresponding cell, completing the load balancing. The method achieves load balancing among nonadjacent heterogeneous cells, enlarges the range of load balancing, improves network performance and resource utilization, and can be used for resource optimization in a heterogeneous communication environment.
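The decision flow above can be sketched as a single selection function. The abstract does not define the acceptance rule or the cost model, so this sketch assumes a cell can take load while its normalized load is below an `accept_limit`, prefers adjacent cells over two-hop cells, and reads switching costs from a lookup table; every name here is illustrative.

```python
from typing import Dict, Optional


def choose_transfer_cell(
    current_load: float,
    start_threshold: float,
    adjacent: Dict[str, float],
    two_hop: Dict[str, float],
    accept_limit: float,
    switching_cost: Dict[str, float],
) -> Optional[str]:
    """Pick the cell that should receive load from the current cell.

    adjacent and two_hop map cell names to normalized loads.
    Returns None when balancing is not triggered or no cell can accept load.
    """
    if current_load <= start_threshold:
        return None  # load balancing is not started
    # Assumed rule: use adjacent cells when any of them can still accept load.
    candidates = [c for c, load in adjacent.items() if load < accept_limit]
    if not candidates:
        # Otherwise fall back to the two-hop (nonadjacent) cells.
        candidates = [c for c, load in two_hop.items() if load < accept_limit]
    if not candidates:
        return None
    # Among the eligible cells, take the one with the minimum switching cost.
    return min(candidates, key=lambda c: switching_cost[c])
```

The fallback to two-hop cells is the part that extends balancing beyond immediate neighbors when they are all overloaded.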

Owner:XIDIAN UNIV


© 2025 PatSnap. All rights reserved.