Queuing system and method for buffering data from multiple input streams

This queuing-system and input-stream technology applies to systems that receive multiple input streams, and it addresses problems such as inefficient memory use.


Embodiment Construction

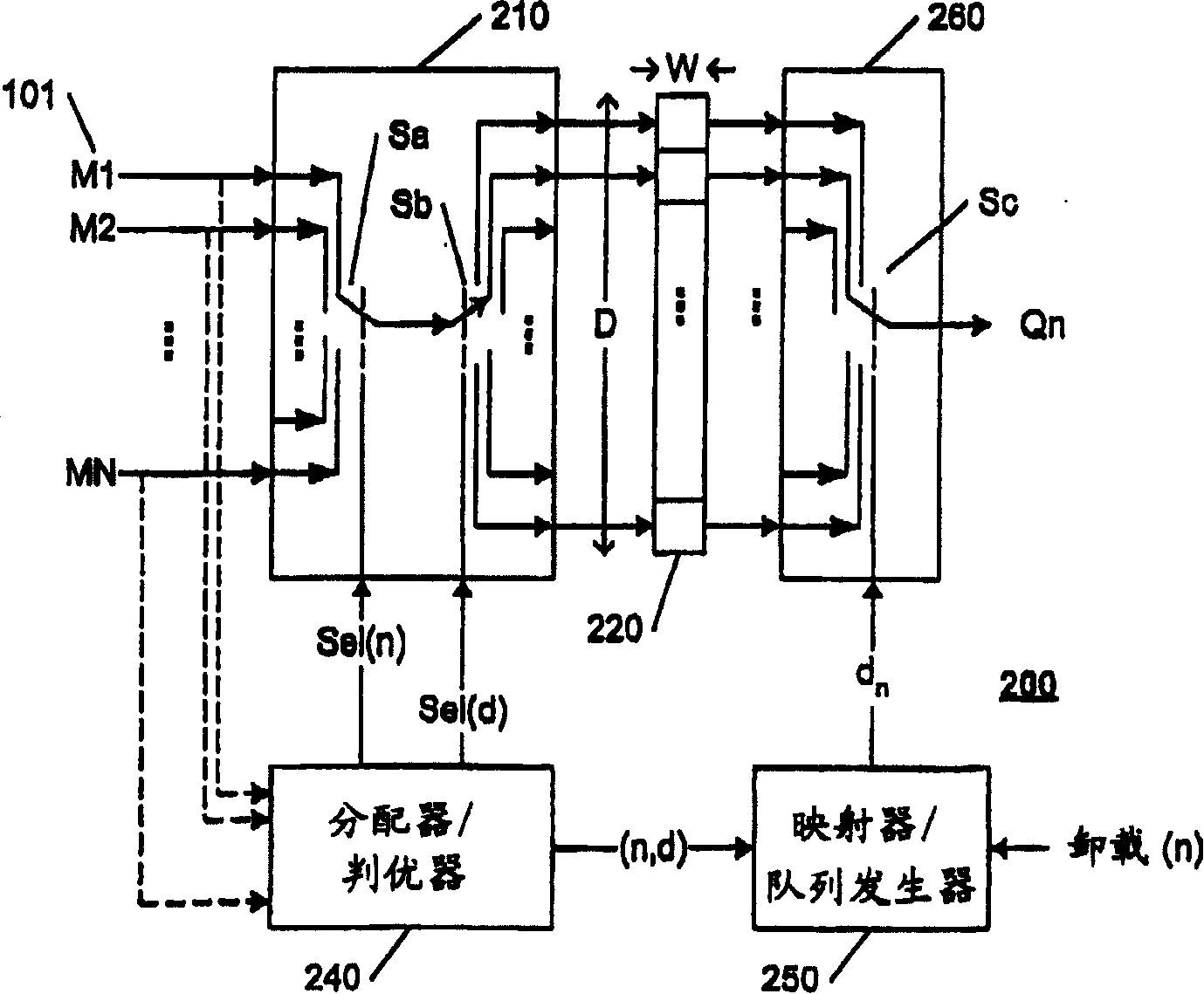

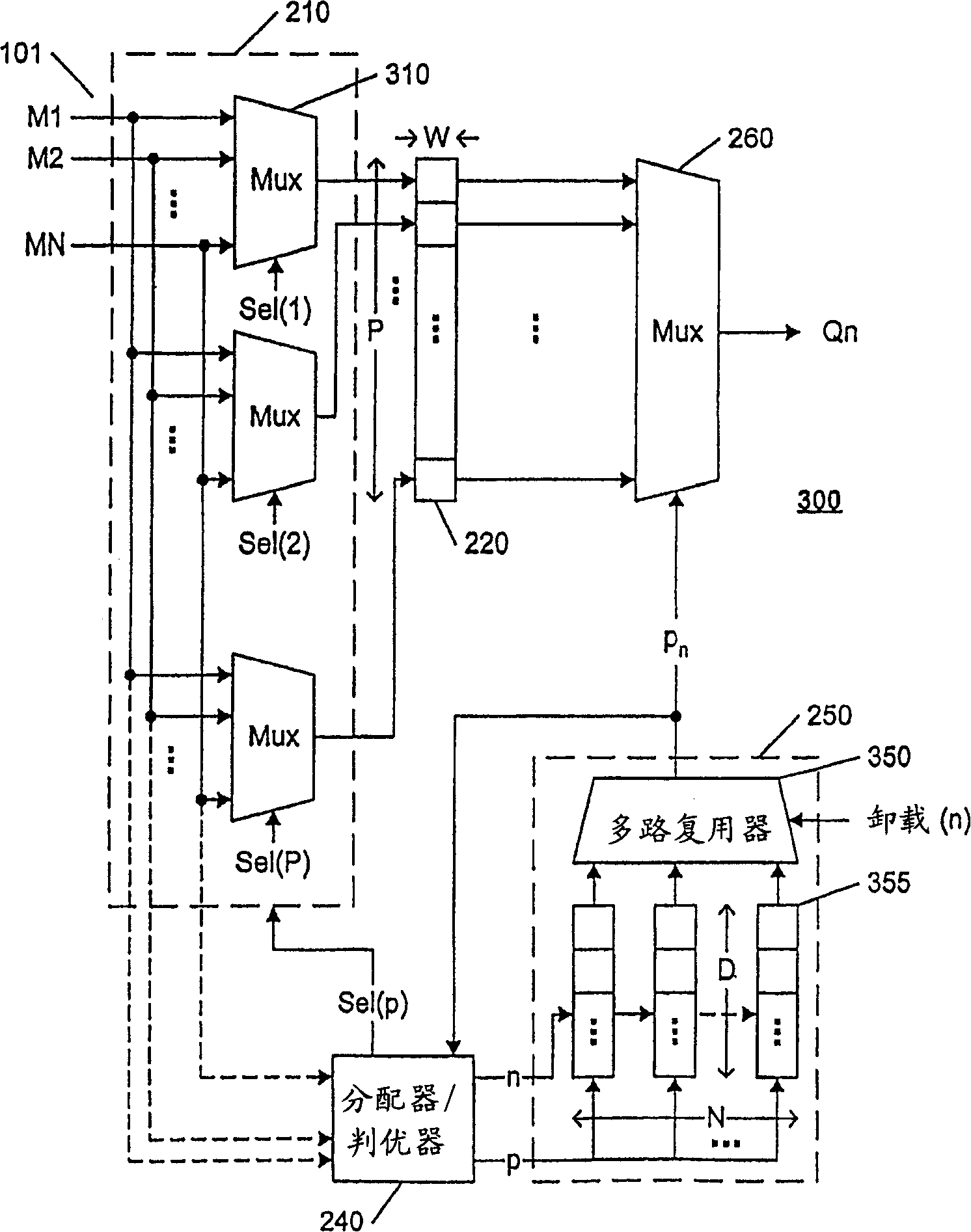

[0033] FIG. 2 shows a block diagram of an example of a multiple-input queuing system 200 according to the present invention. The system 200 includes a dual-port memory 220. A distributor/arbiter 240 (hereinafter the distributor 240) controls writing to the memory 220, and a mapper/queue generator 250 (hereinafter the mapper 250) controls reading from the memory 220. Write and read operations on the memory 220 are represented symbolically by switch 210 and switch 260, respectively.
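The division of labor between the distributor 240 and the mapper 250 can be sketched in a few lines of Python. This is only a toy model under stated assumptions: the excerpt says that the distributor controls writes and the mapper controls reads, but the free-cell list, the per-output address queues, and all names below (`MultiInputQueue`, `write`, `read`, `num_outputs`) are hypothetical and are not taken from the patent text.

```python
from collections import deque


class MultiInputQueue:
    """Toy model of system 200: one shared pool of cells fed by several streams."""

    def __init__(self, num_cells, num_outputs):
        self.memory = [None] * num_cells      # stands in for memory 220
        self.free = deque(range(num_cells))   # assumed: addresses of unused cells
        # Assumed: one FIFO of cell addresses per output, kept by the mapper.
        self.queues = [deque() for _ in range(num_outputs)]

    def write(self, item, output):
        """Distributor role: store the item in a free cell and record its address."""
        if not self.free:
            return False                      # memory full; the caller decides what to do
        addr = self.free.popleft()
        self.memory[addr] = item
        self.queues[output].append(addr)
        return True

    def read(self, output):
        """Mapper role: return the oldest item queued for this output and free its cell."""
        if not self.queues[output]:
            return None
        addr = self.queues[output].popleft()
        item = self.memory[addr]
        self.memory[addr] = None
        self.free.append(addr)
        return item


q = MultiInputQueue(num_cells=4, num_outputs=2)
q.write("a", output=0)
q.write("b", output=1)
q.write("c", output=0)
first = q.read(0)    # oldest item queued for output 0
second = q.read(0)
```

Because every stream draws from the same pool, a cell freed by one output becomes available to any input immediately, which is the kind of sharing that a single common memory makes possible.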

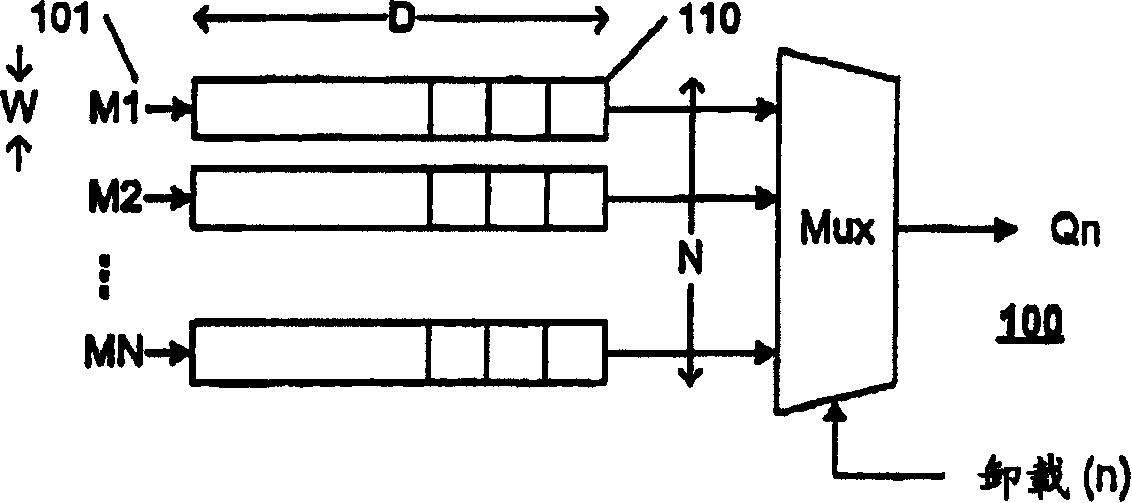

[0034] As shown in FIG. 2, memory 220 includes P addressable memory cells, each having a width W sufficient to accommodate any data item provided by an input stream 101. As in the discussion above of the prior-art system 100 of FIG. 1, conventional queuing theory techniques can be used to determine the number P of storage locations required for a given level of confidence in avoiding overflow of memory 220 from the expected inpu...
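To make the idea of sizing P for a given confidence level concrete, the following sketch estimates it empirically by simulation. It is a stand-in for the analytic queuing-theory calculation the text refers to, not a reproduction of it. The traffic model (independent arrivals with a fixed probability per stream per cycle, a fixed number of reads per cycle) and the names `simulate_occupancy` and `cells_for_confidence` are assumptions made for illustration only.

```python
import math
import random


def simulate_occupancy(num_inputs, arrival_prob, reads_per_cycle, cycles, seed=0):
    """Return the per-cycle peak number of occupied cells in an unbounded memory."""
    rng = random.Random(seed)
    occupancy = 0
    trace = []
    for _ in range(cycles):
        # Assumed traffic model: each stream delivers an item with fixed probability.
        arrivals = sum(rng.random() < arrival_prob for _ in range(num_inputs))
        occupancy += arrivals
        trace.append(occupancy)               # peak for this cycle, before reads
        occupancy = max(0, occupancy - reads_per_cycle)
    return trace


def cells_for_confidence(trace, confidence):
    """Smallest P such that at least `confidence` of the cycles needed <= P cells."""
    ordered = sorted(trace)
    index = min(len(ordered) - 1, max(0, math.ceil(confidence * len(ordered)) - 1))
    return ordered[index]


trace = simulate_occupancy(num_inputs=4, arrival_prob=0.2, reads_per_cycle=1, cycles=10_000)
p_90 = cells_for_confidence(trace, 0.90)
p_999 = cells_for_confidence(trace, 0.999)
```

A higher confidence level can never call for fewer cells than a lower one on the same trace, so `p_999` is at least `p_90`. The fraction of cycles that fit is only a rough proxy for overflow probability, and the numbers depend entirely on the assumed traffic model.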
