Virtual viewpoint synthesis method based on depth and occlusion information

A virtual viewpoint synthesis method based on depth and occlusion information. It addresses problems such as holes in the synthesized virtual viewpoint, which are difficult to fill and difficult to predict, with the aim of improving accuracy, resolving occlusions, and improving image quality.

Embodiment Construction

[0063] The virtual viewpoint synthesis method based on depth and occlusion information includes the following steps:

[0064] 1) Under the parallel optical axis camera array model, determine the 3D rendering equation of the depth-image-based rendering method;

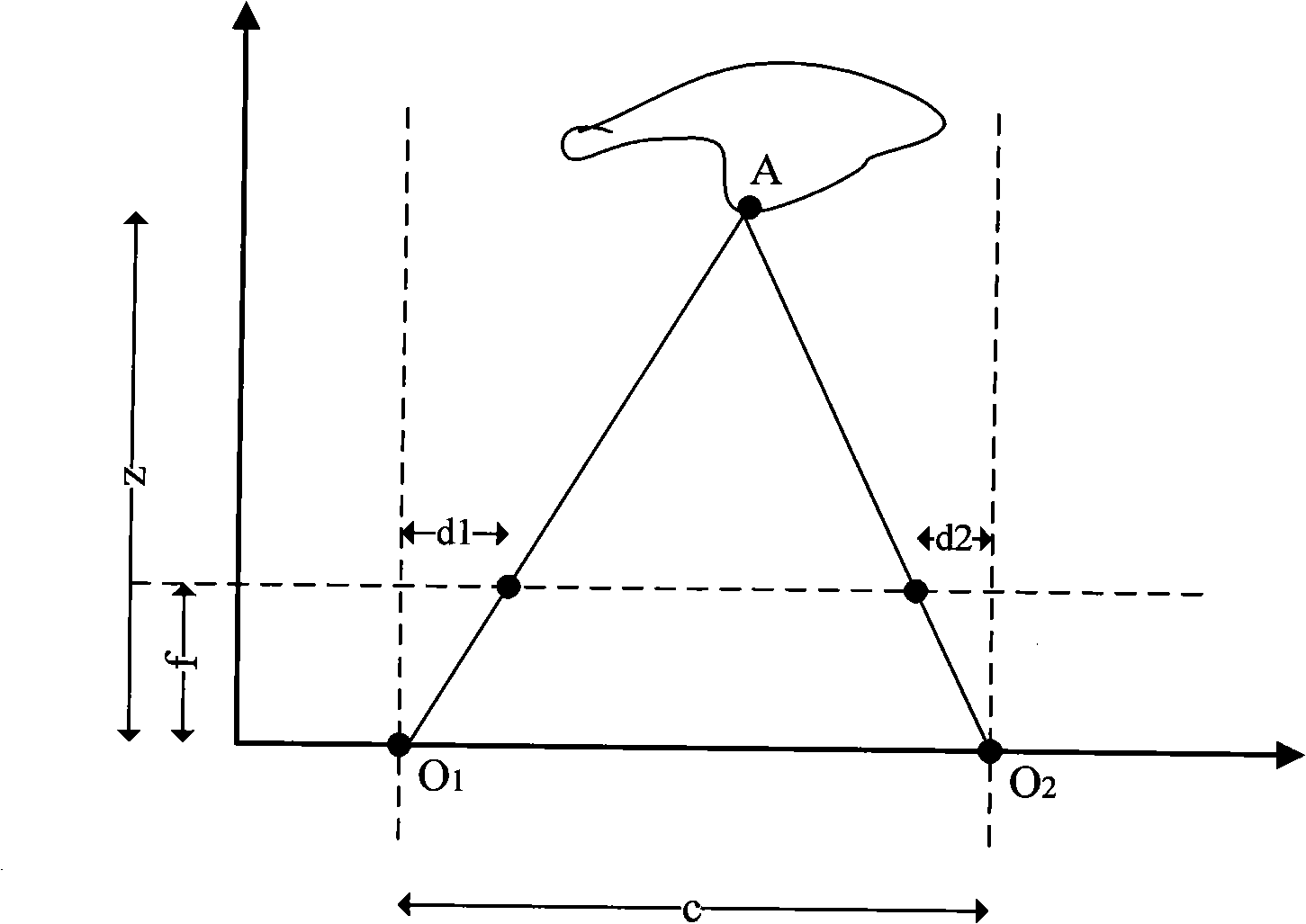

[0065] The camera at the reference viewpoint and the camera at the viewpoint to be drawn conform to the parallel optical axis camera array model, as shown in Figure 1, which simplifies the rendering equation of the depth-image-based rendering method.

[0066] Referring to the parallel optical axis camera array model shown in Figure 1, camera O1 is the reference viewpoint and camera O2 is the viewpoint to be drawn. The coordinate system of camera O1 coincides with the world coordinate system, and the camera focal length is f. The drawn viewpoint O2 has only a horizontal displacement c relative to the reference viewpoint O1, with no rotation, and the depth z of the same object relative to the reference viewpoint is the sam...
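The text's own rendering equation is not reproduced here, so the following is only a minimal sketch of what a parallel-axis setup with focal length f, horizontal displacement c, and depth z commonly implies under a standard pinhole model: a purely horizontal pixel shift of f·c/z. The function names, the sign convention (the drawn viewpoint displaced by +c, so image points shift by -f·c/z), the nearest-pixel rounding, and the depth-buffer tie-breaking are all assumptions for illustration, not the patent's stated method.

```python
def horizontal_disparity(f, c, z):
    """Pixel shift of a point at depth z (assumed pinhole model).

    f is the focal length in pixels; c and z share the same length unit.
    """
    if z <= 0:
        raise ValueError("depth must be positive")
    return f * c / z


def warp_row(colors, depths, f, c):
    """Forward-warp one scanline from the reference view to the drawn view.

    Assumed sign convention: the drawn camera sits at +c along x, so each
    pixel moves by -f*c/z. Returns (warped, holes), where holes[x] is True
    for target pixels that received no source pixel.
    """
    width = len(colors)
    warped = [None] * width
    zbuf = [float("inf")] * width  # nearest depth written so far per pixel
    for x1 in range(width):
        z = depths[x1]
        x2 = int(round(x1 - horizontal_disparity(f, c, z)))
        # Keep the nearer surface when two source pixels land on one target.
        if 0 <= x2 < width and z < zbuf[x2]:
            warped[x2] = colors[x1]
            zbuf[x2] = z
    holes = [value is None for value in warped]
    return warped, holes


if __name__ == "__main__":
    row, holes = warp_row(list("abcd"), [10.0, 10.0, 5.0, 10.0], f=2.0, c=5.0)
    print(row, holes)
```

In this toy scanline, the nearer pixel (depth 5) overwrites a farther one that maps to the same target column, and the columns left unfilled are the holes that the method sets out to address.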
