Speech emotion recognition method based on improved Fukunaga-Koontz transformation

A speech emotion recognition technology, applied in speech recognition, speech analysis, instruments, and related fields. It addresses the problems that the internal structure of speech and its manifold structure cannot be effectively reflected, and that correlation characteristics are not taken into account.

Embodiment Construction

[0056] The technical solutions of the present invention will be further described below in conjunction with the drawings and embodiments.

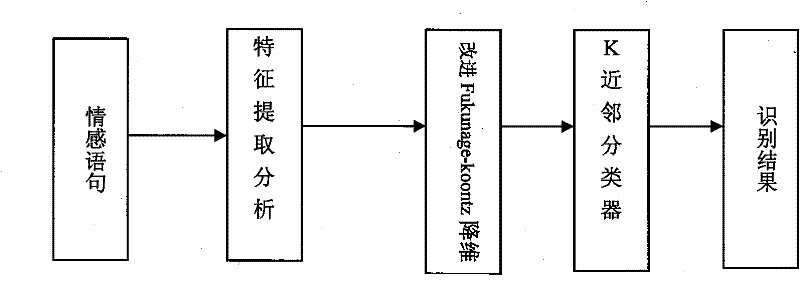

[0057] Figure 1 is the system block diagram, which is divided into three main blocks: the feature extraction and analysis module, the improved Fukunaga-Koontz transformation, and the emotion recognition module.

[0058] 1. Emotional feature extraction and analysis module

[0059] 1. Linear prediction cepstral coefficient parameter extraction

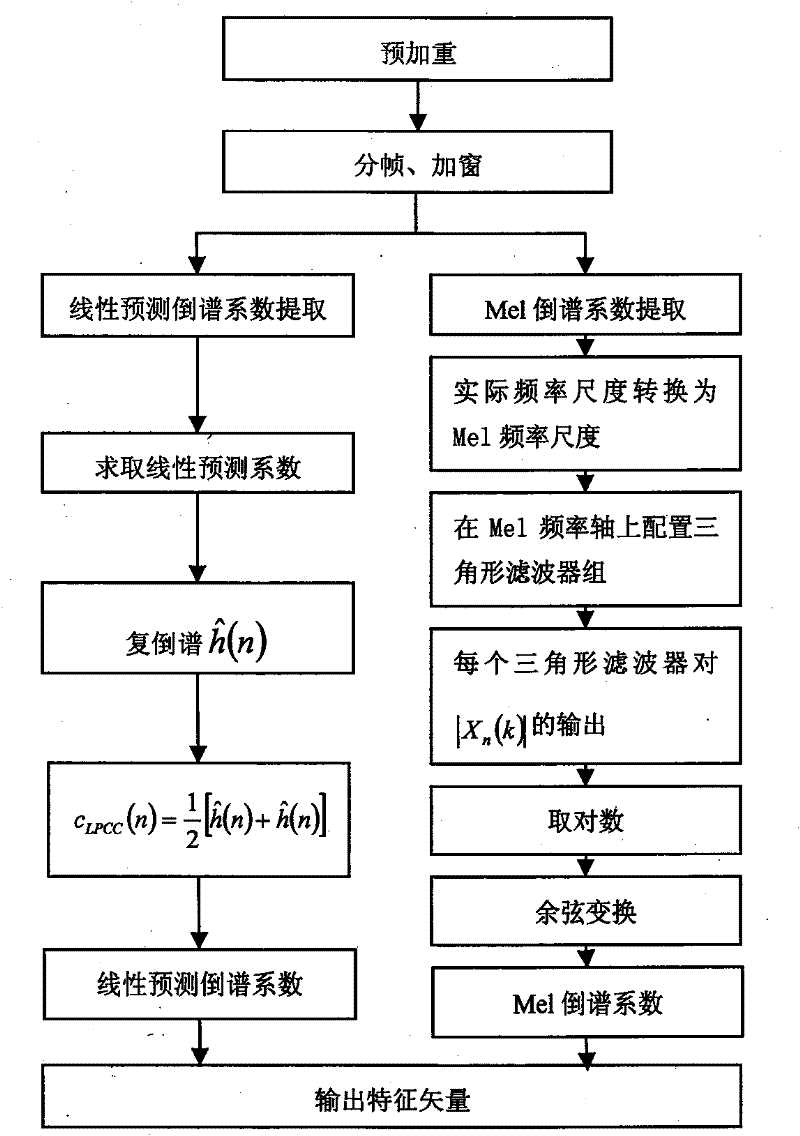

[0060] First, following the feature parameter extraction process in Figure 2, the sentences from which features are to be extracted are pre-emphasized, which includes high-pass filtering and detection of the start and end endpoints of the sentence. The sentence is then divided into frames and windowed, and the Durbin fast algorithm is used to calculate the linear prediction coefficients of each frame. The complex cepstrum is then calculated according to the linear prediction coefficients and linear predictor cepstral coeffici...
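The extraction steps described in [0060] can be sketched as follows. This is a minimal illustration in plain Python, not the patent's implementation: the function names, the pre-emphasis coefficient `alpha=0.97`, the Hamming window, and the LPC-to-cepstrum recursion (the standard textbook form for an all-pole model) are all assumptions, and endpoint detection is omitted.

```python
import math

def pre_emphasize(signal, alpha=0.97):
    """First-order high-pass filter y[n] = x[n] - alpha * x[n-1] (alpha is assumed)."""
    return [signal[0]] + [signal[n] - alpha * signal[n - 1]
                          for n in range(1, len(signal))]

def frame_and_window(signal, frame_len, hop):
    """Split the signal into overlapping frames and apply a Hamming window (assumed)."""
    window = [0.54 - 0.46 * math.cos(2 * math.pi * n / (frame_len - 1))
              for n in range(frame_len)]
    return [[signal[start + n] * window[n] for n in range(frame_len)]
            for start in range(0, len(signal) - frame_len + 1, hop)]

def autocorrelation(frame, max_lag):
    """Autocorrelation r[0..max_lag] of one frame."""
    return [sum(frame[n] * frame[n + k] for n in range(len(frame) - k))
            for k in range(max_lag + 1)]

def levinson_durbin(r, order):
    """Levinson-Durbin recursion on autocorrelation values r.

    Returns (a, err), where a[k-1] is the predictor coefficient a_k in
    x[n] ~= sum_k a_k * x[n-k], and err is the residual prediction energy.
    """
    a = [0.0] * (order + 1)
    err = r[0]
    for i in range(1, order + 1):
        if err <= 0.0:
            break  # silent or degenerate frame: stop early
        k = (r[i] - sum(a[j] * r[i - j] for j in range(1, i))) / err
        new_a = a[:]
        new_a[i] = k
        for j in range(1, i):
            new_a[j] = a[j] - k * a[i - j]
        a = new_a
        err *= (1.0 - k * k)
    return a[1:], err

def lpc_to_cepstrum(a, n_ceps):
    """Cepstral coefficients of the all-pole model 1 / (1 - sum_k a_k z^-k).

    Uses c[n] = a_n + sum_{k=1}^{n-1} (k/n) * c[k] * a_{n-k}, with a_n = 0 for n > p.
    """
    p = len(a)
    c = [0.0] * (n_ceps + 1)
    for n in range(1, n_ceps + 1):
        acc = a[n - 1] if n <= p else 0.0
        for k in range(1, n):
            if n - k <= p:
                acc += (k / n) * c[k] * a[n - k - 1]
        c[n] = acc
    return c[1:]

def lpcc_features(signal, frame_len, hop, order, n_ceps):
    """Per-frame LPCC vectors for a (already endpoint-trimmed) utterance."""
    features = []
    for frame in frame_and_window(pre_emphasize(signal), frame_len, hop):
        a, _ = levinson_durbin(autocorrelation(frame, order), order)
        features.append(lpc_to_cepstrum(a, n_ceps))
    return features
```

For example, `lpcc_features(samples, frame_len=256, hop=128, order=12, n_ceps=12)` would return one 12-dimensional vector per frame; these parameter values are illustrative and are not taken from the patent.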
