Children speech emotion recognition method

A speech emotion recognition technology, applied in speech recognition, speech analysis, speech synthesis, and related fields. It addresses the problems of large emotion recognition, slow recognition speed, and unfavorable speech recognition, with the aim of improving the naturalness and effect of human-computer interaction.



Embodiment Construction

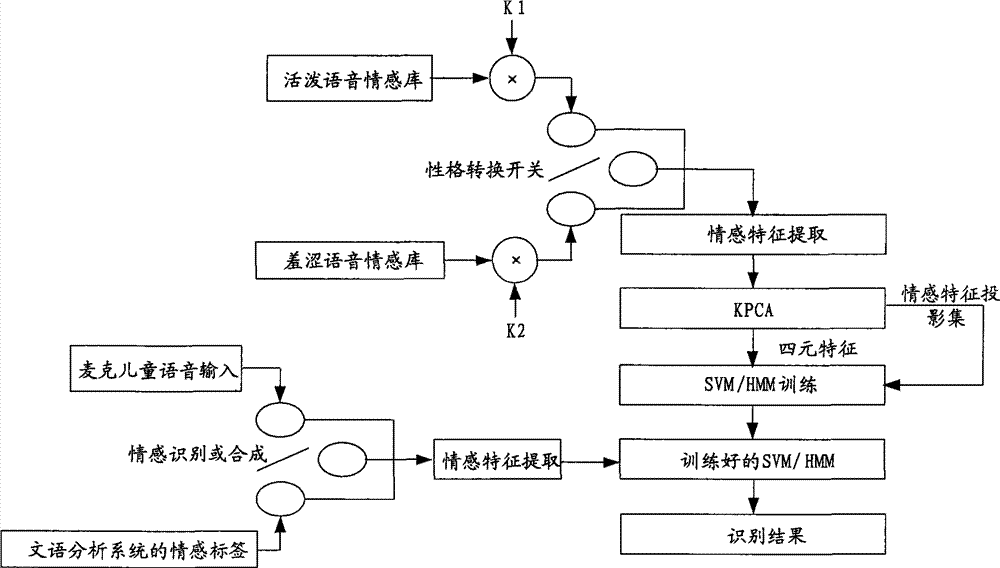

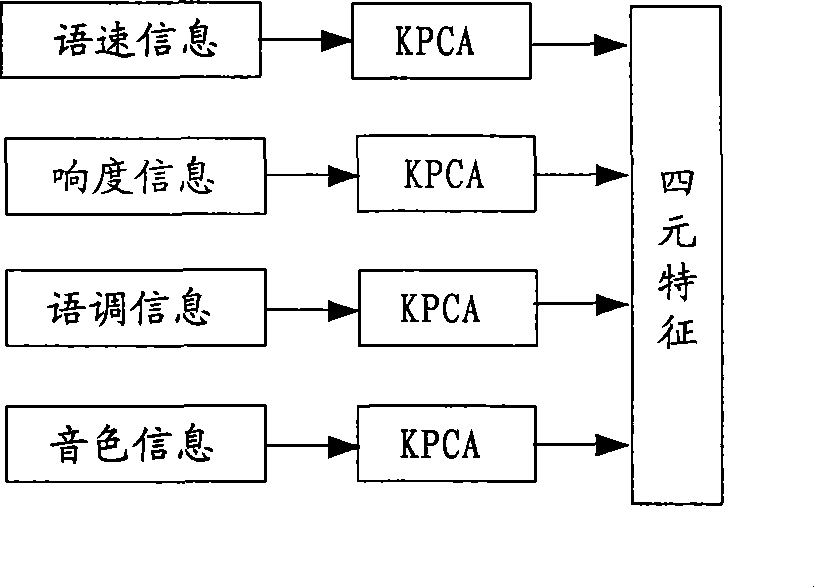

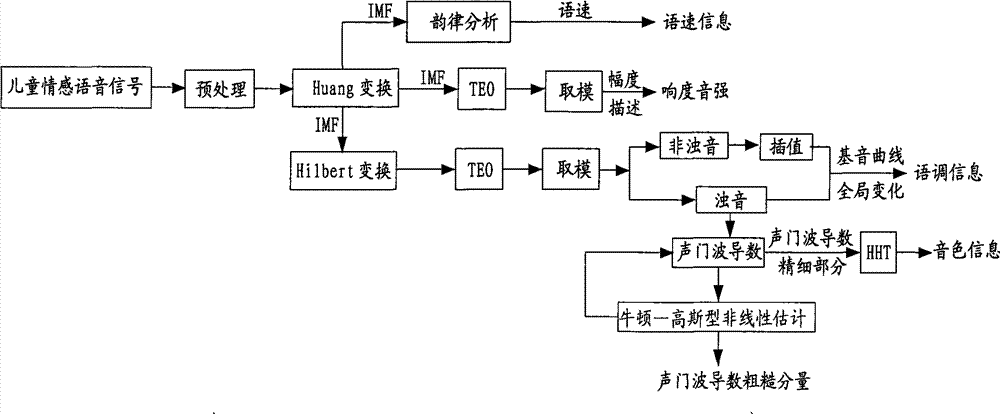

[0021] The speech emotion recognition method of the present invention comprises three parts: establishing a voice database, training an emotion classifier, and performing speech emotion recognition, as shown in Figures 1 to 4.

[0022] Among these, the voice database is established mainly for children's voices, and the work includes voice collection and classification.

[0023] Generally speaking, babies start learning to speak at about 1 year old. Their voices then change as they grow older, passing through a transitional stage from a child's voice to an adult voice, known as the "voice change period". This period occurs at different times, mostly between the ages of 12 and 17, and lasts from six months to a year. Because the vocal cords change considerably during the voice change period, a "child" in the present invention refers to a boy or girl who has not yet entered it. At the same time, in order to ensure the reliability of sampling, children who...
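The three parts named in [0021] (voice database, classifier training, recognition) can be illustrated with a minimal sketch. The excerpt does not state which acoustic features or which classifier the invention uses, so everything here is an assumption made for illustration: the two-element feature vectors, the emotion labels, the function names, and the nearest-centroid classifier that stands in for the unspecified emotion classifier.

```python
from collections import defaultdict
from dataclasses import dataclass
from math import dist
from typing import Tuple


@dataclass
class Sample:
    features: Tuple[float, ...]  # hypothetical acoustic features, e.g. (pitch, energy)
    emotion: str                 # label assigned when the recording is classified


def build_database(recordings):
    """Part 1: gather labelled child recordings into a voice database."""
    return [Sample(tuple(features), label) for features, label in recordings]


def train_classifier(database):
    """Part 2: stand-in training step that stores one mean feature vector per emotion."""
    grouped = defaultdict(list)
    for sample in database:
        grouped[sample.emotion].append(sample.features)
    return {
        emotion: tuple(sum(column) / len(column) for column in zip(*vectors))
        for emotion, vectors in grouped.items()
    }


def recognize(centroids, features):
    """Part 3: assign the emotion whose centroid is closest to the new features."""
    return min(centroids, key=lambda emotion: dist(centroids[emotion], features))


# Made-up values for illustration only; not data from the patent.
recordings = [
    ((300.0, 0.9), "happy"),
    ((320.0, 0.8), "happy"),
    ((220.0, 0.3), "sad"),
    ((200.0, 0.2), "sad"),
]
model = train_classifier(build_database(recordings))
print(recognize(model, (310.0, 0.7)))  # prints "happy"
```

Keeping the three parts as separate functions mirrors the structure described in [0021], so a different feature extractor or classifier could replace the stand-ins without changing the overall flow.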
