Training method for multi-moving-object action recognition, and multi-moving-object action recognition method

A training method and a recognition method for the action behaviors of multiple moving targets, applied in the field of multi-moving-target action behavior recognition. The technology can solve problems including neglect, the lack of effective methods for classifying group action behaviors, and the poor expressive ability of action behavior patterns, and it achieves good recognition results.

Detailed Description of Embodiments

[0039] The present invention will be described below in conjunction with the accompanying drawings and specific embodiments.

[0040] In most videos, people are the main moving objects. Therefore, people are used as the example when describing the multi-moving-object action behavior training and recognition methods of the present invention. Because the method of the present invention recognizes the actions of multiple people in a video, the video to be processed should generally contain multiple people.

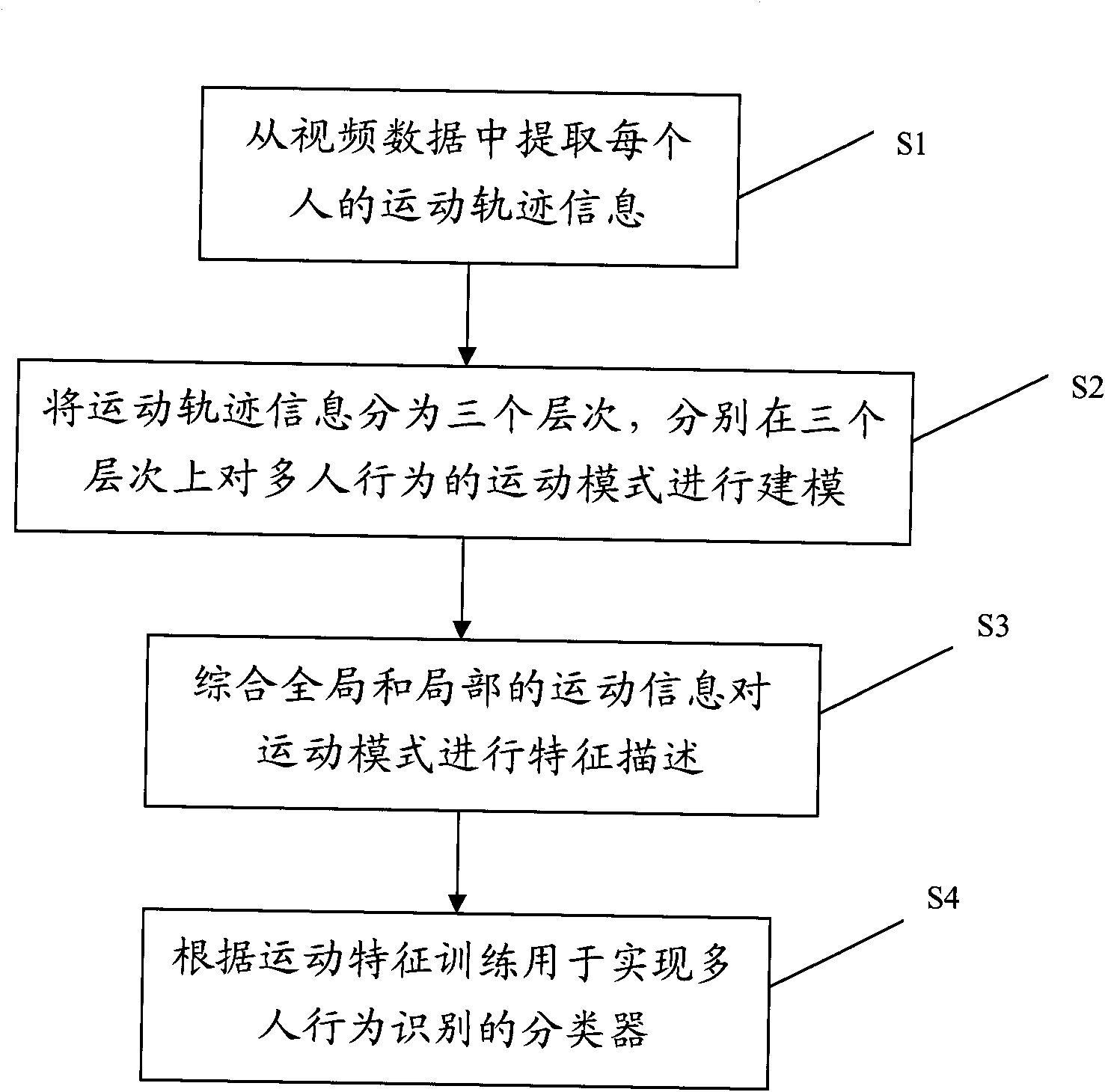

[0041] Referring to Figure 1, in step S1, the motion trajectory information of each person is extracted from video data containing the actions of multiple people. Extracting individual motion trajectories from video is common knowledge to those skilled in the art, and existing methods from the prior art can be used, such as detecting and tracking the moving objects in the video, respectively, so as to obtain the movement traject...
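Step S1 relies on standard detection and tracking. As a minimal sketch of one common prior-art approach (not necessarily the one used by the invention), the Python code below assumes that per-frame person detections are already available as (x, y) centroids and links them into per-person trajectories with greedy nearest-neighbour association. The function name `link_trajectories` and the `max_dist` gating threshold are hypothetical and chosen only for illustration.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]
Track = List[Tuple[int, Point]]  # [(frame_index, (x, y)), ...]


def link_trajectories(frames: List[List[Point]], max_dist: float = 50.0) -> Dict[int, Track]:
    """Hypothetical greedy nearest-neighbour tracker.

    frames[t] holds the detected centroids of the people in frame t
    (detection itself is assumed to be done beforehand).
    Returns {track_id: [(frame_index, (x, y)), ...]}, one entry per person.
    """
    tracks: Dict[int, Track] = {}
    active: Dict[int, Point] = {}  # track_id -> last known position
    next_id = 0
    for t, detections in enumerate(frames):
        # Collect every (distance, track, detection) pair inside the gating radius.
        candidates = []
        for tid, last in active.items():
            for j, det in enumerate(detections):
                d = math.dist(last, det)
                if d <= max_dist:
                    candidates.append((d, tid, j))
        candidates.sort()
        used_tracks, used_dets = set(), set()
        new_active: Dict[int, Point] = {}
        # Assign the closest pairs first; each track and detection is used at most once.
        for _, tid, j in candidates:
            if tid in used_tracks or j in used_dets:
                continue
            used_tracks.add(tid)
            used_dets.add(j)
            tracks[tid].append((t, detections[j]))
            new_active[tid] = detections[j]
        # Unmatched detections start new trajectories (a person entering the scene).
        for j, det in enumerate(detections):
            if j not in used_dets:
                tracks[next_id] = [(t, det)]
                new_active[next_id] = det
                next_id += 1
        # Tracks with no match in this frame are ended (no occlusion handling).
        active = new_active
    return tracks


if __name__ == "__main__":
    # Two people walking in parallel over three frames.
    frames = [
        [(0.0, 0.0), (100.0, 100.0)],
        [(2.0, 1.0), (103.0, 99.0)],
        [(4.0, 2.0), (106.0, 98.0)],
    ]
    for tid, track in link_trajectories(frames).items():
        print(tid, track)
```

Greedy association keeps the sketch short. Practical systems typically pair a learned person detector with a motion-model tracker (for example, Kalman-filter prediction plus optimal assignment via the Hungarian algorithm) to handle occlusions and crossing paths.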
