Quick depth video coding method

A depth video coding method applied in the field of multi-viewpoint video signal coding. It addresses problems such as poor temporal continuity, discontinuous depth, and high cost, and achieves the effects of preserving compression efficiency, reducing computational complexity, and ensuring accuracy.


Embodiment Construction

[0032] The present invention will be further described in detail below in conjunction with the accompanying drawings and embodiments.

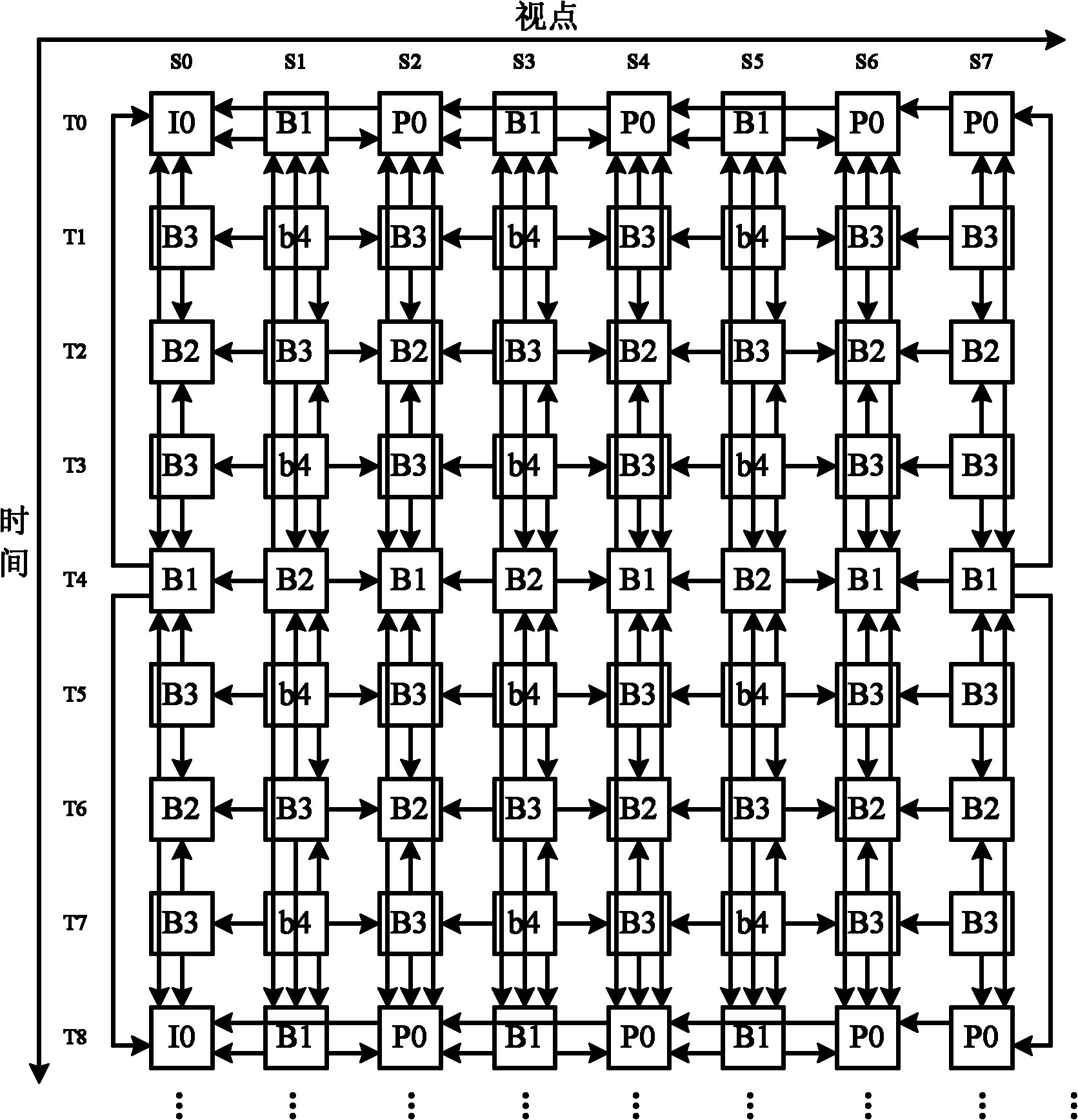

[0033] The fast depth video coding method proposed by the present invention divides all viewpoints in the multi-viewpoint depth video predictive coding structure into three categories: main viewpoint, first-level auxiliary viewpoint, and second-level auxiliary viewpoint. The main viewpoint is a viewpoint that performs only temporal prediction, without inter-viewpoint prediction. The first-level auxiliary viewpoint is a viewpoint whose key frames perform inter-viewpoint prediction and whose non-key frames perform only temporal prediction, without inter-viewpoint prediction. The second-level auxiliary viewpoint is a viewpoint whose key frames perform inter-viewpoint prediction and whose non-key frames perform both temporal prediction and inter-viewpoint prediction. The set of key frames of all viewpoints in the multi-viewpoint depth video prediction coding structur...
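The three viewpoint categories described in paragraph [0033] can be illustrated with a minimal Python sketch. The names `ViewType`, `TEMPORAL`, `INTER_VIEW`, and `allowed_predictions` are hypothetical and chosen for illustration only; they do not come from the patent. The sketch follows the wording of the paragraph literally and encodes only which prediction types each frame may use, not the coding method itself.

```python
from enum import Enum


class ViewType(Enum):
    """Viewpoint categories named in paragraph [0033] (identifiers are illustrative)."""
    MAIN = "main viewpoint"
    AUX_LEVEL_1 = "first-level auxiliary viewpoint"
    AUX_LEVEL_2 = "second-level auxiliary viewpoint"


# Labels for the two prediction types mentioned in the text (hypothetical names).
TEMPORAL = "temporal"
INTER_VIEW = "inter-view"


def allowed_predictions(view_type: ViewType, is_key_frame: bool) -> set:
    """Return the prediction types a frame may use, per the category definitions.

    Assumption: the main viewpoint is treated as temporal-only for every frame,
    because the text does not distinguish its key frames from its non-key frames.
    """
    if view_type is ViewType.MAIN:
        # Main viewpoint: temporal prediction only, no inter-view prediction.
        return {TEMPORAL}
    if is_key_frame:
        # Key frames of both auxiliary levels use inter-view prediction.
        return {INTER_VIEW}
    if view_type is ViewType.AUX_LEVEL_1:
        # First-level auxiliary, non-key frame: temporal prediction only.
        return {TEMPORAL}
    # Second-level auxiliary, non-key frame: temporal and inter-view prediction.
    return {TEMPORAL, INTER_VIEW}


if __name__ == "__main__":
    for view in ViewType:
        for key in (True, False):
            kind = "key frame" if key else "non-key frame"
            print(f"{view.value}, {kind}: {sorted(allowed_predictions(view, key))}")
```

Under this reading, only the non-key frames of second-level auxiliary viewpoints search both temporal and inter-view references, so they are the frames with the largest prediction search cost.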
