Fully automatic three-dimensional face detection and pose correction method

A fully automatic three-dimensional face technology, applied in the fields of image data processing, 3D modeling, instruments, etc. It addresses the problems of unstable curvature, error-proneness, and time-consuming, labor-intensive processing, and achieves high speed, high accuracy, and high reliability.



Embodiment Construction

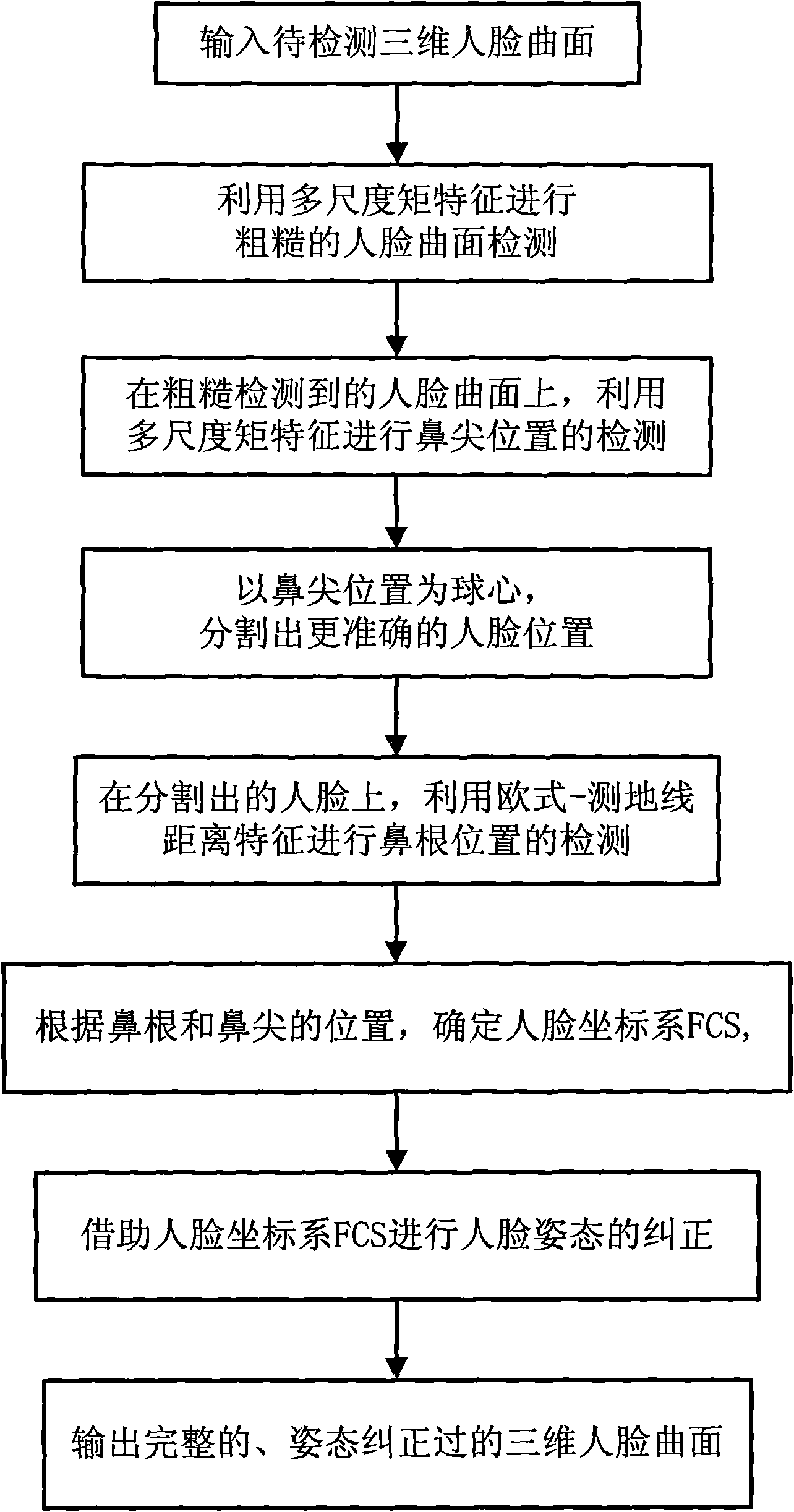

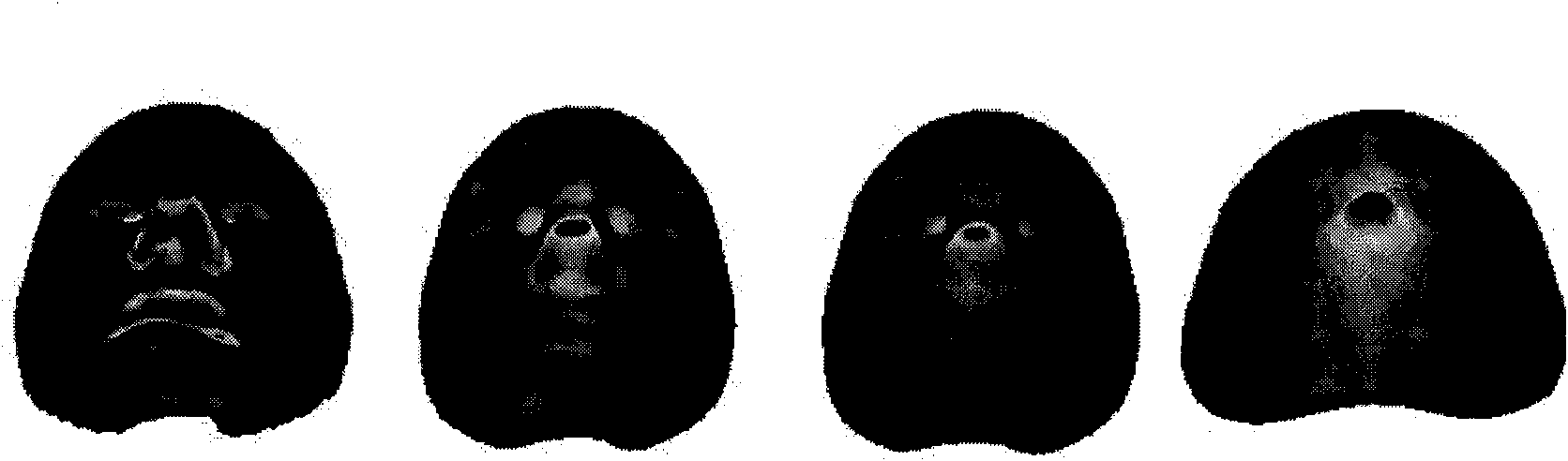

[0033] The three-dimensional face detection and pose correction method of the present invention (shown in Figure 1) takes as input a 3D face surface with complex interference, varied expressions, and different poses. Through multi-scale moment analysis of the 3D face surface, the method first proposes a facial region feature to roughly detect the face surface. It then proposes a nose tip region feature to accurately locate the nose tip, and uses that position to segment the complete face surface more precisely. Next, it proposes a nasal root region feature, based on distance information of the face surface, to detect the position of the nasal root. Finally, a face coordinate system is established from the positions of these feature points, and the face pose is automatically corrected accordingly. The output is a trimmed, complete, pose-corrected 3D face.
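The last two stages described above (segmenting the face around the nose tip, then building a face coordinate system from the feature points) can be illustrated with a minimal sketch. It is not the patented procedure: the text available here does not define the axes, so the sketch assumes the origin is the nose tip, the y axis points from the nose tip to the nasal root, and the z axis points roughly out of the face, estimated from the offset between the nose tip and the centroid of the cropped surface. The function names, the spherical crop, and the 90-unit radius are all hypothetical, and the landmark positions are assumed to have been detected already.

```python
import math

def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def unit(v):
    n = math.sqrt(dot(v, v))
    return tuple(x / n for x in v)

def crop_face(points, nose_tip, radius=90.0):
    """Keep points within `radius` of the nose tip (assumed crop rule)."""
    return [p for p in points if math.dist(p, nose_tip) <= radius]

def face_axes(face_points, nose_tip, nose_root):
    """Return orthonormal, right-handed face axes (x, y, z)."""
    # Assumed convention: y runs from the nose tip towards the nasal root.
    y = unit(sub(nose_root, nose_tip))
    # Assumed outward direction: the nose tip protrudes from the surface,
    # so it lies in front of the centroid of the cropped face.
    n = len(face_points)
    centroid = tuple(sum(p[i] for p in face_points) / n for i in range(3))
    outward = sub(nose_tip, centroid)
    # Gram-Schmidt step: remove the component of `outward` along y.
    z = unit(sub(outward, tuple(dot(outward, y) * c for c in y)))
    x = cross(y, z)
    return x, y, z

def correct_pose(points, nose_tip, nose_root, radius=90.0):
    """Crop the face and express it in the face coordinate system."""
    face = crop_face(points, nose_tip, radius)
    axes = face_axes(face, nose_tip, nose_root)
    return [tuple(dot(sub(p, nose_tip), a) for a in axes) for p in face]
```

Under these assumptions the nose tip maps to the origin and the nasal root lands on the positive y axis, whatever the pose of the input scan. The sketch breaks down if the nose tip to centroid direction is parallel to the nose tip to nasal root direction, which a real implementation would need to guard against.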

[0034] The specific process of the method of the ...
