Data balancing processing method, device and system based on Map and Reduce

A data balancing technique for Map and Reduce (MapReduce) processing. It addresses prolonged data processing time, reduced processing efficiency, and ineffective use of TaskTracker resources, with the aim of reducing total processing time, reducing load imbalance, and improving efficiency.


Detailed Description of the Embodiments

[0071] In order to make the purpose, technical solution and advantages of the present invention clearer, the present invention will be further described in detail below in conjunction with the accompanying drawings and specific embodiments.

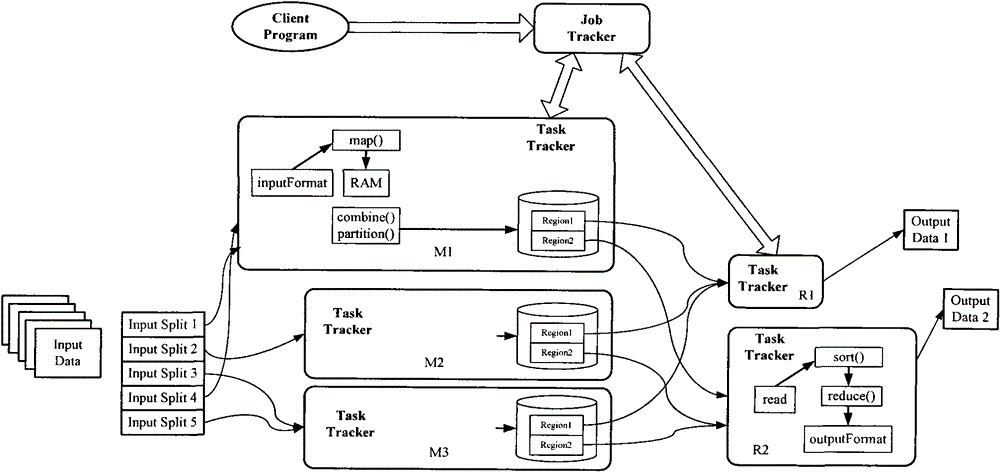

[0072] In the prior art, the intermediate results produced by the Mapper are stored in RAM. When these intermediate results are merged and partitioned, each record is assigned directly to a specified Reduce partition and written to the corresponding buffer, so the intermediate-result data load across the Reduce input buffers becomes unbalanced.
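To make the imbalance concrete, the sketch below assumes the prior-art assignment behaves like a default hash partitioner (Hadoop's `HashPartitioner`, for example, computes `(key.hashCode() & Integer.MAX_VALUE) % numReduceTasks`). The function names, the use of `zlib.crc32` as a deterministic stand-in for Java's `hashCode()`, and the skewed sample data are all hypothetical, chosen only for illustration.

```python
import zlib


def hash_partition(key: str, num_reducers: int) -> int:
    """Assign a key to one fixed Reduce partition, as a default hash partitioner does.

    zlib.crc32 is a deterministic stand-in (an assumption) for Java's String.hashCode().
    """
    return zlib.crc32(key.encode("utf-8")) % num_reducers


def reducer_loads(records, num_reducers):
    """Count how many intermediate records land in each Reduce input buffer."""
    loads = [0] * num_reducers
    for key, _value in records:
        loads[hash_partition(key, num_reducers)] += 1
    return loads


# Hypothetical skewed Mapper output: one hot key dominates the intermediate data.
records = [("hot", 1)] * 900 + [(f"k{i}", 1) for i in range(100)]
loads = reducer_loads(records, num_reducers=4)
print(loads, "max/mean =", max(loads) / (sum(loads) / len(loads)))
```

Because every record with the same key goes to the same fixed partition, whichever reducer receives `"hot"` holds at least 900 of the 1,000 records, while the others sit mostly idle.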

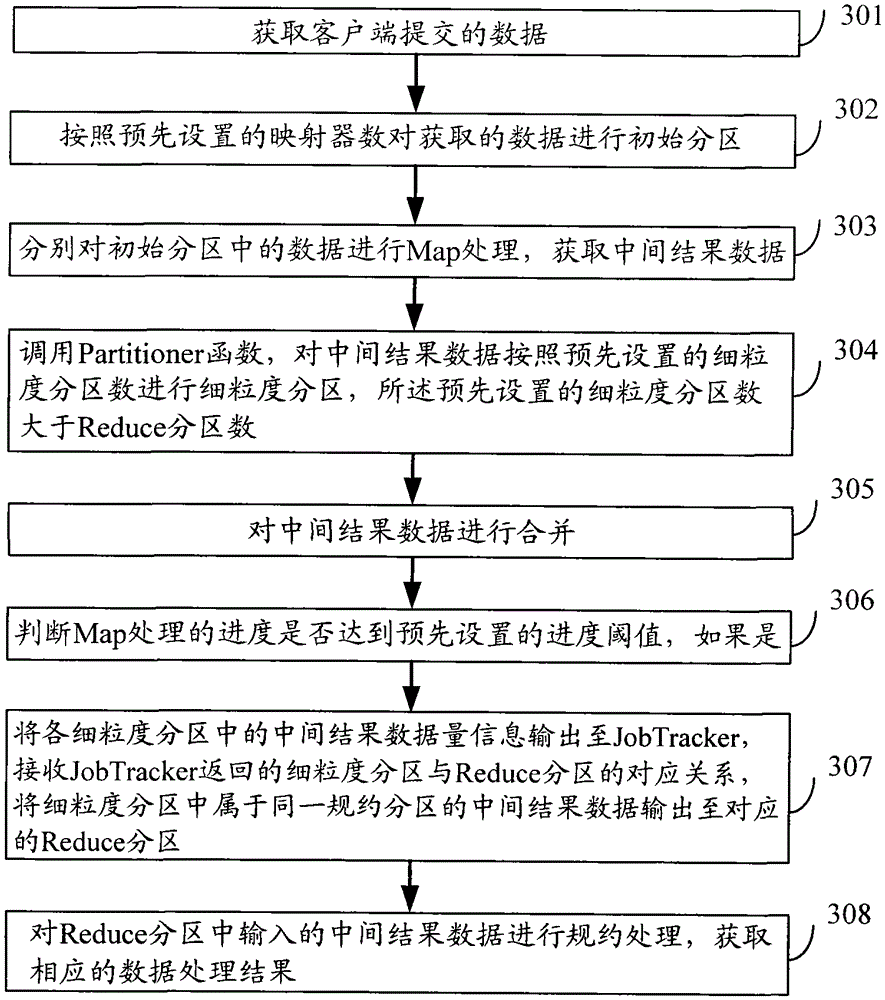

[0073] In the embodiment of the present invention, before the intermediate result data stored in RAM is partitioned, fine-grained partition preprocessing is performed on that data to obtain the distribution of the intermediate result data over the fine-grained partitions; then, according to the acquired data distribution and a preset balancing strategy, the i...
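The paragraph is cut off before the balancing strategy is specified, so the sketch below is not the patented method. It only illustrates the general idea under stated assumptions: keys are hashed into many more fine-grained partitions than there are reducers, the record count per fine-grained partition is collected as the data distribution, and a swapped-in greedy "largest partition to least-loaded reducer" (LPT) rule stands in for the unspecified preset strategy. All names and the sample data are hypothetical.

```python
import heapq
import zlib
from collections import Counter


def fine_partition(key: str, num_fine: int) -> int:
    # Hypothetical fine-grained partition function: many more buckets than reducers.
    return zlib.crc32(key.encode("utf-8")) % num_fine


def fine_distribution(records, num_fine):
    """Preprocessing step: record count per fine-grained partition."""
    return Counter(fine_partition(key, num_fine) for key, _ in records)


def balance(distribution, num_reducers):
    """Assumed balancing strategy (greedy LPT): take fine partitions largest first
    and give each to the reducer with the smallest current load."""
    heap = [(0, r) for r in range(num_reducers)]  # entries are (load, reducer id)
    heapq.heapify(heap)
    assignment = {}
    for part, size in sorted(distribution.items(), key=lambda kv: (-kv[1], kv[0])):
        load, r = heapq.heappop(heap)
        assignment[part] = r
        heapq.heappush(heap, (load + size, r))
    loads = [0] * num_reducers
    for load, r in heap:
        loads[r] = load
    return assignment, loads


# Hypothetical skewed Mapper output: a few heavy keys plus many light ones.
records = ([(f"heavy{i}", 1) for i in range(4) for _ in range(100)]
           + [(f"light{i}", 1) for i in range(400)])
dist = fine_distribution(records, num_fine=32)
assignment, loads = balance(dist, num_reducers=4)
print(loads)
```

Greedy assignment of this kind guarantees that no reducer exceeds the average load by more than the size of the largest fine-grained partition. A single very hot key still cannot be split, since all of its records fall into one fine-grained partition.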

