Moving object segmentation using depth images

A technology relating to depth images and moving objects, applied in image analysis, image enhancement and image data processing, and intended to address problems such as computationally complex algorithms.
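
The summary only names the goal. The following is a minimal sketch of one generic way to segment a moving object from depth data: per-pixel differencing of a reference depth frame against the current frame, using a fixed threshold. It is not the method described or claimed in this patent. The function name `segment_moving`, the 5 cm default threshold, and the convention that a depth of 0.0 means "no reading" are all assumptions made for illustration.

```python
from typing import List

# Assumption: each depth image is a row-major grid of depths in metres,
# where 0.0 marks a pixel with no valid sensor reading.
DepthImage = List[List[float]]


def segment_moving(reference: DepthImage, current: DepthImage,
                   threshold: float = 0.05) -> List[List[bool]]:
    """Return a mask that is True where depth changed by more than `threshold`.

    Hypothetical helper: a plain frame-differencing baseline, not the
    patented technique. Pixels without a valid reading in either frame
    are treated as static.
    """
    if len(reference) != len(current):
        raise ValueError("depth images must have the same shape")
    mask: List[List[bool]] = []
    for ref_row, cur_row in zip(reference, current):
        if len(ref_row) != len(cur_row):
            raise ValueError("depth images must have the same shape")
        row_mask = []
        for ref_d, cur_d in zip(ref_row, cur_row):
            valid = ref_d > 0.0 and cur_d > 0.0
            # A pixel counts as "moving" only if both readings are valid
            # and the depth difference exceeds the threshold.
            row_mask.append(valid and abs(cur_d - ref_d) > threshold)
        mask.append(row_mask)
    return mask


if __name__ == "__main__":
    reference = [[2.0, 2.0, 2.0], [2.0, 2.0, 2.0]]
    current = [[2.0, 1.2, 2.01], [0.0, 1.3, 2.0]]
    print(segment_moving(reference, current))
```

With the sample frames above, only the two pixels where something moved about 0.7 to 0.8 m closer to the sensor are marked, giving `[[False, True, False], [False, True, False]]`. The 0.01 m change falls below the threshold, and the pixel with no reading is ignored. A fixed threshold is the simplest choice. In practice, depth noise typically grows with distance, so a depth-dependent threshold may be more appropriate.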

Embodiment Construction

[0018] The detailed description provided below in connection with the accompanying drawings is intended as a description of examples of the invention and is not intended to represent the only forms in which examples of the invention may be constructed or used. This description sets forth the functionality of an example of the invention, and a sequence of steps for building and operating the example of the invention. However, the same or equivalent functions and sequences can be implemented by different examples.

[0019] Although examples of the present invention are described and shown herein as being implemented in a computer gaming system, the described system is provided by way of example only, and not limitation. Those skilled in the art will appreciate that the present example is suitable for application in a variety of computing systems that use 3D models.

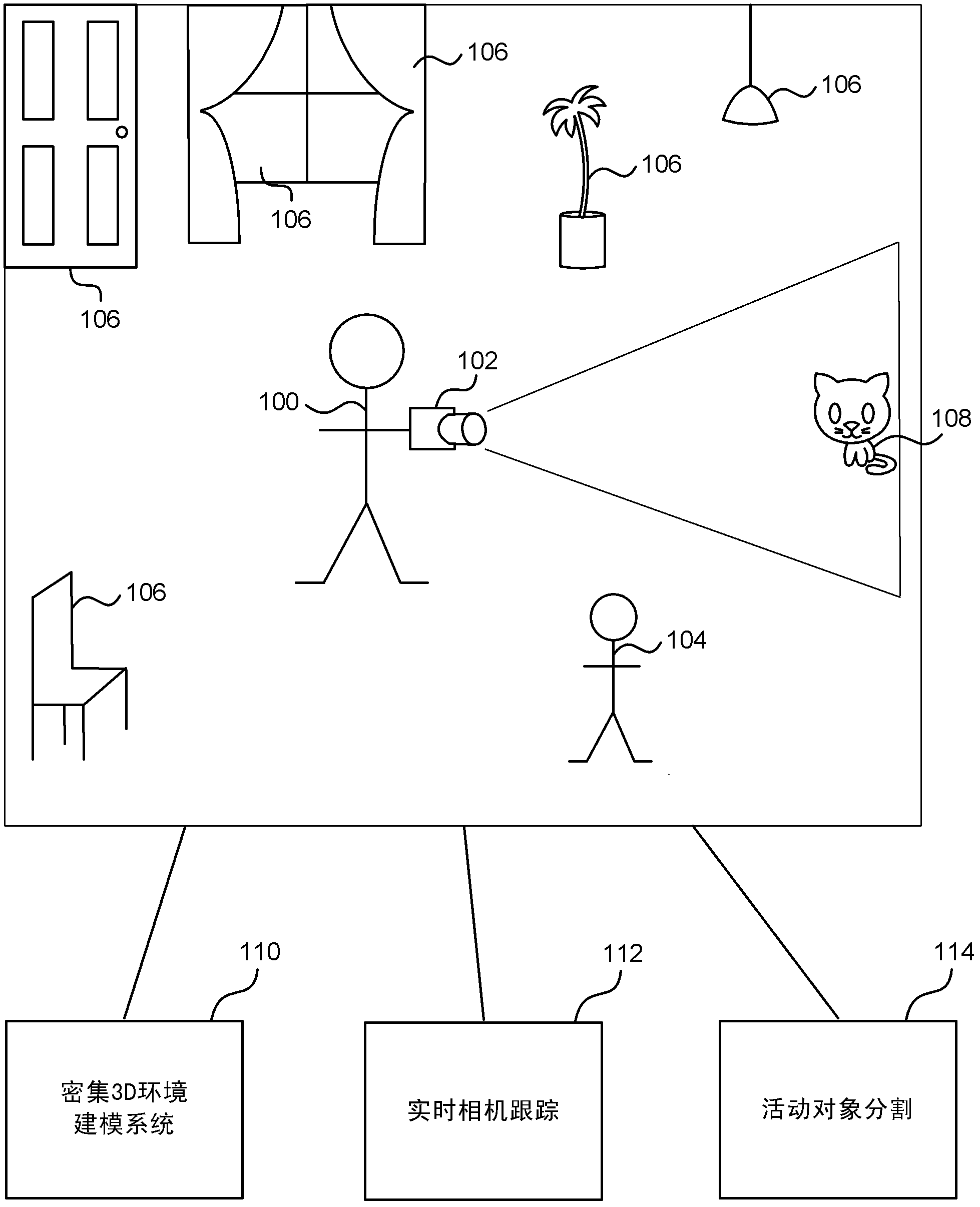

[0020] Figure 1 is a schematic diagram of a person 100 standing in a room and holding a mobile de...