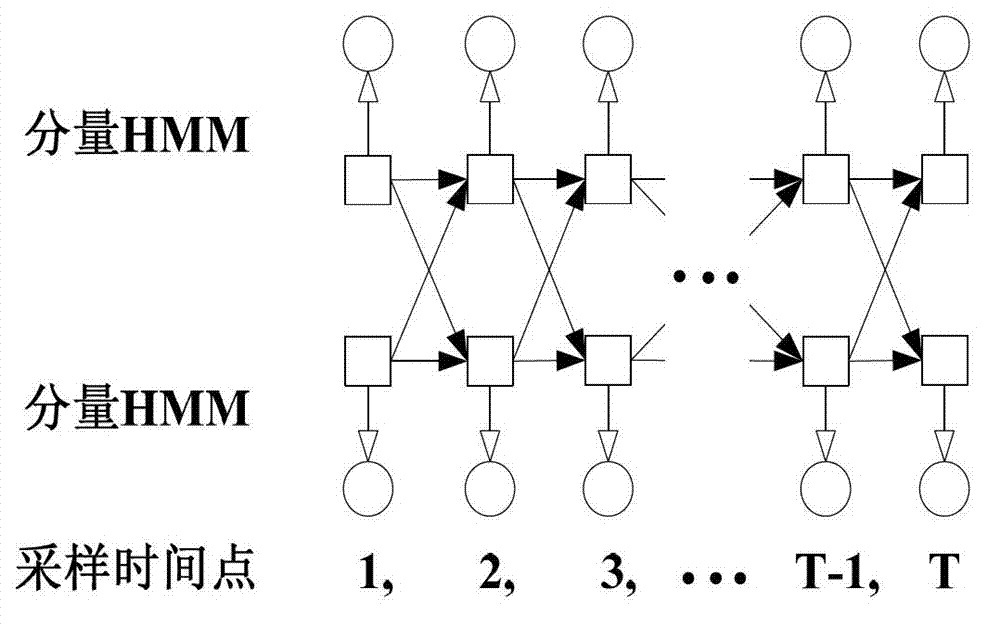

Emotion recognition method based on enhanced coupled hidden Markov model (HMM) voice-vision fusion

An emotion recognition technology, applied in character and pattern recognition, instruments, computing, etc., that addresses the problem of low recognition rates.


AI Technical Summary

Problems solved by technology

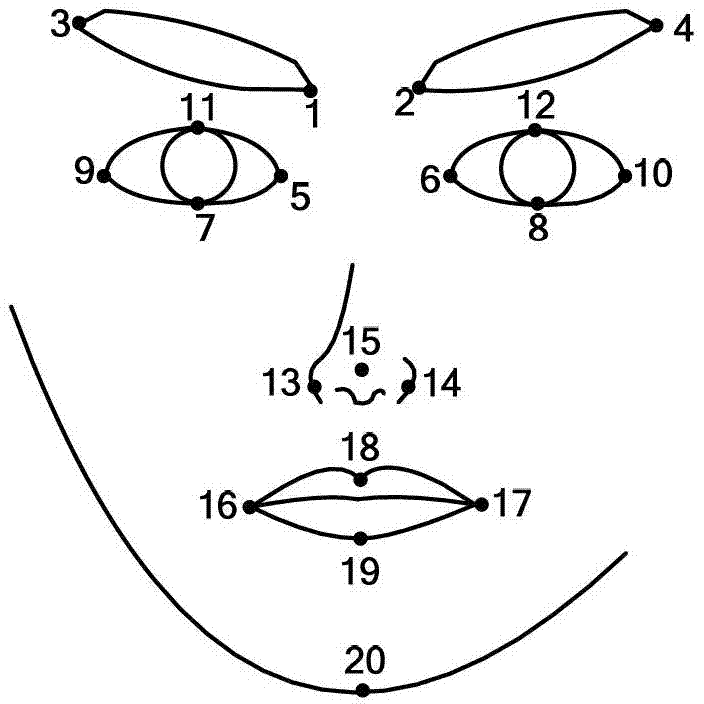

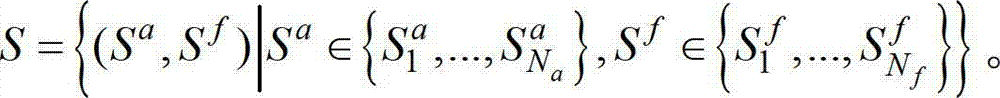


Detailed Description of Embodiments

[0143] The implementation of the method of the present invention will be described in detail below in conjunction with the accompanying drawings and specific examples.

[0144] In this example, five subjects (two male and three female) read sentences expressing seven basic emotions (happy, sad, angry, disgusted, fearful, surprised and neutral) in a guided (Wizard of Oz) scenario, while a front-facing camera simultaneously recorded facial-expression images and speech. The script contains three different sentences for each emotion, and each subject repeats each sentence five times. The emotional video data of four randomly selected subjects are used as the training set, and the video data of the remaining subject are used as the test set, so the whole recognition process is subject-independent. The experimental data were then re-labeled using coarse classification in the activation-evaluation space, that is, samples were divided into positive and negative categories along the ...
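The protocol above fixes the size of the corpus and the shape of the train/test split. The following Python sketch assumes that each spoken repetition of a sentence counts as one audio-visual sample (the text does not say how samples are segmented) and enumerates the recordings: 5 subjects × 7 emotions × 3 sentences × 5 repetitions = 525 samples, or 105 per subject, which leaves 420 for training and 105 for testing. The helper names `enumerate_samples` and `subject_independent_split` are hypothetical and used only for illustration. The positive/negative relabeling is omitted because its criterion is cut off in the source.

```python
import random
from itertools import product

# Protocol figures taken from the text: 5 subjects, 7 emotions,
# 3 sentences per emotion, 5 repetitions of each sentence.
EMOTIONS = ["happy", "sad", "angry", "disgusted", "fearful", "surprised", "neutral"]
NUM_SUBJECTS = 5
SENTENCES_PER_EMOTION = 3
REPETITIONS = 5


def enumerate_samples():
    """Return one (subject, emotion, sentence, repetition) tuple per recording.

    Assumption: every repetition of a sentence is treated as one sample.
    """
    return list(product(range(NUM_SUBJECTS), EMOTIONS,
                        range(SENTENCES_PER_EMOTION), range(REPETITIONS)))


def subject_independent_split(samples, test_subject):
    """Hold out every recording of one subject and train on the other four."""
    train = [s for s in samples if s[0] != test_subject]
    test = [s for s in samples if s[0] == test_subject]
    return train, test


if __name__ == "__main__":
    samples = enumerate_samples()
    # Picking the held-out subject at random is equivalent to randomly
    # selecting the four training subjects, as the text describes.
    test_subject = random.randrange(NUM_SUBJECTS)
    train, test = subject_independent_split(samples, test_subject)
    print(len(samples), len(train), len(test))  # 525 420 105
```

Because no subject appears in both sets, any accuracy measured on the test set reflects generalization to an unseen speaker and face.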

© 2024 PatSnap. All rights reserved.