Real-time registration method for depth maps captured by Kinect and video captured by a color camera

A color camera and depth map technology, applied in image communication, electrical components, stereo systems, and related fields, that addresses the problem of depth data noise degrading calculation efficiency and reduces its impact.

AI Technical Summary

Problems solved by technology

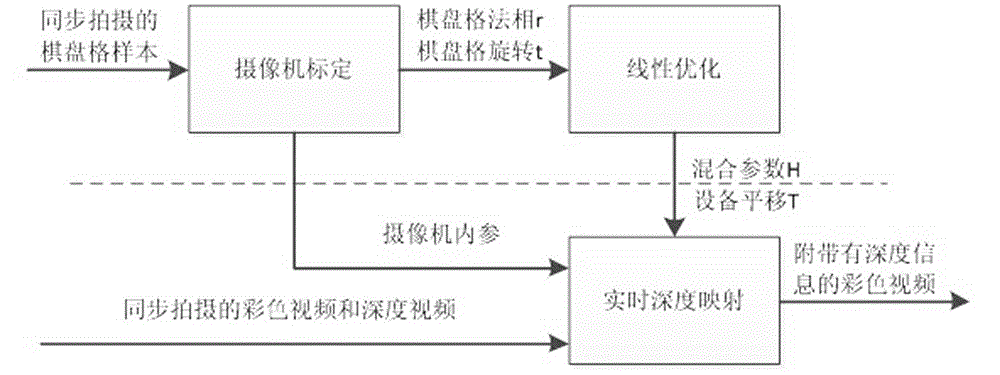

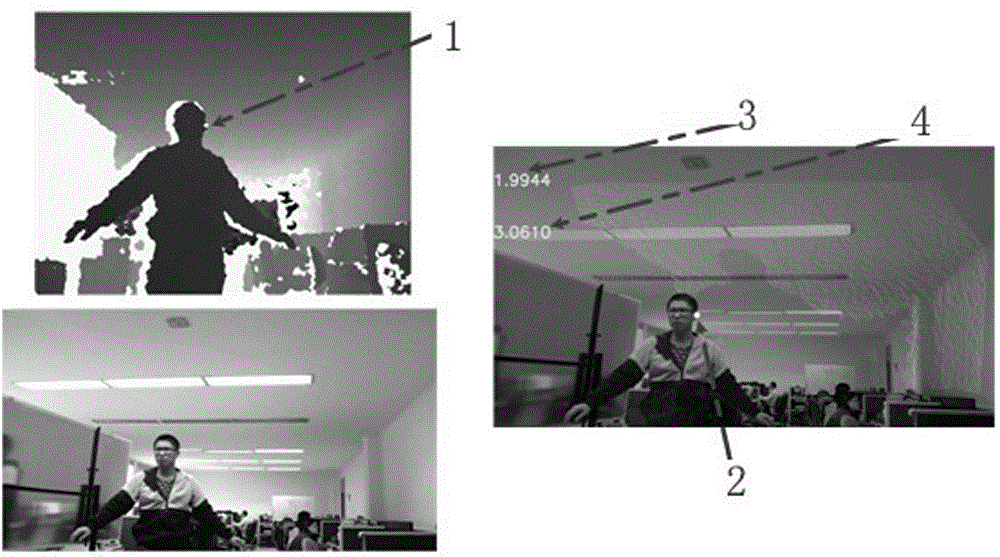

Examples

Embodiment Construction

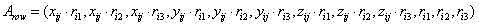

[0035] First, define the symbols used in the following description:

[0036] : The camera intrinsic parameters;

[0037] : 3D rotation matrix of the checkerboard coordinate system relative to the camera coordinate system;

[0038] : The third column vector of the 3D rotation matrix of the checkerboard coordinate system relative to the camera coordinate system;

[0039] : The translation of the checkerboard coordinate system relative to the camera coordinate system;

[0040] : The product of the depth of the j-th point on the checkerboard in the i-th sample and the homogeneous representation of that point's two-dimensional coordinates in the depth image captured by the Kinect;

[0041] : The mixed parameter formed from the rotation between the camera coordinate system and the Kinect coordinate system and the Kinect intrinsic parameters;

[0042] : The translation between the camera coordinate system and the Kinect coordinate system.
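To make the roles of these quantities concrete, the following is a minimal sketch in plain Python, not the patent's own procedure. It rests on several assumptions that this excerpt does not confirm: both devices follow a pinhole model, the mixed parameter of [0041] equals the rotation multiplied by the inverse of the Kinect intrinsic matrix, and the rotation and translation of [0041] and [0042] map Kinect coordinates into color camera coordinates. All function and variable names are hypothetical.

```python
# Hypothetical names throughout; the patent's own symbols are not shown in this excerpt.

def mat_vec(m, v):
    """Multiply a 3x3 matrix (list of rows) by a 3-vector."""
    return [sum(m[r][c] * v[c] for c in range(3)) for r in range(3)]


def mat_mul(a, b):
    """Multiply two 3x3 matrices given as lists of rows."""
    return [[sum(a[r][k] * b[k][c] for k in range(3)) for c in range(3)]
            for r in range(3)]


def invert_intrinsics(k):
    """Invert a pinhole intrinsic matrix [[fx, 0, cx], [0, fy, cy], [0, 0, 1]]."""
    fx, fy, cx, cy = k[0][0], k[1][1], k[0][2], k[1][2]
    return [[1.0 / fx, 0.0, -cx / fx],
            [0.0, 1.0 / fy, -cy / fy],
            [0.0, 0.0, 1.0]]


def mixed_parameter(rotation, k_depth):
    """ASSUMPTION: mixed parameter = rotation * inverse(Kinect intrinsics)."""
    return mat_mul(rotation, invert_intrinsics(k_depth))


def depth_pixel_to_color_pixel(u, v, depth, mixed, translation, k_color):
    """Map a Kinect depth pixel (u, v) with its depth to a color-image pixel."""
    # Depth times the homogeneous pixel coordinates, as in definition [0040].
    q = [depth * u, depth * v, depth]
    # 3D point in the color camera frame (assumed direction: Kinect -> camera).
    x_cam = [a + b for a, b in zip(mat_vec(mixed, q), translation)]
    # Pinhole projection with the color camera intrinsics of [0036].
    p = mat_vec(k_color, x_cam)
    return p[0] / p[2], p[1] / p[2]


def plane_residual(x_cam, r3, t_board):
    """Signed distance from a camera-frame point to the checkerboard plane.

    r3 is the third column of the checkerboard rotation ([0038]), which is the
    plane normal in camera coordinates; t_board is the translation of [0039].
    """
    return sum(n * (x - t) for n, x, t in zip(r3, x_cam, t_board))
```

With an identity rotation, zero translation, and identical intrinsics on both devices, `depth_pixel_to_color_pixel` returns the input pixel unchanged, which is a quick sanity check. `plane_residual` is zero for any point lying on the checkerboard, so it is one plausible error term for a calibration that compares Kinect depth samples against the checkerboard pose seen by the color camera.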

[00...
