Human face feature point positioning method and device thereof

A facial feature point positioning technology, applied in the field of image processing, that addresses the problems of uneven movement, slow processing speed, and a large amount of calculation, and achieves a good visual effect, smooth movement, and improved processing speed.


Examples

First embodiment

[0028] Figure 1 is a schematic diagram of the environment in which the facial feature point positioning method provided by the first embodiment of the present invention is applied. In this embodiment, the method is applied to an electronic device 1, which further includes a screen 10 for outputting images. The electronic device 1 is, for example, a computer, a mobile electronic terminal, or another similar computing device.

[0029] The facial feature point positioning method is described in detail below in conjunction with specific embodiments:

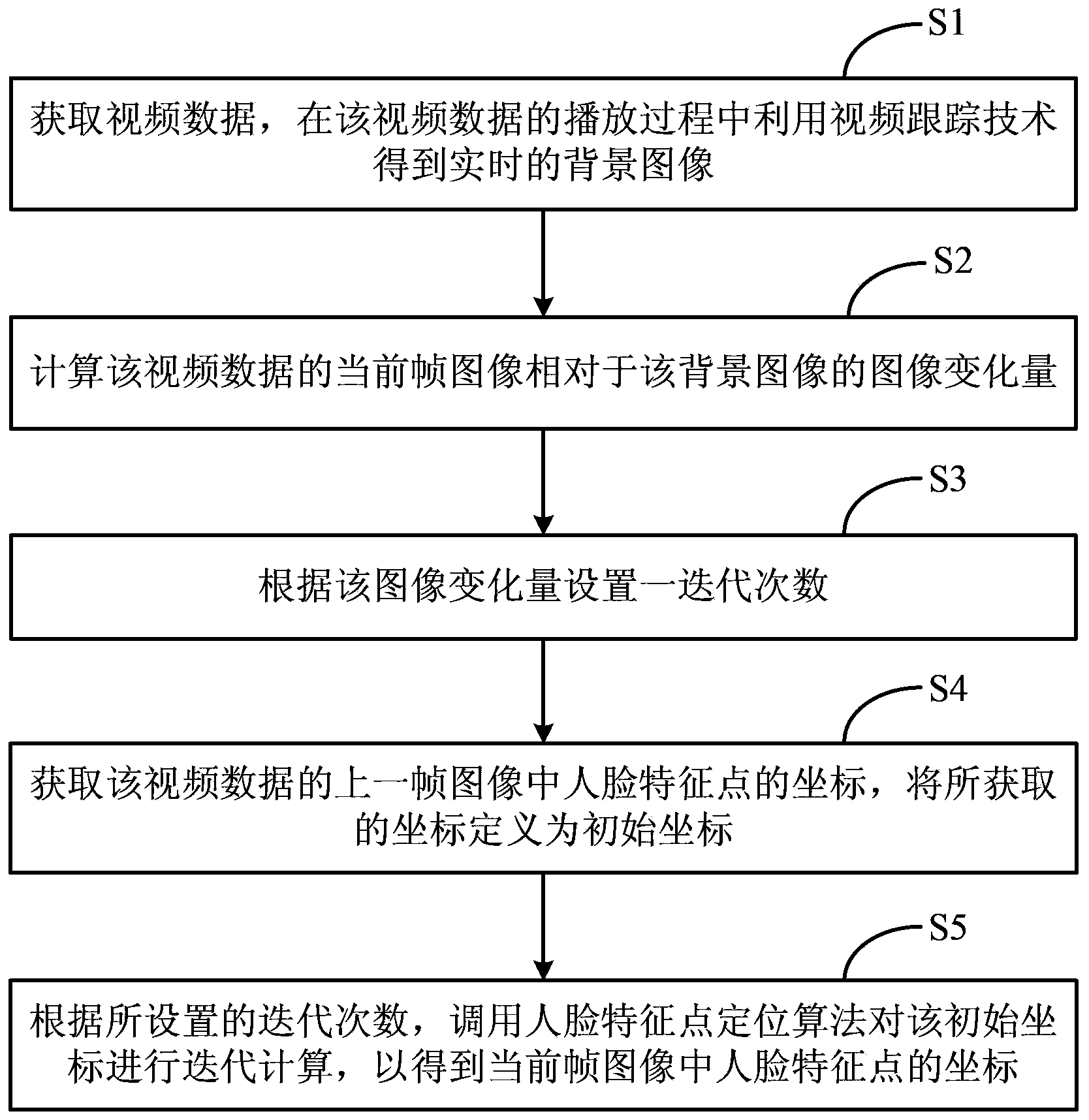

[0030] Figure 2 is a flowchart of the facial feature point positioning method provided by the first embodiment. The method includes the following steps:

[0031] Step S1, obtaining video data, and using video tracking technology to obtain a real-time background image during the playback of the video data...

Second embodiment

[0052] According to the facial feature point positioning method provided in the first embodiment, locating the coordinates of the facial feature points in the current frame image of the video data requires performing the iterative calculation process on the current frame image. In practice, however, if the face does not move, the current frame image may hardly change compared to the previous frame image. Performing the iterative calculation on a current frame image that is almost unchanged relative to the previous frame image therefore adds unnecessary calculation and reduces the processing speed.

[0053] To further address the above issue, as shown in Figure 7, the second embodiment of the present invention provides a method for locating facial feature points. Compared with the method for locating facial feature points in the first embo...
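The motivation in [0052] can be illustrated with a minimal sketch. The excerpt does not show how the second embodiment decides that a frame is "almost unchanged", so the mean-absolute-difference test, the `threshold` value, and the names `frame_changed`, `locate_with_skip`, and `locate_fn` below are all assumptions made for illustration, not the patented procedure.

```python
import numpy as np


def frame_changed(prev_frame, curr_frame, threshold=2.0):
    """Return True when the mean absolute pixel difference exceeds threshold.

    The difference measure and the threshold are illustrative assumptions.
    """
    diff = np.abs(curr_frame.astype(np.float32) - prev_frame.astype(np.float32))
    return float(diff.mean()) > threshold


def locate_with_skip(prev_frame, curr_frame, prev_points, locate_fn, threshold=2.0):
    """Reuse the previous feature points when the frame is nearly unchanged.

    locate_fn is a hypothetical stand-in for the iterative calculation of the
    first embodiment; it takes a frame and returns a list of (x, y) points.
    """
    if prev_points is not None and not frame_changed(prev_frame, curr_frame, threshold):
        # Frame is almost identical to the previous one: skip the iteration.
        return prev_points
    # Frame changed (or there is no earlier result): run the full calculation.
    return locate_fn(curr_frame)
```

With this arrangement the costly iteration runs only on frames that differ noticeably from their predecessor, which is the saving that [0052] argues for.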

Third embodiment

[0058] According to the facial feature point positioning method provided in the first embodiment or the second embodiment, locating the coordinates of the facial feature points in the current frame image of the video data requires performing the iterative calculation process on the current frame image as a whole. In fact, since the moving range of the human face is limited, it is only necessary to perform the iterative calculation process on the area where the moving range of the human face is located. Performing the iterative calculation on the current frame image as a whole therefore adds unnecessary calculation and reduces the processing speed.

[0059] To further solve the above problem, the third embodiment of the present invention provides a method for locating facial feature points. Compared with the method for locating facial feature points in the first embodiment or the second embodiment, as shown in Figure 8 (in t...
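The idea in [0058] of restricting the calculation to the area the face can move within can be sketched as follows. The excerpt does not show how the third embodiment determines that area, so this sketch simply assumes it is the previous face bounding box enlarged by a fixed `margin`; the names `expand_box`, `locate_in_region`, and `locate_fn` are hypothetical.

```python
import numpy as np


def expand_box(box, margin, width, height):
    """Grow an (x0, y0, x1, y1) box by margin pixels, clipped to the frame."""
    x0, y0, x1, y1 = box
    return (max(0, x0 - margin), max(0, y0 - margin),
            min(width, x1 + margin), min(height, y1 + margin))


def locate_in_region(frame, prev_box, locate_fn, margin=20):
    """Run the locator on a cropped region and map points back to the frame.

    locate_fn is a hypothetical stand-in for the iterative calculation; it
    takes an image and returns (x, y) points in that image's coordinates.
    """
    height, width = frame.shape[:2]
    x0, y0, x1, y1 = expand_box(prev_box, margin, width, height)
    crop = frame[y0:y1, x0:x1]
    # Shift crop-relative coordinates back into full-frame coordinates.
    return [(x + x0, y + y0) for (x, y) in locate_fn(crop)]
```

Because the locator only sees the cropped region, its cost scales with the size of that region rather than with the full frame.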
