Apparatus and method for generating an emoticon based on a captured image

An emoticon generation technology and apparatus, applied in fields such as image communication, color TV parts, and TV system parts. It addresses problems such as time-consuming editing and large data traffic, and achieves the effects of enriching content and saving traffic and time.

Embodiment Construction

[0063] Hereinafter, exemplary embodiments of the present invention will be described in detail with reference to the accompanying drawings, in which like reference numerals refer to like components throughout.

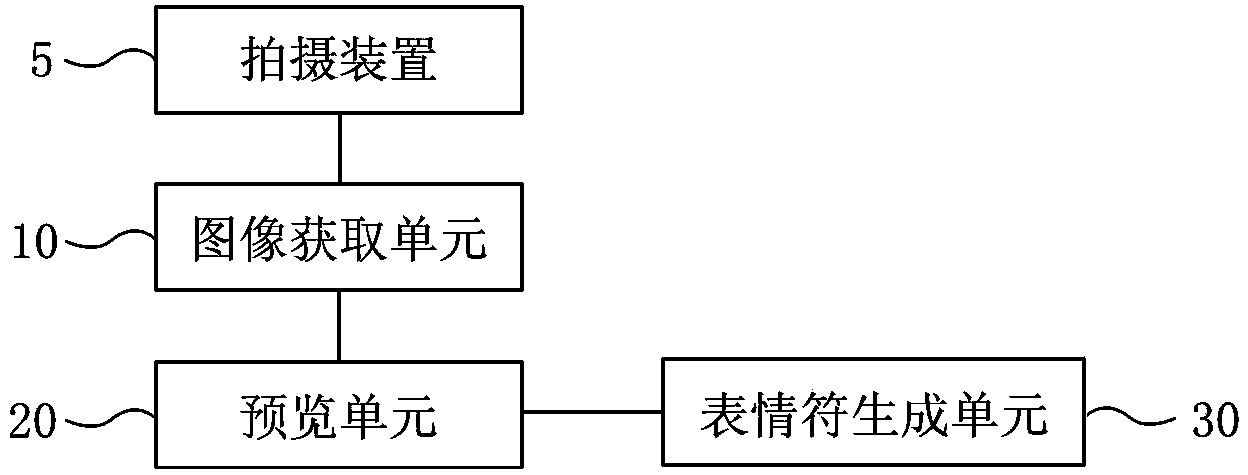

[0064] Figure 1 is a block diagram illustrating an apparatus for generating an emoticon based on a captured image when a user inputs a message, according to an exemplary embodiment of the present invention. As an example, while a user is inputting a message on an electronic product such as a personal computer, a smartphone, or a tablet, the emoticon generating device illustrated in Figure 1 can be used to generate emoticons based on captured images.

[0065] As shown in Figure 1, the emoticon generation device includes: an image acquisition unit 10, which acquires an image taken by the photographing device 5; a preview unit 20, which generates an emoticon effect map for preview based on the acquired image, and display the emoticon to the use...
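The two units named in paragraph [0065] can be pictured with a minimal Python sketch. Everything in it is an assumption made for illustration: the class names, the `capture` callable standing in for photographing device 5, the pixel-grid image type, and the nearest-neighbour downscale used as a placeholder for the emoticon effect map, whose actual construction the excerpt does not describe.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

Pixel = Tuple[int, int, int]
Image = List[List[Pixel]]  # rows of RGB pixels (assumed representation)


@dataclass
class ImageAcquisitionUnit:
    """Stands in for unit 10: obtains an image from the photographing device."""
    capture: Callable[[], Image]  # hypothetical stand-in for photographing device 5

    def acquire(self) -> Image:
        return self.capture()


@dataclass
class PreviewUnit:
    """Stands in for unit 20: builds a preview-sized effect map from the image."""
    size: int = 2  # assumed preview edge length in pixels

    def effect_map(self, image: Image) -> Image:
        # Nearest-neighbour downscale; an assumed placeholder for the real effect.
        h, w = len(image), len(image[0])
        return [
            [image[r * h // self.size][c * w // self.size] for c in range(self.size)]
            for r in range(self.size)
        ]


def fake_camera() -> Image:
    # 4x4 test image whose red channel encodes the pixel position.
    return [[(r * 4 + c, 0, 0) for c in range(4)] for r in range(4)]


acquisition = ImageAcquisitionUnit(capture=fake_camera)
preview = PreviewUnit(size=2).effect_map(acquisition.acquire())
```

Keeping acquisition and preview as separate objects mirrors the unit boundaries in the block diagram, so either one could be swapped (a real camera, a different effect) without touching the other.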
