A method and device for recognizing throwing actions based on a single attitude sensor

The single-sensor action-recognition technique, applied in the field of motion capture, addresses problems such as the inability to capture the action and the resulting degraded interactive experience.


Embodiment Construction

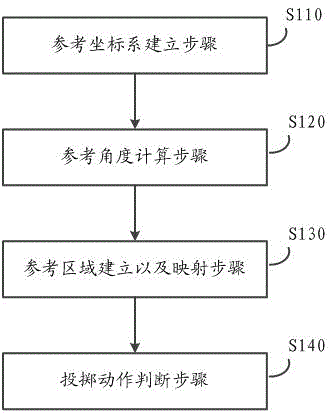

[0044] The present invention will be further described in detail below in conjunction with the accompanying drawings and embodiments. It should be understood that the specific embodiments described here are only used to explain the present invention, but not to limit the present invention. In addition, it should be noted that, for the convenience of description, only some structures related to the present invention are shown in the drawings but not all structures.

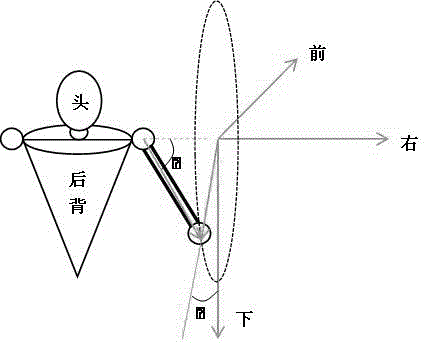

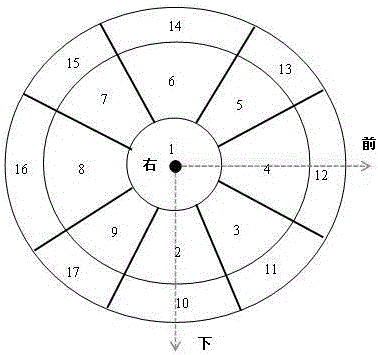

[0045] A throwing action can be regarded as a sequence of basic actions performed within a short period of time. All possible postures of the right upper arm are partitioned in a reference coordinate system: the right half-space of that coordinate system is divided into several regions, and each region represents a basic state. Over the course of a throw, the state of the right upper arm switches continuously and sequentially from one of several possible initial states to the final state.
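The region-and-state idea in the preceding paragraph can be illustrated with a minimal Python sketch. Everything specific in it is an assumption made for illustration and is not taken from the patent: the axis convention (+x to the wearer's right, +z up), the split of the right half-space into four equal elevation bands, the choice of initial and final states, and the function names `region_of` and `is_throw`. The actual region boundaries and state transitions are whatever the full description defines.

```python
import math
from typing import Iterable, List, Optional, Tuple

Vector = Tuple[float, float, float]

# Assumptions for illustration only (not from the patent text):
NUM_REGIONS = 4            # right half-space split into 4 elevation bands
INITIAL_STATES = {2, 3}    # arm raised at or above horizontal
FINAL_STATE = 0            # arm pointing steeply downward


def region_of(direction: Vector) -> Optional[int]:
    """Map an upper-arm direction vector to a region index (basic state).

    Returns None when the vector is zero or lies outside the right
    half-space (x < 0 under the assumed axis convention).
    """
    x, y, z = direction
    norm = math.sqrt(x * x + y * y + z * z)
    if norm == 0.0 or x < 0.0:
        return None
    # Elevation angle in [-pi/2, pi/2]; clamp to guard against rounding.
    elevation = math.asin(max(-1.0, min(1.0, z / norm)))
    band = int((elevation + math.pi / 2) / math.pi * NUM_REGIONS)
    return min(band, NUM_REGIONS - 1)


def is_throw(directions: Iterable[Vector]) -> bool:
    """Check whether a series of arm directions passes through the regions
    sequentially, from an initial state down to the final state."""
    states: List[int] = []
    for d in directions:
        s = region_of(d)
        if s is None:
            return False          # arm left the right half-space
        if not states or states[-1] != s:
            states.append(s)      # record only state changes
    if not states:
        return False
    if states[0] not in INITIAL_STATES or states[-1] != FINAL_STATE:
        return False
    # Each switch must move to the adjacent lower region (no skipping).
    return all(a - b == 1 for a, b in zip(states, states[1:]))
```

In this sketch the direction vectors would come from the attitude sensor's orientation output, and a sequence is accepted only if every state switch moves to the neighbouring region, which mirrors the "continuously and sequentially" condition above.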

[0046]...
