Big data appliance realizing method based on CPU-GPU heterogeneous cluster

A heterogeneous-cluster implementation method, applied in the field of cloud computing, that addresses the low operating efficiency of massive data computing and thereby improves computing efficiency.

Examples

Embodiment 1

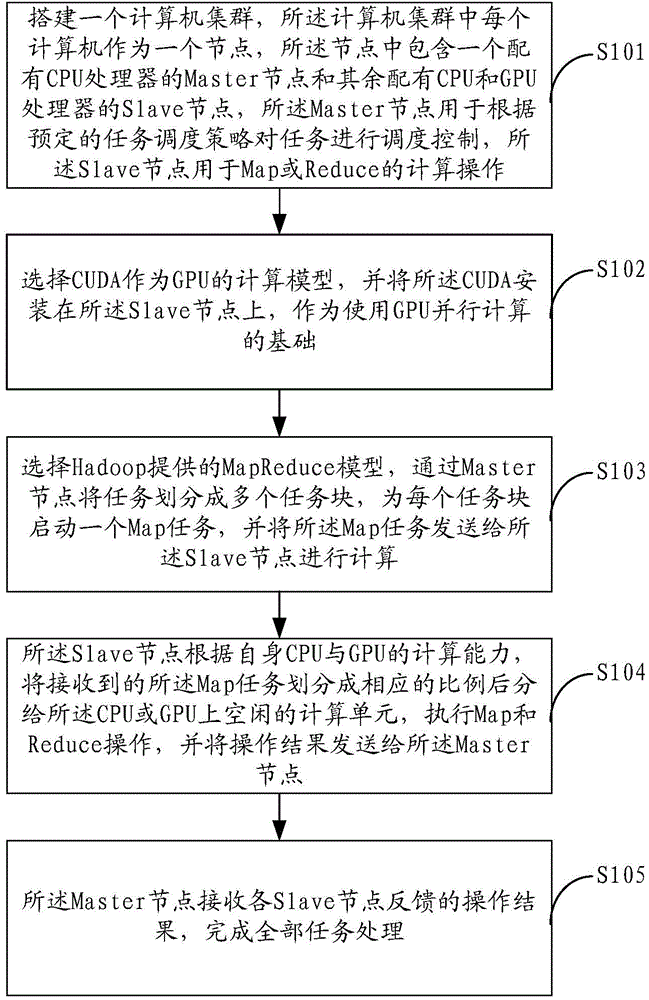

[0019] Figure 1 shows the implementation process of the method for realizing a big data appliance based on a CPU-GPU heterogeneous cluster, as provided by Embodiment 1 of the present invention. The process is described in detail as follows:

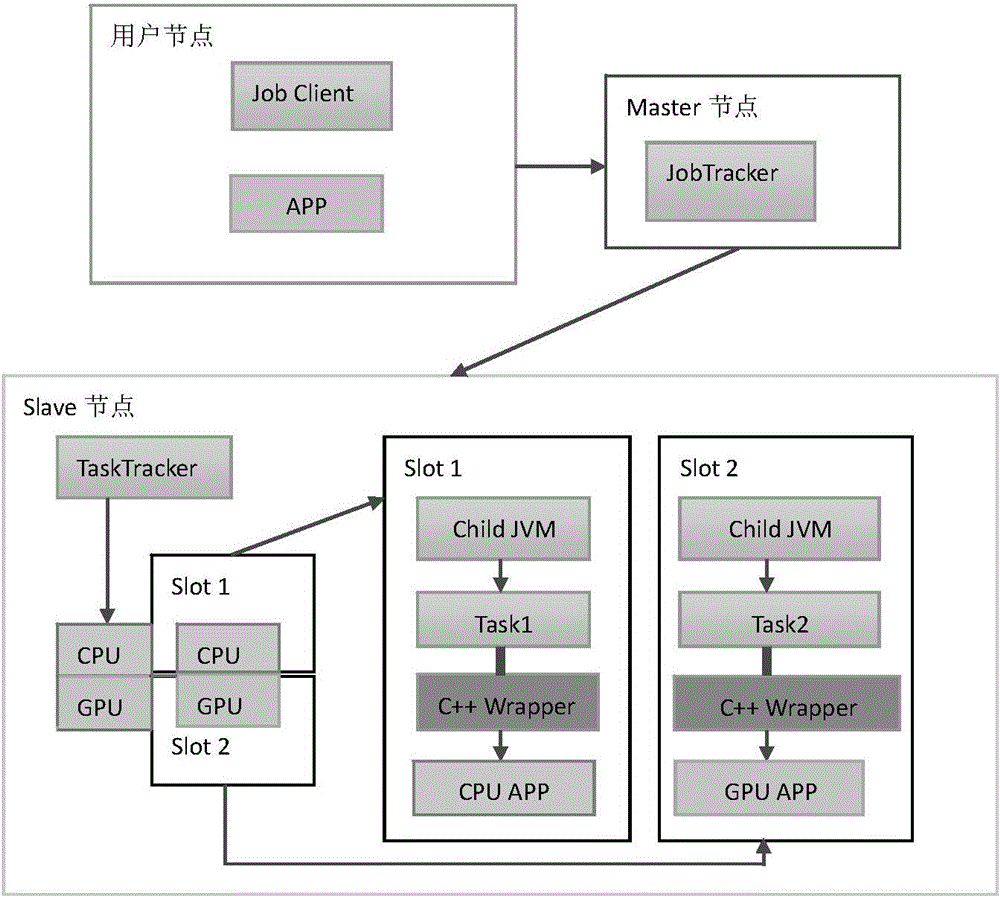

[0020] In step S101, a computer cluster is set up, with each computer in the cluster serving as a node. The nodes comprise a Master node equipped with a CPU processor and Slave nodes equipped with both CPU and GPU processors. The Master node schedules and controls tasks according to a predetermined task scheduling policy, and the Slave nodes perform Map or Reduce computing operations.
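The division of roles in step S101 can be illustrated with a minimal single-process sketch. It is not the patented implementation: the class names `MasterNode` and `SlaveNode`, the word-count job, and the round-robin policy are all assumptions made for illustration, since the text only says that the Master follows "a predetermined task scheduling policy" and that Slaves perform Map or Reduce operations.

```python
from collections import defaultdict
from dataclasses import dataclass
from itertools import cycle


@dataclass
class SlaveNode:
    """Hypothetical worker node; per the text, each Slave has a CPU and a GPU."""
    name: str

    def run_map(self, chunk):
        # Stand-in for a Map operation (a real Slave might offload this to its GPU).
        return [(word, 1) for word in chunk.split()]

    def run_reduce(self, key, values):
        # Stand-in for a Reduce operation.
        return key, sum(values)


class MasterNode:
    """Hypothetical CPU-only scheduler; round-robin is an assumed policy."""

    def __init__(self, slaves):
        self.slaves = slaves

    def run_job(self, chunks):
        assignment = cycle(self.slaves)
        # Map phase: hand each input chunk to the next Slave in turn.
        grouped = defaultdict(list)
        for chunk in chunks:
            for key, value in next(assignment).run_map(chunk):
                grouped[key].append(value)
        # Reduce phase: hand each key group to the next Slave in turn.
        return dict(next(assignment).run_reduce(k, v) for k, v in grouped.items())


def word_count_demo():
    # Two Slaves and two input chunks, scheduled by one Master.
    master = MasterNode([SlaveNode("slave-1"), SlaveNode("slave-2")])
    return master.run_job(["gpu cpu gpu", "cpu gpu"])
```

Calling `word_count_demo()` counts the words across both chunks. In a real cluster the Master would dispatch tasks over the network rather than call methods directly, and the scheduling policy could weigh each Slave's CPU and GPU load instead of simply alternating.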

[0021] In the embodiment of the present invention, the nodes may communicate through a network connection; for example, each node may communicate over an InfiniBand network. Each node has its own independent memory and disk. During disk access, each node can access both its own disk and disk...
