Fast training method for a recurrent neural network (RNN) on large-scale data

Keywords: recurrent neural network, large-scale data. Application field: speech recognition. The method addresses underutilization, a large number of iteration steps, slow convergence, and similar problems.

Embodiment Construction

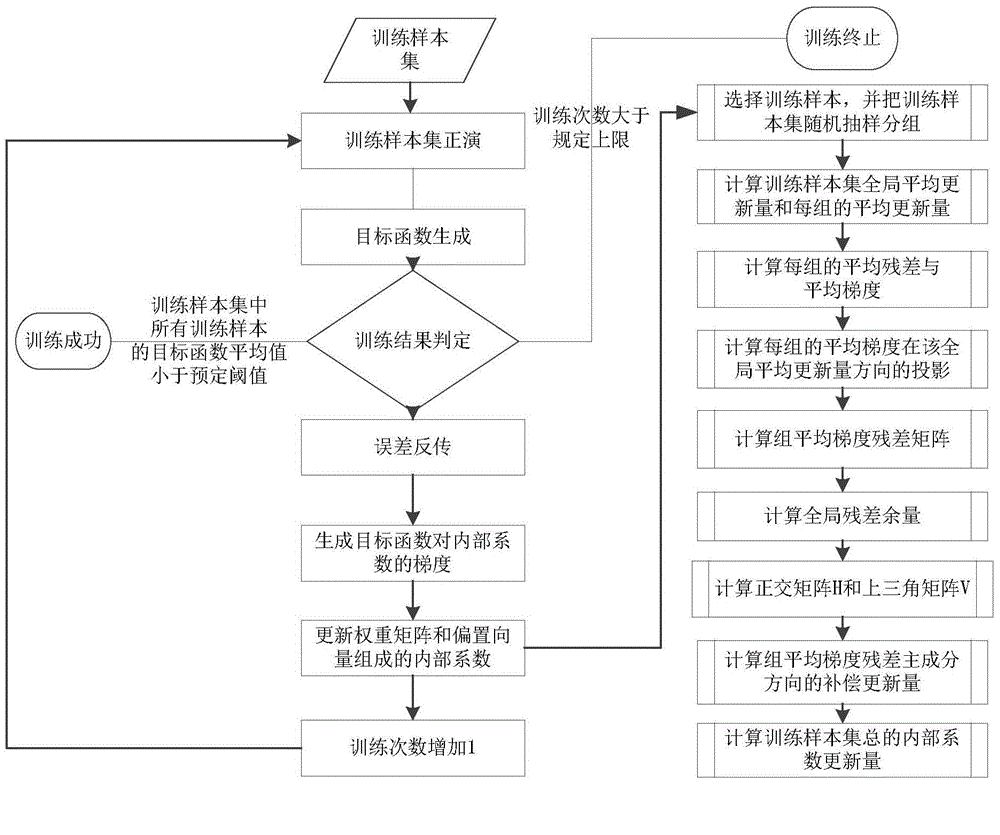

[0084] The fast training method for a recurrent neural network on large-scale data proposed by the present invention is further explained below with reference to the accompanying drawings and embodiments:

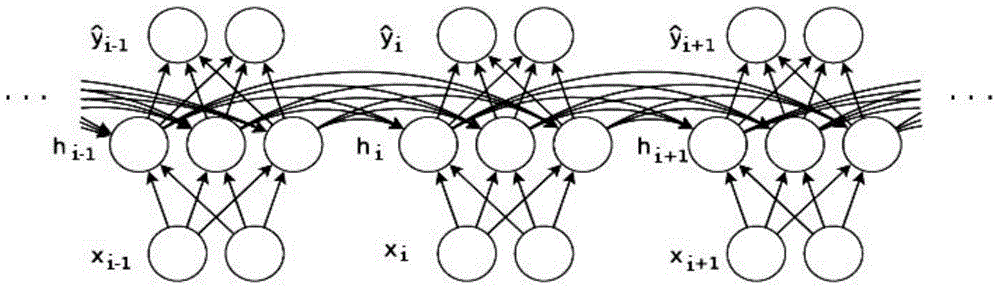

[0085] The present invention proposes a fast training method for a recurrent neural network on large-scale data. The method is characterized in that it trains the network quickly by updating its internal coefficients along two directions simultaneously: the average gradient direction and the principal-component direction of the gradient residuals. After the gradient of the objective function with respect to the internal coefficients has been obtained at each training sample, the training samples are divided into groups. The gradients over the entire training set and over each group are then weighted and averaged according to the objective-function value at each training sample, yielding the global average gradient and the group average gradients. The direction of the residual principal component...
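Because the paragraph above is truncated, the exact weighting rule, grouping rule, and update coefficients are not given. The following is a minimal NumPy sketch of the two-direction idea under loudly stated assumptions: each sample's weight is taken to be proportional to its objective value; groups are a fixed labeling; the "residual principal component direction" is taken to be the top right-singular vector of the group-average gradients minus the global average gradient; and the two step coefficients are picked by a small grid search. The function names (`weighted_gradients`, `residual_principal_direction`, `two_direction_step`) and the least-squares toy problem are hypothetical illustrations, not the patent's method or any library API.

```python
import numpy as np


def weighted_gradients(grads, losses, group_ids, eps=1e-12):
    """Loss-weighted global and per-group average gradients (hypothetical helper).

    grads:     (N, P) per-sample gradients of the objective w.r.t. the coefficients
    losses:    (N,)   per-sample objective values, assumed non-negative
    group_ids: (N,)   group label of each sample (grouping rule is an assumption)
    """
    w = losses + eps                  # assumption: weight proportional to objective value
    w = w / w.sum()
    g_global = w @ grads              # global weighted-average gradient, shape (P,)
    g_groups = np.stack([
        w[group_ids == k] @ grads[group_ids == k] / w[group_ids == k].sum()
        for k in np.unique(group_ids)
    ])                                # group weighted-average gradients, shape (K, P)
    return g_global, g_groups


def residual_principal_direction(g_global, g_groups):
    """Unit vector along the first principal component of the group residuals."""
    residuals = g_groups - g_global   # deviation of each group from the global mean
    _, _, vt = np.linalg.svd(residuals, full_matrices=False)
    u = vt[0]                         # top right-singular vector, unit norm
    if u @ g_global < 0:              # SVD sign is arbitrary; align with the mean gradient
        u = -u
    return u


def two_direction_step(theta, g_global, u, loss_fn, steps=(0.0, 0.01, 0.03, 0.1, 0.3)):
    """Move along -g_global and -u at once; coefficients chosen by grid search (assumption)."""
    best_theta, best_loss = theta, loss_fn(theta)
    for a in steps:
        for b in steps:
            cand = theta - a * g_global - b * u
            cand_loss = loss_fn(cand)
            if cand_loss < best_loss:
                best_theta, best_loss = cand, cand_loss
    return best_theta, best_loss


# Hypothetical toy problem: linear least squares with 8 interleaved sample groups.
rng = np.random.default_rng(0)
X = rng.normal(size=(400, 5))
theta_true = rng.normal(size=5)
y = X @ theta_true + 0.1 * rng.normal(size=400)
group_ids = np.arange(400) % 8


def per_sample(theta):
    r = X @ theta - y
    return 0.5 * r**2, r[:, None] * X   # per-sample losses (N,), gradients (N, P)


def mean_loss(theta):
    return per_sample(theta)[0].mean()


theta = np.zeros(5)
history = [mean_loss(theta)]
for _ in range(50):
    losses, grads = per_sample(theta)
    g_global, g_groups = weighted_gradients(grads, losses, group_ids)
    u = residual_principal_direction(g_global, g_groups)
    theta, loss = two_direction_step(theta, g_global, u, mean_loss)
    history.append(loss)
```

Including the zero step in the grid keeps the objective non-increasing in this sketch; a production training loop could instead use fixed learning rates or a line search along each direction.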
