Cache memory management device and dynamic image system and method using the cache memory management device

A dynamic image and memory management technology aimed at reducing cache misses, which addresses the problem of a high cache miss rate.


Embodiment Construction

[0024] First, the term "present invention" as used below refers to the inventive concept presented by these embodiments; its scope is not limited to the embodiments themselves.

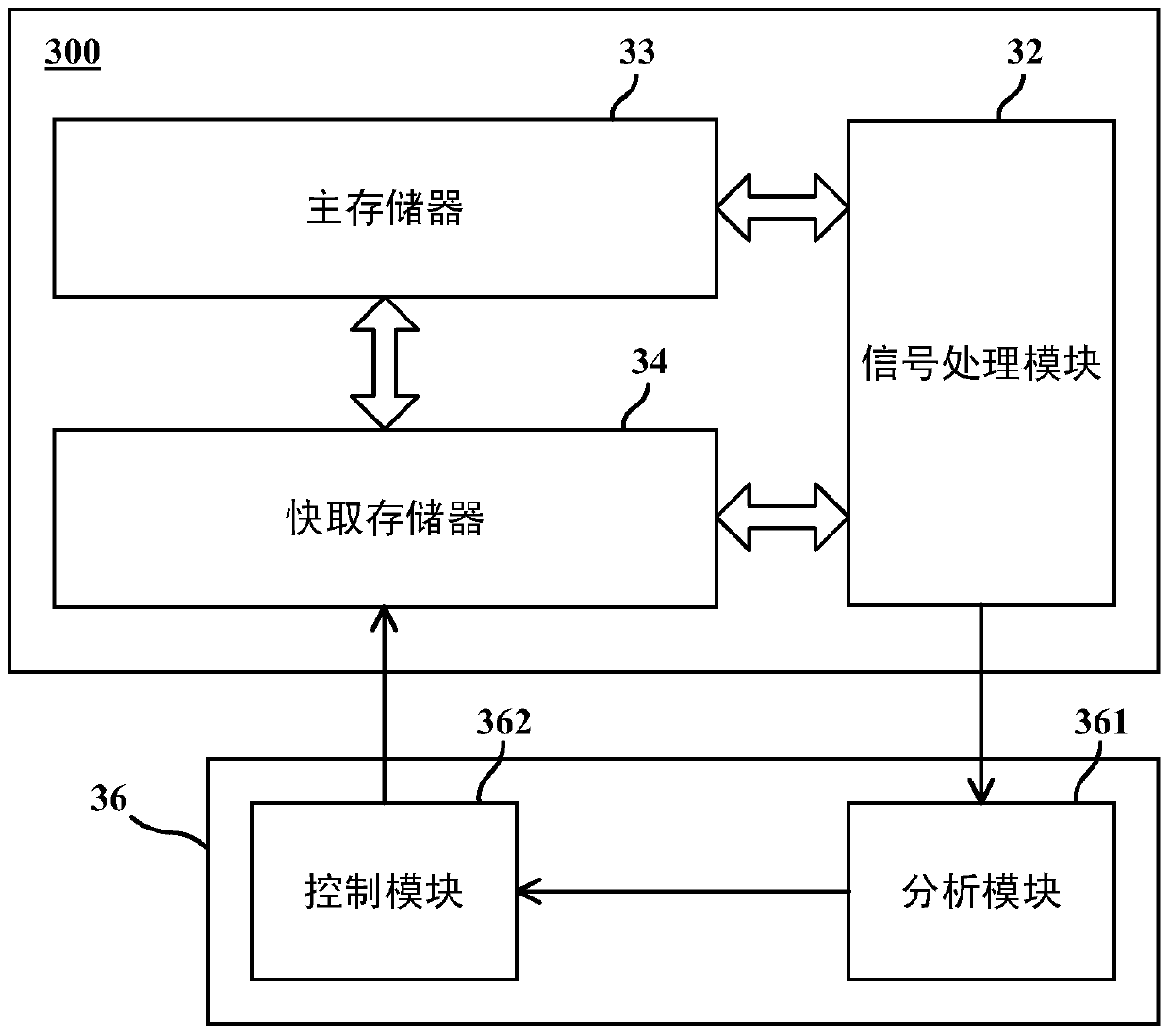

[0025] A specific embodiment of the present invention is a cache memory management device. Its associated cache memory temporarily stores the reference data required for processing data. The cache memory management device includes an analysis module and a control module. The analysis module generates cache miss analysis information related to the cache memory while the data is being processed. The control module determines an index content allocation method for the cache memory according to the cache miss analysis information. A functional block diagram of an application example of the cache memory management device is shown in Figure 3A.
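The paragraph above does not say how the analysis module measures misses or what the candidate index content allocations look like. The following is a minimal sketch under stated assumptions, not the patented method. It assumes that an "index content allocation" is a function choosing which address bits form the set index of a direct-mapped cache, that the analysis module counts misses for each candidate over an address trace, and that the control module picks the candidate with the fewest misses. The names `analyze`, `choose_allocation`, `MissReport`, the two candidate mappings, and the strided trace are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Sequence

# Hypothetical model: an "index content allocation" maps a cache-line address
# to a set index. The two candidates below are illustrative only.
IndexFn = Callable[[int], int]


@dataclass
class MissReport:
    """Stand-in for the cache miss analysis information: misses per candidate."""
    misses: Dict[str, int]


def count_misses(trace: Sequence[int], index_fn: IndexFn,
                 num_sets: int, line_bytes: int) -> int:
    """Replay an address trace through a direct-mapped cache; count misses."""
    resident: List[Optional[int]] = [None] * num_sets  # line held by each set
    misses = 0
    for addr in trace:
        line = addr // line_bytes
        s = index_fn(line) % num_sets
        if resident[s] != line:
            resident[s] = line  # miss: fetch the line, evicting the old one
            misses += 1
    return misses


def analyze(trace: Sequence[int], candidates: Dict[str, IndexFn],
            num_sets: int, line_bytes: int) -> MissReport:
    """Hypothetical analysis module: miss count for each candidate allocation."""
    return MissReport({name: count_misses(trace, fn, num_sets, line_bytes)
                       for name, fn in candidates.items()})


def choose_allocation(report: MissReport) -> str:
    """Hypothetical control module: select the allocation with fewest misses."""
    return min(report.misses, key=lambda name: report.misses[name])


CANDIDATES: Dict[str, IndexFn] = {
    # Conventional indexing: low-order bits of the line address.
    "low_bits": lambda line: line,
    # Fold the next 4 bits into the index (assumes 16 sets, i.e. 4 index bits).
    "xor_fold": lambda line: line ^ (line >> 4),
}

# Hypothetical trace: a vertical strip of reference data with a 1024-byte
# row stride, read twice.
STRIDE, NUM_SETS, LINE_BYTES = 1024, 16, 64
TRACE = [y * STRIDE for _ in range(2) for y in range(8)]

if __name__ == "__main__":
    report = analyze(TRACE, CANDIDATES, NUM_SETS, LINE_BYTES)
    print(report.misses)              # {'low_bits': 16, 'xor_fold': 8}
    print(choose_allocation(report))  # xor_fold
```

With low-order indexing every row of the strip maps to set 0, so each access evicts the previous one and the second pass misses again (16 misses). Folding higher-order bits spreads the eight rows over sets 0 to 7, so the second pass hits (8 misses), and the sketch's control step selects `xor_fold`.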

[0026] In the example presented in Figure 3A, the cache memory management device 36 includes an...
