Multi-focus image fusion method based on NSCT (Non-Subsampled Contourlet Transform) and a depth-information-stimulated PCNN (Pulse Coupled Neural Network)

A multi-focus image fusion technology that uses depth information, applied in image enhancement, image analysis, and image data processing. It addresses the problems of fused-image distortion, the pseudo-Gibbs phenomenon, and lack of translation invariance.


Embodiment Construction

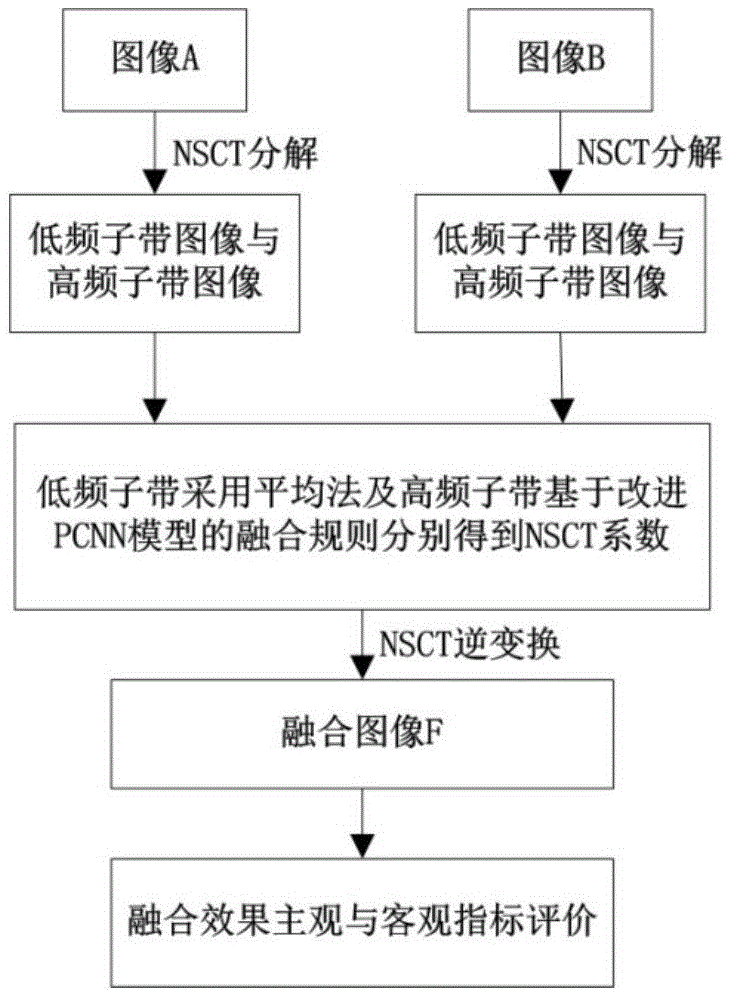

[0040] The present invention is a multi-focus image fusion method based on the non-subsampled Contourlet transform (NSCT) and a PCNN stimulated by depth information. As shown in Figure 1, the same non-subsampled Contourlet transform is applied to each original input multi-focus image to obtain a low-frequency sub-band image and a series of multi-resolution, multi-directional high-frequency sub-band images. Different fusion strategies are then applied to the low-frequency and high-frequency sub-bands to obtain the fused coefficients, and finally the inverse non-subsampled Contourlet transform is applied to the fused coefficients to obtain the final fusion result. The specific steps are as follows:

[0041] (1) After the multi-focus images of the same scene have been registered in preprocessing, the multi-focus images I_A and I_B each undergo a multi-scale, multi-directional non-subsampled Contourlet transform, which decomposes each of the two images into a low-...
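
The overall flow described in [0040] (decompose both images without subsampling, fuse the low-frequency and high-frequency sub-bands with different rules, then invert the transform) can be illustrated with a minimal Python sketch. Everything here is an assumption made for illustration: the decomposition is a simple undecimated box-filter pyramid standing in for the NSCT (it has no directional filter bank), and the fusion rules (averaging for the low band, maximum absolute value for the high bands) are generic placeholders, not the depth-information-stimulated PCNN rules of the invention. The function names `box_blur`, `decompose`, `reconstruct`, and `fuse` are hypothetical.

```python
import numpy as np


def box_blur(img, radius=1):
    """Undecimated low-pass filter: mean over a (2r+1)x(2r+1) window with edge padding."""
    k = 2 * radius + 1
    padded = np.pad(img, radius, mode="edge")
    out = np.zeros_like(img, dtype=float)
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + img.shape[0], dx:dx + img.shape[1]]
    return out / (k * k)


def decompose(img, levels=2):
    """Stand-in for the NSCT (assumption): returns (low, [high_1, ..., high_levels]).

    No subsampling is performed, so every sub-band keeps the input size.
    """
    current = img.astype(float)
    highs = []
    for level in range(levels):
        low = box_blur(current, radius=2 ** level)
        highs.append(current - low)  # detail removed by the low-pass filter
        current = low
    return current, highs


def reconstruct(low, highs):
    """Inverse of decompose: the sub-bands sum back to the original image."""
    return low + sum(highs)


def fuse(img_a, img_b, levels=2):
    """Decompose both registered images, fuse each sub-band, then invert."""
    low_a, highs_a = decompose(img_a, levels)
    low_b, highs_b = decompose(img_b, levels)
    # Placeholder low-frequency rule (assumption): plain averaging.
    fused_low = 0.5 * (low_a + low_b)
    # Placeholder high-frequency rule (assumption): keep the larger magnitude.
    fused_highs = [
        np.where(np.abs(ha) >= np.abs(hb), ha, hb)
        for ha, hb in zip(highs_a, highs_b)
    ]
    return reconstruct(fused_low, fused_highs)
```

Because no sub-band is downsampled, every coefficient map has the same size as the input, which is the property that gives non-subsampled transforms their translation invariance. In a fuller implementation, the two placeholder rules inside `fuse` are where the low-frequency and high-frequency fusion strategies of the method would be substituted.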
