Complex optical text sequence identification system based on convolution and recurrent neural network

A technology based on recursive and convolutional neural networks, applied in the fields of biological neural network models, character and pattern recognition, neural architectures, etc. It addresses problems such as differences in character size and spacing, cumbersome and time-consuming processing, and loss of usable information in images.

Embodiment Construction

[0041] The present invention is described in further detail below with reference to test examples and specific embodiments. However, the scope of the subject matter of the present invention should not be understood as limited to the following embodiments; all technologies realized based on the content of the present invention fall within its scope.

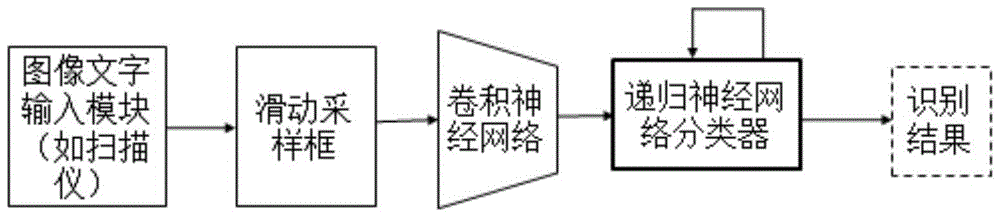

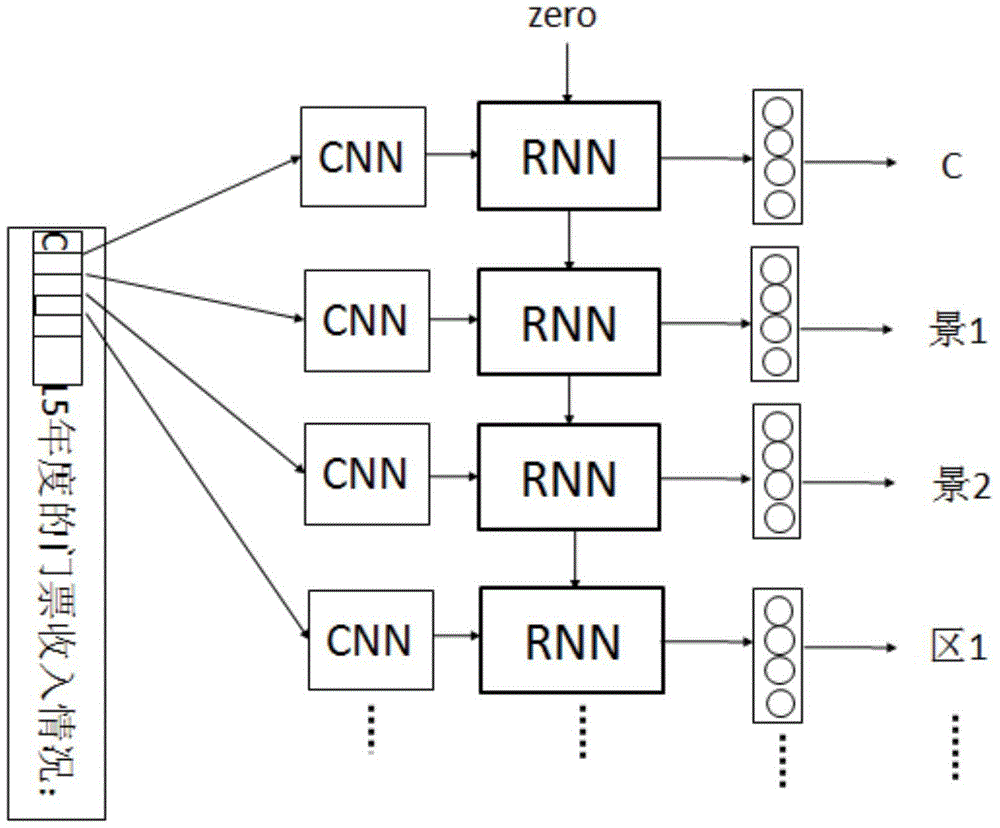

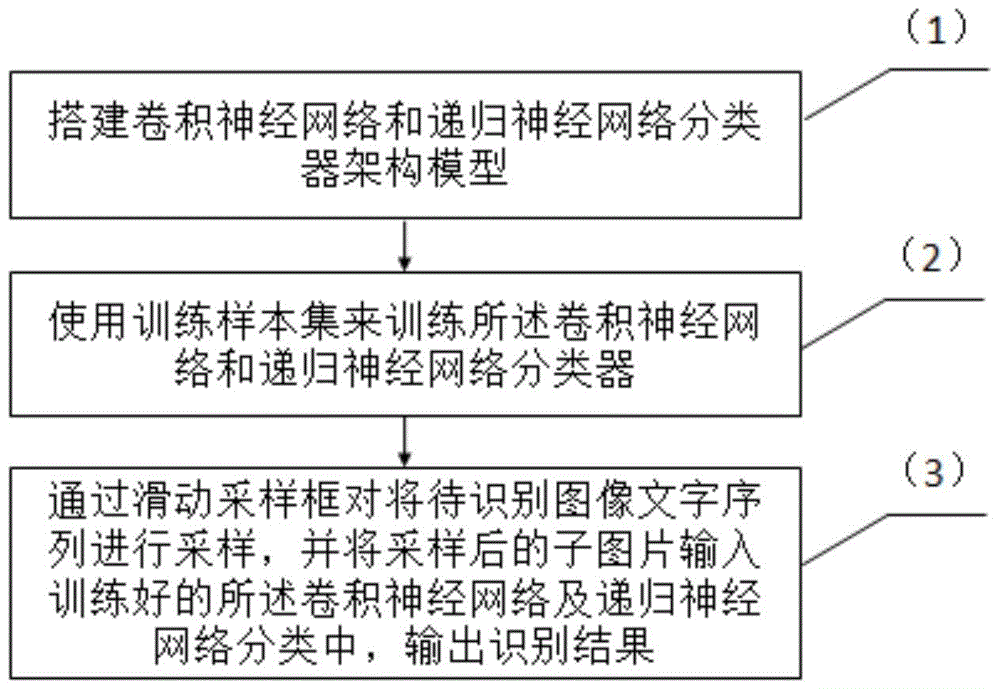

[0042] The present invention provides the technical solution shown in Figure 1: a complex optical character sequence recognition system based on convolutional and recursive neural networks, comprising an image text input module, a sliding sampling module, a convolutional neural network, and a recurrent neural network classifier.
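The module chain in [0042] (sampled windows, then a convolutional neural network, then a recurrent classifier) can be illustrated with a minimal, untrained sketch. Everything concrete here is an assumption rather than something the patent discloses: the single 2x2 convolution layer with ReLU and global average pooling, the Elman-style recurrence, the random weights, and the hypothetical names `conv_features` and `ElmanRNNClassifier`. It only shows how per-window feature vectors flow into a recurrent classifier that emits one label per window.

```python
import math
import random
from typing import List

Matrix = List[List[float]]


def conv_features(window: Matrix, kernels: List[Matrix]) -> List[float]:
    """Hypothetical toy CNN stage: valid 2-D cross-correlation with each
    kernel, ReLU, then global average pooling, giving one value per kernel."""
    rows, cols = len(window), len(window[0])
    feats = []
    for k in kernels:
        kh, kw = len(k), len(k[0])
        total, count = 0.0, 0
        for i in range(rows - kh + 1):
            for j in range(cols - kw + 1):
                s = sum(k[a][b] * window[i + a][j + b]
                        for a in range(kh) for b in range(kw))
                total += max(0.0, s)  # ReLU activation
                count += 1
        # Global average pooling over all valid kernel positions.
        feats.append(total / count if count else 0.0)
    return feats


class ElmanRNNClassifier:
    """Hypothetical toy recurrent classifier (untrained, random weights):
    h_t = tanh(Wx x_t + Wh h_{t-1} + b), label_t = argmax(Wy h_t)."""

    def __init__(self, n_in: int, n_hidden: int, n_classes: int, seed: int = 0):
        rng = random.Random(seed)  # fixed seed so the sketch is reproducible

        def rand(r: int, c: int) -> Matrix:
            return [[rng.uniform(-0.5, 0.5) for _ in range(c)] for _ in range(r)]

        self.wx = rand(n_hidden, n_in)
        self.wh = rand(n_hidden, n_hidden)
        self.b = [0.0] * n_hidden
        self.wy = rand(n_classes, n_hidden)

    def predict(self, seq: List[List[float]]) -> List[int]:
        """Return one class index per feature vector in the sequence."""
        h = [0.0] * len(self.b)
        labels = []
        for x in seq:
            # The comprehension reads the previous h before h is rebound.
            h = [math.tanh(sum(w * v for w, v in zip(self.wx[i], x))
                           + sum(w * v for w, v in zip(self.wh[i], h))
                           + self.b[i])
                 for i in range(len(h))]
            scores = [sum(w * v for w, v in zip(row, h)) for row in self.wy]
            labels.append(max(range(len(scores)), key=scores.__getitem__))
        return labels


if __name__ == "__main__":
    # Two hand-made 3x3 windows standing in for sliding sampling output.
    windows = [[[0, 1, 0], [0, 1, 0], [0, 1, 0]],   # vertical stroke
               [[1, 1, 1], [0, 0, 0], [1, 1, 1]]]   # two horizontal strokes
    kernels = [[[1, -1], [1, -1]],    # vertical-edge kernel
               [[1, 1], [-1, -1]]]    # horizontal-edge kernel
    feats = [conv_features(w, kernels) for w in windows]
    print(feats)  # [[1.0, 0.0], [0.0, 1.0]]
    clf = ElmanRNNClassifier(n_in=len(kernels), n_hidden=4, n_classes=3)
    print(clf.predict(feats))  # labels are arbitrary: weights are untrained
```

The recurrent stage carries a hidden state from one window to the next, so each window's label can depend on its neighbours rather than on the window alone. A working system would learn the kernels and weights from labelled text lines; the sizes used here are placeholders.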

[0043] The sliding sampling module comprises a sliding sampling frame, which performs sliding sampling over the image text sequence to be recognized that is input by the image text input module (scanner, digital camera or image tex...
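A minimal sketch of the sliding sampling step, assuming the input text line is a grayscale image stored as a list of pixel rows and that the frame spans the full line height. The function name `sliding_sample` and its `width` and `stride` parameters are hypothetical; the available text does not state the frame's dimensions or step size.

```python
from typing import List

Image = List[List[float]]  # grayscale image as rows of pixel values


def sliding_sample(image: Image, width: int, stride: int) -> List[Image]:
    """Hypothetical sliding frame: crop a full-height window of `width`
    columns, move it right by `stride` columns, and repeat while it fits."""
    if width <= 0 or stride <= 0:
        raise ValueError("width and stride must be positive")
    n_cols = len(image[0]) if image else 0
    windows = []
    # Start positions 0, stride, 2*stride, ... while the frame still fits.
    for start in range(0, n_cols - width + 1, stride):
        windows.append([row[start:start + width] for row in image])
    return windows


if __name__ == "__main__":
    # A 2 x 6 toy "text line"; the values make the crops easy to read.
    line = [[0, 1, 2, 3, 4, 5],
            [10, 11, 12, 13, 14, 15]]
    for w in sliding_sample(line, width=3, stride=2):
        print(w)
```

With width 3 and stride 2 on a 6-column line, the frame starts at columns 0 and 2, and column 5 is never covered, so a practical system might pad the right edge. Overlapping frames (stride smaller than width) give neighbouring windows shared context, which may help when character widths and gaps vary.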
