Data-driven iterative image online annotation method

A data-driven, iterative technology applied in fields such as metadata-based still image retrieval, electronic digital data processing, and still image data retrieval. It addresses problems such as the dependence of annotation data on prior knowledge, the failure of existing approaches to fully meet the application requirements of image annotation, and the lack of image optimization.

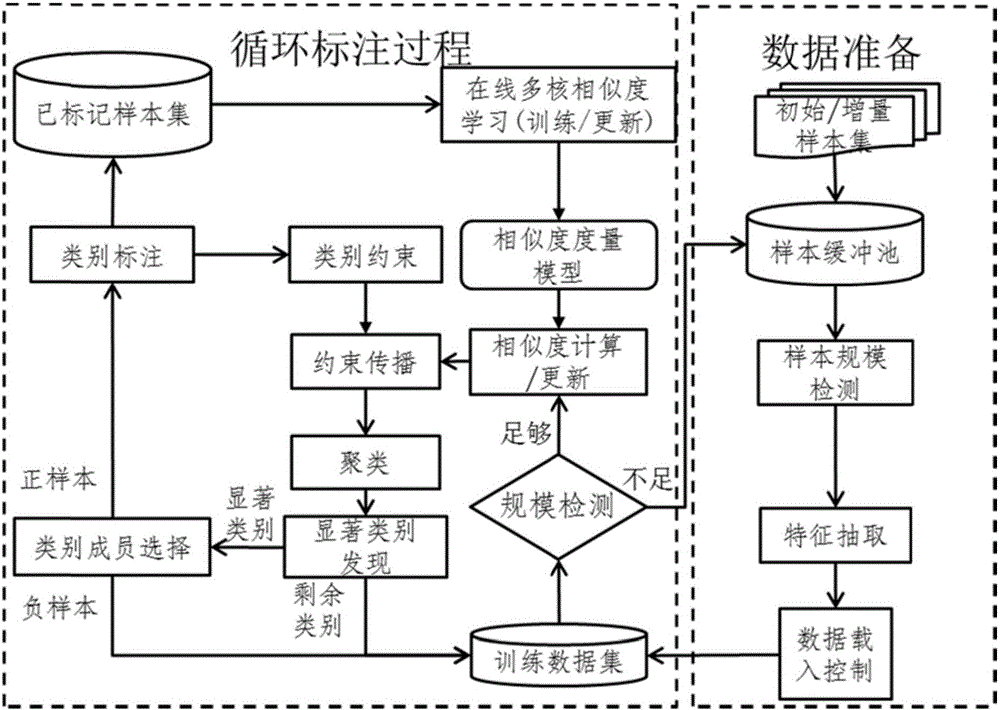

Embodiment

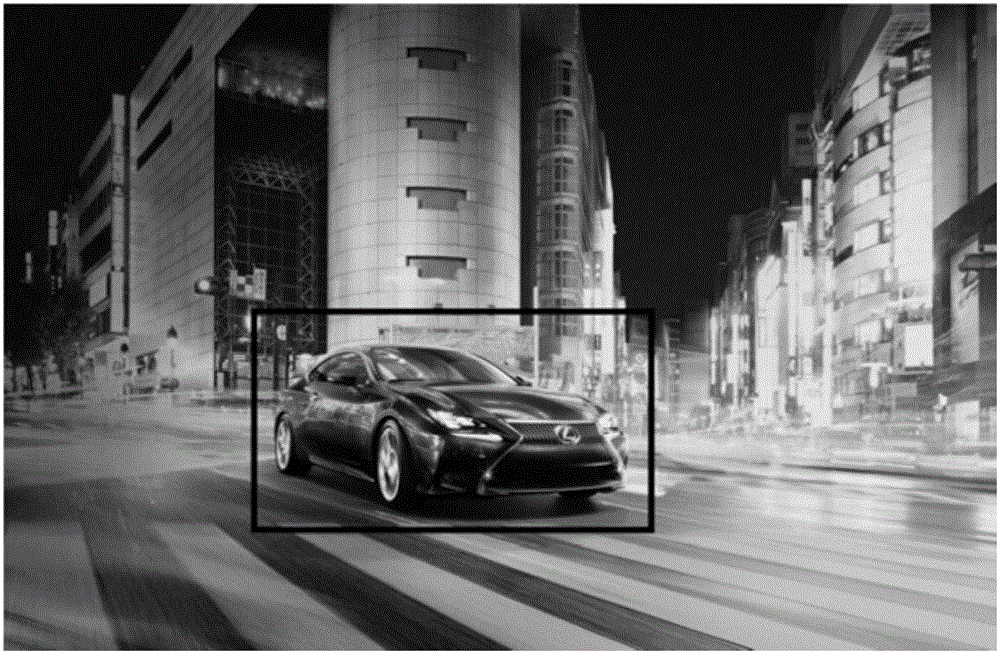

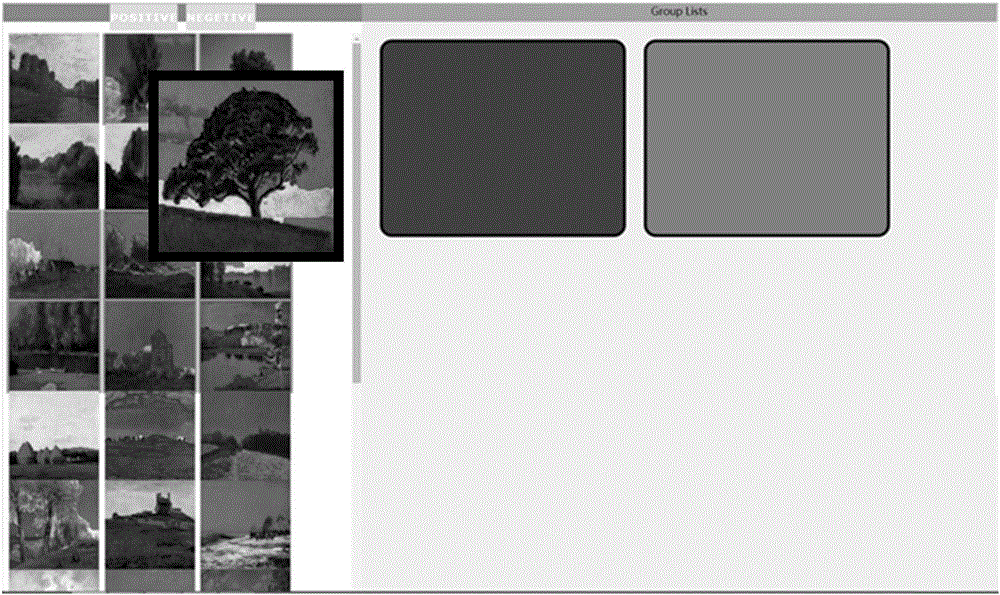

[0082] In this example, Figure 2 is a sample image in which the black box marks the image target area to be annotated. Figure 3a shows an example of selecting prominent category members in the user interface. The left panel displays the prominent categories pushed to the user in each cycle. An image selected by the user is given a black border to confirm the selection. When the top of an image is selected, the image is magnified so that the user can inspect its details carefully. The right panel is the category label page. When the user clicks the gray box, a new category label is generated. Figure 3b shows an example of category labeling after prominent category members have been selected in the user interface: after selecting the members of the prominent category, the user selects the label category on the right and then submits the labeling result as positive or negative to complete the labeling ...
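The interaction described in this paragraph can be sketched as a small data model. This is only an illustrative sketch, not the patented implementation: the class `AnnotationSession`, its methods `new_label` and `submit`, and the image identifiers are all hypothetical names, and the positive/negative flag is simply stored with each label because this excerpt does not say how the method uses it.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class AnnotationSession:
    """Hypothetical per-user annotation state (all names are assumptions)."""

    labels: List[str] = field(default_factory=list)
    # Maps image id -> (category label, submitted as positive?)
    annotations: Dict[str, Tuple[str, bool]] = field(default_factory=dict)

    def new_label(self, name: str) -> str:
        """Mimic clicking the gray box: register a new category label."""
        if name not in self.labels:
            self.labels.append(name)
        return name

    def submit(self, pushed: List[str], selected: List[str],
               label: str, positive: bool) -> List[str]:
        """Record one cycle's selection; return pushed images left unlabeled."""
        if label not in self.labels:
            raise ValueError(f"unknown label: {label!r}")
        chosen = set(selected)
        if not chosen.issubset(pushed):
            raise ValueError("selected images must come from the pushed category")
        for image_id in selected:
            self.annotations[image_id] = (label, positive)
        # Preserve the display order of the images the user did not select.
        return [image_id for image_id in pushed if image_id not in chosen]


# One cycle: three images are pushed, the user selects two and labels them.
session = AnnotationSession()
label = session.new_label("pedestrian")
remaining = session.submit(
    pushed=["img_001", "img_002", "img_003"],
    selected=["img_001", "img_003"],
    label=label,
    positive=True,
)
```

Returning the unselected images is one possible design choice, assuming that images left unlabeled in a cycle could be reconsidered in a later cycle; the excerpt does not confirm this.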
