Connected domain-based natural scene text detection method

A technique in the field of natural scene images and connected domains, applicable to character recognition, character and pattern recognition, instruments, and related areas. It addresses the decline in text detection accuracy, improving accuracy while preserving detection speed.



Embodiment Construction

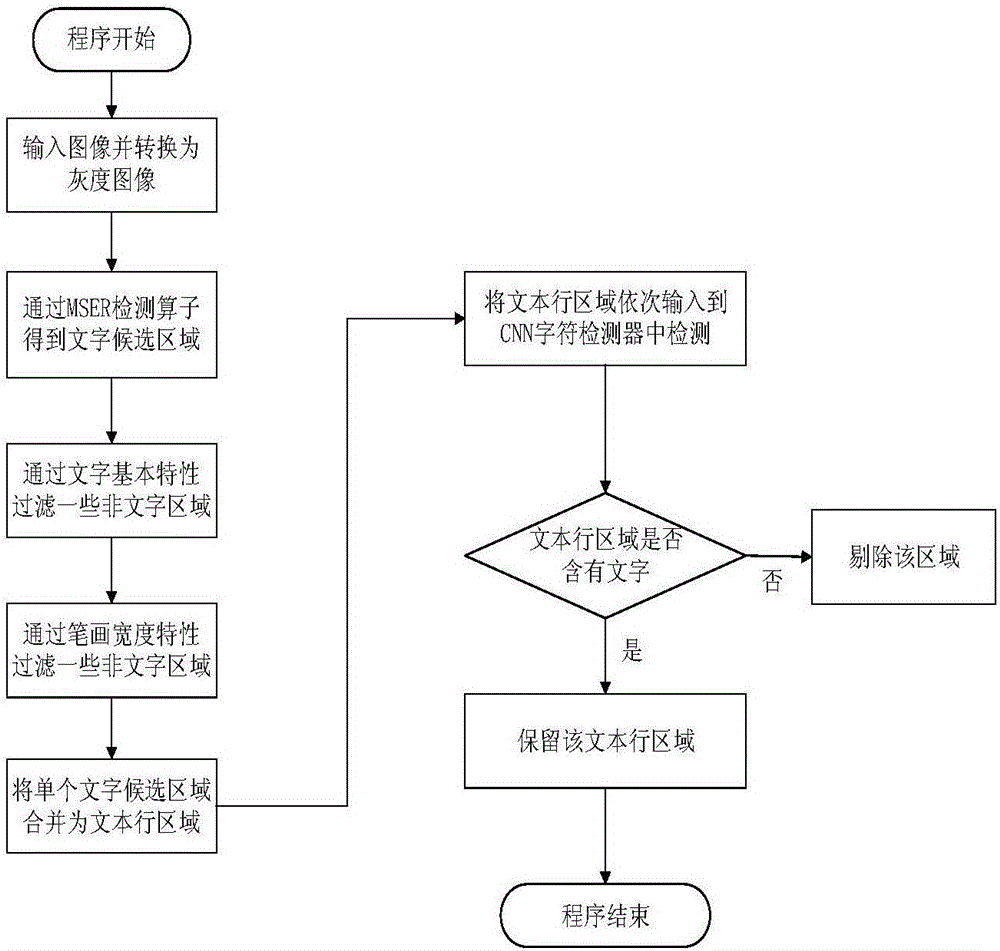

[0030] Referring to Figure 1, the connected-domain-based method of the present invention for detecting text in natural scene images comprises the following steps:

[0031] Step 1: Acquire the grayscale image I_G.

[0032] Input the original image I and apply a grayscale transformation to it, obtaining the grayscale image I_G.
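
Step 1 can be illustrated with a minimal sketch. The source does not state which grayscale transformation is used, so the weighted sum with ITU-R BT.601 luma coefficients below is an assumption, as are the function name `to_grayscale` and the RGB channel order.

```python
import numpy as np


def to_grayscale(image):
    """Convert an H x W x 3 RGB image I into an H x W uint8 grayscale image I_G.

    Assumption: BT.601 luma weights; the patent text does not name the transform.
    """
    rgb = np.asarray(image, dtype=np.float64)
    if rgb.ndim != 3 or rgb.shape[2] != 3:
        raise ValueError("expected an H x W x 3 RGB image")
    weights = np.array([0.299, 0.587, 0.114])  # assumed R, G, B weights
    gray = rgb @ weights  # weighted sum over the channel axis
    # Round to the nearest integer and clamp into the 8-bit range.
    return np.clip(np.rint(gray), 0, 255).astype(np.uint8)
```

An image library such as OpenCV or Pillow would normally supply this conversion; the explicit version only makes the per-pixel arithmetic visible.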

[0033] Step 2: Obtain the character candidate region image I_m.

[0034] Apply the connected-region detection operator MSER to the grayscale image I_G to obtain connected regions, which contain both text and non-text. Take these connected regions as character candidate regions and display them in color on the image I_G, obtaining the character candidate region image I_m.
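
The display part of Step 2 might look like the sketch below. It covers only the color overlay, not MSER detection itself: each region is assumed to arrive as an array of (x, y) pixel coordinates, which is the layout an MSER detector such as OpenCV's typically returns. The function name `overlay_regions`, the `seed` parameter, and the random color choice are hypothetical.

```python
import numpy as np


def overlay_regions(gray, regions, seed=0):
    """Paint each candidate region in its own color on a copy of I_G, giving I_m.

    gray    : H x W uint8 grayscale image (I_G).
    regions : iterable of N x 2 integer arrays of (x, y) pixel coordinates
              (assumed format of the MSER output).
    """
    gray = np.asarray(gray, dtype=np.uint8)
    if gray.ndim != 2:
        raise ValueError("expected an H x W grayscale image")
    # Replicate the gray channel so that colored pixels can be drawn on it.
    canvas = np.stack([gray, gray, gray], axis=-1)
    rng = np.random.default_rng(seed)
    for points in regions:
        pts = np.asarray(points, dtype=np.intp).reshape(-1, 2)
        color = rng.integers(64, 256, size=3)  # one random color per region
        xs, ys = pts[:, 0], pts[:, 1]
        canvas[ys, xs] = color  # image arrays are indexed as [row, column]
    return canvas
```

With OpenCV installed, the regions could come from `cv2.MSER_create().detectRegions(gray)`, whose first return value is a list of such point arrays. The input image is left unmodified because the overlay is drawn on a new array.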

[0035] Step 3: Filter out some of the candidate regions in the character candidate region image I_m that do not contain text, obtaining the character candidate region image I after preliminary filte...

