Method for importing data into multiple Hadoop components simultaneously

This technology applies to the rapid transfer and processing of large volumes of data. It addresses a capability that is not otherwise provided, and is described as having a simple structure, prominent substantive features, and broad application prospects.


Embodiment Construction

[0019] The present invention is described in detail below with reference to the accompanying drawings and specific embodiments. The following embodiments illustrate the present invention; the invention is not limited to them.

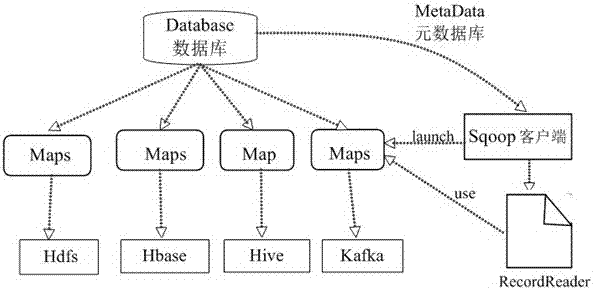

[0020] As shown in Figure 1, the method for simultaneously importing data into multiple Hadoop components provided by this embodiment includes the following steps:

[0021] Step 1: Extend Sqoop's import tool to add a service for importing data into Kafka;

[0022] Step 2: Write a parameter verification program based on the configuration parameters that each component requires for a database import;

[0023] Step 3: Extend Sqoop's import tool to add a service that writes data to HDFS, Hive, HBase, and Kafka simultaneously.
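Steps 2 and 3 can be illustrated with a minimal sketch. Sqoop itself is written in Java; the Python below is only a language-neutral illustration, not the patented implementation. The parameter names in `REQUIRED_PARAMS`, the `validate_params` and `fan_out` functions, and the idea of modelling each target as a plain callable are all assumptions made for this example, since the source does not list the actual options or interfaces.

```python
from typing import Callable, Dict, Iterable, List

Row = Dict[str, object]

# Hypothetical required parameters for each target component.
# The real option names are not given in the source.
REQUIRED_PARAMS: Dict[str, List[str]] = {
    "hdfs": ["target_dir"],
    "hive": ["hive_table"],
    "hbase": ["hbase_table", "column_family", "row_key"],
    "kafka": ["bootstrap_servers", "topic"],
}


def validate_params(targets: List[str], params: Dict[str, Dict[str, str]]) -> List[str]:
    """Step 2 sketch: return a list of problems; an empty list means valid."""
    errors: List[str] = []
    for target in targets:
        if target not in REQUIRED_PARAMS:
            errors.append(f"unknown target: {target}")
            continue
        supplied = params.get(target, {})
        for name in REQUIRED_PARAMS[target]:
            # Treat absent and empty values the same way.
            if not supplied.get(name):
                errors.append(f"{target}: missing parameter '{name}'")
    return errors


def fan_out(rows: Iterable[Row], sinks: Dict[str, Callable[[Row], None]]) -> int:
    """Step 3 sketch: hand every row to every sink in a single pass.

    Returns the number of rows read from the source.
    """
    count = 0
    for row in rows:
        for write in sinks.values():
            write(row)
        count += 1
    return count
```

In this sketch the validation runs before any data is read, so a missing parameter for one target is reported before rows are written to the others. The source rows are read once and passed to each sink in turn, which is the sense in which the import is "simultaneous" here.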

[0024] Step 1 is implemented by modifying Sqoop's BaseSqoopTool and ImportTool classes, designing the MapReduce task that imports data into Kafka, and def...
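The map side of a Kafka import task might look roughly like the following sketch. It assumes each map task receives a batch of rows from the database, encodes every row as a message, and hands it to a producer. The JSON encoding, the `Producer` interface, the `InMemoryProducer` stand-in, and the `row_to_message` and `map_split` names are hypothetical and chosen only for illustration; the source text is truncated before it describes the actual design, and the real task would be Java code inside Sqoop.

```python
import json
from typing import Dict, Iterable, List, Optional, Protocol, Tuple

Row = Dict[str, object]


class Producer(Protocol):
    """Hypothetical minimal producer interface used by the map task."""

    def send(self, topic: str, key: Optional[bytes], value: bytes) -> None: ...


class InMemoryProducer:
    """Stand-in for a real Kafka client; it only records what was sent."""

    def __init__(self) -> None:
        self.sent: List[Tuple[str, Optional[bytes], bytes]] = []

    def send(self, topic: str, key: Optional[bytes], value: bytes) -> None:
        self.sent.append((topic, key, value))


def row_to_message(row: Row, key_column: Optional[str] = None) -> Tuple[Optional[bytes], bytes]:
    """Encode one database row as a (key, value) pair of bytes.

    JSON is an assumption; the source does not state the message format.
    """
    key = None
    if key_column is not None:
        key = str(row[key_column]).encode("utf-8")
    # sort_keys keeps the output deterministic; default=str covers dates etc.
    value = json.dumps(row, sort_keys=True, default=str).encode("utf-8")
    return key, value


def map_split(rows: Iterable[Row], producer: Producer, topic: str,
              key_column: Optional[str] = None) -> int:
    """Process one batch of rows and return how many messages were sent."""
    sent = 0
    for row in rows:
        key, value = row_to_message(row, key_column)
        producer.send(topic, key, value)
        sent += 1
    return sent
```

Keeping the producer behind a small interface lets the encoding logic be exercised without a running broker; a real deployment would substitute an actual Kafka client configured from the validated import parameters.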
