System and method for controlling 3D model based on gesture

A gesture control and 3D technology, applied in data-processing input/output, instruments, electrical digital data processing, and related fields. It addresses the problem that existing approaches cannot support translation, rotation, and scaling at the same time, and achieves fast query speed, simple control, and low cost.



Examples

Embodiment 1

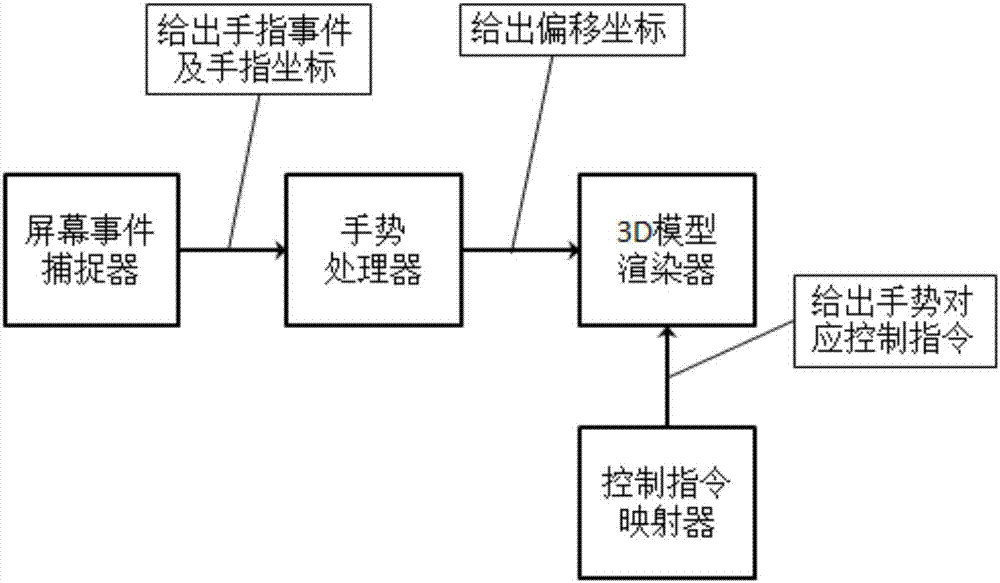

[0046] The present invention constructs a new gesture control system based on a gesture in which one finger is stationary and the other finger slides; the relative coordinates of the sliding finger are used to control the translation or rotation of the 3D model. As shown in Figure 2, a system for controlling a 3D model based on gestures includes a screen event catcher, which captures finger events on the touch screen in real time and obtains the number of fingers on the screen and the screen coordinates of each finger. The captured finger information is passed to the gesture processor. A finger event is any operation a finger performs on the touch screen, including but not limited to a finger clicking on the screen, a finger sliding on the screen, and a finger leaving the screen.
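The role of the screen event catcher described in [0046] can be illustrated with a minimal Python sketch. The names `FingerEvent`, `ScreenEventCatcher`, `on_event`, and the use of a per-finger id are assumptions made for illustration and do not come from the patent text; a real touch framework would supply its own event types.

```python
from dataclasses import dataclass, field
from enum import Enum


class FingerEvent(Enum):
    """Finger events named in [0046]; the enum itself is hypothetical."""
    DOWN = "down"   # finger clicks (touches) the screen
    MOVE = "move"   # finger slides on the screen
    UP = "up"       # finger leaves the screen


@dataclass
class ScreenEventCatcher:
    # Latest (x, y) screen coordinates per finger, keyed by an assumed finger id.
    fingers: dict = field(default_factory=dict)

    def on_event(self, finger_id, event, x, y):
        """Update the tracked fingers and return the snapshot that would be
        passed on to the gesture processor."""
        if event is FingerEvent.UP:
            self.fingers.pop(finger_id, None)
        else:
            self.fingers[finger_id] = (x, y)
        return {
            "count": len(self.fingers),    # number of fingers on the screen
            "event": event,                # the finger event just captured
            "coords": dict(self.fingers),  # copy of per-finger coordinates
        }
```

Each call returns the finger count, the event, and the coordinates together, which matches the three pieces of information the text says are handed to the gesture processor.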

[0047] The gesture processor controls the specific change vector of the 3D model according to the number of fingers...

Embodiment 2

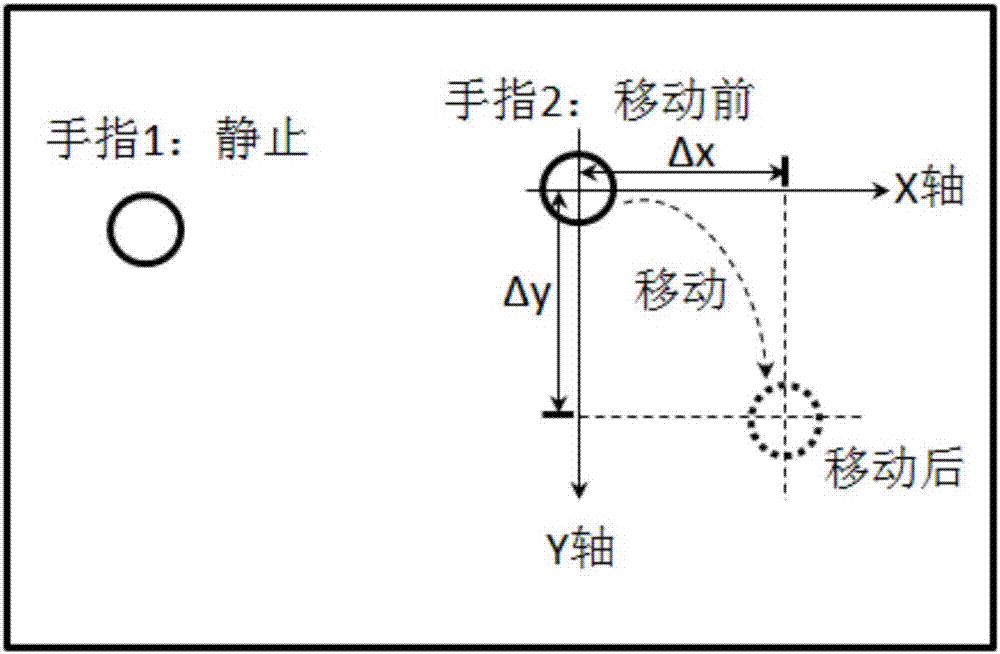

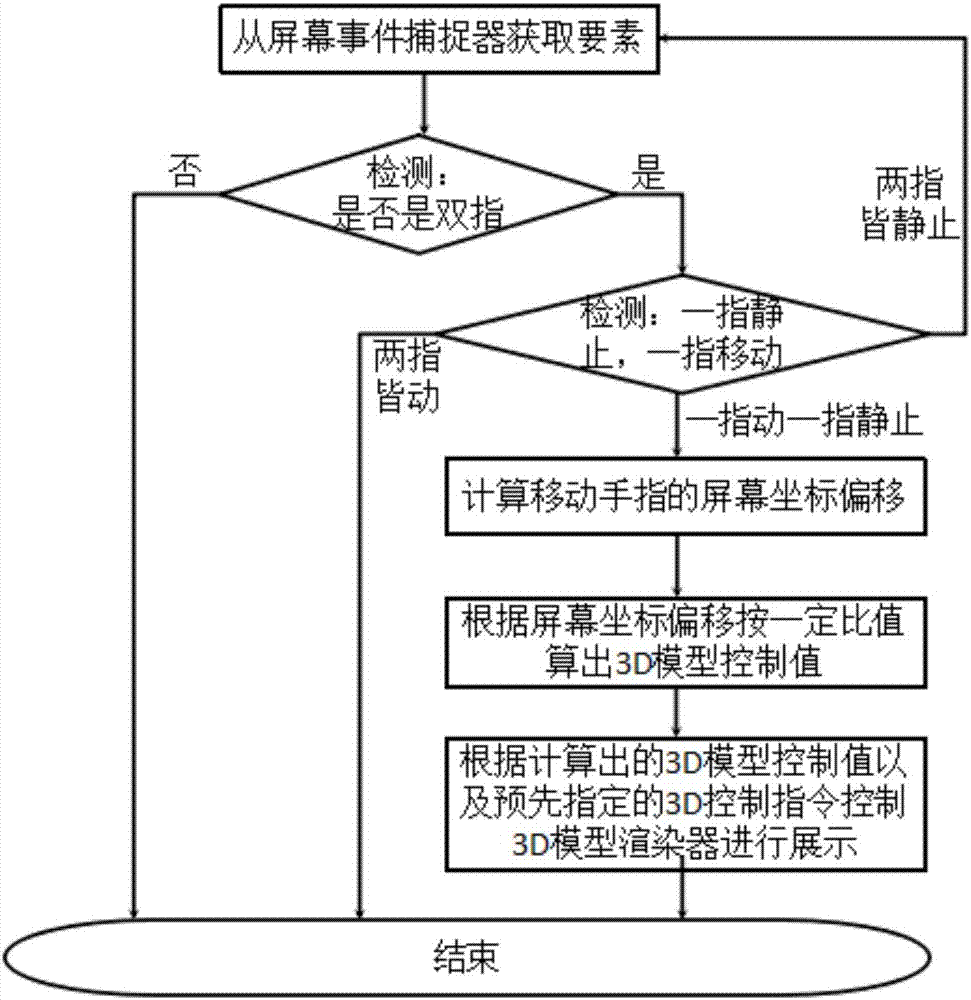

[0053] As shown in Figures 1 and 3, a method for controlling a 3D model based on gestures comprises the following steps:

[0054] Step 1. The screen event catcher acquires event elements from the touch screen; the event elements include the number of fingers, corresponding finger events, and screen coordinates of corresponding fingers.

[0055] Step 2. The gesture processor detects the number of fingers and judges whether the number of fingers touching the screen is equal to 2. If not, the process ends; if so, processing continues.

[0056] Step 3. The gesture processor detects the gesture, judging the state of the two fingers from the finger events and the screen coordinates of the corresponding fingers. If both fingers are moving, the process ends; if both fingers are stationary, return to Step 1; if one finger is stationary and the other is moving, processing continues. The judgment of finger movement is determined by the shak...
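Steps 2 and 3 amount to a small decision procedure, sketched below in Python. The source text is truncated before it states how finger movement is judged, so the distance threshold `MOVE_THRESHOLD` is purely an assumption, as are the function names and the string return values; the sketch also assumes that two consecutive coordinate snapshots for the same fingers are available.

```python
import math

# Assumed jitter tolerance in pixels. The patent's actual movement
# criterion is cut off in the source, so this value is hypothetical.
MOVE_THRESHOLD = 5.0


def is_moving(prev, curr, threshold=MOVE_THRESHOLD):
    """Treat a finger as moving if it travelled farther than the threshold."""
    return math.hypot(curr[0] - prev[0], curr[1] - prev[1]) > threshold


def classify_two_finger_gesture(prev, curr):
    """prev and curr map finger id -> (x, y) for two consecutive samples.

    Returns "end" (stop processing), "retry" (go back to Step 1), or
    ("continue", moving_finger_id, (dx, dy)) for the one-still-one-moving case.
    """
    # Step 2: exactly two fingers must be on the screen. A mismatch between
    # the two samples is also treated as "end" (an assumption of this sketch).
    if len(curr) != 2 or set(prev) != set(curr):
        return "end"

    # Step 3: decide which fingers are moving.
    moving = [fid for fid in curr if is_moving(prev[fid], curr[fid])]
    if len(moving) == 2:
        return "end"      # both fingers moving
    if not moving:
        return "retry"    # both fingers stationary

    fid = moving[0]
    dx = curr[fid][0] - prev[fid][0]
    dy = curr[fid][1] - prev[fid][1]
    return ("continue", fid, (dx, dy))  # relative coordinates of the sliding finger
```

For example, `classify_two_finger_gesture({0: (0, 0), 1: (10, 10)}, {0: (0, 0), 1: (30, 25)})` yields `("continue", 1, (20, 15))`: finger 0 is stationary and finger 1 slid by 20 pixels horizontally and 15 vertically.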

Embodiment 3

[0062] The 3D model control system also includes a gesture of one finger stationary and one finger sliding to control the translation of the 3D model, a gesture of a single finger sliding to control the rotation of the 3D model, and a gesture of two fingers moving simultaneously to control the scaling of the 3D model. The specific implementation is as follows:

[0063] Control command mapper: records the correspondence between gestures and 3D model control commands. The gesture "one finger is still and the other finger slides" corresponds to 3D model translation; the gesture "there is only one finger and this finger slides" corresponds to 3D model rotation; and the gesture "two fingers slide at the same time" corresponds to 3D model scaling.
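The control command mapper in [0063] is essentially a lookup table from gesture to command. A minimal Python sketch follows; the enum names, `COMMAND_MAP`, and `map_gesture` are hypothetical and chosen only to mirror the three correspondences listed in the text.

```python
from enum import Enum


class Gesture(Enum):
    ONE_STILL_ONE_SLIDING = "one finger still, the other sliding"
    SINGLE_FINGER_SLIDING = "only one finger, and it slides"
    TWO_FINGERS_SLIDING = "two fingers sliding at the same time"


class Command(Enum):
    TRANSLATE = "translate"
    ROTATE = "rotate"
    SCALE = "scale"


# Correspondence recorded by the control command mapper, per [0063].
COMMAND_MAP = {
    Gesture.ONE_STILL_ONE_SLIDING: Command.TRANSLATE,
    Gesture.SINGLE_FINGER_SLIDING: Command.ROTATE,
    Gesture.TWO_FINGERS_SLIDING: Command.SCALE,
}


def map_gesture(gesture):
    """Return the 3D model control command for a gesture, or None if unmapped."""
    return COMMAND_MAP.get(gesture)
```

Keeping the correspondence in a table rather than in branching logic means a gesture can be reassigned to a different command without touching the gesture detection code.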

[0064] Step 1. The screen event catcher acquires event elements from the touch screen, and...
