A Feature Preserving Video Coding Method

A video coding technology applied in the field of image and video coding. It addresses the inaccurate extraction of feature regions and the loss of feature information during compression, and achieves a small key point range, accurate feature regions, and guaranteed subjective quality.


Examples

Embodiment 1

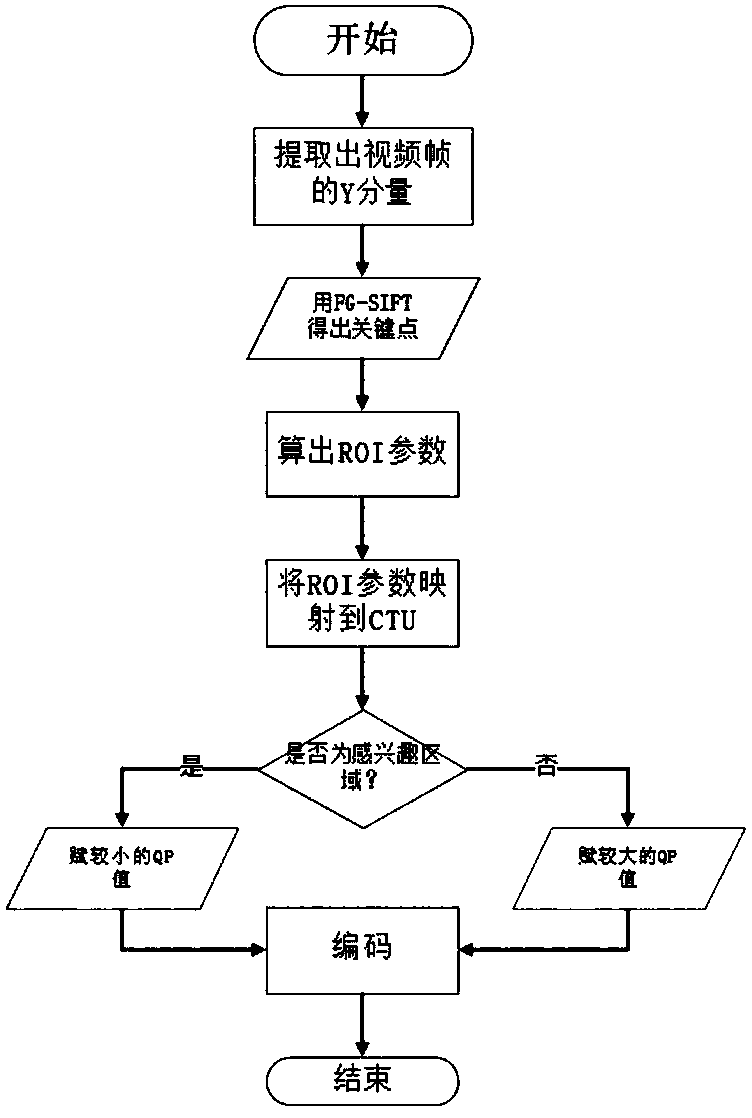

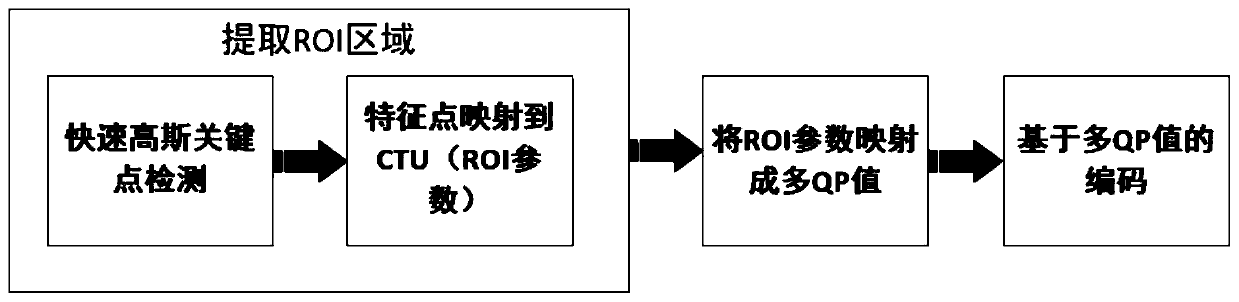

[0032] As shown in Figures 1 and 2, the method provided by the invention specifically includes the following steps:

[0033] 1. Extract key points

[0034] In the FG-SIFT feature extraction method, the specific process of extracting key points is as follows:

[0035] 1) Detection of scale space extreme points

[0036] 2) Accurate positioning of key points

[0037] 3) Key point descriptor generation.
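As a rough illustration of step 1), the sketch below applies the extremum test used in standard SIFT: a sample is kept if it is the strict maximum or minimum of its 3×3×3 neighbourhood across adjacent scales. Whether FG-SIFT uses exactly this test is an assumption; the function name `scale_space_extrema` and the `threshold` parameter are hypothetical and do not come from the patent text.

```python
import numpy as np

def scale_space_extrema(dog, threshold=0.0):
    """Return (scale, y, x) indices of strict local extrema in a DoG stack.

    dog: array of shape (scales, height, width), one octave of
         difference-of-Gaussian responses (assumed layout).
    threshold: hypothetical contrast threshold; responses whose magnitude
         does not exceed it are skipped.
    """
    n_scales, height, width = dog.shape
    found = []
    # Border samples lack a full 26-neighbourhood, so they are not tested.
    for s in range(1, n_scales - 1):
        for y in range(1, height - 1):
            for x in range(1, width - 1):
                value = dog[s, y, x]
                if abs(value) <= threshold:
                    continue
                cube = dog[s - 1:s + 2, y - 1:y + 2, x - 1:x + 2]
                unique = (cube == value).sum() == 1  # reject ties
                if unique and (value == cube.max() or value == cube.min()):
                    found.append((s, y, x))
    return found
```

The triple loop is written for readability rather than speed; a practical implementation would vectorise the comparison.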

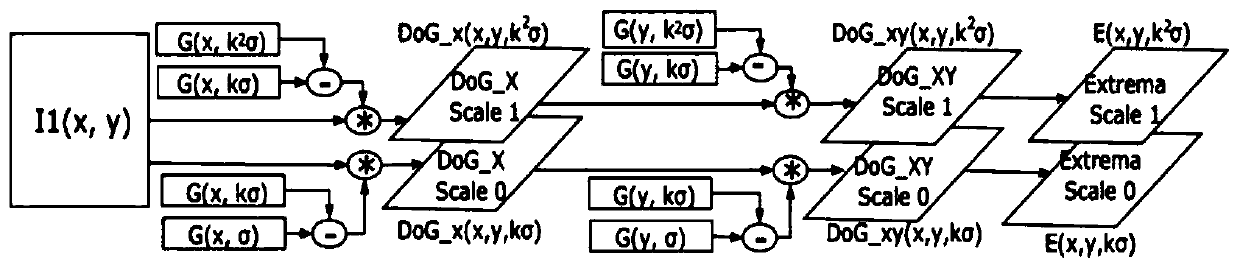

[0038] The algorithm is first briefly introduced within one layer (octave), as shown in Figure 3.

[0039] First, the difference of Gaussians in the x direction, DoG_X(x, y, kσ), is calculated. In equation (1), DoG_X(x, y, kσ) is the difference of G_X at two nearby scales, where G_X(x, y, σ) is the convolution of the input image I(x, y) with a 1-D Gaussian kernel G(x, σ) (a 1×n vector) in the x dimension. From Eq. (1), DoG_X(x, y, kσ) can be generated directly by convolving the difference of the two Gaussian kernels with the input image. It can reduce the ...
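The equivalence described in this paragraph follows from the linearity of convolution and can be checked numerically. The sketch below is a minimal illustration, not the patent's implementation: it assumes the sign convention DoG_X = G_X(kσ) − G_X(σ) (the usual SIFT convention; equation (1) is not reproduced in this text), and the helper names and the `radius` parameter are hypothetical.

```python
import numpy as np

def gaussian_kernel_1d(sigma, radius):
    """Normalised 1-D Gaussian kernel of length 2 * radius + 1."""
    x = np.arange(-radius, radius + 1, dtype=np.float64)
    kernel = np.exp(-(x * x) / (2.0 * sigma * sigma))
    return kernel / kernel.sum()

def convolve_rows(image, kernel):
    """Convolve every image row (x direction) with a 1-D kernel."""
    return np.apply_along_axis(
        lambda row: np.convolve(row, kernel, mode="same"), 1, image
    )

def dog_x_two_pass(image, sigma, k, radius):
    """Blur at both scales, then subtract the two blurred images."""
    blurred_small = convolve_rows(image, gaussian_kernel_1d(sigma, radius))
    blurred_large = convolve_rows(image, gaussian_kernel_1d(k * sigma, radius))
    return blurred_large - blurred_small

def dog_x_single_pass(image, sigma, k, radius):
    """Subtract the two kernels first, then convolve the image once."""
    kernel = gaussian_kernel_1d(k * sigma, radius) - gaussian_kernel_1d(sigma, radius)
    return convolve_rows(image, kernel)
```

Both functions return the same array up to floating-point error, but the single-pass form needs one row convolution per scale pair instead of two.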

Embodiment 2

[0062] In this embodiment, HEVC standard test video sequences at different resolutions (1080P, WVGA, WQVGA) are used to evaluate the algorithm proposed by the present invention. The tests are implemented on the HEVC reference software HM16.5. For every test sequence, the first frame is intra-coded (I-frame) and the subsequent frames are inter-coded (P-frames).

[0063] The method of the present invention is now compared with the native coding mode of HM16.5 in the following two aspects:

[0064] 1. Matching efficiency

[0065] The method of the present invention obtains key points using the feature detection algorithm and then generates the region of interest. Based on the region of interest, it reasonably adjusts the coding rates of the region of interest and the non-interest region and appropriately changes the QP (quantization parameter), so as to retain the feature information in each frame of the original video, to meet the high-quality...
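One possible way to turn key points into a per-block QP assignment is sketched below. This is an assumption-laden illustration only: the text does not specify how the region of interest is built or how much the QP changes, so the 64×64 block size (the largest HEVC coding tree unit), the `base_qp`, `roi_delta`, and `bg_delta` values, and the function name `roi_qp_map` are all hypothetical.

```python
import numpy as np

def roi_qp_map(keypoints, frame_w, frame_h,
               base_qp=32, roi_delta=-4, bg_delta=2, block=64):
    """Build a per-block QP map from key point positions.

    keypoints: iterable of (x, y) pixel coordinates.
    Blocks containing at least one key point are treated as the region of
    interest and get a lower QP (finer quantization); all other blocks get
    a higher QP. All numeric defaults are illustrative assumptions.
    """
    cols = -(-frame_w // block)  # ceiling division
    rows = -(-frame_h // block)
    roi = np.zeros((rows, cols), dtype=bool)
    for x, y in keypoints:
        if 0 <= x < frame_w and 0 <= y < frame_h:
            roi[int(y) // block, int(x) // block] = True
    qp = np.where(roi, base_qp + roi_delta, base_qp + bg_delta)
    # HEVC allows QP values from 0 to 51 for 8-bit video.
    return np.clip(qp, 0, 51)
```

For example, `roi_qp_map([(10, 10), (100, 70)], 128, 128)` marks the top-left and bottom-right blocks of a 2×2 grid as regions of interest. Feeding such a map to an encoder is a separate step that depends on the encoder's rate-control interface.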
