Multi-lens sensor-based image fusion method

An image fusion technology using a multi-lens sensor, applied in image enhancement, image analysis, image data processing, etc., which addresses the problem of reduced work efficiency in line inspection operations.


Examples

Embodiment 1

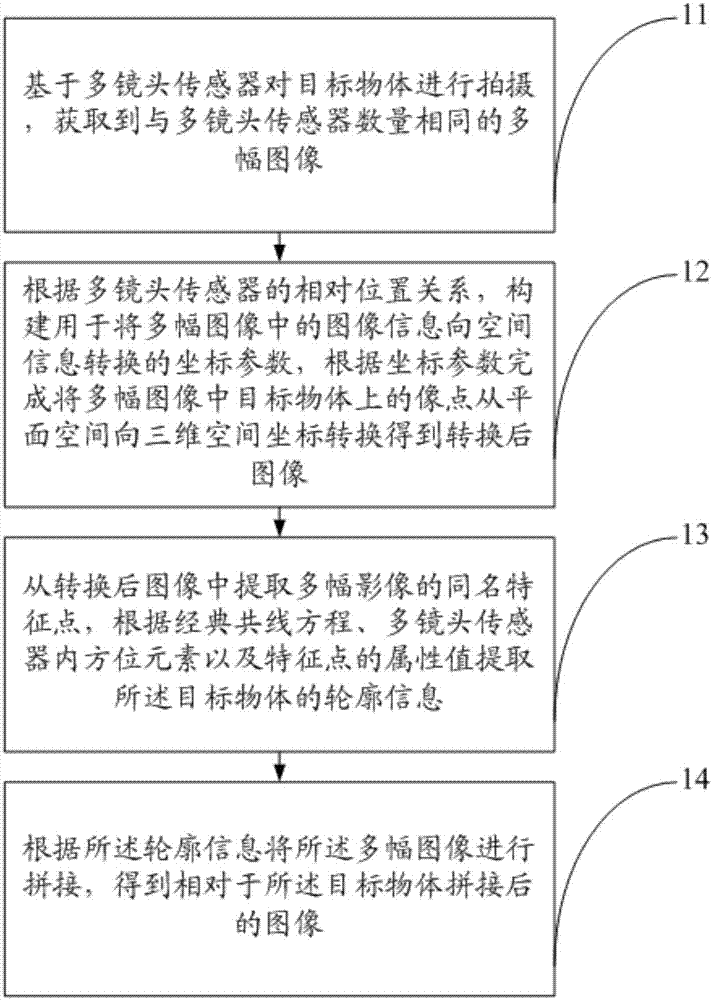

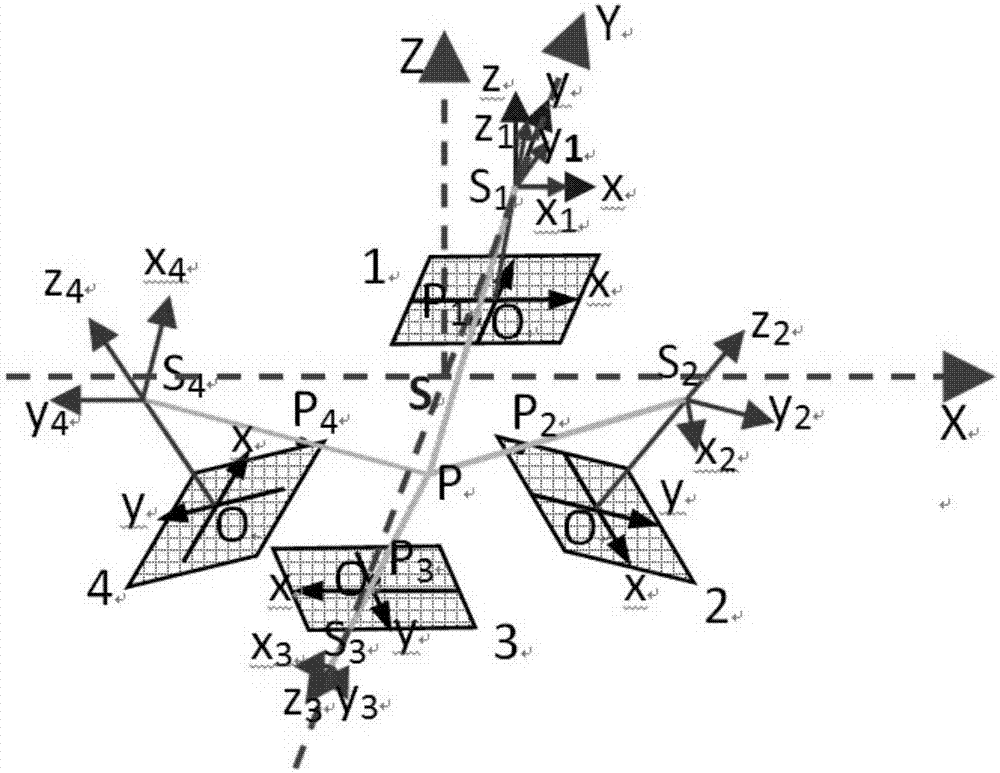

[0036] The present invention provides an image fusion method based on a multi-lens sensor. The multi-lens sensor includes at least four tilt sensors, whose shooting central axes are tilted at the same angle and fixed relative to the shooting plane, and a vertical sensor that photographs the plane. As shown in Figure 1, the image fusion method includes:

[0037] 11. Photograph the target object with the multi-lens sensor to obtain multiple images, one per lens, so that the number of images equals the number of lenses in the multi-lens sensor;

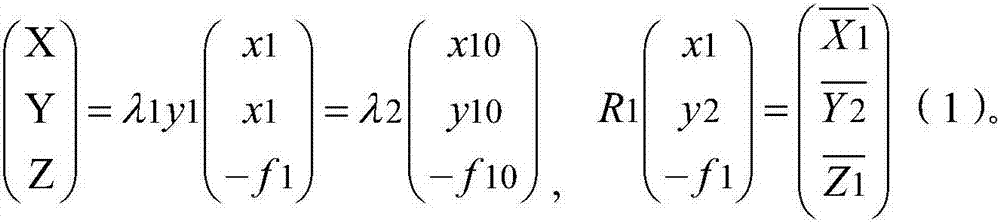

[0038] 12. Based on the relative positional relationship of the lenses in the multi-lens sensor, construct coordinate parameters for converting image information in the multiple images into spatial information; then, using these coordinate parameters, transform the image points of the target object in the multiple images from plane coordinates to three-dimensional space coordinates to obtain the transformed images;

[0039] 13. Extract the same-name (corresponding) feature points of mu...
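The geometry behind steps 11 and 12 can be illustrated with a minimal sketch. It assumes several things the text does not specify: an ideal pinhole model for every lens, all five lenses sharing one optical centre at height H = 100 above a flat target plane Z = 0, the four tilt lenses spaced 90 degrees apart in azimuth, and a common focal length and principal point. The names `Lens`, `make_rig`, `pixel_to_ground` and `ground_to_pixel` are hypothetical. `pixel_to_ground` converts an image point into three-dimensional space coordinates by intersecting its viewing ray with the plane, and `ground_to_pixel` is the inverse projection. This is not the patent's own construction of the coordinate parameters, which the excerpt does not give.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Vec = Tuple[float, float, float]


def cross(a: Vec, b: Vec) -> Vec:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def rotate(v: Vec, k: Vec, angle: float) -> Vec:
    """Rodrigues rotation of vector v about unit axis k by angle (radians)."""
    c, s = math.cos(angle), math.sin(angle)
    kxv, kv = cross(k, v), dot(k, v)
    return tuple(v[i] * c + kxv[i] * s + k[i] * kv * (1 - c) for i in range(3))


# Camera axes of the vertical (nadir) lens in world coordinates (X east, Y north, Z up):
# image x to the right, image y downward, z along the optical axis (pointing down).
NADIR_AXES = ((1.0, 0.0, 0.0), (0.0, -1.0, 0.0), (0.0, 0.0, -1.0))


@dataclass
class Lens:
    tilt_deg: float      # angle between the optical axis and the vertical
    azimuth_deg: float   # direction the axis leans toward, measured from world +X
    f: float             # focal length in pixels (assumed)
    cx: float            # principal point (assumed)
    cy: float
    center: Vec = (0.0, 0.0, 100.0)  # ASSUMPTION: shared optical centre at height H = 100

    def axes(self) -> Tuple[Vec, Vec, Vec]:
        """Tilt the nadir axes about the horizontal axis perpendicular to the lean direction."""
        t, a = math.radians(self.tilt_deg), math.radians(self.azimuth_deg)
        k = (math.sin(a), -math.cos(a), 0.0)
        return tuple(rotate(v, k, t) for v in NADIR_AXES)

    def pixel_to_ground(self, u: float, v: float) -> Vec:
        """Back-project pixel (u, v) onto the plane Z = 0 and return world (X, Y, Z)."""
        xa, ya, za = self.axes()
        dx, dy = (u - self.cx) / self.f, (v - self.cy) / self.f
        ray = tuple(xa[i] * dx + ya[i] * dy + za[i] for i in range(3))
        if ray[2] >= 0:
            raise ValueError("pixel ray does not reach the ground plane")
        s = -self.center[2] / ray[2]
        return tuple(self.center[i] + s * ray[i] for i in range(3))

    def ground_to_pixel(self, p: Vec) -> Tuple[float, float]:
        """Project world point p into this lens with the pinhole model."""
        xa, ya, za = self.axes()
        d = tuple(p[i] - self.center[i] for i in range(3))
        x, y, z = dot(d, xa), dot(d, ya), dot(d, za)
        if z <= 0:
            raise ValueError("point is behind the lens")
        return (self.f * x / z + self.cx, self.f * y / z + self.cy)


def make_rig(tilt_deg: float = 45.0, f: float = 1000.0,
             cx: float = 960.0, cy: float = 540.0) -> list:
    """One vertical lens plus four tilt lenses at the same tilt, 90 degrees apart (assumed)."""
    lenses = [Lens(0.0, 0.0, f, cx, cy)]
    lenses += [Lens(tilt_deg, az, f, cx, cy) for az in (0.0, 90.0, 180.0, 270.0)]
    return lenses


if __name__ == "__main__":
    rig = make_rig()
    # The principal point of the lens leaning toward +X lands about H * tan(45 deg) = 100
    # along +X, i.e. approximately (100, 0, 0).
    print(rig[1].pixel_to_ground(960.0, 540.0))
```

Because a plane point observed by several lenses back-projects to the same (X, Y, Z) from each image, expressing image points in this common frame is one plausible basis for relating same-name feature points across images; how step 13 actually does this is cut off in the source.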
