Neural network optimization method based on the lifted proximal operator machine (LPOM)

A neural network optimization method, applied in the field of deep learning, that addresses problems such as slow convergence and the difficulty of training neural networks.

AI Technical Summary

Problems solved by technology

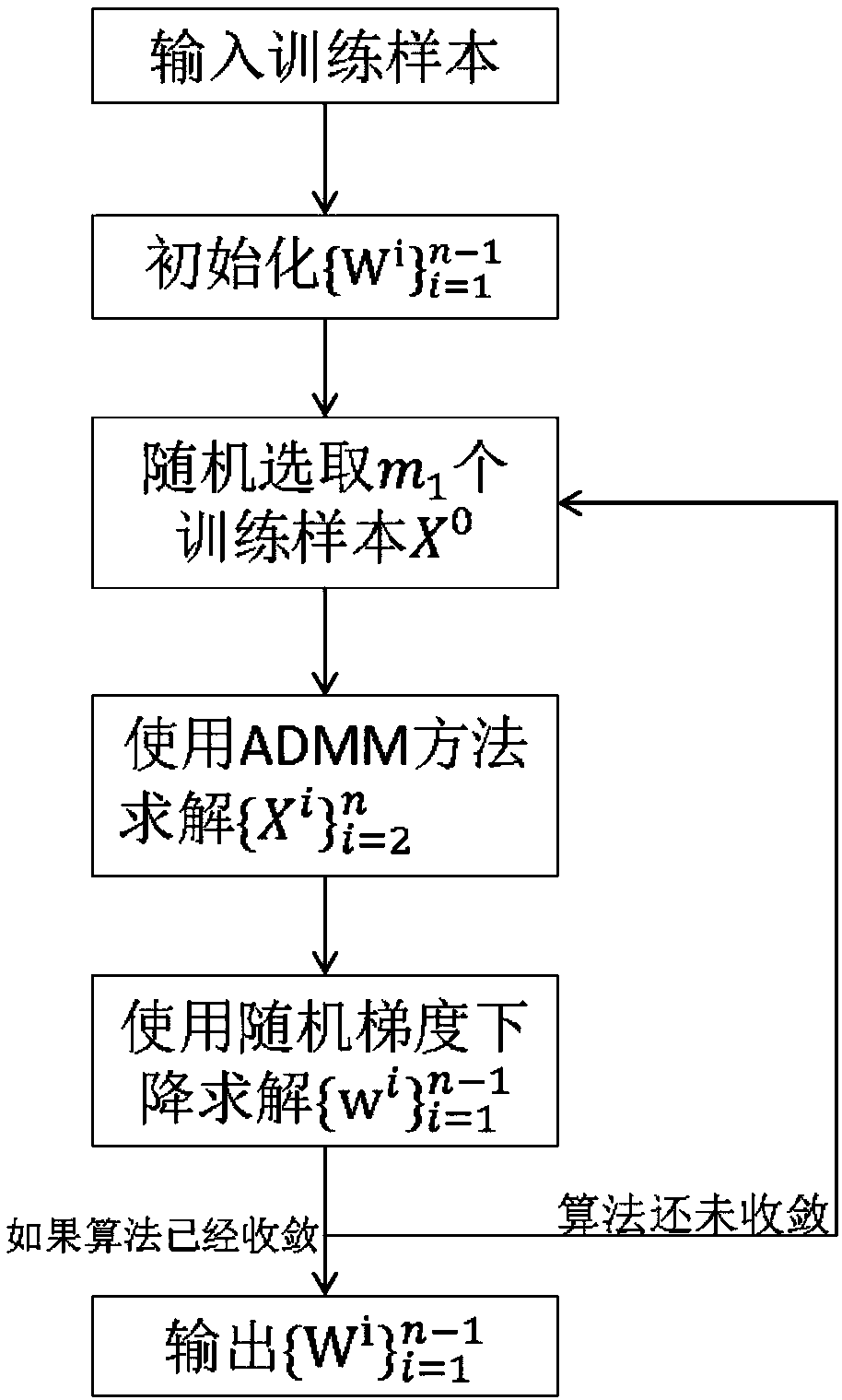

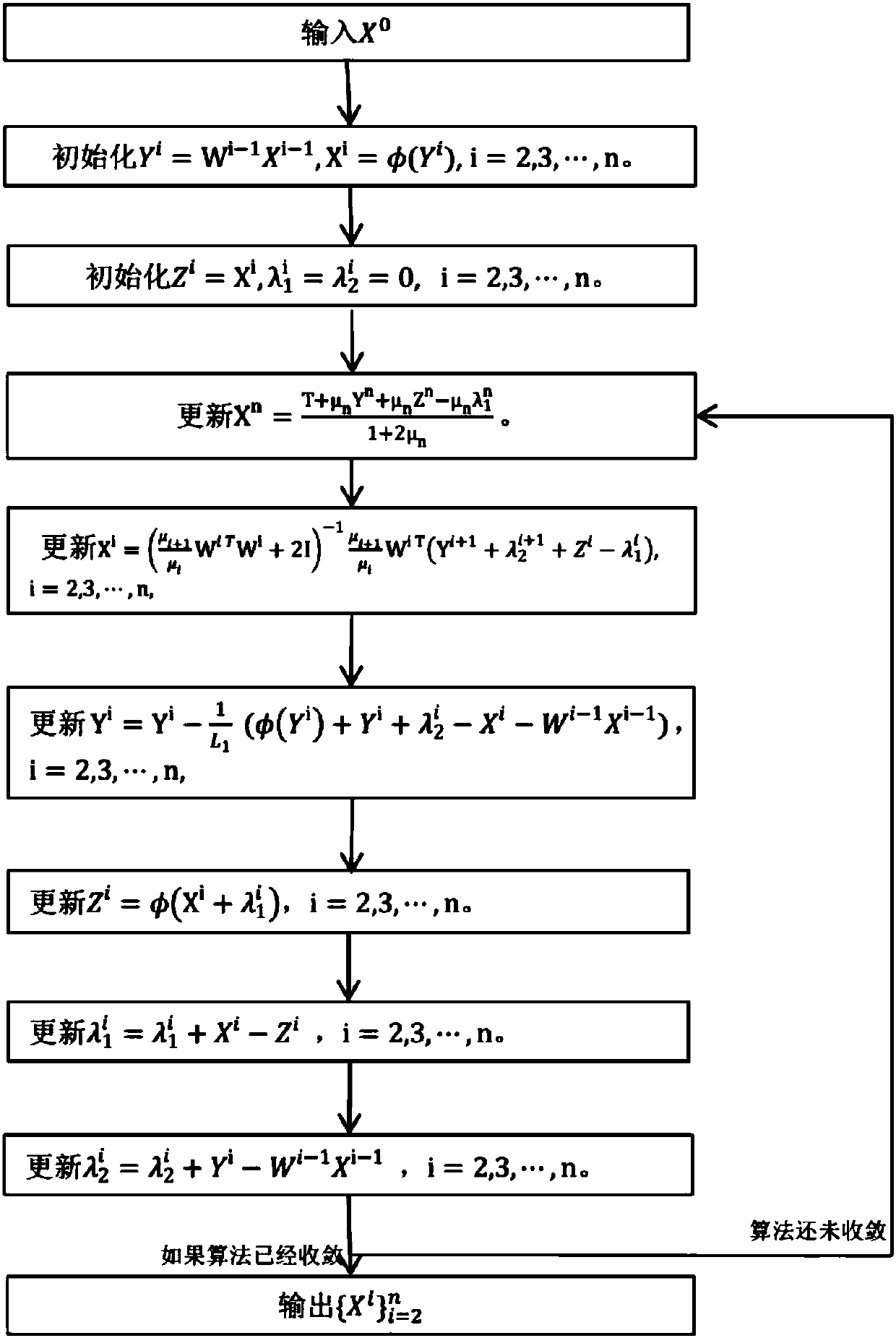

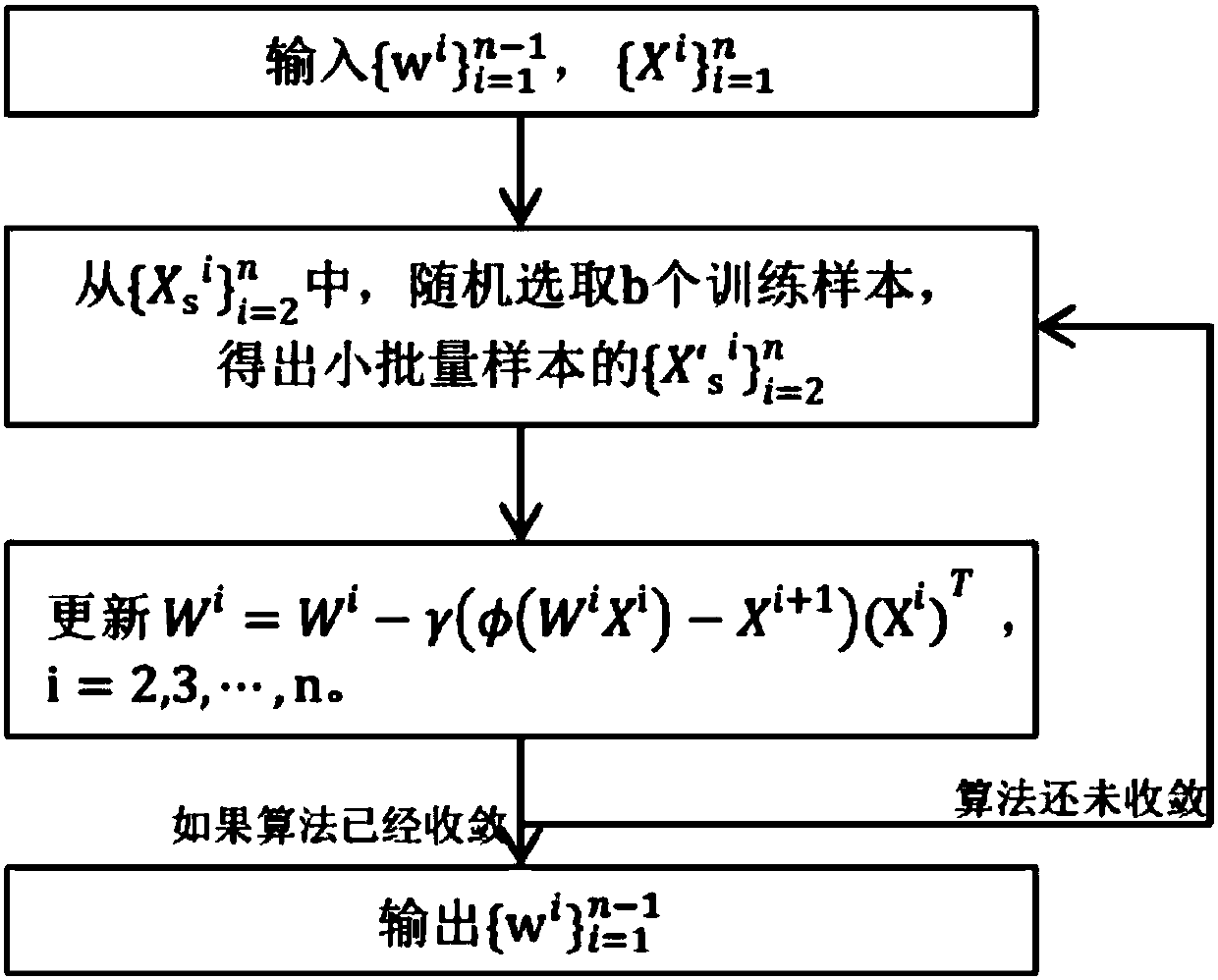

Examples

Embodiment 1

[0171] Embodiment 1: Shallow Network

[0172] Consider a three-layer ($n = 3$) neural network whose hidden layer has 300 units. For the LPOM algorithm, we set the hyperparameters $\mu_i = 2^{i-n}$, $K_1 = 600$, $K_2 = 100$, $m_1 = 1000$, $b = 100$.
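To make the schedule $\mu_i = 2^{i-n}$ concrete, the short sketch below evaluates it for the three-layer case. The function name `mu_schedule` is hypothetical, and the index range $i = 1, \dots, n$ is an assumption, since this text does not state which layers carry a $\mu_i$.

```python
def mu_schedule(n):
    """Return [mu_1, ..., mu_n] with mu_i = 2 ** (i - n).

    Assumption: i runs over 1..n; the text does not state the index range.
    """
    return [2.0 ** (i - n) for i in range(1, n + 1)]


if __name__ == "__main__":
    # Three-layer network from Embodiment 1.
    print(mu_schedule(3))  # [0.25, 0.5, 1.0]
```

Under this assumed indexing the values double from one layer to the next and reach 1 at $i = n$.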

[0173] We compare the final recognition rates directly. When the LPOM algorithm is used to optimize the neural network, the final recognition rate is 95.6%. When the stochastic gradient descent method is used, the final recognition rate is 95.3% (this result is taken directly from the MNIST official website, http://yann.lecun.com/exdb/mnist/). The LPOM method therefore achieves a recognition rate comparable to that of stochastic gradient descent on a shallow neural network.

Embodiment 2

[0174] Embodiment 2: Deep Network

[0175] The method of the present invention is applied to a deep neural network. We set the structure of the neural network as [formula not preserved], where $n-2$ is the number of hidden layers of the network; we set $n-2$ to 18, 19, and 20. For the LPOM algorithm, we use the same hyperparameters as before: $\mu_i = 2^{i-n}$, $K_1 = 600$, $K_2 = 100$, $m_1 = 1000$, $b = 100$. For the stochastic gradient descent method, we search for hyperparameters as follows: 1) the step size is chosen from {0.001, 0.005, 0.01, 0.05, 0.1, 0.5, 1}, and 2) the momentum parameter is chosen from {0, 0.2, 0.5, 0.9}. Both the LPOM algorithm and the SGD algorithm (stochastic gradient descent method) use the initialization method described in [17] (Glorot X, Bengio Y. Understanding the difficulty of training deep feedforward neural networks [C] // Artificial Intelligence and Statistics. 2010, 9: 249-256.): the parameters are drawn from a uniform distribution [bounds not preserved], where $n_i$ and $n_o$ are the input and output d...
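The SGD search grid and the initialization described above can be sketched as follows. This is a minimal illustration, not the patent's implementation: the function names `sgd_grid` and `glorot_uniform` are hypothetical, and the bound $r = \sqrt{6 / (n_i + n_o)}$ is assumed from the normalized initialization in the cited Glorot and Bengio paper, because the exact expression is not preserved in this text.

```python
import itertools
import math
import random

# Candidate values listed in the text for the SGD baseline.
STEP_SIZES = [0.001, 0.005, 0.01, 0.05, 0.1, 0.5, 1]
MOMENTA = [0, 0.2, 0.5, 0.9]


def sgd_grid():
    """Enumerate every (step size, momentum) pair in the search grid."""
    return list(itertools.product(STEP_SIZES, MOMENTA))


def glorot_uniform(n_in, n_out, rng=None):
    """Sample an n_out x n_in weight matrix from U(-r, r).

    Assumption: r = sqrt(6 / (n_in + n_out)), the normalized initialization
    of Glorot and Bengio (2010); the bound is missing from the source text.
    """
    rng = rng or random.Random(0)  # fixed seed only for reproducibility
    r = math.sqrt(6.0 / (n_in + n_out))
    return [[rng.uniform(-r, r) for _ in range(n_in)] for _ in range(n_out)]


if __name__ == "__main__":
    print(len(sgd_grid()))  # 28 combinations (7 step sizes x 4 momenta)
    weights = glorot_uniform(n_in=4, n_out=3)
    print(len(weights), len(weights[0]))  # 3 4
```

An exhaustive grid is cheap here because only 28 configurations exist; each one would be trained and the best result kept for the comparison.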
