Vision and inertia combined positioning method

A visual positioning and joint positioning technology, applied in the field of positioning. It addresses uneven positioning output and poor robustness, achieving high precision, improving real-time performance and precision, and reducing the impact of accumulated error on precision.

Embodiment

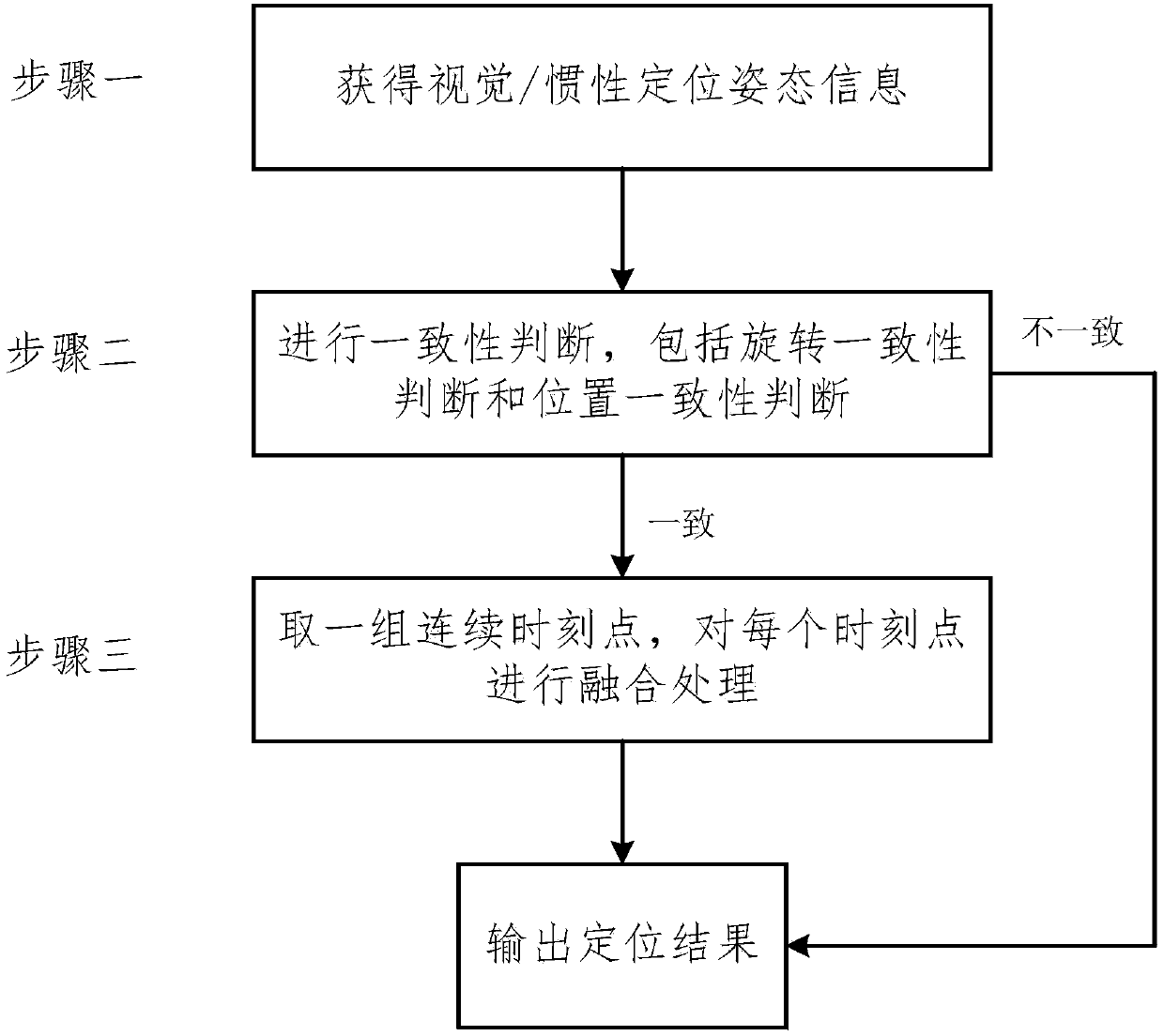

[0040] The joint positioning method mainly uses the inertial components to interpolate between the visual positioning results of the mobile device and to carry out mutual correction between inertial and visual positioning, so as to obtain high-precision, stable positioning information.
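To make the two roles concrete, here is a minimal one-dimensional sketch, not the patent's method. The inertial prediction runs at every IMU tick, so a position is output even between visual fixes (interpolation), and whenever a visual fix is available the prediction is pulled toward it (correction). The function name `fuse`, the fixed blending `gain`, and the assumption that visual fixes arrive exactly on IMU ticks are all hypothetical; a Kalman filter would be the more common way to weight the two sources.

```python
from typing import Dict, List


def fuse(imu_accels: List[float], visual_fixes: Dict[int, float],
         dt: float, gain: float = 0.5) -> List[float]:
    """Hypothetical 1D inertial/visual fusion loop.

    imu_accels:   one acceleration sample per IMU tick.
    visual_fixes: maps an IMU tick index to a visually measured position.
    gain:         assumed fixed blending weight in [0, 1].
    Returns one fused position per IMU tick (a uniform output stream).
    """
    pos, vel = 0.0, 0.0
    out: List[float] = []
    for k, a in enumerate(imu_accels):
        # Inertial prediction from the fused state at tick k-1;
        # this fills the gaps between (slower) visual fixes.
        pos += vel * dt + 0.5 * a * dt * dt
        vel += a * dt
        if k in visual_fixes:
            # Correction: move the prediction toward the visual fix.
            # Simplification: only position is corrected, not velocity.
            pos += gain * (visual_fixes[k] - pos)
        out.append(pos)
    return out
```

With ten zero-acceleration ticks and a single visual fix of 1.0 at tick 4, the output holds 0.0 for ticks 0 to 3 and 0.5 from tick 4 onward when `gain=0.5`; the output length always equals the number of IMU ticks, regardless of how sparse the visual fixes are.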

[0041] Visual positioning: visual images are collected by the imaging equipment, changes between different images are detected, and the resulting motion is determined to obtain the visual positioning result at a given moment (e.g., time k).
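The patent text here does not say how image changes are detected. As an assumed stand-in, the sketch below uses exhaustive block matching: it tries every integer shift in a small window, keeps the one with the lowest mean absolute difference over the overlapping pixels, and adds the scaled shift to the position at time k-1. The names `estimate_shift` and `visual_position` and the `metres_per_pixel` scale are hypothetical; a real system would typically use feature tracking and camera geometry rather than a pure image translation.

```python
from typing import List, Tuple

Image = List[List[float]]


def estimate_shift(prev: Image, curr: Image, max_shift: int = 3) -> Tuple[int, int]:
    """Return (dy, dx) such that curr[y][x] best matches prev[y - dy][x - dx],
    by minimising the mean absolute difference over the overlapping region."""
    h, w = len(prev), len(prev[0])
    best, best_cost = (0, 0), float("inf")
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            total, count = 0.0, 0
            # Only compare pixels where both curr[y][x] and prev[y-dy][x-dx] exist.
            for y in range(max(0, dy), min(h, h + dy)):
                for x in range(max(0, dx), min(w, w + dx)):
                    total += abs(curr[y][x] - prev[y - dy][x - dx])
                    count += 1
            if count and total / count < best_cost:
                best, best_cost = (dy, dx), total / count
    return best


def visual_position(prev_pos: Tuple[float, float], prev_img: Image, curr_img: Image,
                    metres_per_pixel: float = 0.01) -> Tuple[float, float]:
    """Hypothetical: position at time k = position at k-1 + scaled image shift."""
    dy, dx = estimate_shift(prev_img, curr_img)
    return (prev_pos[0] + dy * metres_per_pixel, prev_pos[1] + dx * metres_per_pixel)
```

The search cost grows with the square of `max_shift`, which is acceptable for a small illustrative window but is one reason practical visual odometry tracks sparse features instead.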

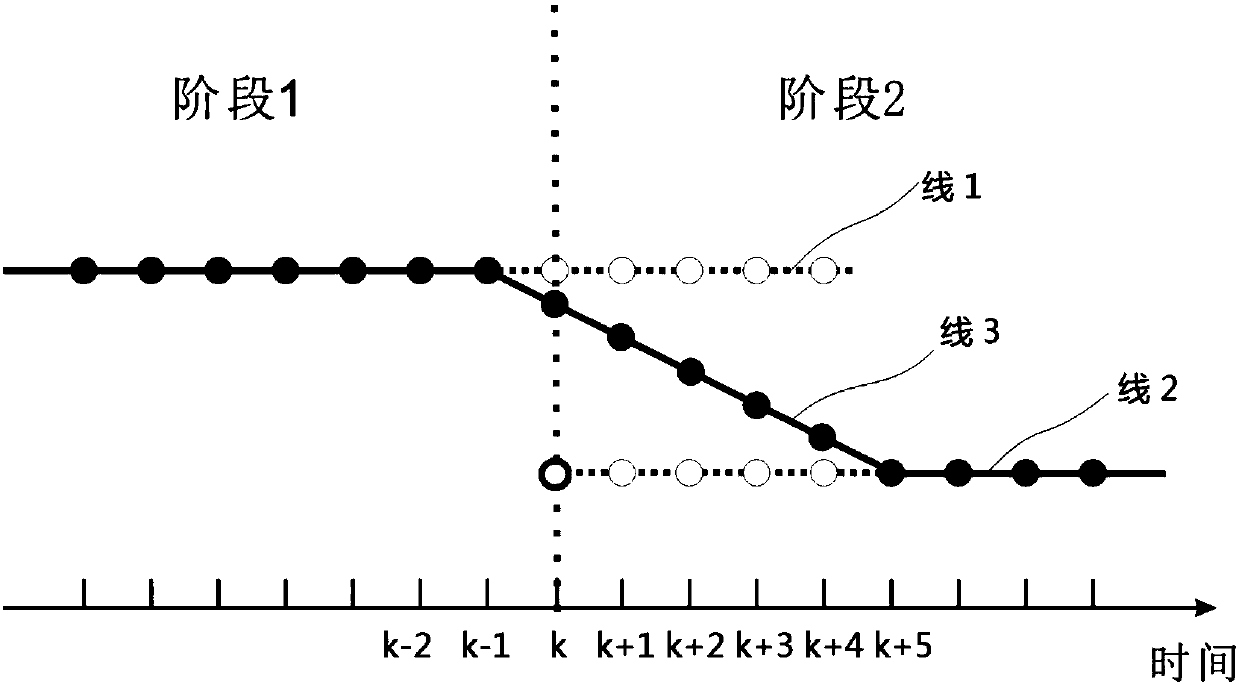

[0042] Inertial element positioning: the inertial measurement element measures the linear acceleration and rotational angular rate. From the change in attitude over consecutive moments, the attitude result of the inertial element at a given moment (e.g., time k) is calculated, and the predicted positioning result at time k is then derived. The predicted positioning result is obtained from the positioning result at the previous moment, i.e., time k-1, and the inertial element attitude results ...
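A minimal planar dead-reckoning sketch of this prediction step follows, assuming a 2D vehicle with one yaw-rate gyro and a two-axis accelerometer (the patent's own equations are not preserved in this text). The angular rate updates the attitude (heading), the body-frame acceleration is rotated into the world frame using that attitude, and the time-k position is predicted from the time-(k-1) state by constant-acceleration integration. `State` and `predict` are hypothetical names, and gravity compensation and sensor biases are ignored.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass
class State:
    x: float        # world-frame position (m)
    y: float
    vx: float       # world-frame velocity (m/s)
    vy: float
    heading: float  # attitude as a yaw angle (rad)


def predict(state: State, accel_body: Tuple[float, float],
            yaw_rate: float, dt: float) -> State:
    """Predict the state at time k from the state at time k-1 (hypothetical 2D model)."""
    # Attitude update from the measured angular rate.
    heading = state.heading + yaw_rate * dt
    # Rotate body-frame acceleration into the world frame using the updated attitude.
    c, s = math.cos(heading), math.sin(heading)
    ax = c * accel_body[0] - s * accel_body[1]
    ay = s * accel_body[0] + c * accel_body[1]
    # Position uses the k-1 velocity plus the constant-acceleration term.
    x = state.x + state.vx * dt + 0.5 * ax * dt * dt
    y = state.y + state.vy * dt + 0.5 * ay * dt * dt
    return State(x, y, state.vx + ax * dt, state.vy + ay * dt, heading)
```

Under a constant forward acceleration of 1 m/s² from rest with zero yaw rate, ten 0.1 s steps give x of approximately 0.5 m, matching ½at². Because each step reuses the previous prediction, any sensor error accumulates over time, which is the drift the visual fixes are meant to correct.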
